Design of Multimodal Sensor Module for Outdoor Robot Surveillance System

Abstract

1. Introduction

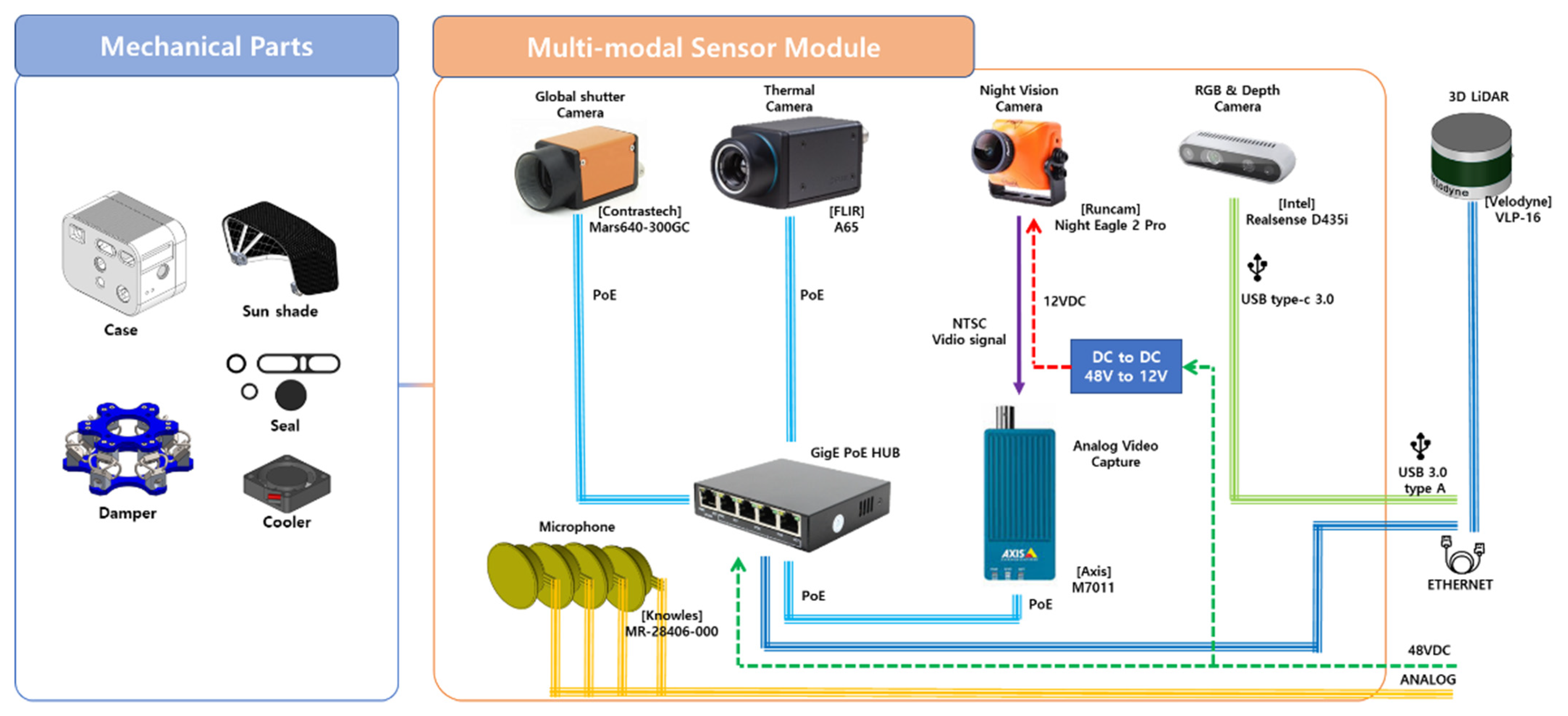

2. Design of a Multi-Modal Sensor System

2.1. Multi-Modal Sensor System Configuration

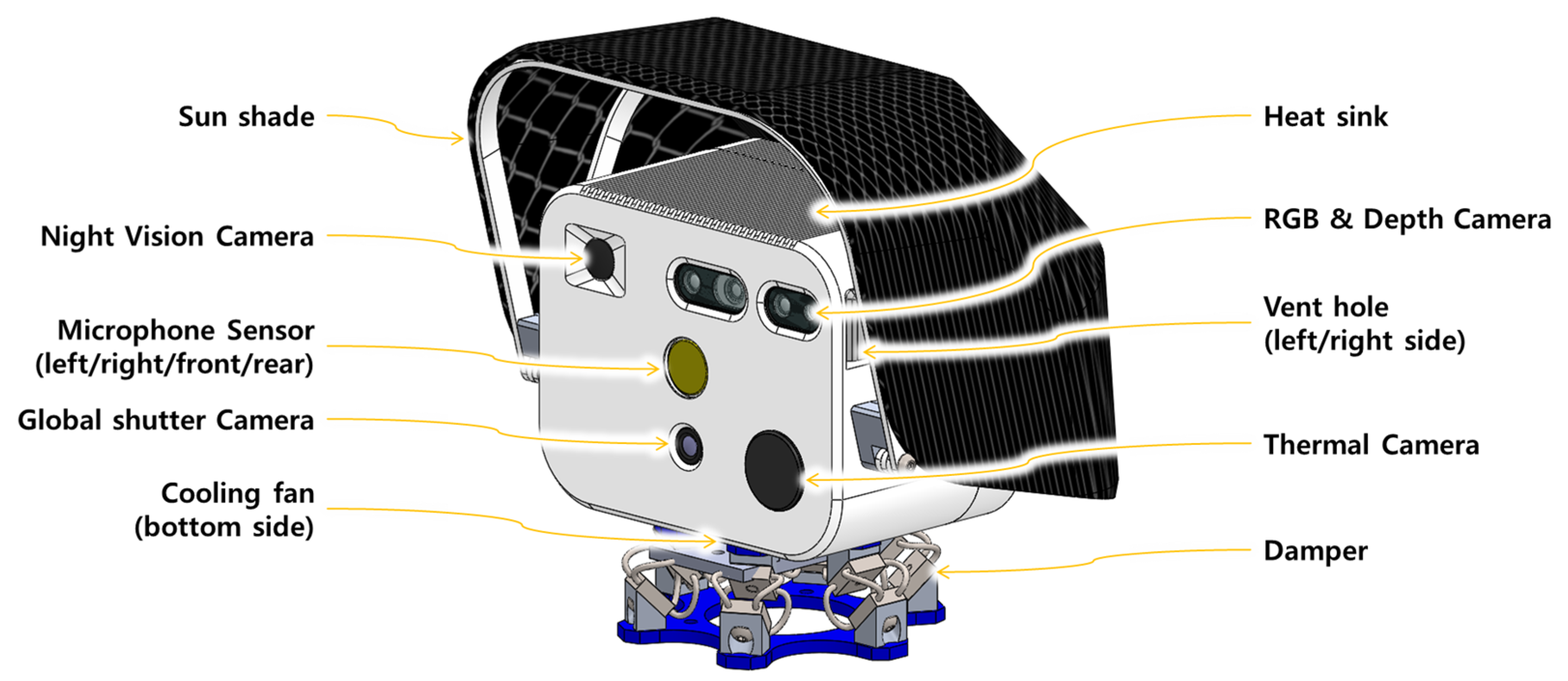

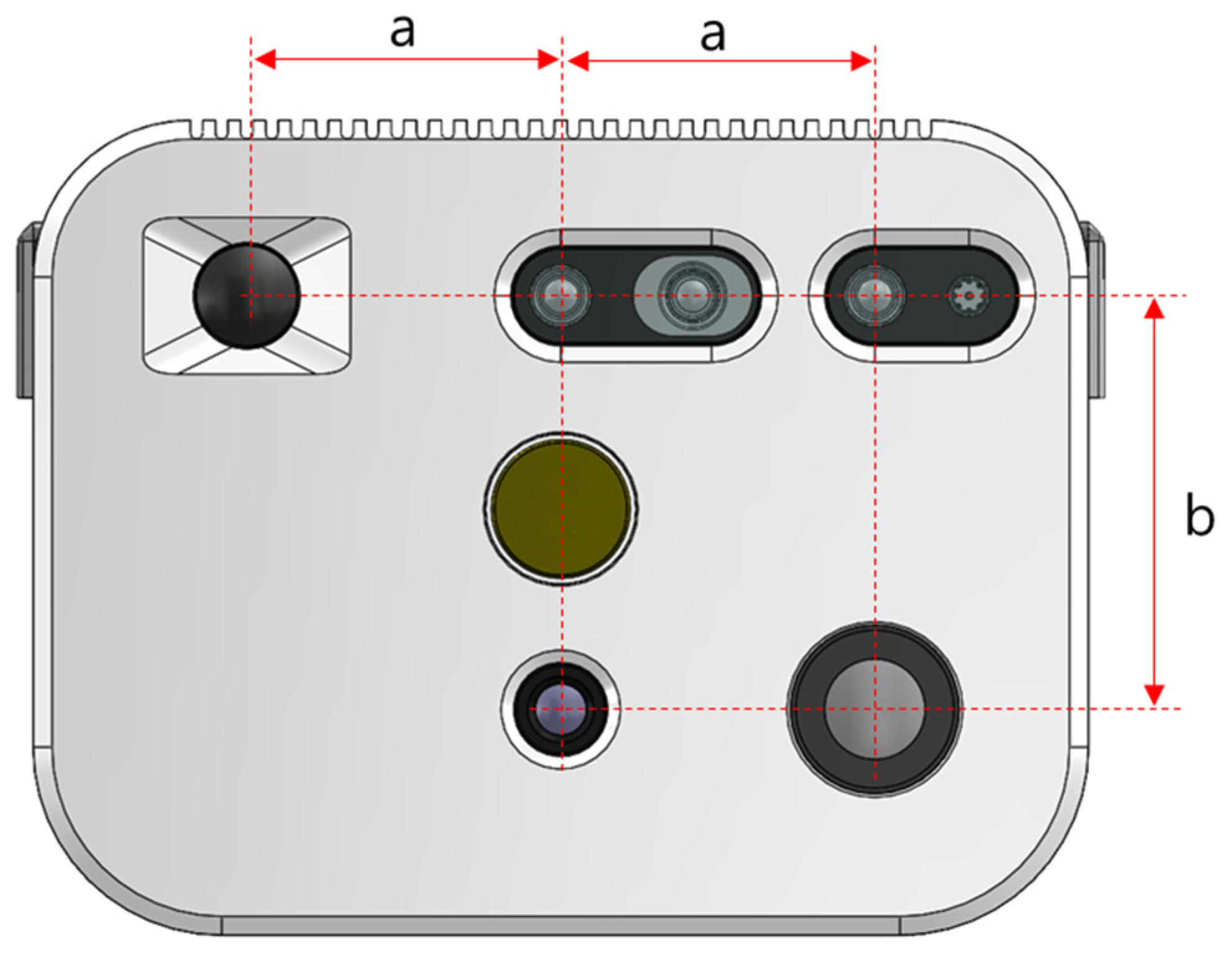

2.2. Overall Structure and Arrangement of Multi-Modal Sensor Module

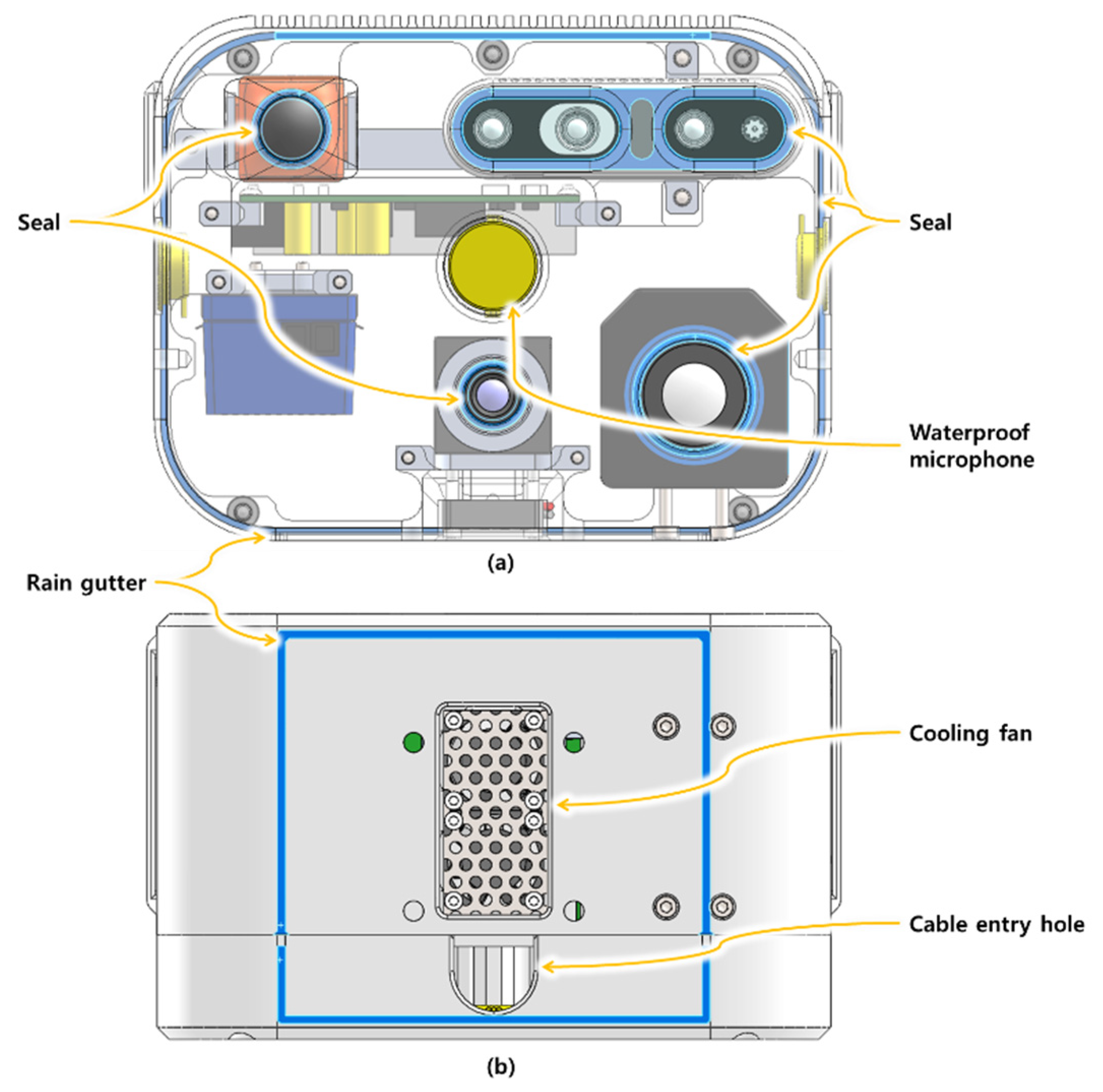

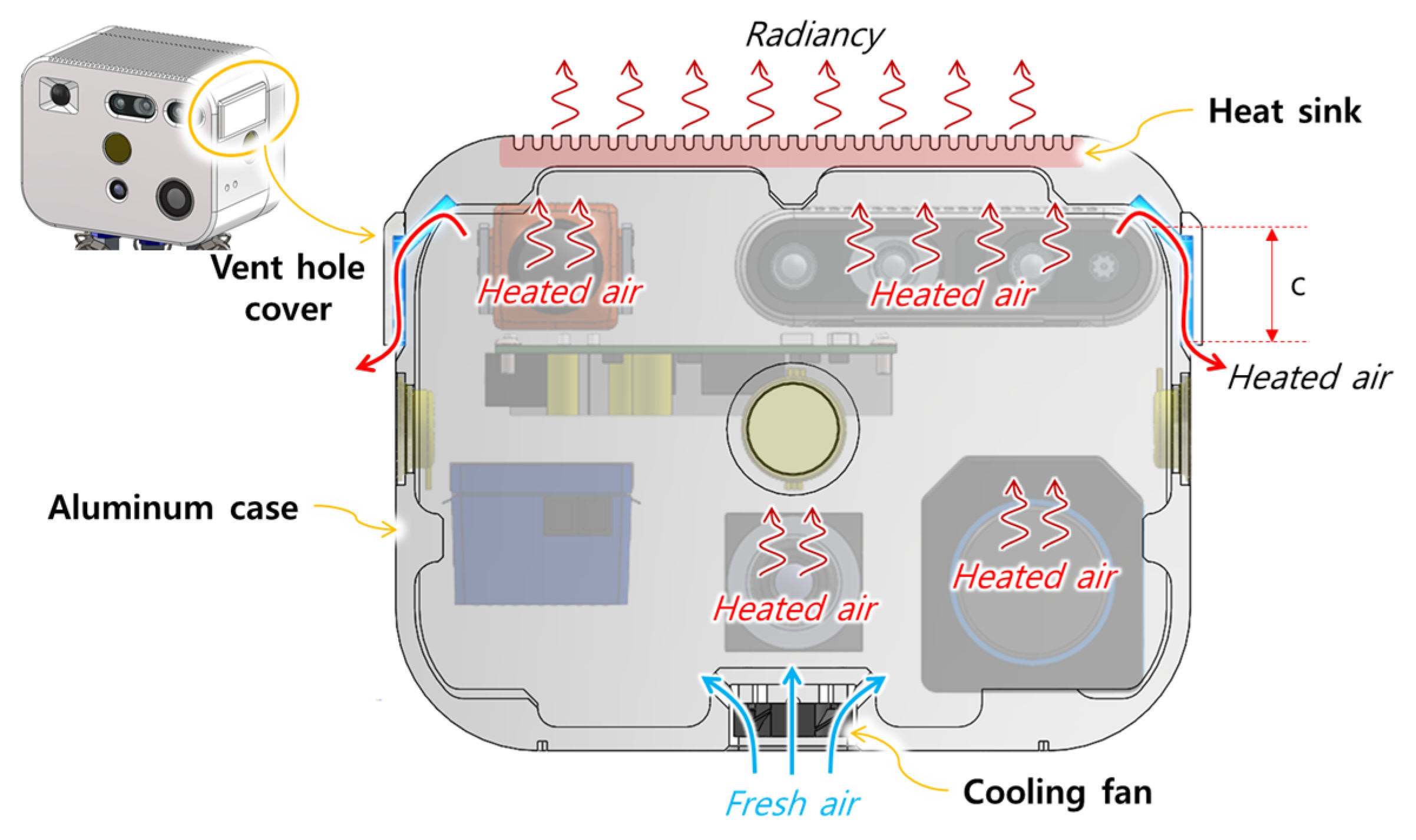

2.3. Waterproof and Cooling Design

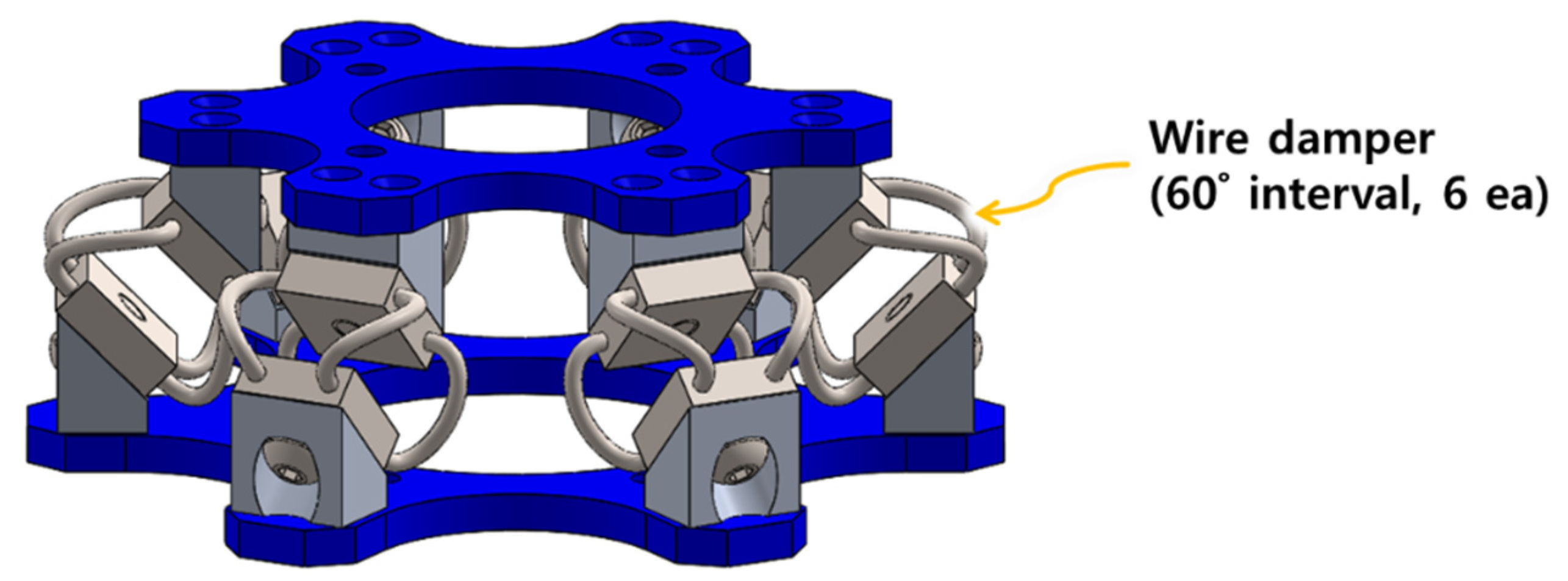

2.4. Sunshade and Damper Design

3. Multi-Modal Sensor Calibration Method

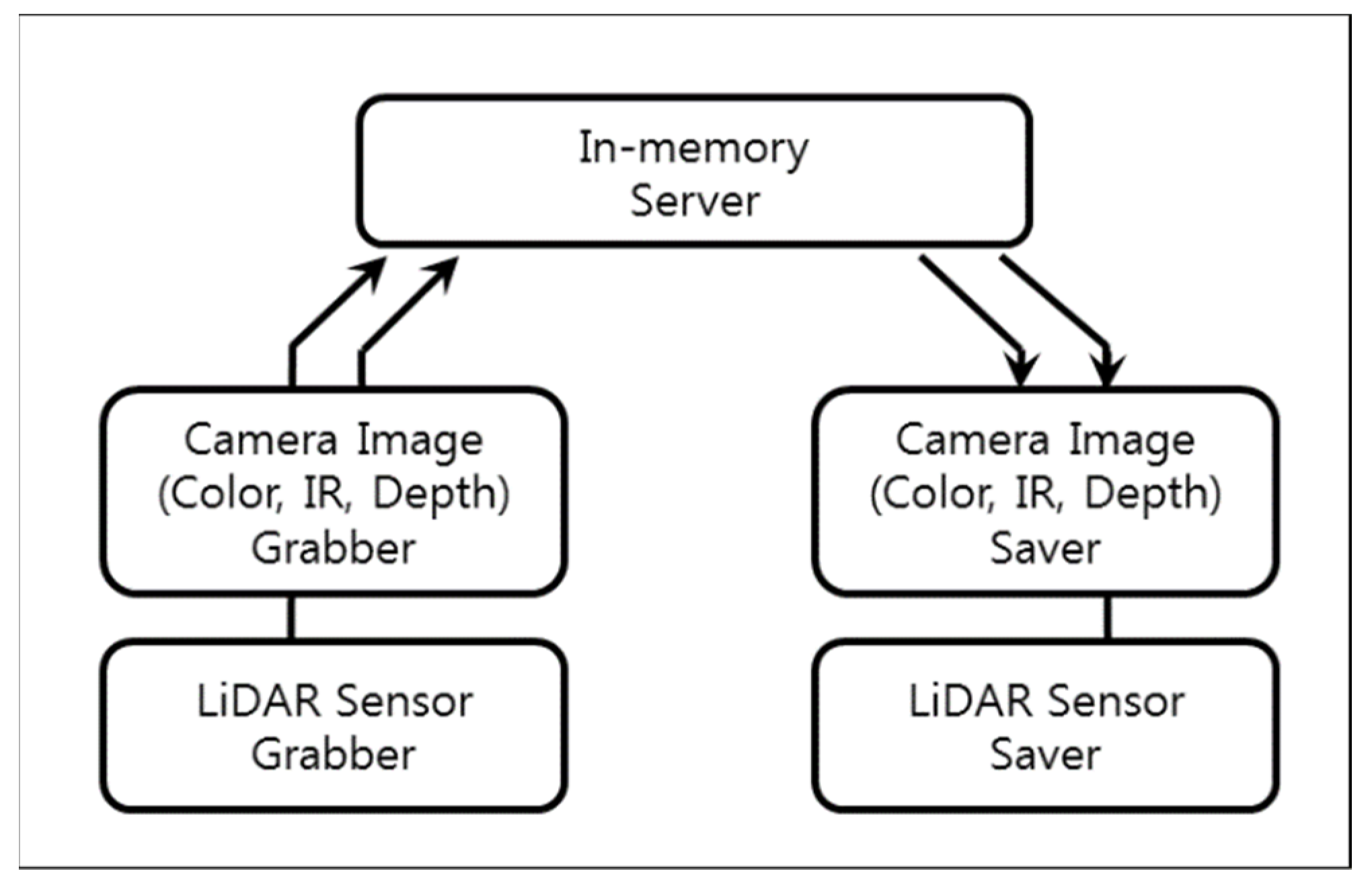

3.1. Multi-Modal Sensor Synchronization

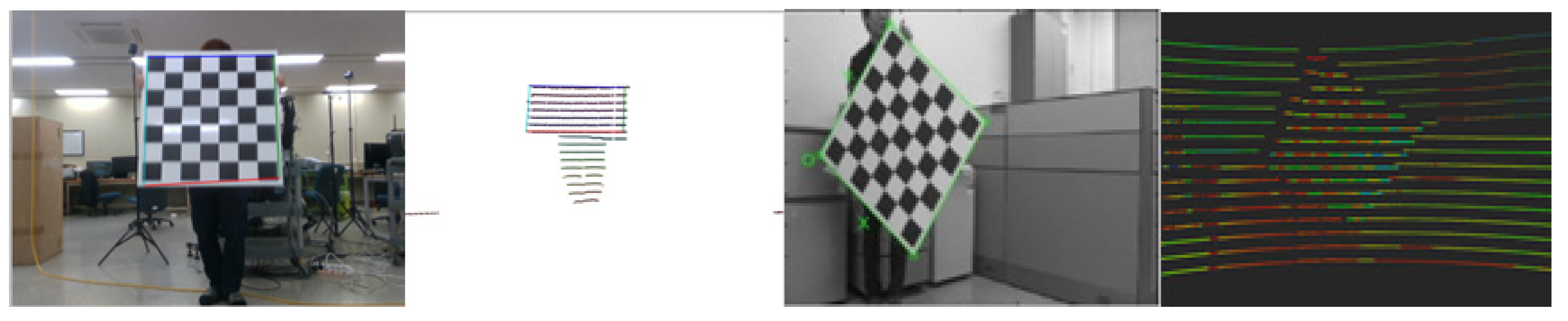

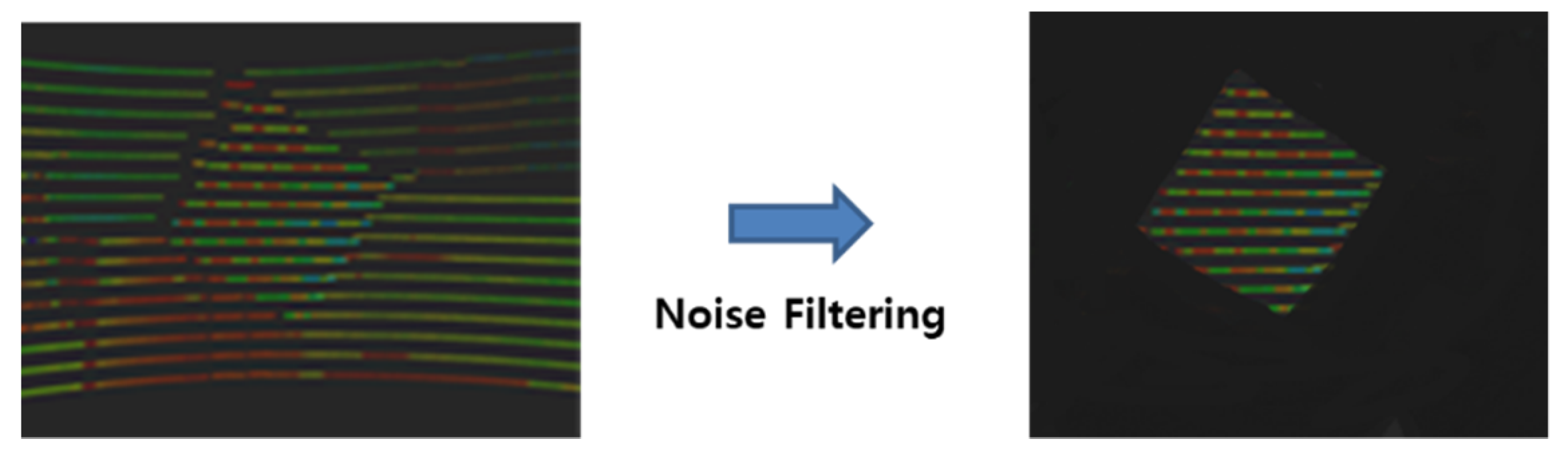

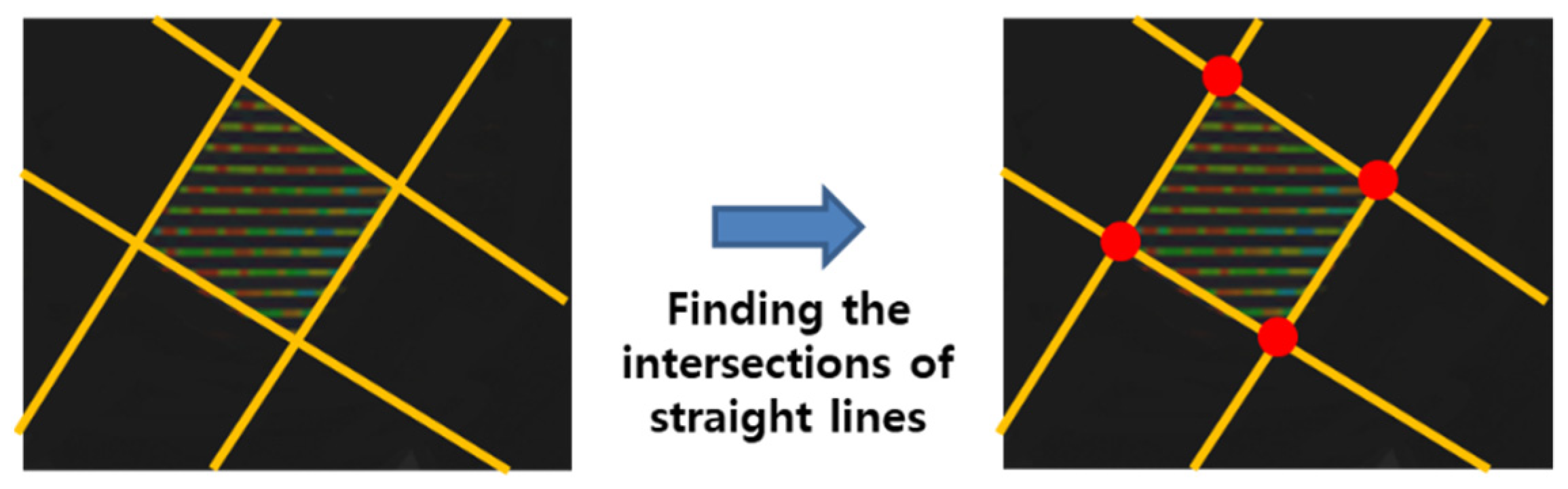

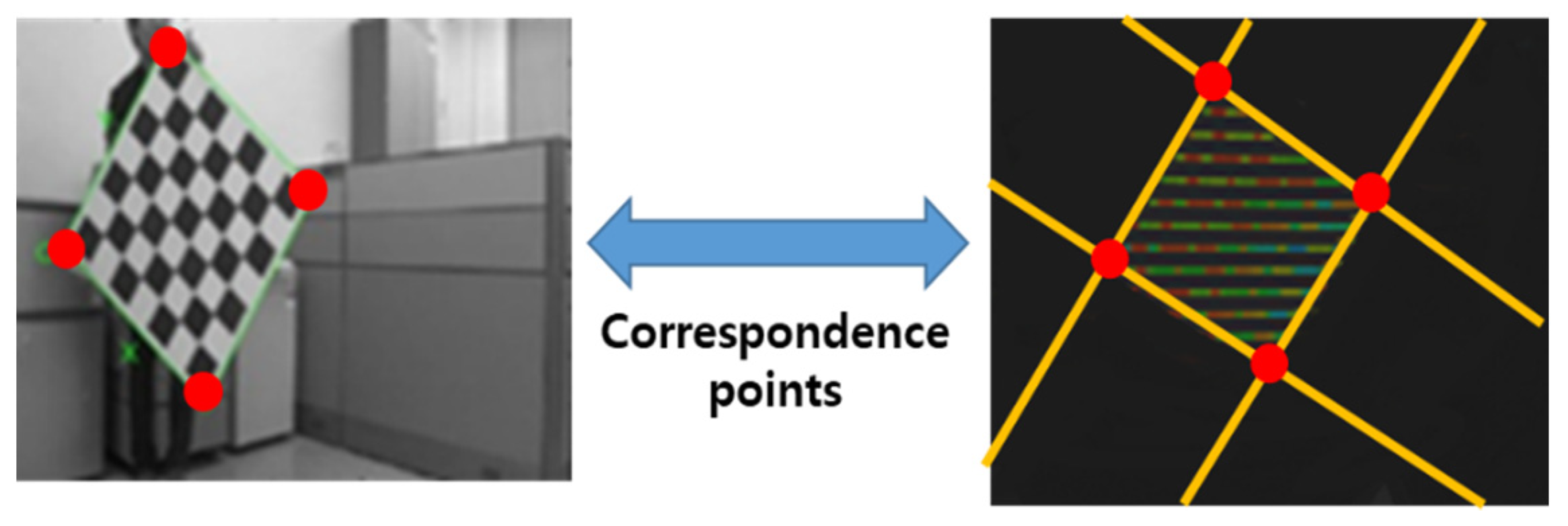

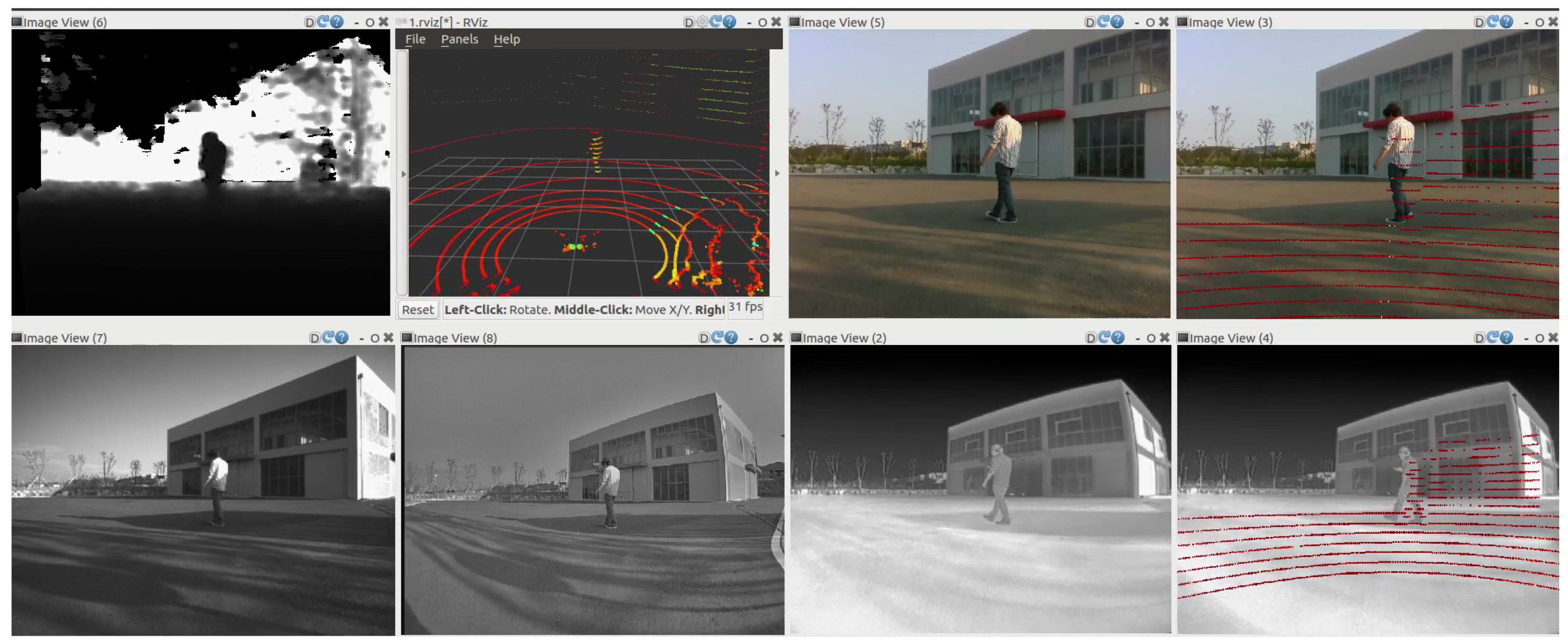

3.2. Multi-Modal Sensor Calibration Method

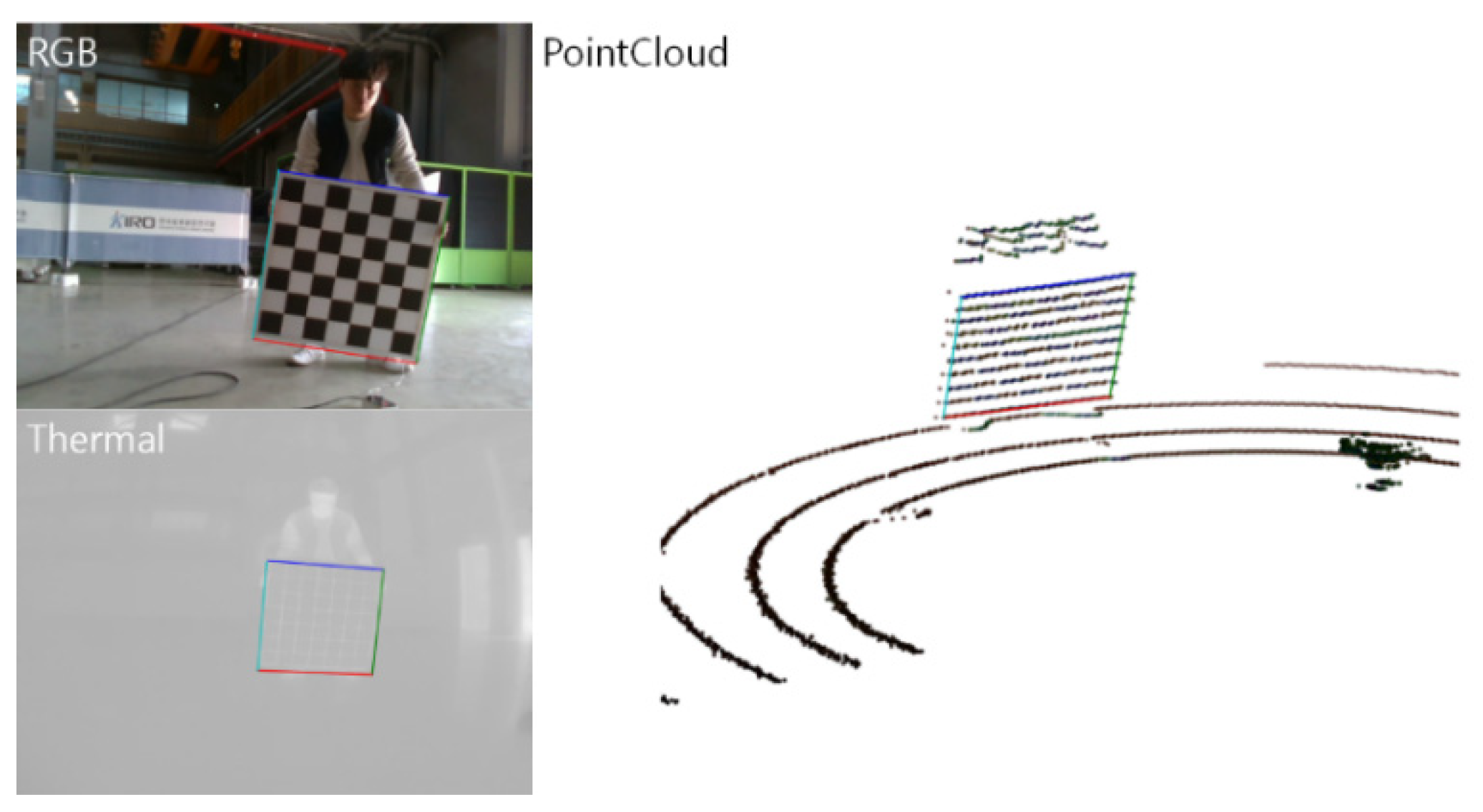

4. Application of Multi-Modal Sensor Calibration Method

4.1. Computing for Multi-Modal Sensor System

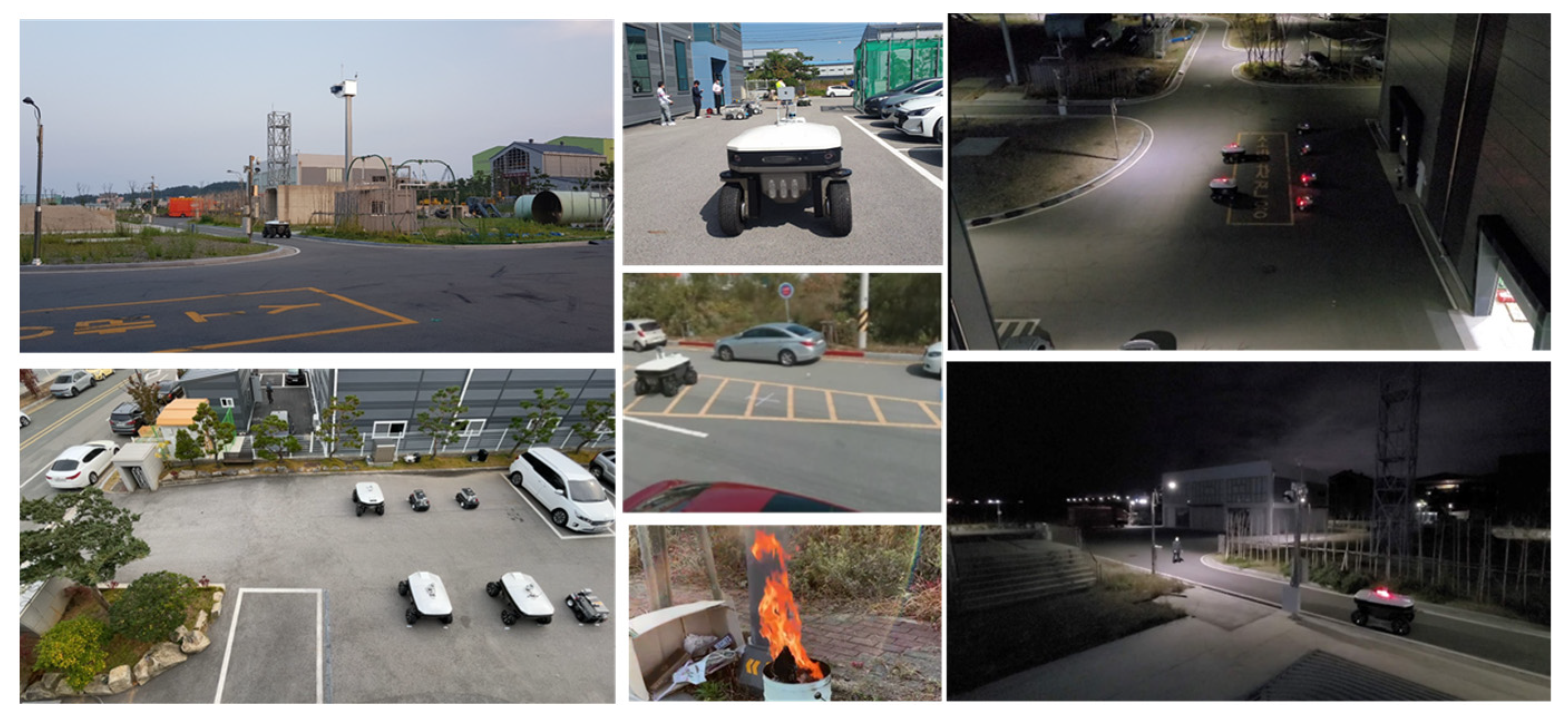

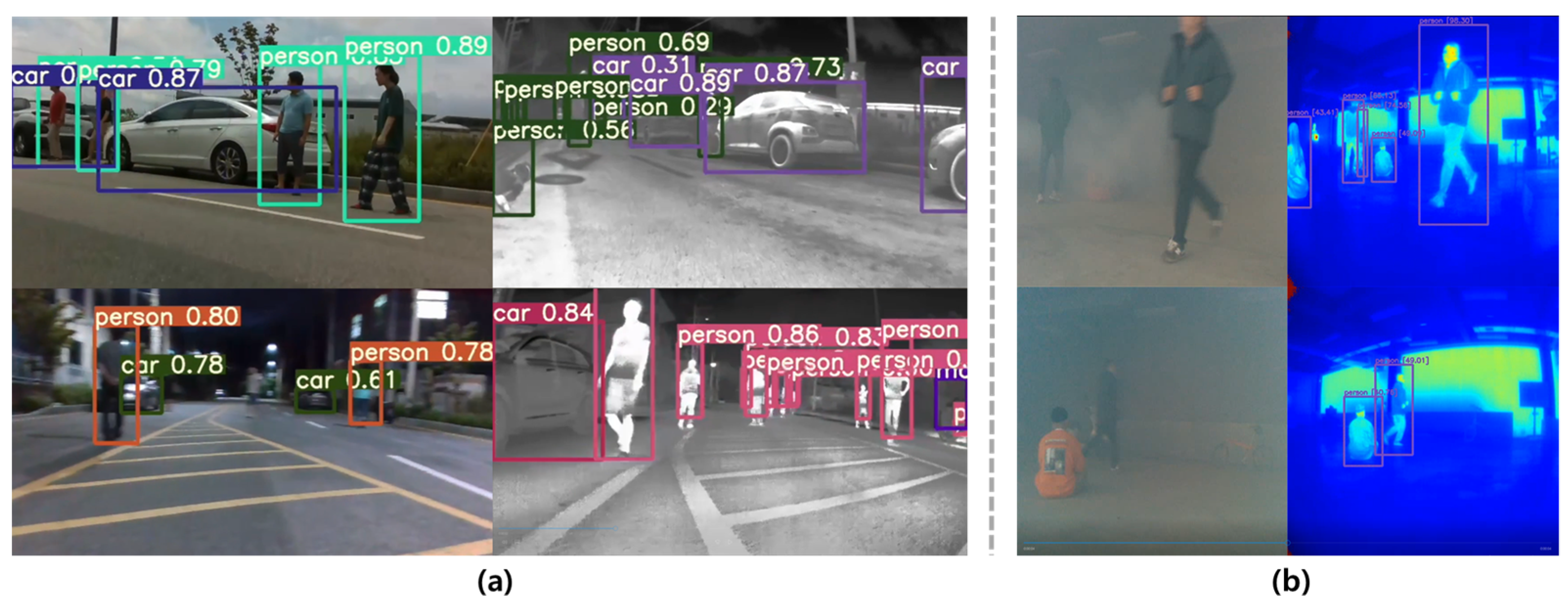

4.2. Multi-Modal Sensor System Installation and Testing

5. Conclusions and Future Works

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Haritaoglu, I.; Harwood, D.; Davis, S.L. A Fast Background Scene Modeling and Maintenance for Outdoor Surveillance. In Proceedings of the 15th International Conference on Pattern Recognition (ICPR), Barcelona, Spain, 3–8 September 2000; pp. 179–183. Available online: https://ieeexplore.ieee.org/abstract/document/902890 (accessed on 7 March 2022).

- Kurada, S.; Bradley, C. A review of machine vision sensors for tool condition monitoring. Comput. Ind. 1997, 34, 55–72. Available online: https://www.sciencedirect.com/science/article/pii/S0166361596000759 (accessed on 7 March 2022). [CrossRef]

- Naverlabs Dataset. Available online: http://github.com/naver/kapture (accessed on 7 March 2022).

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. Available online: https://journals.sagepub.com/doi/full/10.1177/0278364913491297 (accessed on 7 March 2022). [CrossRef] [Green Version]

- Chen, Y.L.; Jahanshahi, M.R.; Manjunatha, P.; Gan, W.; Abdelbarr, M.; Masri, S.F.; Gerber, B.B.; Caffrey, J.P. Inexpensive Multimodal Sensor Fusion System for Autonomous Data Acquisition of Road Surface Conditions. Sensors 2016, 21, 7731–7743. Available online: https://ieeexplore.ieee.org/abstract/document/7556304 (accessed on 7 March 2022). [CrossRef]

- Bechtel, B.; Alexander, P.J.; Böhner, J.; Ching, J.; Conrad, O.; Feddema, J.; Mills, G.; See, L.; Stewart, I. Mapping Local Climate Zones for a Worldwide Database of the Form and Function of Cities. ISPRS Int. J. Geo-Inf. 2015, 4, 199–219. Available online: https://www.mdpi.com/2220–9964/4/1/199 (accessed on 7 March 2022). [CrossRef] [Green Version]

- Ferryman, J.; Shahrokni, A. PETS2009: Dataset and challenge. In Proceedings of the Twelfth IEEE International Workshop on Performance Evaluation of Tracking and Surveillance, Snowbird, UT, USA, 7–9 December 2009; Available online: https://ieeexplore.ieee.org/abstract/document/5399556 (accessed on 7 March 2022).

- Woznowski, P.; Fafoutis, X.; Song, T.; Hannuna, S.; Camplani, M.; Tao, L.; Paiement, A.; Mellios, E.; Haghighi, M.; Zhu, N.; et al. A Multi-modal Sensor Infrastructure for Healthcare in a Residential Environment. In Proceedings of the IEEE International Conference on Communication Workshop (ICCW), London, UK, 8–12 June 2015; Available online: https://ieeexplore.ieee.org/abstract/document/7247190 (accessed on 7 March 2022).

- Harper, S.E.; Schmitz, D.G.; Adamczyk, P.G.; Thelen, D.G. Fusion of Wearable Kinetic and Kinematic Sensors to Estimate Triceps Surae Work during Outdoor Locomotion on Slopes. Sensors 2022, 22, 1589. Available online: https://www.mdpi.com/1424–8220/22/4/1589 (accessed on 7 March 2022). [CrossRef] [PubMed]

- Weiner, P.; Neef, C.; Shibata, Y.; Nakamura, Y.; Asfour, T. An Embedded, Multi-Modal Sensor System for Scalable Robotic and Prosthetic Hand Fingers. Sensors 2020, 20, 101. Available online: https://www.mdpi.com/1424–8220/20/1/101 (accessed on 7 March 2022). [CrossRef] [PubMed] [Green Version]

- Marín, L.; Vallés, M.; Soriano, Á.; Valera, Á.; Albertos, P. Multi Sensor Fusion Framework for Indoor-Outdoor Localization of Limited Resour. Sensors 2013, 13, 14133–14160. Available online: https://www.mdpi.com/1424-8220/13/10/14133 (accessed on 7 March 2022). [CrossRef] [PubMed]

- Klingbeil, L.; Reiner, R.; Romanovas, M.; Traechtler, M.; Manoli, Y. Multi-modal Sensor Data and Information Fusion for Localization in Indoor Environments. In Proceedings of the 7th Workshop on Positioning, Navigation and Communication (WPNC), Dresden, Germany, 11–12 March 2010; Available online: https://ieeexplore.ieee.org/abstract/document/5654128 (accessed on 7 March 2022).

- Vachmanus, S.; Ravankar, A.A.; Emaru, T.; Kobayashi, Y. Multi-Modal Sensor Fusion-Based Semantic Segmentation for Snow Driving Scenarios. Sensors 2021, 21, 16839–16851. Available online: https://ieeexplore.ieee.org/abstract/document/9420724 (accessed on 7 March 2022). [CrossRef]

- Hong, B.; Zhou, Y.; Qin, H.; Wei, Z.; Liu, H.; Yang, Y. Few-Shot Object Detection Using Multimodal Sensor Systems of Unmanned Surface Vehicles. Sensors 2022, 22, 1511. Available online: https://www.mdpi.com/1424–8220/22/4/1511 (accessed on 7 March 2022). [CrossRef] [PubMed]

- Haris, M.; Glowacz, A. Navigating an Automated Driving Vehicle via the Early Fusion of Multi-Modality. Sensors 2022, 22, 1425. Available online: https://www.mdpi.com/1424–8220/22/4/1425 (accessed on 7 March 2022). [CrossRef] [PubMed]

- Khatab, E.; Onsy, A.; Abouelfarag, A. Evaluation of 3D Vulnerable Objects’ Detection Using a Multi-Sensors System for Autonomous Vehicles. Sensors 2022, 22, 1663. Available online: https://www.mdpi.com/1424–8220/22/4/1663 (accessed on 7 March 2022). [CrossRef] [PubMed]

- Park, J.H.; Sim, K.B. A Design of Mobile Robot based on Network Camera and Sound Source Localization for Intelligent Surveillance System. In Proceedings of the International Conference on Control, Automation and Systems (ICCAS), Seoul, Korea, 14–17 October 2008; Available online: https://ieeexplore.ieee.org/abstract/document/4694586 (accessed on 7 March 2022).

- Raimondo, D.M.; Kariotoglou, N.; Summers, S.; Lygeros, J. Probabilistic certification of pan-tilt-zoom camera surveillance systems. In Proceedings of the IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), Orlando, FL, USA, 12–15 December 2011; Available online: https://ieeexplore.ieee.org/abstract/document/6161534 (accessed on 7 March 2022).

- Prati, A.; Vezzani, R.; Benini, L.; Farella, E.; Farella, P. An Integrated Multi-Modal Sensor Network for Video Surveillance. In Proceedings of the Third ACM International Workshop on Video Surveillance & Sensor Networks, Singapore, 11 November 2005; Available online: https://dl.acm.org/doi/abs/10.1145/1099396.1099415 (accessed on 7 March 2022).

- Chakravarty, P.; Jarvis, R. External Cameras & A Mobile Robot: A Collaborative Surveillance System. In Proceedings of the Australasian Conference on Robotics and Automation (ACRA), Sydney, Australia, 2–4 December 2009; Available online: https://www.araa.asn.au/acra/acra2009/papers/pap135s1.pdf (accessed on 7 March 2022).

- Menegatti, E.; Mumolo, E.; Nolich, M.; Pagello, E. A Surveillance System based on Audio and Video Sensory Agents cooperating with a Mobile Robot. In Proceedings of the 8th International Conference on Intelligent Autonomous Systems (IAS-8), Amsterdam, The Netherlands, 10–13 March 2004; Available online: https://www.academia.edu/9115938/A_Surveillance_System_based_on_Audio_and_Video_Sensory_Agents_cooperating_with_a_Mobile_Robot (accessed on 13 July 2022).

- Wu, X.; Gong, H.; Chen, P.; Zhong, Z.; Yangsheng, X. Surveillance Robot Utilizing Video and Audio Information. J. Intell. Robot. Syst. 2009, 55, 403–421. Available online: https://link.springer.com/article/10.1007/s10846–008–9297–3 (accessed on 7 March 2022). [CrossRef]

- López, J.; Pérez, D.; Paz, E.; Santana, A. WatchBot: A building maintenance and surveillance system based on autonomous robots. Robot. Auton. Syst. 2013, 61, 1559–1571. Available online: https://www.sciencedirect.com/science/article/pii/S0921889013001218 (accessed on 7 March 2022). [CrossRef]

- Siebel, N.T.; Maybank, S. The ADVISOR Visual Surveillance System. In Proceedings of the ECCV 2004 Workshop Applications of Computer Vision (ACV), Prague, Czech Republic, 11–14 May 2004; Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.158.4852&rep=rep1&type=pdf (accessed on 7 March 2022).

- Clavel, C.; Ehrette, T.; Richard, G. Events Detection for an Audio-based Surveillance System. In Proceedings of the IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6–7 July 2005; Available online: https://ieeexplore.ieee.org/abstract/document/1521669 (accessed on 7 March 2022).

- Zhang, T.; Chowdhery, A.; Bahl, P.; Jamieson, K.; Banerjee, S. The Design and Implementation of a Wireless Video Surveillance System. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking (MoviCom’15), Paris, France, 7–11 September 2015; Available online: https://dl.acm.org/doi/abs/10.1145/2789168.2790123 (accessed on 7 March 2022).

- Chun, W.H.; Papanikolopoulos, N. Robot Surveillance and Security. In Robot Surveillance and Security, 2nd ed.; Siciliano, B., Khatib, O., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 1, pp. 1605–1626. Available online: https://link.springer.com/chapter/10.1007/978–3-319–32552–1_61 (accessed on 7 March 2022).

- Wang, W.; Yuan, X.; Wu, X.; Liu, Y. Fast Image Dehazing Method Based on Linear Transformation. IEEE Trans. Multimed. 2017, 19, 1142–1155. [Google Scholar] [CrossRef]

- Ouahabi, A. (Ed.) Signal and Image Multiresolution Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2012; Available online: https://www.wiley.com/en-us/9781118568668 (accessed on 8 July 2022).

- Haneche, H.; Ouahabi, A.; Boudraa, B. New mobile communication system design for Rayleigh environments based on compressed sensing-source coding. IET Commun. 2019, 13, 2375–2385. [Google Scholar] [CrossRef]

- Mahdaoui, A.E.; Ouahabi, A.; Moulay, M.S. Image denoising using a compressive sensing approach based on regularization constraints. Sensors 2022, 22, 2199. [Google Scholar] [CrossRef] [PubMed]

- What Is Memcached? Available online: http://www.memcached.org/ (accessed on 7 March 2022).

- Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334. Available online: https://ieeexplore.ieee.org/abstract/document/888718 (accessed on 7 March 2022). [CrossRef] [Green Version]

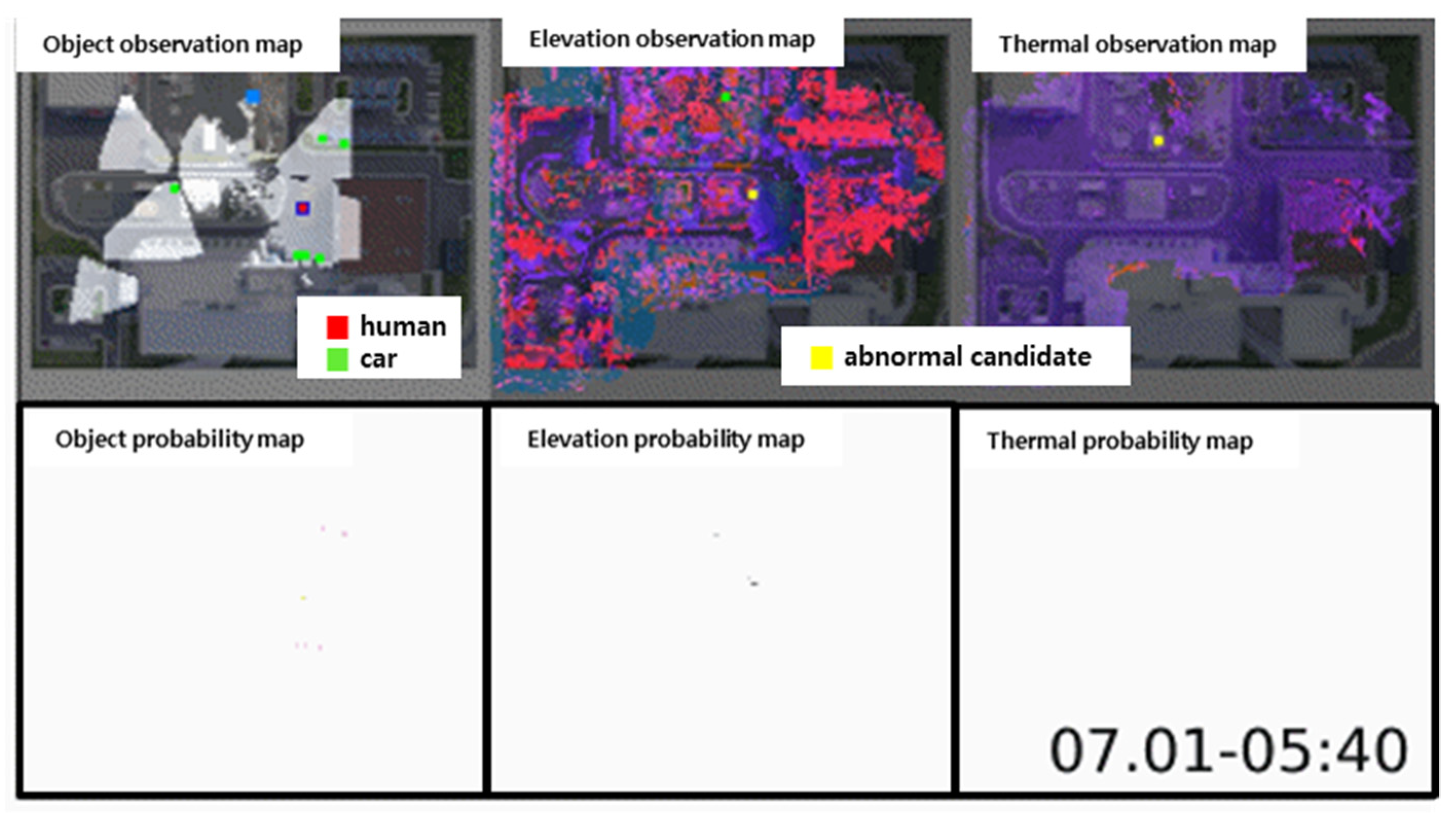

- Shin, H.; Na, K.I.; Chang, J.; Uhm, T. Multimodal layer surveillance map based anomaly detection using multi-agents for smart city security. ETRI J. 2022, 44, 183–193. Available online: https://onlinelibrary.wiley.com/doi/full/10.4218/etrij.2021–0395 (accessed on 7 March 2022). [CrossRef]

| Sensor | Field of View |
|---|---|
| RGB and Depth Camera | IR (×2): 85.2° (H) × 58° (V); RGB: 69.4° (H) × 42.5° (V) |
| Thermal Camera | 90° (H) × 69° (V) |
| Night Vision Camera | 116.8° (H) × 101.3° (V) |
| 3D LiDAR | 360° (H) × 30° (V) |
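As a worked example of reading the field-of-view table, the short sketch below computes the angular region that every sensor observes at once. It rests on an assumption the source does not state: all sensors share a single boresight and each field of view is symmetric about it. The names `FOV_DEG` and `shared_fov` are hypothetical and used only for illustration.

```python
# Field-of-view values in degrees, (horizontal, vertical), transcribed from the
# sensor table. ASSUMPTION (not from the source): every sensor's optical axis
# points in the same direction and each FOV is centred on that axis.
FOV_DEG = {
    "RGB": (69.4, 42.5),
    "Depth camera IR (x2)": (85.2, 58.0),
    "Thermal": (90.0, 69.0),
    "Night vision": (116.8, 101.3),
    "3D LiDAR": (360.0, 30.0),
}


def shared_fov(fovs):
    """Return the (horizontal, vertical) angular region seen by every sensor.

    With co-aligned, centred axes, the common region is bounded by the
    narrowest sensor along each axis independently.
    """
    horizontal = min(h for h, _ in fovs.values())
    vertical = min(v for _, v in fovs.values())
    return horizontal, vertical


if __name__ == "__main__":
    h, v = shared_fov(FOV_DEG)
    print(f"Shared FOV: {h}° (H) x {v}° (V)")
```

Under these assumptions the result is 69.4° (H) × 30.0° (V): the RGB camera bounds the shared region horizontally, while the LiDAR's 30° vertical field of view, rather than any camera, bounds it vertically.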

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Uhm, T.; Park, J.; Lee, J.; Bae, G.; Ki, G.; Choi, Y. Design of Multimodal Sensor Module for Outdoor Robot Surveillance System. Electronics 2022, 11, 2214. https://doi.org/10.3390/electronics11142214
