1. Introduction

Quantum devices controlled by gate voltages have wide-ranging applications, spanning quantum computation, spintronics and the exploration of fundamental physics [1]. An important class are spin-based quantum dot arrays, which are candidates for universal quantum computers [2]. In these devices, electrostatic forces are used to trap single electrons at discrete locations, so-called quantum dots. Finding the correct control parameters to confine the desired number of electrons (e.g., one electron on each dot) or to move single electrons between different quantum dot locations is a key challenge and a primary bottleneck in developing these devices.

In this work, we tackle the problem of controlling electron transitions between quantum dot locations. We present an algorithm that discovers the set of possible electron transitions together with the control parameters that realize them, and we demonstrate its performance on a real device. Our algorithm is based on a connection to computational geometry and phrases the optimization problem as estimating the facets of a convex polytope from measurements. While this problem is NP-hard, an approximate solution performs well; thus, our algorithm represents the first practical automatic tuning algorithm that has the prospect of accurately learning the set of state transitions in more than two dimensions and that can be scaled beyond 2 or 3 quantum dots on real devices. We demonstrate its practicality on a simulated device with four quantum dots as well as on a real device with four quantum dots (three qubit dots plus one sensor dot), for which a full discovery of all transitions is already beyond human tuning capabilities. Our contributions are the following:

We develop an algorithm that estimates a convex polytope from measurements, aiming for a sparse approximate solution with as few facets as possible. For this, we extend the non-convex large-margin approach introduced in [3].

We prove a lemma that can be used to test the correctness of a learned polytope from measurements. Our result leads to an active learning scheme that iteratively improves the polytope estimate by adding informative samples that systematically disprove the previous solution.

We show applicability of our algorithm on a real quantum dot array, specifically a foundry-fabricated silicon device that is currently being developed for spin qubit applications.

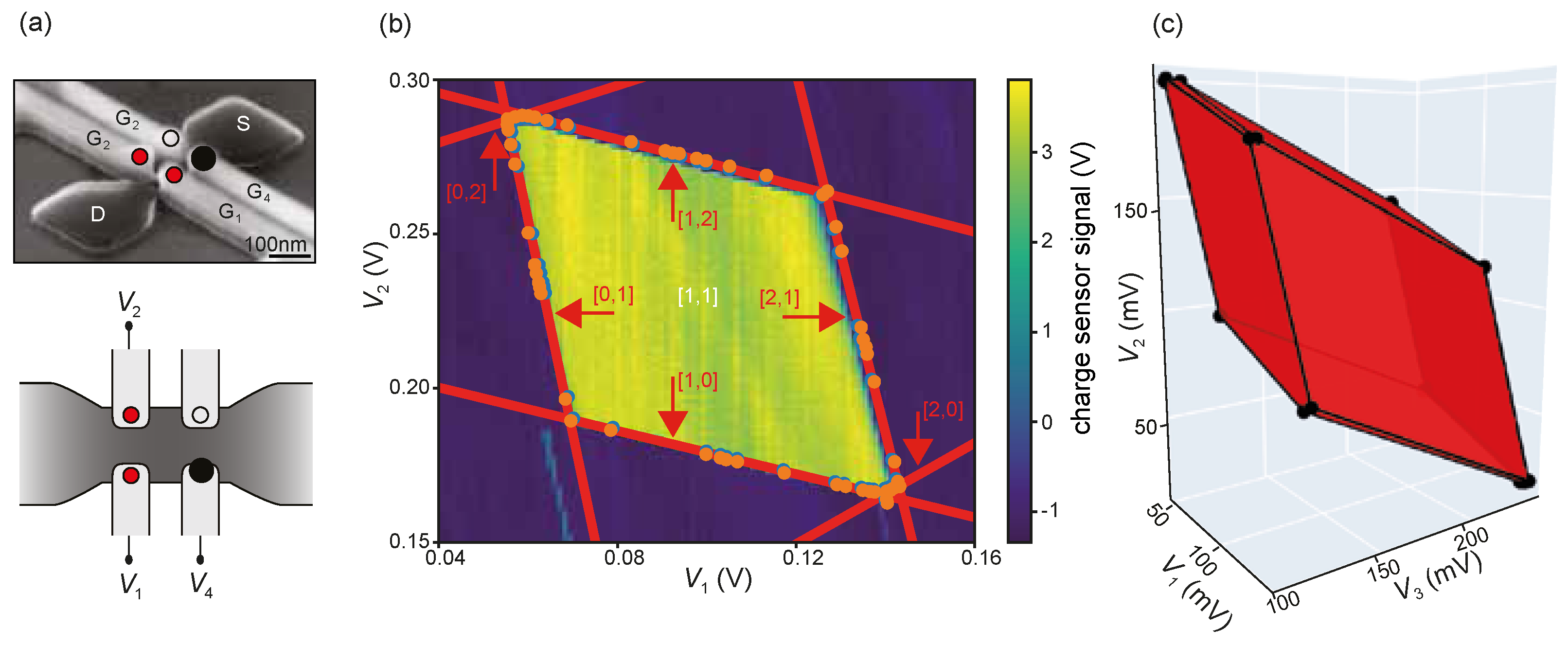

In this work, we target capacitively coupled quantum dot arrays such as the one depicted in Figure 1a (see also [4]). In this device, each gate electrode can create a quantum dot below it for appropriately chosen gate voltages. We refer to the vector of electron occupations on the dots as the state of the device. Due to the weak tunnel coupling between dots, the ground state of the device is essentially determined by classical physics and is described well by the constant interaction model [5]. In this model, the set of control parameters associated with a specific ground state forms a convex polytope. Its boundary is formed by the intersection of linear boundaries, each representing a transition to another state [1]. With this polytope, the control task can be solved directly by selecting a control parameter path that leaves the current state through the desired state transition into the neighbouring target state. This makes it possible to program core operations in the array. For example, to entangle the spins of two electrons on neighbouring dots via Heisenberg exchange coupling [6], an experimenter must identify the transition that moves both electrons onto the same dot and then pick a trajectory through this transition and back to the original state. Concretely, in a linear device with three quantum dots and one electron on each dot (i.e., a device in state [1, 1, 1]), entangling the middle electron with its neighbours requires finding the transitions to states [0, 2, 1] and [1, 2, 0], or alternatively [2, 0, 1] and [1, 0, 2].
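To make the link between ground states and polytopes concrete, the following sketch assumes a simplified energy E(n, v) = ½ nᵀHn − nᵀGv, where n is the occupation vector, v the gate voltages, and H and G hypothetical device matrices; the constant interaction model takes this form after dropping terms that do not depend on n. Requiring E(n, v) ≤ E(m, v) for a competing state m gives (m − n)ᵀGv ≤ ½(mᵀHm − nᵀHn), which is linear in v, so the region where n wins is an intersection of halfspaces. Restricting the comparison to the elementary neighbour states produced by the first helper is an additional simplification (in principle every other state contributes an inequality, most of them redundant), and both function names are illustrative only.

```python
import numpy as np


def neighbour_states(n):
    """States reachable from occupation vector n by one elementary transition.

    Hypothetical helper: adds/removes one electron on a single dot, or moves one
    electron between two adjacent dots of a linear array.
    """
    n = tuple(n)
    out = set()
    for i in range(len(n)):
        for delta in (-1, 1):                  # an electron leaves or enters dot i
            m = list(n)
            m[i] += delta
            if m[i] >= 0:
                out.add(tuple(m))
    for i in range(len(n) - 1):                # inter-dot moves between dots i and i + 1
        for src, dst in ((i, i + 1), (i + 1, i)):
            if n[src] > 0:
                m = list(n)
                m[src] -= 1
                m[dst] += 1
                out.add(tuple(m))
    return sorted(out)


def state_halfspaces(n, H, G, neighbours):
    """Rows of A v <= b on which E(n, v) <= E(m, v) for every neighbour m.

    Assumes the toy energy E(n, v) = 0.5 * n^T H n - n^T G v, i.e. terms that do not
    depend on n have been dropped; H and G are hypothetical device matrices.
    """
    n = np.asarray(n, dtype=float)
    A, b = [], []
    for m in neighbours:
        m = np.asarray(m, dtype=float)
        A.append(G.T @ (m - n))                # (m - n)^T G v <= ...
        b.append(0.5 * (m @ H @ m - n @ H @ n))
    return np.array(A), np.array(b)
```

The inter-dot moves from [1, 1, 1] (the neighbours that keep the total electron number at 3) are exactly the four states [0, 2, 1], [2, 0, 1], [1, 0, 2] and [1, 2, 0] named above.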

Unfortunately, due to manufacturing imprecision, the parameters of the constant interaction model are unknown, and nonlinear effects lead to small deviations from the model, even though the ground-state regions of weakly coupled quantum dot arrays typically still resemble convex polytopes. Thus, each manufactured device must be tuned individually to find the correct control parameters.

The problem of finding the control parameters becomes daunting in larger devices, as each added dot requires at least one additional gate electrode with its own control parameter. The qubit-qubit connectivity required for quantum simulation and quantum computing also constrains the geometrical layout of the quantum dots and their gate electrodes on a chip: instead of individual isolated qubit dots with dedicated sensor dots, the current trend is to fabricate dense arrays of coupled qubit dots that are monitored by as few proximal sensor dots as possible. This complicates the tune-up in several ways. First, individual gate voltages no longer act locally, meaning that changing the gate voltage of one qubit also affects the potential of other, nearby qubits. Second, the signal of each sensor dot responds to charge changes on multiple dots, making it more difficult to determine which of the dots is making a transition. For example, in our device, a single charge sensor monitors all quantum dots, which means that its signal must be interpreted and does not directly relate to individual electron transitions [7].

Currently, experts find the correct control parameters manually using ad-hoc tuning protocols [8, 9], which are tedious and time-consuming. In practice, this limits manual tuning to at most three control parameters at once. The field has made first steps towards automating parts of this process. For the problem of automating state identification, i.e., estimating the electron count on each quantum dot for a given set of control parameters, estimation algorithms can be grouped into two main directions. The first direction uses CNN-based approaches [10, 11], which require dense rastering of image planes within the parameter space. The second direction uses line searches to obtain a dataset of state transitions, which are modeled using deep neural networks [12, 13, 14]; this allows human experts to label the discovered regions. Both approaches have only been applied to devices with at most two quantum dots and at most three tunable parameters.

While promising, these approaches are not easily transferred to the task of finding state transitions. Although a perfect model for state identification could also be used to find state transitions, it is difficult to reach the required accuracy around the boundaries. As the important transitions are often very small, high-resolution measurements are required to find them. However, obtaining a dataset with the required resolution in higher dimensions is very expensive. For the line-search-based approaches mentioned above, it has been shown [15] that for a naive measurement design without any knowledge of the position of transitions, the number of line searches required to correctly identify all transitions of a state increases exponentially with the dimensionality. The key difficulty lies in obtaining a grid of measurements fine enough to place sufficiently many points on the smallest facets of the polytope to estimate their slopes. Since the hyperareas of facets can vary significantly (see Figure 1c), the grid must be very fine to reliably discover all facets of interest.

Even if enough data is available, evaluating the learned model for the task of finding state transitions is difficult. Typical machine-learning methodology focuses on measuring accuracy, e.g., the fraction of correctly classified points in the dataset. However, the effect of a missing facet on the accuracy can be very small. Indeed, in our approach, we obtain measures of correctly classified volume (as measured by intersection over union, IoU) above 99.9%, while the learned polytopes can still contain significant errors in the boundaries.
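A toy calculation (not taken from our experiments) illustrates why IoU is insensitive to missing facets. Let the true polygon P be the unit square with one corner cut off by a short facet, and let the estimate P̂ be the full square, i.e., it misses that facet. The removed corner is a right triangle with legs of length ε:

```latex
P = [0,1]^2 \cap \{ v : v_1 + v_2 \le 2 - \varepsilon \}, \qquad \hat{P} = [0,1]^2 \\
\mathrm{IoU}(P, \hat{P}) = \frac{\operatorname{vol}(P \cap \hat{P})}{\operatorname{vol}(P \cup \hat{P})}
  = \frac{1 - \varepsilon^2/2}{1} = 1 - \frac{\varepsilon^2}{2} \\
\varepsilon = 0.01 \;\Rightarrow\; \mathrm{IoU} = 0.99995
```

A missing facet of length ε√2 therefore costs only ε²/2 in IoU, which for ε = 0.01 stays well above the 99.9% level quoted above.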

The use of deep neural networks adds another challenge, as these models are difficult to interpret. Even if the model learns to correctly classify the boundaries between states, it does not directly answer whether a transition between two states exists or what the position and size of the transition are. Thus, the experimenter would still have to search for the desired transition in the learned model.

In this work, we address the difficult tuning step of finding the transitions after the initial tuning of the barrier gates and the sensor dots. We assume that the experimenter has already found a point inside a state of interest and needs to find the exact location of the transitions to neighbouring states. We do not assume that the experimenter knows which transitions exist, but we assume that the experimenter can correctly identify and label any detected transition. Further, we require that polytopes have finite volume in gate-voltage space. This means that either all dots are occupied by at least one electron, or the voltage space is bounded from below. This is not a limitation in practice, as lower bounds are typically imposed by the device software to protect the device from damage.

While our model relies on weakly tunnel-coupled quantum dots to ensure that the ground states form convex polytopes, we do not require that the device follows the constant interaction model exactly. For example, in the constant interaction model, the polytopes for states [1, 1, 1] and [2, 3, 1] are just translated versions of each other. This does not hold even in well-behaved real devices, and our model does not assume it.

Inspired by the aforementioned line search based approaches, we use line searches to obtain an initial dataset, which is iteratively improved upon.

We combine this with an interpretable model of the polytope that learns individual state transitions. In line with the device limitations and measurement challenges outlined above, we only assume that the sensor reliably detects that a state transition occurred, but not which state the device transitioned to. Using such a sensor, it is possible to locate a state transition in control parameter space with a line search starting from a point within the convex polytope of a state. The resulting line search brackets the position of a state transition by a pair of control parameter points, one inside and one outside of the (unknown) polytope. This allows for high-precision measurements and locates the boundary within a margin that is a tunable parameter, chosen based on the trade-off between line search precision and short measurement times.
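The bracketing step can be sketched as a bisection along a ray, under stated assumptions: `state_changed` is a hypothetical oracle wrapping the sensor that returns True once the device has left the initial state, and `t_max` is a step length known to end outside the polytope. Because the polytope is convex, a ray from an interior point leaves it exactly once, so the binary sensor signal is monotone along the ray and bisection is valid. A hardware implementation may sweep the voltages instead; this only illustrates the bracketing guarantee.

```python
import numpy as np


def line_search(state_changed, x0, direction, t_max, margin):
    """Bracket the first state transition along x0 + t * direction by bisection.

    state_changed(x) is a hypothetical sensor oracle: True if the device at x is no
    longer in the state it had at x0. Only this binary signal is used, matching the
    assumption that the sensor detects *that* a transition happened, not which one.
    Assumes x0 is inside the polytope and margin > 0.
    Returns (x_in, x_out) with ||x_out - x_in|| <= margin.
    """
    x0 = np.asarray(x0, dtype=float)
    u = np.asarray(direction, dtype=float)
    u = u / np.linalg.norm(u)
    lo, hi = 0.0, float(t_max)                 # invariant: lo inside, hi outside
    if not state_changed(x0 + hi * u):
        raise ValueError("no transition found within t_max")
    while hi - lo > margin:
        mid = 0.5 * (lo + hi)
        if state_changed(x0 + mid * u):
            hi = mid
        else:
            lo = mid
    return x0 + lo * u, x0 + hi * u
```

Each halving costs one measurement, so reaching a margin of `margin` from an initial bracket of length `t_max` takes about log2(t_max / margin) sensor queries, which makes the precision/measurement-time trade-off explicit.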

Under the conditions outlined above, finding the true polytope from measurements is a hard machine learning task. Even if all parameters of the constant interaction model are known, computing the boundary of the polytope requires solving the halfspace intersection problem [16], with the number of intersections exponential in the number of quantum dots. Indeed, even finding the device state for a set of known parameters of the constant interaction model requires solving an integer quadratic program, which is NP-hard in the number of quantum dots [17]. Thus, it is unlikely that learning the polytope from measurements is substantially easier. In fact, given a training set of points labeled according to whether they lie inside or outside the polytope, finding the optimal large-margin polytope is NP-hard (see [3] for a review of recent results). When the task is relaxed to allow a polytope with more facets than the optimal one, there exists a polynomial-time algorithm that fits ℓ points to a polytope with at most a bounded factor more facets than the optimal polytope and with strong approximation guarantees [3]. However, its algorithmic complexity in our setting is too large for practical application. Allowing even more facets makes the task substantially easier: e.g., by adding one facet for each point outside the polytope, the task can be solved with one classification task per outside point, each separating that point from all points inside the polytope. Practical algorithms therefore forgo finding the optimal number of facets and use an approximate polynomial-time solver [18, 19]. However, there is a lack of numerically stable, general-purpose optimization algorithms that aim for a minimal number of facets and that go beyond convergence in a volume metric (for example, the Hausdorff distance in [19]). This is especially problematic in our task, as each facet of the unknown polytope constitutes an operational resource (the relocation of individual electrons in the array). An estimate with too many (unphysical) facets might make it impractical to filter out the relevant ones. Our approach is similar to [3] in that we repeatedly solve convex relaxations of a large-margin classification problem. In contrast to the related work, we use a numerically stable second-order solver instead of stochastic gradient descent and design the problem such that it produces solutions with as few facets as possible. Random perturbations of the solutions found allow the discovery of different local optima, from which we choose the best.

Our machine learning task goes beyond pure polytope estimation from measurements, as our goal is not only to find the optimal polytope for a fixed set of points, but a polytope that generalizes well and accurately reflects the true polytope with all its facets. This is difficult to achieve with an independent and identically distributed dataset, as facets with small surface area are unlikely to be found by naive random sampling. Instead, a scheme is required that actively improves our dataset and ensures that we find all relevant facets that are detectable by our hardware. We base our scheme on a theoretical result that can be used to assess whether two polytopes are the same. Intuitively, we must perform validation measurements on each facet to show that it is a facet of the true polytope, but we must also search for small (still undetected) facets that might be hiding near vertices of the current polytope. The resulting algorithm performs line searches in the directions of the vertices and through each facet of our current best estimate of the polytope. If our polytope is correct, all state transitions of our model will be contained between the point pairs of the performed line searches. Otherwise, we obtain new points, which we add to our dataset before fitting a new convex polytope. This process is repeated until we either run out of computation time or all performed line searches agree with the model. The details of our algorithm are given in the methods section.
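One way to generate the validation queries described above is sketched below, under assumptions: the current estimate is stored as {x : Ax ≤ b}, SciPy's Qhull wrapper computes its vertices, and "through each facet" is interpreted as aiming at the centroid of the facet's vertices. The actual choice of query points in our method may differ, and the function names are hypothetical.

```python
import numpy as np
from scipy.spatial import HalfspaceIntersection


def validation_directions(A, b, interior_point, tol=1e-7):
    """Unit directions from interior_point towards each vertex and each facet of {x : A x <= b}.

    A facet is targeted through the centroid of the vertices lying on its hyperplane.
    Assumes the polytope is bounded and interior_point lies strictly inside it.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    c = np.asarray(interior_point, dtype=float)
    # SciPy expects rows [A_j, -b_j], meaning A_j . x - b_j <= 0.
    vertices = HalfspaceIntersection(np.hstack([A, -b[:, None]]), c).intersections
    targets = list(vertices)
    for a_j, b_j in zip(A, b):
        on_facet = np.abs(vertices @ a_j - b_j) <= tol * max(1.0, abs(b_j))
        if on_facet.any():                          # skip rows that do not touch the polytope
            targets.append(vertices[on_facet].mean(axis=0))
    dirs = np.array(targets) - c
    return dirs / np.linalg.norm(dirs, axis=1, keepdims=True)


def pair_is_consistent(A, b, x_in, x_out):
    """True if the model puts x_in inside and x_out outside; False means the pair disproves it."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    inside = np.all(A @ np.asarray(x_in, dtype=float) <= b)
    outside = np.any(A @ np.asarray(x_out, dtype=float) > b)
    return bool(inside and outside)
```

Each direction would be passed to a line search; any returned pair for which `pair_is_consistent` is False contradicts the current estimate and is added to the dataset before refitting.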

To investigate the performance and quality of our algorithm, we test it on three different setups: two simulated problems in 3 or 4 dimensions, based either on a device simulation or on constructed polytopes from Voronoi regions, and, as a third application, the real device shown in Figure 1a, where we activate two qubit dots (and the sensor dot) and let human experts verify the result. We further apply the algorithm to the same device with all three qubit dots activated; however, in this case no ground truth is available for comparison. In all simulated experiments, we compare our algorithm to an idealized baseline algorithm that additionally has access to the exact state information while estimating the polytope from measurements and otherwise uses the same active learning protocol. This baseline assumes a more powerful device whose sensor signal provides exact state information. (In principle, such a device can be realized by careful design of the charge sensor.) This idealized algorithm is useful to separate the impact of our active learning scheme from the impact of approximately solving the NP-hard estimation problem.

The structure of the paper is as follows: we begin by defining the notation of our paper. In Section 2.2, we introduce the constant interaction model, which links polytope estimation and charge transitions in quantum dot devices. In Section 2.3, we describe the main algorithm by first introducing a meta-algorithm for active learning, followed by a description of our algorithm for fitting a polytope to measurements. We describe our experiments in Section 3, present our results in Section 4, and end with a discussion and conclusion in Section 5.

3. Experiments

We implemented our algorithm by solving problem (7) with cvxpy [23, 24] using the ECOS solver for SOCP problems [25]. We compute halfspace intersections, convex hulls and their volumes using the Qhull library [16]. To save computation time, we include a new point pair in the training dataset only if the dataset does not already contain a sufficiently similar pair. The algorithm terminates when the distance between the sampled points and the boundary of the polytope is smaller than a prescribed tolerance. To assess the performance of our algorithm, we compare our approach, where possible, to an algorithm that has perfect information about which point pair is cut by which hyperplane. For this, we additionally compute the state of each point outside the polytope, set the number of hyperplanes to the number of different observed states, and solve (7) using the resulting assignment. This way, the problem becomes convex and can be solved efficiently. We refer to this algorithm as our baseline.
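Why the known assignment makes the problem convex can be illustrated with a simplified stand-in, which is not problem (7) itself: once every outside point carries a label saying which transition it belongs to, the fit decouples into one independent linear program per label. The sketch uses SciPy's `linprog` with a hard margin and an L1 norm on the normal vector, whereas our implementation solves an SOCP with cvxpy and ECOS; the function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def fit_facet(inside, outside_k):
    """Find (w, c) with w.x <= c - 1 on all inside points and w.y >= c + 1 on outside_k.

    Minimises ||w||_1, which maximises the gap between the two point sets measured
    in the dual (max) norm. Returns None if no separating hyperplane exists.
    """
    X_in = np.asarray(inside, dtype=float)
    X_out = np.asarray(outside_k, dtype=float)
    d = X_in.shape[1]
    # Decision variables z = [w (d entries), c (1 entry), s (d entries)], with |w_i| <= s_i.
    cost = np.concatenate([np.zeros(d + 1), np.ones(d)])
    rows, rhs = [], []
    for x in X_in:                                   # w.x - c <= -1
        rows.append(np.concatenate([x, [-1.0], np.zeros(d)]))
        rhs.append(-1.0)
    for y in X_out:                                  # -w.y + c <= -1
        rows.append(np.concatenate([-y, [1.0], np.zeros(d)]))
        rhs.append(-1.0)
    eye = np.eye(d)
    for i in range(d):                               # w_i - s_i <= 0 and -w_i - s_i <= 0
        rows.append(np.concatenate([eye[i], [0.0], -eye[i]]))
        rhs.append(0.0)
        rows.append(np.concatenate([-eye[i], [0.0], -eye[i]]))
        rhs.append(0.0)
    res = linprog(cost, A_ub=np.array(rows), b_ub=np.array(rhs),
                  bounds=[(None, None)] * (2 * d + 1))
    if res.status != 0:
        return None
    return res.x[:d], res.x[d]


def fit_known_assignment(inside, outside, labels):
    """Fit one facet per distinct label; labels[i] says which transition outside[i] belongs to."""
    outside = np.asarray(outside, dtype=float)
    facets = {}
    for k in sorted(set(labels)):
        mask = np.array([label == k for label in labels])
        facets[k] = fit_facet(inside, outside[mask])
    return facets
```

Giving every outside point its own label reproduces the trivial construction from the introduction, with one facet (and one classification task) per outside point.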

To quantify the quality of the obtained convex polytopes, we define a matching error between the set of facets of the ground-truth polytope and the set of facets of the estimated polytope. For this, we compute, for each ground-truth facet, the minimum angle between its normal and the normals of all estimated facets, and we report an error if no estimated facet has an angle smaller than 10 degrees. The matching error is the number of ground-truth facets without such a match. This is a sufficient metric for assessing the closeness of the facets: as the stopping condition of the algorithm already ensures that the estimated polytope is very similar to the true polytope in terms of intersection over union, only the directions of the facet hyperplanes and their number need to be assessed here. To verify this claim, we also compute the intersection over union (IoU) between the estimated and the ground-truth polytopes, i.e., the volume of their intersection divided by the volume of their union.
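Both metrics can be sketched in a few lines, assuming each polytope is stored as {x : Ax ≤ b} with outward-pointing normals as the rows of A. The matching error counts ground-truth facets with no estimated normal within 10 degrees; the IoU uses Qhull (through SciPy) for the volumes, with the intersection obtained by stacking both sets of halfspaces. This is an illustrative sketch, not our evaluation code, and it assumes a point strictly inside both polytopes is available.

```python
import numpy as np
from scipy.spatial import ConvexHull, HalfspaceIntersection


def matching_errors(true_normals, est_normals, max_angle_deg=10.0):
    """Count ground-truth facets whose normal has no estimated normal within max_angle_deg."""
    T = np.asarray(true_normals, dtype=float)
    E = np.asarray(est_normals, dtype=float)
    T = T / np.linalg.norm(T, axis=1, keepdims=True)
    E = E / np.linalg.norm(E, axis=1, keepdims=True)
    cosines = np.clip(T @ E.T, -1.0, 1.0)             # shape (n_true, n_est)
    min_angles = np.degrees(np.arccos(cosines.max(axis=1)))
    return int(np.sum(min_angles >= max_angle_deg))


def polytope_volume(A, b, interior_point):
    """Volume of the bounded polytope {x : A x <= b} computed with Qhull."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    hs = HalfspaceIntersection(np.hstack([A, -b[:, None]]), np.asarray(interior_point, dtype=float))
    return ConvexHull(hs.intersections).volume


def iou(A1, b1, A2, b2, interior_point):
    """IoU of two polytopes; interior_point must lie strictly inside both."""
    v1 = polytope_volume(A1, b1, interior_point)
    v2 = polytope_volume(A2, b2, interior_point)
    # The intersection of two H-polytopes is described by the union of their halfspaces.
    v12 = polytope_volume(np.vstack([A1, A2]), np.concatenate([b1, b2]), interior_point)
    return v12 / (v1 + v2 - v12)
```

For two unit squares offset by half a side length in both coordinates, the intersection has area 0.25 and the union 1.75, so the IoU is 1/7.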

To test our algorithm, we devise three experiments: two simulated problems as well as one application on a real device. As application, we consider the device shown in Figure 1a, consisting of a narrow silicon nanowire through which electrons can flow from source (S) to drain (D). Four gate electrodes are capacitively coupled to the nanowire, and under correct tuning of their voltages, quantum dots are formed that confine single electrons in small regions (tens of nanometers) of the nanowire. One of the gate electrodes is connected to a high-frequency circuit and produces a sensor signal that responds very quickly to any rearrangement of electrons inside the nanowire [4]. This sensor signal remains constant under voltage changes (line searches) of the other three gate electrodes, as long as the state does not change. Experimentally, this is accomplished by compensating the sensor gate voltage for any voltage changes applied to the three control gates, such that the potential of the sensor dot is independent of the three control voltages. This requires estimating the coefficients of a linear compensation function, which was done once before running the algorithm. As soon as the charge state changes, i.e., a state boundary is encountered, the sensor signal drops and generates one point pair. The device in Figure 1a can create up to four quantum dots. We applied our algorithm to a state with dot 1 and dot 2 each occupied by one electron, and used the two corresponding gate voltages as control parameters for this configuration. (Dot 3 is kept empty by keeping its gate voltage fixed at a sufficiently negative value.) We repeated this experiment with the device tuned such that an electron also occupies dot 3, and estimated the polytope using all three gate voltages as control parameters in this configuration.
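The compensation step can be sketched as follows, assuming the sensor-dot potential responds linearly to all gate voltages and that calibration data consist of control-gate steps paired with the sensor-gate shift needed to restore the sensor's operating point. This calibration procedure and the function names are assumptions for illustration; the text above only states that the coefficients of a linear compensation function were estimated once before running the algorithm.

```python
import numpy as np


def fit_compensation(delta_ctrl, delta_sensor):
    """Least-squares coefficients alpha with delta_sensor approximately equal to delta_ctrl @ alpha.

    delta_ctrl: (n_steps, n_gates) control-gate steps applied during calibration.
    delta_sensor: (n_steps,) sensor-gate shifts that restored the sensor operating point.
    """
    alpha, *_ = np.linalg.lstsq(np.asarray(delta_ctrl, dtype=float),
                                np.asarray(delta_sensor, dtype=float), rcond=None)
    return alpha


def compensated_sensor_voltage(v_sensor_ref, v_ctrl, v_ctrl_ref, alpha):
    """Sensor-gate voltage that keeps the sensor-dot potential fixed as the control gates move."""
    step = np.asarray(v_ctrl, dtype=float) - np.asarray(v_ctrl_ref, dtype=float)
    return v_sensor_ref + step @ alpha
```

During a line search, the sensor gate would then be set to `compensated_sensor_voltage(...)` at every step, so that a drop in the sensor signal can be attributed to a charge transition rather than to cross-talk from the control gates.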

For the simulated experiments, we implement a line search that returns point pairs bracketing the boundary up to a given precision, which we vary across the experiments to measure the impact of the quality of the line search. As our first simulated problem, we generate a set of polytopes via Voronoi regions. For this, we sample 30 points from a d-dimensional normal distribution with zero mean and a diagonal covariance matrix. For each set, we compute a Voronoi tessellation. We discard the sample if the origin is not inside a closed Voronoi region or if this region extends outside a predefined bounding set. On this problem, we can use the Voronoi region around the origin as ground truth. This way, we obtain 100 convex polytopes with between 6 and 12 facets. For the baseline algorithm, we use the index of the closest sample point as the state, i.e., each Voronoi region is its own state.
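The Voronoi ground truth can be generated directly in halfspace form: the cell containing the origin belongs to the sample point closest to it, and every other sample point contributes one bisector halfspace. In this sketch, the bounding set is replaced by a hypothetical cube [−box, box]^d, and clipping with that cube doubles as the boundedness check; the sampling scale and the function name are left as assumptions.

```python
import numpy as np
from scipy.spatial import HalfspaceIntersection


def origin_voronoi_region(points, box=1.0, tol=1e-9):
    """Voronoi cell containing the origin as {x : A x <= b}, or None if it should be discarded.

    The cell belongs to the sample point closest to the origin (its index is the
    baseline's state label). The cube [-box, box]^d is a hypothetical stand-in for the
    bounding set: the cell is discarded if it is unbounded or not contained in the cube.
    Returned rows may be redundant.
    """
    P = np.asarray(points, dtype=float)
    d = P.shape[1]
    k = int(np.argmin(np.linalg.norm(P, axis=1)))
    others = np.delete(P, k, axis=0)
    # ||x - p_k|| <= ||x - p_j||  <=>  2 (p_j - p_k) . x <= ||p_j||^2 - ||p_k||^2
    A = 2.0 * (others - P[k])
    b = np.sum(others**2, axis=1) - np.sum(P[k] ** 2)
    # Clip with the cube so that Qhull always works on a bounded region.
    A_all = np.vstack([A, np.eye(d), -np.eye(d)])
    b_all = np.concatenate([b, np.full(2 * d, float(box))])
    V = HalfspaceIntersection(np.hstack([A_all, -b_all[:, None]]), np.zeros(d)).intersections
    if np.any(np.abs(V) >= box - tol):              # a cube face is active: the cell leaves the cube
        return None
    return A, b
```

A generator loop would call this on fresh samples, e.g. `np.random.default_rng(seed).normal(scale=..., size=(30, d))`, until 100 non-discarded cells are collected.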

As our second problem, we simulate a quantum dot array with 3 or 4 qubit dots using the constant interaction model. For simplicity, we omit the additional sensor dot that would normally sense the charge transitions within these triple- and quadruple-dot devices. Specifically, we generate 100 different device simulations by choosing one set of realistic device capacitances and adding noise to simulate variations in device manufacturing. To be able to use similar tuning parameters as in the Voronoi experiment, we rescale the parameter space by a factor of 100 so that the polytopes cover roughly the same volume. As the ground-truth polytope for each simulated device, we compute the region in control-voltage space whose ground state has a [1, 1, 1] or [1, 1, 1, 1] charge configuration, i.e., exactly one electron per dot. These calculations yield 14 facets for all simulated triple-dot devices and 30 facets for all simulated quadruple-dot devices. Errors made by the algorithm in finding the correct facets are discussed below.

As parameters, we chose in all experiments , and . In the simulated experiments, we vary , choose and . For the baseline algorithm, we use the same parameters except . We obtain the initial dataset X by performing 100 line searches in random directions starting from a known point inside P (e.g., the origin for the Voronoi region based dataset). For the real device, we implemented a line search in hardware using a DAC voltage generator. Instead of using the single point returned by the hardware, we add a confidence interval with , which takes into account the experimental measurement uncertainty. This choice of is equivalent to the setting in the simulated device on the re-scaled parameters. Further, we pick and . The initial dataset was obtained via line searches using 10 random directions.
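The two data-collection details in this paragraph can be sketched as follows, under assumptions: random directions are drawn uniformly on the unit sphere (the sampling distribution is not specified above), and the single boundary point returned by the hardware is widened symmetrically along the search direction by a hypothetical half-width `delta`, standing in for the confidence interval.

```python
import numpy as np


def random_directions(n, d, rng):
    """n unit vectors distributed uniformly on the sphere in R^d (normalised Gaussian draws)."""
    u = rng.standard_normal((n, d))
    return u / np.linalg.norm(u, axis=1, keepdims=True)


def pair_from_boundary_point(x_boundary, direction, delta):
    """Widen one hardware boundary estimate into an (inside, outside) pair of half-width delta."""
    x = np.asarray(x_boundary, dtype=float)
    u = np.asarray(direction, dtype=float)
    u = u / np.linalg.norm(u)
    return x - delta * u, x + delta * u
```

Normalising Gaussian samples is a standard way to obtain rotation-invariant directions, so no coordinate axis is favoured in the initial dataset.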

4. Results

In our experiments, we first investigated how close the learned polytope is to the true polytope on the simulated devices, depending on the line search accuracy. For this, we computed the intersection over union of true and estimated polytopes and found expected values between 0.995 and 0.9996 across the tested line search precisions, both very close to the optimum of 1. We observed neither relevant differences to the baseline algorithm nor large differences between 3 and 4 dimensions. We then measured how many of the linear decision functions of the ground-truth polytope were not approximated well by the estimated polytope. The number of matching errors on the two simulated datasets as a function of the line search precision is shown in Figure 2. For both datasets, the error increased as the line search became less precise, with a far steeper increase for the simulated devices than for the Voronoi regions. For the most precise line searches, we observed fewer than 0.1 matching errors on average, which increased to 4.5 for a coarser line search in 4D. Here, the baseline algorithm performed better.

To investigate the nature of the errors, we computed the hypervolume of each state transition as a measure of the size of the corresponding facet of the polytope. The histograms of volumes as well as the relative distributions of errors are shown in Figure 3. As can be seen from the figure, most errors appeared on facets with small volume. Further, we observed that facets with small volumes were estimated more reliably as the line search accuracy improved. To give a more qualitative impression of the observed errors, we visualized two examples by creating random cuts through the 4D parameter space of the simulated device such that an unmatched facet of the ground truth is visible within the cut. See Figure 4 for these common examples, extracted from a problem instance with 4 errors.
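The facet size used here can be computed from the vertex representation: collect the vertices lying on each facet hyperplane, express them in an orthonormal basis of that hyperplane, and take the (d−1)-dimensional convex-hull volume. This sketch assumes an {x : Ax ≤ b} representation and SciPy's Qhull bindings; it is not necessarily how the facet volumes in Figure 3 were computed.

```python
import numpy as np
from scipy.spatial import ConvexHull, HalfspaceIntersection


def facet_volumes(A, b, interior_point, tol=1e-7):
    """(d-1)-dimensional volume of every facet of {x : A x <= b}; 0 for rows that are not facets."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    V = HalfspaceIntersection(np.hstack([A, -b[:, None]]),
                              np.asarray(interior_point, dtype=float)).intersections
    d = A.shape[1]
    vols = np.zeros(len(A))
    for j, (a, b_j) in enumerate(zip(A, b)):
        F = V[np.abs(V @ a - b_j) <= tol * max(1.0, abs(b_j))]
        if len(F) < d:
            continue                                  # row touches at most a lower-dimensional face
        # Rows 1..d-1 of Vh from the SVD of a span the hyperplane orthogonal to a.
        basis = np.linalg.svd(a[None, :])[2][1:]
        coords = (F - F.mean(axis=0)) @ basis.T       # facet vertices in (d-1)-dim coordinates
        vols[j] = np.ptp(coords[:, 0]) if d == 2 else ConvexHull(coords).volume
    return vols
```

In 2D, the facets are segments, so their length is taken directly, because Qhull cannot build a hull of one-dimensional data.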

For the real device, the model obtained by our algorithm is shown in red in Figure 1. In 2D, human experts verified the correctness of the state transitions and annotated the resulting states, based on a dense 2D raster scan shown in the background of Figure 1b [4]. The algorithm found two very small facets that are invisible on the raster scan. In 3D, no ground truth was available, but qualitatively the 10 facets found by the algorithm (six large ones and two pairs of thin slabs in Figure 1c) are in line with how the experts understand this device: the six large transitions correspond to adding or removing one electron from each qubit dot, whereas the two pairs of smaller facets correspond to moving an electron between dot 1 and dot 2, or between dot 2 and dot 3. We further verified in simulations of the constant interaction model, including a simulation of the sensor dot, that our polytope is consistent with specific choices of the device parameters.

Finally, we investigated the run time and convergence of the algorithm. We measured the convergence speed in terms of the imprecision of the fit as measured by our stopping criterion, i.e., the maximum distance of a point in the training set to the boundary of the polytope estimated from that dataset. If this distance is smaller than the termination tolerance, the algorithm terminates. To better understand the expected behaviour, we define a number of precision targets, geometrically spaced between 10 and the termination tolerance. After each iteration, we record how many precision targets have been reached, together with the size of the dataset. In Figure 5, we report, averaged over all trials, the fraction of targets reached after a given number of line searches, as well as the number of line searches needed for 75% of runs to terminate (i.e., reach all targets). In all cases, 75% of runs terminate within 300 (3D) and 1300 (4D) line searches, with approximately 95% of the total targets reached. However, our results also show that the remaining 25% of runs can take a long time to reach the final targets. This behaviour does not seem to be influenced by the choice of the line search precision.
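The bookkeeping behind these convergence curves can be sketched as follows, assuming the stopping-criterion value (the maximum point-to-boundary distance) is logged after each iteration together with the dataset size. The number of targets and the rule that a target stays reached once it has been met are assumptions of this sketch.

```python
import numpy as np


def targets_reached(history, final_tol, start=10.0, n_targets=10):
    """Fraction of geometrically spaced precision targets reached after each iteration.

    history: (dataset_size, max_distance) recorded after every iteration, where
    max_distance is the stopping-criterion value. Targets run from `start` down to
    `final_tol`; reaching the last one corresponds to termination. A target is counted
    as reached once it has been met (running minimum), an assumption of this sketch.
    """
    targets = np.geomspace(start, final_tol, n_targets)
    sizes = np.array([size for size, _ in history])
    dists = np.array([dist for _, dist in history], dtype=float)
    best = np.minimum.accumulate(dists)
    fractions = (best[:, None] <= targets[None, :]).mean(axis=1)
    return sizes, fractions
```

Averaging `fractions` over runs at matching dataset sizes gives a curve of the kind reported in Figure 5.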

The run time of the algorithm for the real device (including line searches) was less than a minute for two dots and 30 min for three dots (15 min measurement time for 180 line searches). Computing an instance of the 4D problem on the simulated device takes approximately one hour on a single core CPU.

5. Discussion

Our results show that it is possible to reliably estimate the polytope associated with state transitions out of a specific charge state in a quantum dot array. Overall, the algorithm appears to find a good estimate of the true polytope volumes and facet shapes within the control parameter space formed by the array's gate voltages. While the underlying optimization problem is non-convex, we did not find signs of bad local optima. This is likely because we verify the estimated polytope using new measurements that are designed to find potential modeling errors. Thus, a bad local optimum is likely caught and results in a new training iteration. This hypothesis is supported by our observation that with higher line search precision the distribution of errors shifts towards smaller facets, showing that we are better at disproving local optima.

An example of the superiority of our approach over raster scanning can be seen in Figure 1b. Here, the algorithm found two very small facets that are invisible on the raster scan. Operationally, these are two very important facets of the device, as they correspond to transitions of electrons between quantum dots, while the other transitions correspond to electrons entering or leaving the array. For quantum computation, such inter-dot tunneling processes effectively turn on wave-function overlap between two electrons, which is a key resource for manipulating their spin states via Heisenberg exchange coupling [6]. The same holds for the 3D polytope estimated in Figure 1c, where small facets corresponding to inter-dot transitions have been found.

The results in Figure 1c also highlight another challenge of using existing 2D raster scan approaches for devices with higher dimensions. While 2D raster-scan-based approaches can be used to correctly identify the position or existence of some transitions on the scan, the correct interpretation of these transitions can become difficult. For example, when creating a 2D scan from Figure 1c by fixing one of the three gate voltages to a constant value, the shape of the resulting 2D slice of the polytope changes significantly depending on the chosen value, as different transitions become visible on the slice. Thus, from a single slice alone it is difficult to accurately identify the actual state transition. Using the full 3D figure, however, it becomes relatively easy for an expert to label most of the transitions.
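Fixing one gate voltage turns the 3D halfspace description into a 2D one by moving that coordinate's contribution into the offsets; evaluating this for several fixed values shows how different facets enter and leave the slice. A minimal sketch, assuming an {x : Ax ≤ b} representation and a hypothetical function name:

```python
import numpy as np


def slice_polytope(A, b, fixed_index, fixed_value, tol=1e-12):
    """Halfspaces of {x : A x <= b, x[fixed_index] = fixed_value} in the remaining coordinates.

    Returns None if a row that no longer depends on the free coordinates is violated
    (the slice is then empty). Emptiness of the remaining system is not checked.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    keep = [i for i in range(A.shape[1]) if i != fixed_index]
    A_s = A[:, keep]
    b_s = b - A[:, fixed_index] * fixed_value        # move the fixed coordinate into the offsets
    free = np.linalg.norm(A_s, axis=1) > tol
    if np.any(b_s[~free] < 0):
        return None
    return A_s[free], b_s[free]
```

Facets that are nearly parallel to the slicing plane only appear in a narrow range of fixed values, which is one reason why a single 2D slice can miss or misrepresent small transitions.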

Compared to random sampling, our active learning approach appears to be superior. For random sampling, the number of samples required depends largely on the size of a facet relative to the overall surface area [15]. Given the small facets of our polytopes, an average of 1500 samples is significantly fewer than would be expected with random sampling.
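A back-of-the-envelope version of this argument, under a simplifying assumption of our own (not taken from [15]): if each random line search hits a given facet independently with probability p, roughly its share of the boundary, the number of searches needed to hit that facet at least once with a given confidence grows like 1/p.

```python
import math


def samples_to_hit(p, confidence=0.95):
    """Smallest N such that N independent tries, each hitting with probability p,
    hit at least once with the given confidence: 1 - (1 - p)**N >= confidence."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p))
```

For p = 0.01 and 95% confidence this gives 299 searches; since estimating a facet's orientation needs several points on it, this is only a lower bound on the random-sampling cost per facet.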

Still, our algorithm is not perfect. Comparing its performance to the idealized baseline algorithm indicates that the majority of errors are introduced by the difficulty of solving the NP-hard polytope estimation problem, while our active learning scheme seems to work very well. Thus, our work will likely profit from continued development of improved and faster estimation algorithms.

We have successfully applied our algorithm to a real 2 × 2 quantum dot array, constituting the first automatic discovery of the full set of state transitions in the literature for a device with more than 2 dots.

As the underlying estimation problems are NP-hard and we observed a steep increase in run time already going from 3D to 4D, we foresee practical limits of our algorithm for controlling large quantum dot arrays. However, in practice, one may not need to find all facets in order to implement the desired qubit functionality. For example, universal quantum computers can be constructed entirely from single- and two-qubit operations, meaning that many facets associated with a multi-dot state may be operationally irrelevant (such as facets corresponding to multi-electron co-tunneling transitions, which are likely very small and hard to find). Alternatively, it might be possible to partition the device into blocks of smaller arrays, for which polytopes can be estimated independently. For example, for a linear array of many quantum dots, it might be possible to estimate all relevant local transitions of a quantum dot using its two-neighbourhood in the dot connection graph, a 5-dimensional subspace, which we have shown is already within our estimation capabilities. Another possible solution is to use more and better sensor signals to identify the state transitions.
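For a linear array, the two-neighbourhood mentioned above can be found with a breadth-first search in the dot connection graph; with one gate per dot, its size is the dimension of the local subproblem. A small sketch with a hypothetical adjacency-list representation:

```python
from collections import deque


def k_neighbourhood(adjacency, start, k=2):
    """All dots within k hops of `start` in the dot connection graph (breadth-first search)."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == k:
            continue                           # do not expand beyond k hops
        for nb in adjacency[node]:
            if nb not in seen:
                seen[nb] = seen[node] + 1
                queue.append(nb)
    return sorted(seen)
```

For dot 5 in a ten-dot chain this returns dots 3 to 7, i.e., five gate voltages; for a dot at the end of the chain, the neighbourhood shrinks to three dots.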

A potential shortcoming is our assumption of linear state transitions, which form a convex polytope. This assumption does not hold for devices that exhibit instabilities or for strongly tunnel-coupled quantum dots, for which the constant interaction model is not a valid approximation and the ground states can no longer be well approximated by convex polytopes. However, stable, non-hysteretic materials are essential prerequisites for quantum processors, and recent material improvements have already led to very stable materials. Thus, we expect that future weakly coupled devices will fulfill this assumption to a much higher degree than devices available today.