AIBot: A Novel Botnet Capable of Performing Distributed Artificial Intelligence Computing

Abstract

1. Introduction

1.1. Botnet Evolution

1.2. Motivation and Reason

1.3. Contributions and Structure

2. Background and Related Work

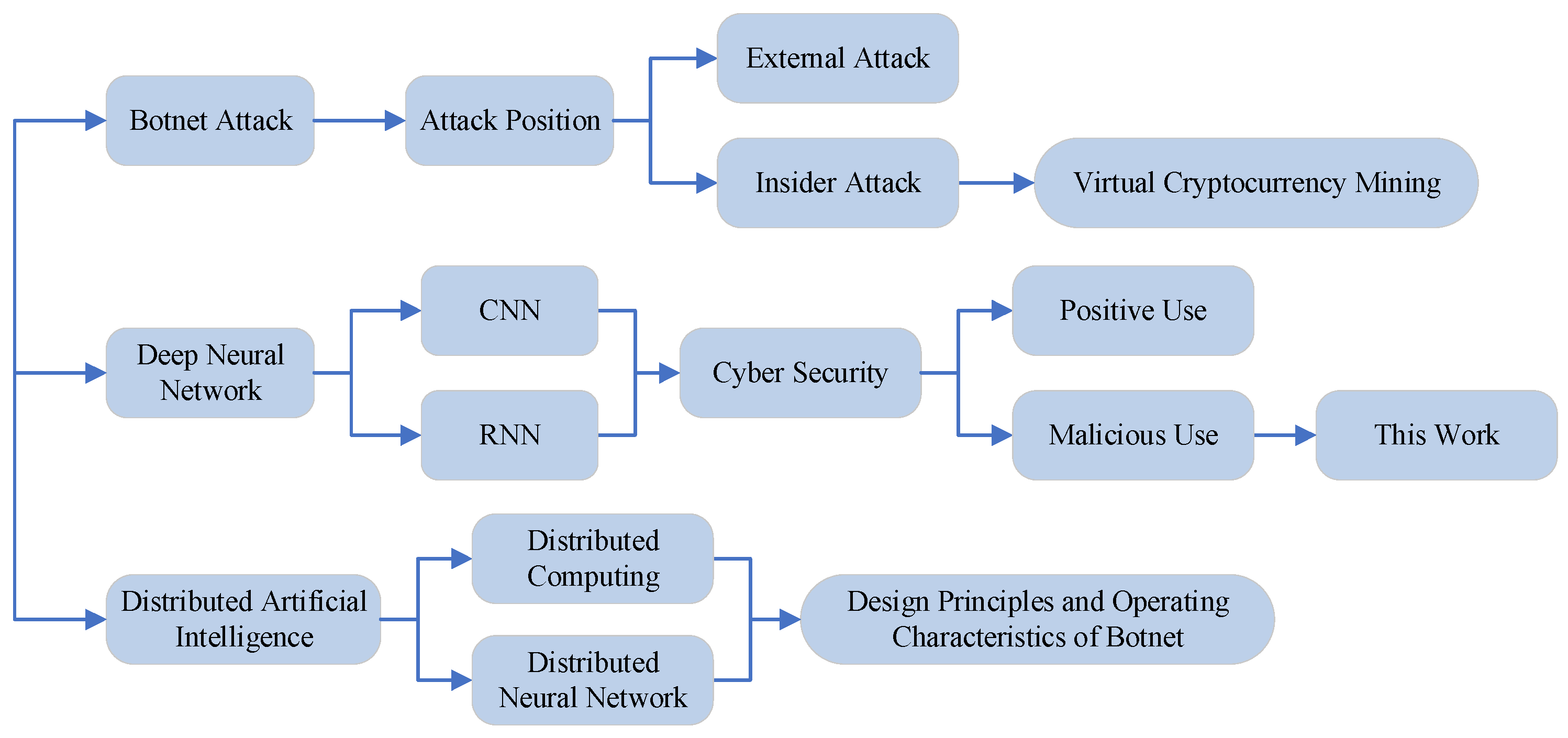

2.1. Botnet Attack

2.2. Deep Neural Network

2.3. Distributed Artificial Intelligence

3. Difficulties and Solutions

3.1. Difficulties

- Bot program size: For a device to run the corresponding intelligent learning model, the bot program must carry the structure and parameters of the computational model, and neural networks often have thousands to millions of parameters. The size of such a bot program therefore grows with the number of parameters in the model and is significantly larger than that of a traditional bot program;

- Communication interaction traffic: Because neural networks contain a large number of parameters, downloading the model files may generate extensive communication traffic. In addition, although having multiple nodes cooperate on the computation takes advantage of the large number of nodes in the botnet, the collaborative interaction between nodes generates additional communication traffic;

- Node resource consumption: When a botnet uses distributed node resources to collaboratively perform intelligent computing tasks, the CPU must perform a large number of parallel computations in a short time, so the computational resources of the nodes remain highly occupied. Although most of the controlled nodes are unattended, a prolonged high occupancy rate still increases the risk of the botnet being exposed.
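As a rough illustration of why program size tracks parameter count, the sketch below computes the raw storage needed for a model's weights. It assumes each parameter is stored densely at a fixed width (4 bytes for 32-bit floats) with no compression, sparsity or file-format overhead; the helper name `model_payload_bytes` and the dtype table are hypothetical. Since each participating node must obtain the model, the same figure also approximates the per-node download size before compression.

```python
# Hypothetical helper: raw weight storage for a densely stored model.
# Assumes no compression, sparsity, or file-format overhead.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_payload_bytes(num_params: int, dtype: str = "float32") -> int:
    """Bytes needed to store num_params weights of the given dtype."""
    return num_params * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    # Size grows linearly with the parameter count.
    for n in (10_000, 1_000_000, 60_000_000):
        kib = model_payload_bytes(n) / 1024
        print(f"{n:>12,} params -> {kib:,.1f} KiB as float32")
```

Under these assumptions, a model with tens of thousands of parameters needs only tens of kilobytes, whereas one with tens of millions needs hundreds of megabytes, which is why model size dominates the size of such a bot program.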

3.2. Solutions

- Simplify model: To accommodate the characteristics of the botnet, the algorithmic model needs to be simplified. In practice, a fully trained shallow neural network is generally sufficient for the task at hand. Adding layers mainly serves to further improve model performance, at the cost of a substantial increase in the number of parameters. Balancing program size against model performance, having nodes collaboratively run a simpler neural network is a feasible solution. For CNNs, the number of layers is reduced to cut the parameter count while keeping the model usable; for RNNs, the number of parameters in the recurrent units can be reduced by lowering the dimensionality of the vectors.

- Reduce traffic: Reducing the inter-node traffic generated by the computation is essential for running intelligent algorithms on a botnet. To avoid exchanging large volumes of intermediate results, computation tasks can be allocated so that a single node runs a complete computation unit, processes the intermediate results internally and outputs only the final result of that unit. The traffic generated by downloading the model is reduced by the “simplify model” approach described above. As for input sources, the input data (e.g., images or text corpora) are obtained locally at the node; i.e., data collection and processing are completed locally at the node.

- Decompose computation: The computational load can be decomposed in two ways. First, the load of each node can be allocated according to device performance: the hardware performance of the node is determined by means such as node sensing and detection, and the number of computation units assigned to the node is set according to a proportional coefficient, thereby controlling the node's resource occupancy. Second, when the bot program executes a computation task, a waiting time can be inserted between computation steps, so that intermittent execution actively shortens the duration of resource occupation.
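The parameter savings behind the “simplify model” approach can be estimated with standard parameter-count formulas. The sketch below assumes 2-D convolutions with square k×k kernels and one bias per output channel, and a single LSTM layer with one bias vector per gate (the Keras convention; PyTorch's `nn.LSTM` keeps two bias vectors per gate, adding another 4h). The function names, channel widths and dimensions are hypothetical and are not the configurations used in the experiments.

```python
def conv2d_params(c_in: int, c_out: int, k: int) -> int:
    """Weights (k*k*c_in per filter) plus one bias per output channel."""
    return (k * k * c_in + 1) * c_out


def conv_stack_params(c_in: int, widths: list[int], k: int = 3) -> int:
    """Total parameters of a chain of k x k convolutions with the given output widths."""
    total = 0
    for c_out in widths:
        total += conv2d_params(c_in, c_out, k)
        c_in = c_out  # the next layer consumes this layer's channels
    return total


def lstm_params(input_dim: int, hidden_dim: int) -> int:
    """One LSTM layer: 4 gates, each with input weights, recurrent weights and one bias."""
    return 4 * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)


if __name__ == "__main__":
    # Hypothetical CNN: dropping the third convolutional layer.
    deep = conv_stack_params(3, [32, 64, 128])
    shallow = conv_stack_params(3, [32, 64])
    print(f"CNN conv layers: {deep} -> {shallow} parameters")  # 93248 -> 19392

    # Hypothetical RNN: shrinking the hidden state from 256 to 64 dimensions.
    big, small = lstm_params(100, 256), lstm_params(100, 64)
    print(f"LSTM layer: {big} -> {small} parameters")  # 365568 -> 42240
```

Because the recurrent weight matrix is h×h, the LSTM count grows roughly quadratically with the hidden dimension, so a fourfold reduction in h cuts this layer's parameters by about 8.7×; in the CNN example, the last and widest convolutional layer alone accounts for most of the convolutional parameters.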

4. Proposed Botnet Model

4.1. Formal Representation

4.2. Framework of AIBot

Algorithm 1 Distributed deployment of neural networks in AIBot

Input: node set ZOMBIE, neural network model Model, initial network structure network

Output: AIBot that can perform intelligent computation in a distributed manner

- I. The botmaster controls a large number of network devices as node resources for large-scale distributed computing;

- II. The nodes are classified by obtaining their attributes through the node state identification mechanism;

- III. The botmaster launches the attack campaign and determines the type of neural network to run based on the task type;

- IV. The neural network model is decomposed into a form suitable for distributed deployment by applying the neural network decomposition mechanism corresponding to its type;

- V. The adaptive network structure mechanism is used to reorganize the interconnections between nodes according to the neural network type;

- VI. The distributed deployment of the neural network in the botnet is completed based on steps II and IV;

- VII. Distributed intelligent computing tasks based on the neural network model are performed on the basis of steps V and VI.

4.3. Two Implementations of AIBot

4.3.1. C-AIBot

4.3.2. R-AIBot

4.3.3. Bot Design

Algorithm 2 AIBot-bot

4.4. Architecture of AIBot

- Cloud controlling layer: This layer is where the botmaster is located and has the highest authority over the control and management of the entire botnet, communicating with the intermediate processing layer through covert means, such as anonymous networks and blockchain protocols, to give orders and receive feedback. The botmaster is responsible for the state monitoring and daily maintenance of the botnet, releasing version updates and plug-in tools and specifying attack targets and methods. In AIBot, the botmaster adjusts the intelligent computing model according to the actual effect and updates the relevant parameters and thresholds;

- Intermediate processing layer: This layer mainly consists of high-performance nodes in the botnet, including highly credible and reliable server nodes, and adopts a backup redundancy mechanism to avoid a single point of failure. The intermediate processing layer is the equivalent of the C&C server in a traditional botnet: it connects directly with the underlying bot nodes, receives commands from the higher-level controllers and directs the lower-level edge nodes to perform specific tasks. In AIBot, the intermediate processing layer uses its hardware advantage to take on part of the computation of the deep neural network, thus improving the accuracy of the computational results. Since AIBot needs to run multiple intelligent computational models, the intermediate processing layer is also responsible for reconfiguring the lower-layer nodes into the corresponding network structure;

- Edge computing layer: This layer contains most of the nodes in the botnet, including low-end PCs, home routers and various lightweight connected endpoints such as IoT devices. The inherent security flaws of such devices make them easy to capture as bot nodes, which in turn serve as massively distributed computing resources under the control of the botnet. The nodes in the edge computing layer can form different subnets of the botnet according to their network location to perform intelligent computing tasks, and the publicly reachable nodes are responsible for communicating with the upper layer. As the target task and computational model change, the network structure of the edge computing layer is adjusted accordingly under the control of the intermediate processing layer.

5. Experiments and Evaluation

5.1. C-AIBot Evaluation

5.1.1. Experimental Setup

5.1.2. Performance Evaluation

5.2. R-AIBot Evaluation

5.2.1. Experimental Setup

5.2.2. Performance Evaluation

5.3. AIBot Efficiency Evaluation

6. Discussion

6.1. Limitations

6.2. Defense Analysis

- Based on bot program: (i) Although AIBot simplifies and compresses the computational model, the bot program still contains far more parameters than ordinary programs, which defenders can exploit for static identification of malicious code. (ii) The computation of CNNs and RNNs involves a large number of matrix operations, and ordinary programs rarely perform comparable large-scale parallel computation, which defenders can exploit for dynamic analysis of malicious code;

- Based on traffic analysis: (i) A large amount of interactive communication between nodes occurs during task execution in AIBot. The intermediate results of the intelligent computation are mainly passed through the network as probability vectors and text vectors, so the defender can characterize this abnormal communication traffic. (ii) AIBot performs intelligent computing within subnets of the botnet, and the nodes communicate with each other in regular patterns. By mining the connection relationships between nodes, the defender can extract the network structure and thus infiltrate the botnet;

- Based on terminal behavior: Although AIBot optimizes node computation, intelligent computation based on neural networks inevitably produces transient high resource occupancy, so continuously monitoring hardware resource occupancy, detecting anomalies and issuing alerts remains an effective defense.
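On the defender's side, the resource-monitoring idea can be sketched as a sliding-window check over periodic CPU-utilization samples. The sketch assumes samples in percent arrive at a fixed interval (for example, from `psutil.cpu_percent`); the class name `OccupancyMonitor` and all thresholds are hypothetical and would need tuning per host. Besides the window mean, it counts high-utilization bursts, since intermittent execution lowers the average occupancy but still produces repeated spikes.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class OccupancyMonitor:
    """Hypothetical detector for sustained or repeatedly bursting CPU occupancy."""
    window: int = 60               # number of samples kept in the sliding window
    mean_threshold: float = 80.0   # alert if the window mean exceeds this (%)
    burst_threshold: float = 90.0  # a sample at or above this counts as a burst (%)
    burst_fraction: float = 0.3    # alert if at least this share of samples are bursts
    samples: deque = field(default_factory=deque)

    def update(self, cpu_percent: float) -> list[str]:
        """Add one sample and return any alerts raised for the current window."""
        self.samples.append(cpu_percent)
        if len(self.samples) > self.window:
            self.samples.popleft()  # drop the oldest sample
        if len(self.samples) < self.window:
            return []  # not enough history to judge yet

        alerts = []
        mean = sum(self.samples) / self.window
        if mean > self.mean_threshold:
            alerts.append(f"sustained: mean {mean:.1f}% over {self.window} samples")
        bursts = sum(1 for s in self.samples if s >= self.burst_threshold)
        if bursts / self.window >= self.burst_fraction:
            alerts.append(f"intermittent: {bursts}/{self.window} samples >= {self.burst_threshold}%")
        return alerts


if __name__ == "__main__":
    monitor = OccupancyMonitor(window=10)
    # Duty-cycled workload: alternating full load and near idle.
    for i in range(10):
        alerts = monitor.update(100.0 if i % 2 == 0 else 10.0)
    print(alerts)  # prints ['intermittent: 5/10 samples >= 90.0%']
```

In this example the duty-cycled load averages only 55%, below the mean threshold, yet half of the samples are bursts, so the intermittent pattern is still flagged.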

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| No. | Pre-Attack State | Type of Attack |
|---|---|---|
| 1 | Initial state | C-AIBot |
| 2 | Initial state | R-AIBot |
| 3 | C-AIBot | C-AIBot |
| 4 | C-AIBot | R-AIBot |
| 5 | R-AIBot | C-AIBot |
| 6 | R-AIBot | R-AIBot |

| No. | tC (%) | tP (%) | tN (%) | tM (%) | tR (%) |
|---|---|---|---|---|---|
| 1 | 12.17 | 47.73 | 23.87 | 11.93 | 4.30 |
| 2 | 4.02 | 63.04 | 15.76 | 15.77 | 1.42 |
| 3 | 20.93 | 22.27 | 24.25 | 23.24 | 9.30 |
| 4 | 3.06 | 61.16 | 19.11 | 15.29 | 0.14 |
| 5 | 12.40 | 13.76 | 13.78 | 55.10 | 4.96 |
| 6 | 8.70 | 43.48 | 32.61 | 10.87 | 4.35 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, H.; Shu, H.; Huang, Y.; Yang, J. AIBot: A Novel Botnet Capable of Performing Distributed Artificial Intelligence Computing. Electronics 2022, 11, 3241. https://doi.org/10.3390/electronics11193241
