Multi-Scale Presentation of Spatial Context for Cultural Heritage Applications

Abstract

1. Introduction

- By integrating semantic information with digital assets, we facilitate the understanding and appreciation of cultural heritage (CH) sites and foster awareness and engagement in service of their protection and conservation.

- We communicate the meaning of CH sites and document their significance, drawing on authoritative scientific and scholarly information sources.

- By offering digital representations of spatial and geographical context, we support the safeguarding of tangible and intangible values of CH sites in their natural settings and social contexts.

- We support the authenticity of CH sites by communicating the significance of their social and historical context, as well as their cultural values, to protect them from inaccurate or inappropriate interpretations.

- Offering contextual information contributes to the sustainable conservation of CH sites by promoting the understanding of conservation efforts.

- To encourage inclusiveness in the interpretation of CH sites, we offer easy-to-use authoring interfaces that facilitate the involvement of stakeholders and associated communities in developing interpretations.

- We develop technical guidelines for heritage interpretation and presentation, covering the relevant technologies and research as well as how to use them.

2. Background and Related Work

2.1. Cultural Mapping

2.2. 3D Digitization

2.3. Digital Assets in Games

2.4. Virtual Environments in Cultural Heritage

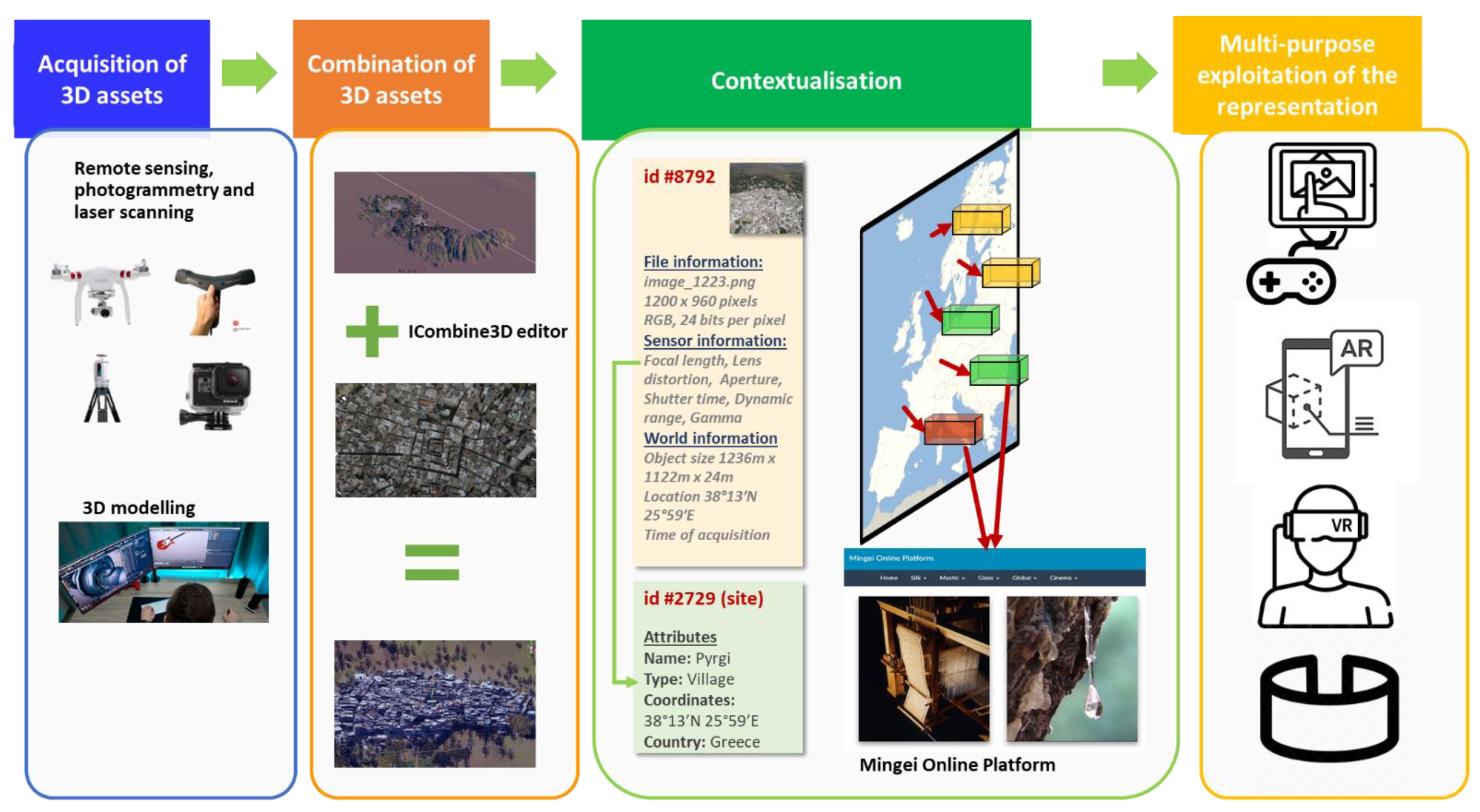

3. The Proposed Approach

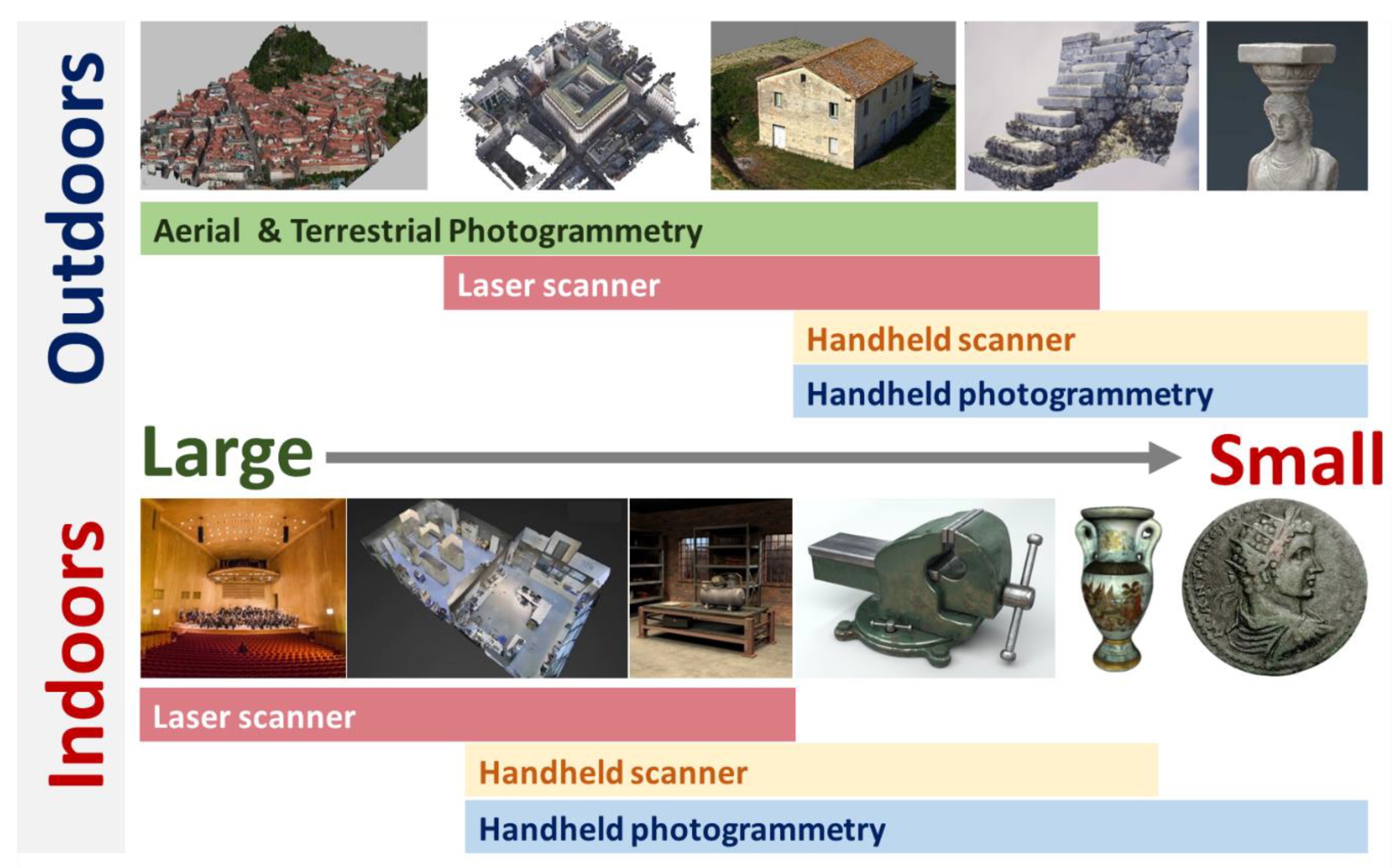

3.1. Acquisition of 3D Assets

3.1.1. Remote Sensing

3.1.2. Photogrammetry and Laser Scanning

3.1.3. 3D Modeling

3.2. Combination of 3D Assets

3.3. Contextualization

3.4. Multi-Purpose Exploitation of the Representation

4. Use Cases

4.1. Acquisition of 3D Assets

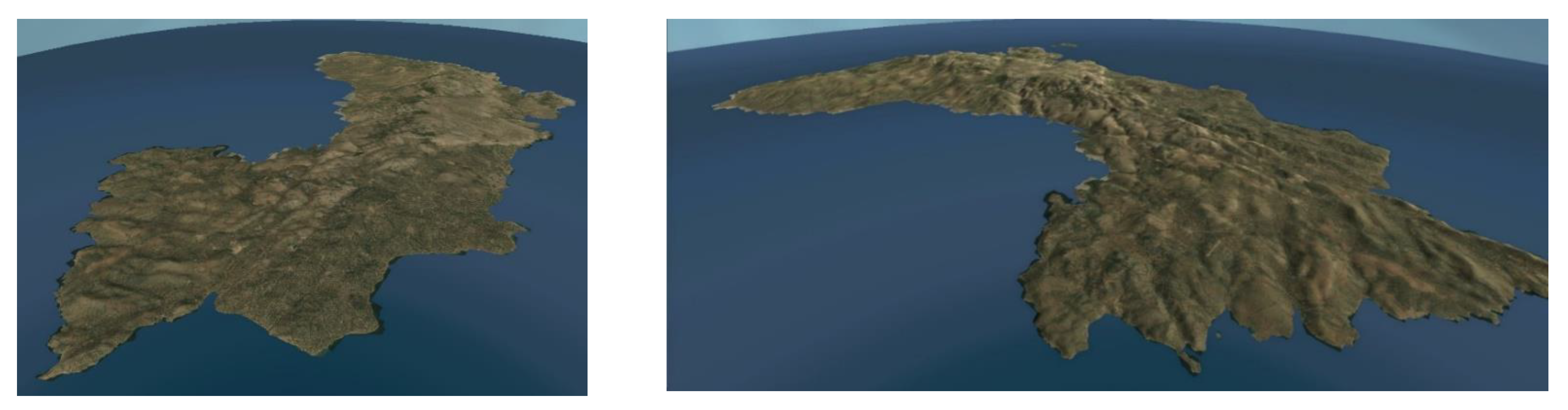

4.1.1. Remote Sensing

4.1.2. Spatial and Geographical Context Representation Using Photogrammetry and Laser Scanning

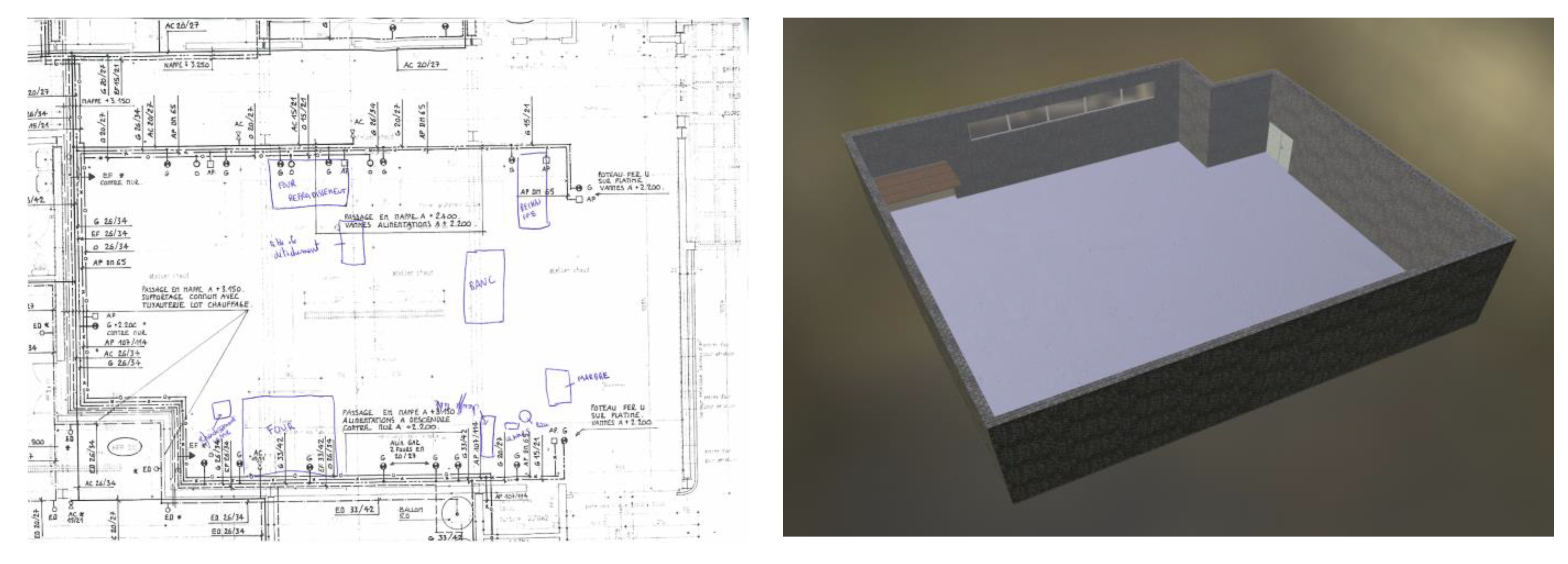

4.1.3. Spatial and Geographical Context Representation Using 3D Modeling

4.2. Combination of 3D Assets

4.3. Contextualization

4.4. Multi-Purpose Exploitation of the Representation

4.4.1. Airborne

4.4.2. The Chios Exploration Game

4.4.3. Glass Workshop Walkthrough

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Partarakis, N.; Zabulis, X.; Patsiouras, N.; Chatjiantoniou, A.; Zidianakis, E.; Mantinaki, E.; Kaplanidi, D.; Ringas, C.; Tasiopoulou, E.; Dubois, A.; Carre, A.L. Multi-Scale Presentation of Spatial Context for Cultural Heritage Applications. Electronics 2022, 11, 195. https://doi.org/10.3390/electronics11020195