End-to-End Decoupled Training: A Robust Deep Learning Method for Long-Tailed Classification of Dermoscopic Images for Skin Lesion Classification

Abstract

1. Introduction

- We propose two new loss functions that weight samples more effectively, guiding the network to focus on informative samples;

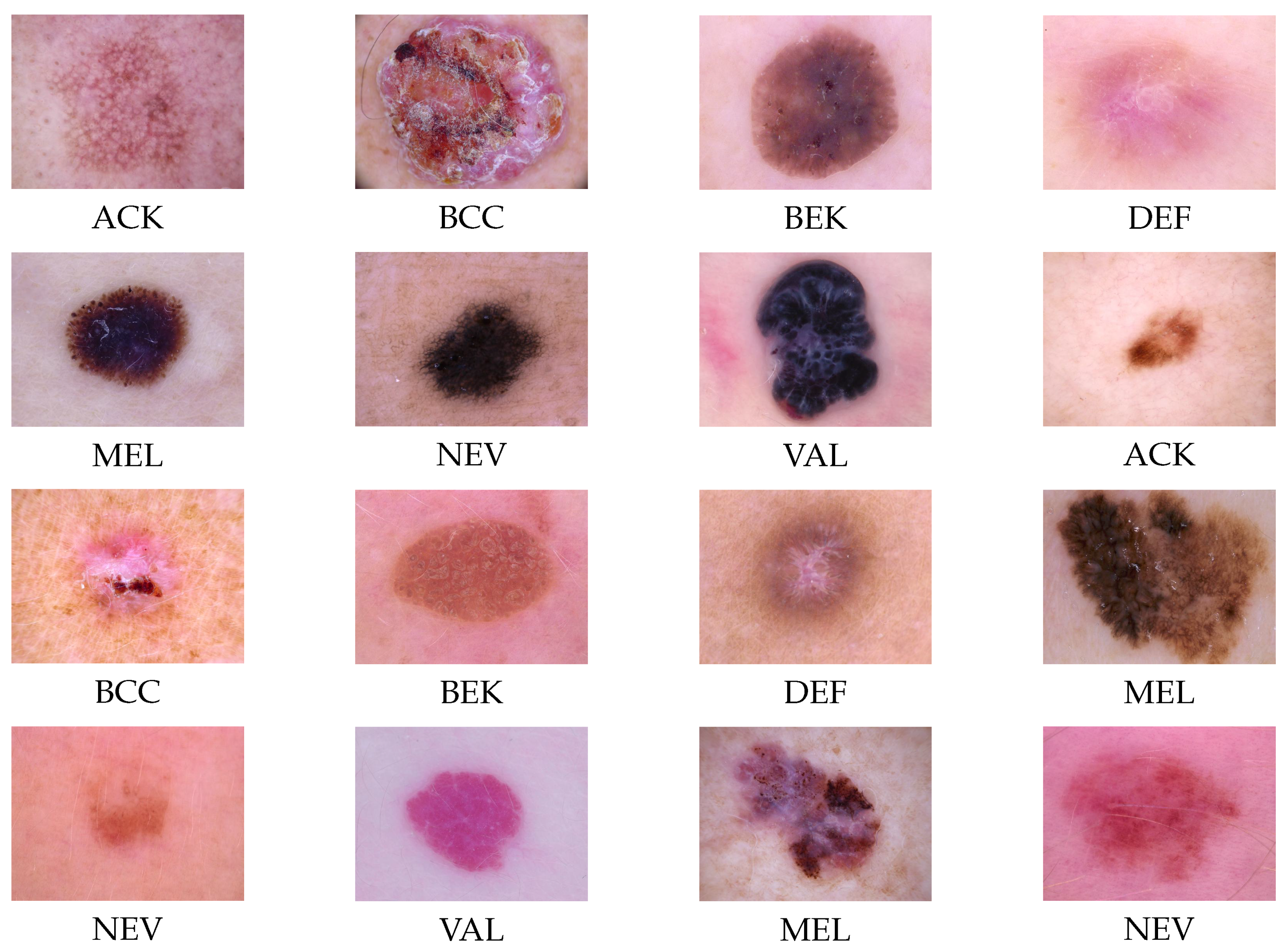

- We propose an approach that handles both the class imbalance issue and the outlier issue;

- We propose a new learning scheme that performs decoupled training as a single end-to-end process;

- We demonstrate the strength of our method on the ISIC 2018 long-tailed benchmark dataset and show that it outperforms both existing methods for handling class imbalance and prior works on the same task.
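Decoupled training, which the third contribution builds on, usually draws training images with a different class-sampling rule for the classifier than for the feature extractor. The sketch below shows the rule used by Kang et al. [45], where class j is sampled with probability proportional to n_j^q. It is an illustration under stated assumptions, not the authors' code: the per-class counts are the HAM10000 training counts [20] (the ISIC 2018 Task 3 training set), and `sampling_probs` is a hypothetical helper name.

```python
"""Class-sampling probabilities p_j = n_j**q / sum_i n_i**q (Kang et al. [45]).

q = 1 -> instance-balanced, q = 0.5 -> square-root, q = 0 -> class-balanced.
Illustrative sketch only; `sampling_probs` is a hypothetical helper.
"""

# Training-image counts per class in HAM10000 (ISIC 2018 Task 3 training set).
HAM10000_COUNTS = {
    "nv": 6705, "mel": 1113, "bkl": 1099, "bcc": 514,
    "akiec": 327, "vasc": 142, "df": 115,
}


def sampling_probs(counts: dict[str, int], q: float) -> dict[str, float]:
    """Return the probability of drawing an image from each class."""
    weights = {c: n ** q for c, n in counts.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


if __name__ == "__main__":
    for q, name in [(1.0, "instance-balanced"), (0.5, "square-root"), (0.0, "class-balanced")]:
        probs = sampling_probs(HAM10000_COUNTS, q)
        print(f"{name:>17}: nv={probs['nv']:.3f}  df={probs['df']:.3f}")
```

With q = 1 the dominant nevus class is drawn about two times out of three, while with q = 0 every class is drawn equally often; conventional decoupled training trains the representation with the former and re-trains the classifier with the latter in a separate second stage.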

2. Related Work

2.1. Design of CAD Systems for Skin Lesion Detection

2.1.1. CAD Based on One CNN

2.1.2. CAD Based on an Ensemble of CNNs

2.1.3. CAD Based on CNNs Combined with Other Classifiers

2.2. Methods for Handling Long-Tail Distributions

2.2.1. Data-Level Methods

2.2.2. Classifier-Level Methods

2.2.3. Decoupled Training

3. Problem Setting and Analysis

3.1. Problem Setting

3.2. Analysis

4. Materials and Methodology

4.1. Theoretical Motivation

4.2. Definition of Loss Functions

- When the gradient of a sample is very large, corresponding to a predicted probability for the ground-truth class near 0, the loss goes to 0, so the model is less affected by outliers.

- When the gradient of a sample is very small, corresponding to a predicted probability for the ground-truth class near 1, the loss goes to 0, which prevents the model from being overwhelmed by easy samples.
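The two limits above can be checked numerically. For softmax cross-entropy, the magnitude of the gradient with respect to the ground-truth logit is g = 1 - p, where p is the predicted probability of the true class (the quantity used by GHM [55]), so a large gradient means p near 0. The loss below is a hypothetical form chosen only to exhibit both limits; it is not either of the losses proposed in this work, and the exponents `a` and `b` are assumptions.

```python
import math


def ce_grad_norm(p: float) -> float:
    """|dCE/dz_y| for softmax cross-entropy: 1 - p, large when p -> 0."""
    return 1.0 - p


def toy_loss(p: float, a: float = 1.0, b: float = 2.0) -> float:
    """Hypothetical loss L(p) = -p**a * (1 - p)**b * log(p).

    p**a damps -log(p), which would otherwise blow up for outliers (p -> 0);
    (1 - p)**b down-weights easy samples (p -> 1), as in focal loss.
    """
    p = min(max(p, 1e-12), 1.0)  # clamp to avoid log(0)
    return -(p ** a) * ((1.0 - p) ** b) * math.log(p)


if __name__ == "__main__":
    for p in (1e-6, 0.1, 0.5, 0.9, 1 - 1e-6):
        print(f"p={p:.6f}  grad_norm={ce_grad_norm(p):.3f}  "
              f"CE={-math.log(p):7.3f}  toy={toy_loss(p):.6f}")
```

Plain CE keeps growing as p approaches 0, whereas this toy form peaks at an intermediate p and vanishes at both ends, which is the qualitative behavior the two bullets describe.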

4.3. Description of the Proposed Learning Framework

4.4. Dataset Description and Preparation

4.5. Training of the Convolutional Neural Network

4.6. Evaluation Metrics

5. Results

- We conducted an ablation study to determine which of two commonly used loss functions, CE or the second candidate loss, was more appropriate for stage one;

- We compared our full pipeline with common methods in the literature proposed for handling class imbalance, namely cost-sensitive loss (CS) [38], class-balanced loss based on the effective number of samples (CB) [23], focal loss (FL) [22], label-distribution-aware margin loss (LDAM) [41], influence-balanced loss (IB) [60], bag of tricks (BAGs) [50], and decoupled training [21];

- We compared our approach with prior CAD systems for skin lesion (SL) classification;

- We analyzed the best performance achieved with our pipelines.
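Several of the baselines listed above differ only in how they weight or shift the per-class loss. The sketch below writes out the standard forms of four of them. Assumptions: the CS cost is taken as inverse class frequency (a common choice; [38] and these experiments may use a different cost), and β = 0.999, γ = 2 and a largest LDAM margin of 0.5 are typical defaults, not values reported here. The class counts are the HAM10000 training counts.

```python
import math

# HAM10000 training counts: nv, mel, bkl, bcc, akiec, vasc, df
COUNTS = [6705, 1113, 1099, 514, 327, 142, 115]


def _normalise(raw):
    """Rescale weights so they sum to the number of classes."""
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


def inverse_frequency_weights(counts):
    """Cost-sensitive (CS) weights proportional to 1 / n_j (assumed cost)."""
    return _normalise([1.0 / n for n in counts])


def class_balanced_weights(counts, beta=0.999):
    """CB weights (1 - beta) / (1 - beta**n_j), i.e. 1 / effective number [23]."""
    return _normalise([(1.0 - beta) / (1.0 - beta ** n) for n in counts])


def focal_loss(p, gamma=2.0):
    """FL = -(1 - p)**gamma * log(p) for ground-truth probability p [22]."""
    return -((1.0 - p) ** gamma) * math.log(p)


def ldam_margins(counts, max_margin=0.5):
    """LDAM margins proportional to n_j**(-1/4), largest equal to max_margin [41]."""
    raw = [n ** -0.25 for n in counts]
    top = max(raw)
    return [max_margin * m / top for m in raw]


if __name__ == "__main__":
    print("CS  :", [round(w, 3) for w in inverse_frequency_weights(COUNTS)])
    print("CB  :", [round(w, 3) for w in class_balanced_weights(COUNTS)])
    print("LDAM:", [round(m, 3) for m in ldam_margins(COUNTS)])
    print("FL(p=0.5):", round(focal_loss(0.5), 4))
```

Printing the two weight vectors side by side shows why CB is usually gentler than CS: with β = 0.999 the effective number saturates for large classes, so the ratio between the rarest and the most frequent class is far smaller than the raw frequency ratio.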

5.1. Comparative Study of Our Approach with State-of-the-Art Approaches for Handling Class Imbalance

5.2. Performance of the Best Model with Our Approach

5.3. Comparative Study with Other CAD Systems for Skin Lesion Detection

5.4. Ablation Study

5.4.1. Effectiveness of Loss for the First Stage of Decoupled Training

5.4.2. Effectiveness of Our Learning Scheme Compared to a Conventional Scheme

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Strzelecki, M.H.; Strąkowska, M.; Kozłowski, M.; Urbańczyk, T.; Wielowieyska-Szybińska, D.; Kociołek, M. Skin Lesion Detection Algorithms in Whole Body Images. Sensors 2021, 21, 6639.

2. Haggenmüller, S.; Maron, R.C.; Hekler, A.; Utikal, J.S.; Barata, C.; Barnhill, R.L.; Beltraminelli, H.; Berking, C.; Betz-Stablein, B.; Blum, A.; et al. Skin cancer classification via convolutional neural networks: Systematic review of studies involving human experts. Eur. J. Cancer 2021, 156, 202–216.

3. Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.

4. Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Holland-Letz, T.; et al. Deep learning outperformed 136 of 157 dermatologists in a head-to-head dermoscopic melanoma image classification task. Eur. J. Cancer 2019, 113, 47–54.

5. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

6. Lucius, M.; De All, J.; De All, J.A.; Belvisi, M.; Radizza, L.; Lanfranconi, M.; Lorenzatti, V.; Galmarini, C.M. Deep Neural Frameworks Improve the Accuracy of General Practitioners in the Classification of Pigmented Skin Lesions. Diagnostics 2020, 10, 969.

7. Yao, P.; Shen, S.; Xu, M.; Liu, P.; Zhang, F.; Xing, J.; Shao, P.; Kaffenberger, B.; Xu, R.X. Single model deep learning on imbalanced small datasets for skin lesion classification. IEEE Trans. Med. Imaging 2022, 41, 1242–1254.

8. Zhang, J.; Xie, Y.; Xia, Y.; Shen, C. Attention Residual Learning for Skin Lesion Classification. IEEE Trans. Med. Imaging 2019, 38, 2092–2103.

9. Yap, J.; Yolland, W.; Tschandl, P. Multimodal skin lesion classification using deep learning. Exp. Dermatol. 2018, 27, 1261–1267.

10. Gessert, N.; Sentker, T.; Madesta, F.; Schmitz, R.; Kniep, H.; Baltruschat, I.; Werner, R.; Schlaefer, A. Skin Lesion Classification Using CNNs With Patch-Based Attention and Diagnosis-Guided Loss Weighting. IEEE Trans. Biomed. Eng. 2020, 67, 495–503.

11. Foahom Gouabou, A.C.; Damoiseaux, J.L.; Monnier, J.; Iguernaissi, R.; Moudafi, A.; Merad, D. Ensemble Method of Convolutional Neural Networks with Directed Acyclic Graph Using Dermoscopic Images: Melanoma Detection Application. Sensors 2021, 21, 3999.

12. Almaraz-Damian, J.A.; Ponomaryov, V.; Sadovnychiy, S.; Castillejos-Fernandez, H. Melanoma and Nevus Skin Lesion Classification Using Handcraft and Deep Learning Feature Fusion via Mutual Information Measures. Entropy 2020, 22, 484.

13. Mahbod, A.; Schaefer, G.; Ellinger, I.; Ecker, R.; Pitiot, A.; Wang, C. Fusing fine-tuned deep features for skin lesion classification. Comput. Med. Imaging Graph. 2019, 71, 19–29.

14. Hagerty, J.R.; Stanley, R.J.; Almubarak, H.A.; Lama, N.; Kasmi, R.; Guo, P.; Drugge, R.J.; Rabinovitz, H.S.; Oliviero, M.; Stoecker, W.V. Deep learning and handcrafted method fusion: Higher diagnostic accuracy for melanoma dermoscopy images. IEEE J. Biomed. Health Inform. 2019, 23, 1385–1391.

15. Popescu, D.; El-Khatib, M.; El-Khatib, H.; Ichim, L. New Trends in Melanoma Detection Using Neural Networks: A Systematic Review. Sensors 2022, 22, 496.

16. Adegun, A.; Viriri, S. Deep learning techniques for skin lesion analysis and melanoma cancer detection: A survey of state-of-the-art. Artif. Intell. Rev. 2021, 54, 811–841.

17. Yang, L.; Jiang, H.; Song, Q.; Guo, J. A Survey on Long-Tailed Visual Recognition. Int. J. Comput. Vis. 2022, 130, 1837–1872.

18. Tan, J.; Lu, X.; Zhang, G.; Yin, C.; Li, Q. Equalization Loss v2: A New Gradient Balance Approach for Long-tailed Object Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1685–1694.

19. Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (isic). arXiv 2019, arXiv:1902.03368.

20. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

21. Zhang, Z. You Only Need End-to-End Training for Long-Tailed Recognition. arXiv 2021, arXiv:2112.05958.

22. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

23. Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9268–9277.

24. Cubuk, E.D.; Zoph, B.; Shlens, J.; Le, Q.V. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 702–703.

25. Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32.

26. Abunadi, I.; Senan, E.M. Deep learning and machine learning techniques of diagnosis dermoscopy images for early detection of skin diseases. Electronics 2021, 10, 3158.

27. Zhang, Y.; Kang, B.; Hooi, B.; Yan, S.; Feng, J. Deep long-tailed learning: A survey. arXiv 2021, arXiv:2110.04596.

28. Chawla, N.V. Data Mining for Imbalanced Datasets: An Overview. In Data Mining and Knowledge Discovery Handbook; Maimon, O., Rokach, L., Eds.; Springer: Boston, MA, USA, 2010; pp. 875–886.

29. Fernández, A.; Garcia, S.; Herrera, F.; Chawla, N.V. SMOTE for learning from imbalanced data: Progress and challenges, marking the 15-year anniversary. J. Artif. Intell. Res. 2018, 61, 863–905.

30. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

31. Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6023–6032.

32. DeVries, T.; Taylor, G.W. Improved Regularization of Convolutional Neural Networks with Cutout. arXiv 2017, arXiv:1708.04552.

33. Shamsolmoali, P.; Zareapoor, M.; Shen, L.; Sadka, A.H.; Yang, J. Imbalanced data learning by minority class augmentation using capsule adversarial networks. Neurocomputing 2021, 459, 481–493.

34. Ali-Gombe, A.; Elyan, E. MFC-GAN: Class-imbalanced dataset classification using Multiple Fake Class Generative Adversarial Network. Neurocomputing 2019, 361, 212–221.

35. Deepshikha, K.; Naman, A. Removing Class Imbalance using Polarity-GAN: An Uncertainty Sampling Approach. arXiv 2020, arXiv:2012.04937.

36. Mani, I.; Zhang, I. kNN approach to unbalanced data distributions: A case study involving information extraction. In Proceedings of the Workshop on Learning from Imbalanced Datasets, Washington, DC, USA, 21–24 August 2003; Volume 126.

37. Elkan, C. The Foundations of Cost-Sensitive Learning. In Proceedings of the Seventeenth International Joint Conference on Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001; Volume 1.

38. Aurelio, Y.S.; de Almeida, G.M.; de Castro, C.L.; Braga, A.P. Learning from Imbalanced Data Sets with Weighted Cross-Entropy Function. Neural Process. Lett. 2019, 50, 1937–1949.

39. Ren, J.; Yu, C.; Ma, X.; Zhao, H.; Yi, S. Balanced meta-softmax for long-tailed visual recognition. Adv. Neural Inf. Process. Syst. 2020, 33, 4175–4186.

40. Tan, J.; Wang, C.; Li, B.; Li, Q.; Ouyang, W.; Yin, C.; Yan, J. Equalization loss for long-tailed object recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11662–11671.

41. Cao, K.; Wei, C.; Gaidon, A.; Arechiga, N.; Ma, T. Learning imbalanced datasets with label-distribution-aware margin loss. Adv. Neural Inf. Process. Syst. 2019, 32, 1567–1578.

42. Menon, A.K.; Jayasumana, S.; Rawat, A.S.; Jain, H.; Veit, A.; Kumar, S. Long-tail learning via logit adjustment. In Proceedings of the International Conference on Learning Representations, Virtual Event, 3–7 May 2021.

43. Li, Z.; Kamnitsas, K.; Glocker, B. Overfitting of neural nets under class imbalance: Analysis and improvements for segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; pp. 402–410.

44. Hong, Y.; Han, S.; Choi, K.; Seo, S.; Kim, B.; Chang, B. Disentangling label distribution for long-tailed visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6626–6636.

45. Kang, B.; Xie, S.; Rohrbach, M.; Yan, Z.; Gordo, A.; Feng, J.; Kalantidis, Y. Decoupling Representation and Classifier for Long-Tailed Recognition. arXiv 2020, arXiv:1910.09217.

46. Kang, B.; Li, Y.; Xie, S.; Yuan, Z.; Feng, J. Exploring balanced feature spaces for representation learning. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

47. Zhang, S.; Li, Z.; Yan, S.; He, X.; Sun, J. Distribution alignment: A unified framework for long-tail visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2361–2370.

48. Wang, C.; Gao, S.; Gao, C.; Wang, P.; Pei, W.; Pan, L.; Xu, Z. Label-Aware Distribution Calibration for Long-tailed Classification. arXiv 2021, arXiv:2111.04901.

49. Desai, A.; Wu, T.Y.; Tripathi, S.; Vasconcelos, N. Learning of visual relations: The devil is in the tails. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15404–15413.

50. Zhang, Y.; Wei, X.S.; Zhou, B.; Wu, J. Bag of Tricks for Long-Tailed Visual Recognition with Deep Convolutional Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; Volume 35, pp. 3447–3455.

51. Sinha, S.; Ohashi, H.; Nakamura, K. Class-wise difficulty-balanced loss for solving class-imbalance. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

52. Dong, Q.; Gong, S.; Zhu, X. Class rectification hard mining for imbalanced deep learning. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1851–1860.

53. Cui, Y.; Zhou, F.; Lin, Y.; Belongie, S. Fine-grained categorization and dataset bootstrapping using deep metric learning with humans in the loop. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1153–1162.

54. Oh Song, H.; Xiang, Y.; Jegelka, S.; Savarese, S. Deep metric learning via lifted structured feature embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4004–4012.

55. Li, B.; Liu, Y.; Wang, X. Gradient Harmonized Single-Stage Detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8577–8584.

56. Weijer, J.v.d.; Gevers, T.; Gijsenij, A. Edge-Based Color Constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.

57. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

58. Pham, T.C.; Luong, C.M.; Hoang, V.D.; Doucet, A. AI outperformed every dermatologist in dermoscopic melanoma diagnosis, using an optimized deep-CNN architecture with custom mini-batch logic and loss function. Sci. Rep. 2021, 11, 17485.

59. Smith, L.N. Cyclical Learning Rates for Training Neural Networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

60. Park, S.; Lim, J.; Jeon, Y.; Choi, J.Y. Influence-balanced loss for imbalanced visual classification. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 735–744.

61. Barata, C.; Celebi, M.E.; Marques, J.S. Explainable skin lesion diagnosis using taxonomies. Pattern Recognit. 2021, 110, 107413.

62. Al-masni, M.A.; Kim, D.H.; Kim, T.S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Comput. Methods Programs Biomed. 2020, 190, 105351.

63. Garg, R.; Maheshwari, S.; Shukla, A. Decision support system for detection and classification of skin cancer using CNN. In Innovations in Computational Intelligence and Computer Vision; Springer: Berlin/Heidelberg, Germany, 2021; pp. 578–586.

| Block N° | Layer Name | Resolution | Filter Size | Number of Layers |
|---|---|---|---|---|
| 1 | Conv | 300 × 300 | 3 × 3 | 1 |
| 2 | MBConv1 | 150 × 150 | 3 × 3 | 2 |
| 3 | MBConv6 | 150 × 150 | 5 × 5 | 3 |
| 4 | MBConv6 | 75 × 75 | 3 × 3 | 3 |
| 5 | MBConv6 | 38 × 38 | 3 × 3 | 5 |
| 6 | MBConv6 | 19 × 19 | 5 × 5 | 5 |
| 7 | MBConv6 | 10 × 10 | 5 × 5 | 6 |
| 8 | MBConv6 | 10 × 10 | 3 × 3 | 2 |
| 9 | Conv | 10 × 10 | 1 × 1 | 1 |
| 10 | Global pooling | 10 × 10 | 1 | |
| 11 | Dense layer | 10 × 10 | 1 | |
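The numbers in this table can be reproduced from EfficientNet's scaling rules [57]. The 300 × 300 input is EfficientNet-B3's default resolution, and the layer counts of blocks 2 to 8 equal B0's per-stage repeats (1, 2, 2, 3, 3, 4, 1) multiplied by B3's depth coefficient of 1.4 and rounded up; identifying the backbone as B3 is an inference from these numbers, not something the table states. The distinct values in the Resolution column follow from repeated stride-2 downsampling with "same" padding, where each step yields ceil(n / 2). The helper names below are hypothetical.

```python
import math


def downsample_chain(size: int, steps: int, stride: int = 2) -> list[int]:
    """Spatial sizes after successive 'same'-padded layers of the given stride.

    With 'same' padding the output size is ceil(input / stride), so odd sizes
    round up (75 -> 38, 19 -> 10).
    """
    sizes = [size]
    for _ in range(steps):
        sizes.append(math.ceil(sizes[-1] / stride))
    return sizes


def round_repeats(base_repeats: list[int], depth_coefficient: float) -> list[int]:
    """EfficientNet depth scaling: ceil(depth_coefficient * repeats) per stage."""
    return [int(math.ceil(depth_coefficient * r)) for r in base_repeats]


if __name__ == "__main__":
    print(downsample_chain(300, 5))                          # resolutions seen in the table
    print(round_repeats([1, 2, 2, 3, 3, 4, 1], 1.4))         # layers in blocks 2-8
```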

| Methods | Head | Medium | Tail | All |
|---|---|---|---|---|
| CS [38] | 0.76 ± 0.02 | 0.83 ± 0.02 | 0.95 ± 0.03 | 0.84 ± 0.01 |
| CB [23] | 0.79 ± 0.01 | 0.86 ± 0.01 | 0.95 ± 0.04 | 0.85 ± 0.01 |
| FL [22] | 0.80 ± 0.01 | 0.83 ± 0.05 | 0.88 ± 0.04 | 0.83 ± 0.01 |
| LDAM [41] | 0.76 ± 0.03 | 0.78 ± 0.01 | 0.93 ± 0.04 | 0.82 ± 0.02 |
| IB [60] | 0.83 ± 0.01 | 0.81 ± 0.04 | 0.87 ± 0.01 | 0.82 ± 0.02 |
| BAGs [50] | 0.79 ± 0.01 | 0.84 ± 0.02 | 0.92 ± 0.02 | 0.85 ± 0.02 |
| Decoupled learning [21] | 0.80 ± 0.01 | 0.82 ± 0.02 | 0.88 ± 0.02 | 0.83 ± 0.02 |
| Our method | 0.81 ± 0.01 | 0.88 ± 0.02 | 0.98 ± 0.01 | 0.87 ± 0.01 |

| Works | Methods | BACC |
|---|---|---|
| Al-masni et al. [62] | Single CNN | 0.81 |
| Gessert et al. [10] | Ensemble of CNNs | 0.76 |
| Yao et al. [7] | Single CNN | 0.86 |
| Garg et al. [63] | Single CNN | 0.74 |
| Barata et al. [61] | Ensemble of CNNs | 0.73 |
| Our method | Single CNN | 0.88 |
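BACC (balanced accuracy, the ranking metric of the ISIC 2018 Task 3 challenge) is the unweighted mean of per-class recall, so every lesion class counts equally regardless of how many images it has. A minimal sketch follows; `balanced_accuracy` is a hypothetical helper, and it should agree with scikit-learn's `balanced_accuracy_score` in its default (non-adjusted) setting.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean per-class recall over the classes present in y_true."""
    classes = sorted(set(y_true))
    recalls = []
    for c in classes:
        idx = [i for i, t in enumerate(y_true) if t == c]
        hits = sum(1 for i in idx if y_pred[i] == c)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


if __name__ == "__main__":
    # A classifier that always predicts the majority class:
    y_true = [0] * 8 + [1] * 2
    y_pred = [0] * 10
    print(balanced_accuracy(y_true, y_pred))  # plain accuracy would be 0.8
```

The usage example shows why BACC suits a long-tailed dataset: always predicting the majority class reaches 0.8 plain accuracy here but only 0.5 BACC.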

| Stage One Methods | BACC |
|---|---|
| CE | 0.82 ± 0.01 |
| loss | 0.83 ± 0.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Foahom Gouabou, A.C.; Iguernaissi, R.; Damoiseaux, J.-L.; Moudafi, A.; Merad, D. End-to-End Decoupled Training: A Robust Deep Learning Method for Long-Tailed Classification of Dermoscopic Images for Skin Lesion Classification. Electronics 2022, 11, 3275. https://doi.org/10.3390/electronics11203275
