In this section, we review the bias problem from different perspectives, including machine learning, GNN algorithms, RSs, and GNN-based RSs. The literature studying bias from different perspectives is extensive; here, we analyze the most important works on the bias problem in general, focusing mainly on RSs and, more specifically, on GNN-based RSs.

2.1. Bias in Machine Learning

To better understand the bias problem from different perspectives and how it can be transferred to the results of a recommender system, we first provide definitions of bias at different levels, such as the statistical meaning of bias and bias in Machine Learning (ML).

According to [24], statistical bias refers to systematic errors produced by the measurement or sampling process. In this context, it is necessary to distinguish between errors caused by random chance and those caused by bias.

Data are the input to ML algorithms. When this input is biased, the bias can be transferred into the model generated by the algorithm, leading to unfair decisions and reduced quality [25,26]. These problems can have severe financial, social, and reputational effects on companies [8].

Algorithmic bias in ML-based models stems from abnormal datasets, weak models, poor algorithm designs, or historical human biases [27]. Algorithmic bias can also arise from underfitting in the training phase, which can be caused by a combination of limitations in the training data and model capacity issues [28]. The factors affecting this mechanism are irreducible error (Bayes error), regularization mechanisms, class imbalance, and under-represented categories [28].

Various types of general bias occur at different stages of CRISP-DM, a well-known standard process model for data mining, which breaks the data process into six stages: business understanding, data understanding, data preparation, modeling, evaluation, and deployment. The types of bias described are social bias, measurement bias, representation bias, label bias, algorithmic bias, evaluation bias, deployment bias, and feedback bias [8]. Social bias occurs when data transfer biases present in society to the model on a large scale. Measurement bias arises from human error in the business understanding phase, especially when working with sensitive features such as age and gender; it can also occur during the data preparation phase. Representation bias occurs during data collection and sampling when the sample or data distribution does not represent the true underlying distribution. Label bias can appear during the data preparation phase, when labels are chosen for the prediction task; choosing the best label for a dataset can be very difficult due to vagueness and cultural or individual variation. Algorithmic bias can occur in the modeling phase due to model parameters or technical issues such as model misclassification [29].

It is also important to know, based on training data statistics, whether a model can amplify existing bias in the data [29]. Evaluation bias, which occurs in the evaluation phase, can arise from differences between the training and test data populations. Finally, deployment bias can occur after the model is deployed in a complex socio-technical environment.

Bias in ML can also lead to unfair results. Fairness in machine learning can be categorized into ten classes: statistical parity, equalized odds, equal opportunity, disparate impact, disparate mistreatment, treatment equality, generalized entropy index, individual fairness (formerly, fairness through awareness), fairness through unawareness, and counterfactual fairness [30].
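To make three of these group-fairness notions concrete, the following minimal Python sketch computes disparate impact, the equal opportunity difference, and the equalized odds difference for binary predictions and two groups. The function names and toy data are hypothetical, and the formulas follow common textbook definitions, which may differ in detail from those used in [30].

```python
from typing import Dict, Hashable, Sequence


def _mean(values: Sequence[int]) -> float:
    """Fraction of 1s in a 0/1 sequence; 0.0 for an empty sequence."""
    return sum(values) / len(values) if values else 0.0


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                group: Sequence[Hashable], g: Hashable) -> Dict[str, float]:
    """Positive-prediction rate, true-positive rate and false-positive rate for group g."""
    rows = [(t, p) for t, p, a in zip(y_true, y_pred, group) if a == g]
    return {
        "positive_rate": _mean([p for _, p in rows]),
        "tpr": _mean([p for t, p in rows if t == 1]),
        "fpr": _mean([p for t, p in rows if t == 0]),
    }


def fairness_report(y_true, y_pred, group, protected, unprotected) -> Dict[str, float]:
    """Compare a protected group with an unprotected group under three fairness notions.
    Raises ZeroDivisionError if the unprotected group never receives a positive prediction."""
    prot = group_rates(y_true, y_pred, group, protected)
    unprot = group_rates(y_true, y_pred, group, unprotected)
    return {
        # Disparate impact: ratio of positive-prediction rates (1.0 means parity).
        "disparate_impact": prot["positive_rate"] / unprot["positive_rate"],
        # Equal opportunity: difference in true-positive rates (0.0 means parity).
        "equal_opportunity_diff": prot["tpr"] - unprot["tpr"],
        # Equalized odds: largest gap in either TPR or FPR (0.0 means parity).
        "equalized_odds_diff": max(abs(prot["tpr"] - unprot["tpr"]),
                                   abs(prot["fpr"] - unprot["fpr"])),
    }


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
    group = ["f", "f", "f", "f", "m", "m", "m", "m"]
    print(fairness_report(y_true, y_pred, group, protected="f", unprotected="m"))
```

In this toy example, group "f" receives positive predictions at one third of the rate of group "m" and has a true-positive rate 0.5 lower, so all three notions flag the classifier as unfair.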

A systematic, controlled study of bias amplification is provided in [29]. To this end, a heavily controlled, simple image classification problem was considered. The results showed that several factors, including model accuracy, model capacity, model overconfidence, and the size of the training data, are correlated with bias amplification. The results also showed that bias amplification can vary over the course of training and that the relative difficulty of recognizing group membership in the classification task can influence bias amplification.
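As an illustration of what "amplification" means, the following minimal Python sketch compares how strongly a label co-occurs with a group in the training data versus in a model's predictions. This is a simplified co-occurrence measure chosen for clarity, not necessarily the metric used in [29]; all names and data are hypothetical.

```python
from typing import Hashable, Sequence


def group_share(labels: Sequence[Hashable], groups: Sequence[Hashable],
                label: Hashable, group: Hashable) -> float:
    """P(group | label): share of examples carrying `label` that belong to `group`."""
    members = [g for y, g in zip(labels, groups) if y == label]
    if not members:
        raise ValueError(f"no examples with label {label!r}")
    return sum(1 for g in members if g == group) / len(members)


def bias_amplification(train_labels, train_groups, test_pred_labels, test_groups,
                       label, group) -> float:
    """Positive values mean the model associates `label` with `group` more strongly
    than the training data did, i.e. the existing correlation was amplified."""
    train_bias = group_share(train_labels, train_groups, label, group)
    pred_bias = group_share(test_pred_labels, test_groups, label, group)
    return pred_bias - train_bias


if __name__ == "__main__":
    # Training data: half of the "cook" examples belong to group "f".
    train_labels = ["cook"] * 4 + ["sport"] * 2
    train_groups = ["f", "f", "m", "m", "m", "f"]
    # Predictions: three quarters of the predicted "cook" examples belong to group "f".
    pred_labels = ["cook"] * 4 + ["sport"] * 2
    test_groups = ["f", "f", "f", "m", "m", "m"]
    print(bias_amplification(train_labels, train_groups, pred_labels, test_groups, "cook", "f"))
```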

2.2. Bias in GNNs

GNNs and their variants have shown strong performance on a wide range of graph learning tasks. However, they face significant computational challenges due to the increasing size of current datasets. Stacking multiple graph convolution layers recursively expands neighbor aggregation in a top-down manner, so the neighborhood involved in computing each node's embedding grows with the number of layers. If the graph is scale-free and dense, a large part of it is needed to compute the embeddings even with only a few layers, which is infeasible for large-scale graphs [31,32].
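The growth of this neighborhood can be sketched in a few lines of Python: an L-layer message-passing GNN needs every node within L hops of the target, which a breadth-first search counts directly. The helper names are hypothetical, and the toy graph is a complete ternary tree, chosen only because its growth (1, 4, 13, 40 nodes) is easy to verify by hand; in scale-free graphs, high-degree hubs can make the growth considerably steeper.

```python
from collections import deque
from typing import Dict, Hashable, List


def receptive_field_size(adj: Dict[Hashable, List[Hashable]], source: Hashable, num_layers: int) -> int:
    """Number of distinct nodes within `num_layers` hops of `source`, i.e. the nodes an
    L-layer message-passing GNN must read to compute the embedding of `source`."""
    seen = {source}
    frontier = deque([(source, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == num_layers:
            continue  # do not expand beyond the last layer
        for nbr in adj[node]:
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, depth + 1))
    return len(seen)


def ternary_tree(depth: int) -> Dict[int, List[int]]:
    """Undirected complete tree in which every internal node has 3 children (toy graph)."""
    adj: Dict[int, List[int]] = {0: []}
    level, next_id = [0], 1
    for _ in range(depth):
        new_level = []
        for parent in level:
            for _ in range(3):
                adj[next_id] = [parent]
                adj[parent].append(next_id)
                new_level.append(next_id)
                next_id += 1
        level = new_level
    return adj


if __name__ == "__main__":
    tree = ternary_tree(3)
    print([receptive_field_size(tree, 0, layers) for layers in range(4)])  # [1, 4, 13, 40]
```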

Other research has shown that GNNs perform better on homophilous nodes than on heterophilous ones. A homophily ratio is defined to determine whether a graph is homophilous or heterophilous: graphs with higher homophily ratios are considered homophilous, and graphs with lower ratios are non-homophilous [33].
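One common definition is the edge homophily ratio, the fraction of edges that connect two nodes with the same label. The minimal Python sketch below computes it; the function name is hypothetical, and [33] may use a node-level or class-normalized variant instead.

```python
from typing import Hashable, Iterable, Mapping, Tuple


def edge_homophily(edges: Iterable[Tuple[int, int]], labels: Mapping[int, Hashable]) -> float:
    """Fraction of edges whose two endpoints share the same label
    (close to 1.0: homophilous graph; close to 0.0: heterophilous graph)."""
    edges = list(edges)
    if not edges:
        raise ValueError("graph has no edges")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


if __name__ == "__main__":
    labels = {0: "a", 1: "a", 2: "b", 3: "b"}
    print(edge_homophily([(0, 1), (1, 2), (2, 3), (3, 0)], labels))  # 4-cycle: 0.5
    print(edge_homophily([(0, 1), (2, 3)], labels))                  # only same-label edges: 1.0
```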

Although GNNs usually achieve high accuracy, most existing GNNs do not take fairness into consideration, which can result in discrimination against certain demographic subgroups defined by sensitive features such as age, gender, and race. The decisions made by deployed GNNs can be strongly affected by this kind of discrimination [32,34,35]. In addition, many ML systems are trained on human-generated data; hence, there is a clear need to understand and mitigate bias toward demographic groups in GNN approaches [36].

Biased results in GNN algorithms can stem from different causes, the most important of which is a biased network structure. Although it is very important to detect which part of the network structure leads to bias, this bias is believed to stem from the message passing mechanism at the core of GNNs. Understanding bias in the network structure involves several challenges, including the Fairness Notion Gap, the Usability Gap, and the Faithfulness Gap. The Fairness Notion Gap concerns how to measure bias at the instance level. The Usability Gap concerns identifying the edges in the computational graph that most influence the fairness of a prediction; the edges that contribute most to the prediction itself cannot be assumed to be the ones that contribute most to its fairness. The Faithfulness Gap concerns ensuring that the obtained bias explanations faithfully reflect the reasoning of the chosen model [34]. Furthermore, bias can also lead to a distribution shift between training and test data, especially in the label distribution [37].

2.3. Bias in RSs

The quality of the recommendations provided by RSs varies across users depending on their characteristics and sensitive attributes, such as age, gender, race, and personality. This behavior conflicts with European Commission (EC) regulations, which call for “obligations for ex-ante testing, risk management and human oversight of AI systems to minimize the risk of erroneous or biased AI-assisted decisions in critical areas such as education and training, employment, important services, law enforcement, and the judiciary” [7]. According to this regulation, AI systems should respect EU fundamental rights, such as the right not to be discriminated against, respect for private life, and the protection of personal data [7]. Moreover, biased results in RSs can cause user dissatisfaction [38].

Bias in RSs is one of the most important factors leading to unfair decisions and discrimination, and it clearly conflicts with the regulations mentioned above. The work presented in [2] divides bias into three categories, which can serve as a first step toward recognizing it: bias in input data; computational bias, which may stem from the algorithm and can be added to team decisions; and outcome bias. This extends the bias categorization previously introduced by Baeza-Yates (2016) [16] and breaks the circular behavior of bias down into seven types. Data bias, which arises because the data are observational rather than experimental, occurs when the distribution of the training data differs from the ideal test data distribution; it includes selection bias, exposure bias, conformity bias, and position bias. Algorithmic bias can occur during different stages of the modeling process, including training, evaluation, and feature engineering; popularity bias, unfairness, and inductive bias can result from it. Popularity bias stems from the long-tail phenomenon in RSs: a small number of very popular items account for most of the interactions in the system. This can lead the model to neglect unpopular items and assign higher scores to popular ones [2]. Together, these biases form a loop in which biased data move from one stage to the next, where additional biases are introduced [2,39]. This circular behavior makes it harder to recognize where intervention is needed. Exposure bias arises because users are exposed only to a specific subset of items, so implicit feedback reflects only those items; it can also be caused by popularity bias, since the most popular items are the ones recommended [2,40]. In other words, bias can limit users' choices and contaminate their feedback, which can amplify exposure bias [41]. Among these types of bias, popularity bias has been considered the most important in the field of RSs [42].
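The long-tail phenomenon behind popularity bias can be quantified by splitting items into a short head and a long tail and measuring how much of the interaction volume the head captures. The minimal Python sketch below assumes the head is the top 20% of items by interaction count, a common convention but an assumption here; the function names and data are hypothetical.

```python
from collections import Counter
from typing import Hashable, List, Set, Tuple

Interaction = Tuple[Hashable, Hashable]  # (user, item)


def head_tail_split(interactions: List[Interaction], head_percent: int = 20) -> Tuple[Set, Set, Counter]:
    """Split items into a short head (the top `head_percent`% of items by interaction
    count, at least one item) and a long tail containing all remaining items."""
    counts = Counter(item for _user, item in interactions)
    # Sort by descending popularity; ties are broken by item id for determinism.
    ranked = sorted(counts, key=lambda item: (-counts[item], item))
    n_head = max(1, len(ranked) * head_percent // 100)
    return set(ranked[:n_head]), set(ranked[n_head:]), counts


def head_interaction_share(interactions: List[Interaction], head: Set) -> float:
    """Fraction of all interactions that involve short-head items."""
    return sum(1 for _user, item in interactions if item in head) / len(interactions)


if __name__ == "__main__":
    # Item "A" is consumed by six users; items B to E by one user each.
    data = [(f"u{k}", "A") for k in range(6)] + [("u0", "B"), ("u1", "C"), ("u2", "D"), ("u3", "E")]
    head, tail, _ = head_tail_split(data)
    print(head, sorted(tail), head_interaction_share(data, head))  # {'A'} ['B', 'C', 'D', 'E'] 0.6
```

Here 20% of the catalog receives 60% of the interactions; a model trained on such data sees far more evidence for the head item, which is one route to the score inflation described above.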

Another classification, proposed by Ashokan and Haas (2021) [30], divides bias into three main categories with more detailed subcategories. The first, data generation bias, includes historical, representation, measurement, population, sampling, and Simpson's paradox biases. Historical bias refers to pre-existing bias arising from socio-technical issues. Representation bias can be introduced in the sampling phase. Measurement bias occurs when selecting, utilizing, and measuring specific features. Population bias occurs when the dataset distribution differs from the real-world population. Sampling bias can result from errors in creating random subgroups. Simpson's paradox refers to bias that arises when population subgroups behave differently and these differences are masked or reversed when the data are aggregated [43].
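Simpson's paradox is easiest to see with numbers. The minimal Python sketch below uses hypothetical (success, trial) counts, for instance clicks per recommendation shown, for two strategies X and Y across two user subgroups: X wins in every subgroup, yet Y wins once the subgroups are pooled, because the subgroups are of very different sizes for the two strategies. Exact fractions are used so the comparison is not affected by rounding.

```python
from fractions import Fraction
from typing import Dict, Tuple

Counts = Dict[str, Dict[str, Tuple[int, int]]]

# Hypothetical (successes, trials) per strategy and user subgroup.
DATA: Counts = {
    "X": {"group_1": (81, 87), "group_2": (192, 263)},
    "Y": {"group_1": (234, 270), "group_2": (55, 80)},
}


def subgroup_rate(counts: Tuple[int, int]) -> Fraction:
    """Exact success rate of a single subgroup."""
    successes, trials = counts
    return Fraction(successes, trials)


def aggregate_rate(groups: Dict[str, Tuple[int, int]]) -> Fraction:
    """Success rate after pooling all subgroups together."""
    successes = sum(s for s, _ in groups.values())
    trials = sum(n for _, n in groups.values())
    return Fraction(successes, trials)


def simpsons_reversal(data: Counts, a: str, b: str) -> bool:
    """True if `a` beats `b` in every subgroup but loses once subgroups are aggregated."""
    wins_everywhere = all(subgroup_rate(data[a][g]) > subgroup_rate(data[b][g]) for g in data[a])
    return wins_everywhere and aggregate_rate(data[a]) < aggregate_rate(data[b])


if __name__ == "__main__":
    for name, groups in DATA.items():
        per_group = {g: float(subgroup_rate(c)) for g, c in groups.items()}
        print(name, per_group, float(aggregate_rate(groups)))
    print(simpsons_reversal(DATA, "X", "Y"))  # True
```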

The second category, model building and evaluation bias, includes evaluation, aggregation, popularity, algorithmic, omitted variable, demographic, and temporal biases. Evaluation bias can arise during the model evaluation phase. Aggregation bias arises from incorrect assumptions about how the population affects the model's results. Popularity bias occurs because more popular items receive more interactions [42,44]. Algorithmic bias can arise from technical issues inside the algorithm used. Omitted variable bias occurs when one or more essential variables are left out of the model. Demographic bias occurs when user demographic groups (e.g., defined by age and gender) are treated differently [30,45]. Temporal bias stems from changes in behavior and population over time [30,46].

The third category, deployment and user interaction bias, includes behavioral, content production, linking, presentation, social, emergent, observer, interaction, and ranking biases. Behavioral bias can arise from differences in user behavior within the dataset. Content production bias arises from differences in user-generated content, including structural, lexical, semantic, and syntactic variations. Linking bias arises when network attributes derived from user activities do not truly represent user behavior. Presentation bias arises from the way information is presented. Social bias occurs when preferences are deliberately given to certain groups and affect their judgments [30,47]. Emergent bias arises from differences between real users' behavior and users' behavior in the dataset. Observer bias can occur when researchers' expectations are unintentionally injected into the research data. Interaction bias can arise from differences in the ways users interact with a system. Finally, ranking bias occurs when top-ranked results receive more exposure [30].

Because biases affect the model's decisions, it is important to consider all types of bias; however, most recent publications mainly address exposure bias, popularity bias, unfairness, and the bias loop effect. One of the most important challenges for these approaches is the trade-off between model performance and bias mitigation, which is believed to depend on the chosen scenario [2]. The definition of fairness may also depend on the domain, an issue that has recently drawn much attention [48].

In the recommendation area, a model that simulates multiple rounds of a bias feedback loop in a social network was proposed in [39] to analyze the long-run consequences of this feedback loop. The model uses several control parameters, including the level of homophily in the network, the relative size of the groups, the choice among several link recommenders, and the choice among three stochastic user behavior models, which determine whether each recommendation is accepted. The results of this experimental study showed that a minority group with a high level of homophily can receive a disproportionate advantage in exposure from all link recommenders, whereas a heterophilic minority group becomes under-exposed. Furthermore, the level of homophily in the minority group can influence how quickly disparate exposure emerges, and the relative size of the minority can magnify this effect. Depending on their level of homophily, both minority and majority groups can experience the “rich-get-richer” effect.

In [1], the authors systematically investigated the popularity bias issue in state-of-the-art Conversational Recommender Systems (CRSs) from various perspectives, including exposure rate, success rate, and conversational utility, and proposed a suite of popularity bias metrics specifically designed for CRSs. The work presented in [49] also focused on popularity bias and the long-tail problem in RSs and introduced useful metrics for measuring the long-tail phenomenon on items. Extending this analysis, [30] measured algorithmic bias and fairness in a rating-based recommender system, considering various types of bias and fairness, and proposed fairness metrics based on an analysis of two domains.

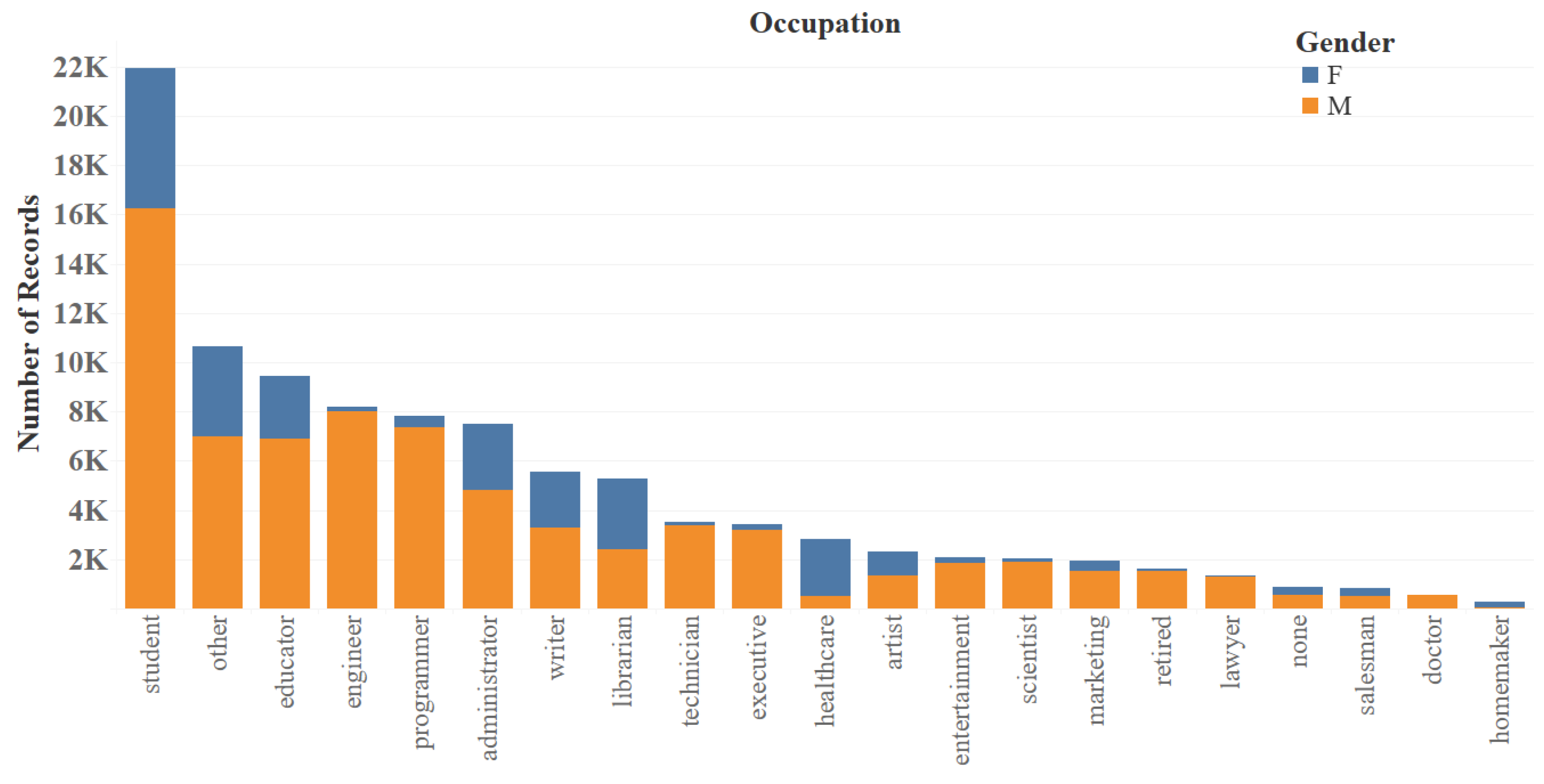

Some works, including [50,51], consider gender as the sensitive attribute. Unbiased Gender Recommendation (UGRec) was introduced in [50] to balance performance between male and female users. To capture users' preferences, an information aggregation component was designed to learn user and item representations from the user–item graph. To improve these representations, a multihop mechanism was proposed that aggregates users' higher-order neighbors. An end-to-end training framework with adversarial learning was also used to avoid hurting accuracy; this framework removes gender-specific features while preserving common features. An exploratory analysis of gender bias and discrimination in music RSs was conducted in [51]. The main aim of this work was to investigate which CF approaches amplify or reduce artist gender bias. To this end, the Preference Ratio (PR) and Bias Disparity (BD) metrics were used. The results showed that CF RSs can amplify gender bias on the real-world LastFM dataset.
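A minimal Python sketch of these two metrics, under their commonly used definitions with binary interactions: PR(G, C) is the share of a user group's interactions that fall on items of category C (for example, tracks by female artists), computed once on the training data (PR_S) and once on the recommendations (PR_R), and BD = (PR_R − PR_S) / PR_S. The function names and toy data are hypothetical, and [51] may weight interactions differently.

```python
from typing import Hashable, Iterable, Set, Tuple

Interaction = Tuple[Hashable, Hashable]  # (user, item), treated as a binary interaction


def preference_ratio(interactions: Iterable[Interaction], user_group: Set, item_category: Set) -> float:
    """PR(G, C): share of user group G's interactions that fall on items of category C."""
    group_items = [item for user, item in interactions if user in user_group]
    if not group_items:
        raise ValueError("user group has no interactions")
    return sum(1 for item in group_items if item in item_category) / len(group_items)


def bias_disparity(train, recs, user_group: Set, item_category: Set) -> float:
    """BD(G, C) = (PR_R - PR_S) / PR_S; negative values mean category C is
    under-recommended relative to the group's observed preferences."""
    pr_s = preference_ratio(train, user_group, item_category)  # PR on the input (training) data
    pr_r = preference_ratio(recs, user_group, item_category)   # PR on the recommendation lists
    return (pr_r - pr_s) / pr_s


if __name__ == "__main__":
    users = {"u1", "u2"}
    female_artists = {"f1", "f2"}
    train = [("u1", "f1"), ("u1", "m1"), ("u2", "m2"), ("u2", "m3")]  # PR_S = 1/4
    recs = [("u1", m) for m in ("m1", "m2", "m3", "m4")] + [("u2", "m1"), ("u2", "m2"), ("u2", "m3"), ("u2", "f2")]  # PR_R = 1/8
    print(bias_disparity(train, recs, users, female_artists))  # -0.5
```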

The work in [52] also focused on gender bias in RSs, using two book rating datasets, Amazon and Book-Crossing. This research introduced a model-agnostic bias mitigation approach that takes the accuracy of the system into account. Two recommender system approaches from the K-nearest neighbors family were used. The results showed a significant decrease in bias with little impact on the accuracy of the models.

2.4. Bias in GNN-Based RSs

Specific sensitive attributes that reinforce already existing bias in the network of GNN-based RSs have drawn attention toward measuring fairness in supervised methods. The metrics used for this purpose require the proportion of the protected group (defined by a sensitive attribute value) that is classified as positive to be the same as that of the unprotected group [14,53].
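This requirement is usually called statistical (or demographic) parity. A minimal Python sketch of the corresponding gap for binary predictions, such as node labels output by a GNN, and a binary sensitive attribute follows; the function name is hypothetical.

```python
from typing import Sequence


def statistical_parity_difference(y_pred: Sequence[int], sensitive: Sequence[int]) -> float:
    """P(y_hat = 1 | s = 1) - P(y_hat = 1 | s = 0) for binary predictions and a binary
    sensitive attribute; 0.0 means both groups are classified positive at the same rate."""
    rates = {}
    for s in (0, 1):
        preds = [p for p, a in zip(y_pred, sensitive) if a == s]
        if not preds:
            raise ValueError(f"no nodes with sensitive value {s}")
        rates[s] = sum(preds) / len(preds)
    return rates[1] - rates[0]


if __name__ == "__main__":
    y_pred = [1, 1, 0, 1, 0, 0]
    sensitive = [0, 0, 0, 1, 1, 1]
    print(statistical_parity_difference(y_pred, sensitive))  # about -0.333
```

Fairness-aware GNN methods typically report the absolute value of this gap, so that lower is fairer regardless of which group is favored.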

User–item interactions do not explicitly include users' sensitive information, but because users' interactions are highly correlated with their attributes, directly applying modern user and item representation learning can leak users' sensitive information [14]. Furthermore, given the graph-based nature of RSs, users are not independent: they are implicitly correlated with other users who share similar behavior, which can cause serious problems for previous models and for the foundations of CF recommendation [14]. In addition, current GNN algorithms suffer from societal bias in data, which limits the generalization power of the models [15]. In the graph structure, nodes with similar sensitive attributes tend to be connected, and this property can lead to critical bias in decision-making, because the representations of nodes sharing a sensitive attribute value differ from those of nodes with other values [15]. Some approaches also consider graph embedding methods used in Online Social Networks (OSNs). Graph embedding methods are among the best tools for data mining; they map each user to a lower-dimensional vector that captures structural information about the network, such as the user's neighborhood and popularity [53]. According to previous research, OSNs suffer from discrimination that favors the majority group, which is against anti-discrimination laws [53].

The work presented in [54] focused on calibrating the long-tail issue in session-based recommendation, whose models can be divided into Recurrent Neural Network (RNN)-based models and GNN-based models. This work evaluated the models with accuracy metrics (e.g., MRR and recall) and measured popularity bias with metrics including coverage and tail coverage. In addition, a calibration module was proposed that uses the session representation to predict the ratio of tail items in the recommendation list. A two-stage curriculum training strategy was also used to improve the accuracy of predictions in the calibration module.
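The following minimal Python sketch shows one common way to compute these four metrics for next-item prediction, assuming one true next item per session: coverage is the fraction of the catalog that appears in at least one top-k list, and tail coverage is the same quantity restricted to long-tail items. The function names and data are hypothetical, and [54] may define the metrics with different cut-offs or normalizations.

```python
from typing import Hashable, Sequence, Set


def coverage(rec_lists: Sequence[Sequence[Hashable]], items: Set[Hashable]) -> float:
    """Fraction of `items` that appear in at least one recommendation list.
    Pass the whole catalog for coverage, or only the tail items for tail coverage."""
    recommended = set().union(*rec_lists)
    return len(recommended & items) / len(items)


def recall_at_k(rec_lists, targets, k: int) -> float:
    """Share of sessions whose true next item appears in the top-k list."""
    hits = sum(1 for recs, target in zip(rec_lists, targets) if target in recs[:k])
    return hits / len(targets)


def mrr_at_k(rec_lists, targets, k: int) -> float:
    """Mean reciprocal rank of the true next item within the top-k list (0 if absent)."""
    total = 0.0
    for recs, target in zip(rec_lists, targets):
        top_k = list(recs[:k])
        if target in top_k:
            total += 1.0 / (top_k.index(target) + 1)
    return total / len(targets)


if __name__ == "__main__":
    catalog, tail = set("abcdef"), set("def")
    recs = [["a", "b", "d"], ["a", "c", "b"]]  # top-3 lists for two sessions
    targets = ["b", "c"]                       # true next items
    print(coverage(recs, catalog), coverage(recs, tail))
    print(recall_at_k(recs, targets, 3), mrr_at_k(recs, targets, 3))  # 1.0 0.5
```

Both sessions hit their target, yet only one of the three tail items is ever recommended; accuracy and tail coverage can diverge, which is the gap a calibration module targets.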

In [55], graph-based Collaborative Filtering (CF) approaches for RSs were investigated, considering the two-fold performance of currently used graph-based CF methods in terms of accuracy and novelty. The results indicated that the symmetric neighborhood aggregation used in most graph-based CF models amplifies popularity bias in RSs, and that this amplification grows as the depth of graph propagation increases.
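One intuition for this effect can be sketched with a single LightGCN-style propagation step, where item i aggregates its users with symmetric weights 1/√(d_u d_i). Assuming, purely to isolate the normalization, that every user has the same scalar embedding 1.0 and the same degree d, the output is d_i/√(d·d_i) = √(d_i/d), so it grows with item degree and inner-product scores favor popular items. This is a hypothetical toy illustration, not a reproduction of the analysis in [55].

```python
import math
from typing import Dict, List


def propagate_to_items(user_items: Dict[str, List[str]], user_emb: Dict[str, float]) -> Dict[str, float]:
    """One propagation step with symmetric normalization:
    e_i = sum over users u in N(i) of e_u / sqrt(d_u * d_i)."""
    item_users: Dict[str, List[str]] = {}
    for user, items in user_items.items():
        for item in items:
            item_users.setdefault(item, []).append(user)
    out = {}
    for item, users in item_users.items():
        d_i = len(users)  # item degree
        out[item] = sum(user_emb[u] / math.sqrt(len(user_items[u]) * d_i) for u in users)
    return out


if __name__ == "__main__":
    # Four users, each with degree 2: all share "pop", and each has one niche item.
    user_items = {f"u{k}": ["pop", f"niche{k}"] for k in range(1, 5)}
    user_emb = {u: 1.0 for u in user_items}  # assumption: identical scalar embeddings
    out = propagate_to_items(user_items, user_emb)
    print(out["pop"], out["niche1"], out["pop"] / out["niche1"])  # ratio sqrt(4 / 1) = 2
```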

Work on the bias and fairness problem in GNN-based RSs remains very limited, and most research in this area focuses on sensitive information in RSs. The work in [14] focused on eliminating sensitive information in representation learning to achieve fair representation learning for fair recommendation. To address this problem, a model-agnostic, graph-based perspective on fairness-aware representation learning was introduced. The proposed model takes user and item embeddings from any recommendation model as input and defines a sensitive feature set. It works as a filter that obscures any sensitive information in the defined set without damaging recommendation accuracy. In this structure, each user is represented by an ego-centric graph, which allows the filters to be trained through a graph-based adversarial training process. The discriminators were designed to predict the attribute of concern, and the filters were trained to remove any sensitive information that can leak from the user-centric graph structure. The model was evaluated on two real-world datasets and showed high performance.

The aim of [15] was to overcome two major challenges for fairness in GNN-based RSs with limited sensitive information: first, how to eliminate discrimination despite the scarcity of sensitive attributes; second, how to ensure the fairness of the GNN classifier in the RS. To tackle these issues, a new approach called FairGNN was introduced for fair node classification. FairGNN uses a sensitive attribute estimator to predict missing sensitive attributes, with some noise, and uses these estimates within an adversarial learning setup to obtain a fair classifier on different datasets. The results of experiments on real-world datasets showed that the proposed model is effective in terms of both fairness and classification performance.

Another work [53] focused on quantifying and tackling fairness problems in graph embedding methods, using the node2vec approach, for GNN-based RSs. To address this problem, the article provided a new method for studying the algorithmic fairness of node2vec. In addition, the statistical parity method (which uses sensitive attributes of pairs of users to measure group fairness) was extended, and the novel notion of Equality of Representation was proposed to quantify fairness in a friendship RS. The node2vec approach was then applied to a real-world OSN dataset to discover biases in the recommendations caused by unfair graph embeddings. Finally, a new fairness-aware graph embedding algorithm called Fairwalk was introduced as an extension of node2vec.
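The core idea of Fairwalk can be sketched as a two-stage neighbor choice inside a random walk: first choose a sensitive-attribute group uniformly among the current node's neighbors, then choose a neighbor uniformly within that group, so a minority group is not drowned out by a majority one. The minimal Python sketch below assumes unbiased walks (node2vec with p = q = 1) and omits the return and in-out parameters as well as the subsequent skip-gram training; the function name is hypothetical.

```python
import random
from typing import Dict, Hashable, List


def fairwalk(adj: Dict[int, List[int]], sensitive: Dict[int, Hashable],
             start: int, length: int, rng: random.Random) -> List[int]:
    """One Fairwalk-style walk: at each step, pick a sensitive-attribute group uniformly
    among the current node's neighbors, then pick a neighbor uniformly within that group.
    Group values must be sortable (e.g., strings)."""
    walk = [start]
    while len(walk) < length:
        neighbors = adj[walk[-1]]
        if not neighbors:
            break  # dead end: stop the walk early
        by_group: Dict[Hashable, List[int]] = {}
        for n in neighbors:
            by_group.setdefault(sensitive[n], []).append(n)
        group = rng.choice(sorted(by_group))  # sorted so a fixed seed gives reproducible walks
        walk.append(rng.choice(by_group[group]))
    return walk


if __name__ == "__main__":
    # Hub 0 has nine neighbors in group "a" and one neighbor (node 10) in group "b".
    adj = {0: list(range(1, 11)), **{n: [0] for n in range(1, 11)}}
    sensitive = {0: "a", **{n: "a" for n in range(1, 10)}, 10: "b"}
    rng = random.Random(0)
    steps = [fairwalk(adj, sensitive, 0, 2, rng)[1] for _ in range(10_000)]
    print(steps.count(10) / len(steps))  # close to 0.5, versus 0.1 for a uniform walk
```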

The exposure bias problem in GNN-based RSs was addressed in [56], which proposed neighbor aggregation via inverse propensity. This approach balances the biased local structure of each target node by estimating a user–item propensity score for each interaction in the graph; the inverse propensity score with Laplacian normalization is then used as the edge weight during neighbor aggregation. This emphasizes less-popular neighbors in the resulting embeddings. The results showed that the debiasing method is effective and improves the performance of the model.
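A minimal Python sketch of inverse-propensity neighbor weighting for one target user follows. It assumes that the propensity of an interaction (u, i) is estimated by item popularity, pop(i) / n_users, and uses row (random-walk) normalization so the weights sum to 1; both are assumptions for illustration, and [56] may estimate propensities differently and apply symmetric Laplacian normalization instead. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def ips_neighbor_weights(user_items: Dict[str, List[str]], user: str) -> Dict[str, float]:
    """Aggregation weights over `user`'s item neighbors, proportional to inverse propensity.
    ASSUMPTION: propensity(u, i) = pop(i) / n_users; weights are row-normalized to sum to 1."""
    popularity = Counter(item for items in user_items.values() for item in items)
    n_users = len(user_items)
    inverse_propensity = {i: n_users / popularity[i] for i in user_items[user]}
    total = sum(inverse_propensity.values())
    return {i: w / total for i, w in inverse_propensity.items()}


def aggregate(weights: Dict[str, float], item_emb: Dict[str, List[float]]) -> List[float]:
    """Weighted sum of the neighbor item embeddings."""
    dim = len(next(iter(item_emb.values())))
    out = [0.0] * dim
    for item, w in weights.items():
        for d in range(dim):
            out[d] += w * item_emb[item][d]
    return out


if __name__ == "__main__":
    # Four users share "pop"; each also has one niche item.
    user_items = {f"u{k}": ["pop", f"niche{k}"] for k in range(1, 5)}
    weights = ips_neighbor_weights(user_items, "u1")
    print(weights)  # niche item gets 0.8, popular item 0.2 (a plain mean would give 0.5 each)
    print(aggregate(weights, {"pop": [1.0, 0.0], "niche1": [0.0, 1.0]}))
```

Compared with a plain mean, the user's embedding is pulled toward the less-popular neighbor, which matches the qualitative effect described above.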

Considering the challenges in RSs discussed above, bias amplification is one of the most important issues to take into consideration. Moreover, although the GNN structure can enhance model accuracy, it can also make the bias problem worse. This problem clearly conflicts with the guidelines mentioned above and needs further investigation. In addition, sensitive attributes such as gender are very important in GNN-based RSs, and fairness toward these attributes needs to be taken into consideration.