Abstract

The integration of artificial intelligence (AI) technology into the Internet of Vehicles (IoV) has enabled smart services for intelligent connected vehicles (ICVs). However, as vehicles are gradually upgraded to ICVs, the growing number of external communication interfaces exposes in-vehicle networks (IVNs) to malicious network intrusion. Intruders can take over a compromised ICV and, through it, indirectly intrude into other ICVs connected via the IoV. Therefore, it is urgent to develop IVN intrusion detection methods for IoV security protection. In this paper, a ConvLSTM-based IVN intrusion detection method is developed by leveraging the periodicity of the network message ID. For training the ConvLSTM model, a federated learning (FL) framework with client selection is proposed. The fundamental FL framework works in the client-server mode: ICVs are the local clients, and mobile edge computing (MEC) servers connected to base stations (BSs) function as the parameter servers. Based on the framework, a proximal policy optimization (PPO)-based federated client selection (FCS) scheme is further developed to optimize the model accuracy and system overhead of federated ConvLSTM model training. Simulations are conducted with real-world IoV scenario settings and IVN datasets. The results indicate that, by exploiting the ConvLSTM, the model size and convergence time are dramatically reduced while a detection accuracy above 95% is maintained. The results also show that the PPO-based FCS scheme outperforms the benchmarks in convergence rate, model accuracy, and system overhead.

1. Introduction

With the rapid deployment of the Internet of Vehicles (IoV), traditional vehicles are gradually being upgraded to intelligent connected vehicles (ICVs) and equipped with an increasing number of on-board units (OBUs) for efficient communications [,]. The increasing number of vehicle-to-everything (V2X) communication modules has empowered the intelligent services of IoV while exposing the in-vehicle networks (IVNs) of ICVs to malicious network intrusion []. As one of the most commonly deployed in-vehicle communication networks, the controller area network (CAN) lacks security mechanisms []. By compromising the external communication interfaces, attackers can break into the internal networks and take over the compromised vehicles [,]. Furthermore, vehicles connected to the compromised vehicles can in turn be intruded on through the IoV. Hence, the development of in-vehicle network intrusion detection methods is a necessity.

An intruder of IVNs can launch malicious attacks, which subsequently cause irregular patterns in the network messages. Thus, network message modeling and prediction have become a potential solution to malicious intrusion detection. Recently, artificial intelligence (AI) technology, particularly deep neural networks (DNNs), which are well suited to modeling non-linear and high-dimensional data, has been widely used for network intrusion detection [,]. Given the increasing complexity of the IVN message pattern, research efforts have been devoted to applying DNNs to IVN intrusion detection []. However, the design of DNNs for IVN messages still needs to be studied.

DNN model training is generally computation-intensive and demands a massive amount of data and resources, which an individual ICV can hardly provide. For centralized DNN model training conducted in the cloud, on the other hand, wireless data uploading carries the risk of privacy leakage and high access latency, so the ultra-reliable low-latency communication (uRLLC) required in IoV can hardly be guaranteed []. Under such a situation, federated learning (FL), with the advantages of secure multi-party computation and privacy preservation, is considered to be a promising solution [,].

An FL framework generally works in either client-server mode or peer-to-peer mode. In IoV, the client-server FL framework is commonly adopted, where ICVs are the clients and mobile edge computing (MEC) servers connected to base stations (BSs) are the parameter servers. Within such a framework, the clients hold their own datasets and conduct the model training locally. After each round of local training, the model is uploaded to the parameter server for global aggregation []. With FL, ICVs keep their data locally to avoid leakage, and model parameters are uploaded instead of massive raw data, which relieves the burden on communication bandwidth. Nevertheless, due to the heterogeneity of ICVs, it is generally neither possible nor efficient to adopt all of them as federated clients. Therefore, to maximize the model training accuracy and minimize the related overhead, client selection also needs to be investigated [].

In this paper, we mainly focus on two problems, the DNN-enabled intrusion detection and the client selection for federated DNN model training in IoV.

1.1. Related Work

The research efforts dedicated to the DNN design and its application to the IVN intrusion detection are overviewed in Section 1.1.1. The efforts on the deep reinforcement learning (DRL)-based client selection for FL in IoV are analyzed in Section 1.1.2.

1.1.1. DNN-Enabled IVN Intrusion Detection

Syntax Parsing: The authors in [] are the first to apply DNNs to IVN intrusion detection. The data payload of a CAN message is parsed according to its semantics for feature extraction. A linearly combinational DNN model is built as a binary classifier to distinguish illegal packets from normal ones. The illegal packets are generated by injecting packets with a manipulated data field. The overall detection accuracy is around 97.8%. However, the feature extraction relies on semantics, and for security reasons most manufacturers do not publish the protocols needed to interpret them.

Image Conversion: In [], the authors convert the feature extracted from the physical layer signal of the CAN bus into an image. A convolutional neural network (CNN) model is built for the feature image. The CNN-based method can be applied to spoofing attack detection and achieves an accuracy above 90% with limited training data. Seo et al. also convert the message into an image []. A generative adversarial network (GAN) model is built for image modeling. The method can detect unknown attacks with 98% accuracy. However, the conversion to a 2D image and the subsequent image processing bring an extra computational burden.

Time Series Prediction: In [], the authors have proposed an LSTM-based method. Similar to [], it also selects the 64-bit data field as the feature vector, but it does not parse the syntax. For each CAN message ID, a separate LSTM model is set up to predict the incoming data field. For each ID, performance is evaluated individually with respect to interleaving, drop, discontinuity, unusual, and reverse anomalies, and the results are quite diverse. The authors in [] have conducted similar work, using hierarchical temporal memory (HTM) instead of LSTM for data sequence prediction. Considering the dozens of legal IDs in a vehicle, building separate prediction models for all the IDs is computation-intensive. Zhu et al. select both the message time interval and the 64-bit data field to build a feature vector []. An LSTM model is built for the combined feature. The proposed method has been applied to spoof, replay, flood, drop, and tamper attacks and obtains an accuracy above 90%. Instead of the 64-bit data field, the authors in [] propose an IVN intrusion detection method based on LSTM prediction of the vectorized ID time series. The F1-score of insertion detection is 0.9, and those of drop and illegal ID detection are 0.84 and 1, respectively. In our previous work, we have also modeled the ID sequence as a time series and proposed an LSTM-based ID prediction and intrusion detection method, where the LSTM model is trained by the fundamental FL []. The overall detection accuracy is over 90%. Given that the one-hot encoded ID vector is sparse, adding a pre-processing layer to the DNN model was identified as future work to reduce the vector dimension and improve the computational efficiency.

In this work, we adopt the time series prediction while considering the characteristics of the input vector and thus propose a convolutional LSTM (ConvLSTM)-based network intrusion detection method. By exploiting the ConvLSTM, the input vector can be preprocessed with the convolutional filter and then modeled with LSTM for the prediction. As compared to modeling with LSTM only, the neural network model size and convergence time are dramatically reduced while maintaining identical accuracy.

1.1.2. DRL-Based Federated Client Selection for IoV

In IoV, most of the existing works have adopted the client-server FL framework. In [], the authors adopt vehicles as clients, while deploying road-side units (RSUs) as parameter servers. A generalized Pareto distribution is trained by the FL for the data queue length of a vehicle exceeding a predefined threshold, to solve the optimization problem of power and resource allocation. In [], a double-layered blockchain is developed as the FL framework. The lower layer is similar to []. In the upper layer, the RSUs act as the clients and BSs are the parameter servers. The blockchain is developed for secure knowledge sharing in IoV. In [], a hybrid blockchain is proposed for FL. The roles of vehicles and RSUs are similar to []. To maintain the hybrid blockchain, the vehicles jointly build the directed acyclic graph in the basic layer, and the RSUs form the upper mainchain.

To further optimize the efficiency and accuracy of FL, the issue of client selection in the client-server FL framework needs to be studied. AI technology has been gradually applied to the autonomous organization and management of Internet of Everything systems [,]. As one of the main branches of AI for decision-making, DRL is becoming a promising solution to federated client selection (FCS).

In [], the adaptive client selection problem is formulated as a Markov decision process (MDP). The state space is the combinational set of resources and capabilities of local clients. The action space is the combination of client selection actions. The DRL algorithm double deep Q network (DDQN) is adopted to solve the MDP problem. By exploiting the DDQN, the related overhead of FL is reduced compared to client selection based on traditional optimization algorithms. In [], based on the proposed hybrid blockchain-based FL framework, the client selection is formulated as an MDP. The DRL algorithm adopted is deep deterministic policy gradient (DDPG). DDQN is a value-based DRL algorithm that optimizes the Q-value, while DDPG is a policy-gradient algorithm that optimizes the policy directly. Considering the characteristics of client selection and the advantages of policy-gradient DRL algorithms, proximal policy optimization (PPO) is adopted in our previous work [] for federated client selection. It outperforms the DDQN-based client selection in the convergence rate and model accuracy of FL.

This work applies a client-server FL framework with the PPO-based FCS scheme to the ConvLSTM neural network model training. To improve the model accuracy of FL, the weight divergence is included in the client selection criterion to evaluate the quality of the local datasets of candidate clients. In addition to the analysis of the convergence rate, test accuracy, and system overhead of training, intrusion detection based on the federated ConvLSTM model is evaluated. The federated ConvLSTM-based IVN intrusion detection can dramatically reduce the convergence time and system overhead while maintaining identical detection accuracy.

1.2. Contributions

Overall, to resolve the above issues, a ConvLSTM-based IVN intrusion detection method is first proposed, followed by a federated ConvLSTM neural network model training scheme with client selection. Specifically, by leveraging the periodicity of the CAN message ID, the ID is vectorized by one-hot encoding, and a ConvLSTM neural network is built for the modeling and prediction of the ID vector series. An ID prediction-based intrusion detection algorithm is proposed then. Furthermore, for training the ConvLSTM, an FL framework with the DRL-based FCS scheme is developed. The FL framework fundamentally works in a client-server way. Based on the framework, an MDP is formulated for FCS, where the local data quality, computational and communication capabilities, and energy capacity of candidate clients are all considered. The MDP problem of FCS is finally solved by a policy-gradient DRL algorithm, namely, proximal policy optimization (PPO). The main contributions are summarized as follows.

- A ConvLSTM-based intrusion detection method is developed for malicious attack detection. Simulation results indicate that by exploiting the ConvLSTM, the input ID vector can be preprocessed with the convolutional filter first and then modeled with LSTM for the prediction, which dramatically reduces the model size and improves the convergence rate while maintaining a detection accuracy above 95%.

- For ConvLSTM training, a client-server FL framework is built. To further optimize the performance of the federated ConvLSTM model training, an MDP is set up for formulating the problem of FCS.

- A PPO-based FCS scheme is proposed for resolving the MDP problem. Simulation results unveil that the proposed scheme jointly optimizes the convergence rate, model accuracy, and related overhead of FL as compared to the benchmarks, including centralized model training, fundamental FL without an FCS scheme, and FL with the DDQN-based FCS scheme.

The rest of this paper is organized as follows. In Section 2, the fundamental system model and typical malicious attacks are introduced. Section 3 and Section 4 propose the ConvLSTM-based in-vehicle network intrusion detection and the federated ConvLSTM neural network model training with client selection, respectively. The performance evaluation is presented in Section 5. Finally, this work is summarized in Section 6.

2. Preliminaries

This section introduces the preliminaries of this work. The mathematical notations are summarized in Table 1 first, followed by the fundamental system model and the malicious attacks struck by network intrusion.

Table 1.

Summary of Notations.

2.1. Fundamental System Model

The IoV system mainly consists of three computing layers, including local, edge, and cloud. The local computing layer is formed by the ICVs. The edge computing layer is composed of MEC servers and their connected BSs. The cloud computing layer is mainly supported by the cloud server. In the client-server FL framework, ICVs and MEC servers work as the local clients and parameter servers, respectively. Moreover, the communication and system overhead models of the IoV system are set up as follows.

2.1.1. Communication Model

The 5G NR-V2X is used as the C-V2X communication model [,]. For V2I (vehicle-to-infrastructure) communications, the data rate of the uplink from vehicle j to the MEC server is

$$r_j = B \log_2\left(1 + \frac{p_j h d_j^{-\alpha}}{N_0}\right) \tag{1}$$

where $B$ is the channel bandwidth, $p_j$ and $d_j$ are the transmission power and the distance from vehicle j to the MEC server, respectively, $\alpha$ is the path loss exponent, $h$ is the fading coefficient of the uplink Rayleigh fading channel, and $N_0$ is the power of the additive white Gaussian noise (AWGN).
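The uplink rate can be illustrated with a short numerical sketch. It assumes the usual Shannon-capacity form with distance-based path loss, which is what the listed quantities suggest. The function and parameter names are hypothetical and not taken from the paper.

```python
import math


def uplink_rate(bandwidth_hz, tx_power_w, distance_m, path_loss_exp,
                fading_gain, noise_power_w):
    """Uplink data rate in bit/s under an assumed Shannon-capacity form.

    The received power is modeled as tx_power * fading_gain * distance^(-alpha),
    which is an assumption about the exact form of the communication model.
    """
    snr = tx_power_w * fading_gain * distance_m ** (-path_loss_exp) / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


# Hypothetical numbers: a vehicle farther from the MEC server gets a lower rate.
near = uplink_rate(1e6, 0.2, 50.0, 3.0, 1.0, 1e-10)
far = uplink_rate(1e6, 0.2, 200.0, 3.0, 1.0, 1e-10)
print(near > far)
```

In a deployment the rate would be measured rather than computed, so a function like this is only useful for simulation.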

2.1.2. Latency Model

It assumes that the data size for the model training of client j is , and the model size after local training is .

2.1.3. Energy Consumption Model

The energy consumption of local model training of client j is calculated as

where is the chip architecture-dependent effective switched capacitance. The energy consumption of the model uploading to a MEC server after local training is
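The two energy terms can be sketched numerically. The sketch assumes the common mobile edge computing forms: local training energy proportional to the effective switched capacitance, the number of CPU cycles, and the squared CPU frequency, and upload energy equal to transmission power times upload time. The cycles-per-sample parameter and all names are hypothetical, and the exact expressions used in the paper may differ.

```python
def local_training_energy(kappa, cycles_per_sample, num_samples, cpu_freq_hz):
    """Energy (J) for local training, assuming E = kappa * C * D * f^2."""
    return kappa * cycles_per_sample * num_samples * cpu_freq_hz ** 2


def upload_energy(tx_power_w, model_bits, rate_bps):
    """Energy (J) for uploading the model, assuming E = p * (M / r)."""
    return tx_power_w * model_bits / rate_bps


# Hypothetical client: 1000 samples, 1 GHz CPU, 1 Mbit model, 1 Mbit/s uplink.
total = local_training_energy(1e-28, 20, 1000, 1e9) + upload_energy(0.2, 1e6, 1e6)
print(total)
```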

2.1.4. Local Data Quality Model

To evaluate the quality of the local data of a client, weight divergence is introduced to this work []. Specifically, the weight divergence is defined as

$$\mathrm{WD}_j = \frac{\lVert w_j - w_g \rVert}{\lVert w_g \rVert} \tag{8}$$

where $w_j$ is the model weight after local model training, and $w_g$ is the model weight after global aggregation at the parameter server.
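A minimal sketch of the weight divergence follows. It assumes the relative Euclidean distance between the flattened local and global weights, a common definition in the non-IID FL literature. The function name and the flat-list representation are illustrative assumptions.

```python
import math


def weight_divergence(local_w, global_w):
    """Relative L2 distance between local and global weights (assumed form)."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(local_w, global_w)))
    ref = math.sqrt(sum(b ** 2 for b in global_w))
    return diff / ref


# A client whose weights match the global model has zero divergence.
print(weight_divergence([3.0, 4.0], [3.0, 4.0]))
```

Under this definition, a larger value indicates that the local data pull the model further away from the global solution, which is why it can serve as a proxy for local data quality.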

2.2. Malicious Attacks

Four kinds of malicious attacks that frequently occur in IVNs are considered, including spoofing, replay, drop, and Denial-of-Service (DoS) []. The impact of the attacks is depicted in Figure 1.

Figure 1.

Diagram of malicious attacks in an in-vehicle CAN network.

Spoofing: The attacker injects forged messages with a legal ID at irregular times.

Replay: The attacker captures a normal in-vehicle communication message and re-injects it at a later time.

Drop: The attacker blocks normal in-vehicle communication messages.

DoS: The attacker consecutively forges high-priority messages, e.g., CAN messages with ID 0x0000, and thus hinders the normal in-vehicle communications.

3. ConvLSTM-Based In-Vehicle Network Intrusion Detection

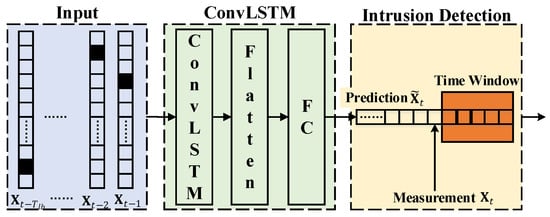

The overall diagram is shown in Figure 2, and details are provided as follows.

Figure 2.

Diagram of ConvLSTM-based in-vehicle network intrusion detection method.

3.1. One-Hot Encoding

The ID of a CAN message is an 11-bit value up to 0x07FF. In the proposed method, an ID is vectorized by one-hot encoding so that the distance between different IDs can be enlarged. An ID is encoded as a binary vector of length L. To keep the vector from becoming overly long, L is set as the number of in-vehicle legal IDs instead of the upper bound 0x07FF.
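The encoding step can be sketched as follows. The helper names are hypothetical, and mapping an ID outside the legal set to an all-zero vector is an assumption made for illustration only.

```python
def build_id_index(legal_ids):
    """Map each legal CAN ID to a position in the one-hot vector."""
    return {can_id: i for i, can_id in enumerate(sorted(set(legal_ids)))}


def one_hot(can_id, id_index):
    """Encode a CAN ID as an L-length binary vector, L = number of legal IDs."""
    vec = [0] * len(id_index)
    pos = id_index.get(can_id)
    if pos is not None:  # assumption: illegal IDs map to the all-zero vector
        vec[pos] = 1
    return vec


id_index = build_id_index([0x2C0, 0x130, 0x2C0, 0x350])
print(one_hot(0x2C0, id_index))
```

With the 45 legal IDs of the dataset used later in the paper, each vector has 45 entries rather than 2048.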

3.2. ConvLSTM Neural Network

The ConvLSTM neural network is shown in Figure 2. It is a sequential model that consists of a ConvLSTM layer [], a flatten layer, and a fully-connected (FC) layer. Specifically, the input is the vectorized ID series, whose length equals the time step size. The activation function of the last layer is softmax. The ConvLSTM neural network finally outputs an L-length vector, $\hat{y}$. The loss function is calculated by categorical cross-entropy,

$$\mathcal{L} = -\sum_{i=1}^{L} y_i \log \hat{y}_i \tag{9}$$

where $y$ is the training target vector.

3.3. Intrusion Detection

The output of the ConvLSTM neural network is an L-length softmax vector. Hence, for intrusion detection, it is first converted to an L-length binary vector, in which only the bit with the highest probability is set to 1. The error between the predicted and measured vectors is

where is the measured vector. is a fixed value, empirically, .

Considering the randomness of the in-vehicle communications, the error cumulated over a time window is applied as the detection criterion. A network intrusion is flagged, when the cumulative error is beyond a predefined threshold . The detection criterion is defined as

where is the size of the time window.
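The detection logic of this subsection can be sketched as below. The per-step error is replaced here by a simple 0/1 mismatch indicator, which is an assumption standing in for the paper's error measure. The function names and the sliding-window handling at the start of the sequence are also illustrative.

```python
def to_binary(softmax_vec):
    """Keep only the most probable position of a softmax vector."""
    top = max(range(len(softmax_vec)), key=lambda i: softmax_vec[i])
    return [1 if i == top else 0 for i in range(len(softmax_vec))]


def detect(pred_softmax_seq, measured_seq, window, threshold):
    """Flag time steps whose windowed cumulative error exceeds the threshold."""
    # Assumed per-step error: 1 if the predicted ID differs from the measured one.
    errors = [0.0 if to_binary(p) == list(m) else 1.0
              for p, m in zip(pred_softmax_seq, measured_seq)]
    flags = []
    for t in range(len(errors)):
        start = max(0, t - window + 1)
        flags.append(sum(errors[start:t + 1]) > threshold)
    return flags


preds = [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3], [0.6, 0.4]]
measured = [[1, 0], [0, 1], [0, 1], [0, 1]]
print(detect(preds, measured, window=2, threshold=1))
```

Accumulating over a window means that a single mispredicted message, which can occur in normal traffic, does not raise an alarm by itself.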

4. Federated ConvLSTM Neural Network Model Training with Client Selection

First of all, the problem of FCS is formulated as an MDP. To resolve the problem, the PPO-based FCS scheme is developed. Finally, the process of the federated ConvLSTM neural network model training is provided.

4.1. Problem Formulation for Federated Client Selection

The problem of FCS is first formulated as an MDP $(S, A, P, R)$.

4.1.1. State Space S

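The union of the state sets of all K candidate clients forms the state space,

$$S = \bigcup_{j=1}^{K} s_j$$

where $s_j$ refers to the state of an arbitrary client j,

$$s_j = \{\mathrm{WD}_j, f_j, E_j, r_j\}$$

where $\mathrm{WD}_j$ is the calculated weight divergence, $f_j$ is the available CPU-cycle frequency, $E_j$ is the remaining energy, and $r_j$ is the uplink data rate.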

4.1.2. Action Space A

Assuming that $a_j \in \{0, 1\}$ is the binary selection action of client j, 1 for selected and 0 for unselected, the action space over the K candidate clients is formed as

$$A = \prod_{j=1}^{K} \{0, 1\}$$

where ∏ is the Cartesian product.

4.1.3. State Transition P

The transition from the current state to the next state is determined by the combination of all the clients. It is assumed that the weight divergence and the available CPU-cycle frequency remain stable during the training process, so both stay unchanged in the state transition. The data rate is determined by the communication model.

In terms of energy consumption, if client j is selected, its energy consumption is estimated by (7). For the unselected case, we assume that no related energy consumption occurs. Therefore, the remaining energy is updated by

$$E_j^{t+1} = E_j^{t} - a_j^{t} E_j^{\mathrm{cons}}$$

where $E_j^{t}$ is the remaining energy of client j at time index t, $E_j^{\mathrm{cons}}$ is its energy consumption estimated by (7), and $a_j^{t}$ is the selection action of client j at time index t.
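The per-client state and its transition can be sketched as a small data structure. The class and field names are hypothetical. The sketch follows the stated assumptions: weight divergence and CPU-cycle frequency stay fixed, the uplink rate is refreshed from the communication model, and energy is deducted only when the client is selected.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ClientState:
    weight_divergence: float
    cpu_freq_hz: float
    remaining_energy_j: float
    uplink_rate_bps: float


def step_client(state, selected, energy_cost_j, next_rate_bps):
    """Apply one state transition for a single client."""
    spent = energy_cost_j if selected else 0.0  # unselected clients spend nothing
    return replace(state,
                   remaining_energy_j=state.remaining_energy_j - spent,
                   uplink_rate_bps=next_rate_bps)


s0 = ClientState(0.3, 1e9, 10.0, 1e6)
print(step_client(s0, selected=1, energy_cost_j=2.5, next_rate_bps=2e6))
```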

4.1.4. Reward Function R

Corresponding to the optimization objective of federated client selection, the reward function is set up as

where N is the number of selected clients, and K is the number of candidate clients. is the normalized weighted divergence,

is the normalized energy consumption,

is the normalized latency,

4.2. PPO-Based Federated Client Selection

In this subsection, a PPO-based FCS scheme is developed to solve the MDP problem formulated in Section 4.1. The pseudocode is listed in Algorithm 1.

Algorithm 1.

PPO-based FCS Scheme.

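PPO is a policy-gradient DRL algorithm []. In PPO, the policy is formulated by a neural network with parameters $\theta$, denoted $\pi_\theta$. The objective function of the policy is given as

$$\theta_{k+1} = \arg\max_{\theta} \frac{1}{|D_k| T} \sum_{\tau \in D_k} \sum_{t=0}^{T} \min\left( \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_k}(a_t \mid s_t)} A_t,\ g(\epsilon, A_t) \right)$$

where $\pi_\theta(a_t \mid s_t)$ refers to the policy that chooses action $a_t$ given state $s_t$ at time index t. $\tau_i$ is the trajectory of actor i running policy $\pi_{\theta_k}$ in the environment for $T$ timesteps, where the reward $r_t$ collected for choosing action $a_t$ given state $s_t$ is determined by (16). $D_k = \{\tau_i\}$ is the set of trajectories. $g(\epsilon, A_t)$ is the clip function of the policy update and is defined by

$$g(\epsilon, A_t) = \begin{cases} (1+\epsilon) A_t, & A_t \ge 0 \\ (1-\epsilon) A_t, & A_t < 0 \end{cases}$$

where $\epsilon$ is a hyperparameter of the clip function, set empirically. $A_t$ is the advantage function and is given by

$$A_t = \sum_{l=0}^{T-t} (\gamma\lambda)^{l} \delta_{t+l}$$

where $\gamma$ and $\lambda$ are the discount and smoothing factors, respectively, both set empirically. $\delta_t$ is the temporal difference error,

$$\delta_t = r_t + \gamma V_\phi(s_{t+1}) - V_\phi(s_t)$$

where the next state $s_{t+1}$ is generated based on the rule of state transition defined in Section 4.1.3. $V_\phi$ is the value function formulated by a neural network with parameters $\phi$. The value function is updated by

$$\phi_{k+1} = \arg\min_{\phi} \frac{1}{|D_k| T} \sum_{\tau \in D_k} \sum_{t=0}^{T} \left( V_\phi(s_t) - \hat{R}_t \right)^2$$

where $\hat{R}_t$ is the expected reward.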
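The two core computations of PPO, the clipped surrogate term and the smoothed advantage, can be sketched in plain Python. The sketch uses the standard PPO-clip and generalized advantage estimation forms, which are assumed here to match the scheme described above. The function names and the numerical values in the example are illustrative only.

```python
def clip_term(eps, adv):
    """Clip function: bounds how far one update can move the policy."""
    return (1.0 + eps) * adv if adv >= 0 else (1.0 - eps) * adv


def surrogate(ratio, adv, eps):
    """Per-step clipped objective; ratio = pi_new(a|s) / pi_old(a|s)."""
    return min(ratio * adv, clip_term(eps, adv))


def gae(rewards, values, gamma, lam):
    """Advantages from TD errors; values has one extra bootstrap entry."""
    adv = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # TD error
        running = delta + gamma * lam * running
        adv[t] = running
    return adv


print(surrogate(1.5, 1.0, 0.2))
print(gae([1.0, 1.0], [0.0, 0.0, 0.0], gamma=1.0, lam=1.0))
```

The clipping is what limits the size of each policy update: once the probability ratio leaves the interval around 1, the objective stops rewarding further movement in that direction.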

4.3. Federated ConvLSTM Neural Network Model Training

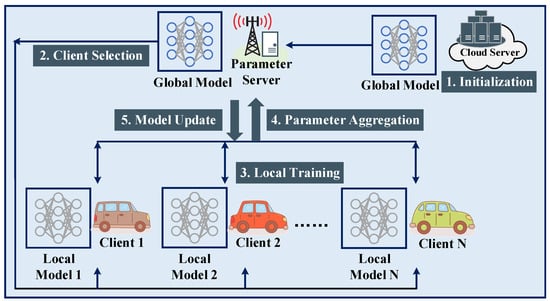

The process of federated ConvLSTM neural network model training is shown in Figure 3, and the details are supplemented as follows.

Figure 3.

Diagram of federated AI model training with client selection in IoV.

4.3.1. Neural Network Model Initialization

In the cloud, a ConvLSTM model is initialized for each vehicular series as the global model. The cloud then downloads the global ConvLSTM model to the MEC server. The candidate vehicles send FL request information to the server.

4.3.2. Federated Client Selection

- The candidate vehicles of the identical vehicular series receive an acknowledgment. Subsequently, the global ConvLSTM model is downloaded to the candidates.

- After the pre-round training, the candidate vehicles upload their local information to the MEC server, including the model parameters after training $w_j$, the available CPU-cycle frequency $f_j$, and the remaining energy $E_j$.

- The MEC server then selects N vehicles out of K candidates by executing the PPO-based FCS scheme, i.e., Algorithm 1. The state of a candidate vehicle includes the weight divergence $\mathrm{WD}_j$, $f_j$, $E_j$, and the uplink data rate $r_j$. $f_j$ and $E_j$ are offered by the candidates. $\mathrm{WD}_j$ is calculated based on $w_j$ by using (8). In this work, $r_j$ is estimated by the communication model (1). In practical application scenarios, $r_j$ is measured through onsite communications.

- The MEC server notifies the selected vehicles with an acceptance notice and the unselected vehicles with a rejection notice.

4.3.3. Local Model Training

The MEC server downloads the global ConvLSTM model to the N selected vehicular clients. Let the loss function of the ConvLSTM model (9) be the objective function $f(w)$. For FL, the objective function is formulated as

$$\min_{w \in \mathbb{R}^d} F(w) = \sum_{j=1}^{N} \frac{n_j}{n} F_j(w)$$

where $w$ is the model parameter, and d is its dimension. $n_j$ is the number of data samples held by client j. N is the number of selected clients. $n = \sum_{j=1}^{N} n_j$ is the total number of data samples. $F_j(w)$ is the local function,

$$F_j(w) = \frac{1}{n_j} \sum_{i \in \mathcal{D}_j} f_i(w)$$

where $\mathcal{D}_j$ is the local dataset, $n_j = |\mathcal{D}_j|$.

In this work, the clients perform the local training with their datasets by mini-batch gradient descent, $w_j \leftarrow w_j - \eta \nabla F_j(w_j)$, where $\eta$ is the learning rate. Upon the completion of local training, the clients send the updated model parameters $w_j$ to the MEC server.

4.3.4. Global Model Update by Parameter Aggregation

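At the MEC server, the global model is updated by the parameter aggregation, namely,

$$w_g = \sum_{j=1}^{N} \frac{n_j}{n} w_j$$

where $w_j$ is the updated model parameter of client j, $n_j$ is its number of data samples, and $n$ is the total number of data samples over the N selected clients.

In this work, the client-server FL framework adopts the synchronous mode. Each round of parameter aggregation is executed when the updated model parameters from all the N selected clients are collected.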
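The aggregation step can be sketched as a sample-weighted average of the client parameters. This is the usual federated averaging rule, assumed here to be the one intended. The function name and the flat-list representation of the parameters are illustrative.

```python
def fed_avg(client_weights, client_sizes):
    """Sample-weighted average of client parameter vectors."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(n * w[k] for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]


# Two hypothetical clients; the second holds three times as many samples.
print(fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

In the synchronous mode, this function would be called once per round, after the parameters of all N selected clients have arrived.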

4.3.5. Local Model Update

After the global parameter aggregation at the MEC server, the updated global model is sent back to the vehicles. The vehicles update their models with the new release.

The local model training and parameter aggregation repeat until the loss function converges or the iteration reaches the upper limit.

Finally, the convergent global ConvLSTM model is sent to the authenticated vehicles. In the meantime, a copy of the global ConvLSTM model is uploaded to the cloud.

5. Performance Evaluation

In this section, simulations are conducted to evaluate the performance of the proposed methods. The experimental settings are provided first, and then the numerical results are analyzed.

5.1. Experimental Settings

The environmental settings of the experimental platform are Ubuntu 22.04 LTS, Intel Xeon Gold 6230R CPU, 128G RAM, and NVIDIA GeForce RTX 3090 GPU.

5.1.1. Fundamentals

The fundamental system model of an IoV system is parameterized in Table 2 [,]. In particular, the transmission power and CPU-cycle frequency are randomly generated within the given ranges. In PPO, the neural networks of the policy and the value function are both multilayer perceptrons (MLPs). The MLP of the policy takes the state vector as input and outputs the action vector. Its two hidden layers have 64 units each and are activated by tanh. The MLP of the value function also takes the state vector as input and outputs the value. Its two hidden layers likewise have 64 units each and are activated by tanh. For PPO training, the number of actors is 1, the number of timesteps is 20, the number of epochs is 50, the optimizer is Adam, and the policy and the value function are each assigned their own learning rate.

Table 2.

Parameter Settings.

5.1.2. Dataset

Simulations are conducted based on the attack-free dataset of CAN messages published by the HCR Lab of Korea University []. The dataset has over one million samples in the format “Timestamp|Arbitration ID|RTR|DLC|Data Payload”. Rather than applying data pre-processing such as cleaning, filtering, and extraction, we keep all the original samples, including their randomness and redundancy, which preserves the practical characteristics of CAN messages.

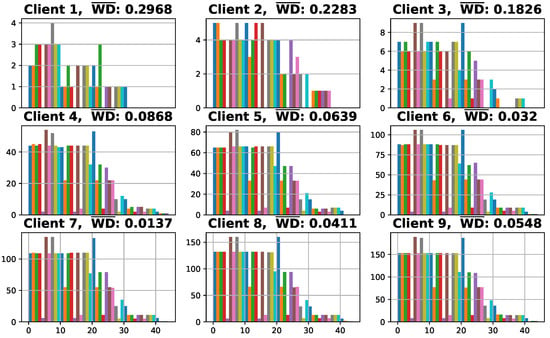

In this work, for centralized training, 10,000 data samples are used for training, of which 20% are held out for validation, and 2000 data samples are used for testing. For the federated training, the datasets held by clients are adjusted to be non-IID. We assume that there are nine candidate clients, holding 50, 100, 150, 1000, 1500, 2000, 2500, 3000, and 3500 data samples, respectively. The distributions of local datasets are demonstrated in Figure 4. In each subfigure, the horizontal and vertical axes refer to the index of the 45 legal IDs in the CAN message dataset and the number of corresponding samples, respectively. Moreover, the normalized weight divergence is reported, where the weight divergence is calculated based on the weight of the output layer of the ConvLSTM neural network model by using (8). It can be observed that the values of the clients with few local data samples are higher than those of the other clients. The reason is that the local data imbalance of these clients is more severe due to the small number of local data samples.

Figure 4.

Distribution of local datasets.

5.1.3. Attack Models

To mimic the attacks, the following items are generated and injected into the original dataset. Each attack randomly occurs 500 times.

Spoofing: A message with a random legal ID is injected twice at random time indexes.

Replay: A normal message is eavesdropped and injected twice right after.

Drop: Three consecutive messages are dropped.

DoS: Five forged messages with the ID “0x0000” are injected consecutively.
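The four attack generators can be sketched on a plain list of CAN IDs. The function names are hypothetical. The sketch interprets the spoofing description as the same forged ID being inserted at two random positions, which is an assumption, and it shows a single attack occurrence rather than the 500 repetitions.

```python
import random


def inject_spoofing(ids, legal_ids, rng):
    """Insert one random legal ID at two random positions (assumed reading)."""
    out = list(ids)
    forged = rng.choice(legal_ids)
    for _ in range(2):
        out.insert(rng.randrange(len(out) + 1), forged)
    return out


def inject_replay(ids, pos):
    """Repeat the message at pos twice, right after the original."""
    return ids[:pos + 1] + [ids[pos]] * 2 + ids[pos + 1:]


def inject_drop(ids, pos):
    """Remove three consecutive messages starting at pos."""
    return ids[:pos] + ids[pos + 3:]


def inject_dos(ids, pos):
    """Insert five consecutive messages with the highest-priority ID 0x0000."""
    return ids[:pos] + [0x0000] * 5 + ids[pos:]


trace = [0x130, 0x2C0, 0x350, 0x130, 0x2C0]
print(inject_replay(trace, 1))
print(inject_dos(trace, 2))
```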

5.1.4. Evaluation Metrics

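To evaluate the performance of network intrusion detection, the receiver operating characteristic (ROC) curve is adopted. The horizontal and vertical axes of the ROC curve are the false positive rate (FPR) and true positive rate (TPR), respectively. TPR and FPR are defined by

$$\mathrm{TPR} = \frac{TP}{TP + FN}, \quad \mathrm{FPR} = \frac{FP}{FP + TN}$$

where TP, FN, FP, and TN refer to the numbers of true positives, false negatives, false positives, and true negatives, respectively.

Furthermore, the precision, recall, F1-score, and accuracy are adopted as well, which are defined by

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \quad \mathrm{Recall} = \frac{TP}{TP + FN}, \quad \mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \quad \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$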
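For reference, these metrics can be computed from the four confusion counts as in the sketch below, which uses their standard definitions. The function name is illustrative, and the sketch assumes that no denominator is zero.

```python
def detection_metrics(tp, fn, fp, tn):
    """Standard detection metrics from confusion-matrix counts."""
    tpr = tp / (tp + fn)          # true positive rate, identical to recall
    fpr = fp / (fp + tn)          # false positive rate
    precision = tp / (tp + fp)
    recall = tpr
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"tpr": tpr, "fpr": fpr, "precision": precision,
            "recall": recall, "f1": f1, "accuracy": accuracy}


# Hypothetical counts, not results from the paper.
print(detection_metrics(tp=8, fn=2, fp=2, tn=8))
```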

5.2. Analysis of IVN Intrusion Detection

To evaluate the performance of the ConvLSTM-based intrusion detection method, LSTM neural networks with CAN message ID vectorization (LSTM-Vector) and normalized series of CAN message ID (LSTM-Series) are adopted as benchmarks []. The structures of ConvLSTM and benchmarks are listed in Table 3.

Table 3.

Neural Network Structure: ConvLSTM and Benchmarks.

The comparison on the ROC curve between the proposed ConvLSTM and benchmarks is shown in Figure 5a, where the four types of attacks are considered. For the detection criterion in (11), the time window is set to 5. The threshold varies from 0 to 200 for LSTM-Vector and ConvLSTM, while it varies from 0 to 0.6 for LSTM-Series. Based on the results in Figure 5a, the threshold is set to 165 for LSTM-Vector and ConvLSTM and 0.6 for LSTM-Series in the following experiments because the TPR and FPR are then optimal.

Figure 5.

Comparison of network intrusion detection between proposed ConvLSTM-based method and benchmarks, i.e., LSTM-Vector and LSTM-Series: (a) ROC curve, (b) precision, recall, F1-score, and accuracy.

The comparison of the other evaluation metrics is demonstrated in both Table 4 and Figure 5b. It can be observed that the LSTM-Series can detect the spoofing and DoS attacks with an identical accuracy as the LSTM-Vector and the ConvLSTM, where the bias is about . However, LSTM-Series does not perform well in the detection of replay and drop attacks, where the bias is more than . Specifically, the F1-score and accuracy of the LSTM-Series on drop attack are 0.751 and 0.783, and those on replay attack are 0.667 and 0.890. Thus, it implies that the LSTM-Series taking the normalized series of CAN message ID is not sensitive to minor changes and can hardly detect certain attacks.

Table 4.

Numerical results: ConvLSTM and benchmarks.

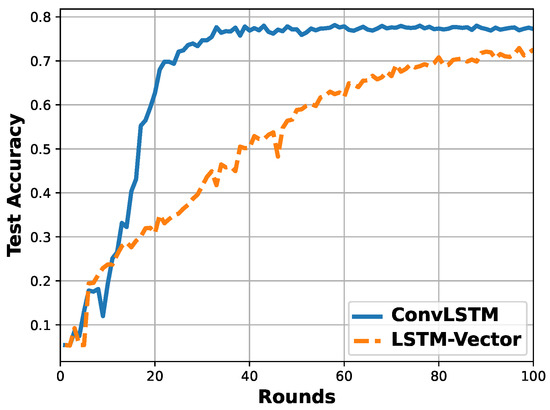

In terms of the two vector-based methods, namely, LSTM-Vector and ConvLSTM, the detection accuracy of the four types of attacks are identical with a bias of around . However, the test accuracy per round shown in Figure 6 indicates that the ConvLSTM converges faster than the LSTM-Vector. Furthermore, the model size of ConvLSTM with 256,621 parameters is smaller than that of LSTM-Vector with 3,457,453 parameters. It implies that the ConvLSTM can potentially reduce the communication overhead when conducting the model training in a federated way. The ConvLSTM can process the sparse input vector with a convolutional filter and maintain accuracy with a lightweight model. Hence, the proposed ConvLSTM is adopted for in-vehicle network intrusion detection.

Figure 6.

Comparison of test accuracy per round between LSTM-Vector and ConvLSTM.

5.3. Analysis of Federated Client Selection

The proposed PPO-based FCS scheme is evaluated here, and the DDQN-based FCS [] and fundamental FL without FCS are used as benchmarks. For the case of fundamental FL, all the clients in Figure 4 are selected.

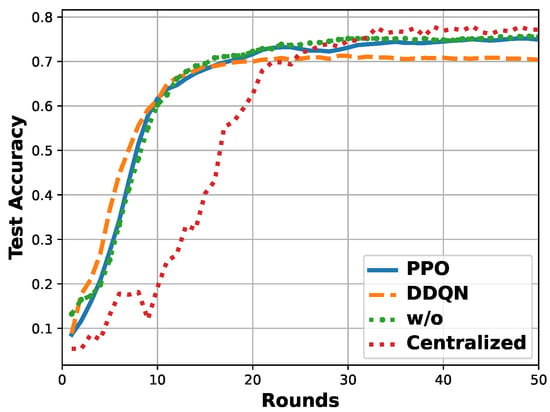

Test Accuracy: The test accuracy per round is shown in Figure 7, where, in addition to the two benchmarks mentioned, centralized training is also included. The test dataset is a balanced dataset with 2000 samples. To reach 70% accuracy, FL with the PPO-based FCS takes 16 rounds, while FL with the DDQN-based FCS, fundamental FL without FCS (w/o), and centralized training take 19, 16, and 25 rounds, respectively. The accuracy of FL with the PPO-based FCS finally reaches 0.751, and those of FL with the DDQN-based FCS, FL without FCS, and centralized training converge to 0.706, 0.756, and 0.772, respectively.

Figure 7.

Comparison of test accuracy per round among different ConvLSTM model training schemes: FL with PPO-based FCS, FL with DDQN-based FCS, FL without FCS (w/o), and centralized.
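The rounds-to-target comparison can be computed from a per-round accuracy curve as in the following minimal sketch. The helper name `rounds_to_reach` and the sample curve are hypothetical; the curve does not reproduce the data in Figure 7.

```python
def rounds_to_reach(accuracy_per_round, threshold=0.70):
    """Return the first 1-based round whose accuracy meets the threshold.

    Returns None if the threshold is never reached.
    """
    for round_idx, acc in enumerate(accuracy_per_round, start=1):
        if acc >= threshold:
            return round_idx
    return None


# Hypothetical accuracy curve for illustration only.
curve = [0.50, 0.58, 0.66, 0.71, 0.69, 0.74]
print(rounds_to_reach(curve))        # first round at or above 0.70
print(rounds_to_reach(curve, 0.90))  # None: threshold never reached
```

Using the first crossing keeps the measure simple, although a noisy curve may dip below the threshold again in later rounds.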

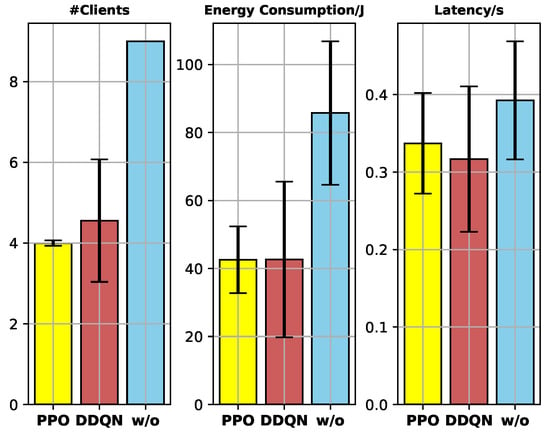

System Overhead: The client selection test is repeated 500 times. The mean and standard deviation of the number of selected clients (#clients), energy consumption (J), and latency (s) are illustrated in Figure 8.

Figure 8.

Results: #clients, energy consumption (J), and latency (s).

- Federated model training converges faster than centralized training when the total number of data samples used for training is the same. The reason is that, in each round, the FL clients conduct local training in parallel and jointly contribute to the model update.

- Compared to the fundamental FL, the proposed FCS scheme dramatically reduces the system overhead while maintaining the model training accuracy, because the unselected clients with low data quality contribute little to the model training.

- Compared to the DDQN-based FCS scheme, the proposed FCS scheme performs more stably, with a 95.52% lower standard deviation of #clients. This is because PPO is a policy-gradient DRL algorithm that optimizes the policy directly, and its clip function enhances the stability of the policy update.
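The stabilizing effect of the clip function can be illustrated with a minimal sketch of the clipped surrogate objective from the PPO paper by Schulman et al. The function name `clipped_surrogate` is hypothetical, and the clipping range `eps=0.2` is an assumed value; the setting used in the experiments is not stated here.

```python
def clipped_surrogate(ratios, advantages, eps=0.2):
    """Batch mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios:     probability ratios pi_new(a|s) / pi_old(a|s)
    advantages: advantage estimates for the same samples
    eps:        clipping range (assumed value, not from the paper's setup)
    """
    total = 0.0
    for r, a in zip(ratios, advantages):
        clipped_r = max(1.0 - eps, min(r, 1.0 + eps))
        total += min(r * a, clipped_r * a)
    return total / len(ratios)


# A large ratio with a positive advantage is capped at (1 + eps) * A.
print(clipped_surrogate([1.5], [1.0]))
# A small ratio with a negative advantage is held at (1 - eps) * A.
print(clipped_surrogate([0.5], [-1.0]))
```

Because the objective stops rewarding changes once the ratio leaves the range [1 - eps, 1 + eps], a single update cannot move the policy far from the one that collected the data.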

Computational Complexity: Based on the environmental settings in Section 5.1, the time costs of DRL training and DRL-based client selection are recorded. The training time costs of PPO and DDQN are 17.566 s and 8.109 s, respectively. The time costs per execution of client selection using the PPO-based and DDQN-based FCS schemes are 3.443 ms and 2.875 ms, respectively. Training PPO takes longer than training DDQN because PPO is an on-policy DRL algorithm, while DDQN is an off-policy one with higher sample efficiency. However, since the DRL training is executed at the MEC server, the training time cost of PPO is acceptable. Furthermore, the time cost per execution of client selection is comparable for PPO and DDQN, at a few milliseconds each. Thus, although PPO takes longer to train, the PPO-based client selection is fast enough for real-time execution.
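A per-execution time cost of this kind can be measured with a simple timing harness such as the sketch below. The function `select_clients_stub` is a hypothetical placeholder for a trained selection policy, and the repeat count of 500 is an assumption; neither reflects the actual measurement code.

```python
import time


def mean_latency_ms(fn, repeats=500):
    """Average wall-clock time of one call to fn, in milliseconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / repeats


def select_clients_stub():
    # Hypothetical placeholder: picks every second client out of 20.
    return [i for i in range(20) if i % 2 == 0]


latency = mean_latency_ms(select_clients_stub)
print(f"Mean selection latency: {latency:.4f} ms")
```

Averaging over many repetitions reduces the influence of scheduler noise, which matters when the measured cost is only a few milliseconds.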

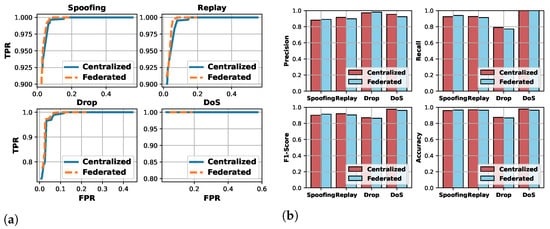

Network Intrusion Detection: The ConvLSTM model trained by FL with the PPO-based FCS scheme (i.e., federated ConvLSTM) is applied to network intrusion detection. Numerical results are listed in Table 5. The federated and centralized ConvLSTM-based methods are compared in terms of the ROC curve and evaluation metrics in Figure 9. For the four types of attacks, the detection accuracy of the federated ConvLSTM-based method is nearly the same as that of the centralized one. This shows that the 0.021 accuracy loss of the federated ConvLSTM model training relative to the centralized one can be offset by the cumulation in (11), so the accuracy of the network intrusion detection is essentially unchanged.

Table 5.

Numerical results: Federated ConvLSTM.

Figure 9.

Comparison of network intrusion detection between centralized and federated ConvLSTM-based methods: (a) ROC curve, (b) precision, recall, F1-score, and accuracy.

6. Conclusions

To protect IoV security, a ConvLSTM-based IVN intrusion detection method has been developed. For ConvLSTM training in the IoV, a client-server FL framework was proposed, in which ICVs act as clients and MEC servers connected to BSs function as parameter servers. To further optimize the performance of the FL framework, a PPO-based FCS scheme was developed. Simulation experiments were conducted based on practical IoV scenario settings and IVN datasets. The results indicated that, by exploiting the ConvLSTM, the neural network model size and convergence time were dramatically reduced while a detection accuracy above 95% was maintained. The results also showed that the PPO-based FCS scheme outperformed the benchmarks, including centralized model training, fundamental FL without an FCS scheme, and FL with the DDQN-based FCS scheme, on the convergence rate, model accuracy, and related overhead of FL. In future work, we will consider modeling the IoV as a multi-agent system to formulate the complex interactions among multiple ICVs and the overall environment.

Author Contributions

Conceptualization, T.Y. and J.H.; methodology, T.Y.; software, J.Y.; validation, J.Y., J.H. and T.Y.; formal analysis, T.Y.; investigation, J.H.; resources, J.H.; data curation, T.Y.; writing—original draft preparation, J.Y.; writing—review and editing, T.Y.; visualization, J.Y.; supervision, J.H. and T.Y.; project administration, J.H. and T.Y.; funding acquisition, T.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China, grant number 62101373; and the Natural Science Foundation of Jiangsu Province, grant number BK20200858.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| AWGN | Additive White Gaussian Noise |
| BS | Base Station |
| CAN | Controller Area Network |
| ConvLSTM | Convolutional Long Short-Term Memory |
| C-V2X | Cellular-Vehicle-to-Everything |
| DDPG | Deep Deterministic Policy Gradient |
| DoS | Denial-of-Service |
| DNN | Deep Neural Network |
| DRL | Deep Reinforcement Learning |
| FCS | Federated Client Selection |
| FL | Federated Learning |
| FPR | False Positive Rate |
| GAN | Generative Adversarial Network |
| HTM | Hierarchical Temporal Memory |
| IoV | Internet of Vehicles |
| ICV | Intelligent Connected Vehicle |
| IVN | In-Vehicle Network |
| MDP | Markov Decision Process |
| MEC | Mobile Edge Computing |
| MLP | Multi-Layer Perceptron |
| OBU | On-Board Unit |
| PPO | Proximal Policy Optimization |
| ROC | Receiver Operating Characteristic |
| RSU | Road-Side Unit |
| TPR | True Positive Rate |
| uRLLC | ultra-Reliable Low-Latency Communications |
| V2I | Vehicle-to-Infrastructure |
| V2X | Vehicle-to-Everything |

References

- Zeng, W.; Khalid, M.A.S.; Chowdhury, S. In-Vehicle Networks Outlook: Achievements and Challenges. IEEE Commun. Surv. Tuts. 2016, 18, 1552–1571.

- Aliwa, E.; Rana, O.; Perera, C.; Burnap, P. Cyberattacks and Countermeasures for In-Vehicle Networks. ACM Comput. Surv. (CSUR) 2021, 54, 1–37.

- Khatri, N.; Shrestha, R.; Nam, S.Y. Security Issues with In-vehicle Networks, and Enhanced Countermeasures Based on Blockchain. Electronics 2021, 10, 893.

- Karopoulos, G.; Kambourakis, G.; Chatzoglou, E.; Hernández-Ramos, J.L.; Kouliaridis, V. Demystifying In-Vehicle Intrusion Detection Systems: A Survey of Surveys and a Meta-Taxonomy. Electronics 2022, 11, 1072.

- Nie, S.; Liu, L.; Du, Y. Free-Fall: Hacking Tesla from Wireless to CAN Bus. In Proceedings of the Black Hat USA, Las Vegas, NV, USA, 22–27 July 2017.

- Miller, C.; Valasek, C. Remote Exploitation of An Unaltered Passenger Vehicle. In Proceedings of the Black Hat USA, Las Vegas, NV, USA, 1–6 August 2015.

- Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A New Comprehensive Realistic Cyber Security Dataset of IoT and IIoT Applications for Centralized and Federated Learning. IEEE Access 2022, 10, 40281–40306.

- Dahou, A.; Abd Elaziz, M.; Chelloug, S.A.; Awadallah, M.A.; Al-Betar, M.A.; Al-qaness, M.A.; Forestiero, A. Intrusion Detection System for IoT Based on Deep Learning and Modified Reptile Search Algorithm. Comput. Intell. Neurosci. 2022, 2022, 6473507.

- Wu, W.; Li, R.; Xie, G.; An, J.; Bai, Y.; Zhou, J.; Li, K. A Survey of Intrusion Detection for In-Vehicle Networks. IEEE Trans. Intell. Transp. Syst. 2020, 21, 919–933.

- Zhang, J.; Letaief, K.B. Mobile Edge Intelligence and Computing for the Internet of Vehicles. Proc. IEEE 2019, 108, 246–261.

- Samarakoon, S.; Bennis, M.; Saad, W.; Debbah, M. Distributed Federated Learning for Ultra-Reliable Low-Latency Vehicular Communications. IEEE Trans. Commun. 2020, 68, 1146–1159.

- Manias, D.M.; Shami, A. Making a Case for Federated Learning in the Internet of Vehicles and Intelligent Transportation Systems. IEEE Netw. 2021, 35, 88–94.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the Artificial Intelligence and Statistics PMLR, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

- Nishio, T.; Yonetani, R. Client Selection for Federated Learning with Heterogeneous Resources in Mobile Edge. In Proceedings of the IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

- Kang, M.J.; Kang, J.W.; Tang, T. Intrusion Detection System Using Deep Neural Network for In-Vehicle Network Security. PLoS ONE 2016, 11, e0155781.

- Moore, M.R.; Vann, J.M. Anomaly Detection of Cyber Physical Network Data Using 2D Images. In Proceedings of the International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–13 January 2019; pp. 1–5.

- Seo, E.; Song, H.M.; Kim, H.K. GIDS: GAN based Intrusion Detection System for In-Vehicle Network. In Proceedings of the IEEE 16th Annual Conference on Privacy, Security and Trust (PST), Belfast, UK, 28–30 August 2018; pp. 1–6.

- Taylor, A.; Leblanc, S.; Japkowicz, N. Anomaly Detection in Automobile Control Network Data with Long Short-Term Memory Networks. In Proceedings of the IEEE International Conference on Data Science and Advanced Analytics, Montreal, QC, Canada, 17–19 October 2016; pp. 130–139.

- Wang, C.; Zhao, Z.; Gong, L.; Zhu, L.; Liu, Z.; Cheng, X. A Distributed Anomaly Detection System for In-Vehicle Network Using HTM. IEEE Access 2018, 6, 9091–9098.

- Zhu, K.; Chen, Z.; Peng, Y.; Zhang, L. Mobile Edge Assisted Literal Multi-Dimensional Anomaly Detection of In-Vehicle Network Using LSTM. IEEE Trans. Veh. Technol. 2019, 68, 4275–4284.

- Desta, A.K.; Ohira, S.; Arai, I.; Fujikawa, K. ID Sequence Analysis for Intrusion Detection in the CAN bus using Long Short Term Memory Networks. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Austin, TX, USA, 23–27 March 2020; pp. 1–6.

- Yu, T.; Hua, G.; Wang, H.; Yang, J.; Hu, J. Federated-LSTM based Network Intrusion Detection Method for Intelligent Connected Vehicles. In Proceedings of the IEEE International Conference on Communications (ICC), Seoul, Korea, 16–20 May 2022; pp. 4324–4329.

- Chai, H.; Leng, S.; Chen, Y.; Zhang, K. A Hierarchical Blockchain-Enabled Federated Learning Algorithm for Knowledge Sharing in Internet of Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3975–3986.

- Lu, Y.; Huang, X.; Zhang, K.; Maharjan, S.; Zhang, Y. Blockchain Empowered Asynchronous Federated Learning for Secure Data Sharing in Internet of Vehicles. IEEE Trans. Veh. Technol. 2020, 69, 4298–4311.

- Fragkos, G.; Lebien, S.; Tsiropoulou, E.E. Artificial Intelligent Multi-Access Edge Computing Servers Management. IEEE Access 2020, 8, 171292–171304.

- Forestiero, A.; Papuzzo, G. Agents-based Algorithm for A Distributed Information System in Internet of Things. IEEE Internet Things J. 2021, 8, 16548–16558.

- Zhang, H.; Xie, Z.; Zarei, R.; Wu, T.; Chen, K. Adaptive Client Selection in Resource Constrained Federated Learning Systems: A Deep Reinforcement Learning Approach. IEEE Access 2021, 9, 98423–98432.

- Yu, T.; Wang, X.; Yang, J.; Hu, J. Proximal Policy Optimization-based Federated Client Selection for Internet of Vehicles. In Proceedings of the 2022 IEEE/CIC International Conference on Communications in China (ICCC), Foshan, China, 11–13 August 2022; pp. 648–653.

- Raza, S.; Wang, S.; Ahmed, M.; Anwar, M.R.; Mirza, M.A.; Khan, W.U. Task Offloading and Resource Allocation for IoV Using 5G NR-V2X Communication. IEEE Internet Things J. 2022, 9, 10397–10410.

- Wang, H.; Li, X.; Ji, H.; Zhang, H. Federated Offloading Scheme to Minimize Latency in MEC-Enabled Vehicular Networks. In Proceedings of the 2018 IEEE Globecom Workshops (GC Wkshps), Abu Dhabi, United Arab Emirates, 9–13 December 2018; pp. 1–6.

- Zhao, Y.; Li, M.; Lai, L.; Suda, N.; Civin, D.; Chandra, V. Federated Learning with Non-IID Data. arXiv 2018, arXiv:1806.00582.

- Ji, H.; Wang, Y.; Qin, H.; Wang, Y.; Li, H. Comparative Performance Evaluation of Intrusion Detection Methods for In-Vehicle Networks. IEEE Access 2018, 6, 37523–37532.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the 28th International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 802–810.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Wu, Y.; Wu, J.; Chen, L.; Yan, J.; Han, Y. Load Balance Guaranteed Vehicle-to-Vehicle Computation Offloading for Min-Max Fairness in VANETs. IEEE Trans. Intell. Transp. Syst. 2022, 23, 11994–12013.

- Lee, H.; Jeong, S.H.; Kim, H.K. OTIDS: A Novel Intrusion Detection System for In-Vehicle Network by Using Remote Frame. In Proceedings of the IEEE 15th Annual Conference on Privacy, Security and Trust (PST), Calgary, AB, Canada, 28–30 August 2017; pp. 57–66.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).