FLIA: Architecture of Collaborated Mobile GPU and FPGA Heterogeneous Computing

Abstract

1. Introduction

- The comprehensible design of FLIA and its implementation make it easier for developers to build heterogeneous computing applications.

- A case study built on the collaborative mobile GPU and FPGA platform demonstrates the effectiveness of heterogeneous computing.

2. Background and Related Work

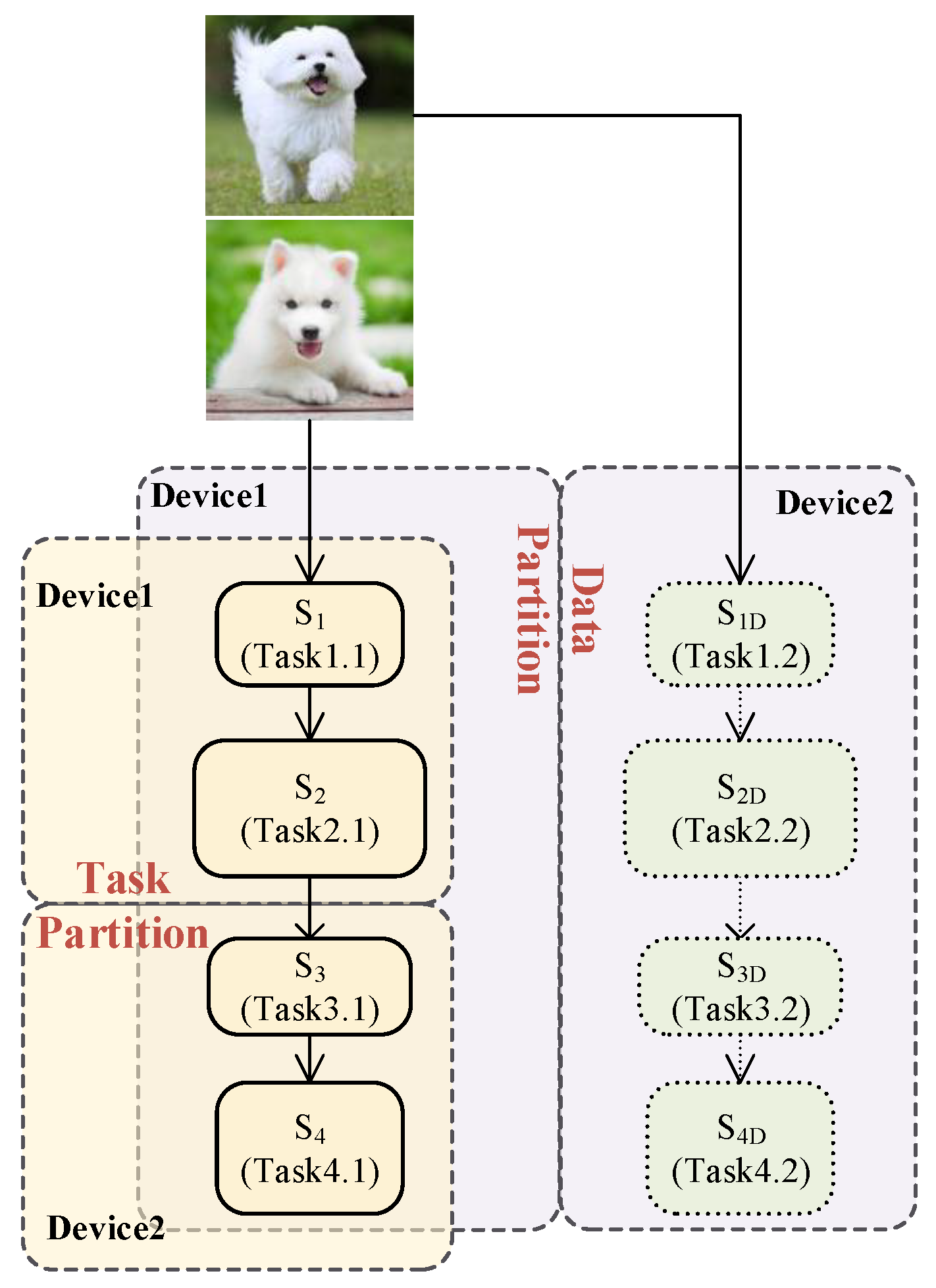

2.1. Heterogeneous Computing Workload Partitioning

2.2. OpenCL Runtime

3. Flow-Lead-In Architecture for Heterogeneous Computing

- Portability: As an abstraction of a computational unit, a servant is not tied to any specific accelerator.

- Encapsulation: A servant is a self-contained module. Developers focus only on the servant's algorithm and need to know neither the details of the FLIA runtime nor the internal implementation of other servants.

- Parallelism: Multiple servants can run in parallel on heterogeneous accelerators to improve system performance.

- Reusability: A servant's design can be reused across different accelerators.
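The four properties can be illustrated with a minimal sketch. Everything here is hypothetical and not FLIA's actual API: the `Servant` base class, the example `Scale` servant, the `gpu_backend`/`fpga_backend` stubs and the `dispatch`/`run_parallel` helpers are names introduced for illustration only. The backends are deliberately stubbed to call the servant directly; a real runtime would launch an OpenCL kernel on the mobile GPU or drive the FPGA accelerator instead.

```python
from abc import ABC, abstractmethod
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


class Servant(ABC):
    """Hypothetical servant interface: a self-contained unit of computation.

    A servant declares only what it computes; it knows nothing about the
    runtime that schedules it or about other servants (encapsulation).
    """

    @abstractmethod
    def process(self, data: List[float]) -> List[float]:
        """Transform one input batch into one output batch."""


class Scale(Servant):
    """Example servant (hypothetical): multiply every element by a constant."""

    def __init__(self, factor: float) -> None:
        self.factor = factor

    def process(self, data: List[float]) -> List[float]:
        return [x * self.factor for x in data]


Backend = Callable[[Servant, List[float]], List[float]]


def gpu_backend(servant: Servant, data: List[float]) -> List[float]:
    # Stub (assumption): a real backend would launch an OpenCL kernel on the mobile GPU.
    return servant.process(data)


def fpga_backend(servant: Servant, data: List[float]) -> List[float]:
    # Stub (assumption): a real backend would drive the FPGA accelerator.
    return servant.process(data)


BACKENDS: Dict[str, Backend] = {"gpu": gpu_backend, "fpga": fpga_backend}


def dispatch(servant: Servant, data: List[float], target: str) -> List[float]:
    """Run the same servant on whichever accelerator is selected (portability, reusability)."""
    return BACKENDS[target](servant, data)


def run_parallel(jobs: List[Tuple[Servant, List[float], str]]) -> List[List[float]]:
    """Run independent servants concurrently (parallelism); results keep job order."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(dispatch, s, d, t) for s, d, t in jobs]
        return [f.result() for f in futures]
```

For example, `dispatch(Scale(2.0), [1.0, 2.0], "gpu")` returns `[2.0, 4.0]`, and the same `Scale` object runs unchanged with target `"fpga"`. Keeping the accelerator choice outside the servant is what lets one servant design be reused on either device.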

3.1. Model Definition

3.2. Model Analysis

- Vertex: The servant is regarded as the vertex of the graph.

- Edge: The execution-flow is regarded as the edge of the graph.

- Weight of edge: The sum of a servant's execution duration and the communication duration of the execution-flow it initiates.

- Path: A path between two servants means that the execution result of the first servant is processed by one or more servants and finally sent to the second. For example, Figure 4 shows two paths between the servants and .
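The weighted graph defined above can be sketched as an adjacency map. This is an illustrative sketch, not the paper's analysis: the servant names `S1` to `S5` and all weights are hypothetical, and it assumes that the path with the largest total weight (the critical path) is the one that bounds end-to-end latency. Under the edge-weight definition above, the final servant's own execution time lies on no outgoing edge, so it would have to be added separately.

```python
from typing import Dict, List

# Hypothetical execution-flow graph. Each key is a servant (vertex); each inner
# entry u -> v is an execution-flow (edge) whose weight is the execution time of u
# plus the communication time of the flow u -> v. Values are illustrative (ms).
Graph = Dict[str, Dict[str, float]]

GRAPH: Graph = {
    "S1": {"S2": 3.0, "S3": 3.0},
    "S2": {"S4": 5.0},
    "S3": {"S4": 2.0},
    "S4": {"S5": 4.0},
    "S5": {},
}


def all_paths(graph: Graph, src: str, dst: str) -> List[List[str]]:
    """Enumerate every path from src to dst by depth-first search (graph must be acyclic)."""
    if src == dst:
        return [[dst]]
    paths: List[List[str]] = []
    for nxt in graph[src]:
        for tail in all_paths(graph, nxt, dst):
            paths.append([src] + tail)
    return paths


def path_weight(graph: Graph, path: List[str]) -> float:
    """Sum the edge weights along a path."""
    return sum(graph[u][v] for u, v in zip(path, path[1:]))


def heaviest_path(graph: Graph, src: str, dst: str) -> List[str]:
    """Return the path with the largest total weight (the critical path)."""
    return max(all_paths(graph, src, dst), key=lambda p: path_weight(graph, p))
```

In this toy graph there are two paths from `S1` to `S5`: `S1 -> S2 -> S4 -> S5` with weight 12.0 and `S1 -> S3 -> S4 -> S5` with weight 9.0, so the first is the critical path. Enumerating all paths is exponential in the worst case; for larger graphs a single pass in topological order gives the same maximum.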

4. Implementation of FLIA

4.1. Servant Implementation

4.2. Execution-Flow Implementation

5. Case Studies

6. Results

6.1. Experimental Setup

6.2. Servants Acceleration

6.3. Heterogeneous Computing Evaluation

6.4. Power Evaluation

7. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Servant | t0 | t1 | t2 | t3 | t4 | t5 | t6 |
|---|---|---|---|---|---|---|---|
| SA | α | β | γ | δ | ε | | |
| SX | α | β | γ | δ | | | |
| SY | α | β | γ | δ | | | |
| SZ | α | β | γ | δ | | | |
| SB | α | β | γ | | | | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, N.; Wang, C.; Zhou, X. FLIA: Architecture of Collaborated Mobile GPU and FPGA Heterogeneous Computing. Electronics 2022, 11, 3756. https://doi.org/10.3390/electronics11223756
