Automatic Knee Injury Identification through Thermal Image Processing and Convolutional Neural Networks

Abstract

1. Introduction

2. Theoretical Background

2.1. Thermography

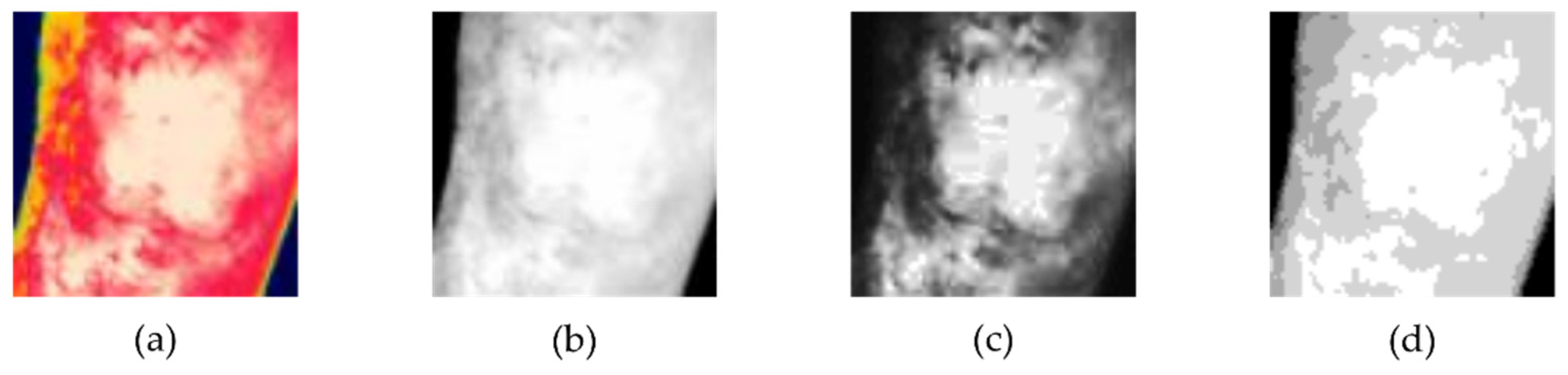

2.2. Image Processing

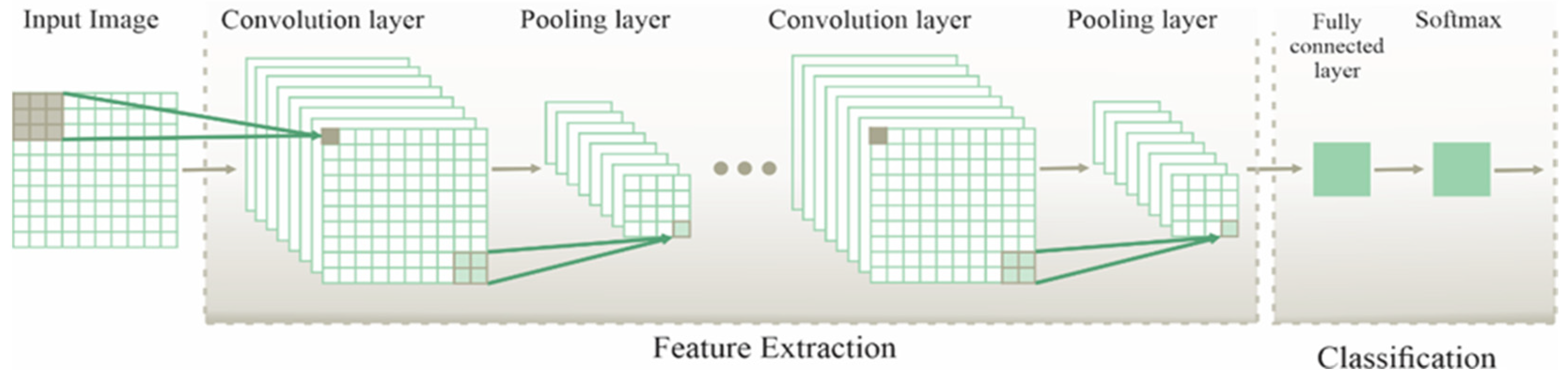

2.3. Convolutional Neural Network

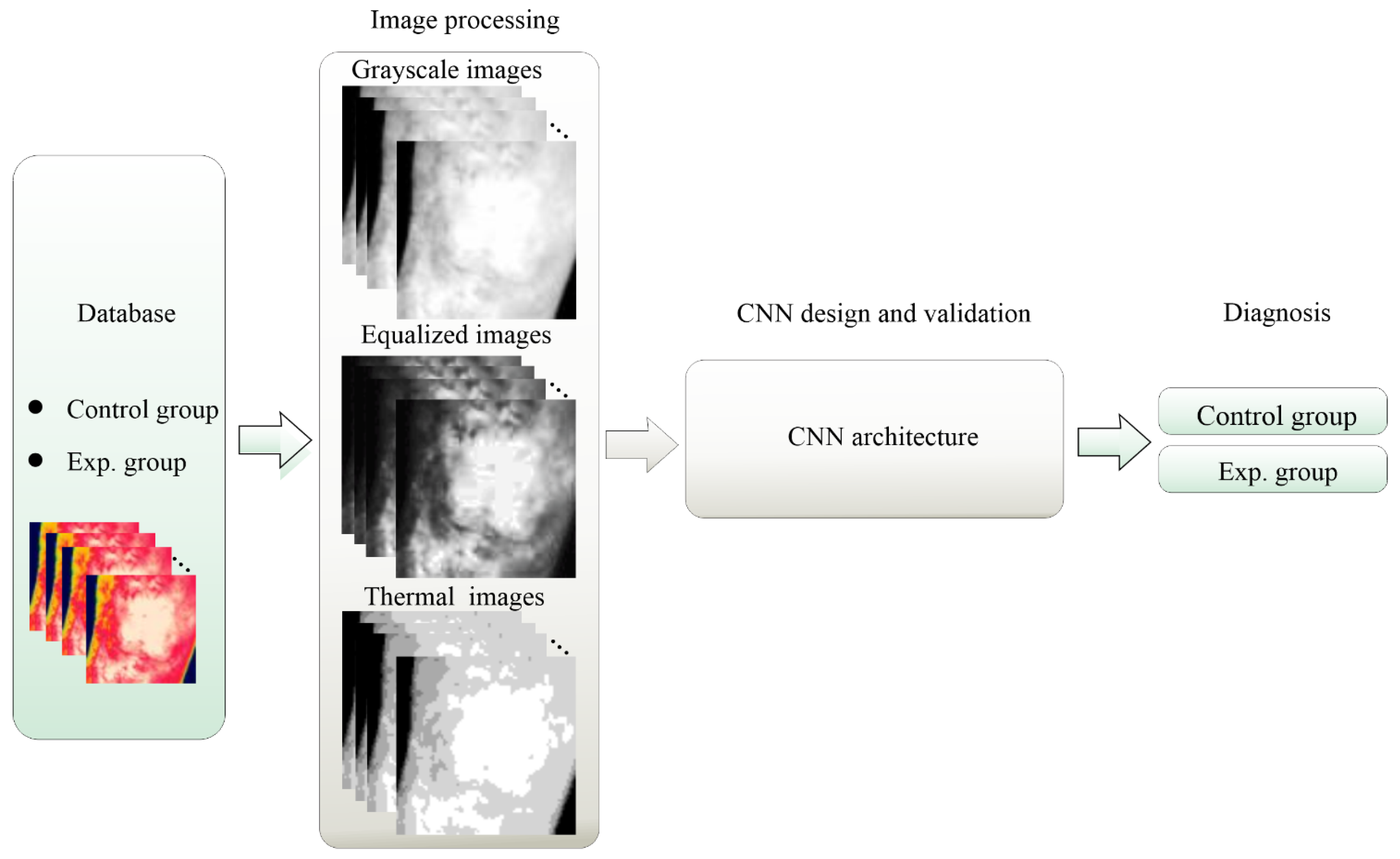

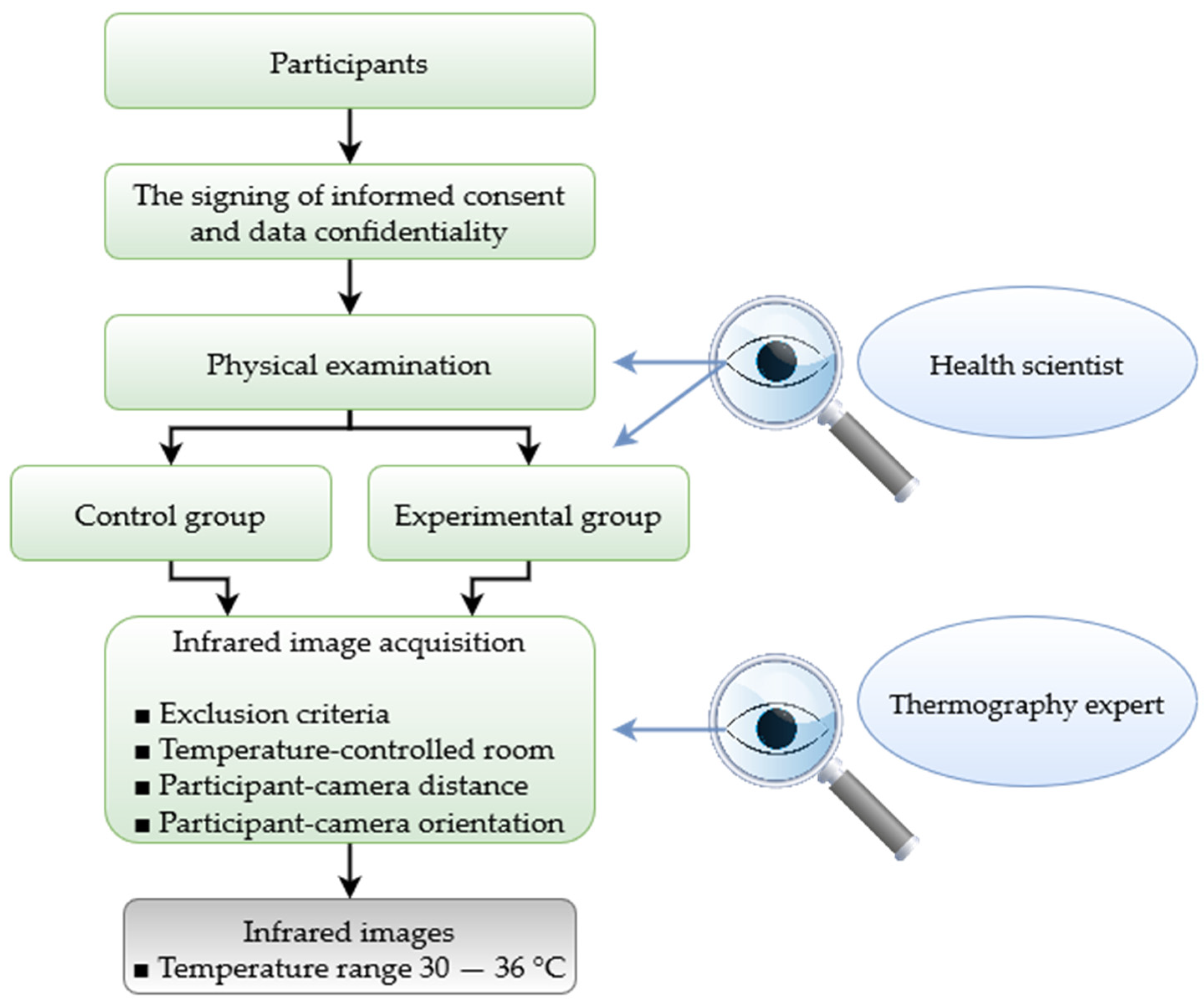

3. Methodology

4. Experimental Setup and Results

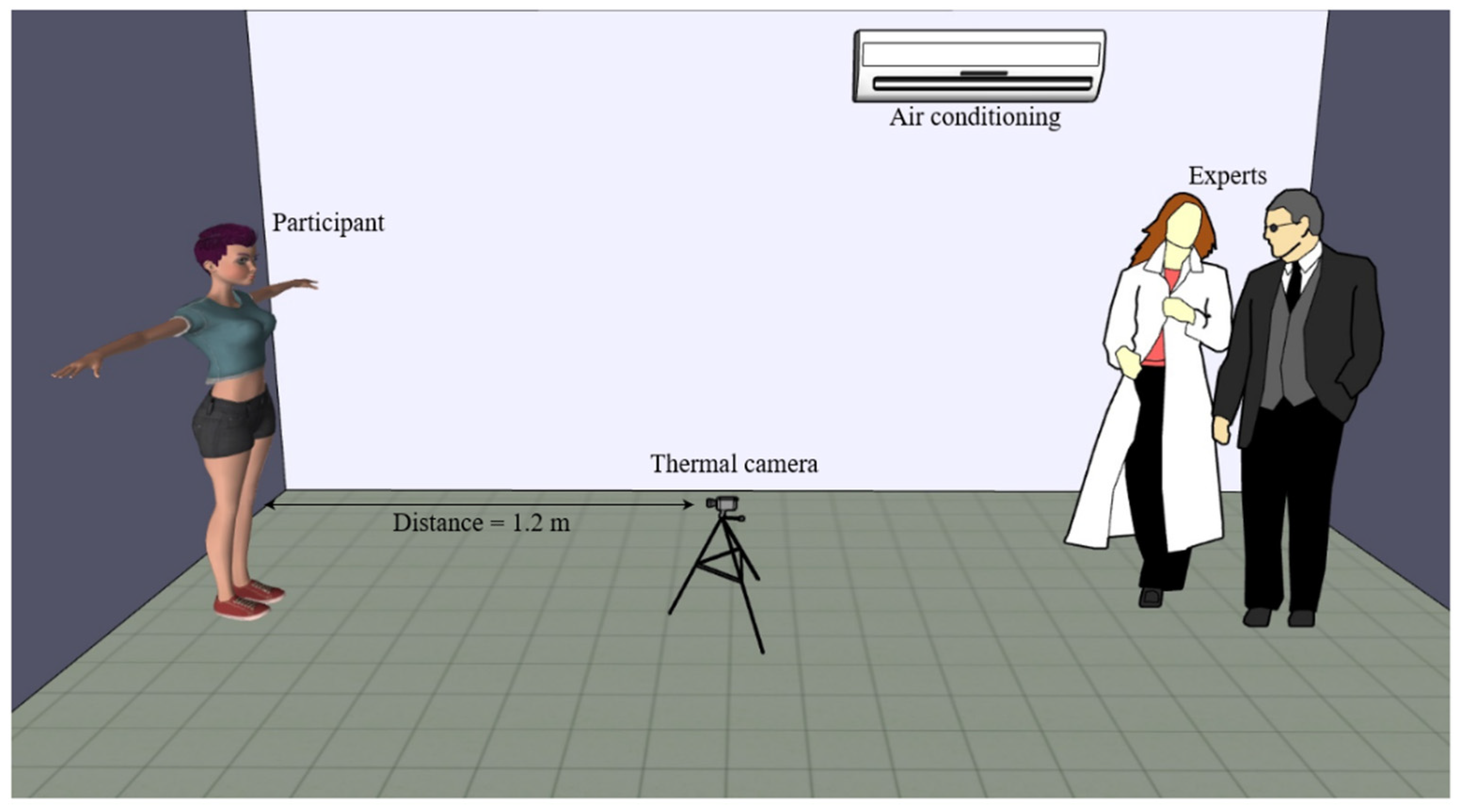

4.1. Experimental Setup

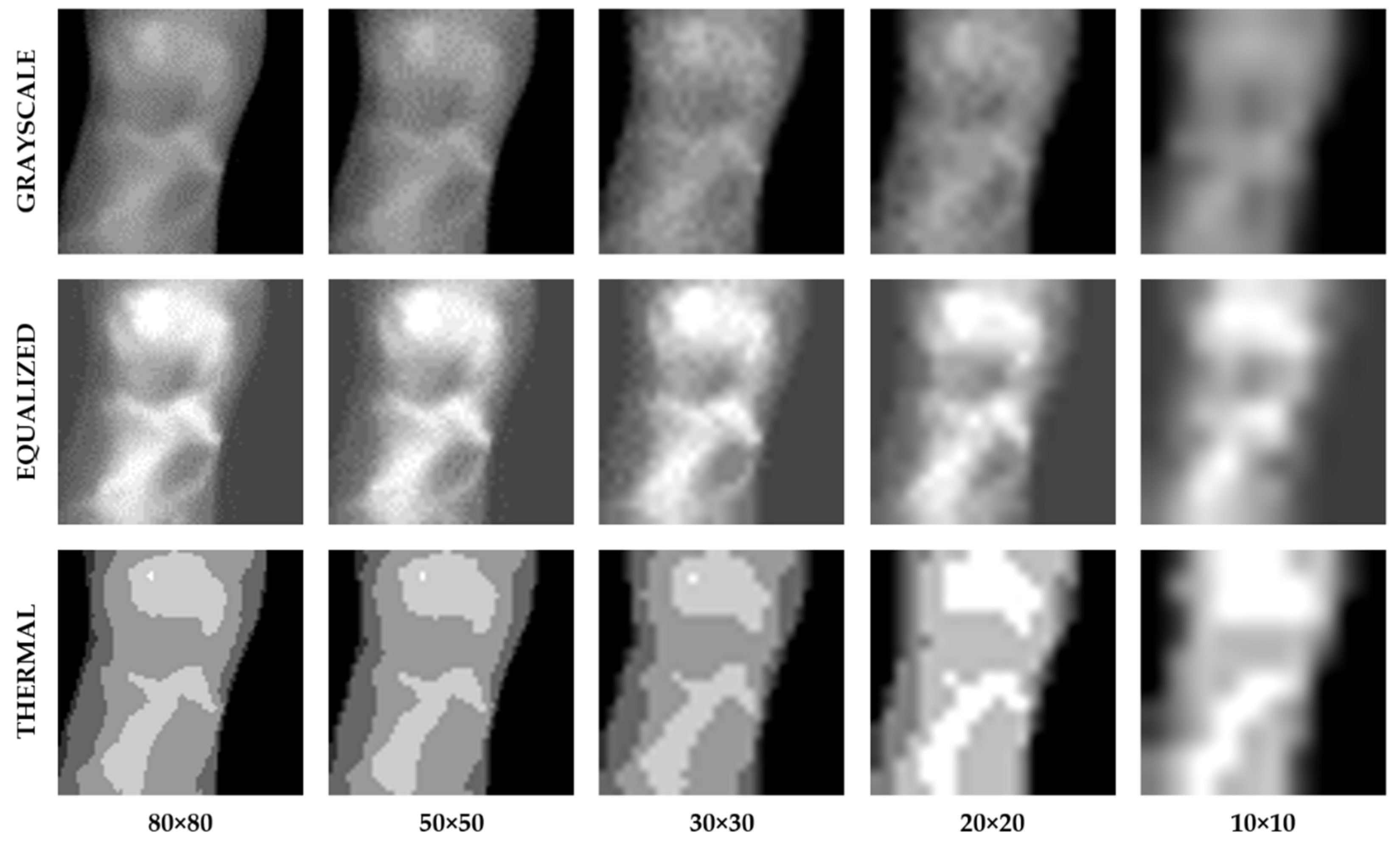

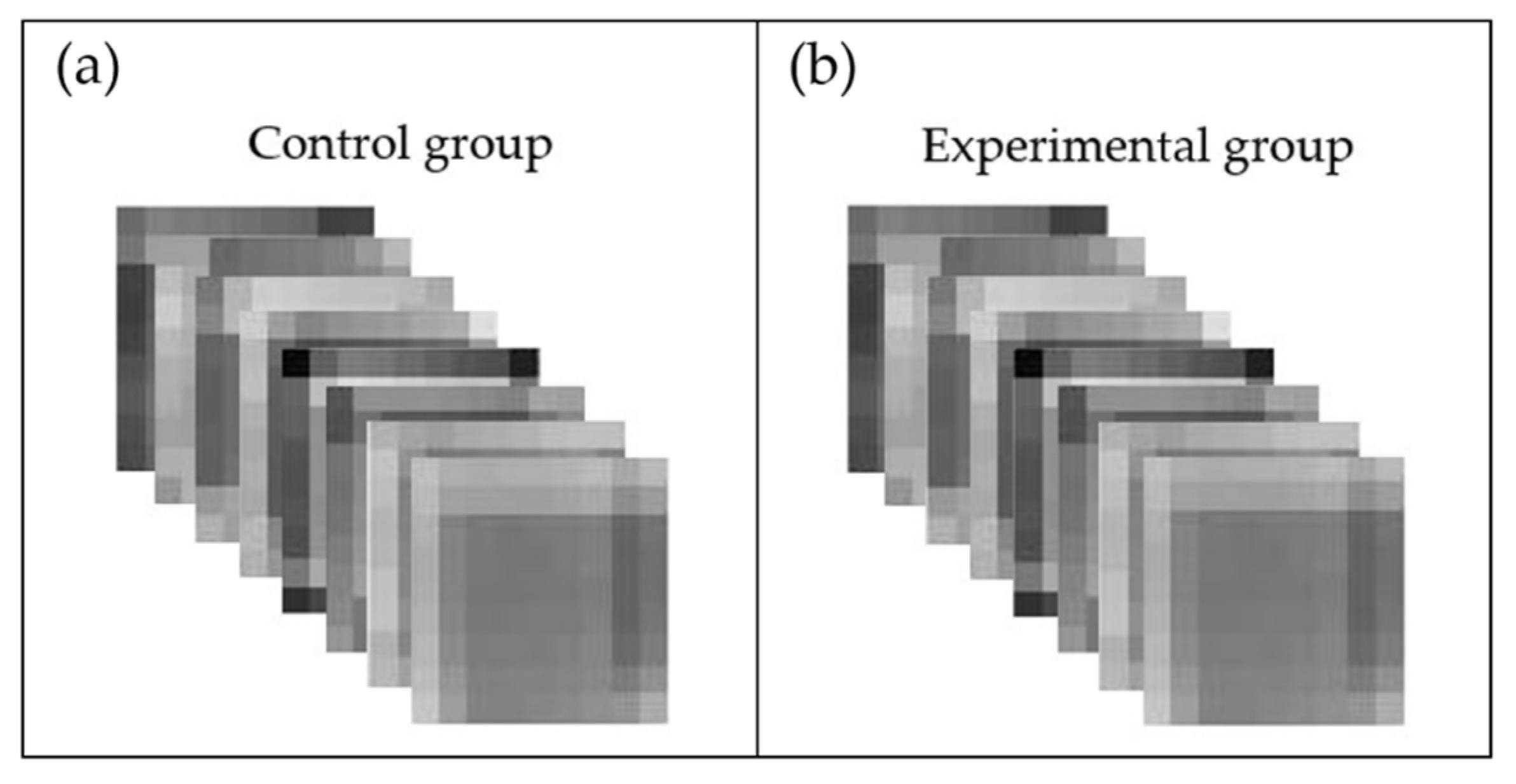

4.2. Image Size Preprocessing

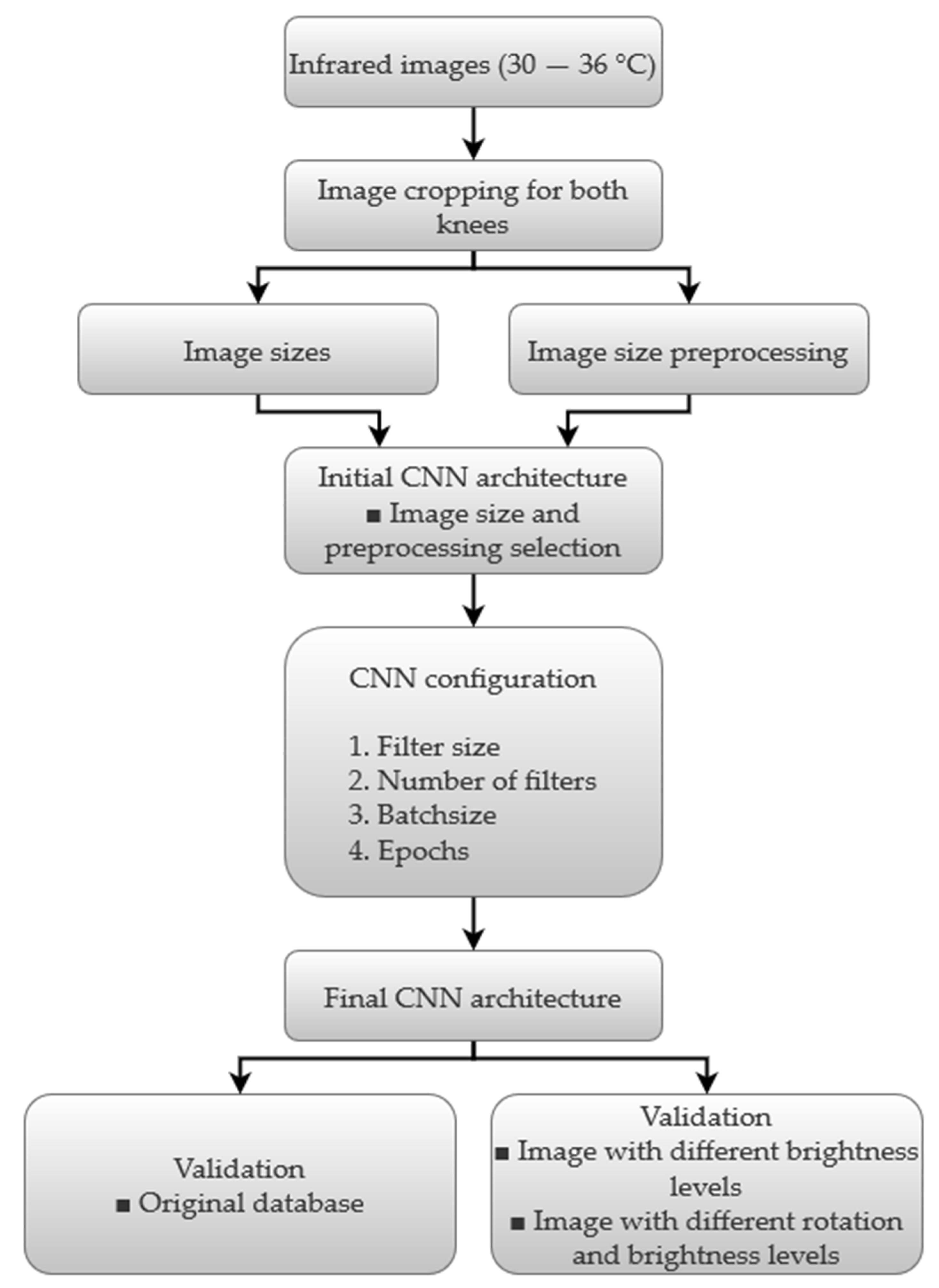

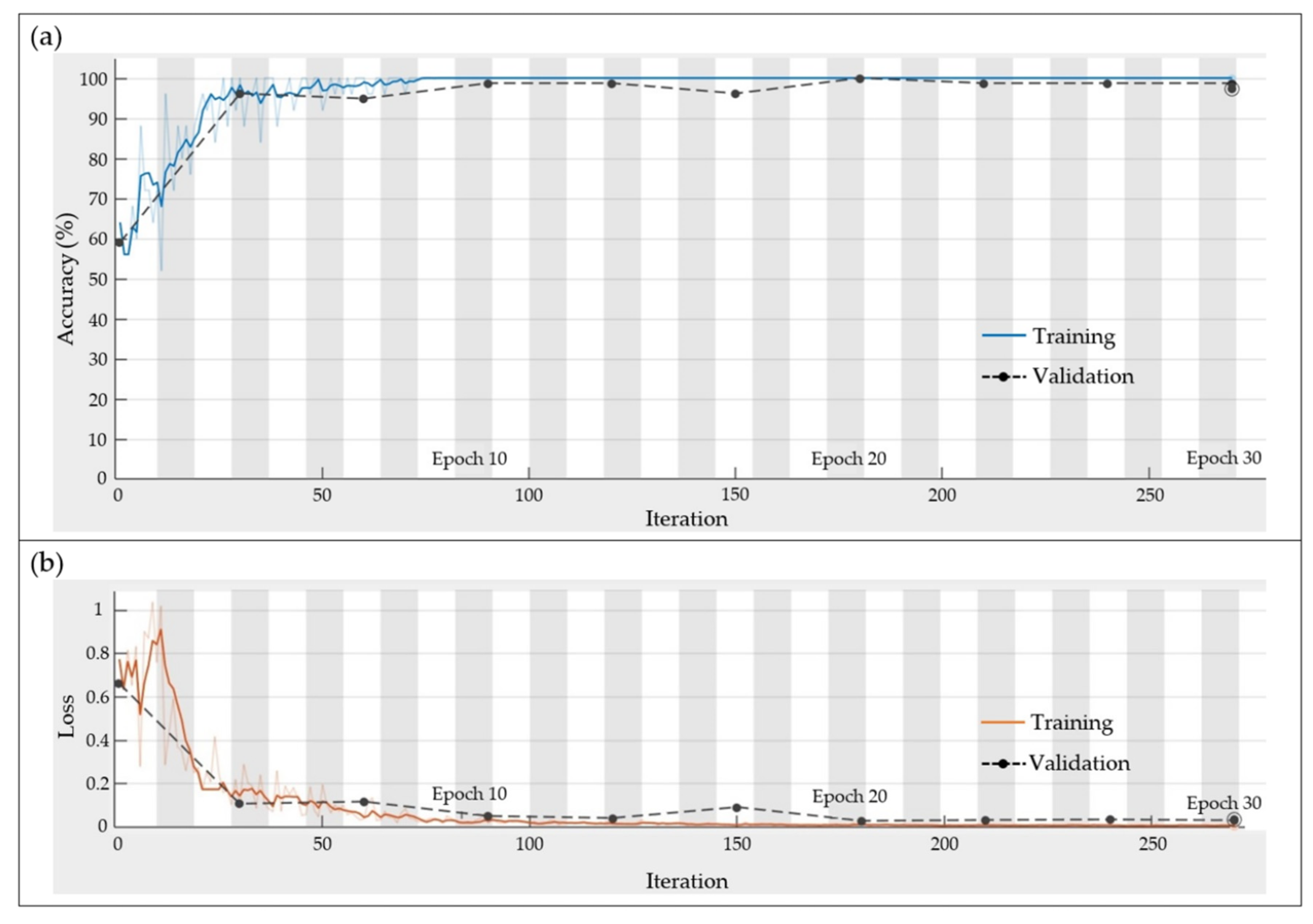

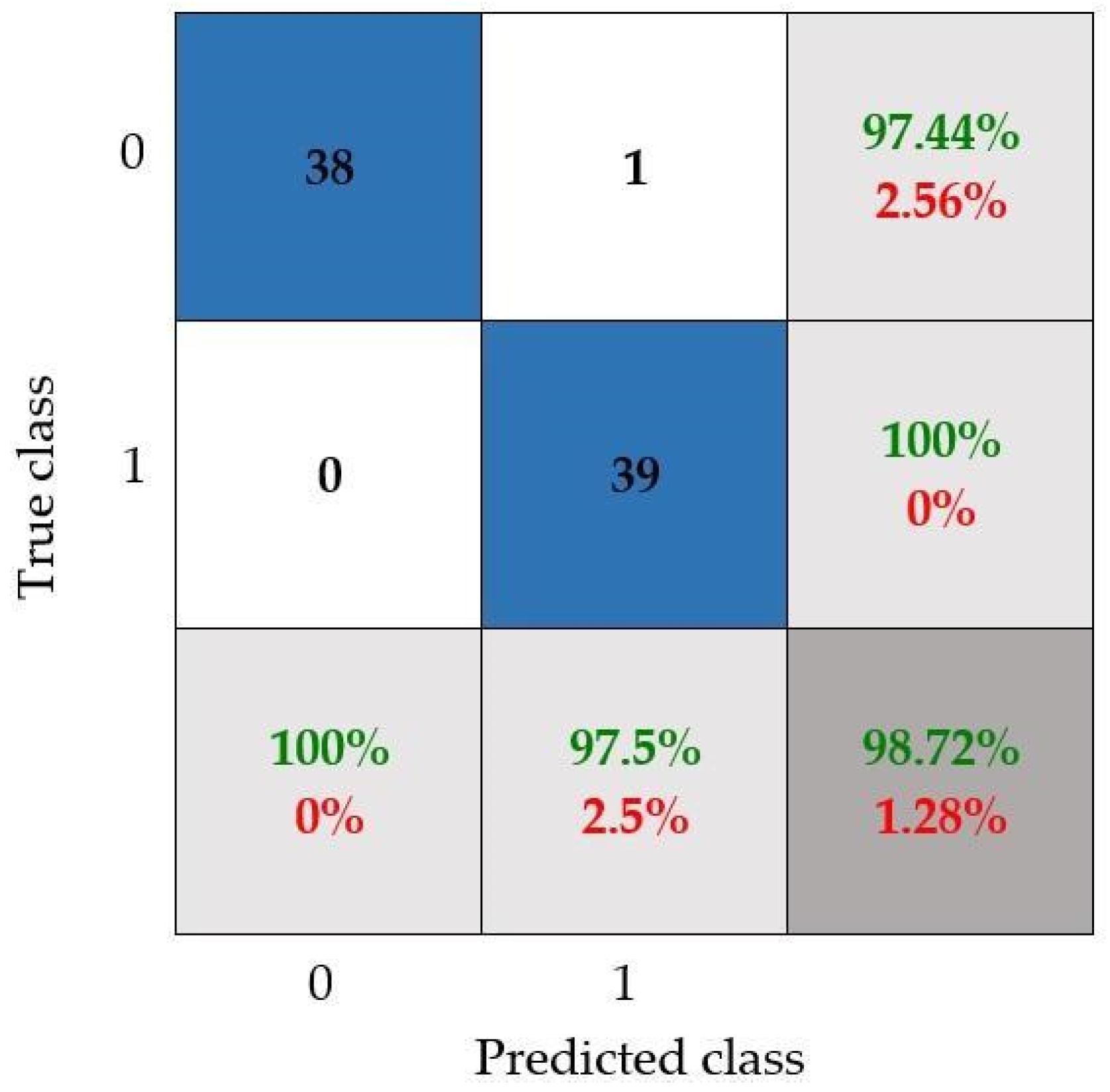

4.3. CNN Results

CNN Configuration

5. Discussion

6. Conclusions

- The proposed CNN architecture is one of the most basic architectures; consequently, its computational burden is lower than that of the architectures used in other works. Therefore, the proposal is an attractive and suitable solution when low-end processors are used.

- The CNN model configured by following the proposed methodology reached 98.72% accuracy, allowing us to adequately differentiate between the two knee conditions.

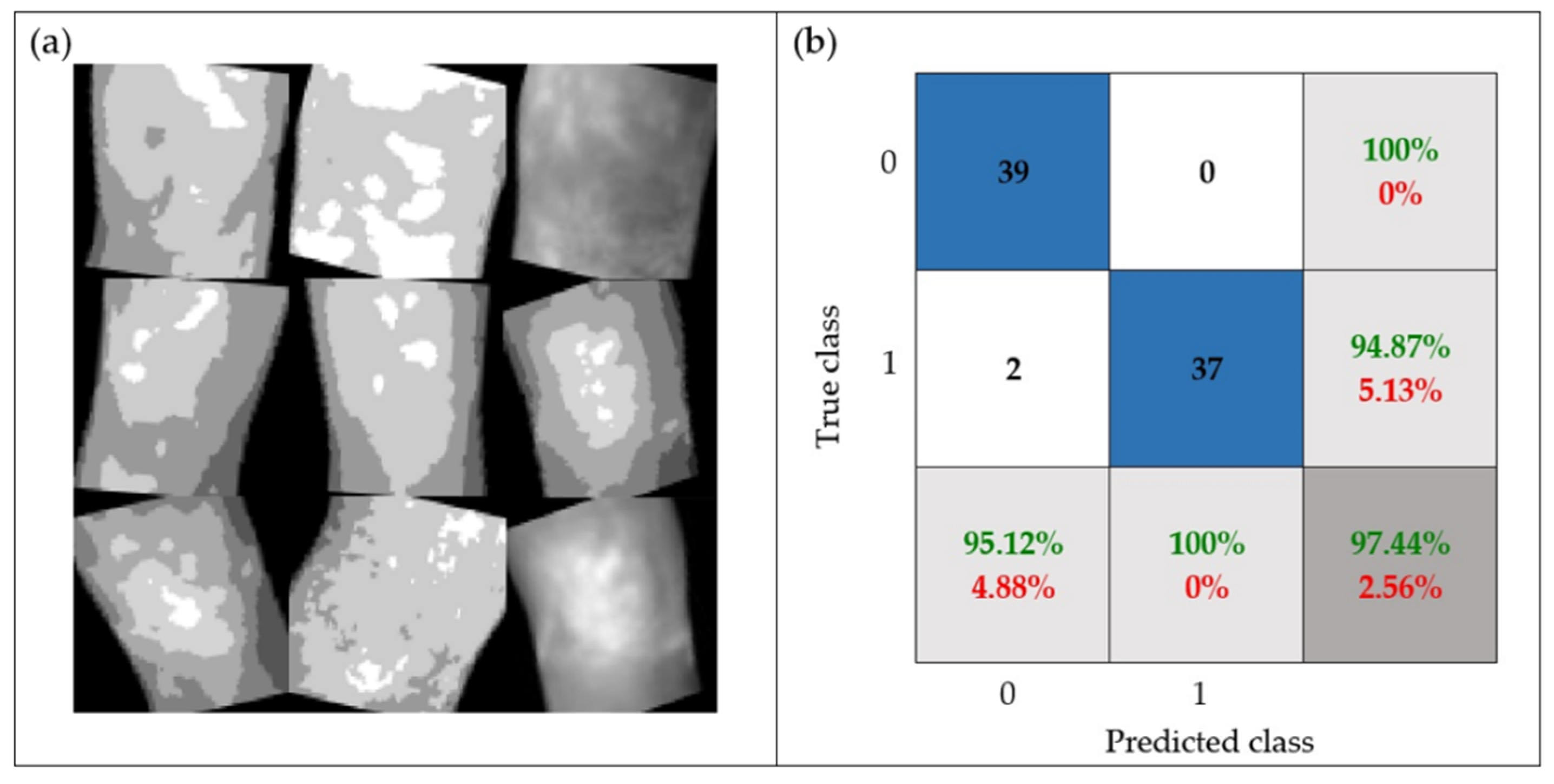

- The robustness of the proposal was tested on a set of images with random rotation angles and different brightness levels (i.e., conditions likely to occur in practice), achieving up to 97.44% accuracy (95.09% on average).

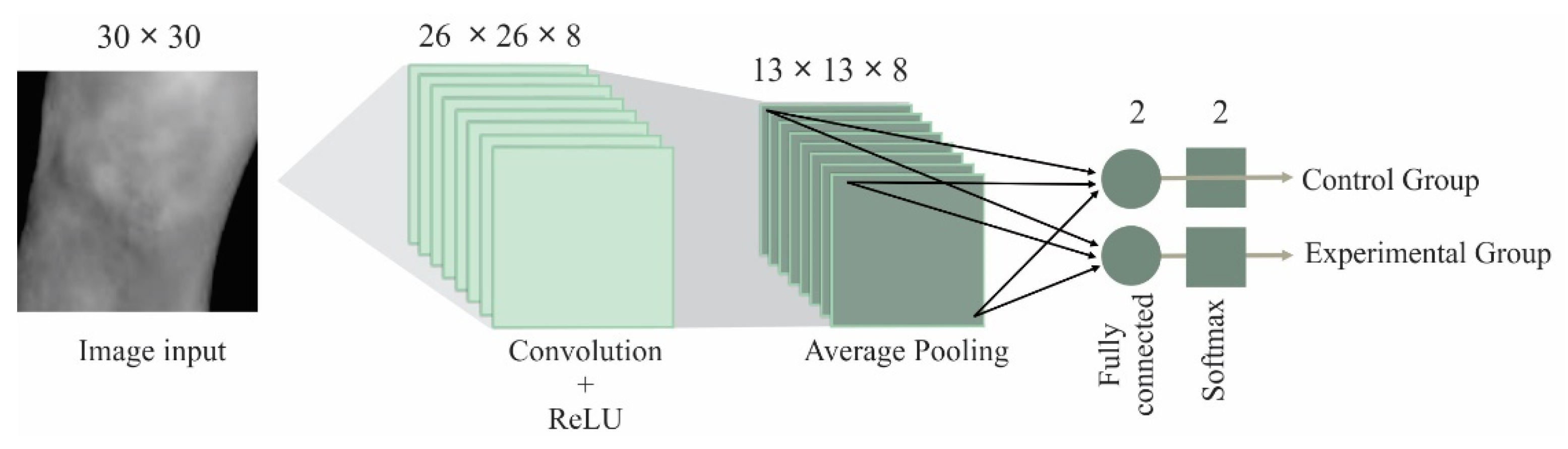

- The best results were obtained with thermal images and a CNN with the following configuration: input image size = 30 × 30, filter size = 5 × 5, number of filters = 8, batch size = 25, and epochs = 30. This configuration is efficient in terms of both computational time and accuracy, and it keeps the computational burden low.
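The robustness test summarized in these conclusions (random rotation angles combined with brightness levels of 10%, 20%, and 30%) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: images are assumed to be 8-bit grayscale arrays stored as nested lists, brightness is assumed to be a multiplicative increase with clipping, rotation uses nearest-neighbour resampling with zero fill, and the ±180° angle range and the helper names (`adjust_brightness`, `rotate`, `robustness_variants`) are hypothetical.

```python
import math
import random

Image = list[list[int]]  # 8-bit grayscale image stored row by row (assumption)


def adjust_brightness(img: Image, level: float) -> Image:
    """Scale every pixel by (1 + level) and clip to 0..255.

    Assumption: the source does not say whether brightness was raised or
    lowered; a positive level brightens and a negative level darkens.
    """
    return [[min(255, max(0, round(p * (1.0 + level)))) for p in row] for row in img]


def rotate(img: Image, angle_deg: float, fill: int = 0) -> Image:
    """Rotate about the image centre with nearest-neighbour inverse mapping."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dy, dx = r - cy, c - cx
            # Map each output pixel back to the source pixel it comes from.
            sx = round(cos_t * dx + sin_t * dy + cx)
            sy = round(-sin_t * dx + cos_t * dy + cy)
            if 0 <= sy < h and 0 <= sx < w:
                out[r][c] = img[sy][sx]
    return out


def robustness_variants(img: Image, levels=(0.10, 0.20, 0.30), seed: int = 0):
    """Yield (rotated, level, image) for the six rotation/brightness combinations."""
    rng = random.Random(seed)
    for rotated in (False, True):
        for level in levels:
            out = adjust_brightness(img, level)
            if rotated:
                # Hypothetical angle range; the source only says "random".
                out = rotate(out, rng.uniform(-180.0, 180.0))
            yield rotated, level, out
```

Nearest-neighbour mapping only copies existing pixel values, so it never introduces intensities that were absent from the thermogram; a bilinear variant would give smoother edges at a slightly higher cost.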

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Davenport, M.; Oczypok, M.P. Knee and Leg Injuries. Emerg. Med. Clin. N. Am. 2020, 38, 143–165.

2. Scandurra, G.; Cardillo, E.; Giusi, G.; Ciofi, C.; Alonso, E.; Giannetti, R. Portable Knee Health Monitoring System by Impedance Spectroscopy Based on Audio-Board. Electronics 2021, 10, 460.

3. Roth, T.S.; Osbahr, D.C. Knee Injuries in Elite Level Soccer Players. Am. J. Orthop. 2018, 47, 1–16.

4. Hopper, M.A.; Grainger, A.J. Knee Injuries. In Essential Radiology for Sports Medicine; Robinson, P., Ed.; Springer: New York, NY, USA, 2010; pp. 1–28. ISBN 978-1-4419-5972-0.

5. Rothenberg, P.; Grau, L.; Kaplan, L.; Baraga, M.G. Knee Injuries in American Football: An Epidemiological Review. Am. J. Orthop. 2016, 45, 368–373.

6. Blake, M.H.; Johnson, D.L. Knee Meniscus Injuries: Common Problems and Solutions. Clin. Sports Med. 2018, 37, 293–306.

7. Strickland, C.D.; Ho, C.K.; Merkle, A.N.; Vidal, A.F. MR Imaging of Knee Cartilage Injury and Repair Surgeries. Magn. Reson. Imaging Clin. N. Am. 2022, 30, 227–239.

8. Cimino, F.; Volk, B.S.; Setter, D. Anterior Cruciate Ligament Injury: Diagnosis, Management, and Prevention. Am. Fam. Physician 2010, 82, 917–922.

9. Joshi, K.; Suganthi, K. Anterior Cruciate Ligament Tear Detection Based on Deep Convolutional Neural Network. Diagnostics 2022, 12, 2314.

10. Morelli, V.; Braxton, T.M. Meniscal, Plica, Patellar, and Patellofemoral Injuries of the Knee; Updates, Controversies and Advancements. Prim. Care Clin. Off. Pract. 2013, 40, 357–382.

11. Hetta, W.; Niazi, G. MRI in Assessment of Sports Related Knee Injuries. Egypt. J. Radiol. Nucl. Med. 2014, 45, 1153–1161.

12. Siouras, A.; Moustakidis, S.; Giannakidis, A.; Chalatsis, G.; Liampas, I.; Vlychou, M.; Hantes, M.; Tasoulis, S.; Tsaopoulos, D. Knee Injury Detection Using Deep Learning on MRI Studies: A Systematic Review. Diagnostics 2022, 12, 537.

13. Garwood, E.R.; Tai, R.; Joshi, G.; Watts, V.G.J. The Use of Artificial Intelligence in the Evaluation of Knee Pathology. Semin. Musculoskelet. Radiol. 2020, 24, 21–29.

14. Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and Explainability of Artificial Intelligence in Medicine. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, 1–13.

15. Rguibi, Z.; Hajami, A.; Zitouni, D.; Elqaraoui, A.; Bedraoui, A. CXAI: Explaining Convolutional Neural Networks for Medical Imaging Diagnostic. Electronics 2022, 11, 1775.

16. Qiu, X.; Liu, Z.; Zhuang, M.; Cheng, D.; Zhu, C.; Zhang, X. Fusion of CNN1 and CNN2-Based Magnetic Resonance Image Diagnosis of Knee Meniscus Injury and a Comparative Analysis with Computed Tomography. Comput. Methods Programs Biomed. 2021, 211, 106297.

17. Cueva, J.H.; Castillo, D.; Espinós-Morató, H.; Durán, D.; Díaz, P.; Lakshminarayanan, V. Detection and Classification of Knee Osteoarthritis. Diagnostics 2022, 12, 2362.

18. Antony, J.; McGuinness, K.; O’Connor, N.E.; Moran, K. Quantifying Radiographic Knee Osteoarthritis Severity Using Deep Convolutional Neural Networks. In Proceedings of the International Conference on Pattern Recognition, Cancun, Mexico, 4–8 December 2016; pp. 1195–1200.

19. Raj, A.; Vishwanathan, S.; Ajani, B.; Krishnan, K.; Agarwal, H. Automatic Knee Cartilage Segmentation Using Fully Volumetric Convolutional Neural Networks for Evaluation of Osteoarthritis. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 851–854.

20. Chen, P.; Gao, L.; Shi, X.; Allen, K.; Yang, L. Fully Automatic Knee Osteoarthritis Severity Grading Using Deep Neural Networks with a Novel Ordinal Loss. Comput. Med. Imaging Graph. 2019, 75, 84–92.

21. Tiulpin, A.; Saarakkala, S. Automatic Grading of Individual Knee Osteoarthritis Features in Plain Radiographs Using Deep Convolutional Neural Networks. Diagnostics 2020, 10, 932.

22. Menezes, P.; Rhea, M.; Herdy, C.; Simão, R. Effects of Strength Training Program and Infrared Thermography in Soccer Athletes Injuries. Sports 2018, 6, 148.

23. Fernández-Cuevas, I.; Bouzas Marins, J.C.; Arnáiz Lastras, J.; Gómez Carmona, P.M.; Piñonosa Cano, S.; García-Concepción, M.Á.; Sillero-Quintana, M. Classification of Factors Influencing the Use of Infrared Thermography in Humans: A Review. Infrared Phys. Technol. 2015, 71, 28–55.

24. Sillero-Quintana, M.; Gomez-Carmona, P.M.; Fernández-Cuevas, I. Infrared Thermography as a Means of Monitoring and Preventing Sports Injuries; IGI Global: Hershey, PA, USA, 2017; ISBN 9781522520726.

25. dos Santos Bunn, P.; Miranda, M.E.K.; Rodrigues, A.I.; de Souza Sodré, R.; Neves, E.B.; Bezerra da Silva, E. Infrared Thermography and Musculoskeletal Injuries: A Systematic Review with Meta-Analysis. Infrared Phys. Technol. 2020, 109, 103435.

26. Fokam, D.; Lehmann, C. Clinical Assessment of Arthritic Knee Pain by Infrared Thermography. J. Basic Clin. Physiol. Pharmacol. 2019, 30, 1–5.

27. Denoble, A.E.; Hall, N.; Pieper, C.F.; Kraus, V.B. Patellar Skin Surface Temperature by Thermography Reflects Knee Osteoarthritis Severity. Clin. Med. Insights Arthritis Musculoskelet. Disord. 2010, 3, 69–75.

28. Arfaoui, A.; Bouzid, M.A.; Pron, H.; Taiar, R.; Polidori, G. Application of Infrared Thermography as a Diagnostic Tool of Knee Osteoarthritis. J. Therm. Sci. Technol. 2012, 7, 227–235.

29. Jin, C.; Yang, Y.; Xue, Z.J.; Liu, K.M.; Liu, J. Automated Analysis Method for Screening Knee Osteoarthritis Using Medical Infrared Thermography. J. Med. Biol. Eng. 2013, 33, 471–477.

30. Kumar, P.; Gaurav, A.; Rajnish, R.K.; Sharma, S.; Kumar, V.; Aggarwal, S.; Patel, S. Applications of Thermal Imaging with Infrared Thermography in Orthopaedics. J. Clin. Orthop. Trauma 2022, 24, 101722.

31. Lahiri, B.B.; Bagavathiappan, S.; Jayakumar, T.; Philip, J. Medical Applications of Infrared Thermography: A Review. Infrared Phys. Technol. 2012, 55, 221–235.

32. Frize, M.; Adéa, C.; Payeur, P.; di Primio, G.; Karsh, J.; Ogungbemile, A. Detection of Rheumatoid Arthritis Using Infrared Imaging. In Medical Imaging 2011: Image Processing; Dawant, B.M., Haynor, D.R., Eds.; SPIE: Bellingham, WA, USA, 2011; Volume 7962, p. 79620M.

33. Guzaitis, J.; Kadusauskiene, A.; Raisutis, R. Algorithm for Automated Foot Detection in Thermal and Optical Images for Temperature Asymmetry Analysis. Electronics 2021, 10, 571.

34. Usamentiaga, R.; Venegas, P.; Guerediaga, J.; Vega, L.; Molleda, J.; Bulnes, F. Infrared Thermography for Temperature Measurement and Non-Destructive Testing. Sensors 2014, 14, 12305–12348.

35. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Pearson Education: New York, NY, USA, 2017; ISBN 9780133356724.

36. Cruz-Albarran, I.A.; Benitez-Rangel, J.P.; Osornio-Rios, R.A.; Dominguez-Trejo, B.; Rodriguez-Medina, D.A.; Morales-Hernandez, L.A. A Methodology Based on Infrared Thermography for the Study of Stress in Hands of Young People during the Trier Social Stress Test. Infrared Phys. Technol. 2018, 93, 116–123.

37. Liu, T.; Xu, H.; Ragulskis, M.; Cao, M.; Ostachowicz, W. A Data-Driven Damage Identification Framework Based on Transmissibility Function Datasets and One-Dimensional Convolutional Neural Networks: Verification on a Structural Health Monitoring Benchmark Structure. Sensors 2020, 20, 1059.

38. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

39. Ieracitano, C.; Mammone, N.; Bramanti, A.; Hussain, A.; Morabito, F.C. A Convolutional Neural Network Approach for Classification of Dementia Stages Based on 2D-Spectral Representation of EEG Recordings. Neurocomputing 2019, 323, 96–107.

40. Mammone, N.; Ieracitano, C.; Morabito, F.C. A Deep CNN Approach to Decode Motor Preparation of Upper Limbs from Time–Frequency Maps of EEG Signals at Source Level. Neural Netw. 2020, 124, 357–372.

41. Wang, L.H.; Zhao, X.P.; Wu, J.X.; Xie, Y.Y.; Zhang, Y.H. Motor Fault Diagnosis Based on Short-Time Fourier Transform and Convolutional Neural Network. Chin. J. Mech. Eng. (Engl. Ed.) 2017, 30, 1357–1368.

42. Scherer, D.; Müller, A.; Behnke, S. Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition. In Proceedings of the 20th International Conference on Artificial Neural Networks (ICANN), Thessaloniki, Greece, 3 September 2010; pp. 92–101.

43. Moreira, D.G.; Costello, J.T.; Brito, C.J.; Adamczyk, J.G.; Ammer, K.; Bach, A.J.E.; Costa, C.M.A.; Eglin, C.; Fernandes, A.A.; Fernández-Cuevas, I.; et al. Thermographic Imaging in Sports and Exercise Medicine: A Delphi Study and Consensus Statement on the Measurement of Human Skin Temperature. J. Therm. Biol. 2017, 69, 155–162.

44. Javed Awan, M.; Mohd Rahim, M.; Salim, N.; Mohammed, M.; Garcia-Zapirain, B.; Abdulkareem, K. Efficient Detection of Knee Anterior Cruciate Ligament from Magnetic Resonance Imaging Using Deep Learning Approach. Diagnostics 2021, 11, 105.

45. Sarvamangala, D.R.; Kulkarni, R.V. Grading of Knee Osteoarthritis Using Convolutional Neural Networks. Neural Process. Lett. 2021, 53, 2985–3009.

46. Yunus, U.; Amin, J.; Sharif, M.; Yasmin, M.; Kadry, S.; Krishnamoorthy, S. Recognition of Knee Osteoarthritis (KOA) Using YOLOv2 and Classification Based on Convolutional Neural Network. Life 2022, 12, 1126.

47. Bardhan, S.; Nath, S.; Debnath, T.; Bhattacharjee, D.; Bhowmik, M.K. Designing of an Inflammatory Knee Joint Thermogram Dataset for Arthritis Classification Using Deep Convolution Neural Network. Quant. Infrared Thermogr. J. 2022, 19, 145–171.

| Resources | Specifications |
|---|---|
| Participants | |
| Experts | Health professionals and thermography experts. |
| Ethical Issues | Letters of informed consent and data confidentiality, with ethics committee approval. |
| Technological equipment | |
| Room for image acquisition | |

| Image Size | Grayscale Accuracy | Grayscale Training Time | Equalized Accuracy | Equalized Training Time | Thermal Accuracy | Thermal Training Time |
|---|---|---|---|---|---|---|
| 80 × 80 | 72% | 24.18 s | 50% | 23.80 s | 58% | 22.93 s |
| 50 × 50 | 70% | 22.21 s | 50% | 21.31 s | 70% | 22.56 s |
| 30 × 30 | 80% | 21.12 s | 56% | 20.96 s | 86% | 21.40 s |
| 20 × 20 | 76% | 20.69 s | 62% | 20.98 s | 82% | 20.99 s |
| 10 × 10 | 74% | 21.43 s | 58% | 21.20 s | 75% | 20.98 s |
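The table compares input sizes from 80 × 80 down to 10 × 10 for three image representations. Two preprocessing steps it implies, resizing and (assuming the "Equalized" column refers to histogram-equalized grayscale images) histogram equalization, can be sketched as below. This is a minimal sketch, not the authors' pipeline: it assumes 8-bit grayscale images held as nested lists, and because the interpolation method and equalization routine are not stated here, nearest-neighbour resizing and textbook global histogram equalization (as described by Gonzalez and Woods) are used as stand-ins. The function names are hypothetical.

```python
Image = list[list[int]]  # 8-bit grayscale image stored row by row (assumption)


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resampling to out_h x out_w (e.g. 30 x 30)."""
    h, w = len(img), len(img[0])
    return [[img[(r * h) // out_h][(c * w) // out_w] for c in range(out_w)]
            for r in range(out_h)]


def equalize_histogram(img: Image, levels: int = 256) -> Image:
    """Global histogram equalization through the cumulative histogram (CDF)."""
    flat = [p for row in img for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(flat)
    cdf_min = next(v for v in cdf if v > 0)  # CDF at the darkest occupied level
    if n == cdf_min:                         # constant image: nothing to spread
        return [row[:] for row in img]
    scale = (levels - 1) / (n - cdf_min)
    # Look-up table mapping each grey level to its equalized value.
    lut = [max(0, round((v - cdf_min) * scale)) for v in cdf]
    return [[lut[p] for p in row] for row in img]
```

For example, `equalize_histogram(resize_nearest(frame, 30, 30))` would produce a 30 × 30 equalized input, where `frame` is a hypothetical grayscale thermogram.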

| Name | Type | Activations | Learnables |
|---|---|---|---|
| Input | Image input | 30 × 30 × 1 | |
| Conv | Convolution | 26 × 26 × 8 | Weights 5 × 5 × 1 × 8 and Bias 1 × 1 × 8 |
| Relu | Rectified linear unit | 26 × 26 × 8 | |
| 2 × 2-AP | Average Pooling | 13 × 13 × 8 | |
| FC | Fully connected | 1 × 1 × 2 | Weights 2 × 1352 and Bias 2 × 1 |
| SM | SoftMax | 1 × 1 × 2 | |
| Class | Classification output | | |
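The activation sizes and learnable counts in the layer table follow from standard convolution arithmetic: a 5 × 5 kernel with stride 1 and no padding maps 30 × 30 to 26 × 26, 2 × 2 average pooling with stride 2 reduces that to 13 × 13, and flattening 13 × 13 × 8 gives the 1352 inputs of the fully connected layer. The sketch below reproduces these numbers. The helper names are hypothetical, and the loop at the end assumes, purely for illustration, that the same layer stack were applied to every input size in the image-size table.

```python
def window_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def layer_summary(in_size: int = 30, in_ch: int = 1, kernel: int = 5,
                  n_filters: int = 8, pool: int = 2, n_classes: int = 2) -> dict:
    """Shapes and learnables for Conv -> ReLU -> AvgPool -> FC -> SoftMax."""
    conv = window_out(in_size, kernel)            # 30 -> 26 (stride 1, no padding)
    pooled = window_out(conv, pool, stride=pool)  # 26 -> 13 (2 x 2, stride 2)
    flat = pooled * pooled * n_filters            # 13 * 13 * 8 = 1352
    conv_params = kernel * kernel * in_ch * n_filters + n_filters  # weights + biases
    fc_params = n_classes * flat + n_classes                       # weights + biases
    # ReLU, pooling and SoftMax have no learnable parameters.
    return {
        "conv": (conv, conv, n_filters),
        "pool": (pooled, pooled, n_filters),
        "flatten": flat,
        "conv_params": conv_params,
        "fc_params": fc_params,
        "total_params": conv_params + fc_params,
    }


if __name__ == "__main__":
    # Hypothetical: same layer stack applied to every input size in the table.
    for size in (80, 50, 30, 20, 10):
        s = layer_summary(in_size=size)
        print(f"{size} x {size}: flatten={s['flatten']}, learnables={s['total_params']}")
```

For the 30 × 30 configuration this gives 208 convolutional plus 2706 fully connected learnables, 2914 in total, which illustrates why the network is light enough for low-end processors.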

| Rotation | Brightness | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| No | 10% | 97.44% | 95.12% | 100.00% | 97.50% |
| No | 20% | 94.87% | 90.69% | 100.00% | 95.11% |
| No | 30% | 94.87% | 90.69% | 100.00% | 95.11% |
| Yes | 10% | 97.44% | 95.12% | 100.00% | 97.50% |
| Yes | 20% | 93.58% | 90.49% | 97.22% | 93.72% |
| Yes | 30% | 92.31% | 94.59% | 90.24% | 92.36% |
| Average | | 95.09% | 92.78% | 97.91% | 95.22% |
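The indicators in the robustness table follow the usual confusion-matrix definitions: accuracy, precision, recall, and F1 as the harmonic mean of precision and recall. The sketch below implements them and checks that the Average row is the column-wise mean of the six rows. The function names are hypothetical, and the counts in the usage example are invented for illustration only; the source does not report the underlying confusion matrices here.

```python
# Robustness table rows: (rotation, brightness, accuracy, precision, recall, F1), in percent.
ROWS = [
    ("No", "10%", 97.44, 95.12, 100.00, 97.50),
    ("No", "20%", 94.87, 90.69, 100.00, 95.11),
    ("No", "30%", 94.87, 90.69, 100.00, 95.11),
    ("Yes", "10%", 97.44, 95.12, 100.00, 97.50),
    ("Yes", "20%", 93.58, 90.49, 97.22, 93.72),
    ("Yes", "30%", 92.31, 94.59, 90.24, 92.36),
]


def confusion_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall and F1 (as fractions) for a binary classifier."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / total, "precision": precision,
            "recall": recall, "f1": f1}


def column_means(rows) -> list[float]:
    """Mean of each metric column (accuracy, precision, recall, F1)."""
    metrics = [row[2:] for row in rows]
    return [sum(col) / len(metrics) for col in zip(*metrics)]


if __name__ == "__main__":
    # Hypothetical counts for illustration only (not reported in the source).
    m = confusion_metrics(tp=39, fp=2, fn=0, tn=37)
    print({k: f"{100 * v:.2f}%" for k, v in m.items()})
    print([f"{v:.3f}" for v in column_means(ROWS)])
```

The column means agree with the Average row to within the two-decimal rounding used in the table.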

| Work | Method | Input Image | Accuracy |
|---|---|---|---|
| [16] | Fusion of CNN1 and CNN2 (CNNf) | MRI and computed tomography | 93.86% |
| [9] | Three-layered compact parallel deep convolutional neural network (CPDCNN) | MRI | 96.60% |
| [17] | Deep Siamese Convolutional Neural Networks | X-ray | 61% |
| [44] | 14-layer ResNet (ResNet-14) convolutional neural network | MRI | 92% |
| [45] | Multiscale convolutional blocks in a convolutional neural network (MCBCNN) | X-ray | 95% |
| [46] | Local Binary Pattern, Principal Component Analysis, and YOLOv2 | X-ray | 90.6% |
| [29] | Feature extraction and Support Vector Machine (SVM) | Thermographic images | 85.49% |
| [47] | Thermographic image processing with shallow learning and deep learning (VGG16 and VGG19) | Thermographic images | 96% |
| Proposed work | Image preprocessing and convolutional neural network | Thermographic images | 97.44% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trejo-Chavez, O.; Amezquita-Sanchez, J.P.; Huerta-Rosales, J.R.; Morales-Hernandez, L.A.; Cruz-Albarran, I.A.; Valtierra-Rodriguez, M. Automatic Knee Injury Identification through Thermal Image Processing and Convolutional Neural Networks. Electronics 2022, 11, 3987. https://doi.org/10.3390/electronics11233987
