Development and Testing of Performance Scale Application as an Effective Electronic Tool to Enhance Students’ Academic Achievements

Abstract

1. Introduction

2. Literature Review

2.1. Performance Scale Application

2.2. Student’s Academic Achievement

2.3. Choice of Specialty

2.4. Data Mining Methods

3. Materials and Methods

3.1. Participants and Sampling

3.2. Cronbach’s Reliability

3.3. Research Tool

3.4. Research Framework

3.5. The System Development

3.5.1. Machine Learning Algorithm

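- (A) Supervised learning

- A learning algorithm is a mapping $A: (M \times Z)^{*} \to H$ from $(M \times Z)^{*} = \bigcup_{N=1}^{\infty} (M \times Z)^{N}$, the set of all finite samples, into the hypothesis space $H$. For a given sample $ls \in (M \times Z)^{*}$, $A(ls)$ denotes the function returned by the algorithm.

- (B) Linear predictor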

3.5.2. Extra Trees Algorithm

| Algorithm 1: |

| ## extraTrees(m, z, ntree = 605, mtry = if (!is.null(z) && !is.factor(z)) max(floor(ncol(m)/3), 1) else floor(sqrt(ncol(m))), nodesize = if (!is.null(z) && !is.factor(z)) 7 else 1, numRandomCuts = 1, evenCuts = FALSE, numThreads = 1, quantile = FALSE, weights = NULL, subsetSizes = NULL, subsetGroups = NULL, tasks = NULL, probOfTaskCuts = mtry/ncol(m), numRandomTaskCuts = 1, na.action = "stop", ...) |
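The signature in Algorithm 1 exposes `mtry` (how many features are tried at each node) and `numRandomCuts` (how many random cut points are drawn per feature). As a rough illustration of how an extremely randomized tree could choose a regression split under those two settings, the following Python sketch draws one uniform cut per candidate feature and keeps the cut with the largest variance reduction. The function name `pick_random_split` and the toy data are hypothetical; this is not the code of the extraTrees package, only a sketch of the general technique.

```python
import random
from statistics import pvariance


def pick_random_split(rows, targets, mtry, rng):
    """Choose a split the extra-trees way: random cut points, best score wins.

    rows    -- list of feature vectors (lists of floats)
    targets -- numeric responses, one per row
    mtry    -- number of features sampled as split candidates
    rng     -- random.Random instance, passed in for reproducibility
    Returns (feature_index, cut_point, variance_reduction) or None.
    """
    n_features = len(rows[0])
    candidates = rng.sample(range(n_features), min(mtry, n_features))
    parent_var = pvariance(targets)
    best = None
    for j in candidates:
        lo = min(r[j] for r in rows)
        hi = max(r[j] for r in rows)
        if lo == hi:
            continue  # constant feature, nothing to split on
        cut = rng.uniform(lo, hi)  # a single random cut (numRandomCuts = 1)
        left = [t for r, t in zip(rows, targets) if r[j] < cut]
        right = [t for r, t in zip(rows, targets) if r[j] >= cut]
        if not left or not right:
            continue
        # Size-weighted variance of the two children; lower is purer.
        child_var = (len(left) * pvariance(left)
                     + len(right) * pvariance(right)) / len(targets)
        gain = parent_var - child_var
        if best is None or gain > best[2]:
            best = (j, cut, gain)
    return best


# Hypothetical toy data: feature 0 is informative, feature 1 is constant.
rows = [[1.0, 5.0], [2.0, 5.0], [8.0, 5.0], [9.0, 5.0]]
targets = [1.0, 1.0, 3.0, 3.0]
split = pick_random_split(rows, targets, mtry=2, rng=random.Random(0))
```

Unlike a classical regression tree, the cut point is not optimized over all thresholds; only the choice among the randomly drawn candidates is scored, which is what keeps the individual trees cheap and decorrelated.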

- (C) Set of independent binary extra tree regression

- (D) Extracting, transforming, and feature selection

- (i) TF-IDF Feature Extractor

- (ii) VectorSlicer

- (iii) Locality-Sensitive Hashing
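To make the first feature step concrete, here is a minimal TF-IDF sketch in Python. It assumes raw term counts for TF and the smoothed inverse document frequency log((N + 1) / (df + 1)); the source does not state which TF-IDF variant the system uses, so both choices, the function name `tf_idf`, and the sample tokens are assumptions for illustration only.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document.

    TF is the raw count of the term in the document; IDF is the
    smoothed form log((N + 1) / (df + 1)). Both are assumed variants.
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term once per document
    weights = []
    for doc in documents:
        tf = Counter(doc)
        weights.append({
            term: count * math.log((n_docs + 1) / (doc_freq[term] + 1))
            for term, count in tf.items()
        })
    return weights


# Hypothetical tokenized texts.
docs = [["mobile", "learning", "app"], ["mobile", "learning"], ["mobile", "exam"]]
scores = tf_idf(docs)
```

A term that occurs in every document ("mobile" here) receives weight zero, while a term confined to one document receives the largest weight.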

3.6. Ethical Committee Approval for Individual Security

3.7. Data Analysis

4. Results

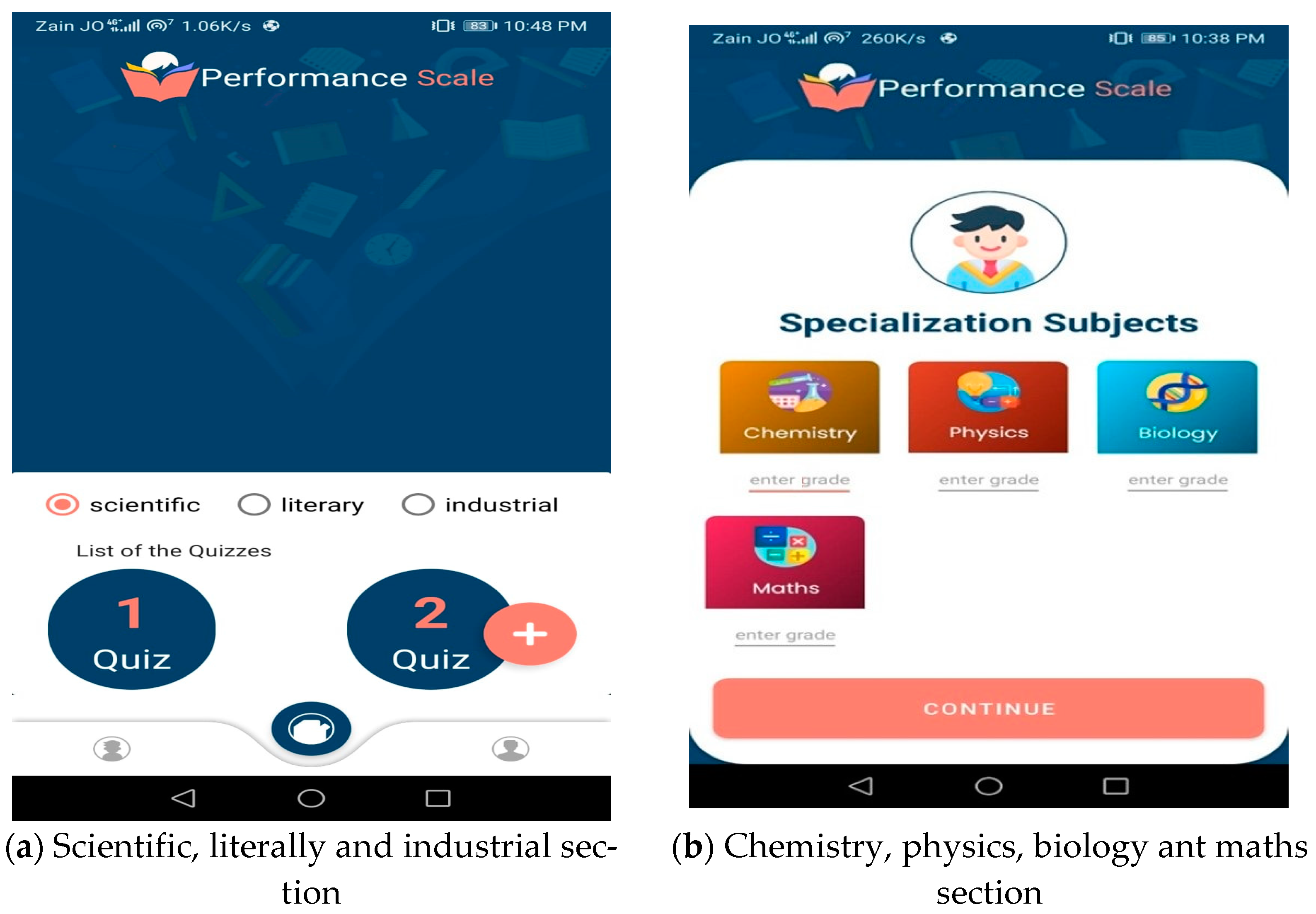

4.1. Performance Scale Application and its Features

- First Screen:

- Second Screen:

- Third Screen:

4.2. PSA Response Times

4.3. PSA Backend Calls

4.4. Descriptive Statistics

4.5. Cronbach’s Reliability

4.6. Testing of Hypotheses

5. Discussion

5.1. Conclusions and Recommendations

5.2. Limitations

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Axe No. | Variables | Symbol | Sub Axe | | No. Items |
|---|---|---|---|---|---|
| 1 | Personal Data | PD | Specialty: Scientific, Literary, Industrial | | 1 |
| 2 | Performance scale (independent variable) | IME | Improving evaluation (* X1, X2, …, X5) | | 5 |
| 3 | Mobile learning skills (dependent variables) | IMC | Improving communication (* A1, A2, …, A7) | Personalized learning A1, A2, A3 | 3 |
| | | | | Distance learning A4, A5, A6 | 3 |
| | | | | A7 | 1 |
| | | IMSC | Improving scientific content (* B1, B2, …, B5) | Self-learning B1, B2, B3 | 3 |
| | | | | B4, B5 | 2 |
| | | SOL | Satisfaction of learning (* C1, C2, …, C10) | Specialty learning C1, C2, C3, C4 | 4 |
| | | | | Mobile learning C5, C6 | 2 |
| | | | | C7, C8, C9, C10 | 4 |

| Specialty | Frequency | Percent |
|---|---|---|
| Scientific | 36 | 49% |
| Literary | 31 | 41% |
| Industrial | 7 | 10% |
| Total | 74 | 100% |

| KMO and Bartlett’s Test | | |
|---|---|---|
| Kaiser–Meyer–Olkin Measure of Sampling Adequacy | | 0.873 |
| Bartlett’s Test of Sphericity | Approx. Chi-Square | 2187.205 |
| | df | 351 |
| | Sig. | 0.000 |

| Item | Unstandardized B | Unstandardized Std. Error | Standardized Beta | t | Sig. | Collinearity Tolerance | Collinearity VIF |
|---|---|---|---|---|---|---|---|
| (Constant) | −0.455 | 0.286 | | −1.589 | 0.122 | | |
| IME | 0.365 | 0.107 | 0.35 | 3.417 | 0.002 | 0.311 | 3.215 |
| IMC | 0.318 | 0.134 | 0.27 | 2.374 | 0.024 | 0.253 | 3.957 |
| IMSC | 0.416 | 0.139 | 0.388 | 2.998 | 0.005 | 0.195 | 5.139 |
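The two collinearity columns are tied by the standard definition VIF = 1 / Tolerance. The short Python sketch below recomputes VIF from the tolerances printed in the coefficient table; the helper name `vif_from_tolerance` is hypothetical. Because the published tolerances are rounded to three decimals, the recomputed values are expected to match the table only approximately.

```python
def vif_from_tolerance(tolerance):
    """Variance inflation factor, defined as the reciprocal of tolerance."""
    if not 0 < tolerance <= 1:
        raise ValueError("tolerance must lie in (0, 1]")
    return 1.0 / tolerance


# Tolerance values copied from the coefficient table.
tolerances = {"IME": 0.311, "IMC": 0.253, "IMSC": 0.195}
vifs = {name: vif_from_tolerance(tol) for name, tol in tolerances.items()}
```

For IME the reciprocal reproduces the tabulated 3.215; for IMC and IMSC the recomputed values differ from the table in the second decimal, which is consistent with rounding of the tolerance.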

| Variable | Min | Max | Skew | C.R. (Skew) | Kurtosis | C.R. (Kurtosis) |
|---|---|---|---|---|---|---|
| IME | 2.2 | 5 | −0.597 | −2.109 | 0.215 | 0.38 |
| IMC | 2 | 5 | −0.422 | −1.491 | 0.211 | 0.373 |
| IMSC | 2.14 | 5 | −0.595 | −2.103 | 0.381 | 0.674 |
| SOL | 1.8 | 5 | −0.647 | −2.287 | 1.175 | 2.077 |
| Multivariate | | | | | 19.294 | 12.059 |

| Code | Item | Item-Total Correlation | Cronbach’s Alpha if Item Deleted | Factor Loadings | Cronbach’s Alpha Analysis |
|---|---|---|---|---|---|
| | Improving evaluation | | | | 0.909 |
| IME | X1* | 0.680 | 0.978 | 0.685 | |
| | X2* | 0.810 | 0.977 | 0.869 | |
| | X3* | 0.656 | 0.978 | 0.743 | |
| | X4* | 0.660 | 0.978 | 0.789 | |
| | X5* | 0.704 | 0.978 | 0.852 | |
| | Improving communication | | | | 0.929 |
| IMC | A1* | 0.693 | 0.978 | 0.797 | |
| | A2* | 0.721 | 0.978 | 0.837 | |
| | A3* | 0.744 | 0.978 | 0.824 | |
| | A4* | 0.733 | 0.978 | 0.795 | |
| | A5* | 0.782 | 0.978 | 0.879 | |
| | A6* | 0.737 | 0.978 | 0.780 | |
| | A7* | 0.822 | 0.977 | 0.837 | |
| | Improving scientific content | | | | 0.941 |
| IMSC | B1* | 0.778 | 0.978 | 0.858 | |
| | B2* | 0.771 | 0.978 | 0.843 | |
| | B3 | 0.677 | 0.978 | 0.791 | |
| | B4 | 0.688 | 0.978 | 0.688 | |
| | B5 | 0.768 | 0.978 | 0.826 | |
| | Satisfaction of learning | | | | 0.954 |
| SOL | C1* | 0.727 | 0.978 | 0.813 | |
| | C2* | 0.765 | 0.978 | 0.840 | |
| | C3* | 0.738 | 0.978 | 0.854 | |
| | C4* | 0.837 | 0.977 | 0.798 | |
| | C5* | 0.833 | 0.977 | 0.862 | |
| | C6* | 0.884 | 0.977 | 0.834 | |
| | C7* | 0.731 | 0.978 | 0.851 | |
| | C8* | 0.753 | 0.978 | 0.774 | |
| | C9* | 0.725 | 0.978 | 0.866 | |
| | C10* | 0.732 | 0.978 | 0.764 | |
| | Total | | | | 0.978 |
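The alpha column in the reliability table follows the usual definition of Cronbach's alpha, α = k / (k − 1) · (1 − Σ item variances / variance of the total score), where k is the number of items. The Python sketch below implements that formula with the standard library; the function name `cronbach_alpha` and the toy Likert answers are hypothetical, since the raw questionnaire responses are not part of this text.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(item_scores[0])
    if any(len(item) != n for item in item_scores):
        raise ValueError("every item needs one score per respondent")
    # Total score of each respondent across all items.
    totals = [sum(item[i] for item in item_scores) for i in range(n)]
    item_var_sum = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 5-point Likert answers: 3 items, 5 respondents.
toy_items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
alpha = cronbach_alpha(toy_items)
```

The "alpha if item deleted" column corresponds to calling the same function on the item list with one item left out.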

| Item | IME | IMC | IMSC | SOL | CA | RA | CR | AVE |
|---|---|---|---|---|---|---|---|---|
| | Fornell–Larcker Criterion | | | | Construct Reliability and Validity | | | |
| IME | 0.791 | | | | 0.847 | 0.853 | 0.892 | 0.625 |
| IMC | 0.849 | 0.822 | | | 0.920 | 0.921 | 0.936 | 0.675 |
| IMSC | 0.830 | 0.846 | 0.804 | | 0.861 | 0.865 | 0.901 | 0.646 |
| SOL | 0.834 | 0.836 | 0.897 | 0.826 | 0.948 | 0.955 | 0.956 | 0.683 |
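In a Fornell–Larcker table the diagonal entry of each construct is the square root of its average variance extracted (AVE). The Python sketch below recomputes that diagonal from the AVE column of the table; the helper name `fornell_larcker_diagonal` is hypothetical.

```python
import math

# AVE values copied from the construct reliability and validity columns.
ave = {"IME": 0.625, "IMC": 0.675, "IMSC": 0.646, "SOL": 0.683}


def fornell_larcker_diagonal(ave_by_construct):
    """Square root of each construct's AVE, rounded to three decimals."""
    return {name: round(math.sqrt(value), 3)
            for name, value in ave_by_construct.items()}


diagonal = fornell_larcker_diagonal(ave)
```

The recomputed values (0.791, 0.822, 0.804, 0.826) agree with the diagonal printed in the table.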

| Effect | Wilks’ λ | F | df1 | df2 | Sig. | η² | Result |
|---|---|---|---|---|---|---|---|
| Intercept | 0.798 | 5.636 | 3 | 67 | 0.002 | 0.202 | Supported |
| IME | 0.304 | 51.208 | 3 | 67 | 0.000 | 0.696 | Supported |
| Specialty | 0.760 | 3.279 | 6 | 134 | 0.005 | 0.128 | Supported |
| IME - Specialty | 0.803 | 2.585 | 6 | 134 | 0.021 | 0.104 | Supported |
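The η² column can be related to Wilks' λ through the common multivariate effect-size formula η² = 1 − λ^(1/s), where s is the smaller of the number of dependent variables and the effect's degrees of freedom. The Python sketch below applies it to the λ values in the table. The values of s are an assumption: they are inferred from df1 under the reading that there are three dependent variables (IMC, IMSC, SOL), giving s = 1 for df1 = 3 and s = 2 for df1 = 6. The helper name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(wilks_lambda, s):
    """Multivariate partial eta squared from Wilks' lambda: 1 - lambda ** (1 / s)."""
    if not 0 < wilks_lambda <= 1:
        raise ValueError("Wilks' lambda must lie in (0, 1]")
    if s < 1:
        raise ValueError("s must be at least 1")
    return 1 - wilks_lambda ** (1 / s)


# (lambda, s) per effect; lambda is copied from the table, s is assumed.
effects = {
    "Intercept": (0.798, 1),
    "IME": (0.304, 1),
    "Specialty": (0.760, 2),
    "IME - Specialty": (0.803, 2),
}
eta = {name: round(partial_eta_squared(lam, s), 3)
       for name, (lam, s) in effects.items()}
```

Under these assumptions the recomputed effect sizes (0.202, 0.696, 0.128, 0.104) match the η² column.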

| Name of Test | Item | F | df1 | df2 | Sig. |
|---|---|---|---|---|---|
| Levene’s Test of Equality of Error Variances | IMC | 1.847 | 2 | 72 | 0.165 |
| | IMSC | 0.456 | 2 | 72 | 0.636 |
| | SOL | 0.027 | 2 | 72 | 0.974 |
| Box’s M | M = 15.127 | 1.102 | 12 | 1257.455 | 0.354 |

| H | Independent | Relationship | Dependent V. | Estimate | S.E. | C.R. | p | Result |
|---|---|---|---|---|---|---|---|---|
| H2 | IME | ⟶ | IMC | 0.849 | 0.119 | 6.478 | *** | Supported |
| H3 | IME | ⟶ | IMSC | 0.834 | 0.138 | 6.707 | *** | Supported |
| H4 | IME | ⟶ | SOL | 0.830 | 0.149 | 6.614 | *** | Supported |
| H5 | IME | ⟶ | Personalized L. | 0.791 | 0.123 | 6.491 | *** | Supported |
| H6 | IME | ⟶ | Distance L. | 0.803 | 0.128 | 6.154 | *** | Supported |
| H7 | IME | ⟶ | Mobile L. | 0.721 | 0.123 | 6.097 | *** | Supported |
| H8 | IME | ⟶ | Self L. | 0.833 | 0.155 | 6.547 | *** | Supported |
| H9 | IME | ⟶ | Specialty L. | 0.765 | 0.123 | 6.245 | *** | Supported |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ozdamli, F.; Ababneh, M.; Karagozlu, D.; Aljarrah, A. Development and Testing of Performance Scale Application as an Effective Electronic Tool to Enhance Students’ Academic Achievements. Electronics 2022, 11, 4023. https://doi.org/10.3390/electronics11234023
