SUKRY: Suricata IDS with Enhanced kNN Algorithm on Raspberry Pi for Classifying IoT Botnet Attacks

Abstract

1. Introduction

- The development of SUKRY, a novel Suricata IDS with an enhanced kNN algorithm, on a Raspberry Pi machine.

- The novel use of five evaluation factors (accuracy, precision, recall, F1 score, and execution time) to determine which of three feature selection techniques best enhances the performance of the kNN algorithm.

- The application of kNN-FS (a combination of the kNN algorithm and the Forward Selection technique) as the core machine learning model of SUKRY.
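The kNN-FS combination named in the last contribution is a wrapper method: a kNN classifier is re-evaluated on candidate feature subsets, and Forward Selection greedily adds whichever feature most improves validation performance. The sketch below is a minimal pure-Python illustration, not the authors' implementation. Euclidean distance, majority voting, holdout accuracy as the selection criterion, k = 3, the function names, and the toy data are all assumptions made for this example.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k, features):
    """Label x by majority vote of its k nearest training rows,
    using Euclidean distance over the chosen feature indices only."""
    distances = sorted(
        ((math.sqrt(sum((row[f] - x[f]) ** 2 for f in features)), label)
         for row, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


def holdout_accuracy(train_X, train_y, val_X, val_y, k, features):
    """Fraction of validation rows that kNN labels correctly."""
    hits = sum(
        knn_predict(train_X, train_y, x, k, features) == y
        for x, y in zip(val_X, val_y)
    )
    return hits / len(val_y)


def forward_selection(train_X, train_y, val_X, val_y, k=3):
    """Greedy forward selection wrapped around kNN: start with no features,
    add the candidate that raises validation accuracy the most, and stop
    as soon as no remaining candidate improves it."""
    remaining = list(range(len(train_X[0])))
    selected, best = [], 0.0
    while remaining:
        scored = [
            (holdout_accuracy(train_X, train_y, val_X, val_y, k, selected + [f]), f)
            for f in remaining
        ]
        # Highest accuracy wins; ties go to the lowest feature index.
        score, feature = max(scored, key=lambda s: (s[0], -s[1]))
        if score <= best:
            break
        selected.append(feature)
        remaining.remove(feature)
        best = score
    return selected, best


# Toy data: feature 0 separates the classes, feature 1 misleads on the
# validation rows. These values are invented for illustration only.
train_X = [[0.0, 1.0], [0.1, 1.1], [0.2, 1.2], [1.0, 5.0], [1.1, 5.1], [0.9, 5.2]]
train_y = [0, 0, 0, 1, 1, 1]
val_X = [[0.05, 5.1], [1.05, 1.1]]
val_y = [0, 1]
print(forward_selection(train_X, train_y, val_X, val_y, k=3))  # ([0], 1.0)
```

Because the search stops at the first step that brings no gain, it evaluates far fewer subsets than an exhaustive search, which is one reason a forward wrapper can end with a small feature set.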

2. Relevant Studies

3. Methodology

3.1. Dataset

3.2. Dataset Preprocessing

3.3. Feature Selection Techniques and kNN Algorithm

3.4. Performance Analysis

3.5. SUKRY Implementation

4. Analysis and Discussion

4.1. kNN Algorithm with Information Gain (kNN-IG)

4.2. kNN Algorithm with Forward Selection (kNN-FS)

4.3. kNN Algorithm with Backward Elimination (kNN-BE)

4.4. Performance Comparison

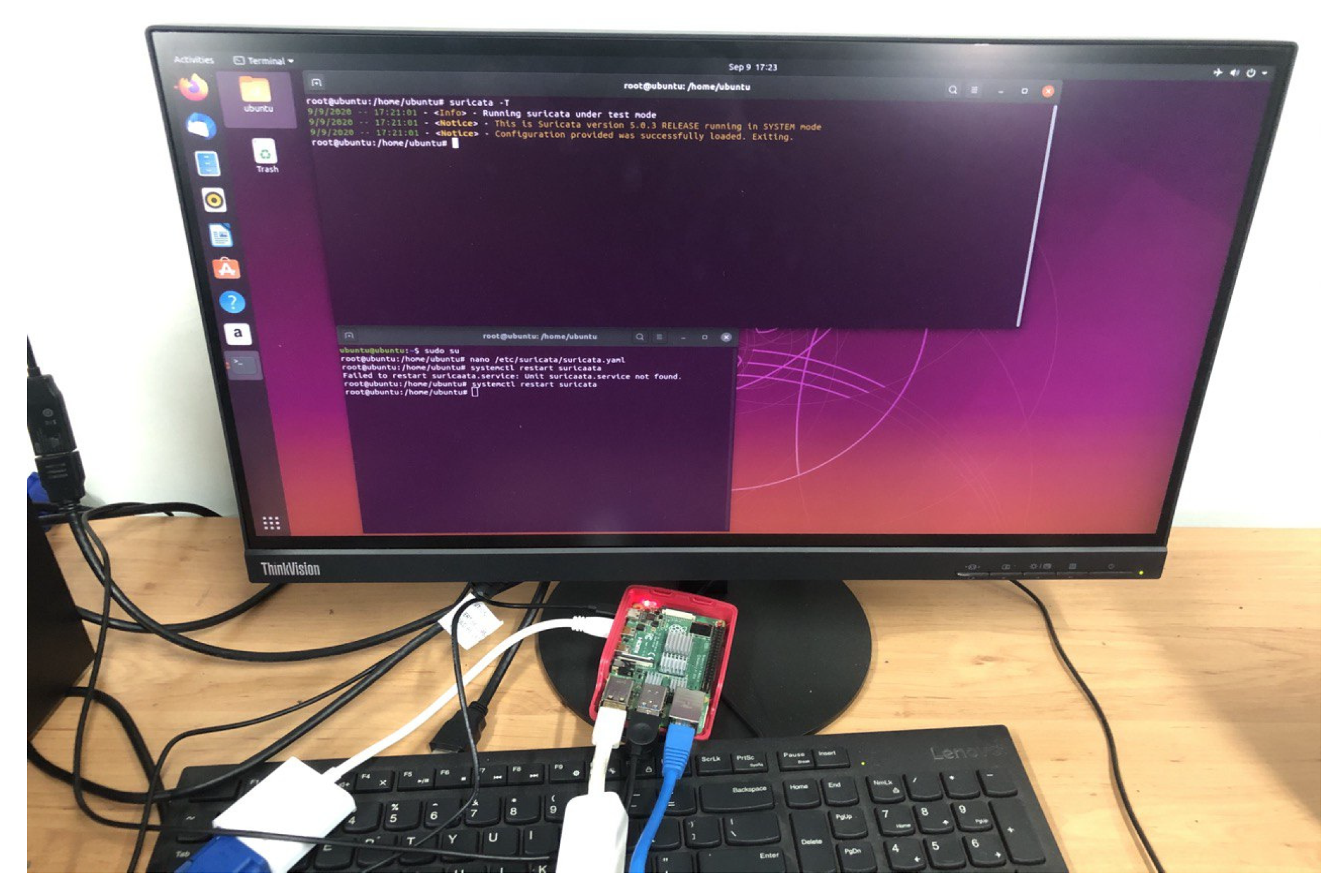

4.5. SUKRY Implementation

- Obtain Raspberry Pi OS from https://www.raspberrypi.com/software/ (accessed on 20 December 2020) [55];

- Install Raspberry Pi OS on the Raspberry Pi machine;

- Obtain Suricata from https://suricata.io/download/ (accessed on 20 December 2020) [56];

- Install Suricata on Raspberry Pi OS;

- Obtain OPNIDS from https://github.com/OPNids (accessed on 20 December 2020) [57];

- Install OPNIDS over Suricata so that machine learning models can be run over Suricata;

- Run the kNN-FS model using OPNIDS.
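The last step has the kNN-FS model consuming traffic that Suricata observes. How OPNIDS hands flow data to a model is not described here, so the sketch below only illustrates one possible input path under stated assumptions. It parses a single Suricata EVE JSON `flow` event (the fields `pkts_toserver`, `pkts_toclient`, `bytes_toserver` and `bytes_toclient` do appear in Suricata's EVE flow output) and maps it to a few Bot-IoT-style features. The function name, the mapping of to-server counters onto the source-side features and to-client counters onto the destination-side features, and the sample record are hypothetical; this is not part of SUKRY or OPNIDS.

```python
import json


def eve_flow_to_features(line):
    """Turn one Suricata EVE JSON line into a dict of Bot-IoT-style flow features.

    Returns None for events that are not flow records. Mapping 'toserver'
    counters to the source-side features (spkts, sbytes) and 'toclient'
    counters to the destination-side features (dpkts, dbytes) is an assumption."""
    event = json.loads(line)
    if event.get("event_type") != "flow":
        return None
    flow = event["flow"]
    spkts, dpkts = flow["pkts_toserver"], flow["pkts_toclient"]
    sbytes, dbytes = flow["bytes_toserver"], flow["bytes_toclient"]
    return {
        "proto": event["proto"].lower(),  # Suricata reports e.g. "TCP"
        "sport": event.get("src_port"),   # absent for port-less protocols such as ICMP
        "dport": event.get("dest_port"),
        "pkts": spkts + dpkts,
        "bytes": sbytes + dbytes,
        "spkts": spkts,
        "dpkts": dpkts,
        "sbytes": sbytes,
        "dbytes": dbytes,
    }


# Invented sample record, for illustration only.
sample = (
    '{"event_type": "flow", "src_ip": "192.168.1.10", "src_port": 51514, '
    '"dest_ip": "192.168.1.1", "dest_port": 80, "proto": "TCP", '
    '"flow": {"pkts_toserver": 6, "pkts_toclient": 4, '
    '"bytes_toserver": 520, "bytes_toclient": 2900}}'
)
print(eve_flow_to_features(sample))
```

Only features that can be derived from a single flow record are produced here; aggregate features such as TnBPSrcIP would require keeping state across many flows.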

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sicari, S.; Rizzardi, A.; Coen-Porisini, A. 5G in the internet of things era: An overview on security and privacy challenges. Comput. Netw. 2020, 179, 107345.

- Stoyanova, M.; Nikoloudakis, Y.; Panagiotakis, S.; Pallis, E.; Markakis, E.K. A survey on the internet of things (IoT) forensics: Challenges, approaches, and open issues. IEEE Commun. Surv. Tutor. 2020, 22, 1191–1221.

- Sisinni, E.; Saifullah, A.; Han, S.; Jennehag, U.; Gidlund, M. Industrial internet of things: Challenges, opportunities, and directions. IEEE Trans. Industr. Inform. 2018, 14, 4724–4734.

- Agadakos, I.; Chen, C.Y.; Campanelli, M.; Anantharaman, P.; Hasan, M.; Copos, B.; Lindqvist, U. Jumping the air gap: Modeling cyber-physical attack paths in the Internet-of-Things. In Proceedings of the 2017 Workshop on Cyber-Physical Systems Security and Privacy, Dallas, TX, USA, 3 November 2017; pp. 37–48.

- Radanliev, P.; De Roure, D.C.; Nicolescu, R.; Huth, M.; Montalvo, R.M.; Cannady, S.; Burnap, P. Future developments in cyber risk assessment for the internet of things. Comput. Ind. 2018, 102, 14–22.

- Bertino, E.; Islam, N. Botnets and internet of things security. Computer 2017, 50, 76–79.

- Sun, L.; Du, Q. A Review of Physical Layer Security Techniques for Internet of Things: Challenges and Solutions. Entropy 2018, 20, 730.

- Zitta, T.; Neruda, M.; Vojtech, L. The security of RFID readers with IDS/IPS solution using Raspberry Pi. In Proceedings of the 2017 18th International Carpathian Control Conference, Sinaia, Romania, 28–31 May 2017; pp. 316–320.

- Tirumala, S.S.; Sathu, H.; Sarrafzadeh, A. Free and open source intrusion detection systems: A study. In Proceedings of the 2015 International Conference on Machine Learning and Cybernetics (ICMLC), Guangzhou, China, 12–15 July 2015; Volume 1, pp. 205–210.

- Guo, Z.; Harris, I.G.; Jiang, Y.; Tsaur, L.F. An efficient approach to prevent battery exhaustion attack on BLE-based mesh networks. In Proceedings of the 2017 International Conference on Computing, Networking and Communications (ICNC), Santa Clara, CA, USA, 26–29 January 2017; pp. 1–5.

- Anthi, E.; Williams, L.; Burnap, P. Pulse: An Adaptive Intrusion Detection for the Internet of Things. In Proceedings of the Living in the Internet of Things: Cybersecurity of the IoT-2018, London, UK, 28–29 March 2018; p. 35.

- Liao, Y.; Vemuri, V. Use of K-Nearest Neighbor classifier for intrusion detection. Comput. Secur. 2002, 21, 439–448.

- Binkley, J.R.; Singh, S. An Algorithm for Anomaly-Based Botnet Detection; SRUTI 6; USENIX: Berkeley, CA, USA, 2006; p. 7.

- Kondo, S.; Sato, N. Botnet traffic detection techniques by C&C session classification using SVM. In Advances in Information and Computer Security; Springer: Berlin/Heidelberg, Germany, 2007; pp. 91–104. ISBN 9783540756507.

- Seufert, S.; O’Brien, D. Machine learning for automatic defence against distributed denial of service attacks. In Proceedings of the 2007 IEEE International Conference on Communications, Glasgow, Scotland, 24–28 June 2007; IEEE: Piscataway, NJ, USA, 2007.

- Vargas, H.; Lozano-Garzon, C.; Montoya, G.A.; Donoso, Y. Detection of Security Attacks in Industrial IoT Networks: A Blockchain and Machine Learning Approach. Electronics 2021, 10, 2662.

- Berral, J.L.; Poggi, N.; Alonso, J.; Gavaldà, R.; Torres, J.; Parashar, M. Adaptive distributed mechanism against flooding network attacks based on machine learning. In Proceedings of the 1st ACM workshop on Workshop on AISec–AISec ’08, Alexandria, VA, USA, 27 October 2008; ACM Press: New York, NY, USA, 2008.

- Eslahi, M.; Salleh, R.; Anuar, N.B. Bots and botnets: An overview of characteristics, detection and challenges. In Proceedings of the 2012 IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, 23–25 November 2012; IEEE: Piscataway, NJ, USA, 2012.

- Simkhada, E.; Shrestha, E.; Pandit, S.; Sherchand, U.; Dissanayaka, A.M. Security threats/attacks via botnets and botnet detection & prevention techniques in computer networks: A review. In Proceedings of the Midwest Instruction and Computing Symposium (MICS); North Dakota State University: Fargo, ND, USA, 2019.

- Rashid, M.; Kamruzzaman, J.; Hassan, M.; Imam, T.; Gordon, S. Cyberattacks Detection in IoT-Based Smart City Applications Using Machine Learning Techniques. Int. J. Environ. Res. Public Health 2020, 17, 9347.

- Dwibedi, S.; Pujari, M.; Sun, W. A comparative study on contemporary intrusion detection “datasets” for machine learning research. In Proceedings of the 2020 IEEE International Conference on Intelligence and Security Informatics (ISI), Arlington, VA, USA, 9–10 November 2020; IEEE: Piscataway, NJ, USA, 2020.

- Pacheco, Y.; Sun, W. Adversarial Machine Learning: A Comparative Study on Contemporary Intrusion Detection Datasets. In Proceedings of the 7th International Conference on Information Systems Security and Privacy, Vienna, Austria, 11–13 February 2021.

- Aswal, K.; Dobhal, D.C.; Pathak, H. Comparative analysis of machine learning algorithms for identification of BOT attack on the Internet of Vehicles (IoV). In Proceedings of the 2020 International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 26–28 February 2020; IEEE: Piscataway, NJ, USA, 2020.

- Hasan, M.; Islam, M.; Zarif, M.; Hashem, M. Attack and anomaly detection in IoT sensors in IoT sites using machine learning approaches. Internet Things 2019, 7, 100059.

- Bedi, P.; Mewada, S.; Vatti, R.; Singh, C.; Dhindsa, K.; Ponnusamy, M.; Sikarwar, R. Detection of attacks in IoT sensors networks using machine learning algorithm. Microprocess. Microsyst. 2021, 82, 103814.

- Singh, K.; Guntuku, S.C.; Thakur, A.; Hota, C. Big Data Analytics framework for Peer-to-Peer Botnet detection using Random Forests. Inf. Sci. 2014, 278, 488–497.

- Chen, J.; Li, K.; Tang, Z.; Bilal, K.; Yu, S.; Weng, C.; Li, K. A parallel random forest algorithm for big data in a spark cloud computing environment. IEEE Trans. Parallel Distrib. Syst. 2017, 28, 919–933.

- Yusof, M.; Saudi, M.M.; Ridzuan, F. A new mobile botnet classification based on permission and API calls. In Proceedings of the 2017 Seventh International Conference on Emerging Security Technologies (EST), Canterbury, UK, 6–8 September 2017; IEEE: Piscataway, NJ, USA, 2017.

- Duan, M.; Li, K.; Liao, X.; Li, K. A parallel multiclassification algorithm for big data using an extreme learning machine. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2337–2351.

- Vengatesan, K.; Kumar, A.; Parthibhan, M.; Singhal, A.; Rajesh, R. Analysis of Mirai botnet malware issues and its prediction methods in internet of things. In Lecture Notes on Data Engineering and Communications Technologies; Springer International Publishing: Cham, Switzerland, 2020; pp. 120–126. ISBN 9783030246426.

- Marjani, M.; Nasaruddin, F.; Gani, A.; Karim, A.; Hashem, I.A.T.; Siddiqa, A.; Yaqoob, I. Big IoT data analytics: Architecture, opportunities, and open research challenges. IEEE Access 2017, 5, 5247–5261.

- Gadelrab, M.S.; ElSheikh, M.; Ghoneim, M.A.; Rashwan, M. BotCap: Machine Learning Approach for Botnet Detection Based on Statistical Features. Int. J. Commun. Netw. Inf. Secur. 2018, 10, 563–579.

- Hoang, X.; Nguyen, Q. Botnet detection based on machine learning techniques using DNS query data. Future Internet 2018, 10, 43.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J.; Alazab, A. A novel ensemble of Hybrid Intrusion Detection System for detecting Internet of Things attacks. Electronics 2019, 8, 1210.

- Al-Mashhadi, S.; Anbar, M.; Hasbullah, I.; Alamiedy, T.A. Hybrid rule-based botnet detection approach using machine learning for analysing DNS traffic. PeerJ Comput. Sci. 2021, 7, e640.

- Wang, W.; Shang, Y.; He, Y.; Li, Y.; Liu, J. BotMark: Automated botnet detection with hybrid analysis of flow-based and graph-based traffic behaviors. Inf. Sci. 2020, 511, 284–296.

- Rambabu, K.; Venkatram, N. Ensemble classification using traffic flow metrics to predict distributed denial of service scope in the Internet of Things (IoT) networks. Comput. Electr. Eng. 2021, 96, 107444.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J.; Alazab, A. Hybrid Intrusion Detection System Based on the Stacking Ensemble of C5 Decision Tree Classifier and One Class Support Vector Machine. Electronics 2020, 9, 173.

- Moustafa, N.; Turnbull, B.; Choo, K. An Ensemble Intrusion Detection Technique Based on Proposed Statistical Flow Features for Protecting Network Traffic of Internet of Things. IEEE Internet Things J. 2019, 6, 4815–4830.

- Farhat, S.; Abdelkader, M.; Meddeb-Makhlouf, A.; Zarai, F. Comparative study of classification algorithms for cloud IDS using NSL-KDD dataset in WEKA. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; IEEE: Piscataway, NJ, USA, 2020.

- Okur, C.; Dener, M. Detecting IoT Botnet Attacks Using Machine Learning Methods. In Proceedings of the 2020 International Conference on Information Security and Cryptology (ISCTURKEY), Ankara, Turkey, 3–4 December 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 31–37.

- Soe, Y.N.; Feng, Y.; Santosa, P.I.; Hartanto, R.; Sakurai, K. Towards a Lightweight Detection System for Cyber Attacks in the IoT Environment Using Corresponding Features. Electronics 2020, 9, 144.

- Churcher, A.; Ullah, R.; Ahmad, J.; ur Rehman, S.; Masood, F.; Gogate, M.; Alqahtani, F.; Nour, B.; Buchanan, W. An Experimental Analysis of Attack Classification Using Machine Learning in IoT Networks. Sensors 2021, 21, 446.

- Mrabet, H.; Belguith, S.; Alhomoud, A.; Jemai, A. A Survey of IoT Security Based on a Layered Architecture of Sensing and Data Analysis. Sensors 2020, 20, 3625.

- Wazirali, R. An Improved Intrusion Detection System Based on KNN Hyperparameter Tuning and Cross-Validation. Arab. J. Sci. Eng. 2020, 45, 10859–10873.

- Kotu, V.; Deshpande, B. Predictive Analytics and Data Mining: Concepts and Practice with Rapidminer; Morgan Kaufmann: Burlington, MA, USA, 2014.

- Epishkina, A.; Zapechnikov, S. A syllabus on data mining and machine learning with applications to cybersecurity. In Proceedings of the 2016 Third International Conference on Digital Information Processing, Data Mining, and Wireless Communications (DIPDMWC), Moscow, Russia, 6–8 July 2016; IEEE: Piscataway, NJ, USA, 2016.

- Panthong, R.; Srivihok, A. Wrapper feature subset selection for dimension reduction based on ensemble learning algorithm. Procedia Comput. Sci. 2015, 72, 162–169.

- Lee, S.; Schowe, B.; Sivakumar, V.; Morik, K. Feature Selection for High-Dimensional Data with Rapidminer; Universitätsbibliothek Dortmund: Dortmund, Germany, 2012.

- Koroniotis, N.; Moustafa, N.; Sitnikova, E.; Turnbull, B. Towards the development of realistic botnet dataset in the Internet of Things for network forensic analytics: Bot-IoT dataset. Future Gener. Comput. Syst. 2019, 100, 779–796.

- Ge, M.; Fu, X.; Syed, N.; Baig, Z.; Teo, G.; Robles-Kelly, A. Deep learning-based intrusion detection for IoT networks. In Proceedings of the 2019 IEEE 24th Pacific Rim International Symposium on Dependable Computing (PRDC), Kyoto, Japan, 1–3 December 2019; IEEE: Piscataway, NJ, USA, 2019.

- Alejandre, F.V.; Cortes, N.C.; Anaya, E.A. Feature selection to detect botnets using machine learning algorithms. In Proceedings of the 2017 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 22–24 February 2017; IEEE: Piscataway, NJ, USA, 2017.

- Su, S.; Sun, Y.; Gao, X.; Qiu, J.; Tian, Z. A correlation-change based feature selection method for IoT equipment anomaly detection. Appl. Sci. 2019, 9, 437.

- Shobana, M.; Poonkuzhali, S. A Novel Approach for Detecting IoT Botnet Using Balanced Network Traffic Attributes. In Service-Oriented Computing—ICSOC 2020 Workshops; Springer International Publishing: Cham, Switzerland, 2021; pp. 534–548. ISBN 9783030763510.

- Raspberry Pi OS. Available online: https://www.raspberrypi.com/software/ (accessed on 20 December 2020).

- Suricata. Available online: https://suricata.io/download/ (accessed on 20 December 2020).

- OpNIDS. Available online: https://github.com/OPNids (accessed on 20 December 2020).

- Muñoz, A.; Farao, A.; Correia, J.R.C.; Xenakis, C. P2ISE: Preserving Project Integrity in CI/CD Based on Secure Elements. Information 2021, 12, 357.

- Bahsi, H.; Nomm, S.; La Torre, F.B. Dimensionality reduction for machine learning based IoT botnet detection. In Proceedings of the 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), Singapore, 18–21 November 2018; IEEE: Piscataway, NJ, USA, 2018.

- Kumar, A.; Lim, T.J. EDIMA: Early detection of IoT malware network activity using machine learning techniques. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; IEEE: Piscataway, NJ, USA, 2019.

| Features | Description |
|---|---|
| pkSeqID | Row identifier |
| stime | Record start time |
| flgs | Flow state flags seen in transactions |
| flgs_number | Numerical representation of feature flgs |
| proto | Textual representation of transaction protocols present in network flow |
| proto_number | Numerical representation of feature proto |
| saddr | Source IP address |
| sport | Source port number |
| daddr | Destination IP address |
| dport | Destination port number |
| pkts | Total count of packets in transaction |
| bytes | Total number of bytes in transaction |
| state | Transaction state |
| state_number | Numerical representation of feature state |
| ltime | Record last time |
| seq | Argus sequence number |
| dur | Record total duration |
| mean | Average duration of aggregated records |
| stddev | Standard deviation of aggregated records |
| sum | Total duration of aggregated records |
| min | Minimum duration of aggregated records |
| max | Maximum duration of aggregated records |
| spkts | Source-to-destination packet count |
| dpkts | Destination-to-source packet count |
| sbytes | Source-to-destination byte count |
| dbytes | Destination-to-source byte count |
| rate | Total packets per second in transaction |
| srate | Source-to-destination packets per second |
| drate | Destination-to-source packets per second |
| TnBPSrcIP | Total number of bytes per source IP |
| TnBPDstIP | Total number of bytes per destination IP |
| TnP_PSrcIP | Total number of packets per source IP |
| TnP_PDstIP | Total number of packets per destination IP |
| TnP_PerProto | Total number of packets per protocol |
| TnP_Per_Dport | Total number of packets per destination port |
| AR_P_Proto_P_SrcIP | Average rate per protocol per source IP (calculated as pkts/dur) |
| AR_P_Proto_P_DstIP | Average rate per protocol per destination IP |
| N_IN_Conn_P_SrcIP | Number of inbound connections per source IP |
| N_IN_Conn_P_DstIP | Number of inbound connections per destination IP |
| AR_P_Proto_P_Sport | Average rate per protocol per source port |
| AR_P_Proto_P_Dport | Average rate per protocol per destination port |
| Pkts_P_State_P_Protocol_P_DestIP | Number of packets grouped by flow state and protocol per destination IP |
| Pkts_P_State_P_Protocol_P_SrcIP | Number of packets grouped by flow state and protocol per source IP |
| attack | Class label: 0 for normal traffic, 1 for attack traffic |
| category | Traffic category |
| subcategory | Traffic subcategory |

| | A | B | C | D | E | Precision |
|---|---|---|---|---|---|---|
| A = DDoS | X11 | X12 | X13 | X14 | X15 | P1 |
| B = Reconnaissance | X21 | X22 | X23 | X24 | X25 | P2 |
| C = DoS | X31 | X32 | X33 | X34 | X35 | P3 |
| D = Normal | X41 | X42 | X43 | X44 | X45 | P4 |
| E = Theft | X51 | X52 | X53 | X54 | X55 | P5 |
| Recall | R1 | R2 | R3 | R4 | R5 | |
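Because precision is reported at the end of each row and recall at the foot of each column, the table can be read (as an assumption, matching the RapidMiner-style layout) with rows as predicted classes and columns as true classes. Then P_i = X_ii / (X_i1 + ... + X_i5) and R_j = X_jj / (X_1j + ... + X_5j), and accuracy is the diagonal sum divided by the total count. The sketch below computes these values, plus unweighted (macro) averages, for an invented 5 × 5 matrix; the counts are not results from the paper, and whether the paper averaged per-class values this way is not stated.

```python
CLASSES = ["DDoS", "Reconnaissance", "DoS", "Normal", "Theft"]


def confusion_metrics(matrix):
    """matrix[i][j] counts records predicted as class i whose true class is j.
    Returns per-class precision (row-wise), per-class recall (column-wise),
    their unweighted (macro) means, and overall accuracy."""
    n = len(matrix)
    precision, recall = [], []
    for i in range(n):
        predicted_i = sum(matrix[i])                    # row total
        actual_i = sum(matrix[r][i] for r in range(n))  # column total
        precision.append(matrix[i][i] / predicted_i if predicted_i else 0.0)
        recall.append(matrix[i][i] / actual_i if actual_i else 0.0)
    total = sum(sum(row) for row in matrix)
    accuracy = sum(matrix[i][i] for i in range(n)) / total
    return precision, recall, sum(precision) / n, sum(recall) / n, accuracy


# Invented counts, for illustration only (not results from the paper).
example = [
    [90, 0, 5, 0, 0],
    [0, 50, 0, 0, 0],
    [10, 0, 95, 0, 0],
    [0, 0, 0, 40, 1],
    [0, 0, 0, 0, 9],
]
p, r, macro_p, macro_r, acc = confusion_metrics(example)
for name, pi, ri in zip(CLASSES, p, r):
    print(f"{name}: precision={pi:.3f} recall={ri:.3f}")
print(f"accuracy={acc:.4f}")
```

With such class imbalance, accuracy can stay high while recall for a rare class such as Theft drops, which is why recall is reported separately.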

| Original Number of Features | Number of Features after IG |
|---|---|
| 46 | 23 |
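Information Gain reduced the 46 original features to 23. The quantity being ranked is IG(Y; X) = H(Y) - Σ_v p(X = v) · H(Y | X = v), the drop in class-label entropy once the value of feature X is known. The sketch below computes it for discrete features and keeps the top-ranked ones. The requirement that continuous features be binned first, the fixed `keep` cut-off, the function names and the toy columns are assumptions for illustration; the paper's exact selection threshold is not given here.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels):
    """IG = H(labels) - sum over distinct values v of p(v) * H(labels | value == v).
    Assumes `values` are already discrete; continuous features need binning first."""
    n = len(labels)
    groups = defaultdict(list)
    for v, y in zip(values, labels):
        groups[v].append(y)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


def top_features_by_ig(columns, labels, keep):
    """Rank named columns by information gain and keep the best `keep` of them."""
    scores = {name: information_gain(vals, labels) for name, vals in columns.items()}
    return sorted(scores, key=scores.get, reverse=True)[:keep]


labels = ["attack", "attack", "normal", "normal"]
columns = {
    "informative": ["hi", "hi", "lo", "lo"],  # splits the classes perfectly
    "noise": ["x", "y", "x", "y"],            # carries no class information
}
print(top_features_by_ig(columns, labels, keep=1))  # ['informative']
```

Unlike the wrapper methods, this filter scores each feature independently of the kNN model, so it is cheap to compute but cannot detect redundancy between features.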

| Original Number of Features | Number of Features after FS |
|---|---|
| 46 | 8 |

| Original Number of Features | Number of Features after BE |
|---|---|
| 46 | 35 |
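Backward Elimination works in the opposite direction to Forward Selection: it starts from all 46 features and prunes, and here it stopped at 35. The sketch below shows that greedy loop with model evaluation abstracted into a `score` callable; in kNN-BE, that callable would train and validate a kNN model on the given subset. The stopping rule (keep removing while the score does not fall), the function names and the toy scorer are assumptions for illustration, not the authors' configuration.

```python
def backward_elimination(features, score):
    """Greedy backward elimination.

    Start from every feature and repeatedly drop the one whose removal yields
    the highest score, continuing while the score does not fall. `score` maps
    a tuple of feature names to a validation score (higher is better)."""
    selected = list(features)
    best = score(tuple(selected))
    while len(selected) > 1:
        trials = [
            (score(tuple(f for f in selected if f != drop)), drop)
            for drop in selected
        ]
        # max() keeps the first of any equally good candidates.
        trial_score, drop = max(trials, key=lambda t: t[0])
        if trial_score < best:
            break
        selected.remove(drop)
        best = trial_score
    return selected, best


# Hypothetical scorer standing in for kNN validation accuracy:
# each useful feature adds 1.0 and each noisy one costs 0.1.
USEFUL = {"useful_a", "useful_b"}


def toy_score(subset):
    return sum(1.0 if f in USEFUL else -0.1 for f in subset)


print(backward_elimination(["useful_a", "useful_b", "noise_a", "noise_b"], toy_score))
# (['useful_a', 'useful_b'], 2.0)
```

Each pass evaluates one model per remaining feature, so starting from the full set makes backward elimination costlier than forward selection when only a few features end up being kept.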

| Model | Accuracy (%) | Recall (%) | Precision (%) | F1 Score (%) | Time (s) |
|---|---|---|---|---|---|
| kNN-IG | 99.72 | 96.26 | 99.56 | 97.88 | 14 |
| kNN-FS | 99.89 | 97.82 | 99.77 | 98.78 | 7 |
| kNN-BE | 99.86 | 97.29 | 99.05 | 98.16 | 18 |
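The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the precision and recall in the comparison table reproduces the reported F1 values to within 0.01 percentage points. The small residual for kNN-FS (98.79 recomputed vs. 98.78 reported) is consistent with rounding or with per-class averaging, since a macro-averaged F1 need not equal the harmonic mean of macro-averaged precision and recall. The sketch below is a consistency check only; the dictionary layout is an illustrative choice.

```python
# Values copied from the performance comparison table (percent).
RESULTS = {
    "kNN-IG": {"precision": 99.56, "recall": 96.26, "f1": 97.88},
    "kNN-FS": {"precision": 99.77, "recall": 97.82, "f1": 98.78},
    "kNN-BE": {"precision": 99.05, "recall": 97.29, "f1": 98.16},
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for model, r in RESULTS.items():
    recomputed = f1_score(r["precision"], r["recall"])
    print(f"{model}: reported {r['f1']:.2f}, recomputed {recomputed:.2f}")
# kNN-IG: reported 97.88, recomputed 97.88
# kNN-FS: reported 98.78, recomputed 98.79
# kNN-BE: reported 98.16, recomputed 98.16
```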

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Syamsuddin, I.; Barukab, O.M. SUKRY: Suricata IDS with Enhanced kNN Algorithm on Raspberry Pi for Classifying IoT Botnet Attacks. Electronics 2022, 11, 737. https://doi.org/10.3390/electronics11050737
