1. Introduction

SSDs (solid-state drives) based on NAND flash memory are attractive because of benefits such as high I/O performance, low power consumption, and shock resistance [1]. However, because of the erase-before-write constraint and the limited erase count of NAND flash memory, an increase in write requests adversely affects the lifespan of the SSD. Therefore, most SSDs use DRAM-based volatile write buffers inside the SSD to improve write performance and extend the lifespan of the SSD by reducing the number of writes to NAND flash memory. However, volatile write buffers do not guarantee the persistence of buffered data in the event of a sudden power-off [2,3].

Therefore, the fsync() system call should be invoked after executing the write command to flush buffered data from the SSD-internal write buffer to NAND flash memory. However, frequent flushing degrades I/O performance and reduces the efficiency of the internal write buffer [4]. Therefore, SSDs employ power-loss-protection (PLP) logic, which safely writes data from the internal write buffer to NAND flash memory using the back-up power of SSD-internal capacitors in the event of a sudden power-off. It is mainly used in enterprise SSDs for data centers that require high reliability [5].

However, the existing PLP method has two limitations. First, as the capacity of the SSD increases, the capacity of the internal write buffer also increases, but the number of capacitors cannot be increased indefinitely because of the limited space inside the SSD and the unstable price of the capacitor material (tantalum) [6,7]. Second, with the existing block device interface, it is not possible to identify which data in the internal buffer require persistence. Therefore, to achieve high reliability, persistence must be guaranteed for the entire internal buffer in case of power failure, which greatly increases the required capacitance.

The SSD internal buffer is used to store not only user data but also metadata of the FTL (Flash Translation Layer), such as the mapping table. In particular, legacy SSDs typically consume most of the in-storage DRAM for a page-level mapping table. Therefore, existing studies have mainly focused on minimizing the capacitance required to ensure the persistence of the mapping table in the event of a sudden power-off. SpartanSSD [6], the latest study on PLP, dramatically reduced the required capacitance by recording a journal of mapping table updates and guaranteeing persistence only for this journal in case of a sudden power-off. In contrast, because the amount of buffered user data is negligible compared to the mapping table, it was not seriously considered in previous studies.

However, in ZNS SSDs [8,9], the capacitance required for PLP depends mainly on the size of the internal write buffer, for two reasons. First, unlike conventional block-interface SSDs, ZNS SSDs do not need to retain a large mapping table in SSD-internal DRAM. ZNS (Zoned Namespace) is a new storage interface that divides NAND flash memory into fixed-size zones that must be written sequentially. Because the ZNS interface exposes the zones to the host, the SSD-internal mapping table is not required. Therefore, ZNS SSDs can use the DRAM buffer as a large write buffer for user data instead of using it to store the mapping table. Second, ZNS SSDs need a much larger write buffer than legacy SSDs to support many I/O streams. The ZNS interface supports performance isolation and minimizes garbage collection overhead by allowing the host to allocate separate zones to different I/O streams. However, because of the size difference between the program unit of NAND flash (typically, multiple NAND pages aggregated across several NAND planes to maximize internal parallelism) and the page size of the host (typically, 4 KB), host writes must be buffered before they are written to NAND. Therefore, each stream needs its own buffer space for write buffering [7]. Recently, ZNS SSDs have been required to support thousands of I/O streams. As SSD capacity increases, the number of streams also increases, so ZNS SSDs will require larger write buffers in the future. We also need to consider that NVMe can support 65 K streams and that the write unit size of NAND flash will increase to maximize the internal parallelism of SSDs. Therefore, we need to reduce the capacitance while ensuring the durability of the write buffer, because capacitors cannot be added indefinitely given the limited SSD-internal space and the cost. Although there have been studies on reducing the number of capacitors by limiting the amount of dirty data in the write buffer, this approach can reduce the efficiency of the write buffer because of frequent flushing [10].

Therefore, in this paper, we propose a set of techniques that minimize the capacitance required for PLP while retaining data integrity during a sudden power-off. First, we propose the selective power-loss-protection method, which selectively guarantees the persistence of user data in the SSD internal buffer. This technique is based on the following observation: most file systems and databases have their own way of ensuring data integrity, such as WAL (Write-Ahead Log) and journaling techniques [11,12]. Therefore, for data recovery, it is sufficient to ensure the durability of the journal or WAL stored in the volatile buffer. Accordingly, we modified the ZNS write command to deliver a PLP-enable request for specific data to the SSD. The proposed selective-PLP was implemented by modifying FEMU (QEMU-based Flash Emulator) and evaluated using RocksDB. The experimental results show that it reduced the SSD-internal capacitance by 50 to 90%. The second method shortens the PLP execution time by writing the user data in the SSD internal buffer to NAND flash memory with as much parallelism as possible. In a ZNS SSD, because the host determines the zone to which data are written, the data in the write buffer must be recorded in the pre-designated zone during PLP. However, when write requests are concentrated on a specific NAND plane, the PLP latency increases, which means that the SSD-internal capacitance must be increased. Therefore, we propose a technique that temporarily records buffered data in multiple planes to maximize parallelism. The temporarily recorded data are copied to their original location when power is restored. This technique was also implemented by modifying FEMU, and according to the experimental results, the PLP latency was reduced by up to 96%.

The rest of the paper is organized as follows. Section 2 briefly describes the related background topics, such as ZNS SSDs and PLP. Section 3 reviews previous studies on supporting a battery-backed durable cache in the SSD and reducing the number of capacitors required for PLP. Section 4 presents the selective-PLP method, which ensures durability only for the WAL, and the balanced flush method, which writes buffered data to multiple NAND planes in parallel to minimize PLP latency. In Section 5, we evaluate the proposed methods with FEMU and RocksDB. Finally, we conclude this paper in Section 6.

2. Background

In this section, we introduce background related to this paper. Important abbreviations are explained in the Abbreviations section.

2.1. Conventional SSDs vs. ZNS SSDs

NAND flash memory-based SSDs achieve a significant performance improvement compared to conventional HDDs (hard disk drives) [13]. However, because of the erase-before-write constraint of NAND flash memory, SSDs employ a firmware-level software FTL, which manages the L2P (Logical-to-Physical) mapping table to support out-of-place updates and garbage collection. As a result, a host can use an SSD as a traditional block device through the FTL [14,15]. However, because the erase unit (i.e., NAND block) is much larger than the read/write unit (i.e., NAND page) in NAND flash memory, SSDs suffer from write amplification caused by valid page copies during garbage collection. Moreover, this can be worsened because the SSD receives no information from the host that would allow it to differentiate between hot and cold data. Therefore, host-managed SSD interfaces such as ZNS [8] and open-channel SSD [16] are attracting attention because they allow the host to perform data placement to minimize the garbage collection overhead.

In particular, ZNS is an emerging storage interface that was recently defined by the NVMe (NVM Express) specification [17]. The ZNS SSD divides NAND flash memory into fixed-size zones, which must be written sequentially (Figure 1) [9]. Moreover, zones are exposed to the host, allowing the host to control data placement on the SSD. Consequently, the ZNS SSD has several advantages over conventional SSDs. First, the ZNS SSD does not require an SSD-internal mapping table, whereas legacy block-interface SSDs require a large mapping table, typically equivalent to 0.1% of the storage capacity. Therefore, the ZNS SSD can use the SSD-internal DRAM as a large write buffer instead of storing the mapping table. This improves the SSD's I/O performance and lifespan by reducing the number of NAND writes [18]. Second, the garbage collection overhead can be reduced dramatically by placing data according to data hotness and I/O stream. On the other hand, a user-level library (e.g., libzbd) or a ZNS-aware file system (e.g., zonefs, F2FS, and Btrfs) is required to operate ZNS SSDs [9]. For example, RocksDB, a representative key-value store, can use ZNS SSDs through ZenFS, which is a file system plugin for RocksDB developed by Western Digital [19].

2.2. Power-Loss Protection

Most SSDs have a DRAM-based volatile buffer to improve I/O performance and extend the lifespan of the SSD. However, a volatile buffer does not guarantee the durability of the data during a sudden power-off [2,3]. Therefore, enterprise SSDs employ power-loss protection, which writes all buffered data to NAND flash memory by consuming the back-up power of the capacitors [20]. As shown in Figure 2, the power supply is switched from the external power source to the internal capacitor bank when a power-loss event is detected. Note that the capacitance required for PLP is proportional to the size of the volatile buffer [6,7,18].

Typically, SSDs use an internal buffer to store not only user data but also metadata for the FTL. However, a conventional block-interface SSD consumes most of the volatile buffer to store a page-level mapping table whose size is about 0.1% of the storage capacity. As a result, conventional SSDs use more than 97% of the DRAM buffer for the mapping table, while only a few megabytes can be used as a user data buffer [6]. Consequently, the capacitance required for PLP depends primarily on the size of the mapping table, not the size of the write buffer.
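As a rough check of the 0.1% figure, the following sketch computes the size of a page-level mapping table. It assumes one 4-byte entry per 4 KiB logical page, which is a common configuration but is not stated in this paper, and the 1 TiB capacity is only an illustrative value.

```python
# Back-of-the-envelope size of a page-level L2P mapping table.
# Assumptions (not from the paper): 4-byte entries, 4 KiB logical pages.
ENTRY_BYTES = 4
PAGE_BYTES = 4 * 1024


def mapping_table_bytes(capacity_bytes: int) -> int:
    """Return the DRAM needed to map every logical page of the device."""
    return (capacity_bytes // PAGE_BYTES) * ENTRY_BYTES


capacity = 1 << 40  # 1 TiB, illustrative
table = mapping_table_bytes(capacity)
ratio = table / capacity
print(f"table = {table / (1 << 30):.2f} GiB, ratio = {ratio:.4%}")
```

Under these assumptions the table occupies 1 GiB, or roughly 0.1% of the capacity, which is why the mapping table dominates the DRAM of a conventional SSD.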

In contrast, ZNS SSDs do not need capacitance to guarantee the durability of a mapping table because they do not use an internal mapping table. Instead, ZNS SSDs need a much larger write buffer to support many I/O streams. In multi-tenant systems, ZNS SSDs can provide performance isolation between different I/O streams and minimize garbage collection overhead by allowing the host to allocate separate zones to each I/O stream. However, because of the size difference between the program unit of NAND flash (typically, multiple NAND pages aggregated across several NAND planes to maximize internal parallelism) and the page size of the host (typically, 4 KB), host writes must be buffered before they are written to NAND. Therefore, each I/O stream needs its own buffer space for write buffering. Note that each ZNS SSD defines a maximum number of active zones, which determines the maximum number of I/O streams that can be used simultaneously. This maximum depends on the size of the write buffer and the back-up capacitance. In addition, as SSD capacity increases, the maximum number of active zones will increase, so the demand for write buffer space in ZNS SSDs will also increase in the future. However, because the back-up capacitance cannot increase indefinitely given the limited SSD-internal space and the cost, it is worth finding a way to reduce the capacitance while ensuring the durability of the write buffer.

2.3. RocksDB and ZenFS

RocksDB is an LSM tree-based key-value engine [12]. RocksDB uses an in-memory write buffer called the MemTable, which is implemented as a skip list. The write buffer improves performance by buffering I/O destined for storage. All writes are written to the MemTable in memory and to the WAL in storage. When the size of the MemTable reaches a threshold, it is written to storage in the format of a Sorted String Table (SST) file. Here, the WAL is created for data recovery in case of a sudden power-off, and when the SST file is safely written to the SSD, the WAL is deleted or transferred to other persistent storage.
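The write path above can be illustrated with a toy model. This is only a sketch of the general WAL idea: the class `ToyLSM` and its methods are hypothetical and are not the RocksDB API, and the MemTable limit of three entries is an arbitrary value.

```python
# Toy model of an LSM write path: every put goes to the WAL (storage)
# and the MemTable (memory); a full MemTable is flushed to an SST file.
# All class and method names are hypothetical, not the RocksDB API.

class ToyLSM:
    def __init__(self, memtable_limit: int = 3):
        self.memtable_limit = memtable_limit
        self.wal = []        # durable log of (key, value) records
        self.memtable = {}   # volatile, lost on power failure
        self.sst_files = []  # durable, each a sorted list of pairs

    def put(self, key, value):
        self.wal.append((key, value))    # log first (write-ahead)
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush_memtable()

    def _flush_memtable(self):
        self.sst_files.append(sorted(self.memtable.items()))
        self.memtable.clear()
        self.wal.clear()                 # WAL is obsolete once the SST is durable

    def crash(self):
        self.memtable = {}               # volatile state disappears

    def recover(self):
        for key, value in self.wal:      # replay the surviving log
            self.memtable[key] = value

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for sst in reversed(self.sst_files):  # newest file first
            for k, v in sst:
                if k == key:
                    return v
        return None


db = ToyLSM()
for i in range(4):
    db.put(f"k{i}", i)
db.crash()
db.recover()
```

In this model, the first three keys survive the crash in an SST file, and the fourth is restored by replaying the WAL, so only the WAL needs to be durable at the moment of power loss.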

ZenFS is an on-disk file system for zoned block devices that comes as a plugin to RocksDB. It operates in user space and takes over, on the host side, functions that the FTL performs in conventional SSDs, so that RocksDB can use ZNS SSDs [8]. ZenFS uses journal zones and data zones to manage the file system. The journal zone stores the data needed to maintain the ZenFS file system, such as the Super Block and Extent Map, and the data zone stores file contents.

3. Related Work

DuraSSD [18] proposed a battery-backed buffer cache to ensure the atomicity of writes to the SSD. Traditional database engines use the fsync() system call to achieve write durability, and SSD devices flush cached data to storage on this command. In this process, typical database engines stall while fsync() is being processed, which incurs a significant latency overhead. Thus, DuraSSD achieved a performance improvement by eliminating flush commands from the file system with a battery-backed durable cache.

Additionally, for fast writing, only the dirty data in the buffer are written. Since the buffer pool and in-memory data structures are small, all of these data are written to the dump area and the abnormal shutdown state is saved for recovery. Since the dump area must be written quickly in the event of a power loss, garbage collection is not performed on it, so it always remains available. When the system is rebooted after a sudden power-off, the recovery process proceeds while the capacitors are charging. If a power-loss situation is recognized by checking the abnormal shutdown state during the booting process, the recovery manager is called to recover the data stored in the dump area.

Viyojit [10] aims to decouple DRAM capacity from capacitor capacity by exploiting the skew in application working sets to reduce the required capacitance. To reduce the flush time, this study focused on writing only dirty pages in an actual power-loss situation. To support this, the production workloads of Microsoft's Azure blob storage, Cosmos, PageRank, and search index serving were analyzed. As a result, it was confirmed that only about 15% of the data of the entire file system were written within 1 h. The write-pattern analysis shows that either the ratio of writes was small but most of the writes touched unique pages, or the ratio of writes was high and most of the unique pages were written over the overall workload. The study addresses this issue by limiting the number of dirty pages in the DRAM buffer of the SSD. Here, the dirty pages are managed using a list sorted by update order, similar to LRU. When the number of dirty pages reaches a threshold because of a high number of writes, the oldest dirty pages in the buffer are written to storage and removed from the dirty page list.
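The dirty-page bound described above can be sketched as follows. This is a simplified illustration of the idea rather than the design in [10]: the class name, the bound of two pages, and the use of a dictionary as stand-in storage are all assumptions.

```python
from collections import OrderedDict


class BoundedDirtyBuffer:
    """Keeps at most `max_dirty` dirty pages; the oldest-updated page is
    written back when the bound is exceeded. Names are hypothetical."""

    def __init__(self, max_dirty: int):
        self.max_dirty = max_dirty
        self.dirty = OrderedDict()   # page number -> data, oldest update first
        self.storage = {}            # stands in for persistent storage

    def write(self, page: int, data: bytes):
        self.dirty[page] = data
        self.dirty.move_to_end(page)           # most recently updated at the end
        while len(self.dirty) > self.max_dirty:
            old_page, old_data = self.dirty.popitem(last=False)
            self.storage[old_page] = old_data  # write back the oldest dirty page

    def power_loss_flush(self) -> int:
        """Flush what is still dirty; returns the number of pages written."""
        n = len(self.dirty)
        self.storage.update(self.dirty)
        self.dirty.clear()
        return n


buf = BoundedDirtyBuffer(max_dirty=2)
for page in (1, 2, 1, 3):
    buf.write(page, f"v{page}".encode())
flushed = buf.power_loss_flush()
```

Because the number of dirty pages never exceeds the bound, the work to be done on back-up power is fixed in advance, at the cost of extra write-backs during normal operation.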

SpartanSSD [6] is one of the most recent studies on reducing the capacitance for PLP in SSDs. In that paper, the authors proposed a technique that guarantees durability only for the information necessary to recover the mapping table during sudden power-off recovery (SPOR), instead of the entire mapping table. According to the paper, the technique, called elastic journaling, was inspired by the journaling and write-ahead logging used in file systems and databases. SpartanSSD writes an update log of the mapping table in the journal area, which is maintained in a battery-backed part of the internal DRAM buffer to ensure durability. When the journal area is full, all the mapping table entries in the volatile memory are flushed to NAND flash memory and the journal area is reclaimed. Because SpartanSSD needs to guarantee durability only for the mapping table journal, not the entire mapping table, the required capacitance for PLP can be reduced by more than 97%. However, ZNS SSDs cannot benefit from the capacitance reduction of elastic journaling because most of the internal DRAM buffer is used for user data. Therefore, in this paper, we propose a method to reduce the capacitance required for PLP while maintaining data integrity.

4. Selective Power-Loss Protection

4.1. Motivation

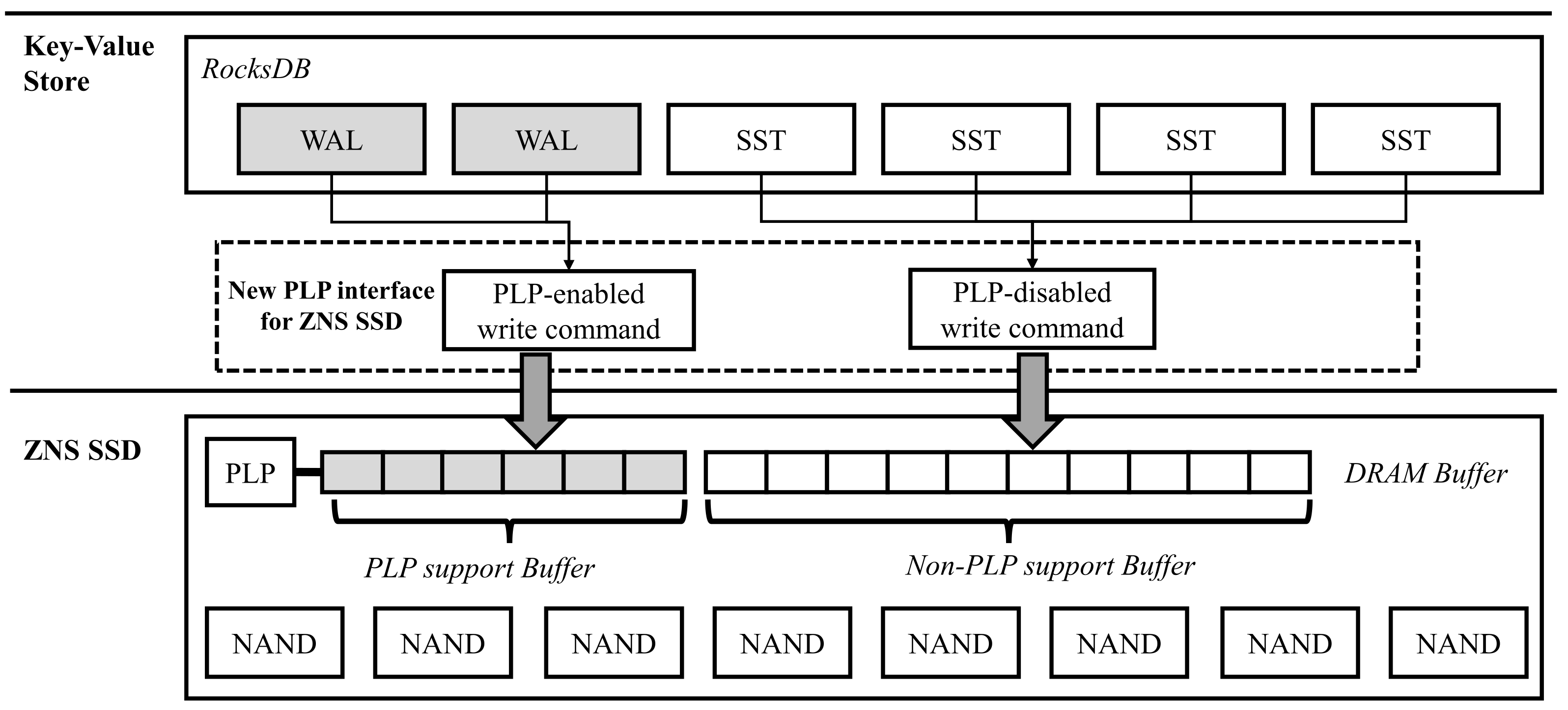

As mentioned above, conventional SSDs allocate most of their DRAM buffer to storing the mapping table. Therefore, previous studies focused on minimizing the capacitance required for maintaining the durability of the mapping table. However, in ZNS SSDs, the total capacitance required for PLP depends on the amount of user data in the volatile memory buffer, because ZNS SSDs do not use an internal mapping table. We are motivated by the fact that most file systems and databases guarantee data integrity through their own recovery schemes, such as WAL and journaling. This means that there is no need to write all of the user data in the volatile memory buffer to NAND flash memory in the event of a sudden power-off. Even if the user data in the volatile memory buffer are lost during a sudden power-off, they can be recovered by using the WAL or journal during SPOR. Therefore, in this section, we present a selective power-loss-protection method that guarantees durability only for the WAL or journal in the event of a sudden power-off. As shown in Figure 3, the proposed method provides a new PLP interface and a PLP-aware buffer management policy for ZNS SSDs. Because the WAL or journal is much smaller than the other user data, the capacitance required to persist it during a sudden power-off can be significantly reduced in comparison to the previous full-protection PLP.

4.2. Selective Power-Loss-Protection (Selective-PLP) Interface

To ensure the durability of the WAL or journal during a sudden power-off, they must be distinguished from other user data. Therefore, we present a new write interface that transfers a PLP flag with a write command. Here, the PLP flag indicates whether the data to be written need to be durable during a sudden power-off. The latest NVMe specification supports the PMR (Persistent Memory Region), persistent memory located on the NVMe SSD. According to the specification, the PMR feature enables the host to access persistent memory such as PRAM (Phase-change Random Access Memory), MRAM (Magnetic RAM), or battery-backed DRAM installed in the NVMe SSD via memory-mapped I/O. Therefore, NVMe SSDs could selectively guarantee the durability of user data by writing the journal or WAL to the PMR. However, because existing databases and file systems usually record the WAL or journal through file I/O, replacing these with memory reads and writes to the PMR might require extensive code modifications. Moreover, since the relationship between the PMR and zones is not yet clearly defined in the NVMe specification, an implementation using the PMR may be difficult.

Therefore, to achieve an intuitive and simple implementation, we used an unused field of the ZNS write command to transmit the PLP flag to the ZNS SSD. Alternatively, a vendor-specific NVMe admin command could be defined to request durability for specific write requests. Because the NVMe standard allows vendor-specific admin commands, this may be a more practical way to apply the proposed Selective-PLP to real products. However, when an additional admin command is used, its overhead should be evaluated. Therefore, in this paper, we focused on verifying our idea with a proof of concept implemented by using an unused field of the existing write command.

We implemented a new write interface, PLP_write(), which the host application uses instead of pwrite() to pass the PLP flag with the ZNS write command. As shown in Figure 4, PLP_write() uses ioctl() to deliver the PLP flag via the existing NVMe ZNS write command [17]. Command Dword 3 (CDW3), which is not used in the existing NVMe write command, is used to represent the PLP flag. The durability of the write request is guaranteed if the flag is 1; otherwise, it is not guaranteed. When a ZNS SSD receives a write request, it checks CDW3 to determine whether durability should be guaranteed. That is, if the PLP flag is 1, the user data are stored in the PLP-supported durable buffer. The proposed interface is implemented on the emulated ZNS SSD supported by FEMU [21], an NVMe SSD emulator.

Figure 4 describes how the PLP_write() is handled through ZNS emulated by FEMU.
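The command layout used by PLP_write() can be sketched as follows. This is a minimal illustration that only packs and inspects a 64-byte NVMe submission entry; it does not issue ioctl() to a device, and the helper names `build_plp_write` and `plp_requested` are hypothetical. The field offsets follow the common NVMe command format (opcode in CDW0, namespace ID in CDW1, starting LBA in CDW10 and CDW11, zero-based block count in CDW12).

```python
import struct

NVME_CMD_WRITE = 0x01   # NVM command set write opcode
PLP_FLAG = 1            # value placed in CDW3 to request durability


def build_plp_write(nsid: int, slba: int, nblocks: int, plp: bool,
                    data_ptr: int = 0) -> bytes:
    """Pack a 64-byte NVMe submission entry; CDW3 carries the PLP flag."""
    cdw0 = NVME_CMD_WRITE            # opcode in bits 7:0, command id left as 0
    cdw2 = 0
    cdw3 = PLP_FLAG if plp else 0
    cdw10 = slba & 0xFFFFFFFF        # starting LBA, low 32 bits
    cdw11 = slba >> 32               # starting LBA, high 32 bits
    cdw12 = (nblocks - 1) & 0xFFFF   # number of logical blocks, zero-based
    return struct.pack("<4I3Q6I",
                       cdw0, nsid, cdw2, cdw3,
                       0,             # metadata pointer
                       data_ptr, 0,   # PRP1, PRP2
                       cdw10, cdw11, cdw12, 0, 0, 0)


def plp_requested(cmd: bytes) -> bool:
    """Device-side check: read CDW3 (bytes 12..15) from the command."""
    (cdw3,) = struct.unpack_from("<I", cmd, 12)
    return cdw3 == PLP_FLAG


wal_cmd = build_plp_write(nsid=1, slba=0x1000, nblocks=8, plp=True)
sst_cmd = build_plp_write(nsid=1, slba=0x2000, nblocks=8, plp=False)
```

On the device side, a check such as `plp_requested()` would decide whether the payload is placed in the PLP buffer or the non-PLP buffer.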

4.3. Buffer Management Scheme for Selective-PLP

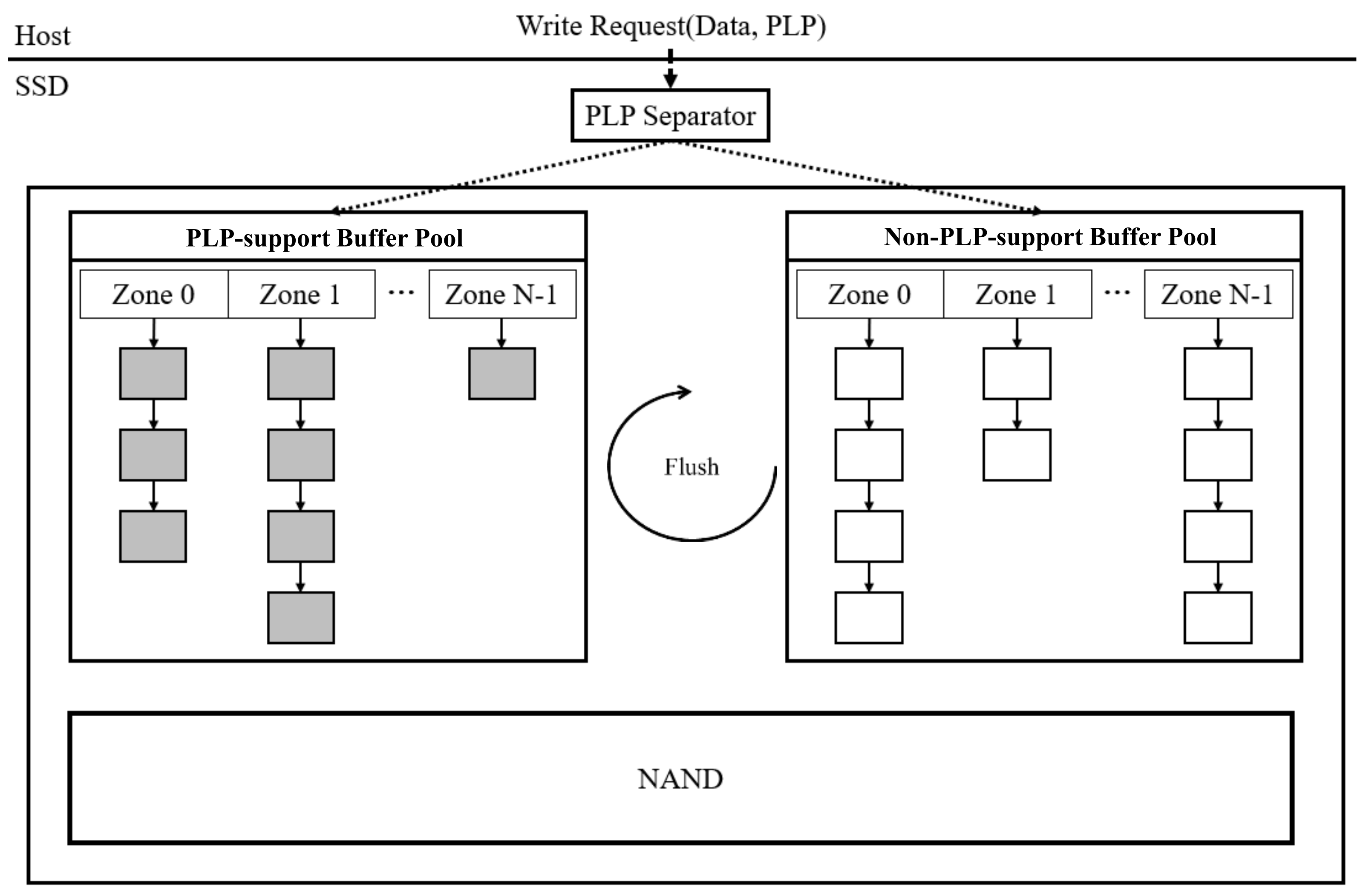

In this section, we present the buffer management scheme of the selective-PLP. As shown in Figure 5, to support the selective-PLP, the DRAM buffer is divided into a PLP-supported buffer region (PLP buffer) and a non-PLP-supported buffer region (non-PLP buffer). Moreover, because the host determines data placement in ZNS SSDs, the zone associated with each piece of buffered data has already been determined. Therefore, the buffer must be managed by zone. To manage buffer space, the buffer hit table and the buffer list are employed (Figure 6). First, the buffer hit table maintains a reference bit for each sector, because an LRU approximation algorithm based on a reference bit is used for buffer space management. When a read command is received from the host, the ZNS SSD first looks up the requested sector number in the buffer hit table. Additionally, the buffer units associated with each zone are maintained in a separate list. Second, the buffer list is a data structure used to maintain the buffers associated with each zone. As mentioned above, because the buffered data must be written sequentially to the associated zone, it is efficient to maintain buffers per zone.

In addition, we modified the handling of the flush() command issued from the host for the PLP buffer, based on the idea of DuraSSD [18], which improved performance by removing flushes of the battery-backed durable cache. Essentially, the host calls the flush() command to ensure the durability of all the data in the volatile memory buffer. However, in the case of the PLP buffer, durability is already guaranteed by the back-up capacitors. Therefore, the number of NAND writes for the PLP buffer can be reduced by ignoring the flush() command for the PLP buffer. Moreover, because new incoming requests are suspended until a flush operation finishes in order to preserve write ordering, flushing significantly decreases I/O performance. Therefore, ignoring the flush() command for the PLP buffer significantly improves I/O performance. In addition, if the usage of a buffer exceeds 70% of its total capacity, all the buffered data are written to NAND flash memory. Note that this buffer usage threshold is a configurable parameter.

The buffer flush is implemented in two ways. The first writes the data in the buffer to NAND flash memory, and the second discards the data without writing them. The former is used when the buffer usage exceeds a predetermined threshold or when a flush() command is issued by the host. The latter is used for zones that have already been reset. A reset means that all data contained in the zone are invalid because of deletion or out-of-place updates. Therefore, we can omit writing the invalid data of a reset zone to NAND flash memory.
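The flush rules described in this section can be summarized in a short sketch. It is a simplified model under stated assumptions: the class `ZoneBuffer` and its methods are hypothetical, capacity is counted in sector-sized buffers, and a NAND write is modeled as a counter increment.

```python
FLUSH_THRESHOLD = 0.70   # configurable, as in the text


class ZoneBuffer:
    """One buffer region (PLP or non-PLP) with per-zone lists of sectors."""

    def __init__(self, capacity: int, durable: bool):
        self.capacity = capacity      # number of sector-sized buffers
        self.durable = durable        # True for the PLP buffer
        self.zones = {}               # zone id -> list of buffered sectors
        self.nand_writes = 0

    def used(self) -> int:
        return sum(len(s) for s in self.zones.values())

    def write(self, zone: int, sector: bytes):
        self.zones.setdefault(zone, []).append(sector)
        if self.used() > self.capacity * FLUSH_THRESHOLD:
            self.flush_to_nand()

    def flush_to_nand(self):
        self.nand_writes += self.used()   # every buffered sector is programmed
        self.zones.clear()

    def host_flush(self):
        if self.durable:
            return                        # capacitors already guarantee durability
        self.flush_to_nand()

    def zone_reset(self, zone: int):
        self.zones.pop(zone, None)        # data are invalid: discard without writing


plp = ZoneBuffer(capacity=10, durable=True)
normal = ZoneBuffer(capacity=10, durable=False)
for buf in (plp, normal):
    for i in range(4):
        buf.write(zone=i % 2, sector=b"x")
    buf.host_flush()
normal.write(zone=5, sector=b"y")
normal.zone_reset(5)
```

In this example the host flush costs four NAND writes for the non-PLP buffer and none for the PLP buffer, and the sector of the reset zone is dropped without being written.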

The overall buffer management scheme consists of two steps. First, a buffer pool is created during the ZNS SSD initialization process. The buffer pool is composed of sector-sized buffers, and each buffer has its own metadata structure, a buffer header. Using a pre-allocated buffer pool reduces the overhead of buffer allocation because frequent memory allocation and deallocation operations are avoided. Second, buffers are allocated and returned as follows. When a buffer is requested for a new write request, a free buffer is allocated from the buffer pool. The data are then written to the allocated buffer, and the buffer is inserted into the PLP or the non-PLP buffer list according to the PLP flag of the write request. When a buffer is no longer needed, it is removed from the buffer list and returned to the buffer pool.
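The allocation and return process can be sketched as follows. The names `BufferPool`, `write`, and `release_zone` are hypothetical, the 512-byte sector size is an assumption, and the buffer header is omitted for brevity.

```python
from collections import deque

SECTOR_SIZE = 512  # assumed sector size in bytes


class BufferPool:
    """Pre-allocated sector buffers handed out and returned without
    per-request memory allocation."""

    def __init__(self, count: int):
        self.free = deque(bytearray(SECTOR_SIZE) for _ in range(count))
        self.plp_list = {}       # zone id -> buffers in the PLP region
        self.non_plp_list = {}   # zone id -> buffers in the non-PLP region

    def write(self, zone: int, data: bytes, plp: bool) -> bytearray:
        if len(data) > SECTOR_SIZE:
            raise ValueError("payload larger than one sector")
        if not self.free:
            raise MemoryError("buffer pool exhausted; flush first")
        buf = self.free.popleft()            # allocate from the pool
        buf[:len(data)] = data               # copy payload into the buffer
        target = self.plp_list if plp else self.non_plp_list
        target.setdefault(zone, []).append(buf)
        return buf

    def release_zone(self, zone: int, plp: bool) -> int:
        """Return a zone's buffers to the pool, e.g., after they are flushed."""
        target = self.plp_list if plp else self.non_plp_list
        bufs = target.pop(zone, [])
        self.free.extend(bufs)
        return len(bufs)


pool = BufferPool(4)
pool.write(0, b"wal", plp=True)
pool.write(3, b"sst", plp=False)
pool.write(3, b"sst2", plp=False)
free_before = len(pool.free)
released = pool.release_zone(3, plp=False)
free_after = len(pool.free)
```

Keeping the lists keyed by zone matches the requirement that buffered data be written sequentially to the zone chosen by the host.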

4.4. ZenFS Modification for Selective-PLP

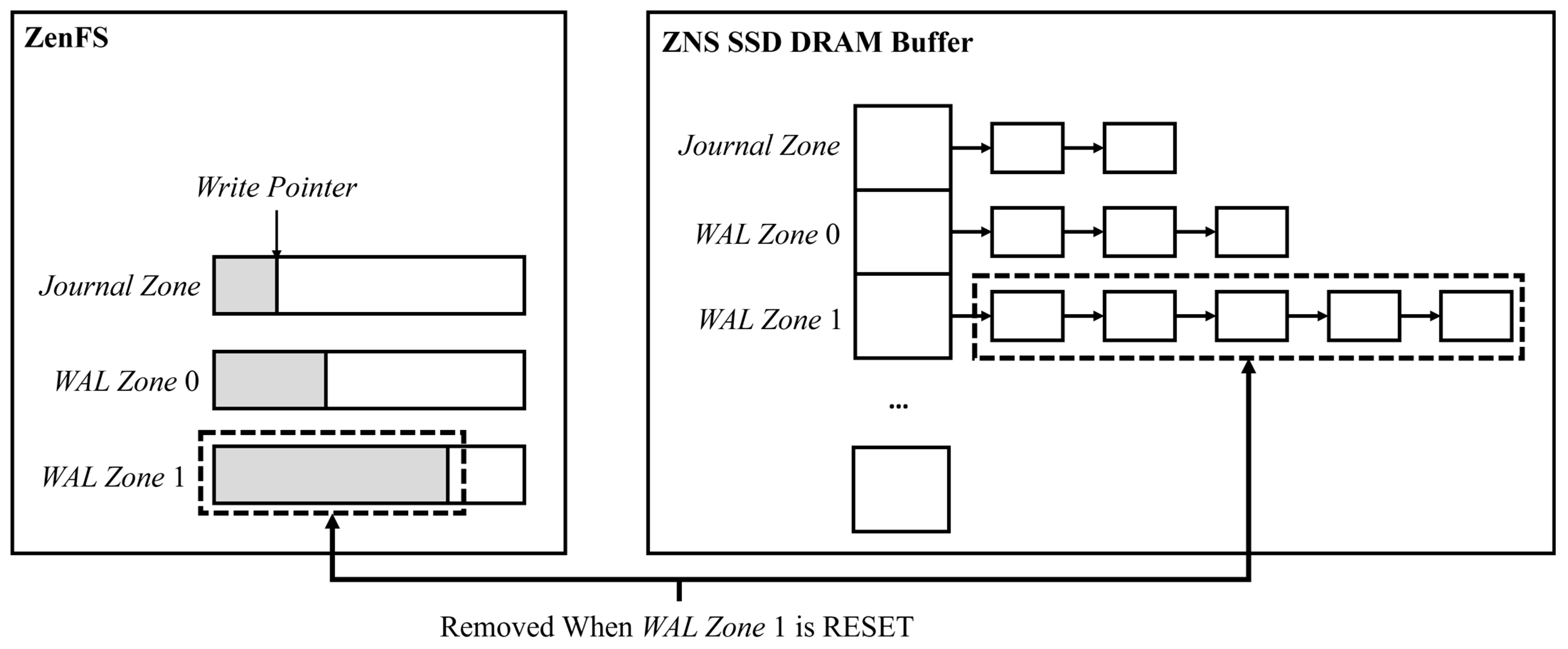

To apply selective-PLP to ZenFS of RocksDB, we modified ZenFS as follows. First, zones are divided into two categories: durable zones and normal zones. Here, a durable zone is a zone whose data are guaranteed to be durable by using the PLP buffer. As shown in Figure 7, there are two kinds of durable zone: the journal zone and the WAL zone. A journal zone is used for the metadata of ZenFS, such as the Super Block, Zone Extent Mapping Table, and Extent Management. A WAL zone is used for the write-ahead log of RocksDB. On the other hand, data written to normal zones are buffered in the non-PLP buffer. SST files of RocksDB, which contain key-value data, are buffered in the non-PLP buffer. Therefore, in a sudden power-off, the durability of key-value data in the non-PLP buffer is not guaranteed. However, because the durability of the WAL in the PLP buffer is guaranteed, RocksDB can recover the lost key-value data during the sudden power-off recovery (SPOR) process.

The existing ZenFS stores files with a similar life cycle in the same zone. Therefore, to separate durable zones from normal zones, we added a criterion that identifies whether a file is a WAL file or ZenFS metadata. As a result, WAL files and ZenFS metadata are classified and allocated to durable zones. Moreover, we confirmed that ZenFS uses two zones for the WAL. Additionally, because the WAL is deleted after the key-value data have been written successfully, NAND writes for the WAL can be eliminated by using a PLP buffer with a size of two zones or more. In our experimental environment, since the size of a zone is 16 MB, a 32 MB PLP buffer is enough to store the WAL of RocksDB. Since the two WAL zones are written alternately, the two zones never run out of free space at the same time. As a result, in our experiments, a PLP buffer of 32 MB is sufficient to ensure durability for the WAL as well as for the ZenFS metadata.
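The classification rule and the buffer sizing above can be written down as a short sketch. The `FileKind` labels and function names are hypothetical and are not ZenFS identifiers; the zone size and zone count are the values reported for the experimental environment.

```python
from enum import Enum


class FileKind(Enum):       # hypothetical labels, not ZenFS identifiers
    WAL = "wal"
    ZENFS_METADATA = "metadata"
    SST = "sst"


ZONE_SIZE_MB = 16           # zone size in the experimental environment
WAL_ZONES = 2               # ZenFS was observed to use two zones for the WAL


def is_durable(kind: FileKind) -> bool:
    """WAL files and ZenFS metadata go to durable zones (PLP buffer)."""
    return kind in (FileKind.WAL, FileKind.ZENFS_METADATA)


def wal_plp_buffer_mb() -> int:
    """PLP buffer size that can hold both WAL zones entirely."""
    return WAL_ZONES * ZONE_SIZE_MB


durable_kinds = [k.value for k in FileKind if is_durable(k)]
print(durable_kinds, wal_plp_buffer_mb())
```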

4.5. Balanced Flush to Minimize Flush Latency

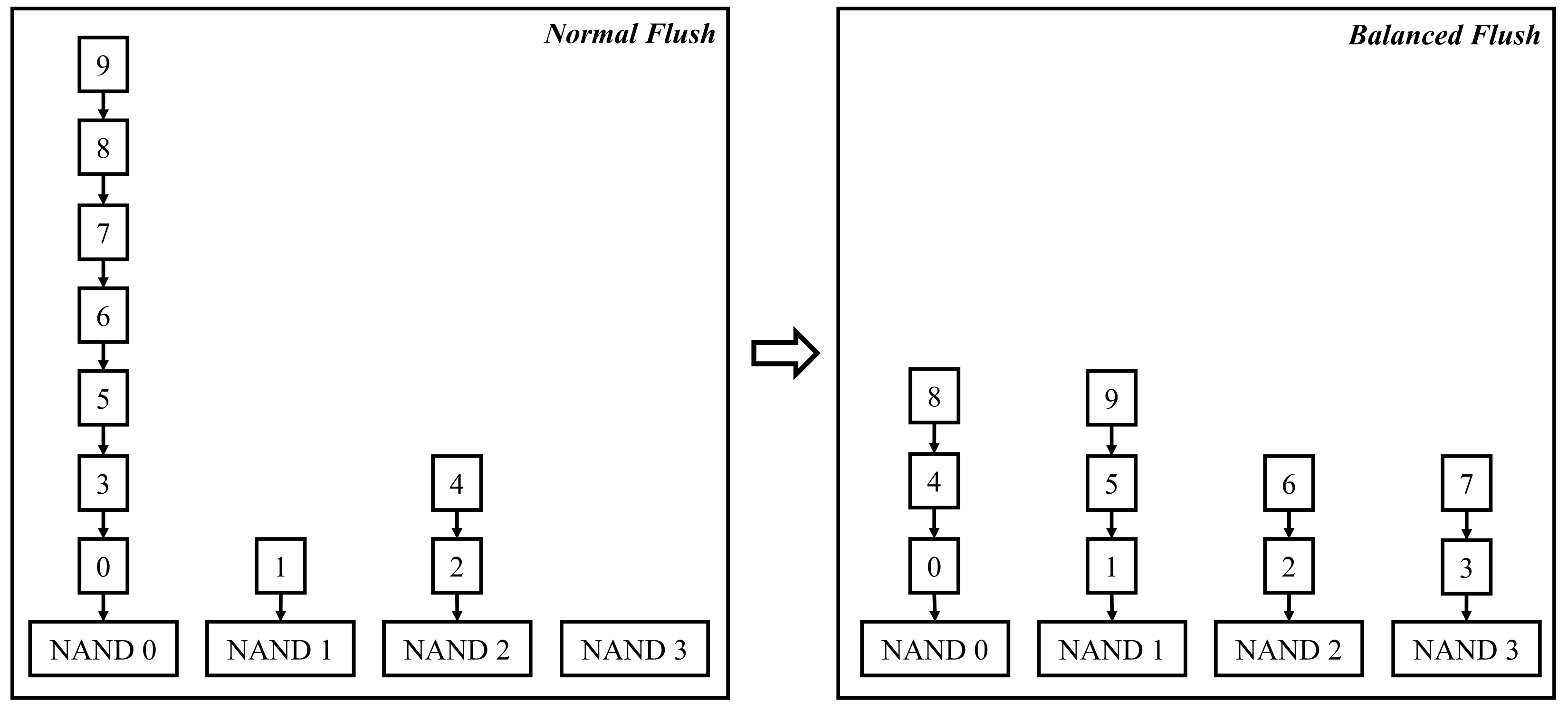

Because of the limited capacity of the capacitors, the buffered data in the PLP buffer need to be flushed quickly to NAND flash in a sudden power-loss situation. However, as shown in Figure 8, the time taken for flushing increases if the physical addresses of the buffered data are concentrated in a specific NAND plane. To solve this problem, we propose a balanced flush method that minimizes the time required for flushing the PLP buffer to NAND flash.

As described in Figure 8, the balanced flush temporarily writes the buffered data to multiple NAND planes to maximize parallelism. The temporarily recorded data are copied to their original location when power is restored. The original address of the buffered data is stored in the OOB (Out-Of-Band) area of the NAND page. As described in Figure 9, each physical page of NAND flash memory has an OOB area [22]. The OOB area can be used to store additional information such as bad block marking and ECC (Error Correction Codes), and it is mainly used for error checking or recovery [23]. Since the OOB area has sufficient space (typically, 1/32 of the data area of a NAND page) and incurs no additional write latency, it is suitable for storing the original address of buffered data. In the event of a sudden power-off, the data in the PLP buffer are flushed to the NAND chip that currently shows the lowest latency, not to the original location specified by the host. The original address is then stored in the OOB area for later restoration. During SPOR, the OOB area is checked to determine whether the page was written by the balanced flush, and if so, the page is restored to its original location by using the address stored in the OOB area.
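The difference between a normal flush and the balanced flush can be illustrated with a simple queueing sketch. This is not the FEMU latency model: it assumes a fixed, illustrative program latency per page, treats planes as independent servers, and represents the OOB record as a tuple kept next to each temporarily written page. All function names are hypothetical.

```python
PROGRAM_LATENCY_US = 200   # assumed per-page program time, illustrative only


def normal_flush(pages, num_planes):
    """Each page goes to its host-designated plane; planes work in parallel."""
    queue = [0] * num_planes
    for original_plane, _addr in pages:
        queue[original_plane] += 1
    return max(queue) * PROGRAM_LATENCY_US


def balanced_flush(pages, num_planes):
    """Each page goes to the least-loaded plane; the original address is
    kept alongside the data, standing in for the OOB area."""
    queue = [0] * num_planes
    temp_store = []                       # (temporary plane, OOB original address)
    for original_plane, addr in pages:
        target = queue.index(min(queue))  # plane that will finish earliest
        queue[target] += 1
        temp_store.append((target, (original_plane, addr)))
    return max(queue) * PROGRAM_LATENCY_US, temp_store


def recover(temp_store):
    """SPOR: read the OOB record of each temporary page and copy it back."""
    return [oob for _plane, oob in temp_store]


# 8 buffered pages that all belong to plane 0, on a device with 4 planes.
pages = [(0, addr) for addr in range(8)]
t_normal = normal_flush(pages, 4)
t_balanced, temp = balanced_flush(pages, 4)
restored = recover(temp)
```

In this worst-case example, spreading the eight pages over four planes cuts the modeled flush time to a quarter, and the OOB records are sufficient to map every page back to its original address.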

Since FEMU [21], the ZNS SSD emulator, keeps its data in memory, all data are lost when the emulator itself is shut down. Therefore, the proposed balanced flush method was verified using randomly generated sudden power-off events. First, when a sudden power-off event occurs at an arbitrary point in time, locks are acquired for all buffer units. After that, all read commands are ignored and only write commands for the data in the PLP buffer are processed. Two copies of the PLP buffer are made, one for the normal flush and one for the balanced flush. Moreover, since the two measurements must not interfere with each other through the delay time model of FEMU, the delay time model is also copied for calculating the flush latency. In the verification, the data in the copied buffer are applied to the copied latency model, which returns the oldest timeline of each chip. The difference between this value and the oldest timeline recorded just before copying is taken as the time required for flushing.

6. Conclusions

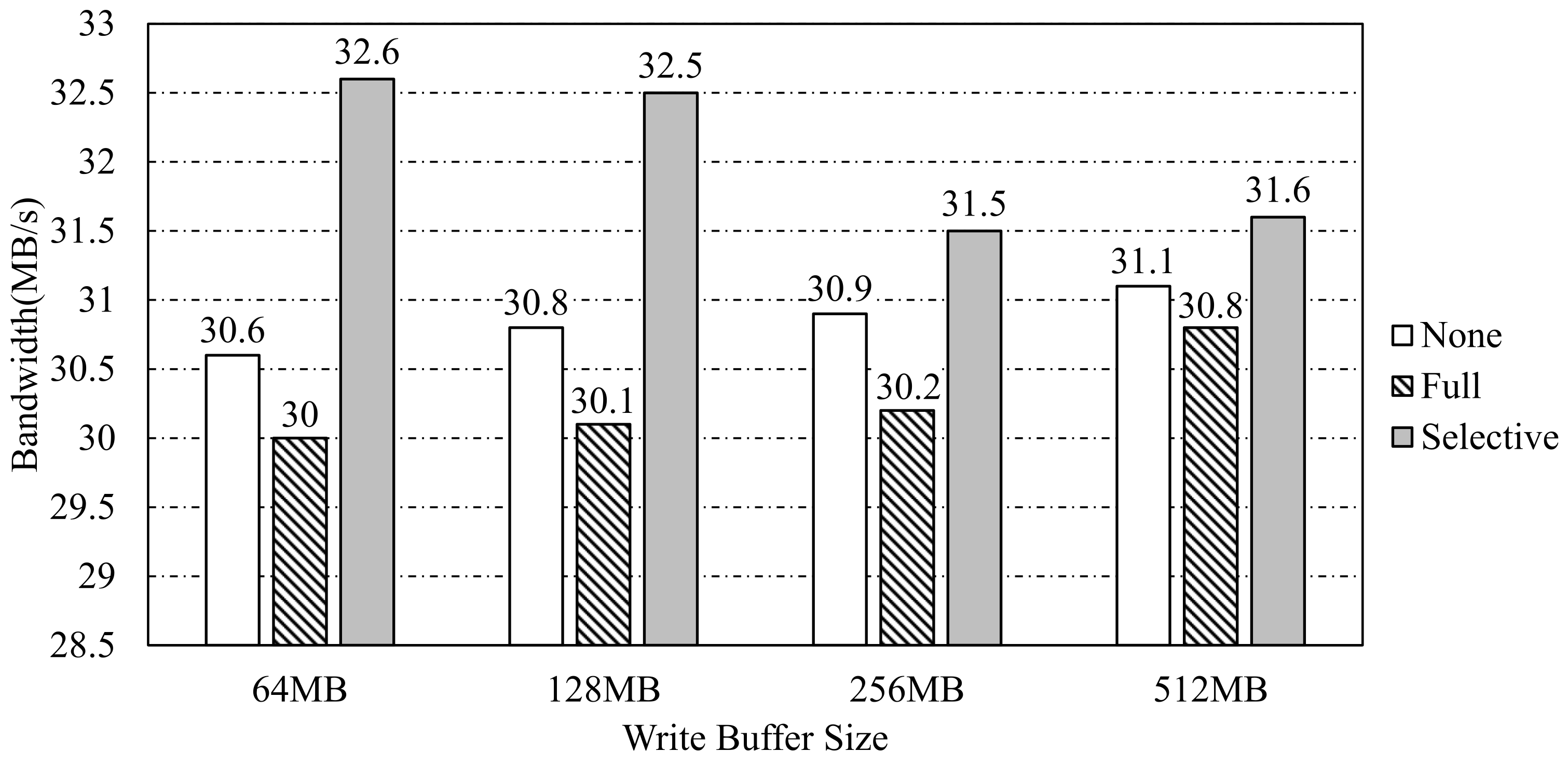

Most SSDs have a DRAM-based volatile buffer to improve I/O performance and extend the lifespan of the SSD by absorbing write requests. However, because a volatile buffer does not guarantee the durability of the buffered data during a sudden power-off, enterprise SSDs employ power-loss-protection logic that uses internal capacitors. As the capacity and parallelism of SSDs increase, a larger write buffer is required. In particular, emerging ZNS SSDs require a much larger write buffer than legacy block-interface SSDs to support many I/O streams in multi-tenant systems, because each stream needs its own buffer space for write buffering. However, the capacitance required to ensure the durability of the write buffer cannot be increased indefinitely because of the limited internal space and the cost. In this paper, we proposed a selective power-loss-protection method for ZNS SSDs to reduce the capacitance required for PLP. By using a new write interface, PLP_write(), which delivers a PLP flag to the ZNS SSD, the ZNS SSD can ensure the durability of the WAL or journal. Because file systems and databases have their own recovery mechanisms using the WAL or journal, the Selective-PLP achieves the same level of reliability while dramatically reducing the capacitance for PLP. Moreover, ZenFS of RocksDB was modified to guarantee durability only for the WAL files of RocksDB by using PLP_write(). According to our evaluation results, the performance of RocksDB increased by an average of 15% compared to None-PLP because the flush() command is ignored for the PLP buffer. In addition, the Selective-PLP achieves a performance improvement comparable to Full-PLP with only 6–50% of the capacitance of Full-PLP. These experimental results indicate that the same level of reliability and performance improvement as Full-PLP can be achieved with much less capacitance by selectively supporting PLP only for data essential for recovery, such as the WAL or journal. Moreover, the balanced flush decreased the time taken for PLP by about 96% on average by distributing write requests across multiple NAND chips.