Abstract

Hand gesture recognition is an area of study that attempts to identify human gestures through mathematical algorithms. It can be used in many fields, such as communication for deaf-mute people, human–computer interaction, intelligent driving, and virtual reality. However, changes in scale and angle, as well as complex skin-like backgrounds, make gesture recognition quite challenging. In this paper, we propose a robust approach for recognizing multi-scale, multi-angle hand gestures against complex backgrounds. First, hand gestures are segmented from complex backgrounds using a single Gaussian model (SGM) and the K-means algorithm. Then, the histogram of oriented gradients (HOG) feature and an improved 9-uniform-pattern local binary pattern (9ULBP) feature are fused into a HOG-9ULBP feature, which is invariant to scale and rotation and enables accurate feature extraction. Finally, a support vector machine (SVM) is used to classify the hand gestures. Experimental results show that the proposed method achieves the highest accuracies among the compared methods: 99.01%, 97.50%, and 98.72% on the self-collected dataset, the NUS dataset, and the MU HandImages ASL dataset, respectively.

1. Introduction

Hand gesture-based human–computer interaction technologies are widely used in driving assistance, sports activity assistance, underwater rescue, firefighting assistance, and other applications [1,2]. According to the World Health Organization, millions of people around the world suffer from hearing impairment [3]. Most of them can only communicate with others through sign language or gestures [4]. Gesture-based human–computer interaction can therefore greatly improve the quality of life of people with hearing impairment [5,6]. Although much progress has been made in gesture recognition, changes in scale, angle, and position, as well as complex backgrounds, still degrade the performance of recognition systems [7,8,9].

A general gesture recognition pipeline consists of pre-processing, feature extraction, and classification. Skin color segmentation is a critical pre-processing step that strongly affects feature extraction and gesture recognition. However, it is difficult to segment gestures from complex backgrounds, especially under skin-like interference [10,11,12]. For the feature extraction of multi-scale multi-angle (MSMA) gestures, two challenges need to be addressed: (I) how to extract the most discriminative features from the gesture images; and (II) how to make these features robust to changes in scale and rotation.

The features widely used in gesture recognition include Hu moments [13,14], Krawtchouk moments [15,16], the histogram of oriented gradients (HOG) [17], and local binary patterns (LBP) [18,19]. Hu moments are image descriptors that characterize the shape of an object in an image. Krawtchouk moments are formed using Krawtchouk polynomials as the basis functions; they have been found to be robust to viewpoint changes and to achieve good recognition with a small number of training samples. HOG effectively captures edge and contour information [20,21]. LBP effectively describes texture characteristics [22,23], and its improved variants can be made rotation invariant [24]. Because many images contain rich contour and texture information, some researchers have combined HOG and LBP to increase recognition precision in tasks such as building detection [25], brain tumor detection [26], gesture recognition on Android devices [27], and pedestrian detection [28].

To increase the scale and/or rotation invariance of recognition systems, ref. [15] used rotation normalization to align the gestures. Ref. [29] put forward a contour direction-based normalization method to increase the recognition rate of multi-scale gestures. Ref. [30] proposed a radiant projection transformation model to identify MSMA static gestures; however, this approach relies on contour information and may misclassify gestures whose boundary contours are similar. Ref. [31] proposed a descriptor incorporating three types of multi-scale parameters to obtain a discriminative representation of the hand shape. Ref. [32] put forward an algorithm that detects the state of the fingers from the parallel feature of the finger contours; this approach is rotation invariant but sensitive to modeling accuracy.

Hence, a robust hand gesture recognition method is still needed. In this paper, we propose an improved 9-uniform-pattern LBP (9ULBP) feature and combine it with the HOG feature to extract features of MSMA hand gestures, and then use a support vector machine (SVM) to classify the features. To verify the effectiveness of the proposed method, we construct a dataset of 20,000 MSMA hand gesture images against different backgrounds using a Kinect sensor. Experiments are then carried out on the self-collected dataset, the NUS dataset, and the MU HandImages ASL dataset, and the results on all three datasets verify the effectiveness and superiority of our method. The main contributions of this paper are summarized as follows:

- We use a single Gaussian model (SGM) and the K-means algorithm to segment hand gestures against complex backgrounds. The SGM method can segment hand gestures from a general non-skin background. However, against a skin-like background, it cannot separate the skin color pixels from the skin-like pixels. Noting that an image segmented by the SGM contains three types of pixels, i.e., black background pixels, skin-like pixels, and skin color pixels, we further use the K-means algorithm to cluster these three kinds of pixels, which separates the skin-like pixels from the skin color pixels and thus segments hand gestures under skin-like interference.

- We propose an improved 9ULBP descriptor and combine it with the HOG feature for feature extraction of MSMA hand gestures. The HOG feature captures contour information and provides scale invariance, while the proposed 9ULBP descriptor captures texture information effectively and is rotation invariant. Hence, the combined HOG-9ULBP feature is invariant to both scale and rotation and carries rich contour and texture information. SVM is then used to classify the features.

2. Methods

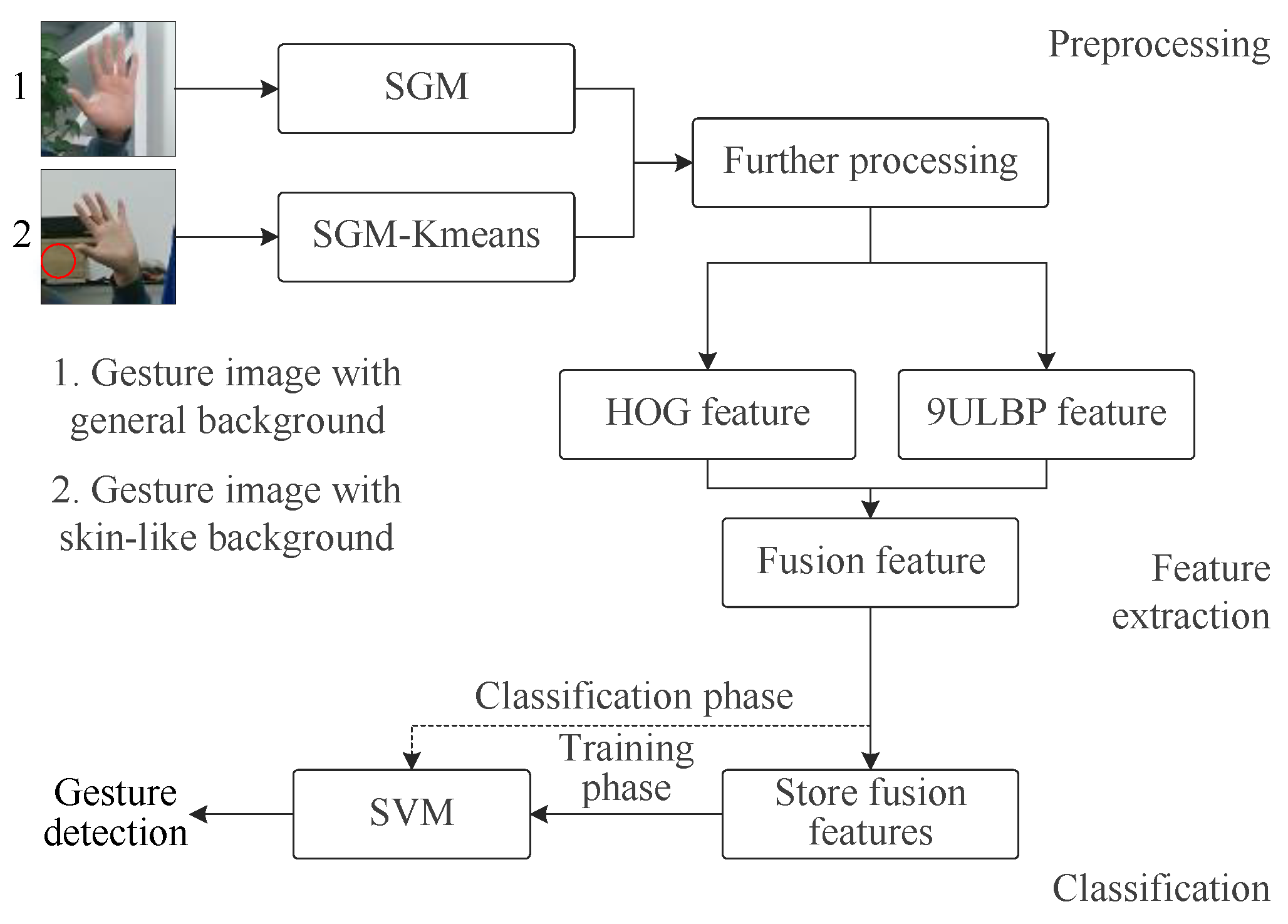

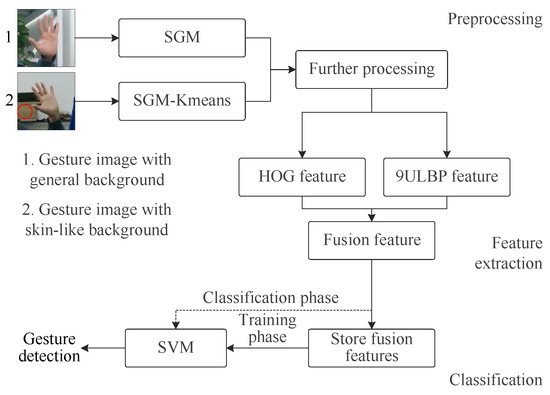

The proposed hand gesture recognition method consists of three stages: hand gesture segmentation against complex backgrounds, feature extraction combining the HOG feature with an improved 9ULBP descriptor, and gesture classification via SVM. The framework of the proposed recognition method is shown in Figure 1.

Figure 1.

The framework of the proposed gesture recognition method.

2.1. Hand Gesture Segmentation

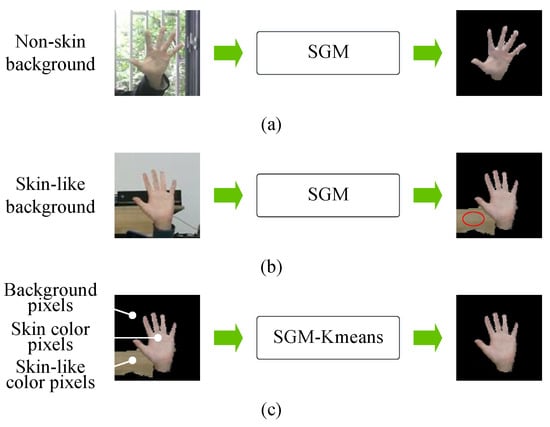

In this subsection, we divide backgrounds into two types: skin-like backgrounds and non-skin backgrounds. We use the SGM to segment hand gestures against non-skin backgrounds and the SGM-Kmeans method to segment hand gestures against skin-like backgrounds.

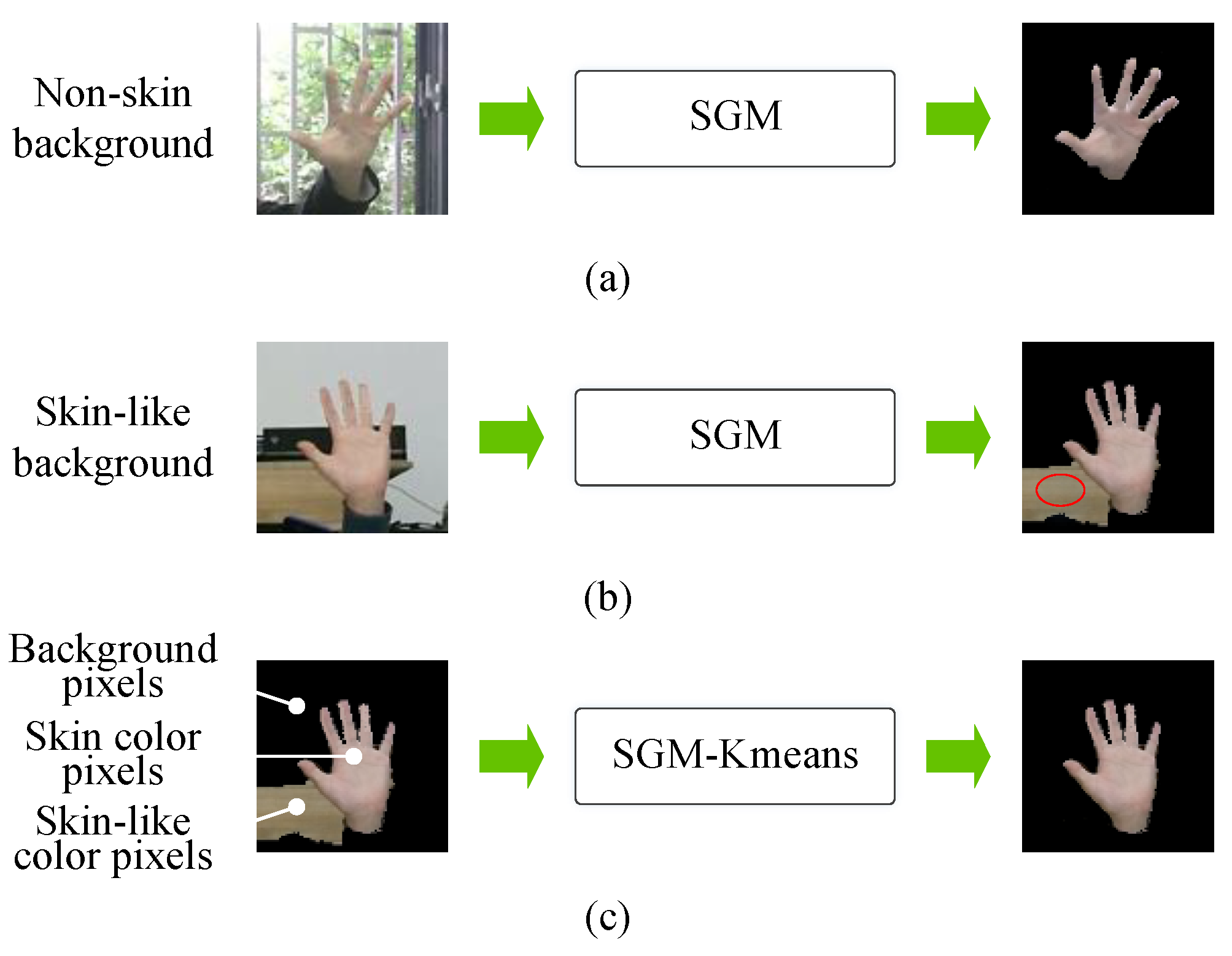

Skin color is one of the most prominent surface features of the human body, and skin color detection can be applied to gesture segmentation [33,34]. As shown in Figure 2, the SGM can effectively segment gestures from non-skin backgrounds but cannot eliminate the interference of skin-like backgrounds. Observing the images after SGM segmentation in Figure 2b, we find three kinds of pixels, i.e., skin color pixels, skin-like background pixels, and black background pixels. To segment the skin color hand gesture from the skin-like background, we need to cluster these three kinds of pixels and eliminate the skin-like interference. Experiments show that the K-means algorithm with K = 3 separates the three kinds of pixels and effectively eliminates the skin-like interference, as shown in Figure 2c. The segmented gesture images are converted to grayscale and scaled to pixels to reduce the data processing burden in feature extraction and gesture classification.
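The SGM-Kmeans idea above can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the text gives neither the color space nor the model parameters, so the sketch assumes the SGM is evaluated on the (Cb, Cr) chrominance of each pixel, and `SKIN_MEAN`, `SKIN_COV`, and `THRESH` are hypothetical placeholders. K-means with K = 3 is run here on one scalar per pixel (for example the gray value of the SGM output), which is also an assumption.

```python
import math

# Hypothetical SGM parameters in (Cb, Cr) space. Real values would be
# estimated from labelled skin pixels; these are placeholders only.
SKIN_MEAN = (110.0, 155.0)
SKIN_COV = ((150.0, 10.0), (10.0, 300.0))
THRESH = 0.3  # hypothetical likelihood threshold


def rgb_to_cbcr(r, g, b):
    """Chroma components of full-range (JPEG) YCbCr with ITU-R BT.601 weights."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def skin_likelihood(cb, cr):
    """Unnormalised Gaussian likelihood exp(-0.5 * d^T S^-1 d), d = x - mean."""
    (a, b), (c, d) = SKIN_COV
    det = a * d - b * c
    i00, i01, i10, i11 = d / det, -b / det, -c / det, a / det  # entries of S^-1
    x, y = cb - SKIN_MEAN[0], cr - SKIN_MEAN[1]
    m = x * (i00 * x + i01 * y) + y * (i10 * x + i11 * y)  # squared Mahalanobis
    return math.exp(-0.5 * m)


def sgm_segment(pixels):
    """Keep likely-skin RGB pixels; set all others to black (0, 0, 0)."""
    out = []
    for r, g, b in pixels:
        keep = skin_likelihood(*rgb_to_cbcr(r, g, b)) > THRESH
        out.append((r, g, b) if keep else (0, 0, 0))
    return out


def kmeans_1d(values, k=3, iters=50):
    """Lloyd's K-means on scalar values (k >= 2), initialised at spread quantiles."""
    s = sorted(values)
    centers = [float(s[(len(s) - 1) * i // (k - 1)]) for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: nearest center for every value.
        labels = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Update step: each center moves to the mean of its members.
        new = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            new.append(sum(members) / len(members) if members else centers[j])
        if new == centers:
            break
        centers = new
    return labels, centers
```

For example, applying `kmeans_1d` to the gray values `0.299 * r + 0.587 * g + 0.114 * b` of an `sgm_segment` output would split it into three clusters (black background, skin-like, skin). The rule for deciding which cluster is the hand is not stated in the text and is left open here.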

Figure 2.

(a) Gesture segmentation in non-skin background by SGM method. (b) Gesture segmentation in skin-like background by SGM method. (c) Gesture segmentation in skin-like background by SGM-Kmeans method.

2.2. Feature Extraction

Because MSMA hand gestures occur naturally in communication, the feature must not only capture the characteristics of the gestures but also be invariant to scale and rotation. In this subsection, we combine the HOG feature and an improved 9ULBP descriptor to realize the feature extraction.

2.2.1. HOG Feature

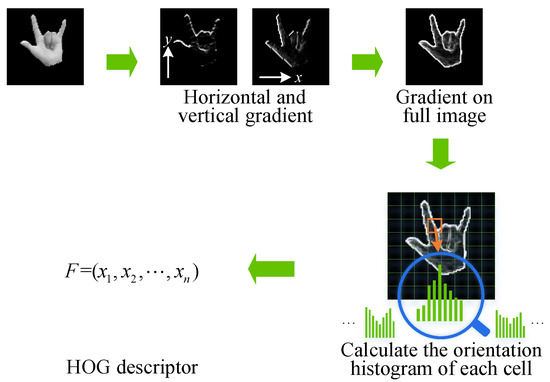

The fundamental principle of the HOG feature is that the shape or contour of an object in an image can be described by the distribution of its intensity gradients and edge directions. Because it robustly represents the appearance and contour of the human body, the HOG feature can effectively characterize edges and contours and has achieved great success in gesture detection and recognition. Moreover, the HOG feature has good geometric invariance and can be used to describe multi-scale images.

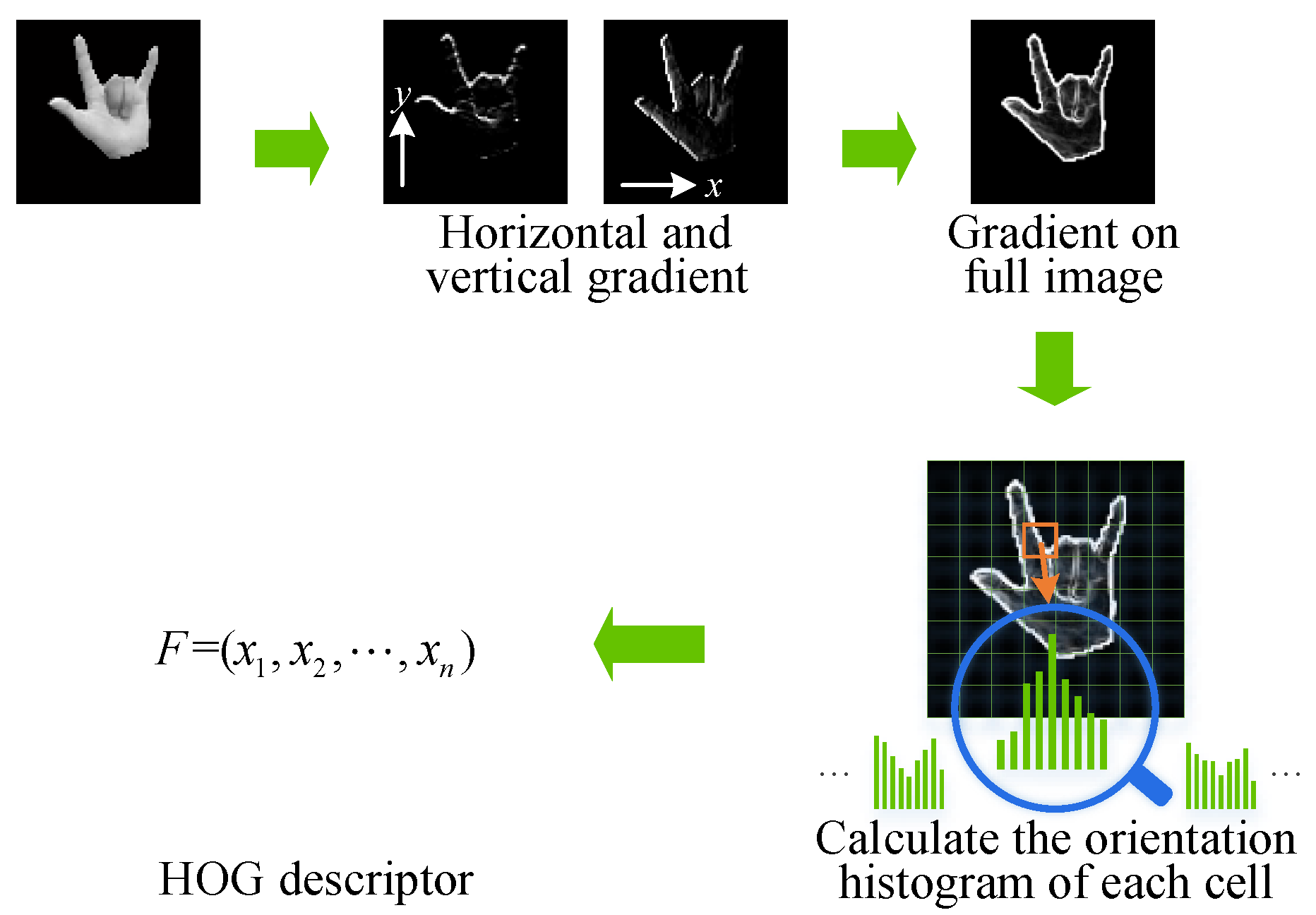

The main steps to obtain the HOG feature descriptor are as follows:

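- Calculate the horizontal gradient $G_x(x,y)$ and the vertical gradient $G_y(x,y)$ of each pixel $(x,y)$: $G_x(x,y) = H(x+1,y) - H(x-1,y)$ and $G_y(x,y) = H(x,y+1) - H(x,y-1)$, where $H(x,y)$ is the pixel value.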

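- Calculate the gradient magnitude $G(x,y)$ and orientation $\theta(x,y)$ from the horizontal and vertical gradients $G_x$ and $G_y$: $G(x,y) = \sqrt{G_x(x,y)^2 + G_y(x,y)^2}$ and $\theta(x,y) = \arctan\left(G_y(x,y)/G_x(x,y)\right)$.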

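- Calculate the orientation histogram vector of each cell, taking a 9-dimensional gradient orientation histogram as an example. First, the image is divided into blocks and cells of specified sizes. Then, the orientation range $[0^\circ, 180^\circ)$ is divided equally into 9 bins. Let $\Theta_1, \Theta_2, \ldots, \Theta_9$ represent $[0^\circ, 20^\circ), [20^\circ, 40^\circ), \ldots, [160^\circ, 180^\circ)$, respectively. For each pixel $(x,y)$ in a cell, if its gradient orientation $\theta(x,y) \in \Theta_k$, its gradient magnitude is accumulated into bin $k$: $h_k \leftarrow h_k + G(x,y)$. The obtained histogram is then $L_2$-norm normalized as $h_k \leftarrow h_k / \sqrt{\sum_{j=1}^{9} h_j^2 + \varepsilon^2}$, where $\varepsilon$ is a very small normalization constant. Finally, the 9-dimensional gradient orientation histogram vector of each cell can be formulated as $\mathbf{h} = [h_1, h_2, \ldots, h_9]$.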

- HOG feature calculation. The calculation procedure of the HOG feature is illustrated in Figure 3. First, the HOG feature of each block is obtained by concatenating the gradient orientation histograms of all the cells in the block. Then, the HOG feature of the entire image is obtained by sliding the block over the image with an overlapping stride of 4 pixels and concatenating the features of all blocks.
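The four HOG steps can be sketched in pure Python as below. Only the 9 bins and the 4-pixel block stride come from the text; the cell size (4), block size (8), unsigned orientation range [0°, 180°), L2 normalization of each cell histogram, and replicated image borders are illustrative assumptions.

```python
import math


def gradients(img):
    """Centred-difference magnitude and unsigned orientation (degrees in [0, 180))."""
    h, w = len(img), len(img[0])

    def px(x, y):  # replicate the border pixels
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = px(x + 1, y) - px(x - 1, y)
            gy = px(x, y + 1) - px(x, y - 1)
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    return mag, ang


def cell_histogram(mag, ang, x0, y0, cell, bins=9, eps=1e-6):
    """Magnitude-weighted orientation histogram of one cell, L2-normalised."""
    hist = [0.0] * bins
    width = 180.0 / bins
    for y in range(y0, y0 + cell):
        for x in range(x0, x0 + cell):
            k = min(int(ang[y][x] // width), bins - 1)  # guard against 180.0
            hist[k] += mag[y][x]
    norm = math.sqrt(sum(v * v for v in hist) + eps * eps)
    return [v / norm for v in hist]


def hog(img, cell=4, block=8, stride=4, bins=9):
    """Slide a block by `stride` pixels and concatenate its cell histograms."""
    mag, ang = gradients(img)
    h, w = len(img), len(img[0])
    feat = []
    for by in range(0, h - block + 1, stride):
        for bx in range(0, w - block + 1, stride):
            for cy in range(by, by + block, cell):
                for cx in range(bx, bx + block, cell):
                    feat.extend(cell_histogram(mag, ang, cx, cy, cell, bins))
    return feat
```

For a 16 × 16 image these defaults give 3 × 3 blocks of 2 × 2 cells, i.e., a 324-dimensional vector. Cell histograms are recomputed for every block for clarity; a practical version would cache them per cell.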

Figure 3. The calculation of HOG feature.

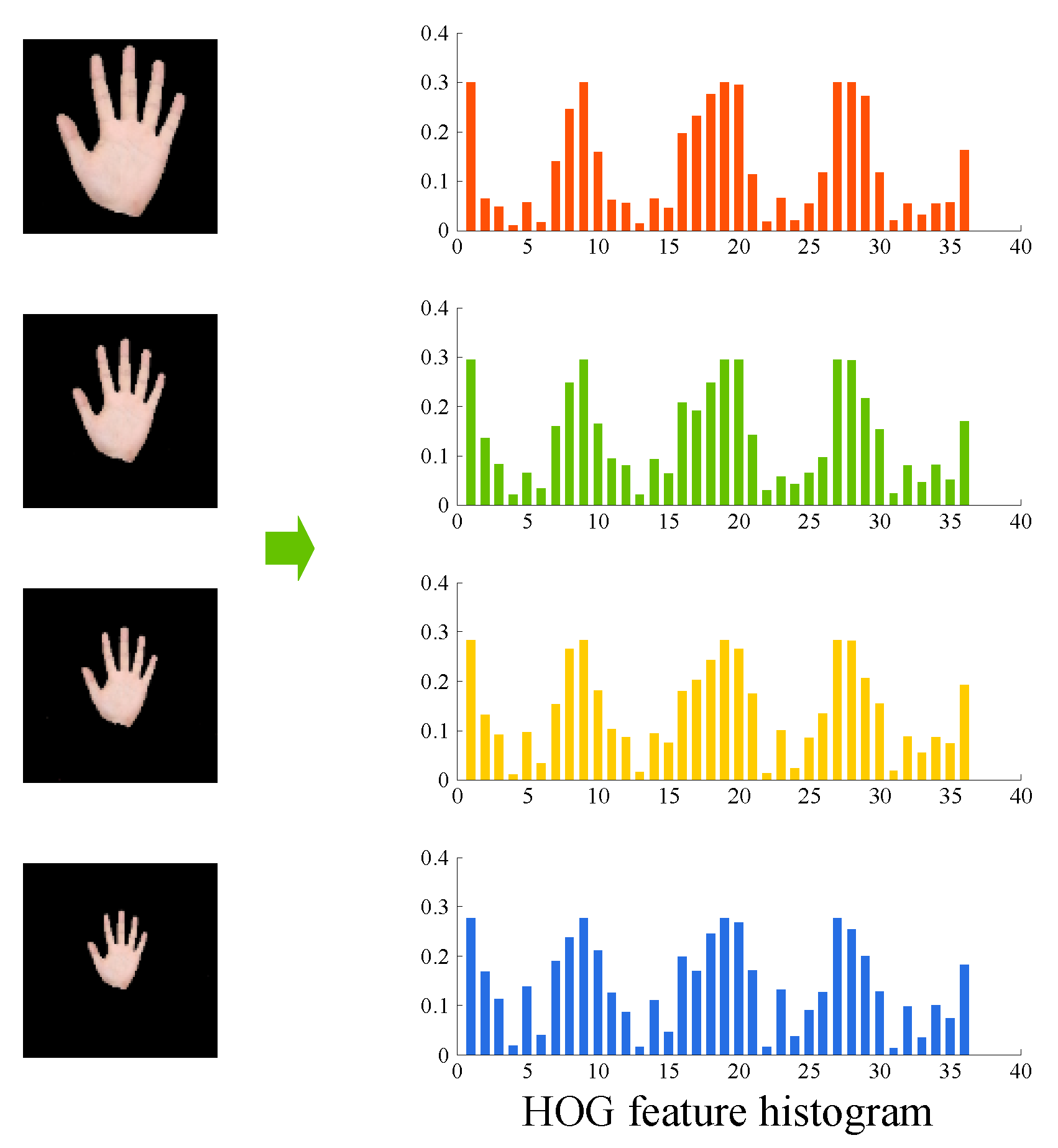

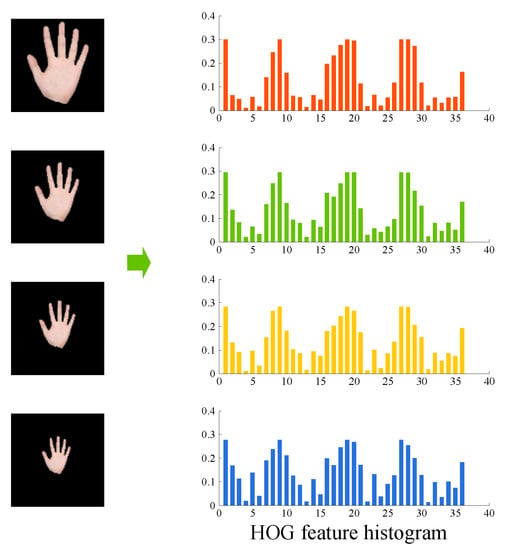

To verify the scale invariance of the feature, we extract the HOG features of 4 hand gestures with different scales, as shown in Figure 4. The histograms of the gestures at different scales are highly similar, which indicates that the HOG feature is robust to scale changes.

Figure 4.

The scale invariance of HOG feature.

2.2.2. 9ULBP Feature

In addition to contour and shape information, hand gestures contain rich texture information. LBP describes local texture features and is widely used in texture segmentation [22,35,36]. In the following, we first introduce the calculation procedure of the conventional LBP descriptor and then propose an improved 9ULBP descriptor that captures texture information and improves the rotation invariance of the conventional LBP feature.

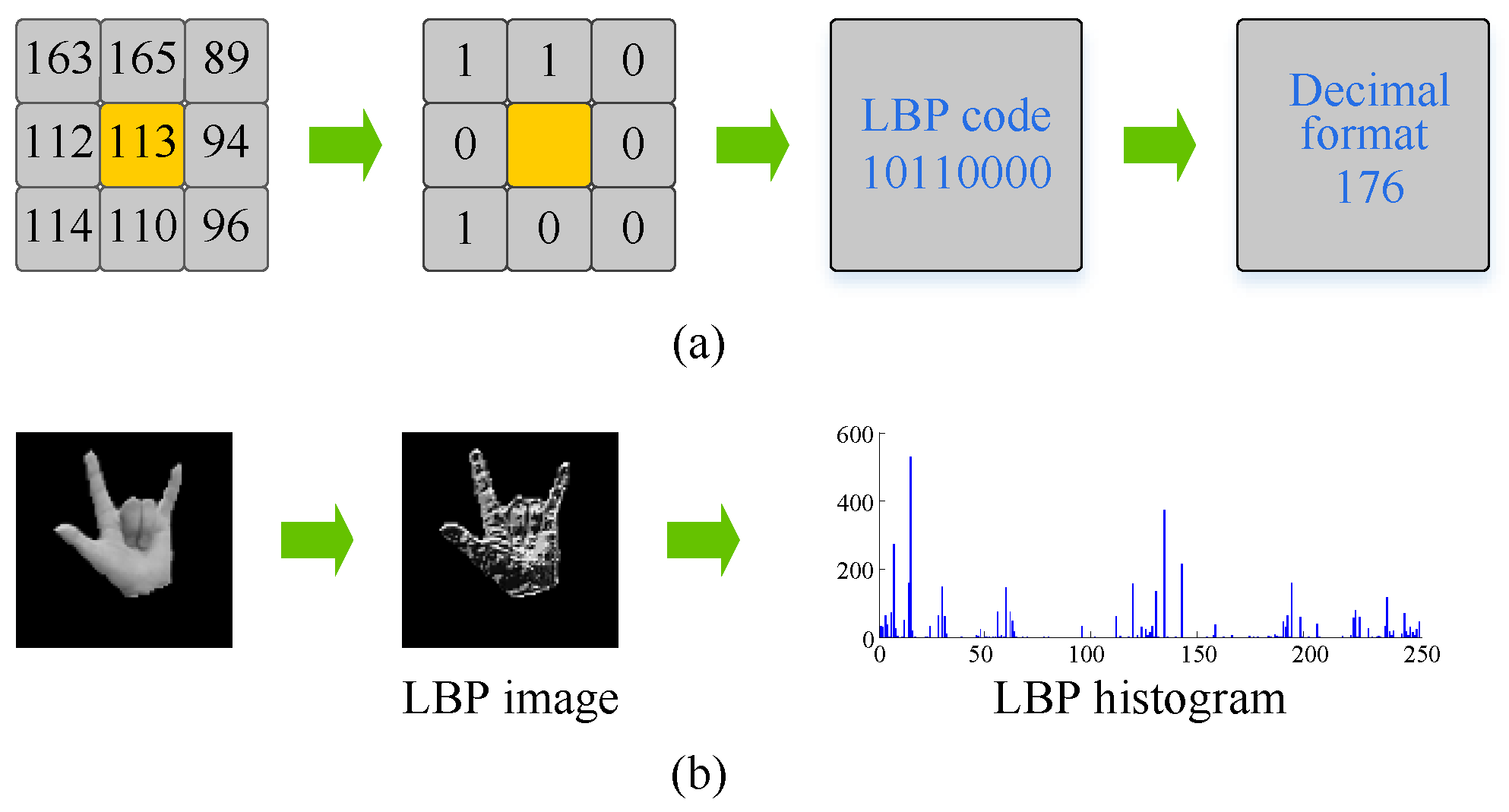

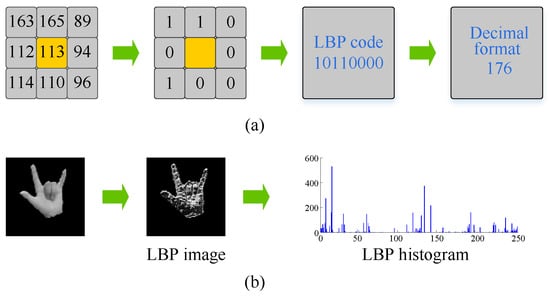

Figure 5 shows the calculation procedure of the LBP feature. First, each of the 8 neighboring pixels is thresholded against the value of the center pixel: it is coded as 1 if its value is not less than the center value and as 0 otherwise. Next, the resulting bits are concatenated into an 8-bit binary number, which is the LBP code of the center pixel. Then, the LBP code is converted to decimal. Finally, the decimal codes of all pixels in the image are used to build a histogram, which is the LBP descriptor.
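A minimal sketch of the LBP code and descriptor of Figure 5 follows. Bit conventions differ between implementations; here the neighbors are read clockwise from the top-left pixel, the first neighbor becomes the most significant bit, and a neighbor equal to the center is coded as 1. All three choices are assumptions.

```python
# Clockwise neighbour offsets (dy, dx), starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, y, x):
    """8-bit LBP code of pixel (y, x): bit is 1 if the neighbour >= centre."""
    c = img[y][x]
    code = 0
    for p, (dy, dx) in enumerate(OFFSETS):
        if img[y + dy][x + dx] >= c:
            code |= 1 << (7 - p)  # first neighbour becomes the most significant bit
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist
```

For the patch `[[6, 5, 2], [7, 6, 1], [9, 8, 7]]`, the clockwise bits are 1, 0, 0, 0, 1, 1, 1, 1, so `lbp_code(patch, 1, 1)` is 0b10001111 = 143.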

Figure 5.

(a) Calculation of the LBP code. (b) Calculation of the LBP descriptor.

The LBP descriptor has 256 distinct patterns. For preprocessed hand gesture images, this large number of patterns hinders texture extraction and description: it makes the feature vector too long and the histogram too sparse. The sparsity of the LBP histogram of hand gestures can be seen in Figure 5b. It is therefore important to find a texture analysis scheme suited to the texture characteristics of segmented hand gestures. Since histograms of uniform patterns have been shown to provide better texture analysis than histograms of all individual patterns [37], we use uniform-pattern LBP descriptors in the texture analysis of hand gestures.

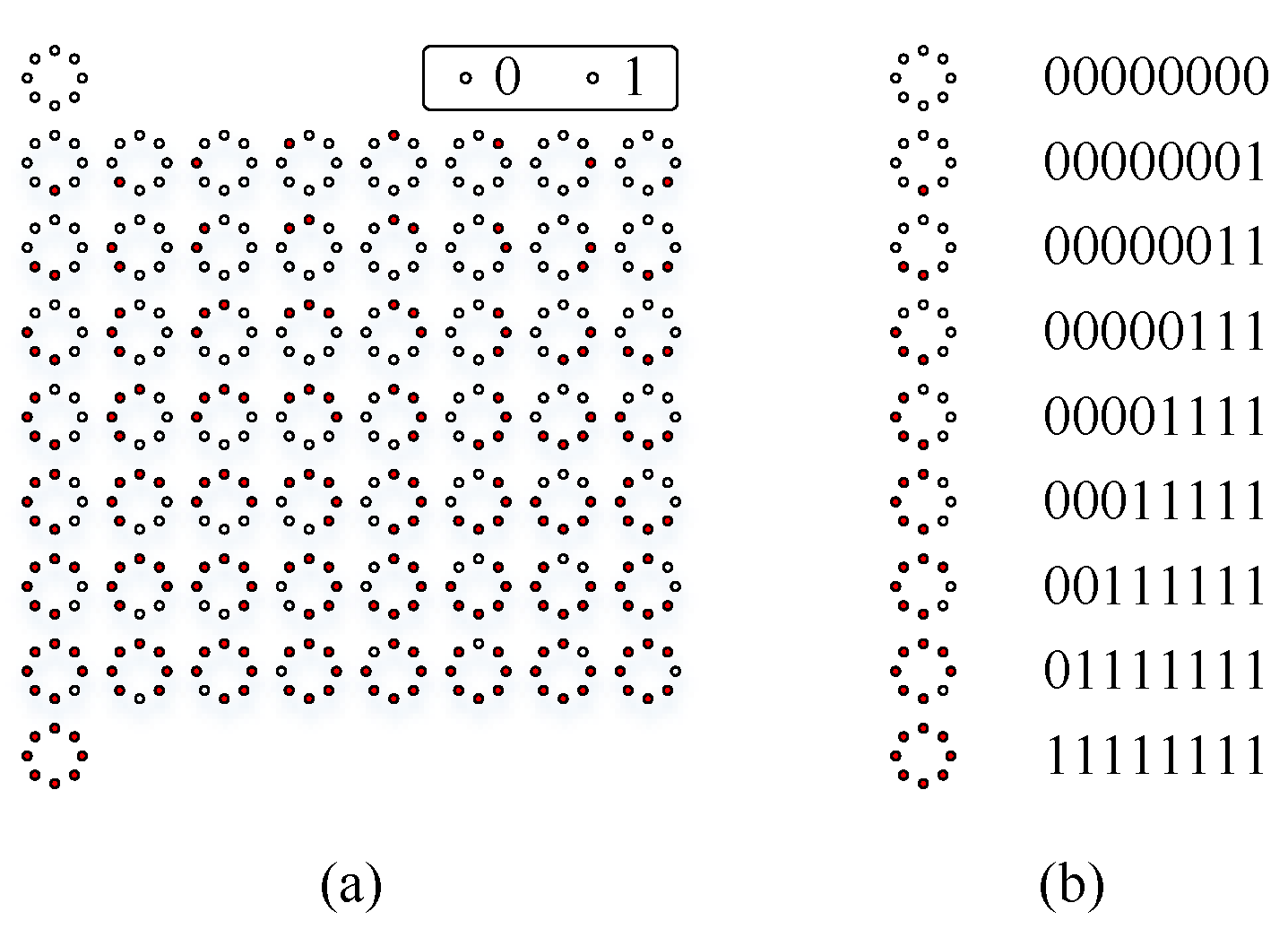

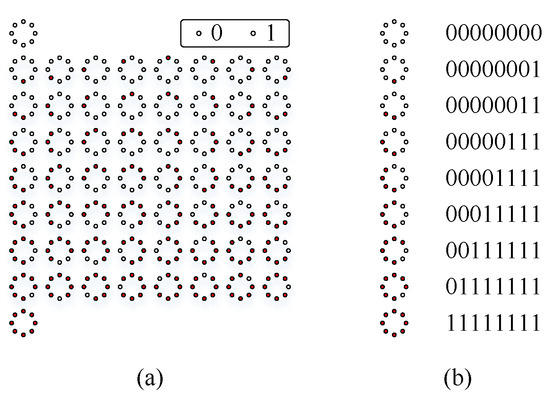

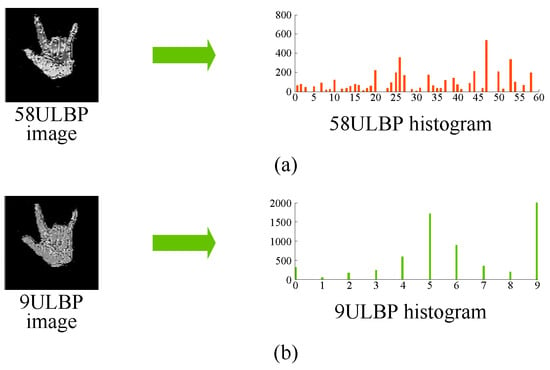

To define uniform patterns, we introduce the uniformity measure U, which is the number of spatial transitions (bitwise 0/1 changes) in the circular binary pattern. A pattern is called uniform if its circular binary string contains at most two such transitions (U ≤ 2), and the uniform operator $LBP_{P,R}^{u2}$ yields $P(P-1)+2$ distinct uniform patterns, where R is the radius and P is the number of sampling points. When P = 8 and R = 1, the corresponding 58 uniform patterns (58ULBP) account for the largest proportion of patterns in texture analysis [38]. We therefore take 58ULBP for further discussion. Figure 6a shows the 58ULBP codes, and Figure 7a shows the 58ULBP image and its histogram. For hand gesture images, the histogram is sparse and nearly 20 patterns do not occur at all, which indicates that 58 patterns are still too many.
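The uniformity measure U can be computed by XOR-ing a code with its one-bit circular rotation and counting the set bits, since each set bit marks a 0/1 transition between circular neighbors. The short check below is a sketch with P = 8 as the default; it confirms that exactly P(P − 1) + 2 = 58 of the 256 codes are uniform.

```python
def uniformity(code, bits=8):
    """U: number of 0/1 transitions in the circular `bits`-bit string of `code`."""
    rotated = (code >> 1) | ((code & 1) << (bits - 1))  # circular right shift by 1
    return bin(code ^ rotated).count("1")


uniform_codes = [c for c in range(2 ** 8) if uniformity(c) <= 2]
print(len(uniform_codes))  # 58
```

The count splits as 2 codes with U = 0 (all zeros, all ones) plus 7 × 8 = 56 codes with a single run of 1s of length 1 to 7 at any of 8 positions.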

Figure 6.

(a) 58 uniform pattern LBP codes. (b) 9 uniform pattern LBP codes.

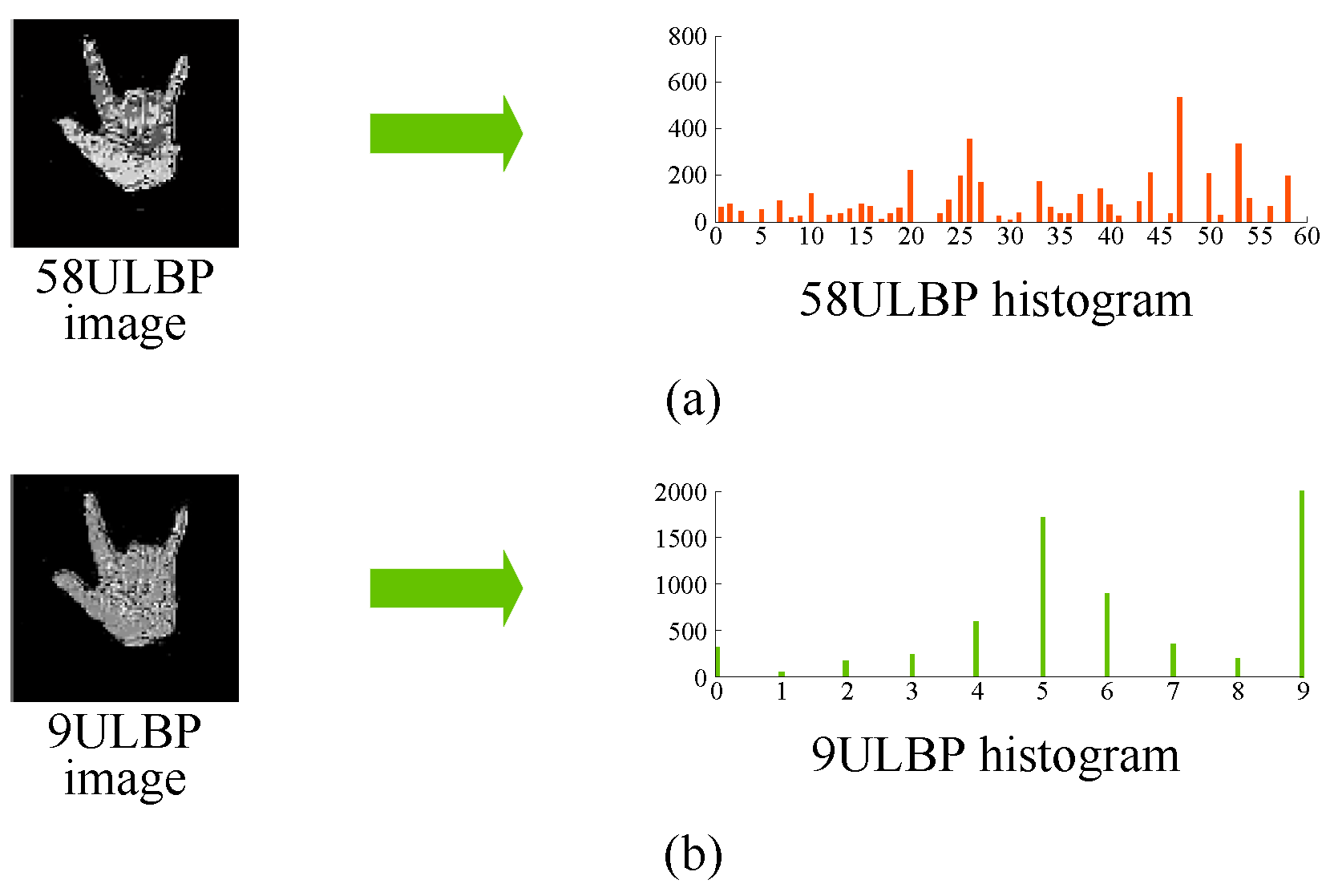

Figure 7.

(a) 58ULBP image and its histogram. (b) 9ULBP image and its histogram.

To further reduce the number of patterns, we derive an improved 9ULBP from the 58ULBP by limiting the uniformity measure in binary rather than in cyclic binary. The 9ULBP descriptor is obtained as follows:

- Count the number of 1s in each 58ULBP code.

- Divide the LBP codes into 9 classes according to the number of 1s (0 to 8).

- Within each class, compare the decimal values of the LBP codes to find the smallest one.

- Use this smallest LBP code as the 9ULBP code for every code in the class.

In this way, the 58ULBP codes are transformed into 9ULBP codes, as shown in Figure 6b. The corresponding 9ULBP image and its histogram are shown in Figure 7b.
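The four 9ULBP steps can be written as a lookup table, as in the sketch below. Because the smallest 8-bit number with n ones is 2^n − 1, the nine resulting codes are 0, 1, 3, ..., 255. This amounts to labeling a uniform code by its number of 1s, which coincides with the uniform classes of the rotation-invariant uniform operator LBP^riu2 of [38]. The text does not say how non-uniform codes are handled, so returning `None` for them is an assumption.

```python
def uniformity(code):
    """U for an 8-bit code: 0/1 transitions in its circular bit string."""
    rotated = (code >> 1) | ((code & 1) << 7)
    return bin(code ^ rotated).count("1")


UNIFORM = [c for c in range(256) if uniformity(c) <= 2]  # the 58 uniform codes

NINE_ULBP = {}
for n_ones in range(9):  # steps 1-2: classes by number of 1s (0..8)
    group = [c for c in UNIFORM if bin(c).count("1") == n_ones]
    smallest = min(group)  # step 3: smallest decimal value in the class
    for c in group:  # step 4: every code in the class maps to it
        NINE_ULBP[c] = smallest


def to_9ulbp(code):
    """9ULBP code of an 8-bit LBP code; None for non-uniform codes (assumption)."""
    return NINE_ULBP.get(code)


print(sorted(set(NINE_ULBP.values())))
# [0, 1, 3, 7, 15, 31, 63, 127, 255]
```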

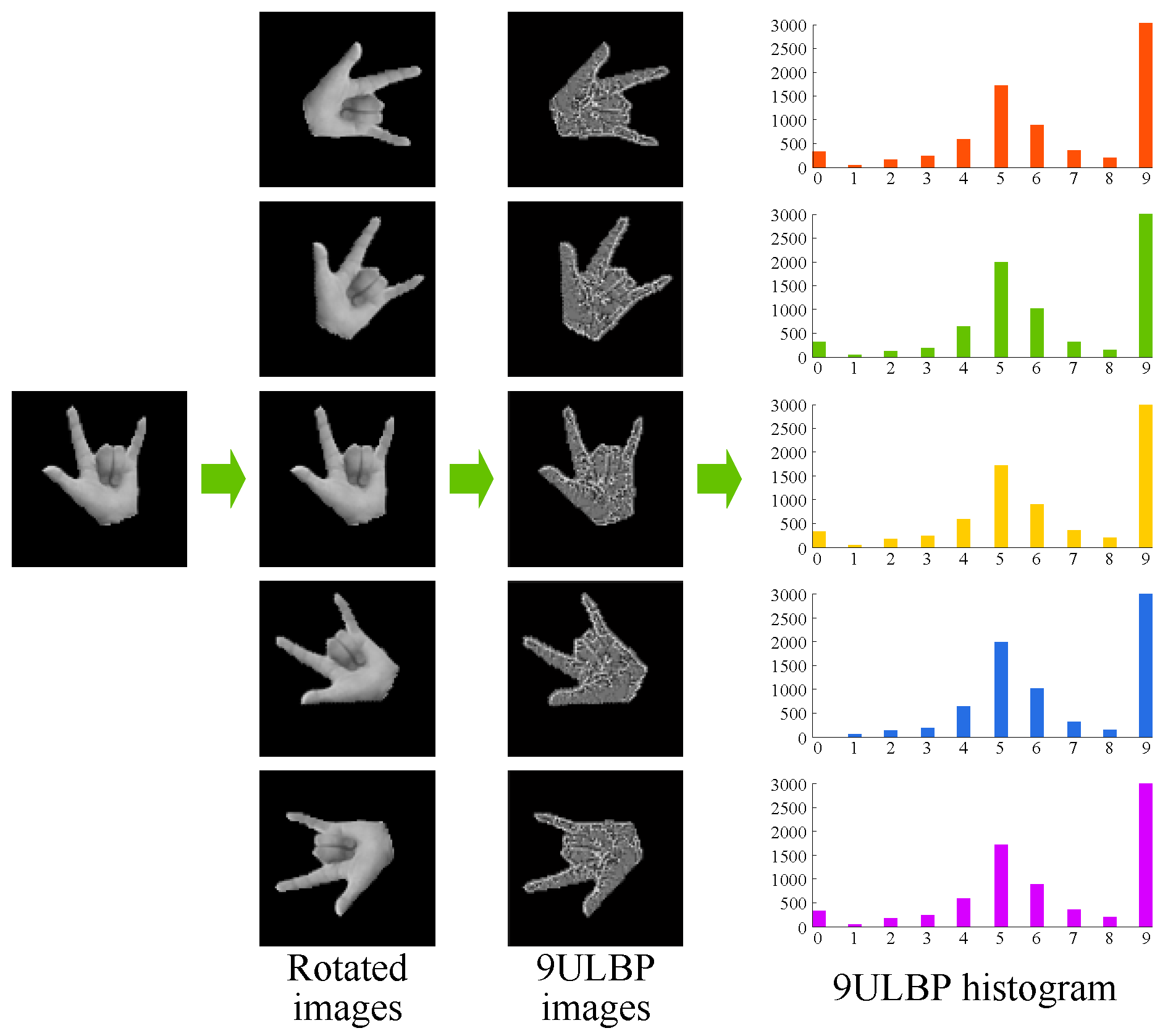

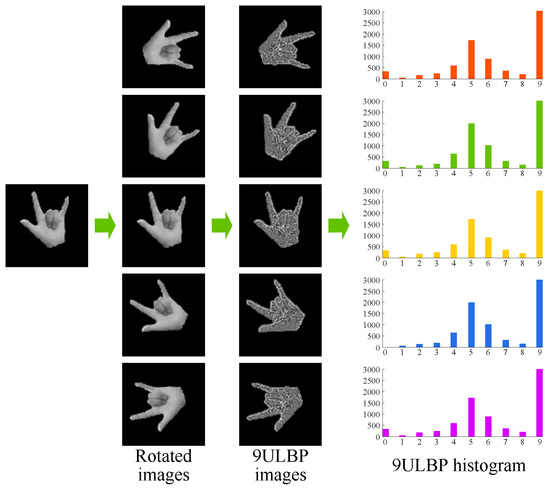

The histogram and patterns of 9ULBP still capture the texture characteristics of the gesture image. Moreover, because computing the 9ULBP code can be viewed as rotating the 58ULBP code clockwise until it reaches the smallest decimal value in its class, the improved 9ULBP descriptor is rotation invariant. To verify this, we rotate a hand gesture image in five directions and extract the 9ULBP histogram of each rotated image. As shown in Figure 8, the 9ULBP histograms are essentially unaffected by the image rotation. Therefore, the proposed 9ULBP code not only reduces the dimension of the LBP descriptor but also enhances its rotation invariance, which is important for the feature extraction of multi-angle hand gestures.
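The rotation-invariance argument can be checked exhaustively at the code level. Rotating the circular 8-point neighborhood by k × 45° shifts the bit string circularly by k positions, which preserves both the number of 1s and U, so the 9ULBP code cannot change. The sketch below uses the shortcut 9ULBP = 2^n − 1 for a uniform code with n ones (and, as an assumption, `None` for non-uniform codes). The invariance is exact only for rotations by multiples of 45° of the sampling neighborhood; general image rotations involve interpolation, so in practice it is approximate.

```python
def uniformity(code):
    """U for an 8-bit code: 0/1 transitions in its circular bit string."""
    rotated = (code >> 1) | ((code & 1) << 7)
    return bin(code ^ rotated).count("1")


def to_9ulbp(code):
    """Smallest code with the same number of 1s, i.e. 2**n - 1 (uniform codes only)."""
    if uniformity(code) > 2:
        return None  # assumption: non-uniform codes are left out
    return (1 << bin(code).count("1")) - 1


def rotl8(code, k):
    """Circularly shift an 8-bit code left by k positions (k * 45 degrees)."""
    k %= 8
    return ((code << k) | (code >> (8 - k))) & 0xFF


# Every circular shift of every uniform code must keep its 9ULBP code.
invariant = all(
    to_9ulbp(rotl8(c, k)) == to_9ulbp(c)
    for c in range(256) if uniformity(c) <= 2
    for k in range(8)
)
print(invariant)  # True
```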

Figure 8.

Rotation invariance of 9ULBP descriptor.

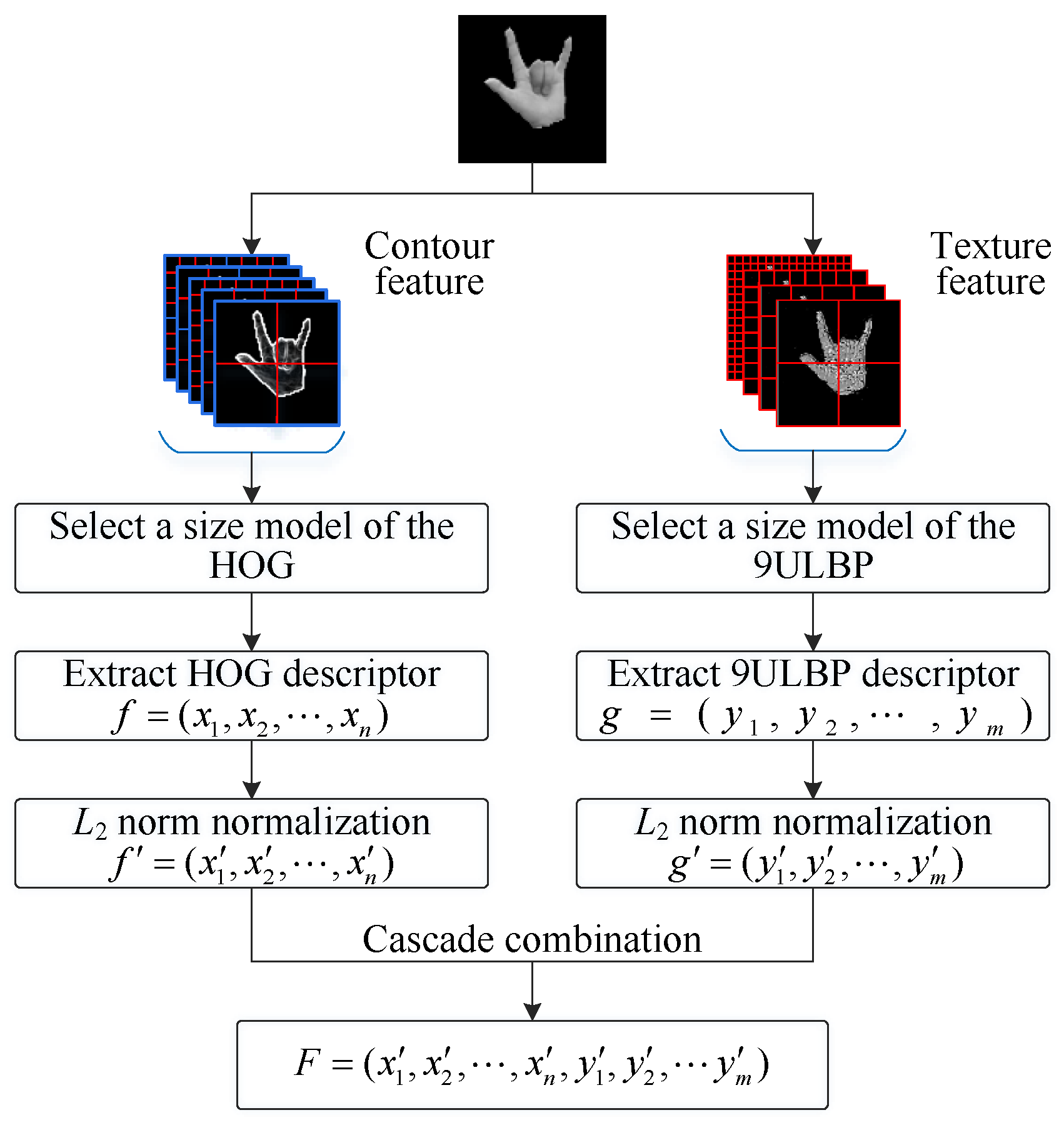

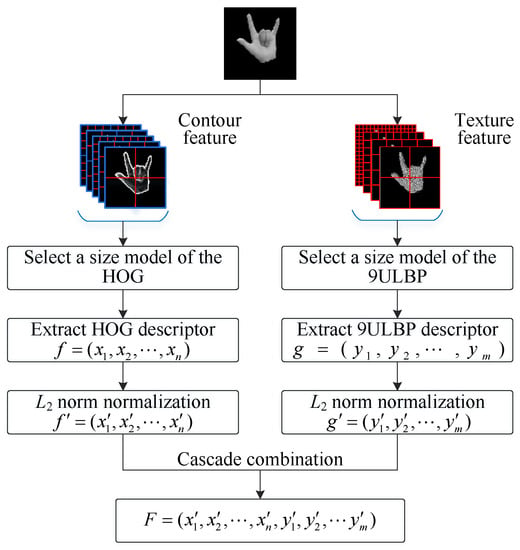

2.2.3. Feature Fusion

The HOG feature has good scale invariance and describes gesture contours well, while the proposed 9ULBP feature has excellent rotational invariance and captures gesture texture. It is therefore reasonable to fuse the HOG and 9ULBP features into a new HOG-9ULBP feature for gesture recognition.

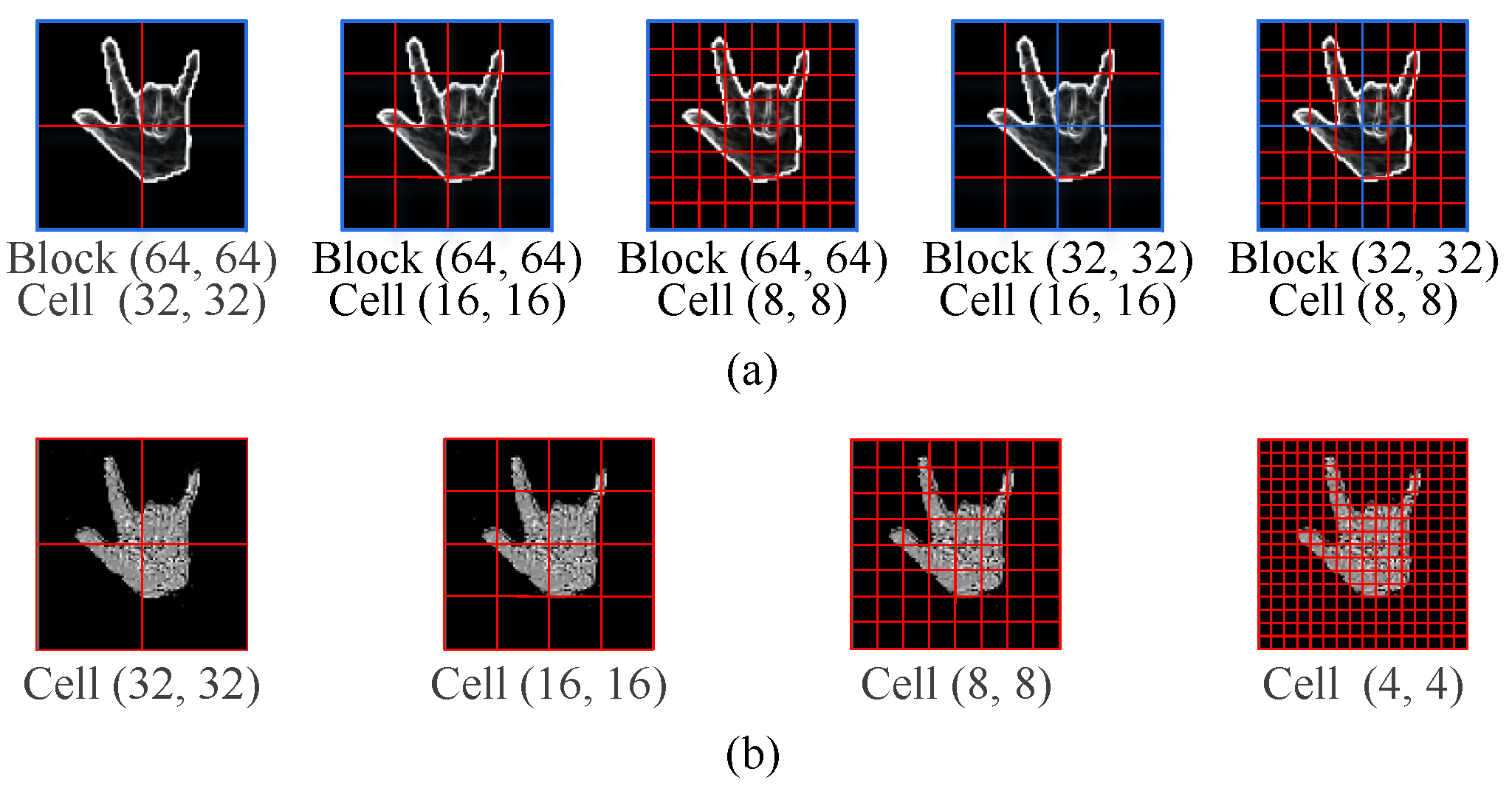

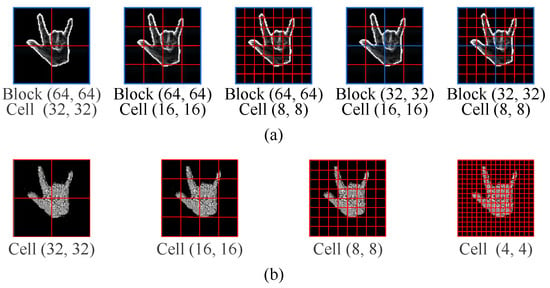

HOG and 9ULBP descriptors computed with different block and cell sizes differ in how well they capture the outline and texture of hand gestures. If the block and cell sizes are too large, the descriptors may carry insufficient information. Conversely, if they are too small, the extracted descriptors may contain excessive redundant information, or even suffer from the curse of dimensionality. To find the optimal sizes, we compare HOG and 9ULBP features with the different block and cell sizes shown in Figure 9, and in our experiments we compare HOG-9ULBP features built from these sizes to obtain the best fusion. The feature fusion procedure of HOG and 9ULBP is shown in Figure 10.
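A sketch of the fusion step, assuming serial fusion, i.e., concatenating the HOG vector with block-wise 9ULBP histograms. Figure 10 is not reproduced in the text, so the concatenation order, the 9ULBP block size (8) and stride (4), and the normalization of each block histogram to unit sum are all assumptions. Bin n of each 9ULBP histogram counts pixels whose 9ULBP code is 2^n − 1; non-uniform pixels are skipped.

```python
# Clockwise neighbour offsets (dy, dx), starting at the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def nine_ulbp_bin(img, y, x):
    """Number of 1s of the uniform LBP code at (y, x), or None if non-uniform."""
    c = img[y][x]
    bits = [1 if img[y + dy][x + dx] >= c else 0 for dy, dx in OFFSETS]
    transitions = sum(bits[i] != bits[i - 1] for i in range(8))  # circular
    return sum(bits) if transitions <= 2 else None


def nine_ulbp_blocks(img, block=8, stride=4):
    """Concatenate unit-sum 9-bin 9ULBP histograms of overlapping blocks."""
    h, w = len(img), len(img[0])
    feat = []
    for by in range(0, h - block + 1, stride):
        for bx in range(0, w - block + 1, stride):
            hist = [0] * 9
            for y in range(max(by, 1), min(by + block, h - 1)):
                for x in range(max(bx, 1), min(bx + block, w - 1)):
                    b = nine_ulbp_bin(img, y, x)
                    if b is not None:
                        hist[b] += 1
            total = sum(hist) or 1
            feat.extend(v / total for v in hist)
    return feat


def fuse(hog_vec, ulbp_vec):
    """Serial fusion: the HOG-9ULBP vector is [HOG, 9ULBP]."""
    return list(hog_vec) + list(ulbp_vec)
```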

Figure 9.

(a) Different size models of HOG. (b) Different size models of 9ULBP.

Figure 10.

Feature fusion procedure.

2.3. Gesture Classification

We use SVM for gesture classification. SVM is a supervised classifier that finds the optimal separation between different classes. Unlike many other classifiers, SVM is based on the principle of structural risk minimization (SRM), which gives it good generalization performance [39]. SVM transforms the classification problem into a convex optimization problem [40]. Moreover, being grounded in statistical learning theory, SVM requires relatively few training samples to find the optimal solution. In the experiments, we use an SVM with a radial basis function (RBF) kernel because of its good nonlinear mapping capability, and ten-fold cross-validation is used to determine the SVM parameters and obtain the best classifier model.
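The parameter search described above can be sketched as a ten-fold cross-validated grid search over the RBF-SVM parameters (C, γ). The SVM solver itself is left out: `evaluate` is a hypothetical callback that would wrap any RBF-SVM implementation (for example LIBSVM or scikit-learn's `SVC`) and return the test-fold accuracy. The shuffling seed and the candidate grids are also assumptions.

```python
import math
import random


def rbf_kernel(a, b, gamma):
    """K(a, b) = exp(-gamma * ||a - b||^2), the kernel of an RBF-SVM."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def k_fold_indices(n, k=10, seed=0):
    """Shuffle 0..n-1 and split them into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def grid_search(n, evaluate, Cs, gammas, k=10):
    """Return (mean accuracy, C, gamma) of the best pair under k-fold CV.

    `evaluate(train_idx, test_idx, C, gamma)` is a hypothetical callback that
    trains an RBF-SVM on train_idx and returns its accuracy on test_idx.
    """
    folds = k_fold_indices(n, k)
    best = None
    for C in Cs:
        for gamma in gammas:
            scores = []
            for i, test in enumerate(folds):
                train = [j for f in folds[:i] + folds[i + 1:] for j in f]
                scores.append(evaluate(train, test, C, gamma))
            mean = sum(scores) / k
            if best is None or mean > best[0]:
                best = (mean, C, gamma)
    return best
```

For example, `grid_search(len(features), evaluate, [0.1, 1, 10, 100], [1e-3, 1e-2, 1e-1])` would return the best mean accuracy together with its (C, γ) pair, after which the final model would be retrained on the full training set.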

3. Results

In this section, we carry out comparative experiments to verify the effectiveness of the proposed MSMA hand gesture recognition algorithm. Experiments are performed on a Windows 10 system with a 3.40 GHz quad-core (8-thread) Intel Core i7-6700 processor and 8 GB of main memory.

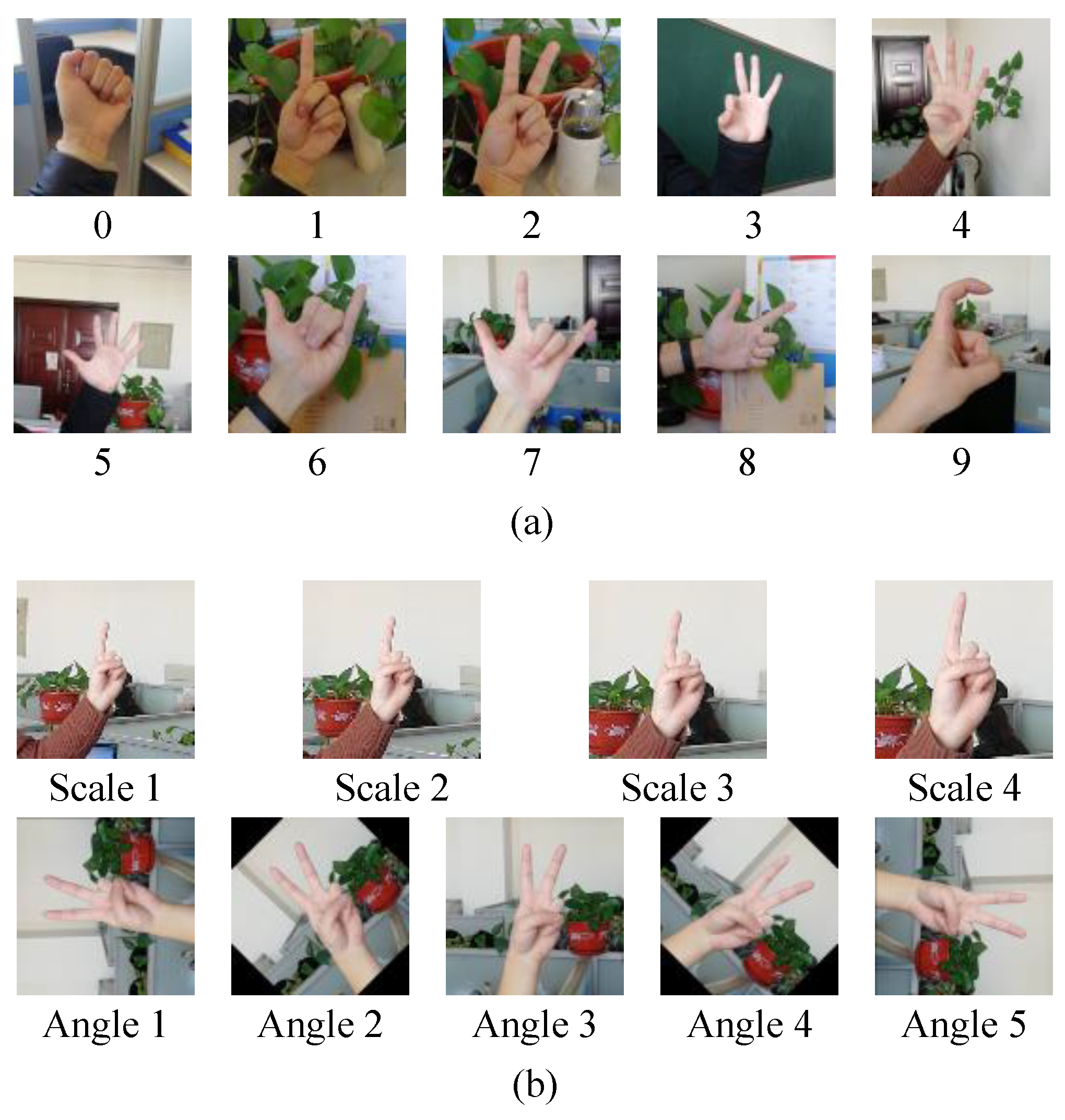

3.1. Dataset Creation

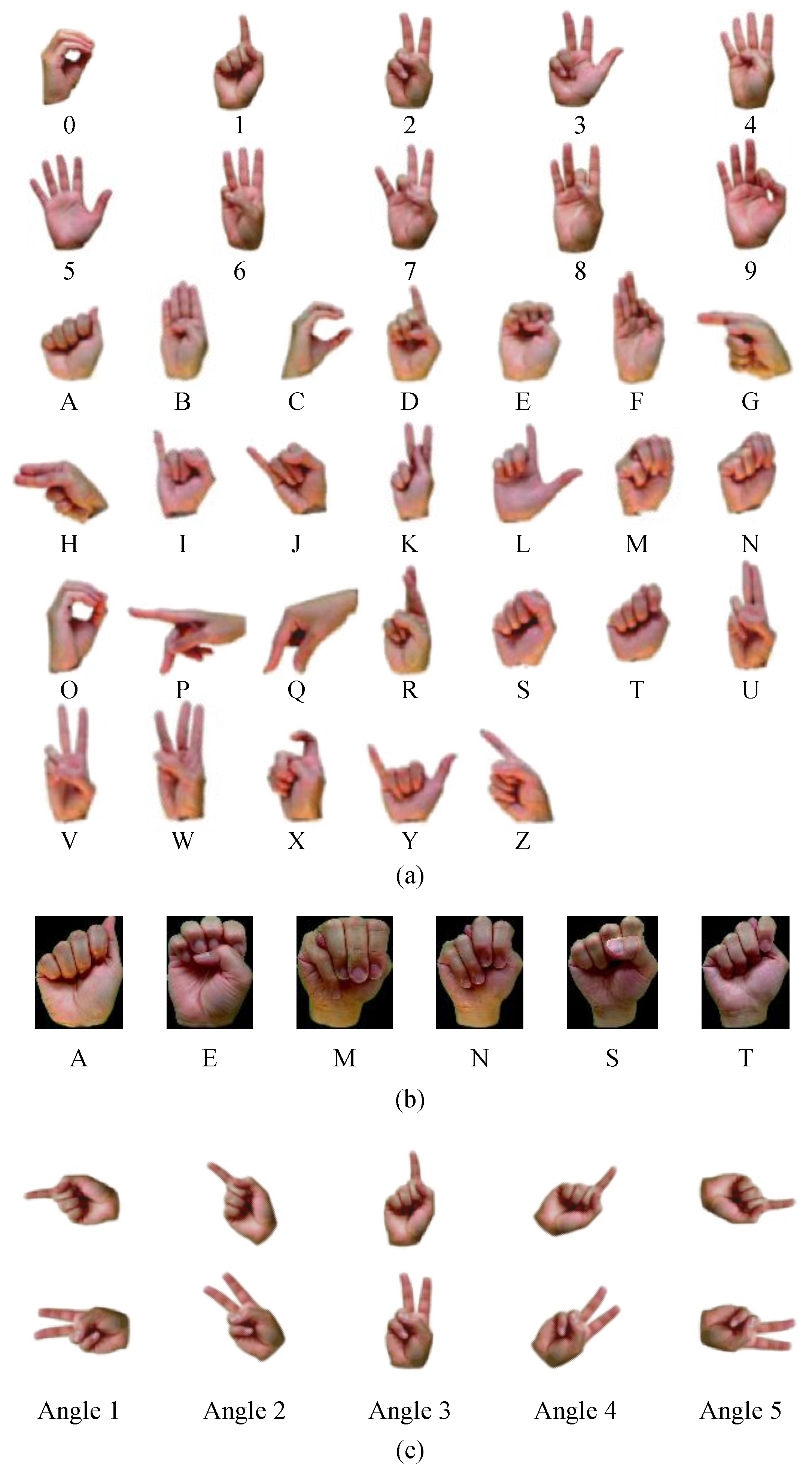

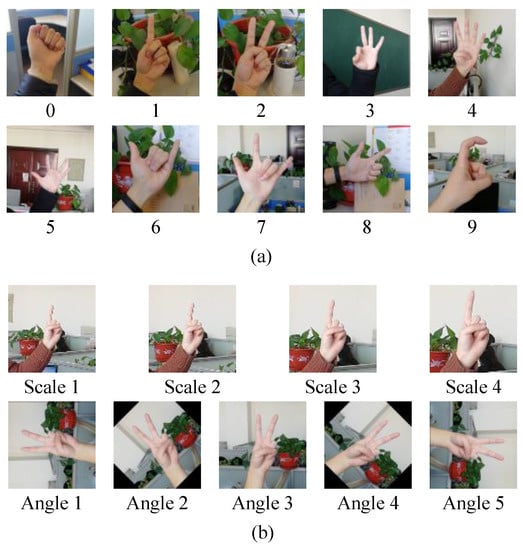

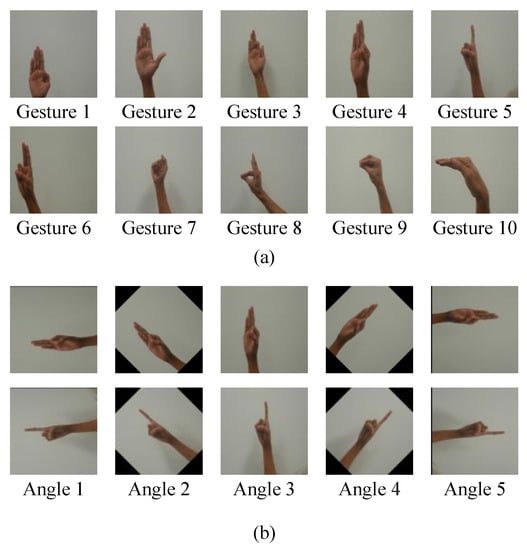

Because few MSMA hand gesture datasets are currently available, we first use a Kinect depth camera to create a dataset containing 20,000 MSMA gesture images with complex backgrounds. The dataset contains the 10 most widely used gestures performed by 20 people against different backgrounds, as shown in Figure 11a. The dataset was produced as follows:

Figure 11.

(a) 10 kinds of self-collected hand gesture images. (b) Gesture images with different scales and angles.

- Use Kinect’s human skeleton tracking to locate the center point of the right palm.

- Crop right-hand gesture images at four different scales.

- Rotate the obtained gesture images in five directions (−90°, −45°, 0°, 45°, 90°).

- Organize these multi-scale and multi-angle gesture images into the dataset.

Figure 11b shows the scale and angle characteristics of the dataset. All gestures vary in scale and angle, which increases the difficulty of accurate gesture recognition.

3.2. Experiment on Self-Collected Dataset

We first determine the parameters of the HOG and 9ULBP descriptors experimentally. The performance of HOG and 9ULBP descriptors with different sizes is shown in Table 1 and Table 2, respectively. Using either the HOG feature or the 9ULBP feature alone to characterize MSMA gestures achieves only relatively low recognition rates, not exceeding and , respectively. The recognition rate does not increase monotonically with the descriptor dimension, because high-dimensional descriptors contain more redundant information, which can degrade performance. Ref. [41] showed that a fusion feature performs much better than a single feature, but that its parameters affect feature extraction performance. Next, we carry out experiments to find the optimal feature fusion scheme.

Table 1.

Performance of HOG with different sizes.

Table 2.

Performance of 9ULBP with different sizes.

We now fuse the HOG and 9ULBP descriptors with different sizes into HOG-9ULBP descriptors and feed them to the SVM; the experimental results are shown in Table 3. Compared with the HOG or 9ULBP feature alone, the proposed HOG-9ULBP fusion feature significantly improves the recognition rate. This is because the combined feature represents both contour and texture information and is invariant to both scale and rotation. The recognition rate also varies with the descriptor dimensions, and the system achieves its best classification performance when combining the 576-dimensional HOG feature with the 576-dimensional 9ULBP descriptor.

Table 3.

Performance of HOG-9ULBP descriptors with different sizes.

Finally, we compare the proposed method, with its optimal parameters, against other gesture recognition methods, i.e., LBP feature and SVM [8], Krawtchouk polynomials and a minimum distance (KMD) classifier [15], HOG feature and SVM [17], contour feature and SVM [29], fast region-based convolutional neural network (Fast R-CNN) [42], and Hu moment feature and SVM [43]. The experimental results on the self-collected dataset are shown in Table 4. The proposed method achieves the highest recognition rate, 99.01%.

Table 4.

Comparison results on self-collected dataset.

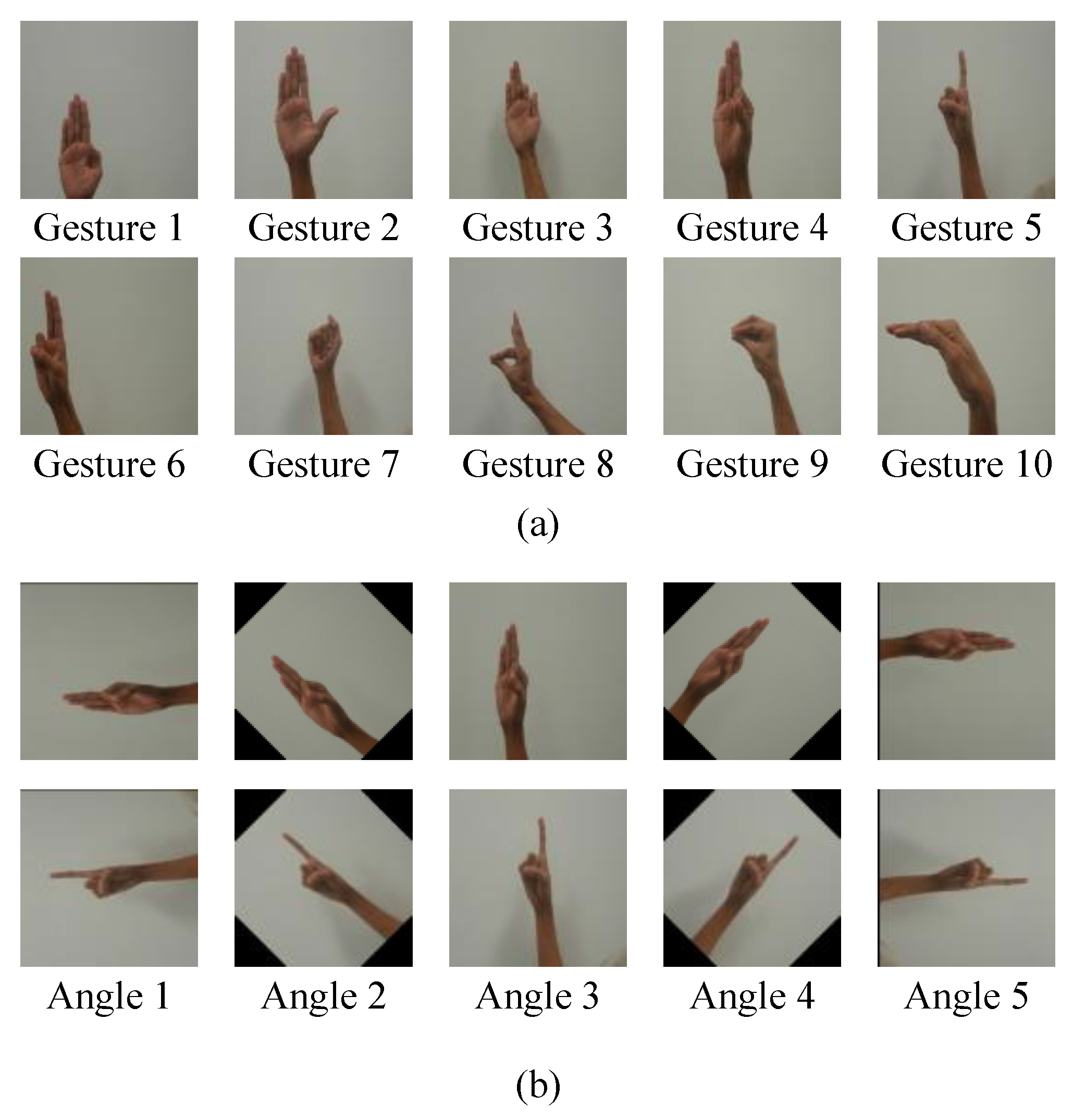

3.3. Experiment on the NUS Dataset

The NUS dataset [44] consists of 10 classes of gestures with 24 sample images per class, as shown in Figure 12a, which were captured by varying the position and size of the hand within the image frame. These gestures have small inter-class variation in appearance, which increases the difficulty of recognition.

Figure 12.

(a) Several images in the NUS dataset. (b) The rotated images.

To further verify the effectiveness of the proposed MSMA gesture recognition approach on the NUS dataset, we rotate each gesture image in five directions, as shown in Figure 12b, which yields 1200 images (240 × 5). In the experiments, we divide this dataset into a training set of 600 images and a test set of 600 images. Ten-fold cross-validation is used to obtain the optimal SVM parameters. The comparative experimental results on the NUS dataset are shown in Table 5; the proposed method achieves the highest recognition rate, 97.5%.

Table 5.

Comparison results on the NUS dataset.

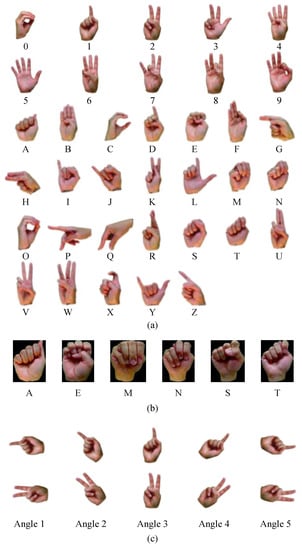

3.4. Experiment on the MU HandImages ASL Dataset

The MU HandImages ASL dataset [47] contains 2425 images of 36 American Sign Language gestures performed by five individuals. The 36 gestures consist of 26 letters and 10 digits, as shown in Figure 13a. Moreover, as shown in Figure 13b, some gestures in the dataset are highly similar, which increases the recognition difficulty.

Figure 13.

(a) Images in the MU HandImages ASL dataset. (b) Some similar gesture images in the MU HandImages ASL dataset. (c) The rotated images in five directions based on the MU HandImages ASL dataset.

To further demonstrate the performance of the proposed MSMA gesture recognition algorithm, we rotate each image in five directions, as shown in Figure 13c, and obtain an extended dataset of 12,125 images (2425 × 5). We divide the dataset into a training set of 6062 images and a test set of 6063 images. Ten-fold cross-validation is used to obtain the optimal SVM model. The experimental results in Table 6 show that the proposed method achieves the highest recognition rate among the compared methods, 98.72%.

Table 6.

Comparison results on the MU HandImages ASL dataset.

4. Conclusions

We propose a novel hand gesture recognition method that can effectively recognize MSMA gestures against complex backgrounds. First, the SGM-Kmeans method is put forward to segment gestures from complex backgrounds. Then, the HOG feature and an improved 9ULBP feature are combined into a novel HOG-9ULBP gesture feature, which carries rich contour and texture information and is invariant to scale and rotation. Comparative experiments on three datasets show that the proposed method achieves higher recognition rates than the compared methods. In the future, we will incorporate depth information to address MSMA gesture recognition in three-dimensional space and investigate dynamic gesture recognition based on depth and RGB information.

Author Contributions

Conceptualization, J.L. and G.B.; methodology, J.H. and S.Z.; software, C.L.; validation, C.L., J.H. and Y.S.; writing—original draft preparation, J.L. and J.H.; writing—review and editing, J.L., C.L., J.H. and S.Z.; visualization, J.L. and J.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Scientific Research Project of Colleges and Universities in Henan Province (grant number 20A120011), the Henan Province Science and Technology Key Project (grant number 222102210233), the National Natural Science Foundation of China (grant numbers 11947058, 61973103, 61473265, 61803344) and the Outstanding Foreign Scientist Support Project in Henan Province of China (grant number GZS2019008).

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We thank Y. Liu for assistance with the experiments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chakraborty, H.K.; Sarma, D.; Bhuyan, M.K.; Macdorman, K.F. Review of constraints on vision-based gesture recognition for human–computer interaction. IET Comput. Vis. 2018, 12, 3–15. [Google Scholar] [CrossRef]

- Guo, L.; Lu, Z.; Yao, L. Human–Machine Interaction Sensing Technology Based on Hand Gesture Recognition: A Review. IEEE Trans.-Hum.-Mach. Syst. 2021, 51, 300–309. [Google Scholar] [CrossRef]

- Deafness and Hearing Loss. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss (accessed on 14 February 2022).

- Neiva, D.H.; Zanchettin, C. Gesture recognition: A review focusing on sign language in a mobile context. Expert Syst. Appl. 2018, 103, 159–183. [Google Scholar] [CrossRef]

- Setiawardhana; Hakkun, R.Y.; Baharuddin, A. Sign language learning based on Android for deaf and speech impaired people. In Proceedings of the 2015 International Electronics Symposium, Surabaya, Indonesia, 29–30 September 2015; pp. 114–117. [Google Scholar]

- Aly, W.; Aly, S.; Almotairi, S. User-independent american sign language alphabet recognition based on depth image and PCANet features. IEEE Access 2019, 7, 123138–123150. [Google Scholar] [CrossRef]

- Pisharady, P.K.; Saerbeck, M. Recent methods and databases in vision-based hand gesture recognition: A review. Comput. Vis. Image Underst. 2015, 141, 152–165. [Google Scholar] [CrossRef]

- Zou, C.; Liu, Y.; Wang, J.; Si, H. Deformable Part Model Based Hand Detection against Complex Backgrounds. Adv. Image Graph. Technol. 2016, 634, 149–159. [Google Scholar]

- Choudhury, A.; Talukdar, A.K.; Sarma, K.K. A novel hand segmentation method for multiple-hand gesture recognition system under complex background. In Proceedings of the International Conference on Signal Processing and Integrated Networks, Noida, India, 20–21 February 2014; pp. 136–140. [Google Scholar]

- Stergiopoulou, E.; Sgouropoulos, K.; Nikolaou, N.; Papamarkos, N.; Mitianoudis, N. Real time hand detection in a complex background. Eng. Appl. Artif. Intell. 2014, 35, 54–70. [Google Scholar] [CrossRef]

- Cheng, F.C.; Chen, B.H.; Huang, S.C. A background model re-initialization method based on sudden luminance change detection. Eng. Appl. Artif. Intell. 2015, 38, 138–146. [Google Scholar] [CrossRef]

- Ban, Y.; Kim, S.K.; Kim, S.; Toh, K.A.; Lee, S. Face detection based on skin color likelihood. Pattern Recognit. 2014, 47, 1573–1585. [Google Scholar] [CrossRef]

- Hu, M.K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 1962, 8, 179–187. [Google Scholar]

- Li, G.; Ou, Q.; Luo, J. An Improved Hu-moment Algorithm in Gesture Recognition Based on Kinect Sensor. Inf. Technol. J. 2013, 12, 2963–2968. [Google Scholar]

- Priyal, S.P.; Bora, P.K. A robust static hand gesture recognition system using geometry based normalizations and Krawtchouk moments. Pattern Recognit. 2013, 48, 2202–2219. [Google Scholar] [CrossRef]

- Al-Utaibi, K.A.; Abdulhussain, S.H.; Mahmmod, B.M.; Naser, M.A.; Alsabah, M.; Sait, S.M. Reliable recurrence algorithm for high-order Krawtchouk polynomials. Entropy 2021, 23, 1162. [Google Scholar] [CrossRef]

- Žemgulys, J.; Raudonis, V.; Maskeliūnas, R.; Damaševičius, R. Recognition of basketball referee signals from videos using Histogram of Oriented Gradients (HOG) and Support Vector Machine (SVM). Procedia Comput. Sci. 2018, 130, 953–960. [Google Scholar] [CrossRef]

- Maqueda, A.I.; Del-Blanco, C.R.; Jaureguizar, F. Human–Computer Interaction based on Visual Hand-Gesture Recognition using Volumetric Spatiograms of Local Binary Patterns. Comput. Vis. Image Underst. 2015, 141, 126–137. [Google Scholar] [CrossRef] [Green Version]

- Zhou, S.; Liu, Y.H.; Li, K.Q. Recognition of multi-scale multi-angle gestures based on HOG-LBP feature. In Proceedings of the Internationla Conference on Control, Automation, Robotics and Vision, Singapore, 18–21 November 2018; pp. 407–412. [Google Scholar]

- Yao, S.; Pan, S.; Wang, T.; Zheng, C.; Shen, W.; Chong, Y. A new pedestrian detection method based on combined HOG and LSS features. Neurocomputing 2015, 151, 1006–1014. [Google Scholar] [CrossRef]

- Muhammad, A.; Muhammad, J.I.; Iftikhar, A.; Madini, O.A.; Rayed, A.; Mohammad, B.; Muhammad, W. Real-time surveillance through face recognition using HOG and feedforward neural networks. IEEE Access 2019, 7, 121236–121244. [Google Scholar]

- Anwer, R.M.; Khan, F.S.; Weijer, J.; Molinier, M.; Laaksonen, J. Binary patterns encoded convolutional neural networks for texture recognition and remote sensing scene classification. ISPRS J. Photogramm. Remote Sens. 2018, 138, 74–85. [Google Scholar] [CrossRef] [Green Version]

- Singh, S.; Chintalacheruvu, S.C.K.; Garg, S.; Giri, Y.; Kumar, M. Efficient Face Identification and Authentication Tool for Biometric Attendance System. In Proceedings of the 2021 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; pp. 379–383. [Google Scholar]

- Zhu, C.; Wang, R. Local multiple patterns based multiresolution gray-scale and rotation invariant texture classification. Inf. Sci. 2012, 187, 93–109. [Google Scholar] [CrossRef]

- Konstantinidis, D.; Stathaki, T.; Argyriou, V.; Grammalidis, N. Building detection using enhanced HOG-LBP features and region refinement processes. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 1–18. [Google Scholar] [CrossRef] [Green Version]

- Kumar, M.; Rani, A.; Raheja, S.; Munjal, G. Automatic Brain Tumor Detection Using Machine Learning and Mixed Supervision. In Evolving Role of AI and IoMT in the Healthcare Market; Springer: Cham, Switzerland, 2021; pp. 247–262. [Google Scholar]

- Lahiani, H.; Neji, M. A survey on hand gesture recognition for mobile devices. Int. J. Intell. Syst. Technol. Appl. 2020, 19, 458–485. [Google Scholar]

- Zheng, C.H.; Pei, W.J.; Yan, Q.; Chong, Y.W. Pedestrian detection based on gradient and texture feature integration. Neurocomputing 2017, 228, 71–78. [Google Scholar] [CrossRef] [Green Version]

- Ren, Y.; Xie, X.; Li, G.; Wang, Z. Hand Gesture Recognition With Multiscale Weighted Histogram of Contour Direction Normalization for Wearable Applications. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 364–377. [Google Scholar] [CrossRef]

- Liang, Z.; Sun, Z.; Cao, M. Recognition of static human gesture based on radiant projection transform and Fourier transform. In Proceedings of the International Congress on Image and Signal Processing, Sanya, China, 27–30 May 2008; pp. 635–640. [Google Scholar]

- Huang, Y.; Yang, J. A multi-scale descriptor for real time RGB-D hand gesture recognition. Pattern Recognit. Lett. 2021, 144, 97–104.

- Zhou, Y.; Jiang, G.; Lin, Y. A novel finger and hand pose estimation technique for real-time hand gesture recognition. Pattern Recognit. 2015, 49, 102–114.

- Chakraborty, B.K.; Bhuyan, M.K.; Kumar, S. Combining image and global pixel distribution model for skin colour segmentation. Pattern Recognit. Lett. 2017, 88, 33–40.

- Kakumanu, P.; Makrogiannis, S.; Bourbakis, N. A survey of skin-color modeling and detection methods. Pattern Recognit. 2007, 40, 1106–1122.

- Sun, J.; Wu, X. Infrared target recognition based on improved joint local ternary pattern. Opt. Eng. 2016, 55, 53–101.

- Lategahn, H.; Gross, S.; Stehle, T.; Aach, T. Texture classification by modeling joint distributions of local patterns with Gaussian mixtures. IEEE Trans. Image Process. 2010, 19, 1548–1557.

- Xia, S.; Chen, P.; Zhang, J.; Li, X.; Wang, B. Utilization of rotation-invariant uniform LBP histogram distribution and statistics of connected regions in automatic image annotation based on multi-label learning. Neurocomputing 2017, 228, 11–18.

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Yang, Y.T.; Fishbain, B.; Hochbaum, D.S.; Norman, E.B.; Swanberg, E. The Supervised Normalized Cut Method for Detecting, Classifying, and Identifying Special Nuclear Materials. INFORMS J. Comput. 2013, 26, 45–58.

- Richhariya, B.; Tanveer, M. EEG signal classification using universum support vector machine. Expert Syst. Appl. 2018, 106, 169–182.

- Tang, H.; Liu, H.; Xiao, W.; Sebe, N. Fast and robust dynamic hand gesture recognition via key frames extraction and feature fusion. Neurocomputing 2019, 331, 424–433.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Kelly, D.; McDonald, J.; Markham, C. A person independent system for recognition of hand postures used in sign language. Pattern Recognit. Lett. 2010, 31, 1359–1368.

- Kumar, P.P.; Vadakkepat, P.; Loh, A.P. Hand posture and face recognition using a Fuzzy-Rough Approach. Int. J. Humanoid Robot. 2010, 7, 331–356.

- Gupta, S.; Trivedi, M.C.; Kamya, S. Hand Skin Classification from Other Skin Objects Using Multi-direction 3D Color-Texture Feature and Cascaded Neural Network Classifier. Adv. Intell. Syst. Comput. 2016, 409, 523–534.

- Pisharady, P.K.; Vadakkepat, P.; Loh, A.P. Attention Based Detection and Recognition of Hand Postures Against Complex Backgrounds. Int. J. Comput. Vis. 2013, 101, 403–419.

- Barczak, A.L.C.; Reyes, N.H.; Abastillas, M.; Piccio, A.; Susnjak, T. A New 2D Static Hand Gesture Colour Image Dataset for ASL Gestures. Ph.D. Thesis, Massey University, Palmerston North, New Zealand, 2011.

- Zhuang, H.; Yang, M.; Cui, Z.; Zheng, Q. A method for static hand gesture recognition based on non-negative matrix factorization and compressive sensing. IAENG Int. J. Comput. Sci. 2017, 44, 52–59.

- Aowal, M.A.; Zaman, A.S.; Rahman, S.M.M.; Hatzinakos, D. Static hand gesture recognition using discriminative 2D Zernike moments. In Proceedings of the TENCON IEEE Region 10 Conference, Bangkok, Thailand, 22–25 October 2014; pp. 1–5.

- Kumar, V.; Nandi, G.C.; Kala, R. Static hand gesture recognition using stacked Denoising Sparse Autoencoders. In Proceedings of the International Conference on Contemporary Computing, Noida, India, 7–9 August 2014; pp. 99–104.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).