Research on Human Gait Phase Recognition Algorithm Based on Multi-Source Information Fusion

Abstract

1. Introduction

2. Research Methodology

2.1. Acquisition of Multi-Source Motion Information

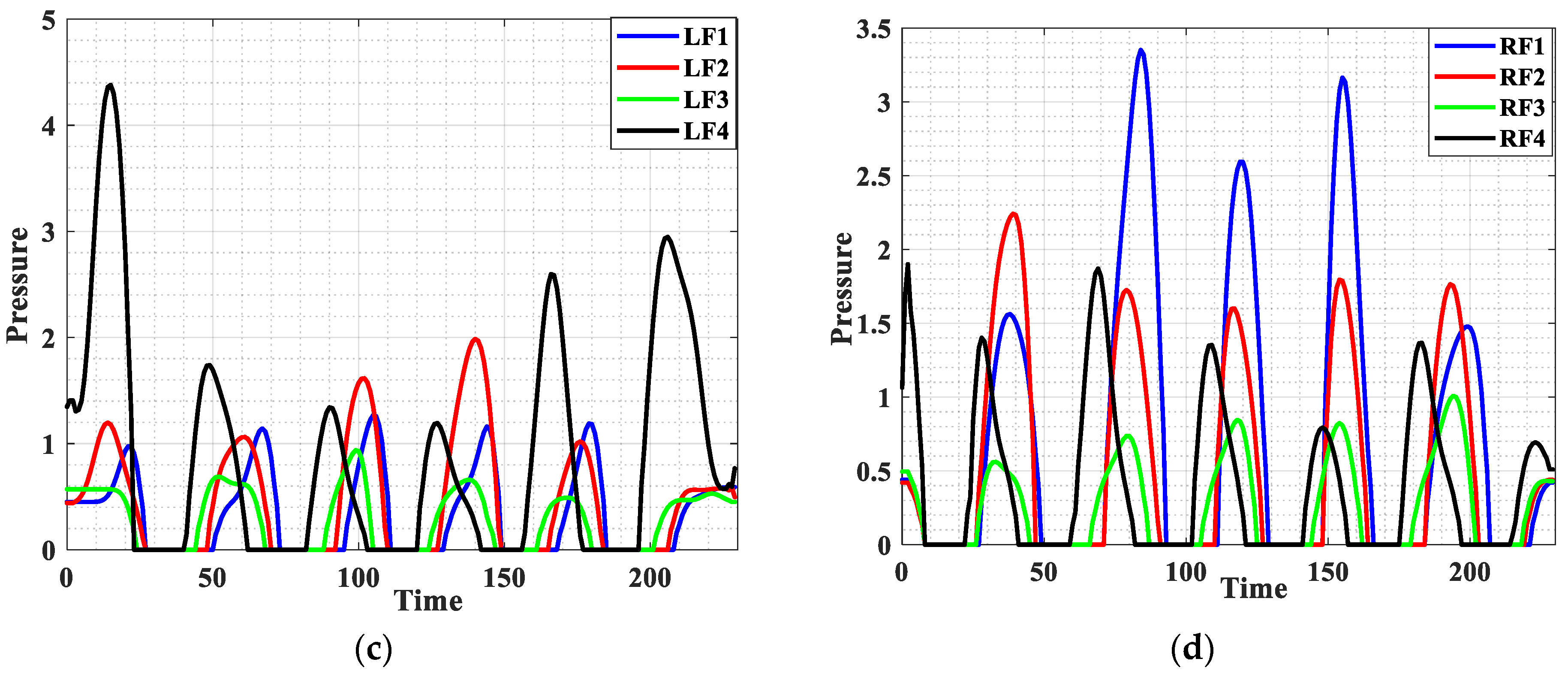

2.1.1. Plantar Pressure Information Collection Design

2.1.2. Inertial Data Sensing Unit

2.1.3. Microcontroller and Wireless Bluetooth Transmission Module

2.2. Data Preprocessing and Gait Analysis

2.2.1. Preprocessing of Sensor Signals

| Algorithm 1. An Improved Moving Average Filtering Algorithm |
|---|
| Input: original data; number of filtering repetitions; sliding-window width; serial number of the original data sampling points. Output: filtered data. |
| 1. for all … do |
| 2. … is obtained by Formula (5) |
| 3. … (… times filtered data) |
| 4. end for |
| 5. Initialization: …, … |
| 6. Search for all zero data segments in … |
| 7. while … do |
| 8. Set … |
| 9. Set … |
| 10. for all … do |
| 11. … |
| 12. … |
| 13. end for |
| 14. end while |
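Because the symbols in Algorithm 1 did not survive extraction, the Python sketch below shows only one plausible reading of it, under loudly stated assumptions: Formula (5) is taken to be a centered moving average with edge padding, steps 1 to 4 apply it a fixed number of times, and steps 6 to 14 are assumed to restore the zero-valued segments of the raw signal (for example, plantar pressure during swing) that smoothing would otherwise blur. The function names and default parameters (`width=5`, `passes=2`) are hypothetical; this is not the authors' implementation.

```python
from typing import List, Sequence, Tuple


def moving_average(x: Sequence[float], width: int) -> List[float]:
    """Centered moving average; indices past either end reuse the edge sample."""
    n = len(x)
    half = width // 2
    out: List[float] = []
    for i in range(n):
        # Clamp each window index into [0, n - 1] (edge padding).
        window = [x[min(max(j, 0), n - 1)] for j in range(i - half, i - half + width)]
        out.append(sum(window) / width)
    return out


def zero_segments(x: Sequence[float]) -> List[Tuple[int, int]]:
    """Return (start, end) pairs, end exclusive, for each run of exact zeros in x."""
    segments: List[Tuple[int, int]] = []
    i, n = 0, len(x)
    while i < n:
        if x[i] == 0:
            j = i
            while j < n and x[j] == 0:
                j += 1
            segments.append((i, j))
            i = j
        else:
            i += 1
    return segments


def improved_moving_average(x: Sequence[float], width: int = 5, passes: int = 2) -> List[float]:
    """Hypothetical reading of Algorithm 1: repeated smoothing, then zero-segment restoration."""
    y = [float(v) for v in x]
    for _ in range(passes):  # steps 1-4: filter `passes` times
        y = moving_average(y, width)
    for start, end in zero_segments(x):  # steps 6-14 (assumed): keep raw zero runs at zero
        for k in range(start, end):
            y[k] = 0.0
    return y
```

Restoring exact zeros is one simple way to keep repeated smoothing from spreading pressure into no-contact intervals; if the original algorithm does something else inside its while loop, only the second loop of `improved_moving_average` would need to change.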

2.2.2. Feature Extraction and Feature Fusion
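Feature-level fusion of accelerometer, gyroscope and plantar-force channels can be illustrated with a minimal Python sketch: features are computed per channel for one analysis window and then concatenated into a single vector. The features chosen here (mean, population standard deviation, minimum, maximum) and the channel names are illustrative assumptions, not the feature set used in the paper.

```python
import statistics
from typing import Dict, List, Sequence


def window_features(signal: Sequence[float]) -> List[float]:
    """Illustrative per-channel features for one analysis window."""
    return [
        statistics.fmean(signal),   # mean level
        statistics.pstdev(signal),  # variability within the window
        min(signal),
        max(signal),
    ]


def fuse_features(channels: Dict[str, Sequence[float]]) -> List[float]:
    """Feature-level fusion: concatenate per-channel features in sorted channel order."""
    fused: List[float] = []
    for name in sorted(channels):  # fixed ordering keeps feature positions stable
        fused.extend(window_features(channels[name]))
    return fused


# Hypothetical window with one accelerometer, one gyroscope and one force channel.
window = {
    "acc_z": [9.6, 9.9, 10.4, 9.8],
    "gyro_y": [0.1, 0.5, 0.9, 0.4],
    "force_heel": [0.0, 120.0, 340.0, 210.0],
}
feature_vector = fuse_features(window)  # 3 channels x 4 features = 12 values
```

Sorting the channel names is a design choice that guarantees every window produces features in the same positions, which a downstream classifier requires.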

2.2.3. Analysis of Gait Phase

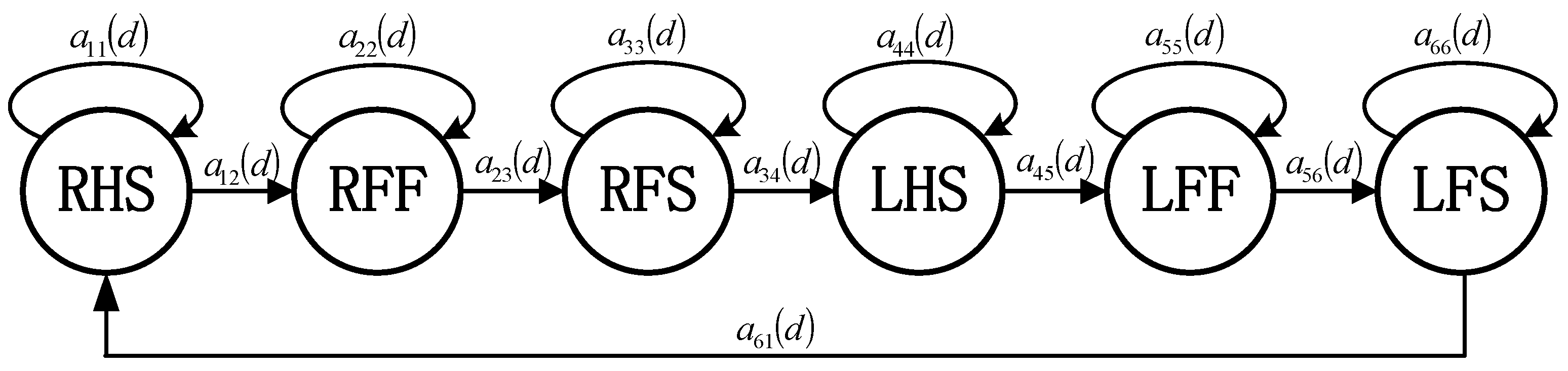

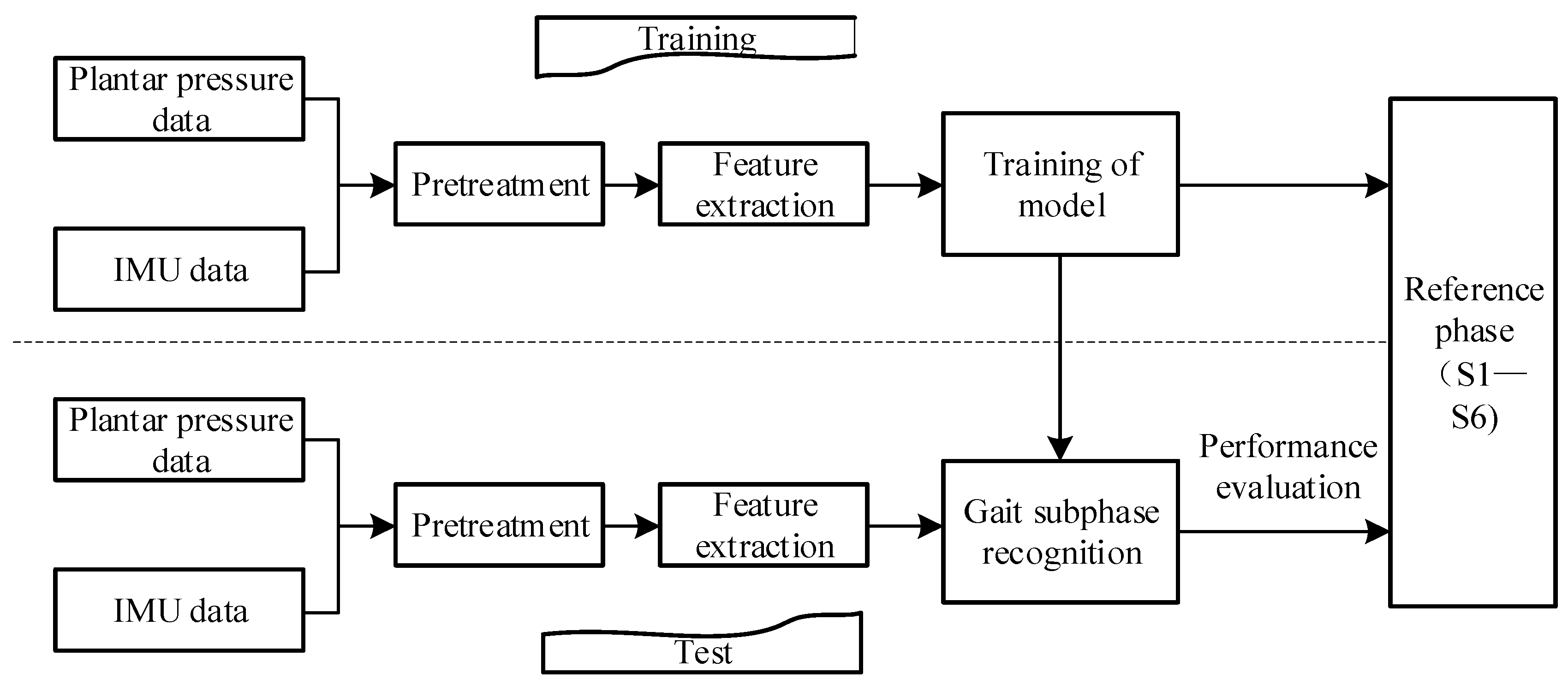

3. Gait Recognition Algorithm Based on Hidden Markov Model

3.1. Description of Hidden Markov Model with Dwell Time

3.2. Model Training

3.3. Subphase Recognition Based on the HMM
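Recognizing subphases with an HMM amounts to decoding the most likely hidden-state sequence from the observations. The Python sketch below is the standard Viterbi algorithm for a discrete-observation HMM, computed in log space; it does not model dwell time, which is what the paper's improved model adds. The three-state cyclic toy model (`START`, `TRANS`, `EMIT`) is hypothetical and only shows how a left-to-right gait cycle constrains the decoded path.

```python
import math
from typing import List, Sequence


def _log(p: float) -> float:
    """Log-probability with log(0) mapped to -inf (forbidden transition or emission)."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(obs: Sequence[int], start: Sequence[float],
            trans: Sequence[Sequence[float]], emit: Sequence[Sequence[float]]) -> List[int]:
    """Most likely hidden-state path of a discrete HMM, computed in log space."""
    n = len(start)
    delta = [_log(start[s]) + _log(emit[s][obs[0]]) for s in range(n)]
    backptrs: List[List[int]] = []
    for o in obs[1:]:
        ptr: List[int] = []
        new_delta: List[float] = []
        for s in range(n):
            # Best predecessor state r for arriving in state s at this step.
            r = max(range(n), key=lambda q: delta[q] + _log(trans[q][s]))
            ptr.append(r)
            new_delta.append(delta[r] + _log(trans[r][s]) + _log(emit[s][o]))
        backptrs.append(ptr)
        delta = new_delta
    # Trace back from the best final state.
    state = max(range(n), key=lambda s: delta[s])
    path = [state]
    for ptr in reversed(backptrs):
        state = ptr[state]
        path.append(state)
    return path[::-1]


# Hypothetical 3-state cyclic model: each state either persists or advances to the next.
START = [1.0, 0.0, 0.0]
TRANS = [[0.8, 0.2, 0.0],
         [0.0, 0.8, 0.2],
         [0.2, 0.0, 0.8]]
# Observation symbol k is most likely emitted by state k.
EMIT = [[0.8, 0.1, 0.1],
        [0.1, 0.8, 0.1],
        [0.1, 0.1, 0.8]]
```

With zero entries in `TRANS`, a path that skips a subphase gets probability zero, so the decoder can only move forward through the cycle. A dwell-time model would additionally score how long each state is occupied.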

4. Experiment and Result Analysis

4.1. Experimental Data Collection

4.2. Algorithm Performance Evaluation

4.2.1. Recognition Based on Single-Channel Sensors

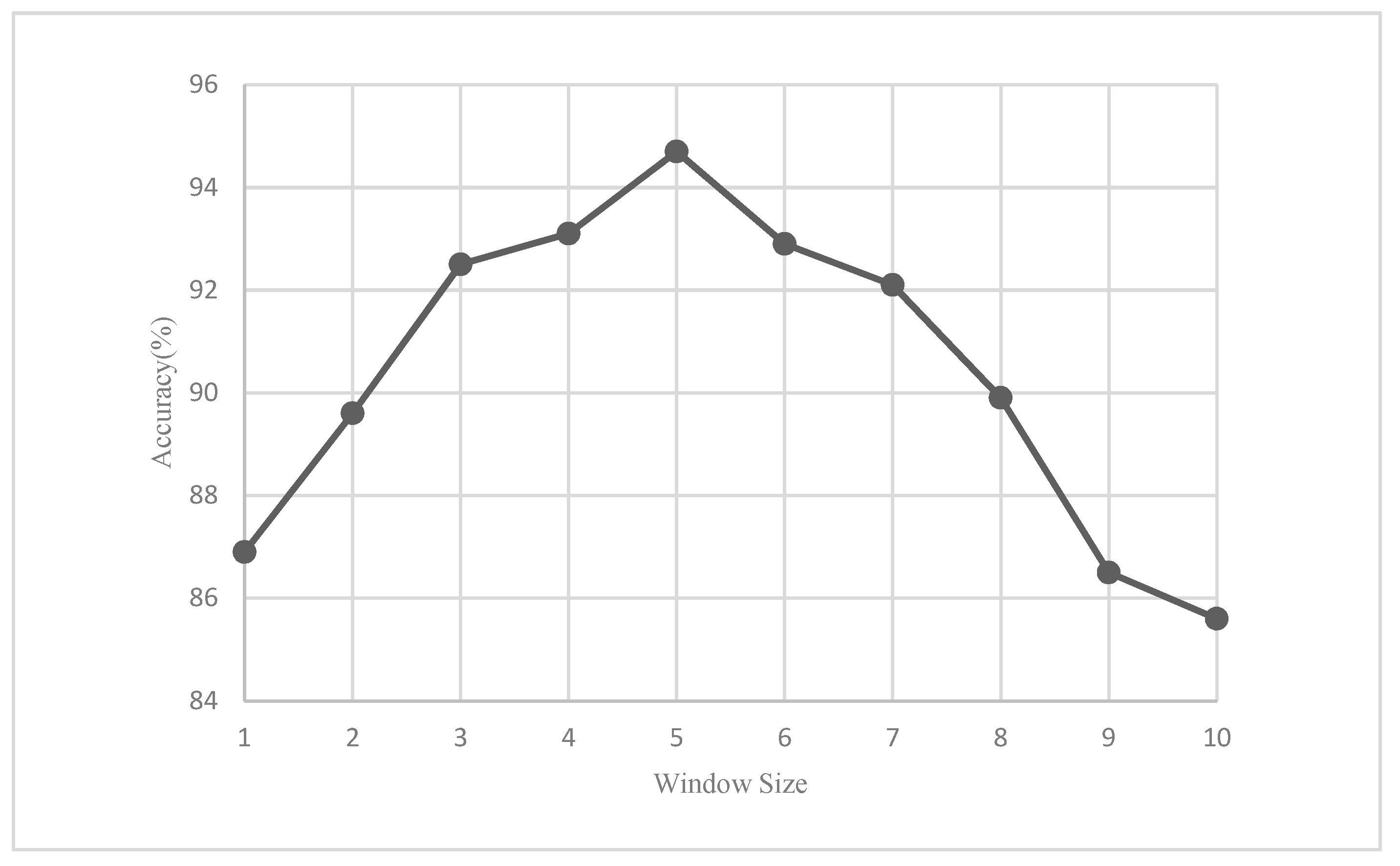

4.2.2. Window Size Evaluation

4.2.3. Gait Recognition Performance Evaluation of Different Classifiers

4.2.4. Leave-One-Out Validation
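In leave-one-subject-out validation, each subject's data is held out once for testing while the model is trained on the remaining subjects, so no subject contributes to both sets. A minimal Python sketch of the split follows; the data layout (a dict from subject ID to a list of samples) is an assumption.

```python
from typing import Dict, Iterator, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def leave_one_subject_out(
    samples_by_subject: Dict[int, Sequence[T]],
) -> Iterator[Tuple[int, List[T], List[T]]]:
    """Yield (held_out_subject, train_samples, test_samples) once per subject."""
    for held_out in sorted(samples_by_subject):
        # Training set: every sample from every other subject.
        train = [x for subject, samples in samples_by_subject.items()
                 if subject != held_out for x in samples]
        test = list(samples_by_subject[held_out])
        yield held_out, train, test
```

With eight subjects this yields eight folds; a model is fitted on each `train` list and scored on the matching `test` list, and the per-fold accuracies are then averaged.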

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Subject | Age (y) | Weight (kg) | Height (cm) | Shoe Size (EU) |
|---|---|---|---|---|
| 1 | 26 | 71 | 173 | 42 |
| 2 | 28 | 68 | 169 | 41.5 |
| 3 | 24 | 56 | 172 | 41.5 |
| 4 | 26 | 80 | 175 | 42 |
| 5 | 31 | 66 | 172 | 41.5 |
| 6 | 30 | 68 | 174 | 42 |
| 7 | 29 | 52 | 172 | 41.5 |
| 8 | 25 | 46 | 168 | 40 |

| Sensor(s) | S1 (%) | S2 (%) | S3 (%) | S4 (%) | S5 (%) | S6 (%) | Aggregated (%) |
|---|---|---|---|---|---|---|---|
| Acc | 87.9 | 51.3 | 77.8 | 86.3 | 85.8 | 93.1 | 83.9 |
| Gyro | 83.9 | 53.9 | 75.6 | 91.2 | 80.7 | 91.4 | 82.4 |
| Force | 90.3 | 50.4 | 80.3 | 92.5 | 88.1 | 95.7 | 86.6 |
| Acc + Gyro | 89.6 | 54.8 | 78.9 | 93.3 | 89.4 | 97.6 | 87.3 |
| Acc + Gyro + Force | 96.3 | 81.7 | 90.4 | 97.4 | 94.9 | 99.8 | 94.7 |

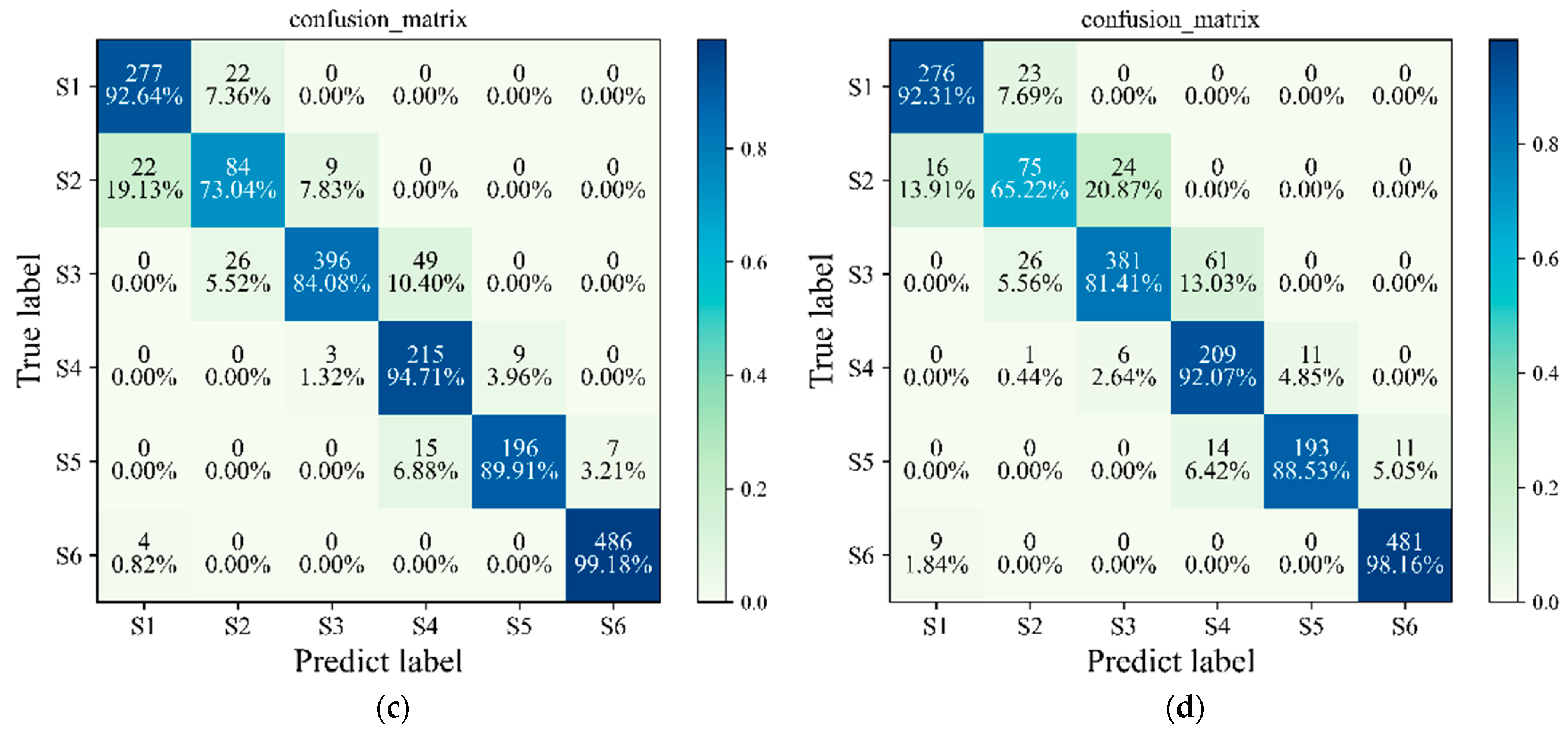

| Method | S1 (%) | S2 (%) | S3 (%) | S4 (%) | S5 (%) | S6 (%) | Aggregated (%) |
|---|---|---|---|---|---|---|---|
| NHMM | 96.3 | 81.7 | 90.4 | 97.4 | 94.9 | 99.8 | 94.7 |
| HMM | 94.9 | 60.0 | 82.3 | 93.8 | 91.7 | 99.6 | 90.2 |
| LSTM | 92.6 | 73.1 | 84.1 | 94.7 | 89.9 | 99.1 | 91.1 |
| SVM | 92.3 | 65.2 | 81.4 | 92.1 | 88.5 | 98.1 | 88.9 |

| Test Subject | Cross-Validation Accuracy (%) | Left-Out Subject Accuracy (%) |
|---|---|---|
| 1 | 93.4 | 86.8 |
| 2 | 93.9 | 84.2 |
| 3 | 94.7 | 85.9 |
| 4 | 92.9 | 82.1 |
| 5 | 93.1 | 80.4 |
| 6 | 94.7 | 84.6 |
| 7 | 92.3 | 85.9 |
| 8 | 93.4 | 84.6 |
| Average | 93.6 | 84.3 |
| Variance | 0.63 | 3.95 |
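The summary rows of the leave-one-out table can be checked by recomputing them from the per-subject accuracies. The short Python sketch below uses the standard `statistics` module. The means come out to 93.55% and 84.3125%, which round to the tabulated averages; the population variances come out to 0.625 and about 3.95, whereas the population standard deviations are about 0.79 and 1.99 (sample standard deviations about 0.85 and 2.12).

```python
import statistics
from typing import Dict, Sequence

# Per-subject accuracies (%) copied from the leave-one-out table.
CROSS_VALIDATION = [93.4, 93.9, 94.7, 92.9, 93.1, 94.7, 92.3, 93.4]
LEFT_OUT_SUBJECT = [86.8, 84.2, 85.9, 82.1, 80.4, 84.6, 85.9, 84.6]


def summarize(values: Sequence[float]) -> Dict[str, float]:
    """Mean plus population and sample dispersion measures."""
    return {
        "mean": statistics.fmean(values),
        "pvariance": statistics.pvariance(values),  # divides by n
        "pstdev": statistics.pstdev(values),        # sqrt of pvariance
        "stdev": statistics.stdev(values),          # sample SD, divides by n - 1
    }


for label, values in [("cross-validation", CROSS_VALIDATION),
                      ("left-out subject", LEFT_OUT_SUBJECT)]:
    print(label, {k: round(v, 2) for k, v in summarize(values).items()})
```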

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Song, Q.; Ma, T.; Yao, N.; Liu, R.; Wang, B. Research on Human Gait Phase Recognition Algorithm Based on Multi-Source Information Fusion. Electronics 2023, 12, 193. https://doi.org/10.3390/electronics12010193
