Few-Shot Learning for Multi-POSE Face Recognition via Hypergraph De-Deflection and Multi-Task Collaborative Optimization

Abstract

1. Introduction

- (1)

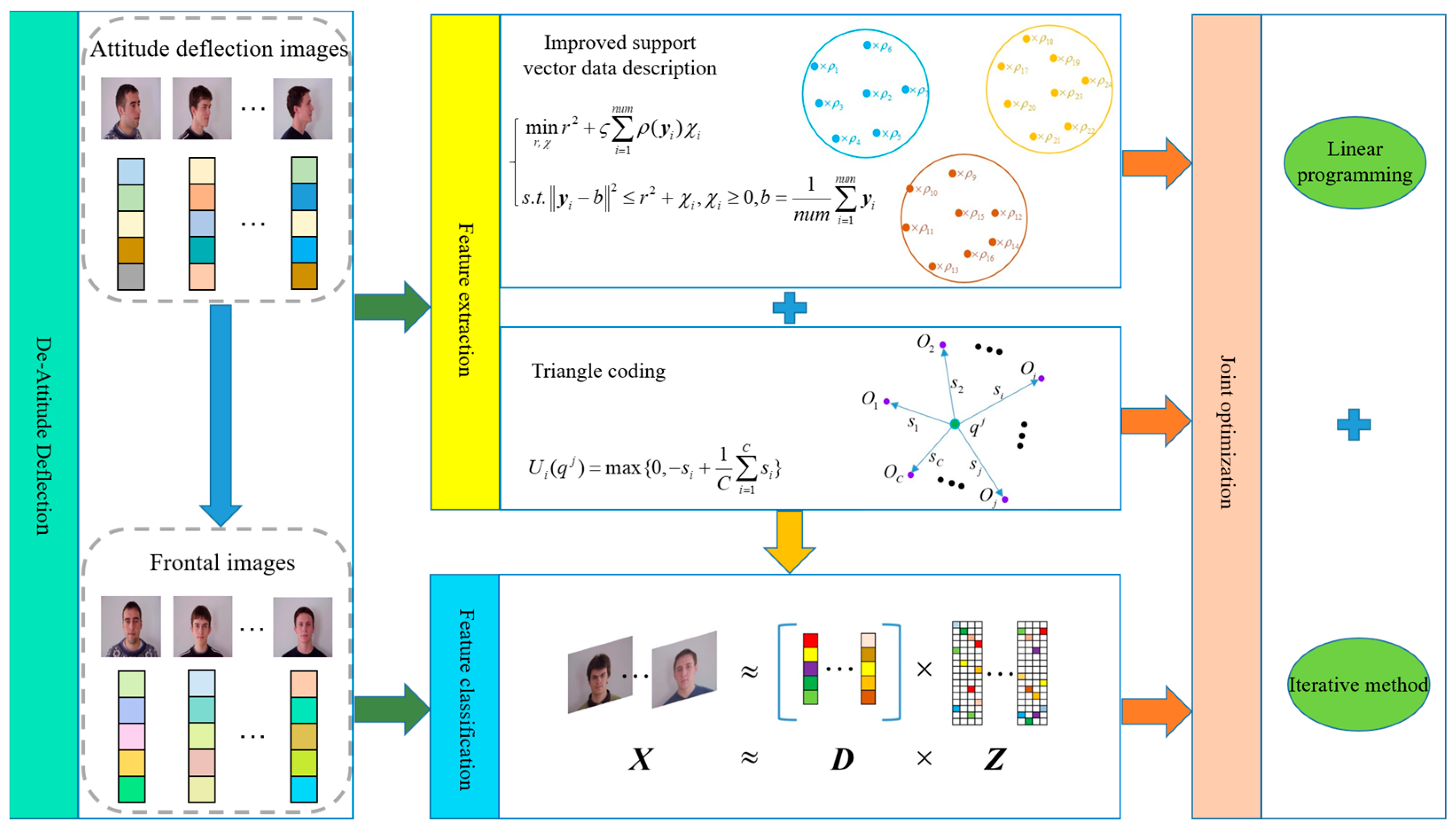

- A novel multi-pose face recognition framework based on hypergraph de-deflection is proposed. The framework first isolates the pose-free deflection images, then utilizes the proposed feature coding method based on improved support vector data description to extract the features of the pose-free deflection images, and recognizes the extracted features.

- (2)

- A new feature encoding method based on improved support vector data description is proposed. The feature encoding method utilizes the improved support vector data description and triangle encoding to make the extracted features more discriminative.

- (3)

- An effective feature extraction and feature classification optimization model is constructed, which makes it easy to obtain a solution closer to the global optimum and helps to improve the recognition performance of the algorithm.
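The triangle encoding named in contribution (2) can be illustrated with a small example. The following is a minimal sketch, assuming the commonly used formulation in which the k-th code entry is max(0, mean distance to all centers minus distance to the k-th center). The `centers` list and the function name `triangle_encode` are hypothetical; the centers stand in for whatever an SVDD-style model would supply, and the improved variant proposed in the paper may differ in detail.

```python
import math


def triangle_encode(x, centers):
    """Triangle encoding of a point against a set of centers.

    Assumption: `centers` is a plain list of points standing in for the
    centers that an SVDD-style description would provide.
    """
    # Euclidean distance from x to every center.
    dists = [math.dist(x, c) for c in centers]
    mean_dist = sum(dists) / len(dists)
    # Centers closer than average get a positive response; the rest get zero.
    return [max(0.0, mean_dist - d) for d in dists]


centers = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
code = triangle_encode((0.0, 0.0), centers)
print(code)  # [5.0, 0.0, 0.0]
```

Because every center farther away than the average distance is mapped to zero, the resulting code is sparse, which is one reason this kind of encoding tends to give more discriminative features than raw distances.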

2. Related Studies

2.1. Few-Shot Face Recognition

2.2. Non-Negative Matrix Factorization
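As background for this subsection, here is a minimal sketch of standard non-negative matrix factorization using the Lee-Seung multiplicative updates for the squared Frobenius error. It is a generic illustration, not the hypergraph-embedded model of Section 3.1. The function names, the rank, the iteration count, and the small `eps` guard are all illustrative assumptions.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def frob_error(v, w, h):
    """Squared Frobenius norm of V - WH."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, rank, iters=200, eps=1e-9, seed=0):
    """Factor a non-negative matrix V (n x m) as W (n x rank) times H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    # Strictly positive random initialization keeps the updates well defined.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), element-wise.
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)]
             for i in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise.
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(n)]
    return w, h


v = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]  # a rank-1 non-negative matrix
w, h = nmf(v, rank=1)
print(frob_error(v, w, h))  # close to zero for this rank-1 input
```

The updates only multiply by non-negative ratios, so W and H stay non-negative without any explicit projection step.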

2.3. Hypergraph Theory

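As a concrete illustration of the hypergraph notions in this subsection, the sketch below builds a normalized hypergraph Laplacian in the widely used form I - D_v^{-1/2} H W D_e^{-1} H^T D_v^{-1/2}, where H is the vertex-hyperedge incidence matrix, W holds the hyperedge weights, and D_v and D_e are the vertex and hyperedge degree matrices. This is an assumed, generic construction; how the paper forms its hyperedges for de-deflection is not stated in this section, and the function name and toy hypergraph are hypothetical.

```python
import math


def hypergraph_laplacian(n_vertices, hyperedges, weights=None):
    """Normalized hypergraph Laplacian as a list of rows.

    hyperedges: list of sets of vertex indices (each set is one hyperedge).
    weights: optional list of positive hyperedge weights (defaults to 1.0).
    """
    if weights is None:
        weights = [1.0] * len(hyperedges)
    # Vertex degree d(v): total weight of the hyperedges that contain v.
    d_v = [0.0] * n_vertices
    for edge, w in zip(hyperedges, weights):
        for v in edge:
            d_v[v] += w
    # Start from the identity and subtract the normalized adjacency term.
    lap = [[1.0 if i == j else 0.0 for j in range(n_vertices)]
           for i in range(n_vertices)]
    for edge, w in zip(hyperedges, weights):
        delta = len(edge)  # hyperedge degree: number of vertices it contains
        for i in edge:
            for j in edge:
                lap[i][j] -= w / (delta * math.sqrt(d_v[i] * d_v[j]))
    return lap


# Toy hypergraph: 4 vertices, one hyperedge of size 3 and one of size 2.
edges = [{0, 1, 2}, {2, 3}]
lap = hypergraph_laplacian(4, edges)
for row in lap:
    print([round(x, 3) for x in row])
```

A quick sanity check on any such Laplacian is that it is symmetric and that it maps the vector of square-rooted vertex degrees to zero.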

3. Proposed Method

3.1. Feature Discrimination Enhancement Method Based on Non-Negative Matrix Factorization and Hypergraph Embedding

3.2. Feature Coding Method Based on Improved Support Vector Data Description

3.3. Dictionary Learning-Based Classifier

3.4. Joint Optimization of the Feature Extraction and Feature Classification

4. Experiments

4.1. Dataset

4.2. Experimental Results and Analysis

4.2.1. Comparison with State-of-the-Art Methods

Experimental Setup

Experimental Results

4.2.2. Cross-Validation Experiment

4.2.3. The Effect of Feature Dimension on the Recognition Performance of the Algorithms

4.2.4. The Display of the Extracted Frontal Images

4.2.5. Ablation Experiment

Experimental Setup

Experimental Results

4.2.6. The Effect of Parameters on the Recognition Performance of HDMCO

4.2.7. Comparison of Computational Complexity

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jeevan, G.; Zacharias, G.C.; Nair, M.S.; Rajan, J. An empirical study of the impact of masks on face recognition. Pattern Recognit. 2022, 122, 108308.

2. Solovyev, R.; Wang, W.; Gabruseva, T. Weighted boxes fusion: Ensembling boxes from different object detection models. Image Vis. Comput. 2021, 107, 104117.

3. Wu, C.; Ju, B.; Wu, Y.; Xiong, N.N.; Zhang, S. WGAN-E: A generative adversarial networks for facial feature security. Electronics 2020, 9, 486.

4. Sengupta, S.; Chen, J.C.; Castillo, C.; Patel, V.M.; Chellappa, R.; Jacobs, D.W. Frontal to profile face verification in the wild. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

5. Khrissi, L.; El Akkad, N.; Satori, H.; Satori, K. Clustering method and sine cosine algorithm for image segmentation. Evol. Intell. 2022, 15, 669–682.

6. Zhao, J.; Xiong, L.; Cheng, Y.; Cheng, Y.; Li, J.; Zhou, L.; Xu, Y.; Karlekar, J.; Pranata, S.; Shen, S.; et al. 3D-aided deep pose-invariant face recognition. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18), Stockholm, Sweden, 13–19 July 2018; Volume 2, p. 11.

7. Zhao, J.; Xiong, L.; Li, J.; Xing, J.; Yan, S.; Feng, J. 3D-aided dual-agent gans for unconstrained face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2380–2394.

8. Zhao, J.; Cheng, Y.; Xu, Y.; Xiong, L.; Li, J.; Zhao, F.; Jayashree, K.; Pranata, S.; Shen, S.; Xing, J.; et al. Towards pose invariant face recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2207–2216.

9. Zhao, J.; Xiong, L.; Jayashree, K.; Li, J.; Zhao, F.; Wang, Z.; Pranata, S.; Shen, S.; Yan, S.; Feng, J. Dual-agent gans for photorealistic and identity preserving profile face synthesis. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

10. Zhao, J. Deep Learning for Human-Centric Image Analysis. Ph.D. Thesis, National University of Singapore, Singapore, 2018.

11. Khrissi, L.; El Akkad, N.; Satori, H.; Satori, K. An Efficient Image Clustering Technique based on Fuzzy C-means and Cuckoo Search Algorithm. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 423–432.

12. Ding, C.; Tao, D. Pose-invariant face recognition with homography-based normalization. Pattern Recognit. 2017, 66, 144–152.

13. Luan, X.; Geng, H.; Liu, L.; Li, W.; Zhao, Y.; Ren, M. Geometry structure preserving based gan for multi-pose face frontalization and recognition. IEEE Access 2020, 8, 104676–104687.

14. Liu, Y.; Chen, J. Unsupervised face frontalization for pose-invariant face recognition. Image Vis. Comput. 2021, 106, 104093.

15. Yin, Y.; Jiang, S.; Robinson, J.P.; Fu, Y. Dual-attention gan for large-pose face frontalization. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 249–256.

16. Lin, C.-H.; Huang, W.-J.; Wu, B.-F. Deep representation alignment network for pose-invariant face recognition. Neurocomputing 2021, 464, 485–496.

17. Yang, H.; Gong, C.; Huang, K.; Song, K.; Yin, Z. Weighted feature histogram of multi-scale local patch using multi-bit binary descriptor for face recognition. IEEE Trans. Image Process. 2021, 30, 3858–3871.

18. Tu, X.; Zhao, J.; Liu, Q.; Ai, W.; Guo, G.; Li, Z.; Liu, W.; Feng, J. Joint face image restoration and frontalization for recognition. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1285–1298.

19. Zhou, L.-F.; Du, Y.-W.; Li, W.-S.; Mi, J.-X.; Luan, X. Pose-robust face recognition with huffman-lbp enhanced by divide-and-rule strategy. Pattern Recognit. 2018, 78, 43–55.

20. Zhang, C.; Li, H.; Qian, Y.; Chen, C.; Zhou, X. Locality-constrained discriminative matrix regression for robust face identification. IEEE Trans. Neural Netw. Learn. Syst. 2020, 33, 1254–1268.

21. Gao, L.; Guan, L. A discriminative vectorial framework for multi-modal feature representation. IEEE Trans. Multimed. 2021, 24, 1503–1514.

22. Yang, S.; Deng, W.; Wang, M.; Du, J.; Hu, J. Orthogonality loss: Learning discriminative representations for face recognition. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2301–2314.

23. Huang, F.; Yang, M.; Lv, X.; Wu, F. Cosmos-loss: A face representation approach with independent supervision. IEEE Access 2021, 9, 36819–36826.

24. He, M.; Zhang, J.; Shan, S.; Kan, M.; Chen, X. Deformable face net for pose invariant face recognition. Pattern Recognit. 2020, 100, 107113.

25. Wang, Q.; Guo, G. Dsa-face: Diverse and sparse attentions for face recognition robust to pose variation and occlusion. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4534–4543.

26. He, R.; Li, Y.; Wu, X.; Song, L.; Chai, Z.; Wei, X. Coupled adversarial learning for semi-supervised heterogeneous face recognition. Pattern Recognit. 2021, 110, 107618.

27. Liu, H.; Zhu, X.; Lei, Z.; Cao, D.; Li, S.Z. Fast adapting without forgetting for face recognition. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 3093–3104.

28. Sun, J.; Yang, W.; Xue, J.H.; Liao, Q. An equalized margin loss for face recognition. IEEE Trans. Multimed. 2020, 22, 2833–2843.

29. Zhang, Y.; Fu, K.; Han, C.; Cheng, P.; Yang, S.; Yang, X. PGM-face: Pose-guided margin loss for cross-pose face recognition. Neurocomputing 2021, 460, 154–165.

30. Badave, H.; Kuber, M. Head pose estimation based robust multicamera face recognition. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 492–495.

31. Wang, L.; Li, S.; Wang, S.; Kong, D.; Yin, B. Hardness-aware dictionary learning: Boosting dictionary for recognition. IEEE Trans. Multimed. 2020, 23, 2857–2867.

32. Holkar, A.; Walambe, R.; Kotecha, K. Few-shot learning for face recognition in the presence of image discrepancies for limited multi-class datasets. Image Vis. Comput. 2022, 120, 104420.

33. Guan, Y.; Fang, J.; Wu, X. Multi-pose face recognition using cascade alignment network and incremental clustering. Signal Image Video Process. 2021, 15, 63–71.

34. Zhang, Y.; Fu, K.; Han, C.; Cheng, P. Identity-and-pose-guided generative adversarial network for face rotation. Neurocomputing 2021, 450, 33–47.

35. Qu, H.; Wang, Y. Application of optimized local binary pattern algorithm in small pose face recognition under machine vision. Multimed. Tools Appl. 2022, 81, 29367–29381.

36. Masi, I.; Chang, F.J.; Choi, J.; Harel, S.; Kim, J.; Kim, K.; Leksut, J.; Rawls, S.; Wu, Y.; Hassner, T.; et al. Learning pose-aware models for pose-invariant face recognition in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 379–393.

37. Elharrouss, O.; Almaadeed, N.; Al-Maadeed, S.; Khelifi, F. Pose-invariant face recognition with multitask cascade networks. Neural Comput. Appl. 2022, 34, 6039–6052.

38. Liu, J.; Li, Q.; Liu, M.; Wei, T. CP-GAN: A cross-pose profile face frontalization boosting pose-invariant face recognition. IEEE Access 2020, 8, 198659–198667.

39. Tao, Y.; Zheng, W.; Yang, W.; Wang, G.; Liao, Q. Frontal-centers guided face: Boosting face recognition by learning pose-invariant features. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2272–2283.

40. Gao, G.; Yu, Y.; Yang, M.; Chang, H.; Huang, P.; Yue, D. Cross-resolution face recognition with pose variations via multilayer locality-constrained structural orthogonal procrustes regression. Inf. Sci. 2020, 506, 19–36.

41. Wang, H.; Kawahara, Y.; Weng, C.; Yuan, J. Representative selection with structured sparsity. Pattern Recognit. 2017, 63, 268–278.

42. Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-pie. Image Vis. Comput. 2010, 28, 807–813.

43. Kemelmacher-Shlizerman, I.; Seitz, S.M.; Miller, D.; Brossard, E. The megaface benchmark: 1 million faces for recognition at scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4873–4882.

44. Gao, W.; Cao, B.; Shan, S.; Chen, X.; Zhou, D.; Zhang, X.; Zhao, D. The CAS-PEAL large-scale chinese face database and baseline evaluations. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2007, 38, 149–161.

45. Wolf, L.; Hassner, T.; Maoz, I. Face recognition in unconstrained videos with matched background similarity. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 529–534.

46. Zheng, T.; Deng, W. Cross-Pose LFW: A Database for Studying Cross-Pose Face Recognition in Unconstrained Environments; Technical Report; Beijing University of Posts and Telecommunications: Beijing, China, 2018; Volume 5.

47. Peer, P. CVL Face Database, Computer Vision Lab., Faculty of Computer and Information Science, University of Ljubljana, Slovenia. 2005. Available online: http://www.lrv.fri.uni-lj.si/facedb.html (accessed on 27 March 2023).

48. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

49. Duan, X.; Tan, Z.-H. A spatial self-similarity based feature learning method for face recognition under varying poses. Pattern Recognit. Lett. 2018, 111, 109–116.

50. Wu, H.; Gu, J.; Fan, X.; Li, H.; Xie, L.; Zhao, J. 3D-guided frontal face generation for pose-invariant recognition. ACM Trans. Intell. Syst. Technol. 2023, 14, 1–21.

51. Zhao, J.; Li, J.; Zhao, F.; Nie, X.; Chen, Y.; Yan, S.; Feng, J. Marginalized CNN: Learning deep invariant representations. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017.

52. Wang, X.; Wang, S.; Liang, Y.; Gu, L.; Lei, Z. RVFace: Reliable vector guided softmax loss for face recognition. IEEE Trans. Image Process. 2022, 31, 2337–2351.

53. Zhong, Y.; Deng, W.; Fang, H.; Hu, J.; Zhao, D.; Li, X.; Wen, D. Dynamic training data dropout for robust deep face recognition. IEEE Trans. Multimed. 2021, 24, 1186–1197.

54. Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4690–4699.

55. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

56. Sun, Y.; Wang, X.; Tang, X. Deep learning face representation from predicting 10,000 classes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1891–1898.

| Methods\Datasets | Multi-PIE [42] | MegaFace [43] | CAS-PEAL [44] | YTF [45] | CPLFW [46] | CVL [47] |
|---|---|---|---|---|---|---|
| ResNet [48] | 91.06 | 87.77 | 90.77 | 76.05 | 82.36 | 89.56 |
| Duan [49] | 87.68 | 82.55 | 89.37 | 73.88 | 81.06 | 85.17 |
| PGM-Face [29] | 90.23 | 85.33 | 90.01 | 73.20 | 78.58 | 88.06 |
| PCCycleGAN [14] | 88.99 | 85.01 | 88.85 | 75.16 | 80.66 | 87.38 |
| LDMR [20] | 91.33 | 86.23 | 92.29 | 77.22 | 85.06 | 90.23 |
| MH-DNCCM [21] | 91.78 | 85.89 | 90.46 | 76.11 | 82.50 | 87.98 |
| DRA-Net [16] | 93.06 | 83.99 | 93.16 | 76.47 | 82.97 | 90.01 |
| TGLBP [35] | 89.06 | 86.13 | 90.67 | 75.60 | 83.71 | 86.97 |
| MCN [37] | 92.01 | 86.24 | 91.37 | 77.05 | 85.20 | 88.31 |
| 3D-PIM [50] | 93.02 | 86.31 | 91.79 | 78.05 | 85.07 | 89.22 |
| WFH [17] | 91.55 | 86.01 | 92.70 | 74.88 | 84.01 | 87.34 |
| mCNN [51] | 88.68 | 85.17 | 87.58 | 72.89 | 80.59 | 81.38 |
| HADL [31] | 90.82 | 85.35 | 90.47 | 75.95 | 84.35 | 86.40 |
| RVFace [52] | 92.10 | 88.03 | 93.17 | 78.05 | 85.97 | 90.03 |
| DTDD [53] | 90.39 | 88.37 | 93.55 | 77.35 | 86.23 | 88.80 |
| ArcFace [54] | 95.89 | 91.37 | 92.13 | 83.40 | 84.88 | 87.23 |
| VGG [55] | 95.14 | 89.29 | 90.92 | 81.05 | 83.06 | 85.71 |
| DeepID [56] | 93.88 | 87.58 | 88.15 | 78.45 | 83.46 | 85.12 |
| HDMCO [ours] | 95.19 | 90.67 | 95.88 | 80.34 | 88.41 | 92.19 |

| Methods\Datasets | Multi-PIE [42] | MegaFace [43] | CAS-PEAL [44] | YTF [45] | CPLFW [46] | CVL [47] |
|---|---|---|---|---|---|---|
| ResNet [48] | 78.35 | 70.68 | 76.18 | 82.67 | 76.83 | 81.33 |
| Duan [49] | 76.02 | 65.43 | 72.67 | 77.61 | 73.25 | 78.69 |
| PGM-Face [29] | 79.66 | 73.14 | 75.68 | 75.60 | 77.25 | 80.39 |
| PCCycleGAN [14] | 81.64 | 72.95 | 77.62 | 73.08 | 76.89 | 76.28 |
| LDMR [20] | 83.58 | 76.89 | 72.99 | 75.03 | 75.88 | 79.01 |
| MH-DNCCM [21] | 82.05 | 73.91 | 70.03 | 73.26 | 74.19 | 80.06 |
| DRA-Net [16] | 85.11 | 80.34 | 77.68 | 79.32 | 80.64 | 81.39 |
| TGLBP [35] | 80.24 | 78.92 | 78.33 | 75.17 | 78.38 | 79.68 |
| MCN [37] | 83.67 | 80.20 | 81.08 | 77.68 | 80.34 | 78.18 |
| 3D-PIM [50] | 85.02 | 81.35 | 81.69 | 79.67 | 77.58 | 76.64 |
| WFH [17] | 82.67 | 78.54 | 76.44 | 77.39 | 79.14 | 75.89 |
| mCNN [51] | 80.33 | 78.67 | 79.58 | 78.99 | 80.01 | 78.66 |
| HADL [31] | 78.89 | 80.59 | 79.44 | 79.88 | 78.46 | 80.62 |
| RVFace [52] | 82.24 | 80.30 | 77.89 | 79.01 | 81.33 | 80.23 |
| DTDD [53] | 80.95 | 80.68 | 79.25 | 79.31 | 80.64 | 82.07 |
| ArcFace [54] | 82.53 | 85.01 | 82.34 | 80.09 | 81.69 | 80.60 |
| VGG [55] | 79.28 | 81.02 | 78.08 | 75.89 | 79.88 | 79.47 |
| DeepID [56] | 81.08 | 82.16 | 79.66 | 81.06 | 81.06 | 78.30 |
| HDMCO [ours] | 88.37 | 85.67 | 86.17 | 85.60 | 88.32 | 88.97 |

| Methods\Datasets | Multi-PIE [42] | MegaFace [43] | CAS-PEAL [44] | YTF [45] | CPLFW [46] | CVL [47] |
|---|---|---|---|---|---|---|
| ResNet [48] | 89.26 | 86.08 | 86.92 | 78.34 | 80.16 | 86.57 |
| Duan [49] | 85.06 | 80.38 | 88.15 | 75.06 | 79.68 | 86.23 |
| PGM-Face [29] | 89.32 | 86.42 | 88.95 | 75.01 | 77.19 | 86.27 |
| PCCycleGAN [14] | 86.27 | 85.39 | 86.19 | 77.12 | 79.18 | 85.61 |
| LDMR [20] | 88.97 | 85.09 | 90.87 | 75.80 | 83.97 | 87.18 |
| MH-DNCCM [21] | 88.39 | 82.17 | 88.69 | 78.02 | 80.05 | 85.10 |
| DRA-Net [16] | 90.86 | 82.34 | 92.05 | 75.24 | 80.34 | 87.19 |
| TGLBP [35] | 86.95 | 85.06 | 91.21 | 75.32 | 81.99 | 85.43 |
| MCN [37] | 90.67 | 83.97 | 89.68 | 76.38 | 83.97 | 87.32 |
| 3D-PIM [50] | 90.98 | 85.11 | 90.08 | 75.86 | 83.46 | 87.68 |
| WFH [17] | 89.30 | 83.67 | 90.79 | 74.02 | 83.97 | 86.22 |
| mCNN [51] | 86.91 | 85.06 | 86.40 | 70.66 | 79.30 | 79.66 |
| HADL [31] | 88.69 | 84.39 | 88.67 | 76.08 | 83.97 | 85.88 |
| RVFace [52] | 90.68 | 86.92 | 91.86 | 78.68 | 85.02 | 88.60 |
| DTDD [53] | 88.67 | 87.08 | 92.43 | 76.18 | 85.15 | 87.67 |
| ArcFace [54] | 93.91 | 90.28 | 90.88 | 82.91 | 82.69 | 86.41 |
| VGG [55] | 95.86 | 88.06 | 88.67 | 79.38 | 81.67 | 85.02 |
| DeepID [56] | 93.05 | 86.14 | 85.97 | 77.68 | 81.97 | 84.67 |
| HDMCO [ours] | 96.08 | 91.35 | 94.86 | 81.57 | 88.05 | 92.30 |

| Methods\Datasets | Multi-PIE [42] | MegaFace [43] | CAS-PEAL [44] | YTF [45] | CPLFW [46] | CVL [47] |
|---|---|---|---|---|---|---|
| ResNet [48] | 81.32 | 78.92 | 81.24 | 68.05 | 73.68 | 80.92 |
| Duan [49] | 80.68 | 75.60 | 80.38 | 63.58 | 71.99 | 78.96 |
| PGM-Face [29] | 77.68 | 79.31 | 77.59 | 72.38 | 68.56 | 77.90 |
| PCCycleGAN [14] | 76.82 | 75.66 | 74.97 | 68.33 | 69.98 | 78.62 |
| LDMR [20] | 80.38 | 83.29 | 80.64 | 73.20 | 76.82 | 80.93 |
| MH-DNCCM [21] | 81.60 | 81.32 | 83.67 | 64.51 | 71.93 | 79.71 |
| DRA-Net [16] | 83.64 | 83.16 | 81.46 | 66.49 | 74.69 | 80.97 |
| TGLBP [35] | 80.32 | 80.97 | 76.91 | 64.98 | 72.64 | 77.62 |
| MCN [37] | 80.06 | 79.86 | 80.46 | 71.61 | 71.62 | 78.59 |
| 3D-PIM [50] | 81.30 | 80.61 | 78.67 | 70.38 | 73.92 | 78.61 |
| WFH [17] | 81.69 | 80.67 | 81.33 | 63.89 | 73.68 | 79.68 |
| mCNN [51] | 79.37 | 75.31 | 76.82 | 63.99 | 71.68 | 70.29 |
| HADL [31] | 80.34 | 73.97 | 80.34 | 73.61 | 73.61 | 77.85 |
| RVFace [52] | 77.31 | 76.89 | 83.89 | 75.06 | 75.38 | 79.33 |
| DTDD [53] | 78.39 | 80.67 | 82.58 | 73.68 | 78.99 | 78.99 |
| ArcFace [54] | 82.67 | 78.59 | 81.37 | 77.31 | 74.63 | 77.97 |
| VGG [55] | 80.69 | 77.98 | 80.59 | 73.68 | 73.91 | 76.89 |
| DeepID [56] | 83.99 | 77.86 | 78.61 | 71.68 | 77.35 | 77.95 |
| HDMCO [ours] | 89.30 | 85.07 | 85.99 | 78.95 | 83.93 | 83.97 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, X.; Liao, M.; Chen, L.; Hu, J. Few-Shot Learning for Multi-POSE Face Recognition via Hypergraph De-Deflection and Multi-Task Collaborative Optimization. Electronics 2023, 12, 2248. https://doi.org/10.3390/electronics12102248