Quantum Machine Learning—An Overview

Abstract

1. Introduction

1.1. Motivation and Contribution

- RQ1: To what extent is classical computing combined with quantum computing?

- RQ2: Does quantum machine learning provide speed increases compared to classical machine learning?

- RQ3: Does combining classical and quantum approaches lead to increased accuracy, or are there cases where classical machine learning performs better?

- RQ4: What are the advantages and limitations of quantum machine learning?

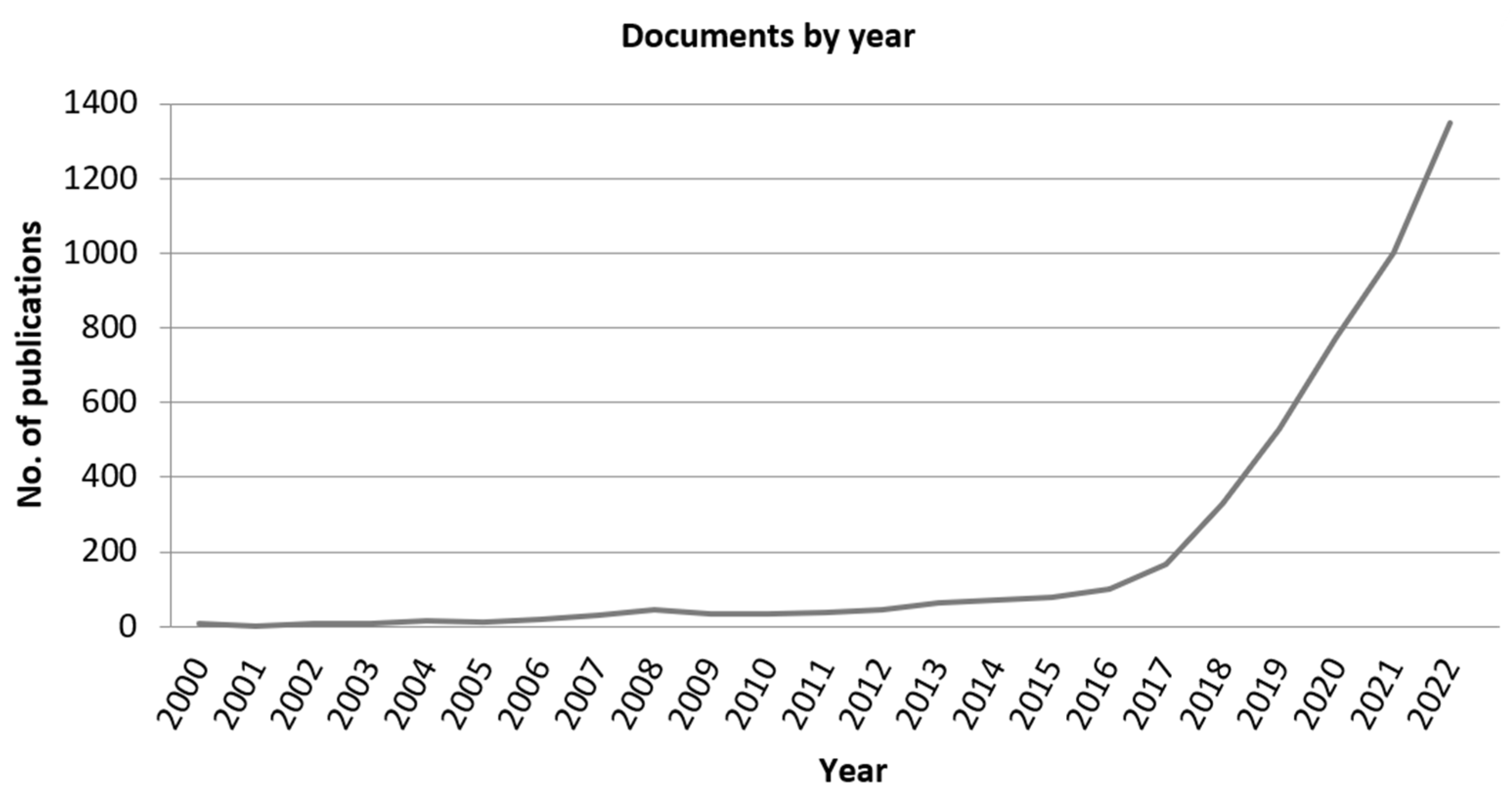

1.2. Literature Analysis

2. Quantum Machine Learning—The Basic Concept

3. Quantum Machine Learning—Algorithms and Applications

SVM Kernels and Quantum SVM

4. Quantum Learning Methods

5. Quantum Machine Learning—Advantages and Limitations

6. A Preliminary Experimental Study

6.1. Datasets Acquisition

6.2. Experimental Results and Discussion

7. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Dataset Name | Number of Samples | Number of Features | Number of Classes |
|---|---|---|---|
| Breast cancer | 699 | 10 | 2 |
| Ionosphere | 351 | 34 | 2 |
| Spam Base | 4601 | 57 | 2 |

| Folds | QSVM Roc_Auc | QSVM Accuracy | QSVM F1-Score | SVM Roc_Auc | SVM Accuracy | SVM F1-Score |
|---|---|---|---|---|---|---|
| Fold 1 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 | 0.97 |
| Fold 2 | 0.96 | 0.95 | 0.96 | 0.93 | 0.94 | 0.95 |
| Fold 3 | 0.96 | 0.95 | 0.96 | 0.94 | 0.95 | 0.96 |
| Fold 4 | 0.96 | 0.97 | 0.97 | 0.93 | 0.94 | 0.95 |
| Fold 5 | 0.96 | 0.95 | 0.96 | 0.98 | 0.98 | 0.98 |
| Fold 6 | 0.92 | 0.94 | 0.95 | 0.96 | 0.97 | 0.97 |
| Fold 7 | 0.91 | 0.91 | 0.93 | 0.97 | 0.98 | 0.98 |
| Fold 8 | 0.92 | 0.89 | 0.91 | 1.0 | 1.0 | 1.0 |
| Fold 9 | 0.92 | 0.92 | 0.94 | 0.96 | 0.97 | 0.97 |
| Fold 10 | 0.95 | 0.94 | 0.95 | 0.92 | 0.91 | 0.92 |
| Mean ± Std | 0.94 ± 0.0226 | 0.94 ± 0.0256 | 0.95 ± 0.0189 | 0.96 ± 0.0255 | 0.96 ± 0.0260 | 0.97 ± 0.0217 |
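
The "Mean ± Std" row can be checked by hand from the per-fold values. The sketch below is not the authors' code: it simply copies the QSVM Roc_Auc column of the table above and assumes the reported spread is the sample standard deviation (denominator n − 1). That assumption appears consistent with the printed 0.94 ± 0.0226, although the published figures were presumably computed from unrounded fold scores. The names `qsvm_roc_auc` and `summarize` are illustrative only.

```python
from statistics import mean, stdev

# QSVM Roc_Auc values for Folds 1-10, copied from the table above.
qsvm_roc_auc = [0.97, 0.96, 0.96, 0.96, 0.96, 0.92, 0.91, 0.92, 0.92, 0.95]


def summarize(scores):
    """Return (mean, sample standard deviation) of the per-fold scores."""
    # statistics.stdev divides by n - 1 (sample standard deviation);
    # this choice is an assumption about how the table was produced.
    return mean(scores), stdev(scores)


fold_mean, fold_std = summarize(qsvm_roc_auc)
print(f"{fold_mean:.2f} ± {fold_std:.4f}")  # prints "0.94 ± 0.0226"
```

Passing any other column of the per-fold tables to `summarize` gives the same kind of check for that metric.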

| Folds | QSVM Roc_Auc | QSVM Accuracy | QSVM F1-Score | SVM Roc_Auc | SVM Accuracy | SVM F1-Score |
|---|---|---|---|---|---|---|
| Fold 1 | 0.87 | 0.86 | 0.94 | 0.81 | 0.88 | 0.89 |
| Fold 2 | 0.89 | 0.88 | 0.95 | 0.91 | 0.91 | 0.95 |
| Fold 3 | 0.66 | 0.80 | 0.86 | 0.73 | 0.77 | 0.71 |
| Fold 4 | 0.87 | 0.88 | 0.90 | 0.70 | 0.74 | 0.73 |
| Fold 5 | 0.82 | 0.85 | 0.84 | 0.75 | 0.82 | 0.79 |
| Fold 6 | 0.90 | 0.91 | 0.86 | 0.80 | 0.77 | 0.65 |
| Fold 7 | 0.89 | 0.88 | 0.94 | 0.76 | 0.80 | 0.83 |
| Fold 8 | 0.72 | 0.74 | 0.81 | 0.84 | 0.85 | 0.82 |
| Fold 9 | 0.83 | 0.82 | 0.88 | 0.73 | 0.85 | 0.86 |
| Fold 10 | 0.72 | 0.77 | 0.80 | 0.84 | 0.88 | 0.88 |
| Mean ± Std | 0.82 ± 0.0862 | 0.84 ± 0.0549 | 0.88 ± 0.0539 | 0.78 ± 0.0646 | 0.83 ± 0.0562 | 0.81 ± 0.0921 |

| Folds | QSVM Roc_Auc | QSVM Accuracy | QSVM F1-Score | SVM Roc_Auc | SVM Accuracy | SVM F1-Score |
|---|---|---|---|---|---|---|
| Fold 1 | 0.80 | 0.80 | 0.79 | 0.78 | 0.77 | 0.71 |
| Fold 2 | 0.79 | 0.81 | 0.81 | 0.84 | 0.84 | 0.86 |
| Fold 3 | 0.86 | 0.86 | 0.86 | 0.85 | 0.85 | 0.91 |
| Fold 4 | 0.81 | 0.81 | 0.84 | 0.81 | 0.81 | 0.83 |
| Fold 5 | 0.85 | 0.85 | 0.84 | 0.81 | 0.81 | 0.84 |
| Fold 6 | 0.85 | 0.85 | 0.89 | 0.82 | 0.82 | 0.84 |
| Fold 7 | 0.87 | 0.87 | 0.84 | 0.83 | 0.83 | 0.82 |
| Fold 8 | 0.85 | 0.85 | 0.83 | 0.79 | 0.79 | 0.74 |
| Fold 9 | 0.81 | 0.82 | 0.83 | 0.83 | 0.82 | 0.90 |
| Fold 10 | 0.84 | 0.84 | 0.87 | 0.80 | 0.80 | 0.81 |
| Mean ± Std | 0.83 ± 0.0279 | 0.83 ± 0.0241 | 0.83 ± 0.0287 | 0.81 ± 0.0222 | 0.81 ± 0.0237 | 0.82 ± 0.0626 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tychola, K.A.; Kalampokas, T.; Papakostas, G.A. Quantum Machine Learning—An Overview. Electronics 2023, 12, 2379. https://doi.org/10.3390/electronics12112379
