Learning Strategies for Sensitive Content Detection

Abstract

1. Introduction

2. Difference with Other Surveys

Contribution of This Work

- Few of the papers published to date on sensitive-content detection give an overall picture of the research contributions in the field.

- To the best of our knowledge, this is the first comprehensive systematic study to bring together the main research contributions in this field, covering content-based strategies that use video/image (visual and auditory) features as well as textual (hashes and keywords) features.

- The reviewed works are classified according to the methodology they propose, which facilitates comparison between them and selection of the best-suited ones.

- This review will help new researchers identify the issues and challenges that the community is addressing. It also discusses gaps that can guide future researchers towards new directions in sensitive-content detection.

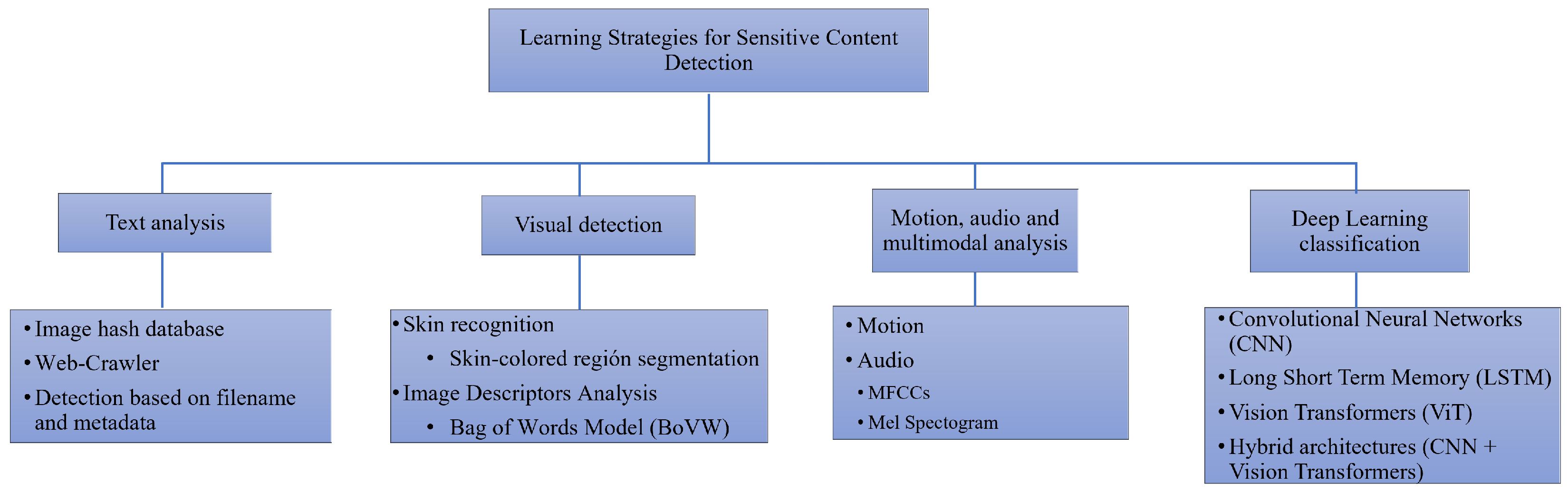

3. Classification of Strategies to Detect Sexually Sensitive Content

4. Strategies Based on Text Analysis

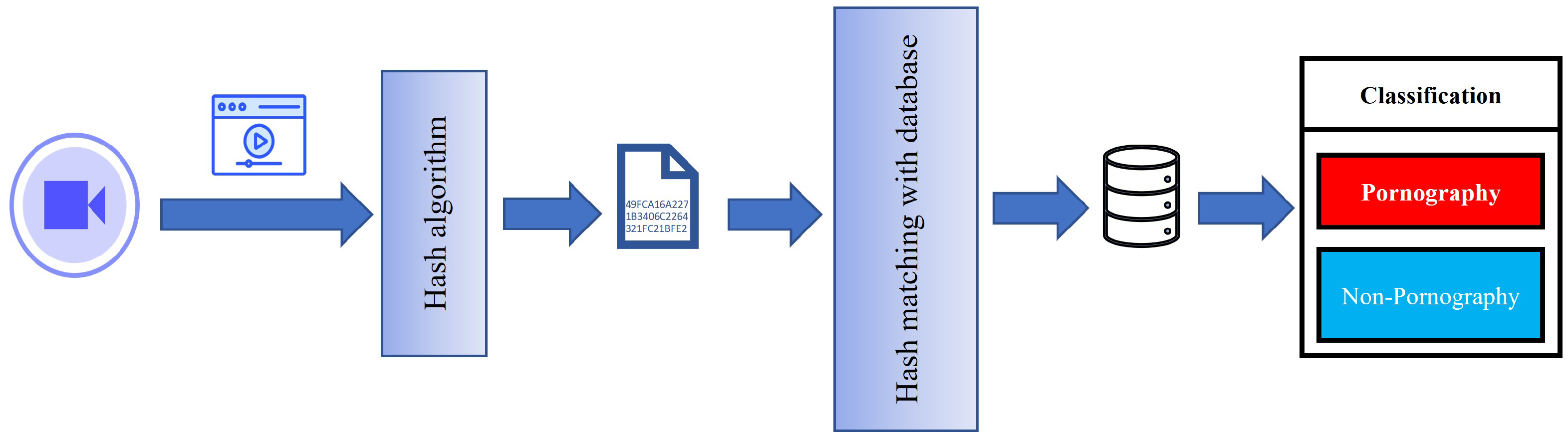

4.1. Strategies Based on Image Hash Database

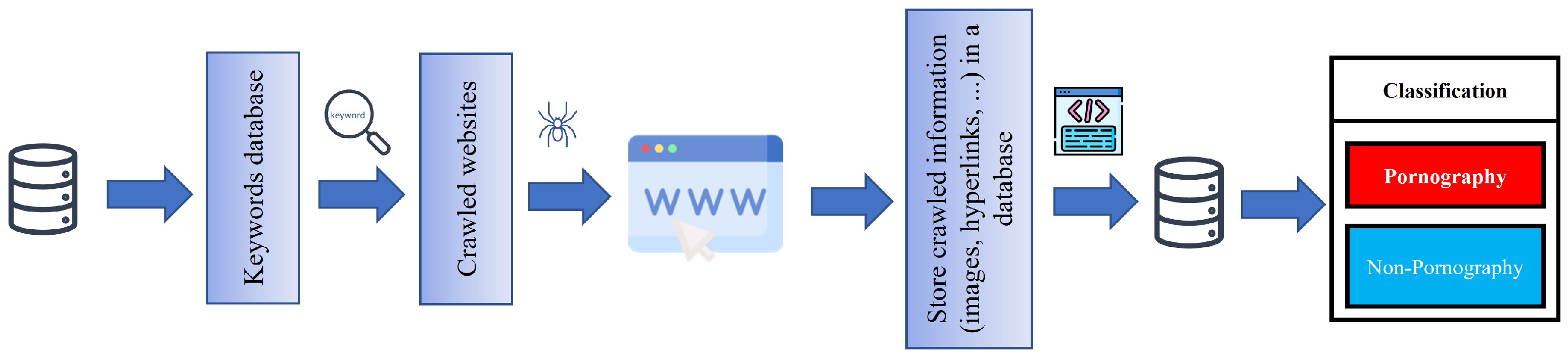

4.2. Strategies Based on Web-Crawlers

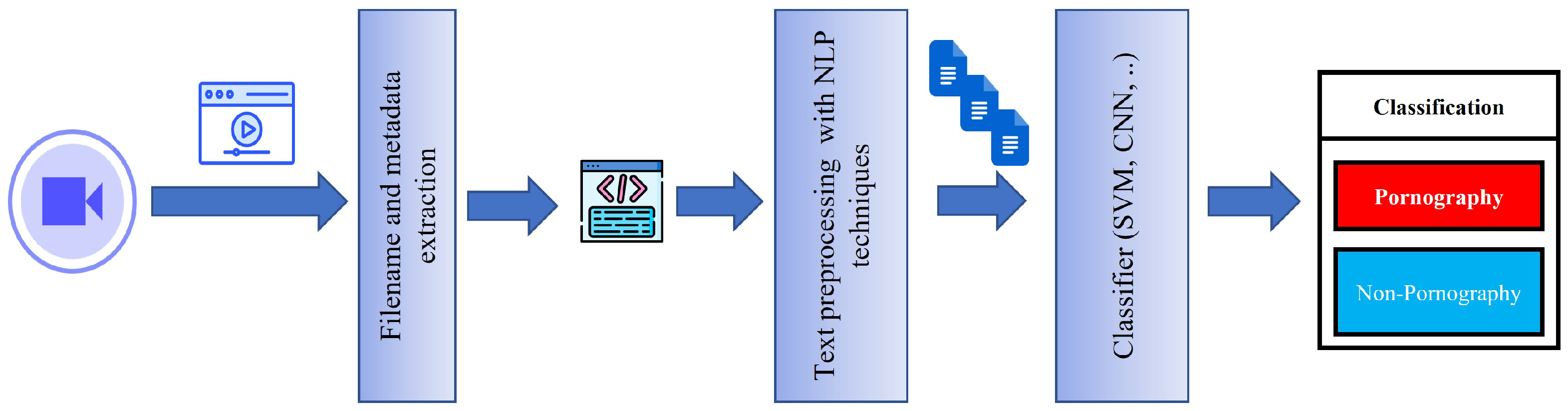

4.3. Strategies Based on Filename and Metadata

5. Strategies Based on Visual Detection

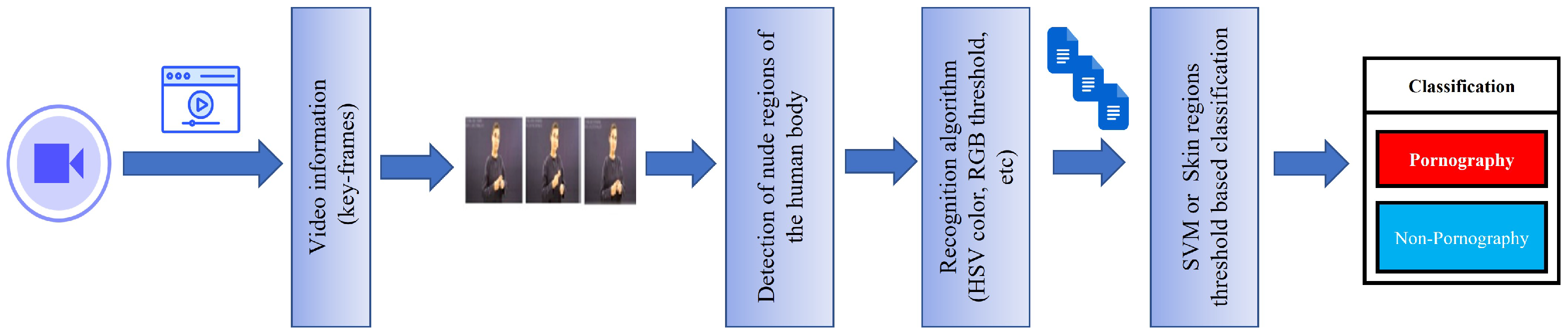

5.1. Strategies Based on Skin Recognition

5.2. Strategies Based on Image Descriptor

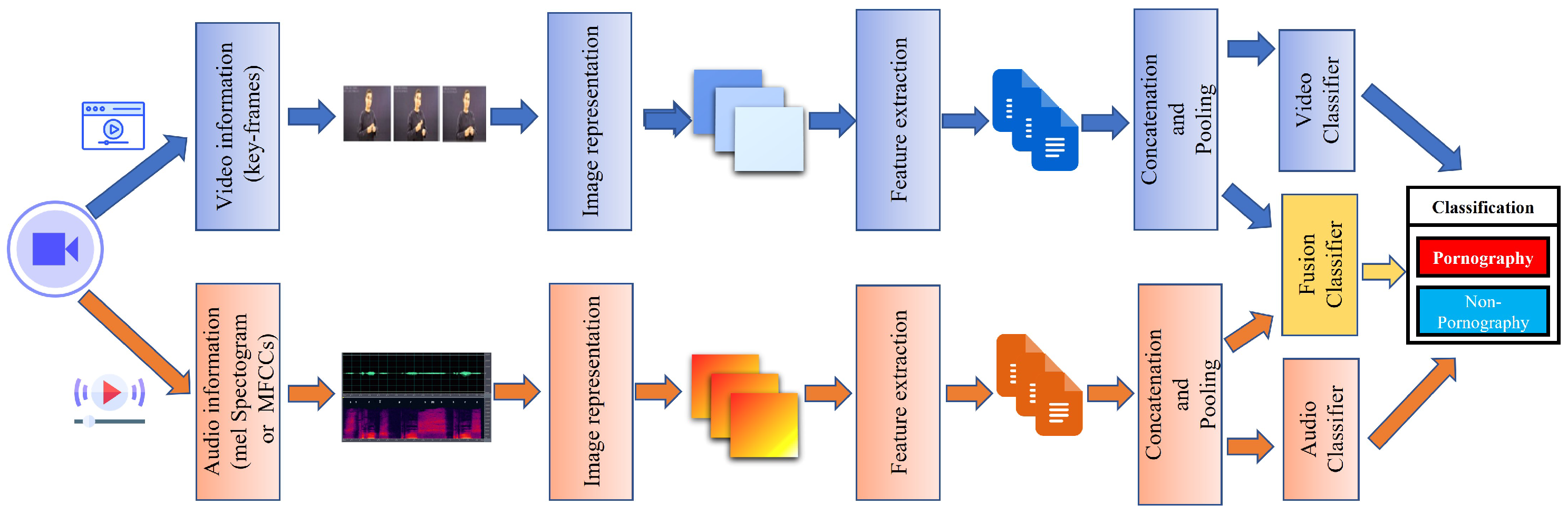

6. Strategies Based on Motion, Audio and Multimodal Analysis

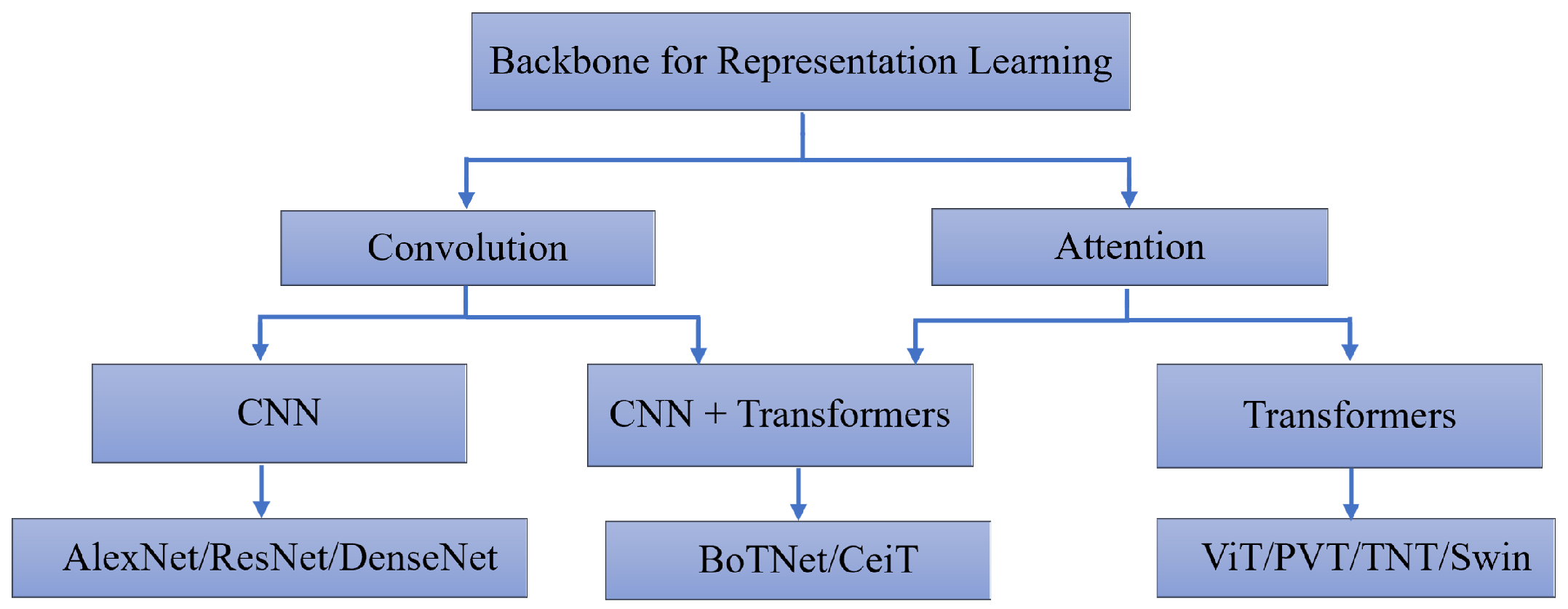

7. Strategies Based on Deep Learning

7.1. Early Approaches Based on CNNs

7.2. New Approaches Based on Fusion Models (CNNs and RNN)

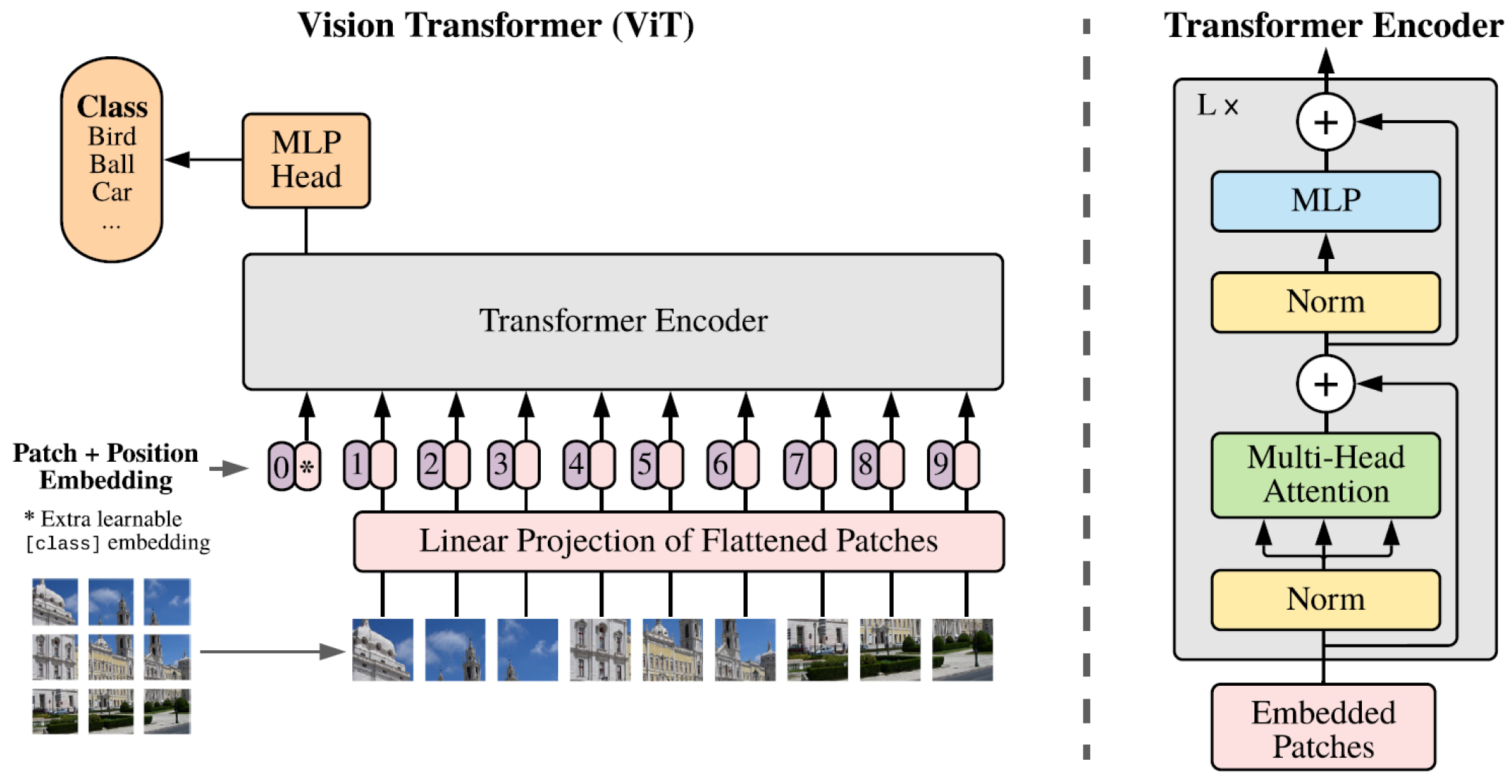

7.3. Vision Attention

8. Results, Challenges and Open Issues

8.1. Results

8.2. Challenges and Open Issues

- Given the success of deep learning methodologies, research on combinations of supervised, unsupervised and, more recently, self-supervised deep network architectures is expected to continue in search of the best-performing configuration for sensitive-content detection.

- On the other hand, further video features, including the more recently explored audio features, are expected to be assessed alongside static (keyframe) and dynamic (motion-vector) features and compared with the results of deep learning methodologies. In this context, it is crucial to evaluate the various approaches on the same dataset to ensure an impartial comparison of these strategies.

- The use of audio features should be explored further to determine whether it can reduce the number of false negatives, either through spectrograms or through transcription to text (e.g., Whisper [134]) followed by classification with NLP techniques.

- For textual features, the main problem is how often the search patterns for keywords and filenames need to be updated. Relying on textual features alone is challenging, as the resulting models would require more frequent re-training than models based on visual features. However, textual features can be combined with visual and auditory features to improve the final ranking.

- State-of-the-art attention-based approaches consider both global (ViT) and local (CNN) context, which is crucial for handling images and videos, with or without sexual content, that are ambiguous and therefore difficult to classify. As a result, the latest CNN- and ViT-based architectures can assign a higher pornographic score to images of semi-naked individuals in a context that suggests sexual interest, such as erotic or provocative poses, while safe images of semi-nude individuals, such as people in bikinis or swimming costumes, receive a low score. However, automatic evaluation systems tend to falter on sensitive images in which individuals are clothed or show minimal skin, or in which body exposure is partial and no genitalia are depicted. Humans, by contrast, find it relatively easy to discern a pornographic context in such images, often from facial expressions.

- As mentioned above, most strategies perform well in detecting sensitive content but fail in certain cases. One possible reason is that very few such images are labelled as pornographic in the training set. In addition to improving the architecture of the neural networks, it is important to have a robust dataset and to pre-process it correctly so that the proposed models generalise successfully. To this end, state-of-the-art image-generation models (text-to-image or image-to-image) such as Stable Diffusion could be used to improve the dataset [135].

- Related to the previous point, one of the main challenges in sensitive-content detection is to create a large, expert-labelled dataset that is as heterogeneous as possible (different categories of sexual images, poses, etc.) and that takes into account diversity of ethnicity, gender, etc., so that the DL models created by the scientific community can be evaluated and compared satisfactorily.

- Recent research on semantic analysis, such as object and background detection in videos, has yielded excellent results. It would therefore be interesting to detect patterns between backgrounds and objects in scenes with sexual content, with the aim not only of improving detection performance by avoiding false negatives in scenes with minimal nudity but also of creating a database that provides more context for CSAM/CSEM video scenes.

- Analysing the robustness of the built model (e.g., against adversarial attacks) and, whenever feasible, applying explainability algorithms will help in understanding the decision-making process of the black-box model, in evaluating it to enhance its performance and in identifying areas where it may be failing.

- As the developed DL-based models detect new sensitive content, they should automatically generate hashes of the newly detected explicit content, together with other textual and contextual features, to update international databases, so that major NGOs can check available material against the hashes and other extracted features. To achieve this, the researchers, organisations and Big Tech companies that have access to these databases must agree to move in the same direction in order to increase the success rate.
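
The points above about evaluating every approach on the same dataset, reducing false negatives and combining textual, visual and auditory features can be illustrated with a minimal sketch. It assumes that each modality already produces a score in [0, 1]; the `Sample` fields, the equal fusion weights, the 0.5 threshold and the toy values are all hypothetical and are not taken from any of the reviewed works.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One labelled item with hypothetical per-modality scores in [0, 1]."""
    label: int      # 1 = sensitive, 0 = safe
    visual: float
    audio: float
    text: float


def fuse(sample, weights):
    """Weighted average of the modality scores (simple late fusion)."""
    total = sum(weights.values())
    return (weights["visual"] * sample.visual
            + weights["audio"] * sample.audio
            + weights["text"] * sample.text) / total


def error_rates(labels, predictions):
    """Return (false-negative rate, false-positive rate)."""
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    positives = sum(labels)
    negatives = len(labels) - positives
    fnr = fn / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return fnr, fpr


def compare(samples, strategies, threshold=0.5):
    """Evaluate every strategy on the same samples with the same threshold."""
    labels = [s.label for s in samples]
    results = {}
    for name, weights in strategies.items():
        preds = [1 if fuse(s, weights) >= threshold else 0 for s in samples]
        results[name] = error_rates(labels, preds)
    return results


# Toy scores, invented for illustration only.
SAMPLES = [
    Sample(1, visual=0.9, audio=0.8, text=0.7),
    Sample(1, visual=0.3, audio=0.9, text=0.6),  # little visible skin
    Sample(0, visual=0.6, audio=0.1, text=0.1),  # e.g. a beach scene
    Sample(0, visual=0.1, audio=0.2, text=0.1),
]
STRATEGIES = {
    "visual_only": {"visual": 1.0, "audio": 0.0, "text": 0.0},
    "multimodal": {"visual": 1.0, "audio": 1.0, "text": 1.0},
}

if __name__ == "__main__":
    for name, (fnr, fpr) in compare(SAMPLES, STRATEGIES).items():
        print(f"{name}: FNR={fnr:.2f} FPR={fpr:.2f}")
```

On this toy data the visual-only strategy misses the second positive sample and flags the beach scene, whereas the fused score classifies all four items correctly. Real systems would learn the fusion weights rather than fix them by hand.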
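
The idea of generating hashes for newly detected content and checking material against a shared database can be sketched as follows. This is only an illustration under stated assumptions: it uses a simple average hash over a grayscale array as a stand-in for production perceptual hashes, and the function names, the 8x8 hash size and the Hamming-distance threshold of 5 are hypothetical choices rather than parameters of any real hash database.

```python
import numpy as np


def average_hash(gray, hash_size=8):
    """Average hash of a 2-D grayscale array, packed into one integer."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    # Crop so both sides divide evenly, then average over equal blocks.
    h_crop, w_crop = h - h % hash_size, w - w % hash_size
    blocks = gray[:h_crop, :w_crop].reshape(
        hash_size, h_crop // hash_size, hash_size, w_crop // hash_size
    )
    small = blocks.mean(axis=(1, 3))
    # One bit per block: is the block brighter than the overall mean?
    bits = (small > small.mean()).flatten()
    value = 0
    for bit in bits:
        value = (value << 1) | int(bit)
    return value


def hamming(a, b):
    """Number of differing bits between two integer hashes."""
    return bin(a ^ b).count("1")


def matches_known(candidate, known_hashes, max_distance=5):
    """True if the candidate is within max_distance bits of any known hash."""
    return any(hamming(candidate, k) <= max_distance for k in known_hashes)


if __name__ == "__main__":
    # Synthetic gradient images stand in for real media.
    original = np.add.outer(np.arange(64), 2 * np.arange(64))
    brightened = original * 1.1 + 5      # brightness/contrast change
    different = original.T               # a visually different pattern

    database = {average_hash(original)}
    print(matches_known(average_hash(brightened), database))
    print(matches_known(average_hash(different), database))
```

Unlike a cryptographic hash, a perceptual hash of this kind is designed to change little under small edits such as brightness changes, which is why matching uses a distance threshold rather than exact equality.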

9. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ramaswamy, S.; Seshadri, S. Children on the brink: Risks for child protection, sexual abuse, and related mental health problems in the COVID-19 pandemic. Indian J. Psychiatry 2020, 62, S404.

- Europol. Internet Organised Crime Threat Assessment (IOCTA) 2021; Publications Office of the European Union: Luxembourg, 2021; p. 12.

- Lee, H.E.; Ermakova, T.; Ververis, V.; Fabian, B. Detecting child sexual abuse material: A comprehensive survey. Forensic Sci. Int. Digit. Investig. 2020, 34, 301022.

- Khaksar Pour, A.; Chaw Seng, W.; Palaiahnakote, S.; Tahaei, H.; Anuar, N.B. A survey on video content rating: Taxonomy, challenges and open issues. Multimed. Tools Appl. 2021, 80, 24121–24145.

- Cifuentes, J.; Sandoval Orozco, A.L.; García Villalba, L.J. A survey of artificial intelligence strategies for automatic detection of sexually explicit videos. Multimed. Tools Appl. 2022, 81, 3205–3222.

- PhotoDNA. Microsoft. Available online: https://www.microsoft.com/en-us/photodna (accessed on 17 December 2022).

- Canadian Centre for Child Protection. Project Arachnid. 2022. Available online: https://projectarachnid.ca/ (accessed on 19 December 2022).

- Media Detective—Software to Detect and Remove Adult Material on Your Home Computer. Available online: https://www.mediadetective.com/ (accessed on 5 January 2023).

- Hyperdyne Software—Detect and Remove Adult Files with Snitch Porn Cleaner. Available online: https://hyperdynesoftware.com/ (accessed on 5 January 2023).

- Thorn Research: Understanding Sexually Explicit Images, Self-Produced by Children. Available online: https://www.thorn.org/blog/thorn-research-understanding-sexually-explicit-images-self-produced-by-children/ (accessed on 4 January 2023).

- NetClean. Bright Technology for a Brighter Future. Available online: https://www.netclean.com/ (accessed on 4 January 2023).

- Choi, B.; Han, S.; Chung, B.; Ryou, J. Human body parts candidate segmentation using laws texture energy measures with skin colour. In Proceedings of the International Conference on Advanced Communication Technology, Gangwon-Do, Republic of Korea, 3–16 February 2011; pp. 556–560.

- Polastro, M.D.C.; Eleuterio, P.M.D.S. A statistical approach for identifying videos of child pornography at crime scenes. In Proceedings of the 7th International Conference on Availability, Reliability and Security, Prague, Czech Republic, 20–24 August 2012; pp. 604–612.

- Lee, H.; Lee, S.; Nam, T. Implementation of high performance objectionable video classification system. In Proceedings of the 8th International Conference Advanced Communication Technology, Gangwon-Do, Republic of Korea, 20–22 February 2006; Volume 2, pp. 959–962.

- Beyer, L.; Izmailov, P.; Kolesnikov, A.; Caron, M.; Kornblith, S.; Zhai, X.; Minderer, M.; Tschannen, M.; Alabdulmohsin, I.; Pavetic, F. FlexiViT: One Model for All Patch Sizes. arXiv 2022, arXiv:2212.08013.

- Eleuterio, P.; Polastro, M. An adaptive sampling strategy for automatic detection of child pornographic videos. In Proceedings of the Seventh International Conference on Forensic Computer Science, Brasília, Brazil, 26–28 September 2012; pp. 12–19.

- Wang, D.; Zhu, M.; Yuan, X.; Qian, H. Identification and annotation of erotic film based on content analysis. Electron. Imaging Multimed. Technol. IV 2005, 5637, 88.

- Ulges, A.; Stahl, A. Automatic detection of child pornography using colour visual words. In Proceedings of the IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; pp. 3–8.

- Garcia, M.B.; Revano, T.F.; Habal, B.G.M.; Contreras, J.O.; Enriquez, J.B.R. A Pornographic Image and Video Filtering Application Using Optimized Nudity Recognition and Detection Algorithm. In Proceedings of the 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management, Baguio City, Philippines, 29 November–2 December 2018; pp. 1–4, ISBN 978-1-5386-7767-4.

- Caetano, C.; Avila, S.; Guimarães, S.; De Araújo, A.A. Pornography detection using BossaNova video descriptor. In Proceedings of the European Signal Processing Conference, Lisbon, Portugal, 1–5 September 2014; pp. 1681–1685.

- Caetano, C.; Avila, S.; Schwartz, W.R.; Guimarães, S.J.F.; Araújo, A.D.A. A mid-level video representation based on binary descriptors: A case study for pornography detection. Neurocomputing 2016, 213, 102–114.

- Lopes, A.P.B.; De Avila, S.E.; Peixoto, A.N.; Oliveira, R.S.; Coelho, M.D.M.; Araújo, A.D.A. Nude detection in video using bag-of-visual-features. In Proceedings of the 22nd Brazilian Symposium on Computer Graphics and Image Processing, Rio de Janeiro, Brazil, 11–15 October 2009; pp. 224–231.

- Setyanto, A.; Kusrini, K.; Made Artha Agastya, I. Comparison of SIFT and SURF methods for porn image detection. In Proceedings of the 4th International Conference on Information Technology, Information Systems and Electrical Engineering, Yogyakarta, Indonesia, 20–21 November 2019; pp. 281–285.

- Tian, C.; Zhang, X.; Wei, W.; Gao, X. Colour pornographic image detection based on colour-saliency preserved mixture deformable part model. Multimed. Tools Appl. 2018, 77, 6629–6645.

- Wang, H.; Schmid, C. Action recognition with improved trajectories. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3551–3558.

- Moreira, D.; Avila, S.; Perez, M.; Moraes, D.; Testoni, V.; Valle, E.; Goldenstein, S.; Rocha, A. Pornography classification: The hidden clues in video space–time. Forensic Sci. Int. 2016, 268, 46–61.

- Moreira, D.; Avila, S.; Perez, M.; Moraes, D.; Testoni, V.; Valle, E.; Goldenstein, S.; Rocha, A. Multimodal data fusion for sensitive scene localization. Inf. Fusion 2019, 45, 307–323.

- Da Silva, M.V.; Marana, A.N. Spatiotemporal CNNs for pornography detection in videos. In Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11401, pp. 547–555.

- Moustafa, M. Applying deep learning to classify pornographic images and videos. arXiv 2015, arXiv:1511.08899.

- Perez, M.; Avila, S.; Moreira, D.; Moraes, D.; Testoni, V.; Valle, E.; Goldenstein, S.; Rocha, A. Video pornography detection through deep learning techniques and motion information. Neurocomputing 2017, 230, 279–293.

- Mallmann, J.; Santin, A.O.; Viegas, E.K.; dos Santos, R.R.; Geremias, J. PPCensor: Architecture for real-time pornography detection in video streaming. Future Gener. Comput. Syst. 2020, 112, 945–955.

- Song, K.; Kim, Y.S. An enhanced multimodal stacking scheme for online pornographic content detection. Appl. Sci. 2020, 10, 2943.

- Fu, Z.; Li, J.; Chen, G.; Yu, T.; Deng, T. PornNet: A unified deep architecture for pornographic video recognition. Appl. Sci. 2021, 11, 3066.

- Chen, J.; Liang, G.; He, W.; Xu, C.; Yang, J.; Liu, R. A Pornographic Images Recognition Model based on Deep One-Class Classification With Visual Attention Mechanism. IEEE Access 2020, 8, 122709–122721.

- Gangwar, A.; González-Castro, V.; Alegre, E.; Fidalgo, E. AttM-CNN: Attention and metric learning based CNN for pornography, age and Child Sexual Abuse (CSA) Detection in images. Neurocomputing 2021, 445, 81–104.

- Westlake, B.; Bouchard, M.; Frank, R. Comparing methods for detecting child exploitation content online. In Proceedings of the European Intelligence and Security Informatics Conference, Odense, Denmark, 22–24 August 2012; pp. 156–163.

- Stallings, W. Cryptography and Network Security; Pearson: London, UK, 2017; p. 767. ISBN 978-1-2921-5858-7.

- Farid, H. An Overview of Perceptual Hashing. J. Online Trust. Saf. 2021, 36, 1405.

- Liong, V.E.; Lu, J.; Wang, G.; Moulin, P.; Zhou, J. Deep hashing for compact binary codes learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–15 June 2015; pp. 2475–2483.

- Zhao, F.; Huang, Y.; Wang, L.; Tan, T. Deep Semantic Ranking Based Hashing for Multi-Label Image Retrieval. arXiv 2015, arXiv:1501.06272.

- Liu, H.; Wang, R.; Shan, S.; Chen, X. Deep Supervised Hashing for Fast Image Retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2064–2072.

- Wu, D.; Lin, Z.; Li, B.; Ye, M.; Wang, W. Deep Supervised Hashing for Multi-Label and Large-Scale Image Retrieval. In Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval ICMR ’17, Bucharest, Romania, 6–9 June 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 150–158.

- Wang, H.; Yao, M.; Jiang, G.; Mi, Z.; Fu, X. Graph-Collaborated Auto-Encoder Hashing for Multi-view Binary Clustering. arXiv 2023, arXiv:2301.02484.

- Jiang, C.; Pang, Y. Perceptual image hashing based on a deep convolution neural network for content authentication. J. Electron. Imaging 2018, 27, 043055.

- Facebook. Open-Sourcing Photo- and Video-Matching Technology to Make the Internet Safer | Meta. Available online: https://about.fb.com/news/2019/08/open-source-photo-video-matching/ (accessed on 17 January 2023).

- Google. Content Safety API. Available online: https://protectingchildren.google/tools-for-partners/#learn-about-our-tools (accessed on 17 January 2023).

- Apple. CSAM Detection. 2021. Available online: https://www.apple.com/child-safety/pdf/CSAM_Detection_Technical_Summary.pdf (accessed on 17 January 2023).

- Peersman, C.; Schulze, C.; Rashid, A.; Brennan, M.; Fischer, C. iCOP: Live forensics to reveal previously unknown criminal media on P2P networks. Digit. Investig. 2016, 18, 50–64.

- Krawetz, N. PhotoDNA and Limitations—The Hacker Factor Blog. Available online: https://www.hackerfactor.com/blog/index.php?archives/931-PhotoDNA-and-Limitations.html (accessed on 4 February 2023).

- Steel, C.M. Child pornography in peer-to-peer networks. Child Abus. Negl. 2009, 33, 560–568.

- Westlake, B.; Bouchard, M.; Frank, R. Assessing the Validity of Automated Webcrawlers as Data Collection Tools to Investigate Online Child Sexual Exploitation. Sex. Abus. J. Res. Treat. 2017, 29, 685–708.

- Panchenko, A.; Beaufort, R.; Fairon, C. Detection of child sexual abuse media on p2p networks: Normalization and classification of associated filenames. In Proceedings of the LREC Workshop on Language Resources for Public Security Applications, Istanbul, Turkey, 27 May 2012; pp. 27–31.

- Polastro, M.D.C.; Da Silva Eleuterio, P.M. NuDetective: A forensic tool to help combat child pornography through automatic nudity detection. In Proceedings of the Workshops on Database and Expert Systems Applications, Bilbao, Spain, 30 August 2010; pp. 349–353.

- Peersman, C.; Schulze, C.; Rashid, A.; Brennan, M.; Fischer, C. ICOP: Automatically identifying new child abuse media in P2P networks. In Proceedings of the IEEE Security and Privacy Workshops, San Jose, CA, USA, 18–21 May 2014; IEEE Computer Society, 1730 Massachusetts Ave., NW: Washington, DC, USA, 2014; pp. 124–131.

- Gov.UK. New AI Technique to Block Online Child Grooming Launched—GOV.UK. Available online: https://www.gov.uk/government/news/new-ai-technique-to-block-online-child-grooming-launched (accessed on 4 December 2022).

- Al-Nabki, M.W.; Fidalgo, E.; Alegre, E.; Aláiz-Rodríguez, R. File name classification approach to identify child sexual abuse. In Proceedings of the 9th International Conference on Pattern Recognition Applications and Methods ICPRAM, Valletta, Malta, 22–24 February 2020; pp. 228–234.

- Bogdanova, D.; Rosso, P.; Solorio, T. Exploring high-level features for detecting cyberpedophilia. Comput. Speech Lang. 2014, 28, 108–120.

- Peersman, C. Detecting Deceptive Behaviour in the Wild: Text Mining for Online Child Protection in the Presence of Noisy and Adversarial Social Media Communications. Ph.D. Thesis, Lancaster University, Lancaster, UK, 2018.

- Pereira, M.; Dodhia, R.; Anderson, H.; Brown, R. Metadata-Based Detection of Child Sexual Abuse Material. arXiv 2020, arXiv:2010.02387.

- Perverted Justice Foundation. The Largest and Best Anti-Predator Organization Online. Available online: Perverted-Justice.com (accessed on 22 February 2023).

- Carlsson, A.; Eriksson, A.; Isik, M. Automatic Detection of Images Containing Nudity. Ph.D. Thesis, IT University of Goteborg, Gothenburg, Sweden, 2008.

- Fleck, M.M.; Forsyth, D.A.; Bregler, C. Finding naked people. In Proceedings of the 4th European Conference on Computer Vision, Cambridge, UK, 14–18 April 1996; Volume 1065, pp. 594–602.

- Jones, M.J.; Rehg, J.M. Statistical colour models with application to skin detection. Int. J. Comput. Vis. 2002, 46, 81–96.

- Kakumanu, P.; Makrogiannis, S.; Bourbakis, N. A survey of skin-colour modeling and detection methods. Pattern Recognit. 2007, 40, 1106–1122.

- Platzer, C.; Stuetz, M.; Lindorfer, M. Skin sheriff: A machine learning solution for detecting explicit images. In Proceedings of the 2nd International Workshop on Security and Forensics in Communication Systems, Kyoto, Japan, 3 June 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 45–55.

- Ap-apid, R. An Algorithm for Nudity Detection. In Proceedings of the 5th Philippine Computing Science Congress, University of Cebu (Banilad Campus), Cebu City, Philippines, 4–5 March 2005; pp. 201–205.

- Ozinov, F. GitHub—Bakwc/PornDetector: Porn Images Detector with Python, Tensorflow, Scikit-Learn and Opencv. Available online: https://github.com/bakwc/PornDetector (accessed on 12 December 2022).

- Zhuo, L.; Geng, Z.; Zhang, J.; Guang Li, X. ORB feature based web pornographic image recognition. Neurocomputing 2016, 173, 511–517.

- Lillie, O. PHP Video. 2017. Available online: https://github.com/buggedcom/phpvideotoolkit-v2 (accessed on 27 December 2022).

- Zhu, M.-L. Video stream segmentation method based on video page. J. Comput. Aided Design. Comput. Graph. 2000, 12, 585–589.

- El-Hallak, M.; Lovell, D. ORB an efficient. Arthritis Rheum. 2013, 65, 2736.

- Jansohn, C.; Ulges, A.; Breuel, T.M. Detecting pornographic video content by combining image features with motion information. In Proceedings of the ACM Multimedia Conference, with Co-located Workshops and Symposiums, Beijing, China, 19–24 October 2009; pp. 601–604.

- Deselaers, T.; Pimenidis, L.; Ney, H. Bag-of-visual-words models for adult image classification and filtering. In Proceedings of the International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008.

- Zhang, J.; Sui, L.; Zhuo, L.; Li, Z.; Yang, Y. An approach of bag-of-words based on visual attention model for pornographic images recognition in compressed domain. Neurocomputing 2013, 110, 145–152.

- Valle, E.; de Avila, S.; da Luz, A.; de Souza, F.; Coelho, M.; Araújo, A. Content-Based Filtering for Video Sharing Social Networks. arXiv 2011, arXiv:1101.2427.

- Laptev, I. On space-time interest points. Int. J. Comput. Vis. 2005, 64, 107–123.

- Avila, S.; Thome, N.; Cord, M.; Valle, E.; De Araújo, A. BOSSA: Extended bow formalism for image classification. In Proceedings of the International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2909–2912.

- Avila, S.; Thome, N.; Cord, M.; Valle, E.; De Araújo, A. Pooling in image representation: The visual codeword point of view. Comput. Vis. Image Underst. 2013, 117, 453–465.

- Kim, A. NSFW Dataset. 2019. Available online: https://github.com/alex000kim/nsfw_data_scraper (accessed on 22 November 2022).

- Chen, Y.; Zheng, R.; Zhou, A.; Liao, S.; Liu, L. Automatic detection of pornographic and gambling websites based on visual and textual content using a decision mechanism. Sensors 2020, 20, 3989.

- Souza, F.; Valle, E.; Camara-Chavez, G.; De Araujo, A. An Evaluation on Colour Invariant Based Local Spatiotemporal Features for Action Recognition. In Proceedings of the Conference on Graphics, Patterns and Images, Ouro Preto, Brazil, 22–25 August 2012; pp. 1–6.

- Rea, N.; Lacey, G.; Lambe, C.; Dahyot, R. Multimodal periodicity analysis for illicit content detection in videos. In Proceedings of the IET Conference Publications, Leela Palace, Bangalore, India, 26–28 September 2006; pp. 106–114.

- Zuo, H.; Wu, O.; Hu, W.; Xu, B. Recognition of blue movies by fusion of audio and video. In Proceedings of the IEEE International Conference on Multimedia and Expo, Hannover, Germany, 23–26 June 2008; pp. 37–40.

- Liu, Y.; Yang, Y.; Xie, H.; Tang, S. Fusing audio vocabulary with visual features for pornographic video detection. Future Gener. Comput. Syst. 2014, 31, 69–76.

- Kim, C.Y.; Kwon, O.J.; Kim, W.G.; Choi, S.R. Automatic System for Filtering Obscene Video. In Proceedings of the 10th International Conference on Advanced Communication Technology, Phoenix Park, Republic of Korea, 17–20 February 2008; Volume 2, pp. 1435–1438.

- Wang, J.Z.; Li, J.; Wiederhold, G.; Firschein, O. System for screening objectionable images. Comput. Commun. 1998, 21, 1355–1360.

- Endeshaw, T.; Garcia, J.; Jakobsson, A. Classification of indecent videos by low complexity repetitive motion detection. In Proceedings of the Applied Imagery Pattern Recognition Workshop, Washington, DC, USA, 15–17 October 2008.

- Qu, Z.; Liu, Y.; Liu, Y.; Jiu, K.; Chen, Y. A method for reciprocating motion detection in porn video based on motion features. In Proceedings of the 2nd IEEE International Conference on Broadband Network and Multimedia Technology, Beijing, China, 18–20 October 2009; pp. 183–187.

- Ulges, A.; Schulze, C.; Borth, D.; Stahl, A. Pornography detection in video benefits (a lot) from a multi-modal approach. In Proceedings of the 2012 ACM Workshop on Audio and Multimedia Methods for Large-Scale Video Analysis, Nara, Japan, 2 November 2012; pp. 21–26.

- Behrad, A.; Salehpour, M.; Ghaderian, M.; Saiedi, M.; Barati, M.N. Content-based obscene video recognition by combining 3D spatiotemporal and motion-based features. Eurasip J. Image Video Process. 2012, 2012, 1.

- Jung, S.; Youn, J.; Sull, S. A real-time system for detecting indecent videos based on spatiotemporal patterns. IEEE Trans. Consum. Electron. 2014, 60, 696–701.

- Schulze, C.; Henter, D.; Borth, D.; Dengel, A. Automatic detection of CSA media by multi-modal feature fusion for law enforcement support. In Proceedings of the ACM International Conference on Multimedia Retrieval, Glasgow, UK, 1–4 April 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 353–360.

- Liu, Y.; Gu, X.; Huang, L.; Ouyang, J.; Liao, M.; Wu, L. Analyzing periodicity and saliency for adult video detection. Multimed. Tools Appl. 2020, 79, 4729–4745.

- Mahadeokar, J.; Pesavento, G. Open Sourcing a deep learning Solution for Detecting NSFW Images. Yahoo Eng. 2016, 24, 2018.

- Nian, F.; Li, T.; Wang, Y.; Xu, M.; Wu, J. Pornographic image detection utilizing deep convolutional neural networks. Neurocomputing 2016, 210, 283–293.

- Vitorino, P.; Avila, S.; Perez, M.; Rocha, A. Leveraging deep neural networks to fight child pornography in the age of social media. J. Vis. Commun. Image Represent. 2018, 50, 303–313.

- Wang, Y.; Jin, X.; Tan, X. Pornographic image recognition by strongly-supervised deep multiple instance learning. In Proceedings of the International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; Volume 2016, pp. 4418–4422.

- Xu, W.; Parvin, H.; Izadparast, H. Deep learning Neural Network for Unconventional Images Classification. Neural Process. Lett. 2020, 52, 169–185.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–15 June 2015; pp. 1–9.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Wehrmann, J.; Simões, G.S.; Barros, R.C.; Cavalcante, V.F. Adult content detection in videos with convolutional and recurrent neural networks. Neurocomputing 2018, 272, 432–438. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Song, K.H.; Kim, Y.S. Pornographic video detection scheme using multimodal features. J. Eng. Appl. Sci. 2018, 13, 1174–1182. [Google Scholar] [CrossRef]

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; Volume 2015, pp. 4489–4497. [Google Scholar] [CrossRef]

- Tran, D.; Wang, H.; Torresani, L.; Ray, J.; Lecun, Y.; Paluri, M. A Closer Look at Spatiotemporal Convolutions for Action Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6450–6459. [Google Scholar] [CrossRef]

- Singh, S.; Buduru, A.B.; Kaushal, R.; Kumaraguru, P. KidsGUARD: Fine grained approach for child unsafe video representation and detection. In Proceedings of the ACM Symposium on Applied Computing, Limassol, Cyprus, 8–12 April 2019; Volume F1477, pp. 2104–2111. [Google Scholar] [CrossRef]

- Papadamou, K.; Papasavva, A.; Zannettou, S.; Blackburn, J.; Kourtellis, N.; Leontiadis, I.; Stringhini, G.; Sirivianos, M. Disturbed Youtube for kids: Characterizing and detecting inappropriate videos targeting young children. In Proceedings of the 14th International AAAI Conference on Web and Social Media, Atlanta, GA, USA, 8–11 June 2020; pp. 522–533. [Google Scholar] [CrossRef]

- Chaves, D.; Fidalgo, E.; Alegre, E.; Alaiz-Rodríguez, R.; Jáñez-Martino, F.; Azzopardi, G. Assessment and Estimation of Face Detection Performance Based on Deep Learning for Forensic Applications. Sensors 2020, 20, 4491. [Google Scholar] [CrossRef]

- Yang, S.; Luo, P.; Loy, C.C.; Tang, X. WIDER FACE: A Face Detection Benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016. [Google Scholar]

- Nada, H.; Sindagi, V.A.; Zhang, H.; Patel, V.M. Pushing the Limits of Unconstrained Face Detection: A Challenge Dataset and Baseline Results. arXiv 2018, arXiv:1804.10275. [Google Scholar]

- Lee, G.; Kim, M. Deepfake Detection Using the Rate of Change between Frames Based on Computer Vision. Sensors 2021, 21, 7367. [Google Scholar] [CrossRef] [PubMed]

- Rossler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Niessner, M. FaceForensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; Volume 2019, p. 1.

- Kaggle. Deepfake Detection Challenge. Kaggle. 2020. Available online: https://www.kaggle.com/c/deepfake-detection-challenge (accessed on 1 December 2022).

- Aldahoul, N.; Karim, H.A.; Abdullah, M.H.L.; Fauzi, M.F.A.; Ba Wazir, A.S.; Mansor, S.; See, J. Transfer detection of YOLO to focus CNN’s attention on nude regions for adult content detection. Symmetry 2021, 13, 26.

- Lovenia, H.; Lestari, D.P.; Frieske, R. What Did I Just Hear? Detecting Pornographic Sounds in Adult Videos Using Neural Networks. In Proceedings of the ACM International Conference Proceeding Series, St. Pölten, Austria, 6–9 September 2022; Association for Computing Machinery: New York, NY, USA, 2022; Volume 1, pp. 92–95.

- Gautam, N.; Vishwakarma, D.K. Obscenity Detection in Videos through a Sequential ConvNet Pipeline Classifier. IEEE Trans. Cogn. Dev. Syst. 2022, 8920, 1–10.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 201, 5999–6009.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Volume 12346, pp. 213–229.

- Wu, B.; Xu, C.; Dai, X.; Wan, A.; Zhang, P.; Yan, Z.; Tomizuka, M.; Gonzalez, J.; Keutzer, K.; Vajda, P. Visual Transformers: Token-based Image Representation and Processing for Computer Vision. arXiv 2020, arXiv:2006.03677.

- Simoes, G.S.; Wehrmann, J.; Barros, R.C. Attention-based Adversarial Training for Seamless Nudity Censorship. In Proceedings of the International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019; Volume 2019, pp. 1–8.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.; Tay, F.E.; Feng, J.; Yan, S. Tokens-to-Token ViT: Training vision transformers from Scratch on ImageNet. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 538–547.

- Chollet, F. Xception: Deep learning with Depthwise Separable Convolutions. arXiv 2016, arXiv:1610.02357.

- Lin, X.; Qin, F.; Peng, Y.; Shao, Y. Fine-grained pornographic image recognition with multiple feature fusion transfer learning. Int. J. Mach. Learn. Cybern. 2021, 12, 73–86.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 June 2017; pp. 2261–2269.

- Yousaf, K.; Nawaz, T. A Deep Learning-Based Approach for Inappropriate Content Detection and Classification of YouTube Videos. IEEE Access 2022, 10, 16283–16298.

- Google. Google Open Dataset. 2022. Available online: https://datasetsearch.research.google.com/ (accessed on 25 August 2022).

- Athalye, A. Ribosome: Synthesize Photos from PhotoDNA Using Machine Learning. 2021. Available online: https://github.com/anishathalye/ribosome (accessed on 12 December 2022).

- Kingma, D.P.; Welling, M. An Introduction to Variational Autoencoders. Found. Trends Mach. Learn. 2019, 12, 307–392.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic Routing between Capsules. In Proceedings of the 31st International Conference on Neural Information Processing Systems NIPS’17, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 3859–3869.

- Yang, J.; Li, C.; Dai, X.; Yuan, L.; Gao, J. Focal Modulation Networks. arXiv 2022, arXiv:2203.11926.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. arXiv 2022, arXiv:2212.04356.

- Zhang, L.; Agrawala, M. Adding Conditional Control to Text-to-Image Diffusion Models. arXiv 2023, arXiv:2302.05543.

- Laranjeira, C.; Macedo, J.; Avila, S.; dos Santos, J.A. Seeing without Looking: Analysis Pipeline for Child Sexual Abuse Datasets. In Proceedings of the ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; Association for Computing Machinery: New York, NY, USA, 2022; Volume 1.

| Reference | Dataset Size | Features | Classification Algorithm | Evaluation Measures |
|---|---|---|---|---|
| Polastro et al. [53] | 330,595 files | File name analysis + image analysis | SVM | Recall: 95%; Precision: 93% |
| Panchenko et al. [52] | 106,350 files | File name analysis + metadata (term extractor + filename normaliser) | C-SVM linear | Acc: 96.97% |
| Peersman et al. [54] | 40,000 CSA file names and 40,000 legal pornographic file names | File name classification | SVM | Precision: 89.9 |
| Bogdanova et al. [57] | Chat logs (5 subsets) from the perverted-justice website [60] | Chat logs (lexicon) | SVM | Recall: 95% |
| Peersman et al. [48] | 330,595 files | File name categorisation (CSA-related keywords + semantic features + character n-grams) | SVM | Overall F1-score: 77.75% |
| Al-Nabki et al. [56] | 65,351 files | File name classifier (n-grams) | CNN | F1-score: 85% |
| Pereira et al. [59] | 1,010,000 file paths | File path-based character quantisation | CNN | Acc: 96.8% |

| Reference | Dataset Size | Frame Extraction Algorithm | Recognition Algorithm | Features | Classification Algorithm | Evaluation Measures |
|---|---|---|---|---|---|---|
| Wang et al. [17] | 112 | Colour difference [70] | Gaussian model in YCbCr | Skin colour; skin texture morphology | Bayesian | Precision: 90.3%; Recall: 91.5% |
| Lee et al. [14] | 1200 | Uniform sampling | Single: Gaussian; Global: HSV colour discriminant | Skin colour | SVM | Precision: 96.6%; Recall: 86.19% |
| Castro Polastro et al. [13] | 149 | Uniform sampling | RGB threshold | Skin colour | Skin regions threshold [66] | Precision: 85.7%; Recall: 84.9% |
| Silva Eleuterio et al. [16] | 149 | Logarithmic function | RGB threshold | Skin colour | Skin regions threshold [66] | Precision: 85.9%; Recall: 87.3% |
| Li Zhuo et al. [68] | 19,000 | | ORB descriptor extraction [71], HSV and BoVW | Skin colour | SVM | Precision: 93.03% |
| Garcia et al. [19] | 253 | Uniform sampling [69] | YCbCr threshold; Gaussian low-pass filter | Skin colour; skin texture | Skin regions threshold [66] | Precision: 90.33% |

| Reference | Dataset Size | BoVW Type Algorithm | Features Descriptor | Classification Algorithm | Evaluation Measures |
|---|---|---|---|---|---|
| Lopes et al. [22] | 179 | Standard | HueSIFT | SVM (linear kernel) | Acc: 93.2% |
| Avila et al. [77] | 800 | BOSSA | HueSIFT | SVM (nonlinear kernel) | Acc: 87.1% |
| | 800 | | | | |
| Avila et al. [78] | 4900 | BossaNova | HueSIFT | SVM (linear kernel) | Acc: 89.5% |
| Tian et al. [24] | 800 | CPMDPM | HoG and CA | Latent SVM | Precision: 80%; Recall: 82%; F1-score: 81% |
| Caetano et al. [20] | 800 | BossaNova | Binary descriptors | SVM (nonlinear kernel) | Acc: 90.9% |
| Caetano et al. [21] | 800 | BossaNovaVD | Binary descriptors | SVM (nonlinear kernel) | Acc: 92.4% |
| Jansohn et al. [72] | 3595 | Standard | Motion vectors | SVM (kernel not specified) | Equal error: 6.04% |
| Valle et al. [75] | 800 | Standard | Motion vectors | SVM (nonlinear kernel) | Acc: 91.9% |
| Souza et al. [81] | 800 | Standard | Colour STIP | SVM (linear kernel) | Acc: 91.0% |
| Li Zhuo et al. [68] | 19,000 | Standard | ORB descriptor extraction [71], HSV | SVM (nonlinear, RBF kernel) | Acc: 93.03% |
| Moreira et al. [26] | 2000 | Fisher vector | TRoF | SVM (linear kernel) | Acc: 95.0% |
| Hartatik et al. [23] | 8981 | Standard | SIFT and SURF | KNN | Acc: 82.26% |
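
As a quick sanity check on how the evaluation measures reported in these tables relate to each other, the sketch below recomputes an F-score from a precision/recall pair. The helper name `f_beta` is our own illustrative choice and is not taken from any of the surveyed works; the inputs are the precision and recall listed for Tian et al. [24] above.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Return the F-beta score for precision and recall given in [0, 1].

    beta = 1 gives the usual F1-score (harmonic mean of precision and recall);
    beta = 2 gives the F2-score, which weights recall more heavily.
    """
    if precision < 0 or recall < 0:
        raise ValueError("precision and recall must be non-negative")
    denominator = beta**2 * precision + recall
    if denominator == 0:
        # Both precision and recall are zero: define the score as 0.
        return 0.0
    return (1 + beta**2) * precision * recall / denominator


# Precision and recall as reported for Tian et al. [24] in the table above.
f1 = f_beta(0.80, 0.82)
print(f"F1 = {f1:.2%}")  # prints "F1 = 80.99%", i.e. the 81% listed in the table
```

Calling the same helper with `beta=2` gives the recall-weighted F2 measure that also appears among the deep learning results.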

| Reference | Dataset Size | Features Analysed | Classification Algorithm | Evaluation Measures |
|---|---|---|---|---|
| Zuo et al. [83] | 889 videos | 12 MFCC and energy term; body contour | GMM; Bayes classifier | Precision: 92.3%; Recall: 98.3% |
| Kim et al. [85] | 3255 videos | Motion vectors, moments of shape | Shape matching [86] | Acc: 96.5% |
| Endeshaw et al. [87] | 750 videos | Motion vectors | Spectral estimation threshold | TPR > 85%; FNR < 10% |
| Zhiyi et al. [88] | 100 videos | Motion vectors (strength and direction) | Two-motion features threshold | Acc: 90.0% |
| Ulges et al. [89] | 3300 | Motion vectors, audio features, skin colour | SVM (RBF kernel) | Equal error: 5.92% |
| Behrad et al. [90] | 4000 videos | Motion and periodicity features | SVM (linear kernel) | Acc: 95.44% |
| Schulze et al. [92] | 60,000 images | Colour-correlograms + skin features, visual pyramids + visual words + SentiBank mid-level sentiment feature | SVM RBF (for each feature) + late fusion | Equal error: 10% |
| Schulze et al. [92] | 3000 videos | Colour-correlograms + skin features, visual pyramids + visual words + audio words | SVM RBF (for each feature) + late fusion | Equal error: 8% |
| Liu et al. [84] | 558 videos | Periodicity-based video BoVW | SVM (RBF kernel) | Acc: 94.44%; FPR: 9.76% |
| Liu et al. [93] | 548 videos | Audio periodicity and visual saliency colour moments | SVM (RBF kernel) | TPR: 96.7%; FPR: 10% |

| Reference | Dataset Size | DL Architecture | Classification Algorithm | Evaluation Measures |
|---|---|---|---|---|
| Moustafa [29] | 800 videos [78] | Fusion (AlexNet and GoogleNet CNN) | Majority voting | Acc: 94.1% |
| Perez et al. [30] | 800 videos [78]; 2000 videos [26] | GoogleNet-based CNN | SVM (linear) | Acc: 97.9%; Acc: 96.4% |
| Wehrmann et al. [103] | 800 videos [78] | Fusion (ResNet and GoogleNet CNN) | LSTM-RNN | Acc: 95.6% |
| Song et al. [105] | 2000 videos [26] | Fusion video (VGG-16) + motion (VGG-16) + audio (mel-scaled spectrogram) | Multimodal stacking ensemble | Acc: 67.6%; TPR: 100% |
| Silva and Marana [28] | 800 videos [78] | VGG-C3D CNN; ResNet R(2+1)D CNN | SVM (linear); Softmax classifier | Acc: 95.1%; Acc: 91.8% |
| Singh et al. [108] | 800 videos [78] + animated videos with nudity | VGG16 + LSTM autoencoder | LSTM classifier | Pre: 89.0%; Rec: 85.0% |
| Papadamou et al. [109] | 4797 videos | Inception-V3 CNN (thumbnail) | 2 LSTM RNN + dense layer | Acc: 84.3%; Rec: 89.0% |
| Song et al. [32] | 2000 videos [26] | Fusion video (VGG16 + Bi-LSTM) + audio (multilayered dilated Conv.) | Multimodal stacking ensemble | Acc: 92.33%; FNR: 4.6% |
| Chen et al. [34] | 800 videos [78] + custom dataset (1,000,000 images) | DOCAPorn (VGG19 modification + visual attention) | Softmax classifier | Acc: 95.63%; Acc: 98.42% |
| AlDahoul et al. [116] | 2000 videos [26] | YOLOv3 + ResNet-50 | Random forest | Acc: 87.75%; F1-score: 90.03% |
| Lin et al. [126] | 120,000 [79] + Pornography-800 [78] | Fusion DenseNet121(4) + visual attention | Softmax classifier | Acc: 94.96%; Acc: 94.3% |
| Ganwar et al. [35] | Training: Pornography2M [35] + 1M Google Open Dataset [129]; Testing: 2000 videos [26] | CNN + Inception + inception reduction + inception-ResNet + attention | Softmax classifier + centre loss | Acc: 97.1%; F2: 97.45% |
| Fu et al. [33] | 30,000 videos | ResNet-50 + BiFPN + ResNet-attention network (RANet) + VGGish | Softmax classifier | Acc: 93.4% |
| Yousaf et al. [128] | 111,561 videos | EfficientNet-B7 + BiLSTM | Softmax classifier | Acc: 95.66%; F1-score: 92.67% |
| Lovenia et al. [117] | 800 videos [78] | CNN (audio features) | Voting segment-to-audio alg. | Acc: 95.75% |
| Gautam et al. [118] | 800 videos [78]; 2000 videos [26] | ResNet-18 + sequence classifier ConvNets + faster RCNN-inception ResNet V2 | Softmax classifier | Acc: 98.25%; Acc: 97.15% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Povedano Álvarez, D.; Sandoval Orozco, A.L.; García-Miguel, J.P.; García Villalba, L.J. Learning Strategies for Sensitive Content Detection. Electronics 2023, 12, 2496. https://doi.org/10.3390/electronics12112496