A Period Training Method for Heterogeneous UUV Dynamic Task Allocation

Abstract

1. Introduction

2. Dynamic Task Allocation Formulation

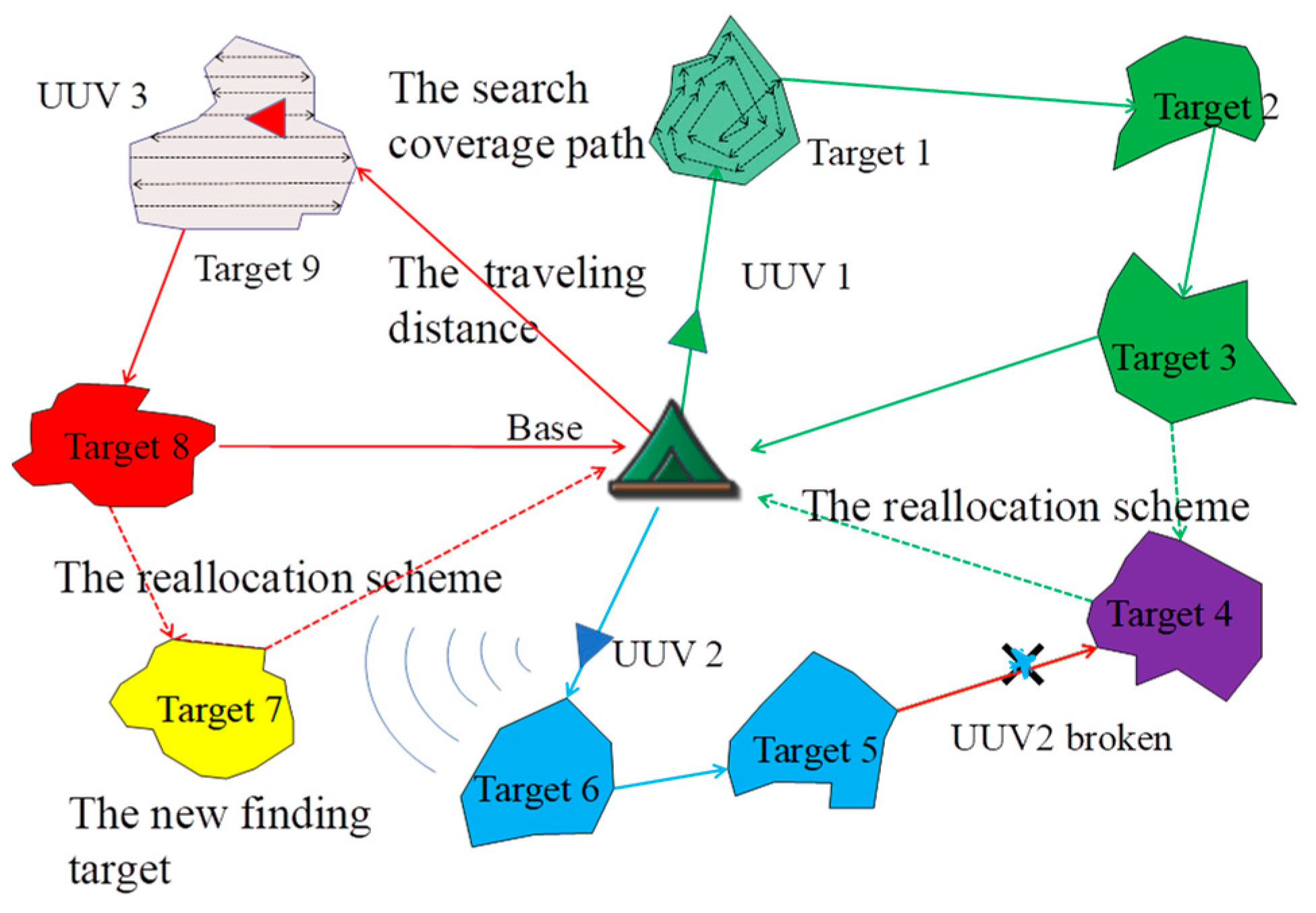

2.1. Scenario Description of the Dynamic UUV Allocation Problem

2.2. Objective Functions and Constraints

3. MARL with the Attention Mechanism and Period Training Method

3.1. Encoder with Deep Feature Extraction Networks

3.2. Decoder with Attention Mechanisms

3.3. Period Training Method

4. Simulation Experiment

4.1. Dynamic Case Settings with Emergencies

- Case 1: We randomly generate 40 irregular task areas as the initial setting. Four heterogeneous UUVs with different velocities and search ranges must complete these tasks following preplanned task orders and coverage search paths. The original task situation is shown in Figure 5a.

- Case 2: While executing Case 1, the UUVs discover a group of new task areas. As with the initial areas, each new target area is approximated by a bounding rectangle. To search the new task areas, the algorithms must reallocate the task scheme in real time.

- Case 3: Following Case 2, we assume that UUV 4 breaks down and can no longer search any task area. To handle this emergency, the remaining unfinished tasks are quickly reassigned to the other UUVs according to objective function (1) and constraint (4).
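The case settings rest on two simple geometric ideas. First, irregular task areas are approximated by bounding rectangles. Second, heterogeneous UUVs need different amounts of time to cover the same area. The Python sketch below illustrates both, together with a crude reallocation when one UUV fails.

It is not the paper's MARLAM-PTM method. The following elements are hypothetical assumptions chosen for illustration:

- the class `UUV`;
- the functions `bounding_rectangle`, `coverage_time`, `finish_time` and `reassign_from_failed`;
- the lawnmower sweep model, with lane spacing equal to twice the search range and transit between areas ignored;
- the greedy makespan rule, which stands in for objective function (1) and constraint (4) (neither is reproduced here).

For simplicity, the sketch moves only the failed vehicle's unfinished tasks.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float]
Rect = tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


@dataclass
class UUV:
    name: str
    speed: float         # hypothetical: distance per unit time
    search_range: float  # hypothetical: sensor half-swath width
    tasks: list[int]     # indices of assigned task areas
    working: bool = True


def bounding_rectangle(polygon: list[Point]) -> Rect:
    """Axis-aligned bounding rectangle of an irregular task area."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def coverage_time(rect: Rect, uuv: UUV) -> float:
    """Time for a lawnmower sweep of `rect` (lanes along x, transit ignored)."""
    xmin, ymin, xmax, ymax = rect
    swath = 2.0 * uuv.search_range  # assumed lane spacing
    lanes = max(1, math.ceil((ymax - ymin) / swath))
    path = lanes * (xmax - xmin) + (lanes - 1) * swath  # sweeps + lane changes
    return path / uuv.speed


def finish_time(uuv: UUV, rects: list[Rect]) -> float:
    """Total coverage time of all tasks in the UUV's list."""
    return sum(coverage_time(rects[t], uuv) for t in uuv.tasks)


def reassign_from_failed(uuvs: list[UUV], rects: list[Rect],
                         failed_name: str, done: set[int]) -> float:
    """Greedily move the failed UUV's unfinished tasks to working UUVs.

    Each orphaned task goes to the working UUV whose finish time after
    taking it is smallest. Returns the makespan of the working UUVs.
    """
    failed = next(u for u in uuvs if u.name == failed_name)
    failed.working = False
    orphaned = [t for t in failed.tasks if t not in done]
    failed.tasks = [t for t in failed.tasks if t in done]
    for t in orphaned:
        best = min((u for u in uuvs if u.working),
                   key=lambda u: finish_time(u, rects) + coverage_time(rects[t], u))
        best.tasks.append(t)
    return max(finish_time(u, rects) for u in uuvs if u.working)


# Toy scenario: four irregular areas, three heterogeneous UUVs; UUV3 fails after task 2.
areas = [
    [(0, 0), (4, 1), (3, 5), (0, 4)],
    [(10, 10), (12, 11), (11, 14)],
    [(5, 0), (9, 0), (8, 3)],
    [(0, 8), (2, 8), (2, 10), (1, 11)],
]
rects = [bounding_rectangle(a) for a in areas]
uuvs = [
    UUV("UUV1", speed=2.0, search_range=0.5, tasks=[0]),
    UUV("UUV2", speed=1.5, search_range=1.0, tasks=[1]),
    UUV("UUV3", speed=3.0, search_range=0.5, tasks=[2, 3]),
]
makespan = reassign_from_failed(uuvs, rects, "UUV3", done={2})
print([(u.name, u.tasks) for u in uuvs], makespan)
```

In this toy example, task 3 goes to UUV2. Taking it brings UUV2's finish time to 8.0, versus 16.0 for UUV1, and the resulting makespan is 12.0. A greedy rule like this is myopic and serves only as a quick reference point, not as a substitute for the learned allocation.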

4.2. Simulation Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gan, W.; Xia, T.; Chu, Z. A Prognosis Technique Based on Improved GWO-NMPC to Improve the Trajectory Tracking Control System Reliability of Unmanned Underwater Vehicles. Electronics 2023, 12, 921.

- Lemieszewski, L.; Radomska-Zalas, A.; Perec, A.; Dobryakova, L.; Ochin, E. GNSS and LNSS Positioning of Unmanned Transport Systems: The Brief Classification of Terrorist Attacks on USVs and UUVs. Electronics 2021, 10, 401.

- Zuo, L.; Hu, J.; Sun, H.; Gao, Y. Resource allocation for target tracking in multiple radar architectures over lossy networks. Signal Process. 2023, 208, 108973.

- Baylog, J.G.; Wettergren, T.A. A ROC-Based Approach for Developing Optimal Strategies in UUV Search Planning. IEEE J. Ocean. Eng. 2018, 43, 843–855.

- Sun, S.; Song, B.; Wang, P.; Dong, H.; Chen, X. Real-Time Mission-Motion Planner for Multi-UUVs Cooperative Work Using Tri-Level Programing. IEEE Trans. Intell. Transp. Syst. 2022, 23, 1260–1273.

- Ao, T.; Zhang, K.; Shi, H.; Jin, Z.; Zhou, Y.; Liu, F. Energy-Efficient Multi-UAVs Cooperative Trajectory Optimization for Communication Coverage: An MADRL Approach. Remote Sens. 2023, 15, 429.

- Sun, Y.; He, Q. Computational Offloading for MEC Networks with Energy Harvesting: A Hierarchical Multi-Agent Reinforcement Learning Approach. Electronics 2023, 12, 1304.

- He, Z.; Dong, L.; Sun, C.; Wang, J. Asynchronous Multithreading Reinforcement-Learning-Based Path Planning and Tracking for Unmanned Underwater Vehicle. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 2757–2769.

- Qian, F.; Su, K.; Liang, X.; Zhang, K. Task Assignment for UAV Swarm Saturation Attack: A Deep Reinforcement Learning Approach. Electronics 2023, 12, 1292.

- Fang, Z.; Jiang, D.; Huang, J.; Cheng, C.; Sha, Q.; He, B.; Li, G. Autonomous underwater vehicle formation control and obstacle avoidance using multi-agent generative adversarial imitation learning. Ocean Eng. 2022, 262, 112182.

- Ding, C.; Zheng, Z. A Reinforcement Learning Approach Based on Automatic Policy Amendment for Multi-AUV Task Allocation in Ocean Current. Drones 2022, 6, 141.

- Liang, Z.; Dai, Y.; Lyu, L.; Lin, B. Adaptive Data Collection and Offloading in Multi-UAV-Assisted Maritime IoT Systems: A Deep Reinforcement Learning Approach. Remote Sens. 2023, 15, 292.

- Zhang, K.; He, F.; Zhang, Z.; Lin, X.; Li, M. Multi-vehicle routing problems with soft time windows: A multiagent reinforcement learning approach. Transp. Res. C Emerg. Technol. 2022, 121, 102861.

- Kool, W.; van Hoof, H.; Welling, M. Attention, Learn to Solve Routing Problems. In Proceedings of the 2019 International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Zuo, L.; Gao, S.; Li, Y.; Li, L.; Li, M.; Lu, X. A Fast and Robust Algorithm with Reinforcement Learning for Large UAV Cluster Mission Planning. Remote Sens. 2022, 14, 1304.

- Ren, L.; Fan, X.; Cui, J.; Shen, Z.; Lv, Y.; Xiong, G. A Multi-Agent Reinforcement Learning Method with Route Recorders for Vehicle Routing in Supply Chain Management. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16410–16420.

- Chen, T.; Yang, T.; Yu, Y. Multi-UAV Task Assignment with Parameter and Time-Sensitive Uncertainties Using Modified Two-Part Wolf Pack Search Algorithm. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 2853–2872.

- Duan, H.; Zhao, J.; Deng, Y.; Shi, Y.; Ding, X. Dynamic Discrete Pigeon-Inspired Optimization for Multi-UAV Cooperative Search-Attack Mission Planning. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 706–720.

| Algorithm | Case 1 Value | Case 1 Time | Case 2 Value | Case 2 Time | Case 3 Value | Case 3 Time |
|---|---|---|---|---|---|---|
| MTWPS | 209.2 | 12.3 s | 223.1 | 13.1 s | 197.1 | 12.5 s |
| DDPIO | 206.5 | 14.6 s | 264.6 | 17.2 s | 211.1 | 14.9 s |
| MARLAM | 203.4 | 2.0 s | 210.2 | 2.3 s | 197.0 | 1.5 s |
| MARLAM-PTM | 205.8 | 1.8 s | 205.6 | 2.4 s | 183.3 | 1.5 s |
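The runtime comparison in the table can be made concrete with a few lines of arithmetic. The Python sketch below transcribes the reported values and computes two things per case:

- how many times longer a baseline takes than MARLAM-PTM;
- which algorithm attains the lowest objective value.

The helper names `runtime_ratio` and `best_value` are hypothetical. Treating a lower objective value as better is an assumption, since the objective function itself is not reproduced in this excerpt.

```python
# (objective value, runtime in seconds) per algorithm and case, transcribed from the table
RESULTS = {
    "MTWPS":      {"Case 1": (209.2, 12.3), "Case 2": (223.1, 13.1), "Case 3": (197.1, 12.5)},
    "DDPIO":      {"Case 1": (206.5, 14.6), "Case 2": (264.6, 17.2), "Case 3": (211.1, 14.9)},
    "MARLAM":     {"Case 1": (203.4, 2.0),  "Case 2": (210.2, 2.3),  "Case 3": (197.0, 1.5)},
    "MARLAM-PTM": {"Case 1": (205.8, 1.8),  "Case 2": (205.6, 2.4),  "Case 3": (183.3, 1.5)},
}


def runtime_ratio(results, fast, slow):
    """Per case: runtime of `slow` divided by runtime of `fast`."""
    return {case: results[slow][case][1] / results[fast][case][1] for case in results[fast]}


def best_value(results):
    """Per case: algorithm with the lowest objective value (lower assumed better)."""
    cases = results["MARLAM-PTM"].keys()
    return {case: min(results, key=lambda alg: results[alg][case][0]) for case in cases}


for case, ratio in runtime_ratio(RESULTS, "MARLAM-PTM", "MTWPS").items():
    print(f"{case}: MTWPS takes {ratio:.1f}x as long as MARLAM-PTM")
print(best_value(RESULTS))
```

With these numbers, MTWPS takes roughly 5.5 to 8.3 times as long as MARLAM-PTM. Under the lower-is-better assumption, MARLAM-PTM has the lowest value in Cases 2 and 3, while MARLAM is lowest in Case 1.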

| Case | Scale (tasks-UUVs) | MARLAM-PTM | MARLAM | MTWPS | DDPIO |
|---|---|---|---|---|---|
| Case 4-1 | 40-4 | 205.62 | 206.5 | 209.3 | 216.2 |
| Case 4-2 | 40-4 | 229.65 | 232.98 | 224.16 | 233.24 |
| Case 4-3 | 40-4 | 193.35 | 194.68 | 195.24 | 204.27 |
| Case 5-1 | 60-4 | 252.56 | 259.61 | 270.27 | 274.86 |
| Case 5-2 | 60-4 | 259.87 | 261.48 | 267.07 | 269.51 |
| Case 5-3 | 60-4 | 259.90 | 261.28 | 258.54 | 267.67 |
| Case 6-1 | 40-3 | 181.84 | 189.08 | 190.42 | 190.06 |
| Case 6-2 | 40-3 | 199.19 | 217.81 | 205.88 | 210.12 |
| Case 6-3 | 40-3 | 199.98 | 203.8 | 200.52 | 209.11 |
| Case 7-1 | 60-3 | 248.96 | 253.98 | 244.82 | 258.10 |
| Case 7-2 | 60-3 | 249.77 | 251.63 | 254.20 | 256.03 |
| Case 7-3 | 60-3 | 244.40 | 245.89 | 244.12 | 260.85 |
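The larger comparison in the table can be summarized programmatically. The objective function is not reproduced here, so the Python sketch below assumes that a lower value is better. It does two things:

- counts how often each algorithm attains the lowest value across the twelve cases;
- computes MARLAM-PTM's mean relative gap against another method.

The names `ALGS`, `TABLE`, `winners` and `mean_relative_gap` are hypothetical helpers, not part of the paper.

```python
from collections import Counter

# Column order matches the table above.
ALGS = ("MARLAM-PTM", "MARLAM", "MTWPS", "DDPIO")

# case -> objective values in ALGS order, transcribed from the table
TABLE = {
    "4-1": (205.62, 206.5, 209.3, 216.2),
    "4-2": (229.65, 232.98, 224.16, 233.24),
    "4-3": (193.35, 194.68, 195.24, 204.27),
    "5-1": (252.56, 259.61, 270.27, 274.86),
    "5-2": (259.87, 261.48, 267.07, 269.51),
    "5-3": (259.90, 261.28, 258.54, 267.67),
    "6-1": (181.84, 189.08, 190.42, 190.06),
    "6-2": (199.19, 217.81, 205.88, 210.12),
    "6-3": (199.98, 203.8, 200.52, 209.11),
    "7-1": (248.96, 253.98, 244.82, 258.10),
    "7-2": (249.77, 251.63, 254.20, 256.03),
    "7-3": (244.40, 245.89, 244.12, 260.85),
}


def winners(table):
    """Per case: algorithm with the lowest value (lower assumed better)."""
    return {case: ALGS[vals.index(min(vals))] for case, vals in table.items()}


def mean_relative_gap(table, ours, other):
    """Mean of (other - ours) / other over all cases; positive means `ours` is lower."""
    i, j = ALGS.index(ours), ALGS.index(other)
    gaps = [(vals[j] - vals[i]) / vals[j] for vals in table.values()]
    return sum(gaps) / len(gaps)


wins = Counter(winners(TABLE).values())
gap = mean_relative_gap(TABLE, "MARLAM-PTM", "MARLAM")
print(wins, f"{gap:.2%}")
```

With the transcribed values, MARLAM-PTM has the lowest value in 8 of the 12 cases and MTWPS in the remaining 4. MARLAM-PTM is also lower than MARLAM in every case.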

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xie, J.; Yang, K.; Gao, S.; Bao, S.; Zuo, L.; Wei, X. A Period Training Method for Heterogeneous UUV Dynamic Task Allocation. Electronics 2023, 12, 2508. https://doi.org/10.3390/electronics12112508

Xie J, Yang K, Gao S, Bao S, Zuo L, Wei X. A Period Training Method for Heterogeneous UUV Dynamic Task Allocation. Electronics. 2023; 12(11):2508. https://doi.org/10.3390/electronics12112508

Chicago/Turabian Style: Xie, Jiaxuan, Kai Yang, Shan Gao, Shixiong Bao, Lei Zuo, and Xiangyu Wei. 2023. "A Period Training Method for Heterogeneous UUV Dynamic Task Allocation" Electronics 12, no. 11: 2508. https://doi.org/10.3390/electronics12112508

APA Style: Xie, J., Yang, K., Gao, S., Bao, S., Zuo, L., & Wei, X. (2023). A Period Training Method for Heterogeneous UUV Dynamic Task Allocation. Electronics, 12(11), 2508. https://doi.org/10.3390/electronics12112508