Gaze-Based Human–Computer Interaction for Museums and Exhibitions: Technologies, Applications and Future Perspectives

Abstract

1. Introduction

2. Objectives

3. Eye Tracking Technology

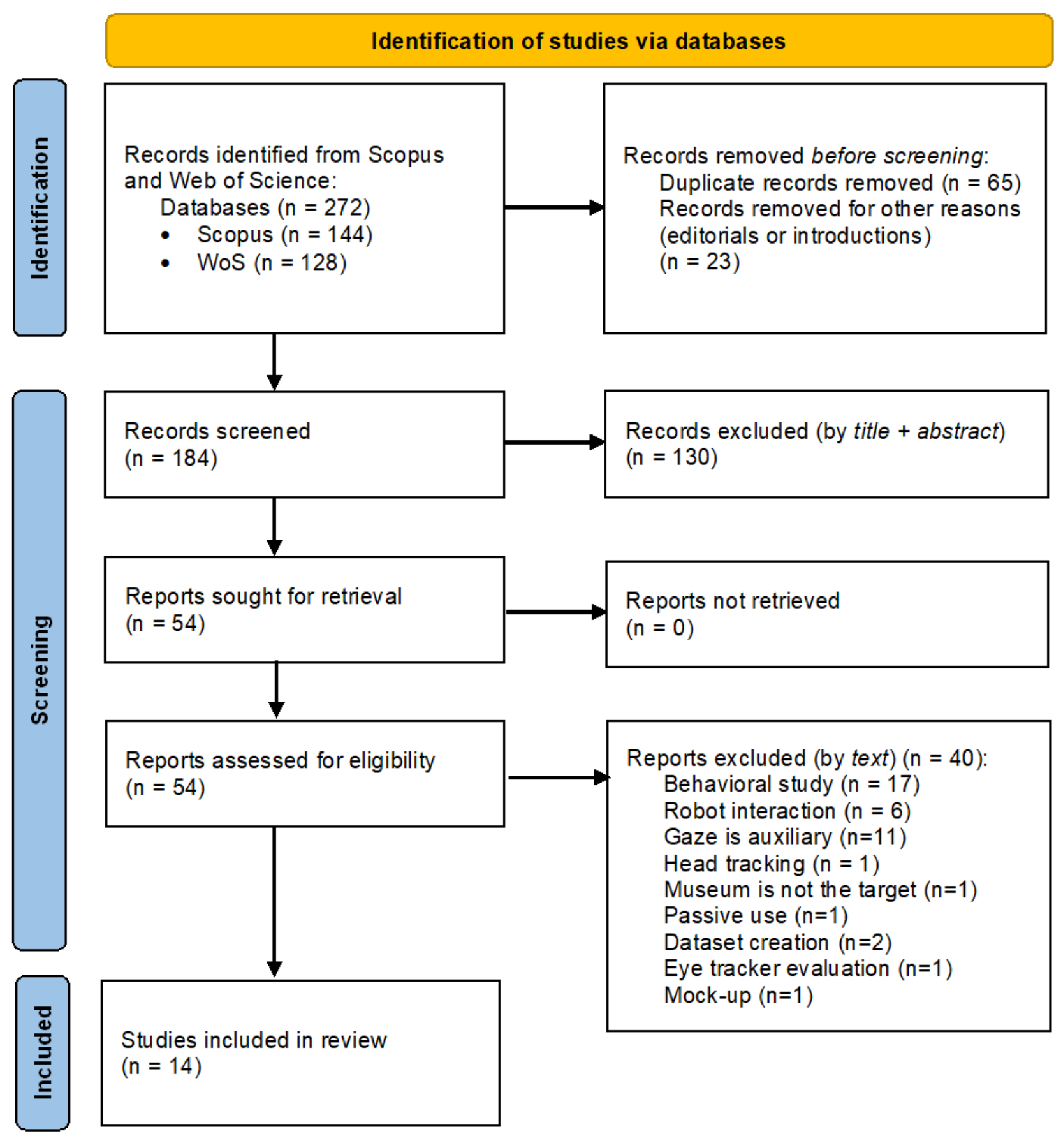

4. Materials and Methods

4.1. Searching Methodology

4.2. Selection Criteria

4.3. Selected Records

5. Results

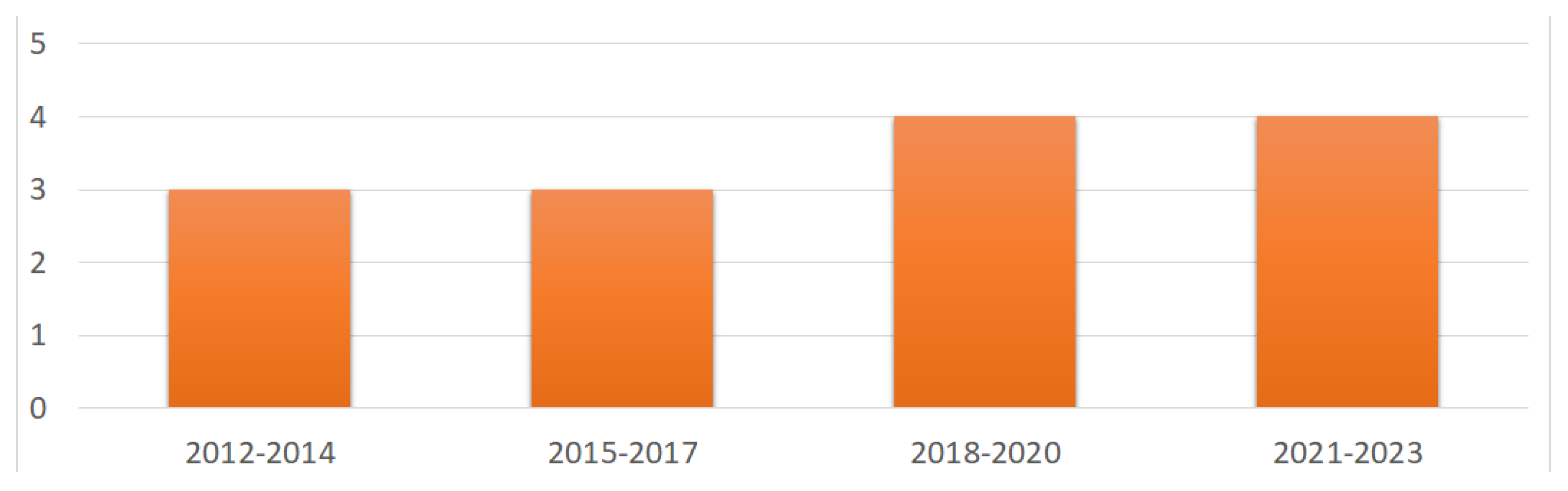

5.1. Initial Analysis

5.2. Wearable Eye Trackers/AR Applications

5.3. Remote Eye Trackers/Non-Immersive VR Applications

6. Discussion

6.1. Summary on the Use of Wearable Eye Trackers/AR Applications

6.2. Summary on the Use of Remote Eye Trackers/Non-Immersive VR Applications

6.3. General Considerations

6.4. Future Perspectives

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Pedersen, I.; Gale, N.; Mirza-Babaei, P.; Reid, S. More than Meets the Eye: The Benefits of Augmented Reality and Holographic Displays for Digital Cultural Heritage. J. Comput. Cult. Herit. 2017, 10, 11.

2. Ibrahim, N.; Ali, N.M. A Conceptual Framework for Designing Virtual Heritage Environment for Cultural Learning. J. Comput. Cult. Herit. 2018, 11, 1–27.

3. Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A Survey of Augmented, Virtual, and Mixed Reality for Cultural Heritage. J. Comput. Cult. Herit. 2018, 11, 1–36.

4. Wang, C.; Zhu, Y. A Survey of Museum Applied Research Based on Mobile Augmented Reality. Comput. Intell. Neurosci. 2022, 2022, 2926241.

5. Mortara, M.; Catalano, C.E.; Bellotti, F.; Fiucci, G.; Houry-Panchetti, M.; Petridis, P. Learning cultural heritage by serious games. J. Cult. Herit. 2014, 15, 318–325.

6. DaCosta, B.; Kinsell, C. Serious Games in Cultural Heritage: A Review of Practices and Considerations in the Design of Location-Based Games. Educ. Sci. 2023, 13, 47.

7. Styliani, S.; Fotis, L.; Kostas, K.; Petros, P. Virtual museums, a survey and some issues for consideration. J. Cult. Herit. 2009, 10, 520–528.

8. Choi, B.; Kim, J. Changes and Challenges in Museum Management after the COVID-19 Pandemic. J. Open Innov. Technol. Mark. Complex. 2021, 7, 148.

9. Giannini, T.; Bowen, J.P. Museums and Digital Culture: From Reality to Digitality in the Age of COVID-19. Heritage 2022, 5, 192–214.

10. Fanini, B.; d’Annibale, E.; Demetrescu, E.; Ferdani, D.; Pagano, A. Engaging and shared gesture-based interaction for museums the case study of K2R international expo in Rome. In Proceedings of the 2015 Digital Heritage, Granada, Spain, 28 September–2 October 2015; Volume 1, pp. 263–270.

11. Yoshida, R.; Tamaki, H.; Sakai, T.; Nakadai, T.; Ogitsu, T.; Takemura, H.; Mizoguchi, H.; Namatame, M.; Saito, M.; Kusunoki, F.; et al. Novel application of Kinect sensor to support immersive learning within museum for children. In Proceedings of the 2015 9th International Conference on Sensing Technology (ICST), Auckland, New Zealand, 8–10 December 2015; pp. 834–837.

12. Dondi, P.; Lombardi, L.; Rocca, I.; Malagodi, M.; Licchelli, M. Multimodal workflow for the creation of interactive presentations of 360 spin images of historical violins. Multimed. Tools Appl. 2018, 77, 28309–28332.

13. Buquet, C.; Charlier, J.; Paris, V. Museum application of an eye tracker. Med. Biol. Eng. Comput. 1988, 26, 277–281.

14. Wooding, D.S.; Mugglestone, M.D.; Purdy, K.J.; Gale, A.G. Eye movements of large populations: I. Implementation and performance of an autonomous public eye tracker. Behav. Res. Methods Instrum. Comput. 2002, 34, 509–517.

15. Wooding, D.S. Eye movements of large populations: II. Deriving regions of interest, coverage, and similarity using fixation maps. Behav. Res. Methods Instrum. Comput. 2002, 34, 518–528.

16. Milekic, S. Gaze-tracking and museums: Current research and implications. In Museums and the Web 2010: Proceedings; Archives & Museum Informatics: Toronto, ON, Canada, 2010; pp. 61–70.

17. Eghbal-Azar, K.; Widlok, T. Potentials and Limitations of Mobile Eye Tracking in Visitor Studies. Soc. Sci. Comput. Rev. 2013, 31, 103–118.

18. Villani, D.; Morganti, F.; Cipresso, P.; Ruggi, S.; Riva, G.; Gilli, G. Visual exploration patterns of human figures in action: An eye tracker study with art paintings. Front. Psychol. 2015, 6, 1636.

19. Calandra, D.M.; Di Mauro, D.; D’Auria, D.; Cutugno, F. E.Y.E. C.U.: An Emotional eYe trackEr for Cultural heritage sUpport. In Empowering Organizations: Enabling Platforms and Artefacts; Springer International Publishing: Cham, Switzerland, 2016; pp. 161–172.

20. Das, D.; Rashed, M.G.; Kobayashi, Y.; Kuno, Y. Supporting Human–Robot Interaction Based on the Level of Visual Focus of Attention. IEEE Trans. Hum. Mach. Syst. 2015, 45, 664–675.

21. Rashed, M.G.; Suzuki, R.; Lam, A.; Kobayashi, Y.; Kuno, Y. A vision based guide robot system: Initiating proactive social human robot interaction in museum scenarios. In Proceedings of the 2015 International Conference on Computer and Information Engineering (ICCIE), Rajshahi, Bangladesh, 26–27 November 2015; pp. 5–8.

22. Iio, T.; Satake, S.; Kanda, T.; Hayashi, K.; Ferreri, F.; Hagita, N. Human-like guide robot that proactively explains exhibits. Int. J. Soc. Robot. 2020, 12, 549–566.

23. Duchowski, A.T. Eye Tracking Methodology: Theory and Practice, 3rd ed.; Springer International Publishing AG: Cham, Switzerland, 2017.

24. Velichkovsky, B.M.; Dornhoefer, S.M.; Pannasch, S.; Unema, P.J. Visual Fixations and Level of Attentional Processing. In Proceedings of the ETRA 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; ACM: New York, NY, USA, 2000; pp. 79–85.

25. Robinson, D.A. The mechanics of human saccadic eye movement. J. Physiol. 1964, 174, 245–264.

26. Shackel, B. Pilot study in electro-oculography. Br. J. Ophthalmol. 1960, 44, 89.

27. Robinson, D.A. A Method of Measuring Eye Movement Using a Scleral Search Coil in a Magnetic Field. IEEE Trans. Bio-Med. Electron. 1963, 10, 137–145.

28. Mele, M.L.; Federici, S. Gaze and eye-tracking solutions for psychological research. Cogn. Process. 2012, 13, 261–265.

29. Popa, L.; Selejan, O.; Scott, A.; Mureşanu, D.F.; Balea, M.; Rafila, A. Reading beyond the glance: Eye tracking in neurosciences. Neurol. Sci. 2015, 36, 683–688.

30. Wedel, M.; Pieters, R. Eye tracking for visual marketing. Found. Trends Mark. 2008, 1, 231–320.

31. Cantoni, V.; Perez, C.J.; Porta, M.; Ricotti, S. Exploiting Eye Tracking in Advanced E-Learning Systems. In Proceedings of the CompSysTech ’12: 13th International Conference on Computer Systems and Technologies, Ruse, Bulgaria, 22–23 June 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 376–383.

32. Nielsen, J.; Pernice, K. Eyetracking Web Usability; New Riders Press: Thousand Oaks, CA, USA, 2009.

33. Mosconi, M.; Porta, M.; Ravarelli, A. On-Line Newspapers and Multimedia Content: An Eye Tracking Study. In Proceedings of the SIGDOC ’08: 26th Annual ACM International Conference on Design of Communication, Lisbon, Portugal, 22–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 55–64.

34. Kasprowski, P.; Ober, J. Eye Movements in Biometrics. In Biometric Authentication; Maltoni, D., Jain, A.K., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 248–258.

35. Porta, M.; Dondi, P.; Zangrandi, N.; Lombardi, L. Gaze-Based Biometrics From Free Observation of Moving Elements. IEEE Trans. Biom. Behav. Identity Sci. 2022, 4, 85–96.

36. Duchowski, A.T. Gaze-based interaction: A 30 year retrospective. Comput. Graph. 2018, 73, 59–69.

37. Majaranta, P.; Räihä, K.J. Text entry by gaze: Utilizing eye-tracking. In Text Entry Systems: Mobility, Accessibility, Universality; Morgan Kaufmann: San Francisco, CA, USA, 2007; pp. 175–187.

38. Porta, M. A study on text entry methods based on eye gestures. J. Assist. Technol. 2015, 9, 48–67.

39. Porta, M.; Dondi, P.; Pianetta, A.; Cantoni, V. SPEye: A Calibration-Free Gaze-Driven Text Entry Technique Based on Smooth Pursuit. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 312–323.

40. Kumar, C.; Menges, R.; Müller, D.; Staab, S. Chromium based framework to include gaze interaction in web browser. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; pp. 219–223.

41. Casarini, M.; Porta, M.; Dondi, P. A Gaze-Based Web Browser with Multiple Methods for Link Selection. In Proceedings of the ETRA ’20 Adjunct: ACM Symposium on Eye Tracking Research and Applications, Stuttgart, Germany, 2–5 June 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–8.

42. Davanzo, N.; Dondi, P.; Mosconi, M.; Porta, M. Playing Music with the Eyes through an Isomorphic Interface. In Proceedings of the COGAIN ’18: Workshop on Communication by Gaze Interaction, Warsaw, Poland, 15 June 2018; ACM: New York, NY, USA, 2018; pp. 5:1–5:5.

43. Valencia, S.; Lamb, D.; Williams, S.; Kulkarni, H.S.; Paradiso, A.; Ringel Morris, M. Dueto: Accessible, Gaze-Operated Musical Expression. In Proceedings of the ASSETS ’19: 21st International ACM SIGACCESS Conference on Computers and Accessibility, Pittsburgh, PA, USA, 28–30 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 513–515.

44. Jacob, R.J. Eye movement-based human-computer interaction techniques: Toward non-command interfaces. Adv. Hum.-Comput. Interact. 1993, 4, 151–190.

45. Wobbrock, J.O.; Rubinstein, J.; Sawyer, M.W.; Duchowski, A.T. Longitudinal Evaluation of Discrete Consecutive Gaze Gestures for Text Entry. In Proceedings of the ETRA ’08: 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; ACM: New York, NY, USA, 2008; pp. 11–18.

46. Porta, M.; Turina, M. Eye-S: A Full-Screen Input Modality for Pure Eye-Based Communication. In Proceedings of the ETRA ’08: 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; ACM: New York, NY, USA, 2008; pp. 27–34.

47. Istance, H.; Bates, R.; Hyrskykari, A.; Vickers, S. Snap clutch, a moded approach to solving the Midas touch problem. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications, Savannah, GA, USA, 26–28 March 2008; pp. 221–228.

48. Królak, A.; Strumiłło, P. Eye-blink detection system for human–computer interaction. Univ. Access Inf. Soc. 2012, 11, 409–419.

49. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

50. Majaranta, P.; Aoki, H.; Donegan, M.; Witzner Hansen, D.; Hansen, J.P. Gaze Interaction and Applications of Eye Tracking: Advances in Assistive Technologies; IGI Global: Hershey, PA, USA, 2011.

51. Zeng, Z.; Neuer, E.S.; Roetting, M.; Siebert, F.W. A One-Point Calibration Design for Hybrid Eye Typing Interface. Int. J. Hum. Comput. Interact. 2022, 1–14.

52. Nagamatsu, T.; Fukuda, K.; Yamamoto, M. Development of Corneal Reflection-Based Gaze Tracking System for Public Use. In Proceedings of the PerDis ’14: International Symposium on Pervasive Displays, Copenhagen, Denmark, 3–4 June 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 194–195.

53. Cantoni, V.; Merlano, L.; Nugrahaningsih, N.; Porta, M. Eye Tracking for Cultural Heritage: A Gaze-Controlled System for Handless Interaction with Artworks. In Proceedings of the CompSysTech ’16: 17th International Conference on Computer Systems and Technologies 2016, Palermo, Italy, 23–24 June 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 307–314.

54. Cantoni, V.; Dondi, P.; Lombardi, L.; Nugrahaningsih, N.; Porta, M.; Setti, A. A Multi-Sensory Approach to Cultural Heritage: The Battle of Pavia Exhibition. IOP Conf. Ser. Mater. Sci. Eng. 2018, 364, 012039.

55. Mokatren, M.; Kuflik, T.; Shimshoni, I. A Novel Image Based Positioning Technique Using Mobile Eye Tracker for a Museum Visit. In Proceedings of the MobileHCI ’16: 18th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, Florence, Italy, 6–9 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 984–991.

56. Mokatren, M.; Kuflik, T.; Shimshoni, I. Exploring the Potential Contribution of Mobile Eye-Tracking Technology in Enhancing the Museum Visit Experience. In Proceedings of the AVI*CH, Bari, Italy, 7–10 June 2016; pp. 23–31.

57. Mokatren, M.; Kuflik, T.; Shimshoni, I. Exploring the potential of a mobile eye tracker as an intuitive indoor pointing device: A case study in cultural heritage. Future Gener. Comput. Syst. 2018, 81, 528–541.

58. Piening, R.; Pfeuffer, K.; Esteves, A.; Mittermeier, T.; Prange, S.; Schröder, P.; Alt, F. Looking for Info: Evaluation of Gaze Based Information Retrieval in Augmented Reality. In Human-Computer Interaction—INTERACT 2021; Ardito, C., Lanzilotti, R., Malizia, A., Petrie, H., Piccinno, A., Desolda, G., Inkpen, K., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 544–565.

59. Giariskanis, F.; Kritikos, Y.; Protopapadaki, E.; Papanastasiou, A.; Papadopoulou, E.; Mania, K. The Augmented Museum: A Multimodal, Game-Based, Augmented Reality Narrative for Cultural Heritage. In Proceedings of the IMX ’22: ACM International Conference on Interactive Media Experiences, Aveiro, Portugal, 22–24 June 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 281–286.

60. Toyama, T.; Kieninger, T.; Shafait, F.; Dengel, A. Gaze Guided Object Recognition Using a Head-Mounted Eye Tracker. In Proceedings of the ETRA ’12: Symposium on Eye Tracking Research and Applications, Santa Barbara, CA, USA, 28–30 March 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 91–98.

61. Schuchert, T.; Voth, S.; Baumgarten, J. Sensing Visual Attention Using an Interactive Bidirectional HMD. In Proceedings of the Gaze-In ’12: 4th Workshop on Eye Gaze in Intelligent Human Machine Interaction, Santa Monica, CA, USA, 26 October 2012; Association for Computing Machinery: New York, NY, USA, 2012.

62. Yang, J.; Chan, C.Y. Audio-Augmented Museum Experiences with Gaze Tracking. In Proceedings of the MUM ’19: 18th International Conference on Mobile and Ubiquitous Multimedia, Pisa, Italy, 26–29 November 2019; Association for Computing Machinery: New York, NY, USA, 2019.

63. Dondi, P.; Porta, M.; Donvito, A.; Volpe, G. A gaze-based interactive system to explore artwork imagery. J. Multimodal User Interfaces 2022, 16, 55–67.

64. Al-Thani, L.K.; Liginlal, D. A Study of Natural Interactions with Digital Heritage Artifacts. In Proceedings of the 2018 3rd Digital Heritage International Congress (DigitalHERITAGE) Held Jointly with 2018 24th International Conference on Virtual Systems & Multimedia (VSMM 2018), San Francisco, CA, USA, 26–30 October 2018; pp. 1–4.

65. Raptis, G.E.; Kavvetsos, G.; Katsini, C. MuMIA: Multimodal Interactions to Better Understand Art Contexts. Appl. Sci. 2021, 11, 2695.

66. Porta, M.; Caminiti, A.; Dondi, P. GazeScale: Towards General Gaze-Based Interaction in Public Places. In Proceedings of the ICMI ’22: 2022 International Conference on Multimodal Interaction, Bengaluru, India, 7–11 November 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 591–596.

67. Zeng, Z.; Liu, S.; Cheng, H.; Liu, H.; Li, Y.; Feng, Y.; Siebert, F. GaVe: A webcam-based gaze vending interface using one-point calibration. J. Eye Mov. Res. 2023, 16.

68. Mu, M.; Dohan, M. Community Generated VR Painting Using Eye Gaze. In Proceedings of the MM ’21: 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 2765–2767.

69. Pathirana, P.; Senarath, S.; Meedeniya, D.; Jayarathna, S. Eye gaze estimation: A survey on deep learning-based approaches. Expert Syst. Appl. 2022, 199, 116894.

70. Plopski, A.; Hirzle, T.; Norouzi, N.; Qian, L.; Bruder, G.; Langlotz, T. The Eye in Extended Reality: A Survey on Gaze Interaction and Eye Tracking in Head-Worn Extended Reality. ACM Comput. Surv. 2022, 55, 1–39.

| Museum Type | Studies |
|---|---|
| Real Museum | [52,53,54,55,56,57,58,59] |
| Simulated Museum | [60,61,62,63] |
| Virtual Museum | [64,65] |

| Application Type | Studies |
|---|---|
| AR | [55,56,57,58,59,60,61,62] |
| VR (non-immersive) | [52,53,54,63,64,65] |

| Eye Tracker Type | Studies |
|---|---|
| Wearable Eye Tracker | [55,56,57,58,59,60,61,62] |
| Remote Eye Tracker | [52,53,54,63,64,65] |

| Interaction Modality | Studies |
|---|---|
| Gaze only | [52,53,54,58,60,61,62,63,64] |
| Gaze and Voice | [65] |
| Gaze and Gesture | [55,56,57] |
| Gaze, Voice and Gesture | [59] |
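
Gaze-only interaction, the most frequent modality above, needs a way to distinguish deliberate selection from mere looking (the "Midas touch" problem addressed in [47]). A widely used approach is dwell-time selection: an element is activated only after the gaze has rested on it for a fixed time. The Python sketch below illustrates that idea under stated assumptions and is not the implementation of any reviewed system. The `Target` and `DwellSelector` names, the rectangular targets, and the 1000 ms default threshold are hypothetical; gaze samples are assumed to arrive already mapped to screen pixels, with millisecond timestamps.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Target:
    """A rectangular on-screen element (e.g., an artwork detail), in pixels."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


class DwellSelector:
    """Activates a target once the gaze has stayed on it for `dwell_ms`.

    Merely looking at an element does not select it; only a sufficiently
    long, uninterrupted dwell does, which mitigates the Midas touch problem.
    """

    def __init__(self, targets: list[Target], dwell_ms: float = 1000.0):
        self.targets = targets
        self.dwell_ms = dwell_ms
        self._current: Optional[Target] = None  # target currently under gaze
        self._enter_ms = 0.0                     # when the gaze entered it
        self._fired = False                      # already selected in this dwell?

    def update(self, gx: float, gy: float, t_ms: float) -> Optional[str]:
        """Process one gaze sample; return a target name when a selection occurs."""
        hit = next((tg for tg in self.targets if tg.contains(gx, gy)), None)
        if hit is not self._current:
            # Gaze moved to another target or to empty space: restart the timer.
            self._current, self._enter_ms, self._fired = hit, t_ms, False
            return None
        if hit is not None and not self._fired and t_ms - self._enter_ms >= self.dwell_ms:
            self._fired = True  # select only once per continuous dwell
            return hit.name
        return None


if __name__ == "__main__":
    selector = DwellSelector([Target("next", 0, 0, 400, 300),
                              Target("info", 500, 0, 400, 300)], dwell_ms=800)
    # Simulated 10 Hz gaze stream resting on the "next" element.
    for t in range(0, 1001, 100):
        chosen = selector.update(100, 100, t)
        if chosen:
            print(f"{t} ms: selected {chosen}")  # 800 ms: selected next
```

Because the timer restarts whenever the gaze leaves the element, a single noisy sample can interrupt a dwell; larger targets make such interruptions less likely, at the cost of fewer elements per screen.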

| Type of Eye Tracker | Pros | Cons |
|---|---|---|
| Wearable | Freedom of movement | Hygienic risk; may be uncomfortable to wear; usually more expensive |
| Remote | Hygienic solution; usually cheaper | May require user to stay relatively still |

| Goal | How the Goal Can Be Achieved |
|---|---|
| Removing/reducing calibration | Designing interfaces with large target elements; implementing quick “rough” calibration (e.g., one-point calibration); exploiting new AI-based gaze estimation methods |
| Creating immersive VR applications | Applying existing immersive VR gaze-based interaction methods (e.g., those employed in video games); promoting the digitization of artworks (with the consequent creation of more virtual museums) |
| Consolidating AR applications | Extensively testing and enhancing existing AR approaches in museums (e.g., regarding robustness and responsiveness) |
| Implementing personalized tours | Performing real-time processing of visitors’ gaze patterns; providing recommendations based on visitors’ interests (e.g., suggesting artworks similar to those previously observed) |
| Improving museum accessibility | Carrying out tests with motor-impaired people; contextualizing existing gaze-based assistive applications to the museum environment |
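
The first goal in the table, removing or reducing calibration, can be illustrated with a deliberately simple model. The Python sketch below assumes that, after a one-point calibration, the remaining gaze error is a constant translation: the visitor looks at a single known point, the mean of the recorded raw samples is compared with that point, and the resulting offset is added to every later sample. The constant-offset model is an assumption made for illustration (real trackers may show errors that vary across the screen), and `one_point_offset` and `apply_offset` are hypothetical names, not taken from any reviewed system or vendor SDK.

```python
from statistics import mean

# A gaze point in screen pixels (x, y).
Point = tuple[float, float]


def one_point_offset(raw_samples: list[Point], target: Point) -> Point:
    """Estimate a constant (dx, dy) correction from raw gaze samples recorded
    while the visitor fixates a single known calibration point.

    Assumption: the tracker's error is a pure translation, identical everywhere
    on the screen. Averaging several samples reduces fixation jitter.
    """
    if not raw_samples:
        raise ValueError("at least one gaze sample is required")
    mean_x = mean(p[0] for p in raw_samples)
    mean_y = mean(p[1] for p in raw_samples)
    return (target[0] - mean_x, target[1] - mean_y)


def apply_offset(sample: Point, offset: Point) -> Point:
    """Correct one raw gaze sample using the estimated offset."""
    return (sample[0] + offset[0], sample[1] + offset[1])


if __name__ == "__main__":
    # The visitor fixates a marker at (500, 400); the tracker reports points near (530, 410).
    calib = [(528.0, 409.0), (532.0, 411.0), (530.0, 410.0)]
    offset = one_point_offset(calib, (500.0, 400.0))
    print(offset)                                # (-30.0, -10.0)
    print(apply_offset((830.0, 610.0), offset))  # (800.0, 600.0)
```

Combined with large target elements, the residual error that a single calibration point cannot remove is more likely to stay inside the intended element.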

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dondi, P.; Porta, M. Gaze-Based Human–Computer Interaction for Museums and Exhibitions: Technologies, Applications and Future Perspectives. Electronics 2023, 12, 3064. https://doi.org/10.3390/electronics12143064

Dondi P, Porta M. Gaze-Based Human–Computer Interaction for Museums and Exhibitions: Technologies, Applications and Future Perspectives. Electronics. 2023; 12(14):3064. https://doi.org/10.3390/electronics12143064

Chicago/Turabian Style: Dondi, Piercarlo, and Marco Porta. 2023. "Gaze-Based Human–Computer Interaction for Museums and Exhibitions: Technologies, Applications and Future Perspectives" Electronics 12, no. 14: 3064. https://doi.org/10.3390/electronics12143064

APA Style: Dondi, P., & Porta, M. (2023). Gaze-Based Human–Computer Interaction for Museums and Exhibitions: Technologies, Applications and Future Perspectives. Electronics, 12(14), 3064. https://doi.org/10.3390/electronics12143064