Static Hand Gesture Recognition Based on Millimeter-Wave Near-Field FMCW-SAR Imaging

Abstract

1. Introduction

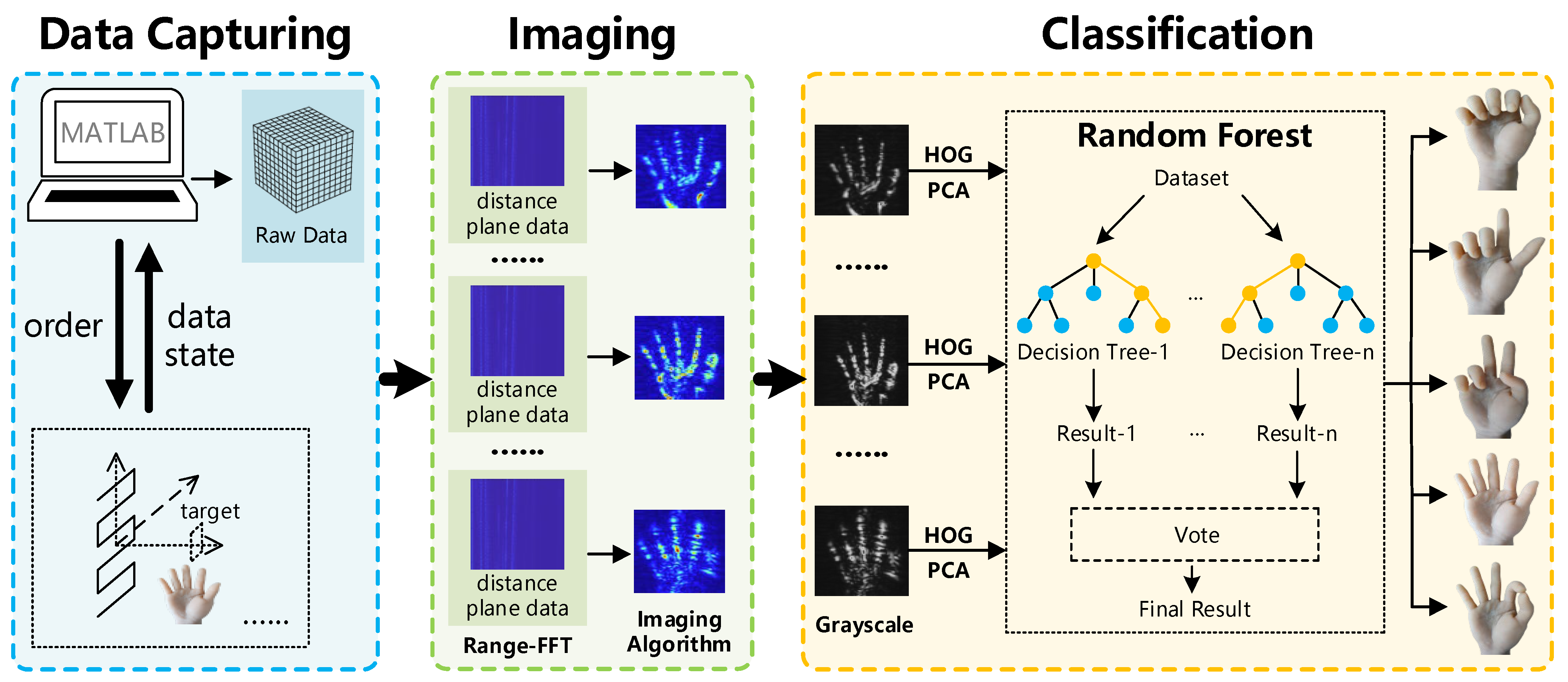

- We built a millimeter-wave near-field SAR imaging system and used it to image static gestures; the resulting images serve as the features for static gesture recognition. Unlike cameras, the millimeter-wave imaging system does not depend on lighting conditions and can scan static gestures effectively in dark environments.

- We present a method for constructing a dataset of static gesture images. The dataset was generated by processing different static gestures with different imaging algorithms at every distance plane. For this dataset we propose a classification approach based on HOG–PCA–RF: HOG extracts image features, PCA reduces their dimensionality, and Random Forest performs the classification.

- Our system captured data for five common static gestures and produced images that approximately reproduce the contours of the gestures. The classification results show that the proposed method achieves satisfactory recognition precision.

2. Related Work

2.1. Dynamic Hand Gesture Recognition Based on Wireless Sensing

2.2. Static Hand Gesture Recognition Based on Wireless Sensing

3. System Design

3.1. System Overview

3.2. Data Capturing

3.3. Static Hand Gesture Imaging

3.4. Gesture Classification

| Algorithm 1: Static Hand Gesture Classification |
|---|
| Input: |
| Output: |
| 1. |
| 2. // dim: dimension |
| 3. for i = 1:1:epoch |
| 4. |
| 5. end for |
| 6. |
| 7. function HOG (H) |
| 8. |
| 9. // x-direction gradient |
| 10. // y-direction gradient |
| 11. // gradient amplitude |
| 12. // gradient angle |
| 13. |
| 14. end function |
| 15. |
| 16. for i = 1:1:n |
| 17. // centralization |
| 18. end for |
| 19. // calculate the covariance matrix |
| 20. // calculate eigenvalue |
| 21. for i = 1:1:m |
| 22. // obtain feature vector |
| 23. end for |
| 24. // obtain projection matrix |
| 25. return WX |
| 26. end function |
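The gradient and PCA steps that survive as comments in Algorithm 1 can be sketched in NumPy. This is a minimal illustration under assumptions, not the authors' implementation: the function names `hog_features` and `pca_project`, the 8-pixel cell size, the 9 orientation bins, and the omission of block normalization are all choices made here for brevity. Samples are stored as rows, so the projection is written as `centered @ W.T`, the row-wise equivalent of the WX returned by the algorithm.

```python
import numpy as np


def hog_features(image, cell=8, bins=9):
    """Simplified HOG (assumed settings): per-cell orientation histograms
    weighted by gradient amplitude, without block normalization."""
    img = np.asarray(image, dtype=float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]  # x-direction gradient
    gy[1:-1, :] = img[2:, :] - img[:-2, :]  # y-direction gradient
    magnitude = np.hypot(gx, gy)            # gradient amplitude
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0  # unsigned gradient angle

    rows, cols = img.shape[0] // cell, img.shape[1] // cell
    histograms = []
    for r in range(rows):
        for c in range(cols):
            window = (slice(r * cell, (r + 1) * cell),
                      slice(c * cell, (c + 1) * cell))
            hist, _ = np.histogram(angle[window], bins=bins,
                                   range=(0.0, 180.0),
                                   weights=magnitude[window])
            histograms.append(hist)
    return np.concatenate(histograms)


def pca_project(X, dim):
    """PCA by eigendecomposition. X has shape (n_samples, n_features).
    Returns the projected data and the projection matrix W."""
    centered = X - X.mean(axis=0)             # centralization
    cov = np.cov(centered, rowvar=False)      # covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigenvalues, ascending order
    order = np.argsort(eigvals)[::-1][:dim]   # indices of the largest `dim`
    W = eigvecs[:, order].T                   # projection matrix (dim, n_features)
    return centered @ W.T, W


# Synthetic stand-in images; real inputs would be the SAR gesture images.
rng = np.random.default_rng(0)
images = rng.random((12, 64, 64))
features = np.stack([hog_features(im) for im in images])
reduced, W = pca_project(features, dim=5)
print(features.shape, reduced.shape)
```

`np.linalg.eigh` is used because the covariance matrix is symmetric; it returns real eigenvalues in ascending order, hence the explicit reordering before the leading eigenvectors are stacked into W.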

4. Experiment and Evaluation

4.1. Experimental Platform

4.2. Experimental Setup

4.3. Experimental Evaluation

4.3.1. Imaging Result

4.3.2. Classification Result

- Unobstructed scene.

- Obstructed scene.

5. Conclusions and Discussion

- Data capture is slow, which makes real-time operation difficult. Capturing one gesture takes about 30 min on our platform, and an experimenter cannot easily hold a hand still from beginning to end. This is one reason we used a simulated human hand for the imaging experiments; another is that simulated humans have already been used for imaging experiments in [34,35]. Future improvements are possible in two respects. On the hardware side, a radar with more antenna elements can be selected, at the expense of higher hardware cost. On the algorithm side, techniques such as Compressive Sensing, Deep Learning and Matrix Compensation can reduce the number of spatial sampling points by restoring under-sampled data to fully sampled data, thereby shortening the capture time.

- The system does not account for the same static gesture observed from different angles and directions, which limits its robustness. Future work can explore feature extraction methods that are invariant to angle and direction, followed by robustness-testing experiments.

- Because of the limited data capturing efficiency, the experiments had to be conducted with simulated hands. The material of a simulated hand is closer to ideal than a real human hand and has higher reflectivity, so it yields better imaging results. Simulated hands may therefore work better than real hands, and experiments with human hands should be carried out once data capturing efficiency improves.

- The cardboard box experiment shows that, unlike Computer Vision, wireless sensing can penetrate an obstruction and recognize gestures behind it. However, the cardboard box clearly affects the signal, and obstructions made of different materials affect it differently. According to the SAR imaging algorithm, the higher the reflectivity of a material (such as metal), the harder it is to penetrate; the lower the reflectivity (as with a cardboard box), the easier it is to penetrate. The penetration ability of wireless sensing is thus limited by the reflectivity, that is, the material, of the obstruction, and it is important to determine the penetration range of millimeter-wave imaging for each material.

- This article addresses static gesture recognition via wireless sensing and does not cover dynamic gestures. Recognizing dynamic and static gestures together with wireless sensing remains a major challenge for future work.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Gupta, H.P.; Chudgar, H.S.; Mukherjee, S.; Dutta, T.; Sharma, K. A continuous hand gestures recognition technique for human-machine interaction using accelerometer and gyroscope sensors. IEEE Sens. J. 2016, 16, 6425–6432.

- Lian, K.Y.; Chiu, C.C.; Hong, Y.J.; Sung, W.T. Wearable armband for real time hand gesture recognition. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2992–2995.

- Mistry, P.; Maes, P.; Chang, L. WUW-wear Ur world: A wearable gestural interface. In CHI’09 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2009; pp. 4111–4116.

- Xu, Y.; Zhou, H. Static gesture recognition based on OpenCV in a simple background. Comput. Sci. 2022, 49 (Suppl. S2), 393–398.

- Hu, Z.; Zhou, Y.; Shi, B.; He, H. Static gesture recognition algorithm based on attention mechanism and feature fusion. Comput. Eng. 2022, 48, 240–246.

- Oyedotun, O.K.; Khashman, A. Deep learning in vision-based static hand gesture recognition. Neural Comput. Appl. 2017, 28, 3941–3951.

- Ohn-Bar, E.; Trivedi, M.M. Hand gesture recognition in real time for automotive interfaces: A multimodal vision-based approach and evaluations. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2368–2377.

- Ren, Z.; Yuan, J.; Meng, J.; Zhang, Z. Robust part-based hand gesture recognition using kinect sensor. IEEE Trans. Multimed. 2013, 15, 1110–1120.

- Rogez, G.; Supancic, J.S.; Ramanan, D. Understanding everyday hands in action from RGB-D images. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3889–3897.

- Jian, H.E.; Li, J.J.; Cheng, Z.H.; Xin, W.E.; Jia, B.A.; Wang, W.D. Visual gesture recognition technology based on long and short-term memory and deep neural network. J. Graph. 2020, 41, 372–381.

- Sha, J.; Ma, J.; Mou, H.; Hou, J. Overview of dynamic gesture recognition based on vision. Comput. Sci. Appl. 2020, 10, 990–1001.

- Wang, L.; Ma, Z.; Tang, X.; Wang, Z.; Zhou, G.; He, S. A gesture recognition method inspired by visual perception for extravehicular activities. Manned Spacefl. 2017, 23, 805–810.

- Al-Qaness, M.A.A.; Li, F. WiGeR: WiFi-based gesture recognition system. ISPRS Int. J. Geo. Inf. 2016, 5, 92.

- Abdelnasser, H.; Harras, K.; Youssef, M. A ubiquitous WiFi-based fine-grained gesture recognition system. IEEE Trans. Mob. Comput. 2018, 18, 2474–2487.

- Ahmed, S.; Cho, S.H. Hand gesture recognition using an IR-UWB radar with an inception module-based classifier. Sensors 2020, 20, 564.

- Dong, Y.; Qu, W.; Qiu, L. Overview of Research on Millimeter Wave Radar Gesture Recognition. J. Ordnance Equip. Eng. 2021, 42, 119–125.

- Zhang, Z.; Tian, Z.; Zhou, M. Latern: Dynamic continuous hand gesture recognition using FMCW radar sensor. IEEE Sens. J. 2018, 18, 3278–3289.

- Wang, Y.; Wang, D.; Fu, Y.; Yao, D.; Xie, L.; Zhou, M. Multi-Hand Gesture Recognition Using Automotive FMCW Radar Sensor. Remote Sens. 2022, 14, 2374.

- Dang, X.; Bai, Y.; Hao, Z.; Liu, G. Wi-GC: A Deep Spatiotemporal Gesture Recognition Method Based on Wi-Fi Signal. Appl. Sci. 2022, 12, 10425.

- Zhang, T.; Song, T.; Chen, D.; Zhang, T.; Zhuang, J. WiGrus: A WiFi-based gesture recognition system using software-defined radio. IEEE Access 2019, 7, 131102–131113.

- Dang, X.; Wei, K.; Hao, Z.; Ma, Z. Cross-Scene Sign Language Gesture Recognition Based on Frequency-Modulated Continuous Wave Radar. Signals 2022, 3, 875–894.

- Wu, Q.; Zhao, D. Dynamic hand gesture recognition using FMCW radar sensor for driving assistance. In Proceedings of the 2018 10th International Conference on Wireless Communications and Signal Processing (WCSP), Hangzhou, China, 18–20 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Jin, B.; Peng, Y.; Kuang, X.; Zhang, Z.; Lian, Z.; Wang, B. Robust Dynamic Hand Gesture Recognition Based on Millimeter Wave Radar Using Atten-TsNN. IEEE Sens. J. 2022, 22, 10861–10869.

- Kim, S.Y.; Han, H.G.; Kim, J.W.; Lee, S.; Kim, T.W. A hand gesture recognition sensor using reflected impulses. IEEE Sens. J. 2017, 17, 2975–2976.

- Wang, P.; Jiang, R.; Liu, C. Amaging: Acoustic Hand Imaging for Self-adaptive Gesture Recognition. In Proceedings of the IEEE Infocom 2022-IEEE Conference on Computer Communications, London, UK, 2–5 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 80–89.

- Smith, J.W.; Thiagarajan, S.; Willis, R.; Makris, Y.; Torlak, M. Improved static hand gesture classification on deep convolutional neural networks using novel sterile training technique. IEEE Access 2021, 9, 10893–10902.

- Zidane, F.; Lanteri, J.; Brochier, L.; Joachimowicz, N.; Roussel, H.; Migliaccio, C. Damaged apple sorting with mmwave imaging and nonlinear support vector machine. IEEE Trans. Antennas Propag. 2020, 68, 8062–8071.

- Xu, W.; Song, W.; Liu, J.; Liu, Y.; Cui, X.; Zheng, Y.; Han, J.; Wang, X.; Ren, K. Mask does not matter: Anti-spoofing face authentication using mmWave without on-site registration. In Proceedings of the 28th Annual International Conference on Mobile Computing and Networking, Sydney, Australia, 17–21 October 2022; pp. 310–323.

- Li, J.; Stoica, P. MIMO Radar Signal Processing; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Texas Instruments. MIMO Radar. Available online: https://www.ti.com.cn/cn/lit/an/swra554a/swra554a.pdf (accessed on 1 July 2018).

- Yanik, M.E.; Torlak, M. Millimeter-wave near-field imaging with two-dimensional SAR data. In Proceedings of the SRC Techcon, Austin, TX, USA, 16–18 September 2018.

- Che, L.; Wu, X.; Wang, L.; Du, G.; Jiang, L. Improved algorithm of millimeter wave with complex network and sparse imaging. Appl. Res. Comput. 2022, 39, 604–608.

- Hao, Z.; Wang, R.; Dang, X.; Yan, H.; Peng, J. mmSight: A Robust Millimeter-Wave Near-Field SAR Imaging Algorithm. Appl. Sci. 2022, 12, 12085.

- Fan, B.; Gao, J.K.; Li, H.J.; Jiang, Z.J.; He, Y. Near-Field 3D SAR Imaging Using a Scanning Linear MIMO Array with Arbitrary Topologies. IEEE Access 2019, 8, 6782–6791.

- Sheen, D.; Mcmakin, D.; Hall, T. Near-field three-dimensional radar imaging techniques and applications. Appl. Opt. 2010, 49, E83–E93.

| FMCW Parameters | Value | Scanner Parameters | Value |
|---|---|---|---|
| Start Frequency (GHz) | 77 | (mm) | 160 |
| Chirp Slope (MHz/μs) | 57.115 | (mm) | 200 |
| Bandwidth (MHz) | 3998.05 | (mm) | 2 |
| ADC Samples | 384 | (mm) | 8 |
| Sample Rate (ksps) | 6250 | | 81 |
| | | | 26 |
| | | (mm) | 350~410 |
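The FMCW values in the table can be cross-checked with a few lines of arithmetic. The sketch below uses only the listed start frequency, chirp slope, bandwidth, ADC sample count and sample rate; the variable names are illustrative, and the range-resolution formula c / (2B) is the standard FMCW relation rather than something stated in the table. The table does not say whether the listed bandwidth refers to the full ramp or only to the portion covered by the ADC samples, so both are computed.

```python
# Cross-check of the FMCW parameters in the table (names are illustrative).
C = 299_792_458.0                 # speed of light, m/s

start_freq_hz = 77e9              # Start Frequency: 77 GHz
slope_hz_per_s = 57.115e12        # Chirp Slope: 57.115 MHz/us
bandwidth_hz = 3998.05e6          # Bandwidth: 3998.05 MHz
adc_samples = 384                 # ADC Samples
sample_rate_hz = 6250e3           # Sample Rate: 6250 ksps

# Ramp duration implied by the listed bandwidth and slope.
ramp_duration_s = bandwidth_hz / slope_hz_per_s

# Time window actually covered by the ADC samples, and the bandwidth
# swept during that window.
adc_window_s = adc_samples / sample_rate_hz
sampled_bandwidth_hz = slope_hz_per_s * adc_window_s

# Wavelength at the start frequency and range resolution c / (2B).
wavelength_m = C / start_freq_hz
range_res_full_m = C / (2 * bandwidth_hz)
range_res_sampled_m = C / (2 * sampled_bandwidth_hz)

print(f"ramp duration:      {ramp_duration_s * 1e6:.2f} us")
print(f"ADC window:         {adc_window_s * 1e6:.2f} us")
print(f"sampled bandwidth:  {sampled_bandwidth_hz / 1e6:.2f} MHz")
print(f"wavelength:         {wavelength_m * 1e3:.3f} mm")
print(f"range resolution:   {range_res_full_m * 1e3:.1f} mm (full ramp), "
      f"{range_res_sampled_m * 1e3:.1f} mm (sampled part)")
```

The listed bandwidth divided by the slope gives a 70 μs ramp, while 384 samples at 6250 ksps span 61.44 μs, so the samples cover roughly 3509 MHz of the sweep under these assumptions.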

| Algorithm | Hyperparameter |
|---|---|
| RF | n_estimators = 100, max_depth = 7, min_samples_leaf = 3 |
| SVM | C = 1.0, kernel = ‘rbf’, gamma = ‘auto’, probability = False, shrinking = True |
| KNN | n_neighbors = 5, weights = ‘uniform’, algorithm = ‘auto’, leaf_size = 30, p = 2 |
| GBDT | n_estimators = 200, max_depth = 3, min_samples_leaf = 1 |
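The hyperparameter names in the table match scikit-learn's estimator arguments, so the four classifiers can be set up as below. This is a sketch under that assumption; the source does not name the library. The synthetic feature vectors stand in for HOG descriptors, and the PCA dimension of 10, the 75/25 split and `random_state=0` are placeholders chosen here, not values from the paper.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Classifiers configured with the hyperparameters listed in the table.
# random_state is an addition for reproducibility, not from the source.
classifiers = {
    "RF": RandomForestClassifier(n_estimators=100, max_depth=7,
                                 min_samples_leaf=3, random_state=0),
    "SVM": SVC(C=1.0, kernel="rbf", gamma="auto",
               probability=False, shrinking=True),
    "KNN": KNeighborsClassifier(n_neighbors=5, weights="uniform",
                                algorithm="auto", leaf_size=30, p=2),
    "GBDT": GradientBoostingClassifier(n_estimators=200, max_depth=3,
                                       min_samples_leaf=1, random_state=0),
}

# Synthetic stand-in for HOG feature vectors of five gesture classes.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(5), 40)
centers = 3.0 * rng.normal(size=(5, 64))
features = centers[labels] + rng.normal(size=(labels.size, 64))

X_train, X_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.25, stratify=labels, random_state=0)

# PCA followed by each classifier; n_components=10 is an assumed value.
scores = {}
for name, clf in classifiers.items():
    model = make_pipeline(PCA(n_components=10), clf)
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)

for name, acc in scores.items():
    print(f"{name}: accuracy on synthetic data = {acc:.3f}")
```

Scores on this synthetic data say nothing about the reported results; the block only shows how the listed settings plug into a PCA-plus-classifier pipeline.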

| Method | Average Precision |
|---|---|
| HOG + PCA + Random Forest | 97.55% |
| HOG + PCA + SVM | 85.73% |
| HOG + PCA + KNN | 87.08% |
| HOG + PCA + GBDT | 95.27% |

| Method | Average Precision |
|---|---|
| HOG + PCA + Random Forest | 93.41% |
| HOG + PCA + SVM | 89.62% |
| HOG + PCA + KNN | 88.10% |
| HOG + PCA + GBDT | 87.17% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hao, Z.; Wang, R.; Peng, J.; Dang, X. Static Hand Gesture Recognition Based on Millimeter-Wave Near-Field FMCW-SAR Imaging. Electronics 2023, 12, 4013. https://doi.org/10.3390/electronics12194013