Attention-Oriented Deep Multi-Task Hash Learning

Abstract

1. Introduction

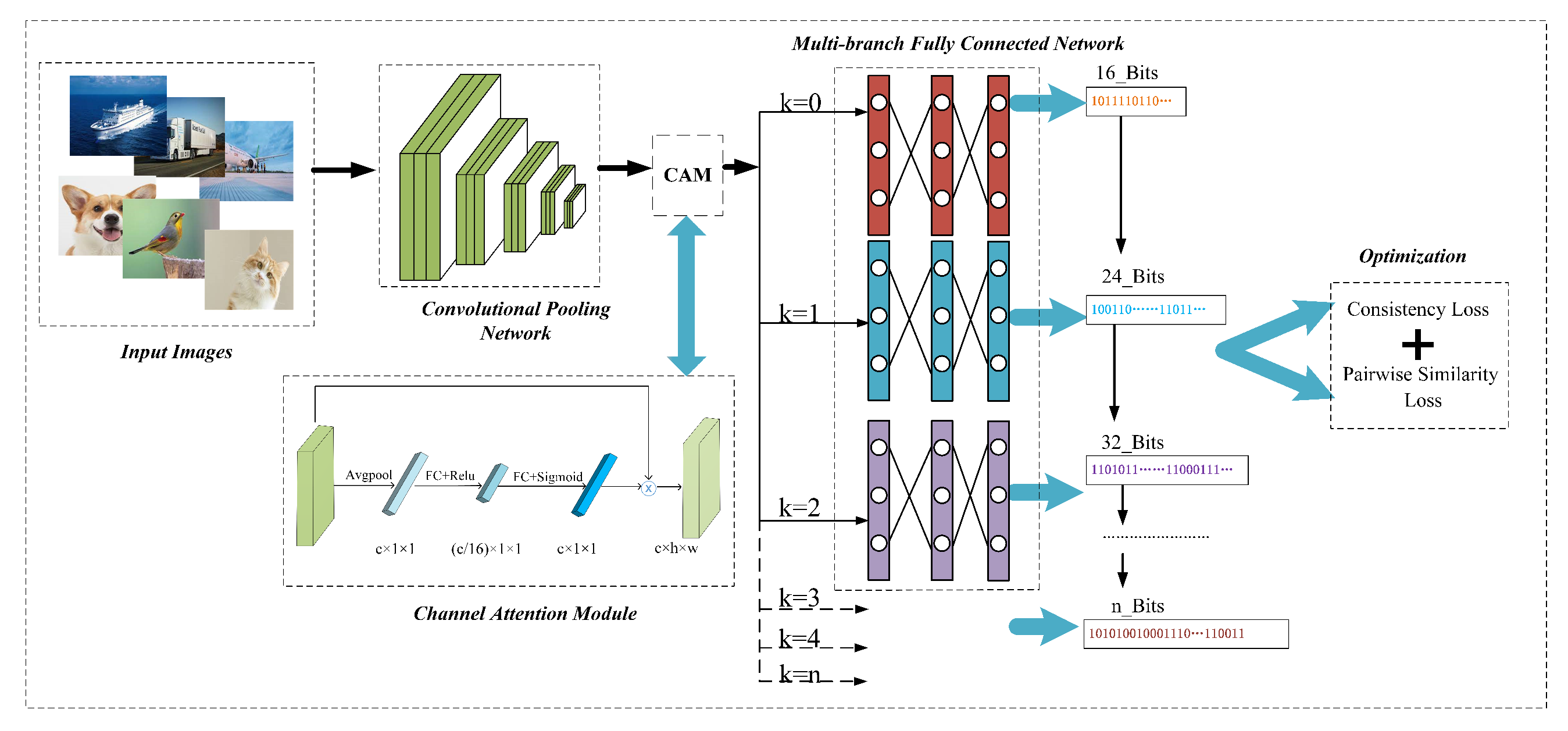

- To further extract discriminative fine-grained features, a channel attention module (CAM) is introduced immediately after the convolutional neural network. The CAM weights the features at the channel level so that the important channels are emphasized, which enhances the expressive capability of the deep features.

- To address the drawbacks of learning a single hash code, the paper designs a multi-branch sub-network with hard parameter sharing. The outputs of these branches correspond to hash codes of various lengths for an image, which greatly decreases the computational overhead of the model.

- To explore the potential semantics shared across multiple hash codes, the paper designs a consistency loss based on the hash codes of adjacent branches. The loss narrows the semantic gap between adjacent branches, which improves the representation capability of the binary codes.
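The three contributions above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the CAM is approximated by a squeeze-and-excitation style gate, the branches are plain linear heads on a shared pooled feature, and the consistency loss is assumed to be the mean squared difference between the length-normalised similarity matrices of adjacent branches. All function names, weight shapes, and the toy dimensions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def channel_attention(feat, w1, w2):
    """SE-style channel gate, used here as an assumed stand-in for the CAM.

    feat: (N, C, H, W) feature maps from the convolutional backbone.
    """
    squeezed = feat.mean(axis=(2, 3))                # (N, C) global average pooling
    hidden = np.maximum(squeezed @ w1, 0.0)          # (N, C // 2) bottleneck + ReLU
    weights = 1.0 / (1.0 + np.exp(-(hidden @ w2)))   # (N, C) sigmoid gates in (0, 1)
    return feat * weights[:, :, None, None], weights

def multi_branch_codes(shared, heads):
    """Hard parameter sharing: one shared feature, one linear head per code length."""
    return {length: np.tanh(shared @ w) for length, w in heads.items()}

def adjacent_consistency_loss(codes):
    """Assumed form of the consistency loss between adjacent branches.

    Compares the pairwise similarity matrices of neighbouring code lengths
    after dividing by the code length, so the scales are comparable.
    """
    lengths = sorted(codes)
    loss = 0.0
    for shorter, longer in zip(lengths, lengths[1:]):
        sim_short = codes[shorter] @ codes[shorter].T / shorter
        sim_long = codes[longer] @ codes[longer].T / longer
        loss += np.mean((sim_short - sim_long) ** 2)
    return float(loss)

# Toy forward pass with random features and weights (hypothetical sizes).
n, c, h, w = 4, 8, 5, 5
feat = rng.standard_normal((n, c, h, w))
w1 = rng.standard_normal((c, c // 2)) * 0.1
w2 = rng.standard_normal((c // 2, c)) * 0.1

attended, weights = channel_attention(feat, w1, w2)
shared = attended.mean(axis=(2, 3))                  # (N, C) shared representation
heads = {k: rng.standard_normal((c, k)) * 0.1 for k in (16, 32, 48, 64)}

relaxed = multi_branch_codes(shared, heads)          # real-valued codes in (-1, 1)
binary = {k: np.where(v >= 0, 1, -1) for k, v in relaxed.items()}
loss = adjacent_consistency_loss(relaxed)
```

Only the backbone and the attention gate are computed once per image; each additional code length costs one extra linear head, which is the intuition behind the reduced overhead claimed in the second contribution.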

2. Proposed Method

2.1. Notations

2.2. Problem Definitions

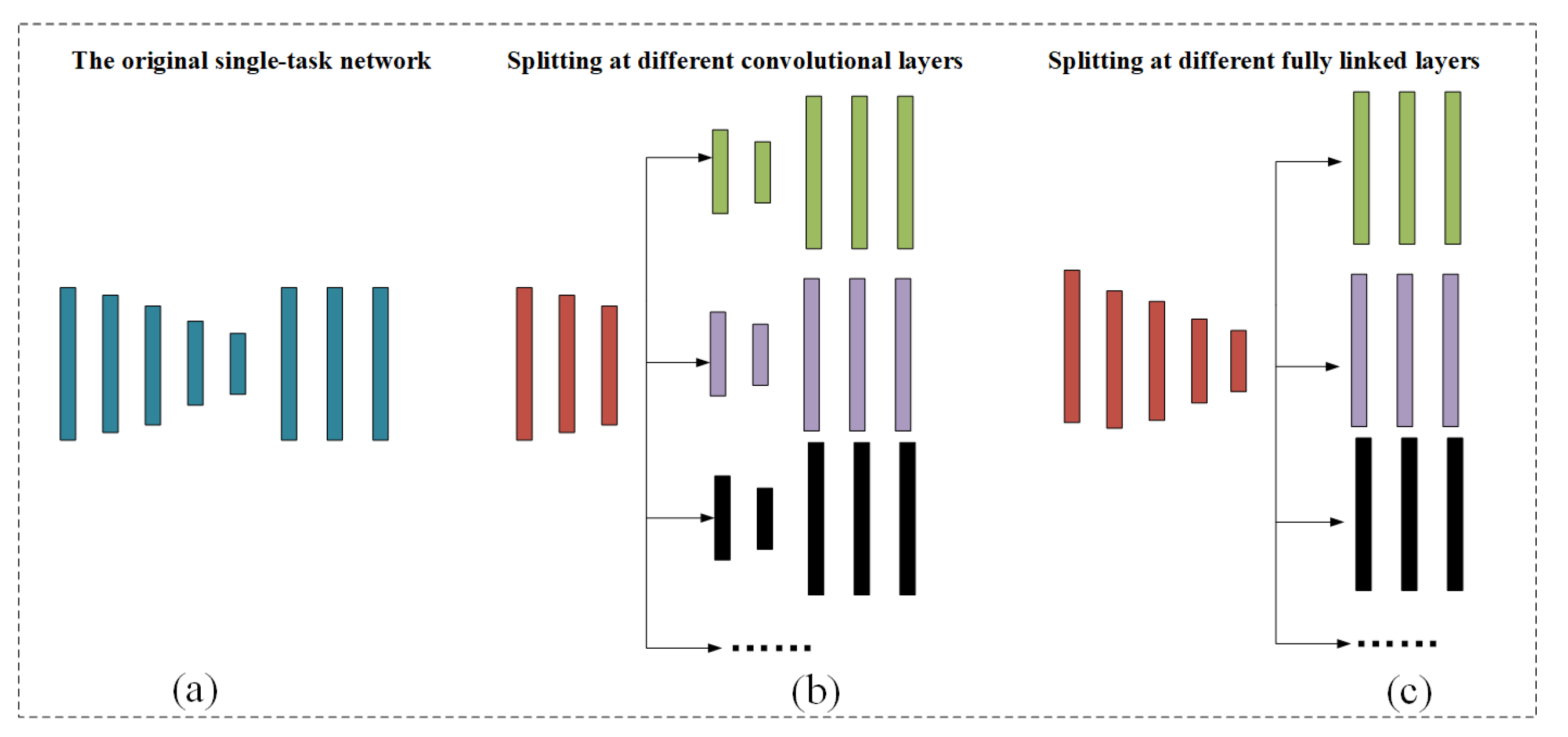

2.3. Architecture Structure of ADMTH

2.3.1. Feature Extraction Module

2.3.2. Channel Attention Module

2.3.3. Hash Learning Module

2.4. Objective Function

3. Experiments

3.1. Datasets

3.2. Experimental Settings

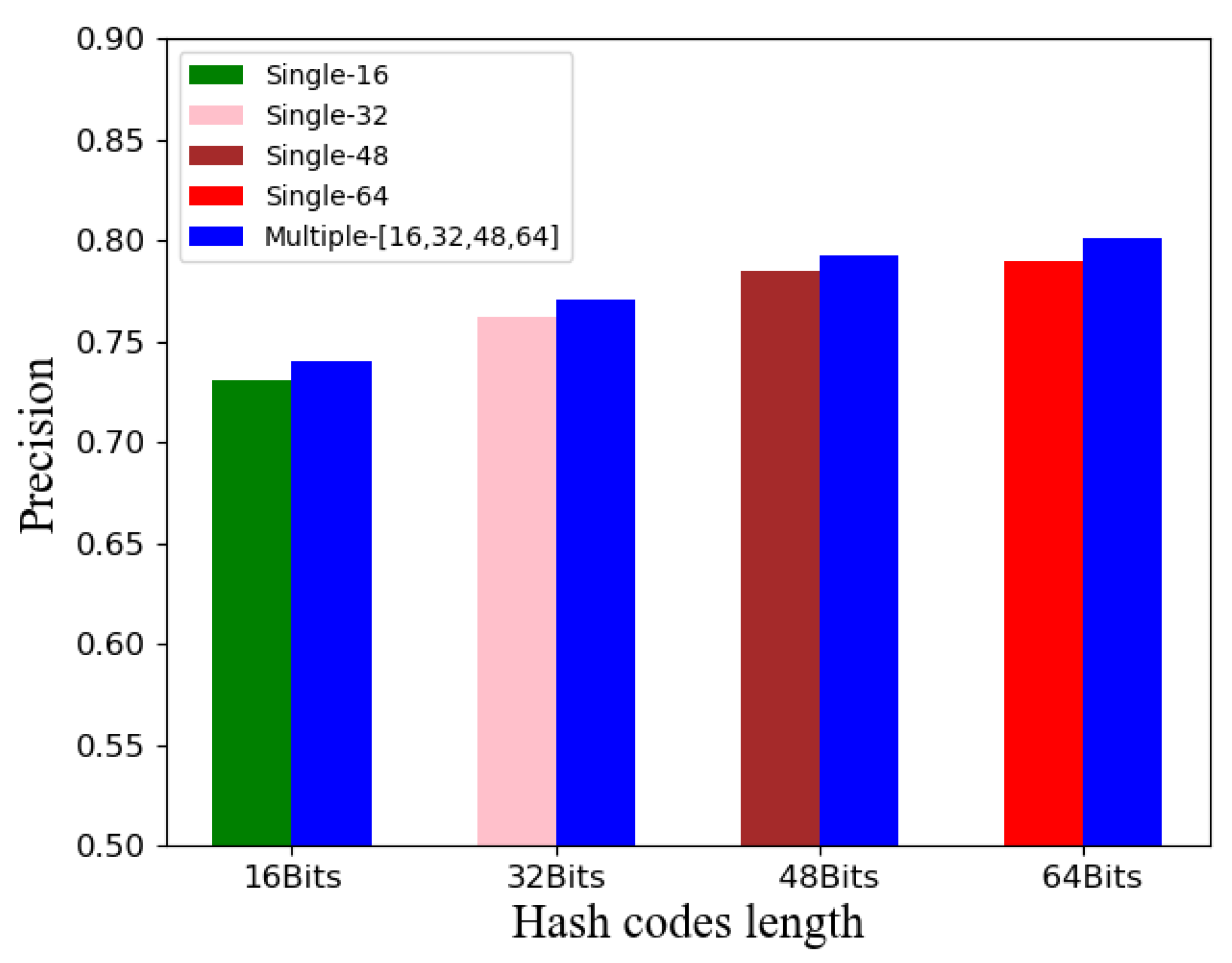

3.3. Ablation Study

3.4. Time Complexity and Training Efficiency

3.5. Comparison to Baselines

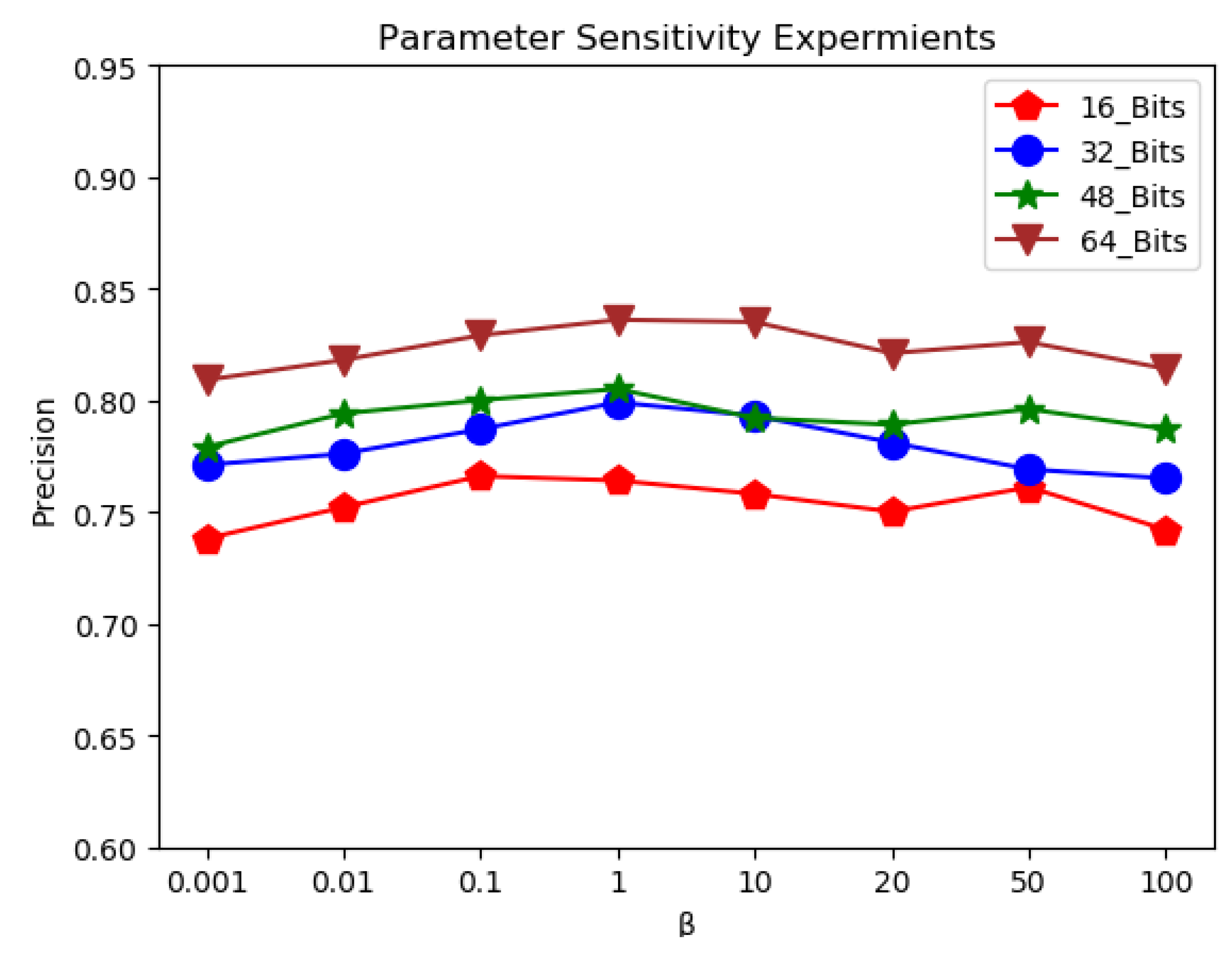

3.6. Parameter Sensitivity

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Labels | Total Number | Training Set | Test Set |
|---|---|---|---|---|
| NUS-WIDE | Multiple (21) | 195 K | 10.5 K | 2.1 K |
| MS-COCO | Multiple (81) | 122 K | 10 K | 5 K |

| Split Method | NUS-WIDE, 16 bits | NUS-WIDE, 32 bits | NUS-WIDE, 48 bits | NUS-WIDE, 64 bits |
|---|---|---|---|---|
| DMTH-Conv | 0.610 | 0.684 | 0.725 | 0.768 |
| DMTH-Fullcnt | 0.740 | 0.771 | 0.793 | 0.801 |

| Method | Average Training Time (s) |
|---|---|
| Single-16 | 155.52 |
| Single-32 | 158.66 |
| Single-48 | 157.27 |
| Single-64 | 158.34 |
| Sum | 629.79 |
| Multiple-[16,32,48,64] | 209.60 |
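As a quick check of the training times in the table above, the short script below recomputes the sum of the four single-length runs and compares it with the multi-length run. The variable names are illustrative; the numbers are copied from the table.

```python
# Average training times in seconds, copied from the table above.
single_runs = {
    "Single-16": 155.52,
    "Single-32": 158.66,
    "Single-48": 157.27,
    "Single-64": 158.34,
}
multi_run = 209.60  # Multiple-[16,32,48,64]

total_single = sum(single_runs.values())  # matches the "Sum" row
speedup = total_single / multi_run        # how many times faster the joint run is
saving = 1.0 - multi_run / total_single   # fraction of training time saved

print(f"sum of single runs: {total_single:.2f} s")
print(f"speedup: {speedup:.2f}x, time saved: {saving:.1%}")
```

Training all four code lengths jointly takes roughly one third of the time needed to train the four single-length models one after another.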

| Method | NUS-WIDE, 16 bits | NUS-WIDE, 32 bits | NUS-WIDE, 48 bits | NUS-WIDE, 64 bits |
|---|---|---|---|---|
| CNNH | 59.0 | 60.0 | 63.5 | 67.0 |
| DNNH | 68.0 | 70.0 | 71.3 | 71.5 |
| DHN | 67.1 | 69.7 | 73.3 | 76.1 |
| HASHNET | 69.5 | 71.5 | 73.8 | 78.0 |
| DCH | 74.0 | 77.2 | 76.9 | 79.3 |
| MMHH | 77.2 | 78.4 | 78.0 | 82.1 |
| ADSH | 75.8 | 74.0 | 73.3 | 72.0 |
| AMVH | 72.3 | 74.7 | 75.5 | 77.3 |
| DMLH | 75.0 | 78.2 | 79.4 | 80.3 |
| ADMTH | 76.2 | 79.5 | 80.4 | 83.7 |

| Method | MS-COCO, 16 bits | MS-COCO, 32 bits | MS-COCO, 48 bits | MS-COCO, 64 bits |
|---|---|---|---|---|
| CNNH | 56.0 | 56.9 | 53.7 | 50.6 |
| DNNH | 57.7 | 60.2 | 52.3 | 50.1 |
| DHN | 67.5 | 66.8 | 60.0 | 59.8 |
| HASHNET | 68.5 | 69.0 | 66.4 | 67.8 |
| DCH | 69.6 | 75.7 | 72.5 | 70.4 |
| ADSH | 57.8 | 63.7 | 60.0 | 56.7 |
| AMVH | 66.7 | 70.0 | 70.3 | 72.8 |
| DMLH | 65.7 | 70.6 | 70.0 | 72.2 |
| ADMTH | 67.7 | 72.8 | 73.0 | 74.2 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, L.; Meng, Z.; Dong, F.; Yang, X.; Xi, X.; Nie, X. Attention-Oriented Deep Multi-Task Hash Learning. Electronics 2023, 12, 1226. https://doi.org/10.3390/electronics12051226
