Abstract

To address driver dazzle caused by the abuse of high beams when vehicles meet at night, a night vision anti-halation algorithm based on image fusion combining visual saliency with YUV-FNSCT is proposed. An improved Frequency-tuned (FT) visual saliency detection method is proposed to quickly lock onto objects of interest, such as vehicles and pedestrians, so as to improve the salient features of fusion images. The high- and low-frequency sub-bands of the infrared saliency image and the visible luminance component are obtained quickly using the fast non-subsampled contourlet transform (FNSCT), which is multi-directional, multi-scale, and shift-invariant. According to the degree of halation in the visible image, the nonlinear adaptive fusion strategy of low-frequency weight eliminates halation while retaining as much useful information from the original images as possible. The statistical matching feature fusion strategy distinguishes common from unique edge information in the high-frequency sub-bands by mutual matching, so as to obtain more effective details of the original images, such as edges and contours. Only the luminance Y obtained by the YUV transform takes part in image fusion, which both avoids color shift in the fusion image and reduces the amount of computation. Considering the night driving environment and the degree of halation, visible and infrared images were collected for anti-halation fusion in six typical halation scenes on three types of roads covering most night driving conditions. The fusion images obtained by the proposed algorithm show complete halation elimination, rich color details, and obvious salient features, and have the best comprehensive index in each halation scene. The experimental results and analysis show that the proposed algorithm has advantages in halation elimination and visual saliency and has good universality for different night vision halation scenes, which helps drivers observe the road ahead and improves the safety of night driving. It also has a certain applicability to rain, fog, smog, and other complex weather.

1. Introduction

When vehicles meet at night, the abuse of high beams dazzles the oncoming driver. As a result, drivers cannot see the road ahead for a short period of time, and their ability to judge the vehicles on both sides, the pedestrians between vehicles, and whatever lies behind the oncoming vehicle is reduced, which can easily cause traffic accidents. According to statistics, 30–40 percent of nighttime traffic accidents are related to the abuse of high beam lights, and this proportion is still rising [1].

To prevent traffic accidents caused by the halation phenomenon, green barriers or anti-glare plates can be installed in the middle of a two-way road. They eliminate halation effectively, but their use is limited by the road and other conditions, so they are generally found only on urban trunk roads and highways.

To improve the safety of night driving and enhance the visibility of effective information in night vision halation images, night vision anti-halation technology has attracted extensive attention from the research community. Reference [2] installs a polarization film on the front windshield of vehicles, which can effectively block the strong light from high beams. The method is simple but also sharply reduces the light intensity, making it difficult for the driver to observe information in dark areas. An infrared night vision device [3] installed on the vehicle obtains halation-free infrared images of the road conditions ahead. However, infrared images are grayscale images with low resolution and a serious lack of detail, so the visual effect is not ideal. Reference [4] fuses images collected synchronously by two visible cameras with different exposure apertures under the same road conditions, which expands the dynamic range of the image and effectively weakens the halation phenomenon. However, problems remain, such as incomplete halation elimination and weak information complementarity between the visible images.

The different-source image fusion method eliminates halation by taking advantage of the strong complementarity of visible and infrared image information, resulting in a good visual effect in the fusion image. However, the Wavelet transform [5] is not anisotropic, so it cannot accurately express the orientation of image boundaries, which blurs the edge details in the fusion image to a certain degree. The Curvelet transform [6] overcomes this shortcoming of the Wavelet transform and improves the clarity of the fusion image. However, important information such as pedestrians and vehicles is not salient in the fusion image. The non-subsampled Shearlet transform (NSST) [7] decomposes images efficiently and reduces the amount of calculation. However, it requires highly accurate registration of the source images. The Laplacian Pyramid based on sparse representation (LP-SR) [8] highlights the high-frequency information in the image and has some resistance to noise. However, when the source image is too complex, image detail is easily lost. The non-subsampled contourlet transform (NSCT) [9] fully extracts the orientation information from the images. However, the decomposition process is complicated, and the operation efficiency is low. Subspace-based image fusion methods include principal component analysis [10] and independent component analysis [11], which project a high-dimensional source image into a low-dimensional space to improve fusion efficiency. However, the noise in the fusion image is obvious.

The main contributions of this work are as follows: (1) A different-source image fusion algorithm based on visual saliency and YUV-FNSCT is introduced. (2) An improved FT visual saliency detection algorithm is proposed. It quickly locks onto the salient information in infrared images to improve the salient features of fusion images. (3) A visible and infrared image fusion method combining YUV and FNSCT is designed, where the contour features of images are effectively extracted by FNSCT, and only the luminance component obtained by the YUV transform takes part in image fusion, which improves the processing efficiency and avoids color shift in the fusion images. (4) An experimental study is conducted to show the effectiveness and universality of the proposed algorithm. The proposed algorithm is compared with five state-of-the-art algorithms in six typical halation scenes on three types of roads. The experimental outcomes illustrate that the proposed algorithm has advantages in halation elimination and visual saliency and has good universality for different night vision halation scenes.

The remainder of the article is arranged as follows. Section 2 presents the night vision anti-halation principle of infrared and visible image fusion. Section 3 describes the step-by-step design and implementation of the anti-halation fusion algorithm. Section 4 gives the experimental results and discussion. Lastly, Section 5 presents the conclusion.

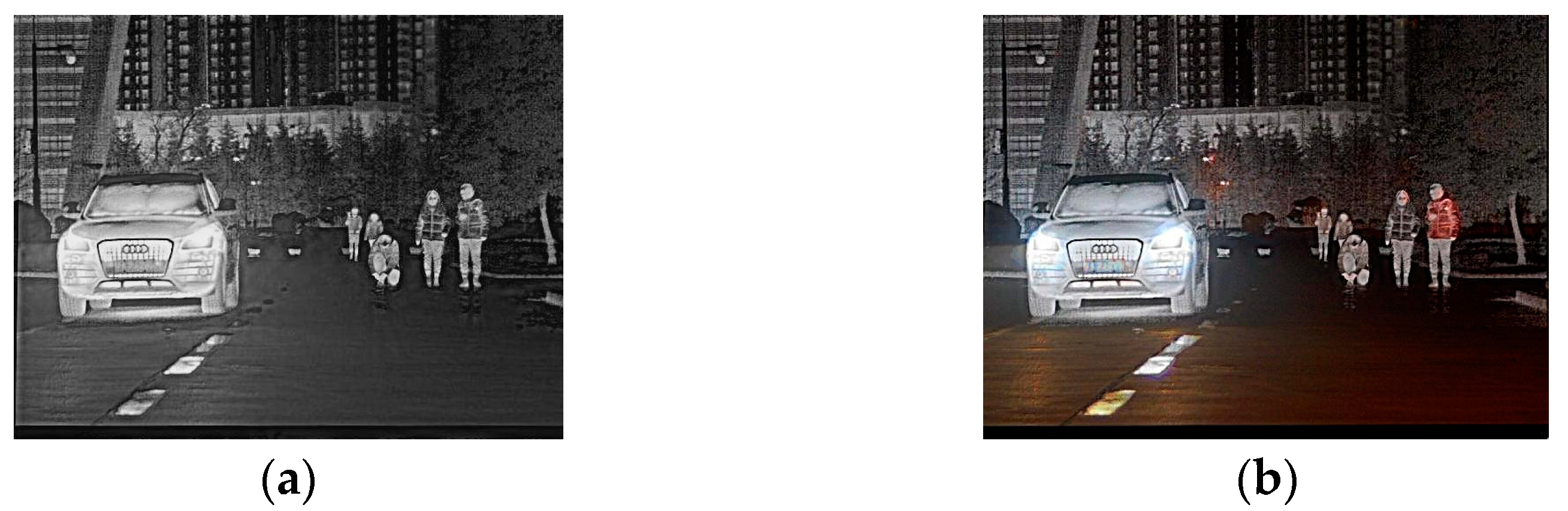

2. Principle of Night Vision Anti-Halation Based on Different-Source Image Fusion

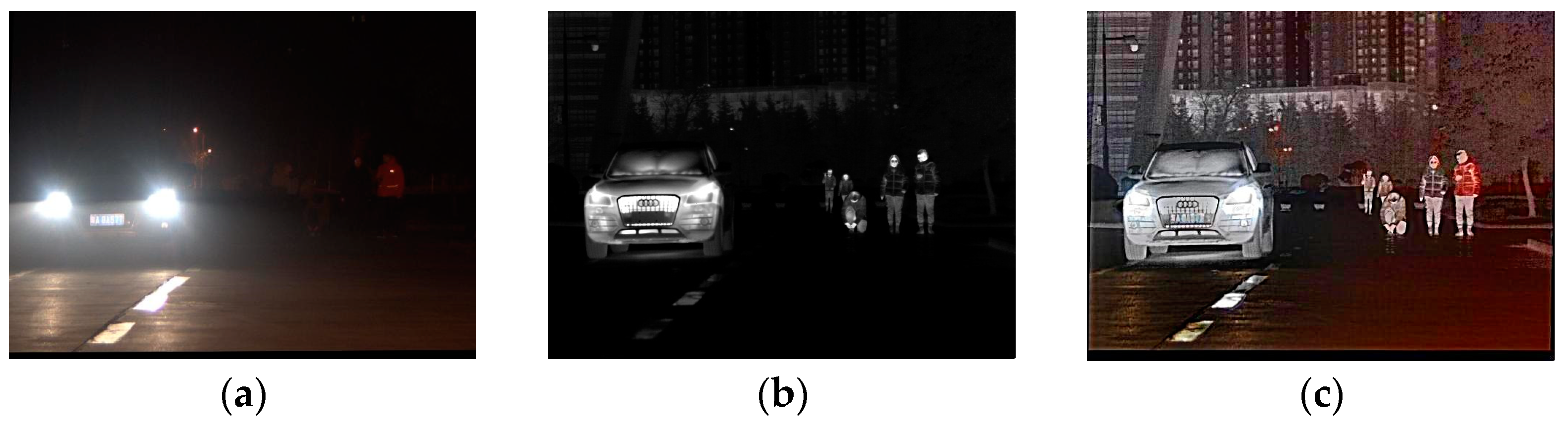

In a typical nighttime halation scene, the visible image has rich colors. However, the brightness of the halation area is too high, which not only makes the effective information drowned out by strong light but also leads to lower brightness in the non-halation area so that it is difficult to observe important information in the dark area. The infrared image can better reflect thermal properties and is not affected by the halation, but it is a grayscale image with low resolution, so the features of scenes such as details and textures are often lost [12]. Based on the strong complementarity of two different-source images [13], a night vision anti-halation algorithm based on image fusion is proposed. The original images and the fusion image in the halation scene are shown in Figure 1.

Figure 1.

The original images and the fusion image in the halation scene. (a) Visible image. (b) Infrared image. (c) Fusion image.

To highlight important information such as pedestrians and vehicles, visual saliency detection is carried out on the infrared images to quickly lock onto the areas of interest to the human eye and extract complete salient contours, which improves the salient features of the night vision anti-halation fusion images.

To effectively retain the colors of the visible image, it is decomposed into luminance Y and chrominance U and V by YUV transform. Since the halation information in the visible image is mainly distributed in the luminance Y [14], it is necessary to perform the anti-halation fusion process only for the luminance Y and the infrared salient images. In this way, it not only reduces the amount of computation but also avoids the color distortion caused by the chrominance U and V participating in the fusion [15].

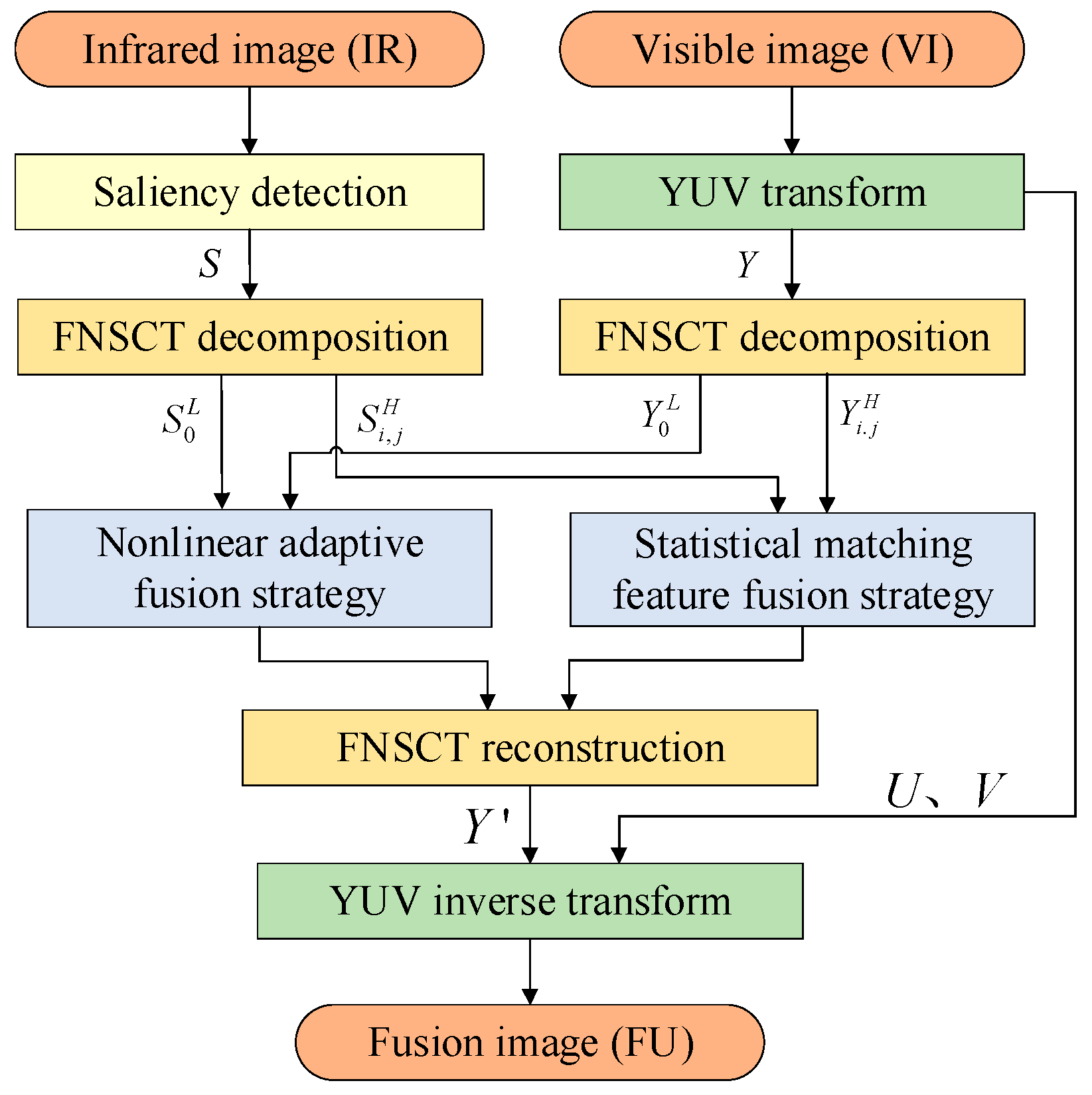

To obtain a fusion image with richer spatial details, higher definition, and less spectral distortion, the fast non-subsampled contourlet transform (FNSCT) is used to decompose and reconstruct the luminance Y and the infrared salient image. FNSCT, which is multi-directional, multi-scale, and shift-invariant, can effectively extract the image contour features and further improve the sharpness of the fused image. The low-frequency decomposition sub-band contains the main contour and the halation of the image. The nonlinear adaptive fusion strategy of low-frequency weight is designed to eliminate halation while preserving the contours of the two original images. The high-frequency sub-bands reflect image details such as edges and textures. The statistical matching feature strategy is used to fuse the high-frequency sub-bands. It distinguishes between common and unique edge information by mutual matching, so as to obtain more effective information from the original images. The overall implementation block diagram is shown in Figure 2.

Figure 2.

The overall block diagram of the proposed method.

3. Realization of Image Fusion Combining Visual Saliency with YUV-FNSCT

According to the above principle, the image fusion algorithm combining visual saliency with YUV-FNSCT is mainly divided into visual saliency detection of an infrared image, YUV transformation, FNSCT decomposition and reconstruction, and low-frequency and high-frequency fusion strategies.

3.1. Visual Saliency Detection of Infrared Images

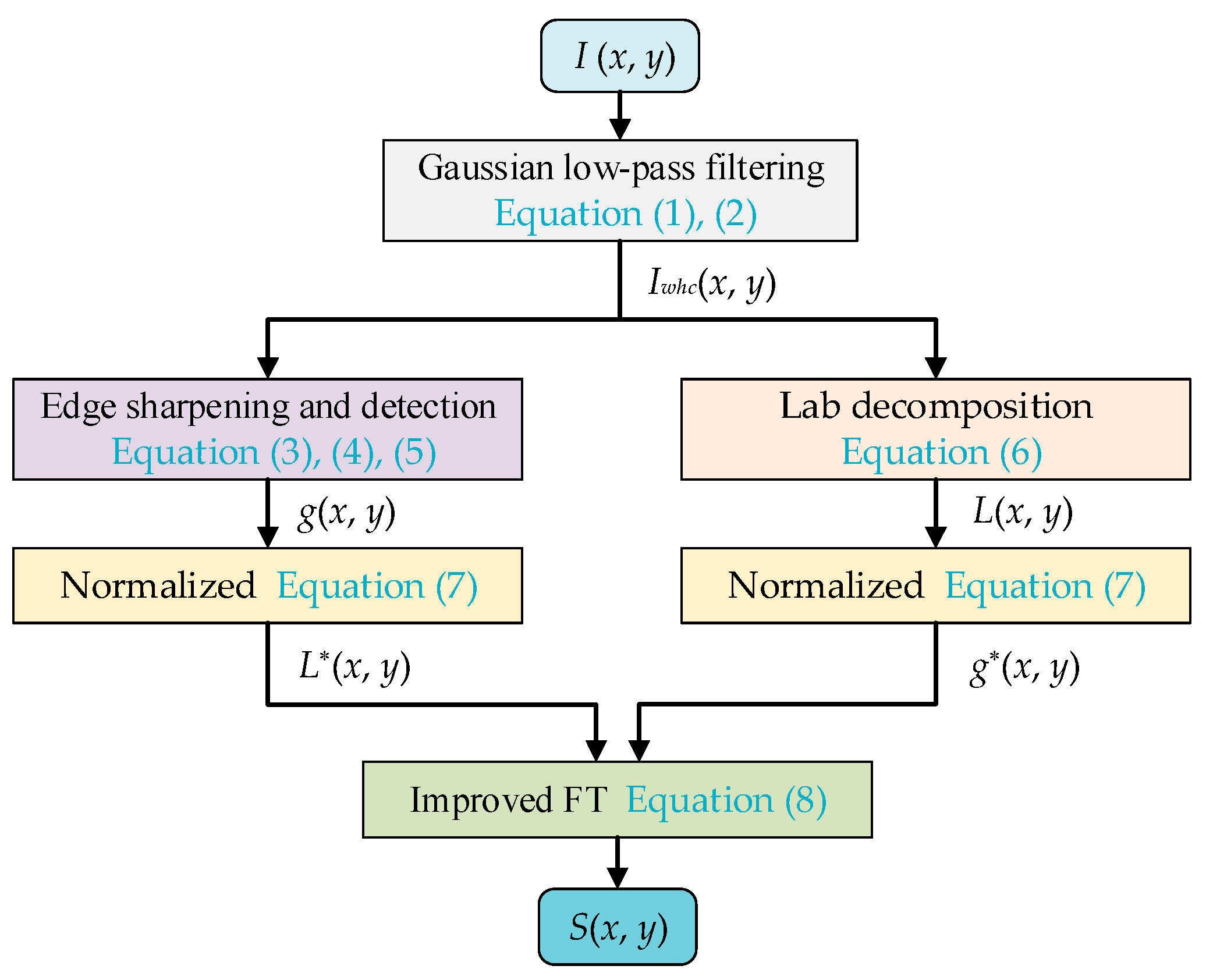

Based on the human visual system, visual saliency detection quantifies the degree of interest of each region as a gray level to obtain the visual saliency image. Therefore, it can accurately identify the visual region of interest in the image [16]. The improved Frequency-tuned (FT) algorithm proposed in this paper is used to detect the visual saliency of infrared images and extract the complete contours and edges.

As a spatial frequency domain saliency algorithm, the FT algorithm first performs Gaussian low-pass filtering and then calculates the Euclidean distance between each pixel and the average value of all pixels in the CIE Lab color space as the saliency value [17]. This algorithm is simple and efficient and can output full-resolution images while highlighting salient objects. However, the boundaries of the salient information are not segmented clearly, so the gray-value difference between the generated salient area and the background is small, and the detection of salient targets is unsatisfactory.

To improve the detection accuracy of salient targets in images, the improved FT algorithm first extracts the edge features of an infrared image and then normalizes and weights each feature value to obtain the saliency image. As a result, the algorithm can effectively improve the saliency value, suppress background noise, and enrich the edge details of the infrared image.

The Gaussian low-pass filter is used to truncate the high-frequency information from the infrared image I (x, y) and retain the low-frequency part reflecting the significant features. The infrared smoothed image Iwhc(x, y) after filtering can be expressed as

where h (x, y) is the two-dimensional Gaussian function, (x, y) is the coordinate of the image pixel, and ‘⁎’ is the convolution operator. h (x, y) is expressed as
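The smoothing step described here can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the kernel size, the value of sigma, and the edge-replication border handling are hypothetical choices, and the helper names `gaussian_kernel`, `convolve2d_same`, and `smooth_infrared` are not from the paper.

```python
import numpy as np

def gaussian_kernel(size=5, sigma=1.0):
    """Sampled 2-D Gaussian h(x, y), normalized so that it sums to 1."""
    half = size // 2
    ax = np.arange(-half, half + 1)
    xx, yy = np.meshgrid(ax, ax)
    h = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return h / h.sum()

def convolve2d_same(image, kernel):
    """Same-size 2-D convolution with edge replication (odd kernel sizes)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    rows, cols = image.shape
    padded = np.pad(image.astype(float), ((ph, ph), (pw, pw)), mode="edge")
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    out = np.zeros((rows, cols), dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + rows, j:j + cols]
    return out

def smooth_infrared(ir, size=5, sigma=1.0):
    """Low-pass the infrared image I to obtain the smoothed image I_whc."""
    return convolve2d_same(ir, gaussian_kernel(size, sigma))
```

Because the kernel is normalized, flat regions keep their gray level, while fine texture and noise are attenuated before edge extraction.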

The Sobel operator with accurate edge localization and high noise immunity is used for edge extraction of infrared images. The weight of a pixel can be determined according to the distances from its neighboring pixels to achieve edge sharpening and detection [18]. The horizontal gradient gx(x, y) and the vertical gradient gy(x, y) of the image are expressed as

where mx, my are the convolution kernels of edge detection in the horizontal and vertical directions, respectively, and are taken as

The gradient image g(x, y) reflecting edge features of the Gaussian smoothed image is expressed as
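As a rough sketch of this edge-extraction step, the code below applies the commonly used 3×3 Sobel kernels and combines the two responses as a Euclidean magnitude. Both choices are assumptions: the paper's exact kernels and its combination rule are not reproduced in this text, and the helper names are hypothetical.

```python
import numpy as np

# Commonly used Sobel kernels (assumed; the paper's kernels are not shown here).
MX = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # horizontal
MY = np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]], dtype=float)  # vertical

def filter3x3(image, kernel):
    """Apply a 3x3 kernel by sliding-window correlation with edge replication.

    Correlation and convolution differ only in sign for the Sobel kernels,
    so the gradient magnitude is the same either way.
    """
    rows, cols = image.shape
    padded = np.pad(image.astype(float), 1, mode="edge")
    out = np.zeros((rows, cols), dtype=float)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i:i + rows, j:j + cols]
    return out

def sobel_gradient(image):
    """Gradient magnitude g from horizontal and vertical responses gx, gy."""
    gx = filter3x3(image, MX)
    gy = filter3x3(image, MY)
    return np.sqrt(gx ** 2 + gy ** 2)
```

On a vertical step edge, only the two columns next to the step respond, which is the sharp edge localization the text attributes to the Sobel operator.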

Since the infrared Gaussian smoothed image Iwhc(x, y) is a grayscale image, the pixel values of the color components a and b are constant in the CIE Lab color space. Therefore, only the luminance L(x, y) is decomposed and expressed as [19]

The luminance L and the gradient g have different value ranges (L∈[0, 100], g∈[0, 255]), so the gradient features would dominate if the two were combined directly. Therefore, L and g must be normalized [20]. The normalized luminance L⁎(x, y) and gradient g⁎(x, y) are expressed as

where Lmin and Lmax are the minimum and maximum of the luminance L, respectively. gmin and gmax are the minimum and maximum of the gradient g, respectively.
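Read with the definitions of the minima and maxima above, the normalization is most naturally a min-max rescaling to [0, 1]. The form below is an assumption consistent with those definitions, not a transcription of the paper's equation:

```latex
L^{*}(x,y) = \frac{L(x,y) - L_{\min}}{L_{\max} - L_{\min}}, \qquad
g^{*}(x,y) = \frac{g(x,y) - g_{\min}}{g_{\max} - g_{\min}}
```

After this step both features lie in the same range, so the weights applied to them are directly comparable.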

The infrared visual saliency image S(x, y) obtained by the improved FT algorithm is

where Lu and gu are the arithmetic means of the normalized luminance L⁎ and gradient g⁎, respectively. wL and wg are the weight coefficients of the luminance L and gradient g, respectively.

The luminance L has a greater influence on the visual saliency image and should be assigned a larger weight. In this paper, wL and wg are set to 0.7 and 0.3 by calculation and optimization.
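A minimal sketch of how the weighted saliency map could be assembled is given below. The weights 0.7 and 0.3 come from the text. The combination rule (weighted absolute deviation of each normalized feature from its mean, in the spirit of the original FT distance-to-mean measure) is an assumption, since the paper's expression for S(x, y) is not reproduced here, and the function names are hypothetical.

```python
import numpy as np

def min_max(a):
    """Rescale an array to [0, 1]; a constant array maps to all zeros."""
    a = a.astype(float)
    span = a.max() - a.min()
    if span == 0:
        return np.zeros_like(a)
    return (a - a.min()) / span

def improved_ft_saliency(lum, grad, w_l=0.7, w_g=0.3):
    """Assumed form: S = w_l * |L* - mean(L*)| + w_g * |g* - mean(g*)|."""
    l_norm = min_max(lum)
    g_norm = min_max(grad)
    return (w_l * np.abs(l_norm - l_norm.mean())
            + w_g * np.abs(g_norm - g_norm.mean()))
```

With these weights, a pixel whose luminance departs strongly from the image mean scores high even when its gradient is unremarkable, which matches the larger role the text assigns to L.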

The processing flow of the improved FT algorithm to obtain infrared visual salient images is shown in Figure 3.

Figure 3.

The processing flow of the improved FT algorithm.

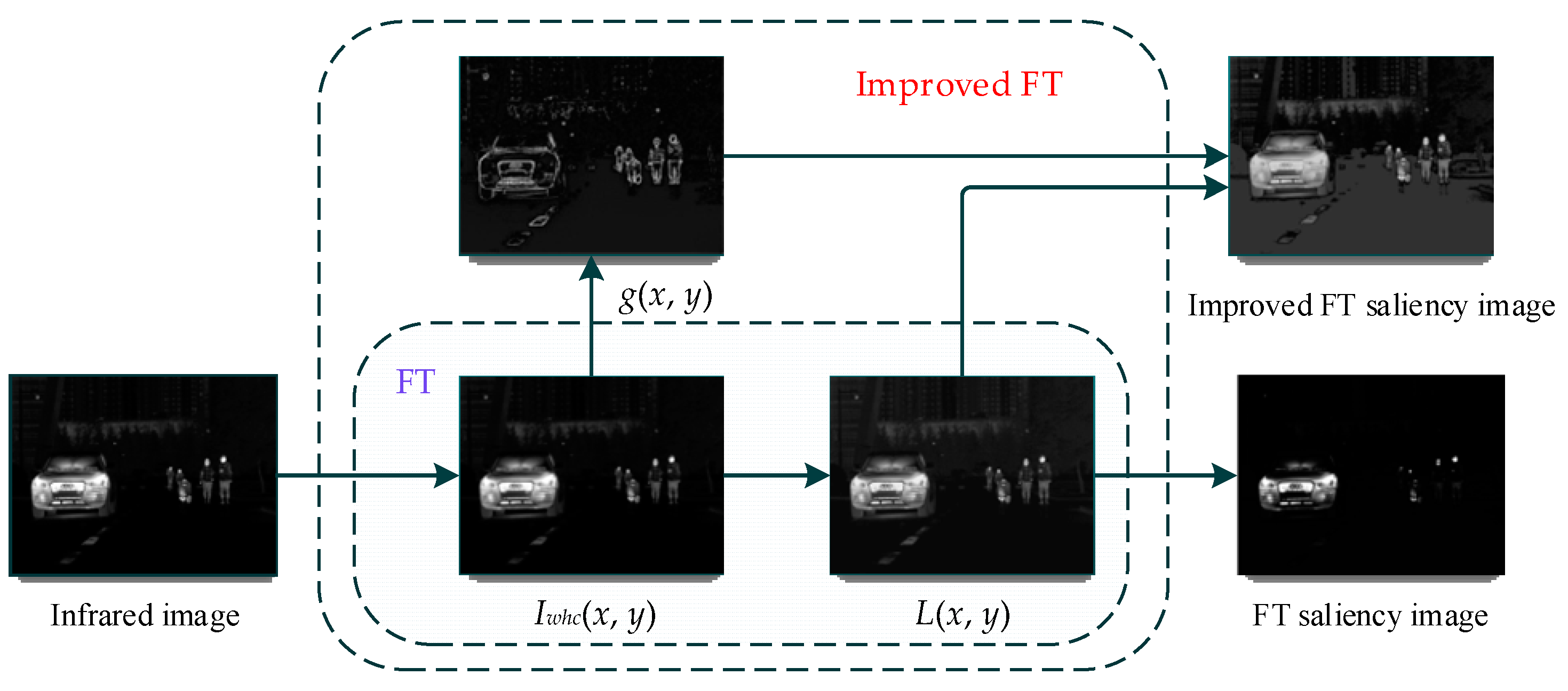

Taking the infrared image in Figure 1b as an example, the comparison of the visual saliency detection process between the improved FT and the traditional FT is shown in Figure 4.

Figure 4.

The process of visual saliency detection.

3.2. FNSCT Decomposition

The visible image is transformed from RGB to a YUV color space, and the luminance Y and the chrominance U and V are obtained [14]. The color space model transformation from RGB to YUV is defined as
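For illustration, the color-space step can be sketched with the widely used analog YUV coefficients (BT.601 luma weights). These coefficients are an assumption, because the paper's matrix is not reproduced in this text, and `rgb_to_yuv` is a hypothetical helper name.

```python
import numpy as np

# Common analog YUV matrix with BT.601 luma weights (assumed coefficients).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],     # Y: luminance
    [-0.147, -0.289, 0.436],   # U: blue-difference chrominance
    [0.615, -0.515, -0.100],   # V: red-difference chrominance
])

def rgb_to_yuv(rgb):
    """Convert an (H, W, 3) RGB array to YUV, pixel by pixel."""
    return rgb.astype(float) @ RGB_TO_YUV.T

def luminance(rgb):
    """Extract only the Y channel, the part that enters the fusion."""
    return rgb_to_yuv(rgb)[..., 0]
```

Only the array returned by `luminance` would be passed on to decomposition and fusion. U and V are set aside unchanged for the inverse transform.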

The luminance Y and the infrared saliency image S are decomposed by FNSCT to obtain the corresponding low-frequency and high-frequency sub-band images.

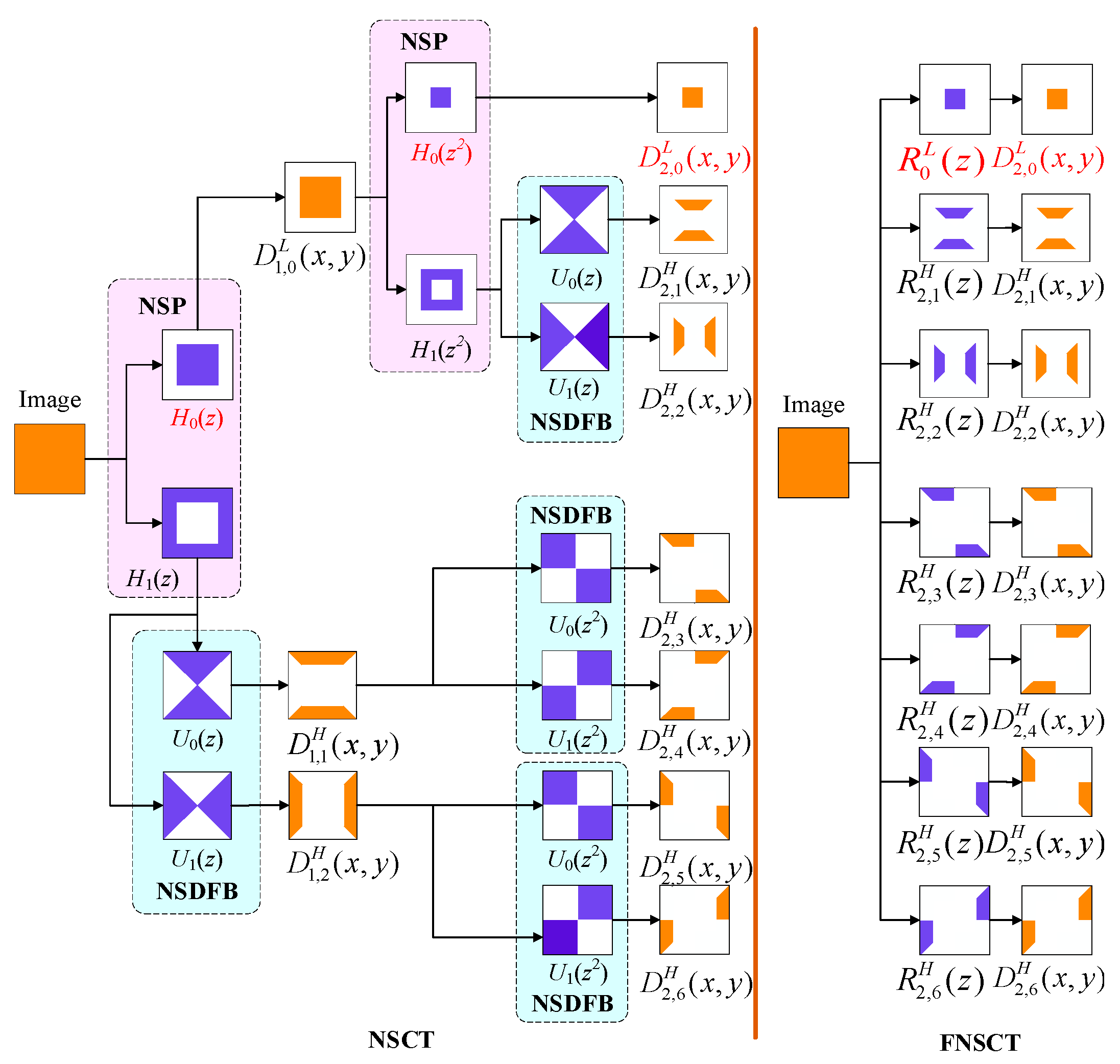

FNSCT is a simple and fast NSCT in the frequency domain, which replaces the tree-structured filter bank of NSCT with a multi-channel filter bank, and can effectively eliminate the pseudo-Gibbs phenomenon and solve the frequency aliasing of the contourlet transform. Therefore, it can retain important information from the luminance Y and the infrared saliency image S, such as edges, textures, and orientations.

NSCT is composed of the non-subsampled pyramid (NSP) and the non-subsampled directional filter bank (NSDFB) [21,22]. Image decomposition needs to be processed by NSP and NSDFB step by step. This iterative filtering has a large amount of computation and low efficiency. FNSCT reconstructs the tree filter banks of NSCT into the multi-channel filter banks R(z). The low-frequency channel filters (z) are defined as

where H0() denotes the low-pass filter in the NSP and i is the decomposition level of FNSCT.

The high-frequency channel filters (z) are defined as

where H(z) and U(z) are denoted as

where H1(z2m) denotes the high-pass filter in NSP. Uν() denotes the dual-channel fan filter in NSDFB and satisfies U1() =1 − U0(). Subscript v is 0 or 1. m = 0, 1, 2, …, i − 1. j is the direction number of high-frequency decomposition and is expressed as [23]

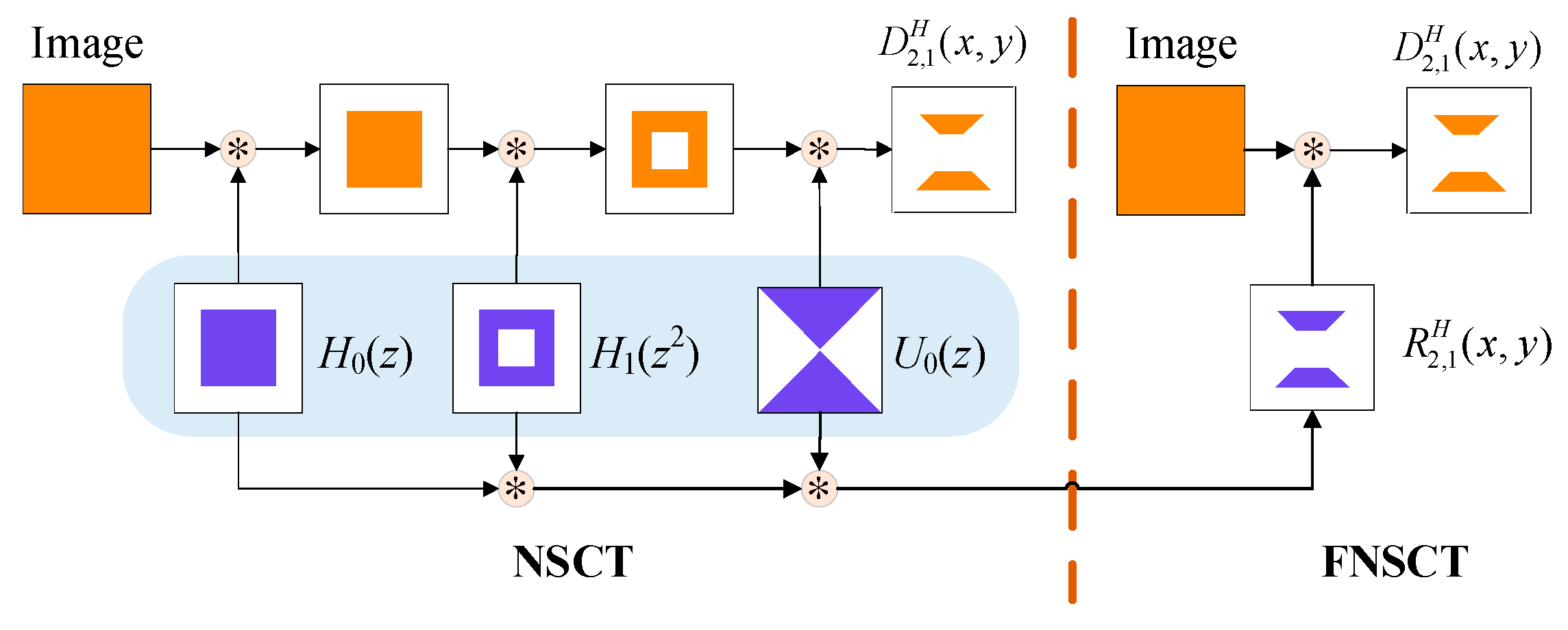

The multi-channel filter bank R(z) convolves the multiple filters in each channel of NSCT into a single filter, which reduces the computational complexity and improves the decomposition efficiency. The sampling frequency and the size of the frequency-domain filtering kernel are fixed in FNSCT, and the number of filters is reduced from 2^(i+2) − 4 to 2^(i+1) − 1. Figure 5 shows how the sub-band image (x, y) is decomposed in the first direction of the high-frequency channel by two-level FNSCT. The blue region denotes the filter passband, and the orange region denotes the decomposed sub-band images. ‘⁎’ denotes the convolution operator.

Figure 5.

Principle of the sub-band image decomposed by two-level FNSCT.

The FNSCT filters are formed by the convolution of the NSCT filters; thus, the filter passband of each channel in FNSCT is an intersection of the frequency bands of the corresponding channel filters in NSCT.
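The idea of merging cascaded stages into one channel filter can be checked numerically. The sketch below is not the FNSCT filter bank itself: the two 3×3 kernels are hypothetical stand-ins for two stages of one channel, filtering is modeled as circular convolution via the FFT, and the filter counts read the exponents in the text as powers of two.

```python
import numpy as np

def frequency_response(kernel, shape):
    """Zero-padded 2-D DFT of a spatial kernel (circular-convolution model)."""
    return np.fft.fft2(kernel, s=shape)

def apply_response(image, response):
    """Filter an image by multiplying its spectrum with a frequency response."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * response))

def filter_counts(levels):
    """(tree NSCT, multi-channel FNSCT) filter counts for a given level i."""
    return 2 ** (levels + 2) - 4, 2 ** (levels + 1) - 1

rng = np.random.default_rng(0)
image = rng.random((32, 32))

# Hypothetical stand-ins for two cascaded stages of one channel.
lowpass = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]], dtype=float) / 16.0
highpass = -lowpass
highpass[1, 1] += 1.0  # unit impulse minus the low-pass kernel

h_low = frequency_response(lowpass, image.shape)
h_high = frequency_response(highpass, image.shape)

# Tree-style processing: one filtering pass per stage.
cascaded = apply_response(apply_response(image, h_low), h_high)

# Multi-channel style: merge the stages once, then filter in a single pass.
merged = h_low * h_high
single_pass = apply_response(image, merged)
```

The two results agree to numerical precision. Since the merged response is the product of the stage responses, it is non-negligible only where both stages pass, which is the intersection of passbands described above.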

A comparison of the decomposition of two-level NSCT and FNSCT in each direction is shown in Figure 6.

Figure 6.

Comparison of two-level NSCT and FNSCT decomposition in each direction.

As Figure 6 shows, filtering with a single convolved filter is faster and more efficient than filtering with multiple filters. The FNSCT filter has the same frequency domain and filtering characteristics as the NSCT filter. Let ψ be the passband of filter bank R(z). It satisfies

The luminance Y and the infrared saliency image S are decomposed by multi-channel filters R(z) to obtain the corresponding low-frequency sub-bands (x, y) and (x, y) and the high-frequency sub-bands (x, y) and (x, y). Each sub-band image can be obtained by filtering only once, which improves the decomposition efficiency.

3.3. Nonlinear Adaptive Fusion Strategy of Low Frequency

The low-frequency sub-bands in the luminance Y contain most of the halation information, and the gray value of the halation areas is significantly higher than that of the non-halation areas [24]. In order to eliminate halation completely, we designed a nonlinear adaptive fusion strategy with a low-frequency weight.

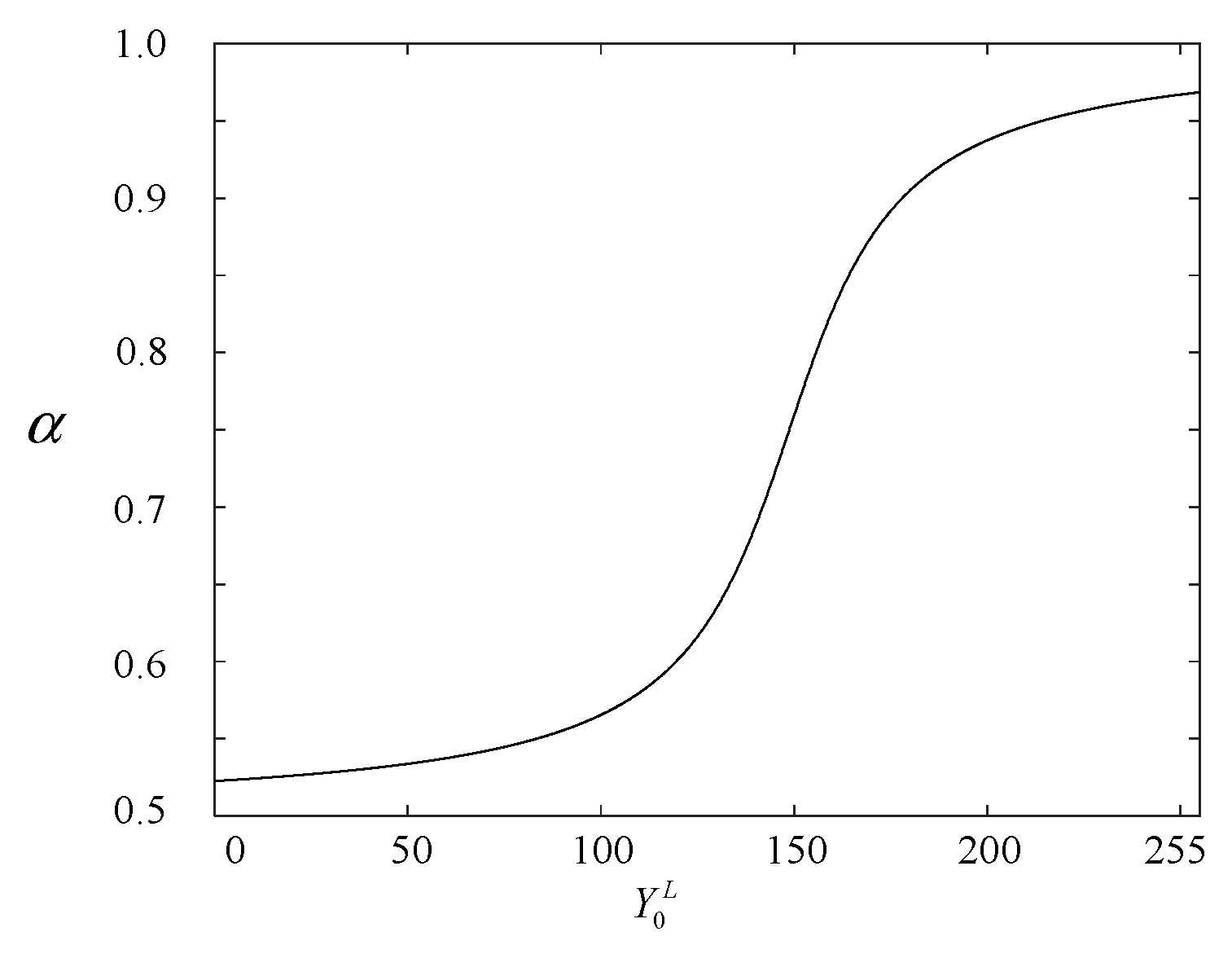

The information from halation areas in the low-frequency fusion image is mainly taken from the infrared saliency image S, while that of the non-halation areas is from the synthesis of Y and S [25,26]. The constructed infrared low-frequency coefficient weight α(x, y) is defined as

where (x, y) is the low-frequency coefficient of the luminance Y. cH is the critical halation value in the low-frequency coefficient matrix of Y. cIL is the low-frequency coefficient of the infrared salient image S at the critical value. Y maps to the interval [a, b], and c is the length of the interval. After calculating and optimizing many times, the optimal fusion result was reached when cH was 3.5 and cIL was 0.75. The interval [a, b] is taken as [0, 6], and the corresponding relation curve between (x, y) and α(x, y) is shown in Figure 7.

Figure 7.

The relation between α(x, y) and (x, y).

According to Figure 7, in the interval [0, 255], the weight α increases with the low-frequency coefficient of the luminance Y, indicating that the higher the pixel brightness in the low-frequency sub-band of the luminance Y, the greater the weight of the infrared visual saliency image. In the halation areas, the low-frequency coefficient of Y is greater than the critical halation value, and the weight α is close to 1. That is, the information from halation areas in the low-frequency sub-band fusion image is mainly taken from the infrared saliency image S, which prevents the high-brightness halation in the visible image from participating in the fusion and so achieves a more complete elimination of the halation. In the non-halation areas, S is given about half of the weight, and the information comes from the synthesis of the detail information of Y and S. At the halation boundary, a smooth curve with nonlinear continuous change is used for the transition from the halation area to the non-halation area, so as to avoid a light and dark fusion boundary in the low-frequency fusion image.

The nonlinear adaptive fusion strategy of low-frequency weight is defined as

where (x, y) is the low-frequency coefficient of the fusion image.

The nonlinear adjustment strategy of low-frequency weight not only eliminates the halation completely but also retains the useful information from the two original images to the maximum extent.
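The behavior described in this subsection can be illustrated with a stand-in weight function. The logistic curve below is hypothetical and is not the paper's α(x, y). It is chosen only because it reproduces the stated properties: about 0.5 in dark areas, cIL = 0.75 at the critical value cH = 3.5, and close to 1 in halation. The convex combination used for the fused coefficient and the `steepness` parameter are likewise assumptions.

```python
import numpy as np

C_H = 3.5    # critical halation value reported in the text
C_IL = 0.75  # infrared weight at the critical value reported in the text

def infrared_weight(c_y, steepness=2.0):
    """Hypothetical logistic stand-in for the infrared weight alpha.

    The floor 2 * C_IL - 1 makes the curve pass through C_IL at c_y = C_H.
    """
    floor = 2.0 * C_IL - 1.0  # 0.5 for the reported C_IL
    return floor + (1.0 - floor) / (1.0 + np.exp(-steepness * (c_y - C_H)))

def fuse_low(c_y, c_ir, steepness=2.0):
    """Assumed rule: fused = alpha * infrared + (1 - alpha) * luminance."""
    alpha = infrared_weight(c_y, steepness)
    return alpha * c_ir + (1.0 - alpha) * c_y

# Weights sampled over the mapped interval [0, 6] of luminance coefficients.
samples = np.linspace(0.0, 6.0, 13)
weights = infrared_weight(samples)
```

Because the curve is continuous and monotonic, the fused coefficient moves gradually from an even mix of Y and S toward S alone as brightness rises, so no hard seam appears at the halation boundary.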

3.4. Statistical Matching Feature Fusion Strategy of High Frequency

The high-frequency sub-bands reflect image detail information such as edges and textures [27]. The high-frequency sub-bands of the luminance Y are quite different from those of the infrared saliency image S; thus, the statistical matching feature strategy is adopted to enhance the textures and other details of the fusion images [28]. The high-frequency sub-band fusion is expressed as

where (x, y), (x, y), and (x, y) are the high-frequency sub-bands of the fusion image, the luminance, and the infrared saliency image, respectively.

The statistical matching feature strategy can distinguish between the common and the unique edge information by mutual matching to obtain more effective details from Y and S.
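To make the idea of separating common from unique edges concrete, the sketch below uses a classic match-and-salience rule from the pyramid fusion literature. It is a swapped-in technique, not the paper's formula, which is not reproduced in this text. The window radius, the threshold of 0.75, and the function names are all hypothetical.

```python
import numpy as np

def local_mean(a, radius=1):
    """Mean over a (2r+1) x (2r+1) window with edge replication."""
    k = 2 * radius + 1
    rows, cols = a.shape
    padded = np.pad(a.astype(float), radius, mode="edge")
    out = np.zeros((rows, cols), dtype=float)
    for i in range(k):
        for j in range(k):
            out += padded[i:i + rows, j:j + cols]
    return out / (k * k)

def fuse_high(d_y, d_ir, threshold=0.75, radius=1):
    """Match-and-salience fusion of two high-frequency sub-bands."""
    e_y = local_mean(d_y ** 2, radius)     # local energy of the Y sub-band
    e_ir = local_mean(d_ir ** 2, radius)   # local energy of the S sub-band
    cross = local_mean(d_y * d_ir, radius)
    match = 2.0 * cross / (e_y + e_ir + 1e-12)  # matching degree in [-1, 1]
    y_stronger = e_y >= e_ir
    # Unique edges (low match): keep the coefficient with more local energy.
    selected = np.where(y_stronger, d_y, d_ir)
    # Common edges (high match): weighted average favoring the stronger source.
    w_min = 0.5 - 0.5 * (1.0 - match) / (1.0 - threshold)
    w_y = np.where(y_stronger, 1.0 - w_min, w_min)
    averaged = w_y * d_y + (1.0 - w_y) * d_ir
    return np.where(match < threshold, selected, averaged)
```

Where the two sub-bands agree, the output blends them. Where an edge exists in only one source, that source's coefficient is kept intact rather than being diluted by averaging.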

3.5. FNSCT Reconstruction

Similar to FNSCT decomposition, the reconstruction filters are designed for each channel to form the reconstruction multi-channel filter bank r(z). The fused high- and low-frequency sub-bands are convolved with the corresponding reconstruction multi-channel filters and then summed to obtain the reconstructed image. The reconstructed new luminance Y′ is

where (z) and (z) are the low-frequency and the high-frequency reconstructed multi-channel filters, respectively.

In order to completely reconstruct the decomposition sub-bands of FNSCT, the decomposition multi-channel filters and the reconstruction multi-channel filters should satisfy the following relation:

The new luminance Y′ and the original chrominance U and V generate an RGB color image that conforms to human vision through the inverse YUV transform [14]. The color space model transformation from YUV to RGB is defined as
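A small sketch of this final step follows, again assuming the common analog YUV coefficients rather than the paper's own matrix, with the inverse obtained numerically. The helper name `replace_luminance` is hypothetical.

```python
import numpy as np

# Assumed forward matrix (BT.601 luma weights); the inverse is computed from it.
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.147, -0.289, 0.436],
    [0.615, -0.515, -0.100],
])
YUV_TO_RGB = np.linalg.inv(RGB_TO_YUV)

def replace_luminance(rgb, new_y):
    """Swap in a fused luminance while keeping the original U and V."""
    yuv = rgb.astype(float) @ RGB_TO_YUV.T
    yuv[..., 0] = new_y          # only Y changes; U and V are untouched
    return yuv @ YUV_TO_RGB.T    # values may need clipping before display
```

Since U and V pass through unchanged, converting the output back to YUV returns the original chrominance, which is why the fusion does not introduce a color shift.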

The new luminance Y′ and the night vision anti-halation fusion image obtained from the original images in Figure 1 are shown in Figure 8.

Figure 8.

The new luminance Y′ and the night vision anti-halation fusion image. (a) New luminance Y′. (b) Fusion image.

4. Experimental Results and Analysis

The effectiveness and universality of the proposed algorithm are verified with both the night driving environment and the degree of halation taken into account. We collected infrared and visible videos on three types of roads covering most night driving conditions, i.e., urban trunk road, residential road, and suburban road. For each road, the videos were recorded at both high and low vehicle speeds. The halation areas in the videos change from small to large and then become smaller again as the vehicles approach and pass. We obtained more than 6200 original infrared and visible images from the collected videos.

The proposed algorithm is compared with Wavelet [5], Curvelet [6], NSST [7], LPSR [8], and FNSCT [23]. The quality of the fusion images obtained by the six algorithms above is evaluated by the adaptive partition quality evaluation method of night vision anti-halation fusion images [29]. The degree of halation elimination DHE is used to evaluate the anti-halation effect in the halation areas. The greater the value, the more complete the halation elimination. In non-halation areas, seven objective indexes, including mean µ, standard deviation σ, average gradient AG, spatial frequency SF, cross-entropy CEFU-VI and CEFU-IR, and edge preservation QAB/F, were used to evaluate the quality of fusion images in terms of clarity, color richness, and visual saliency, respectively.

μ represents the average gray level of the pixels; the greater the value, the higher the average brightness. σ indicates the dispersion of the image gray levels relative to μ; the higher the σ, the larger the contrast. AG represents the rate of change of the image detail contrast. The higher the AG, the clearer the image. SF reflects the overall activity of the image in the spatial domain. The larger the SF, the better the fusion effect. CEFU-VI and CEFU-IR reflect the differences in corresponding pixels between the fusion image and the original visible image and between the fusion image and the original infrared image, respectively. The lower the value, the more information the fusion image obtains from the original image. QAB/F reflects the degree to which the fusion image maintains the edges of the original images. The greater the value, the more useful information such as contours and textures is extracted from the original images.
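Four of these indexes have widely used textbook definitions, sketched below for a grayscale image. The exact formulas used by the evaluation method in [29] may differ, for example in border handling or normalization, so these are assumptions for illustration only, and the function names are hypothetical.

```python
import numpy as np

def mean_and_std(img):
    """Mean gray level and standard deviation of the image."""
    img = img.astype(float)
    return img.mean(), img.std()

def average_gradient(img):
    """AG: mean of sqrt((dx^2 + dy^2) / 2) over forward differences."""
    img = img.astype(float)
    dx = img[:-1, 1:] - img[:-1, :-1]
    dy = img[1:, :-1] - img[:-1, :-1]
    return np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0))

def spatial_frequency(img):
    """SF: sqrt(RF^2 + CF^2) from row and column difference energies."""
    img = img.astype(float)
    rf = np.sqrt(np.mean((img[:, 1:] - img[:, :-1]) ** 2))
    cf = np.sqrt(np.mean((img[1:, :] - img[:-1, :]) ** 2))
    return np.sqrt(rf ** 2 + cf ** 2)
```

A flat image scores zero on both AG and SF, and both grow as edges and texture become stronger, which is why higher values are read as a sharper fusion result.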

4.1. Urban Trunk Road

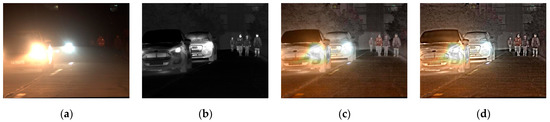

There are streetlamps and scattered light from surrounding buildings on the urban trunk road, and the oncoming car is running with low beams from far to near, forming small halation scenes (Scene 1) and large halation scenes (Scene 2). In the visible image, the roads near the halation areas can be seen, but the pedestrians and road conditions in dark areas are difficult to observe. In the infrared image, the outlines of the car and pedestrians can be seen, but the color information is missing.

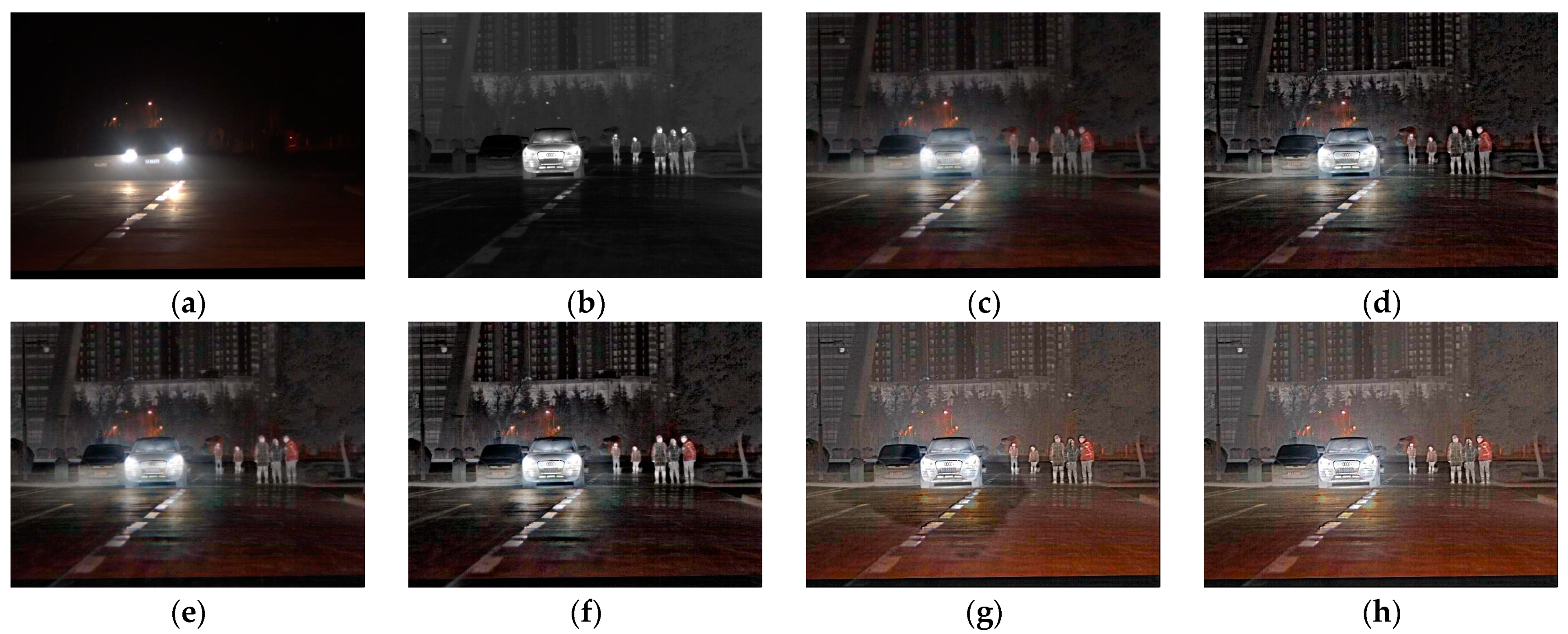

The original images and the fusion images of each algorithm in Scene 1 and Scene 2 are shown in Figure 9 and Figure 10, respectively. Table 1 and Table 2 show the objective evaluation indexes of the fusion images in Scene 1 and Scene 2, respectively.

Figure 9.

The original images and the fusion images of different algorithms in Scene 1. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Figure 10.

The original images and the fusion images of different algorithms in Scene 2. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Table 1.

Objective indexes of fusion images in Scene 1.

Table 2.

Objective indexes of fusion images in Scene 2.

From Figure 9, the Wavelet and NSST algorithms cannot eliminate halation completely. Their fusion images are dark overall, and the details of salient targets such as vehicles and pedestrians are fuzzy. The Curvelet and LPSR algorithms improve the image sharpness, but the halation elimination is still incomplete. The FNSCT algorithm enhances the brightness and contrast of the background of the fusion image, and the halation elimination is more complete. However, the fusion traces between halation and non-halation areas are obvious. The proposed algorithm completely eliminates the halation formed by the headlights, and the color of the fusion image is more natural and bright.

From Figure 10, the Wavelet algorithm eliminates most of the halation. However, local halation still exists, the color shift is serious, and the edges of the vehicle and the background are fuzzy. The Curvelet algorithm improves the clarity of the fusion image. However, there are obvious fusion traces at the junction of the halation and non-halation areas. The NSST algorithm enhances the contour details of vehicles and pedestrians but enlarges the halation range, and the non-halation area is dark. The LPSR algorithm enhances the brightness of salient targets and eliminates halation around headlights. However, the road surface has obvious light–dark alternating shadows. The FNSCT fusion image has better halation elimination effects. However, the details of trees and background buildings are lost in non-halation areas. The proposed algorithm eliminates halation completely. The textures of vehicles, pedestrians, lane lines, and road edges are clear, and the visibility of background buildings and trees is greatly improved, which is more suitable for human visual perception.

It can be seen from Table 1 and Table 2 that the DHE of the proposed algorithm is the highest, indicating that the halation elimination is the most thorough in the fusion images. µ, σ, AG, SF, and QAB/F are the largest, and CEFU-VI and CEFU-IR are the smallest, indicating that the overall visual effect is best in the non-halation areas of the fusion image.

In conclusion, the proposed algorithm not only eliminates the high-brightness halation information but also moderately enhances the visual effect of the non-halation area.

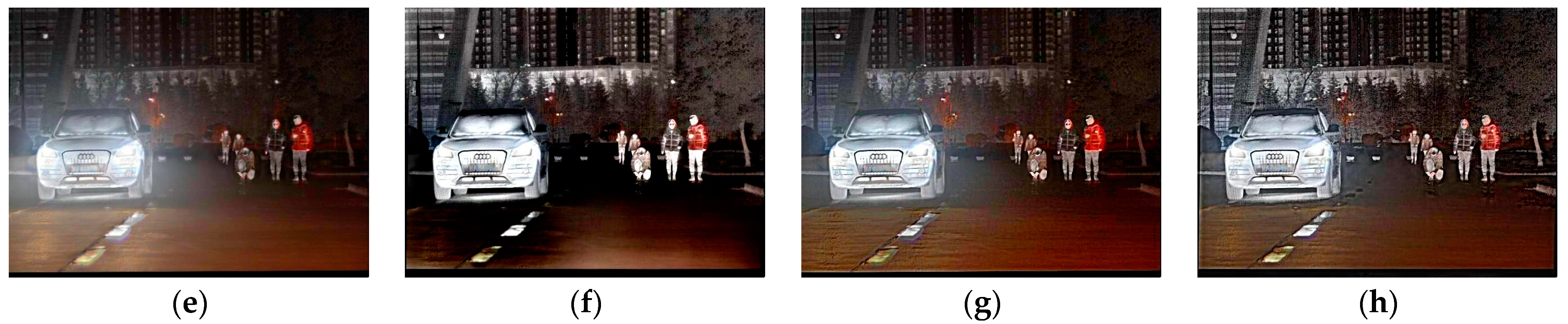

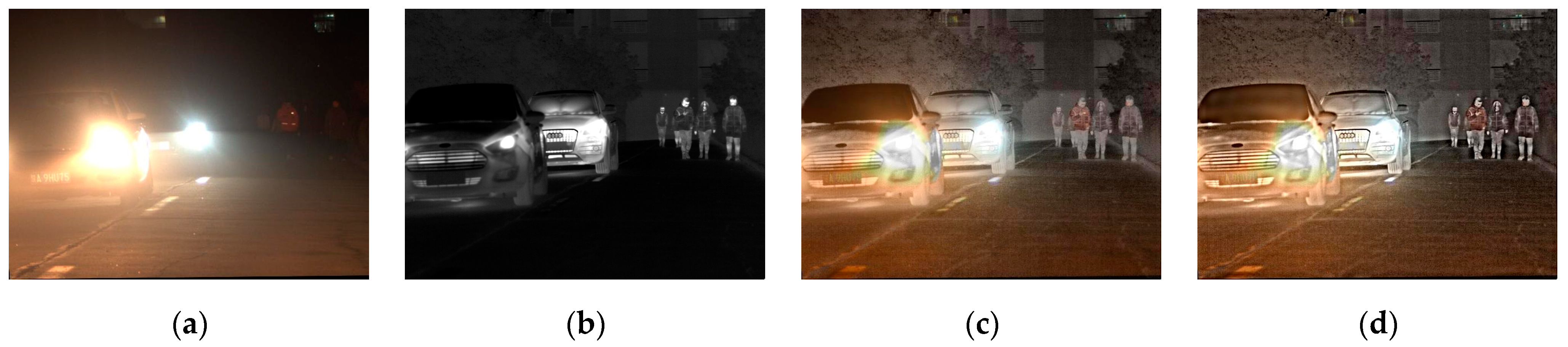

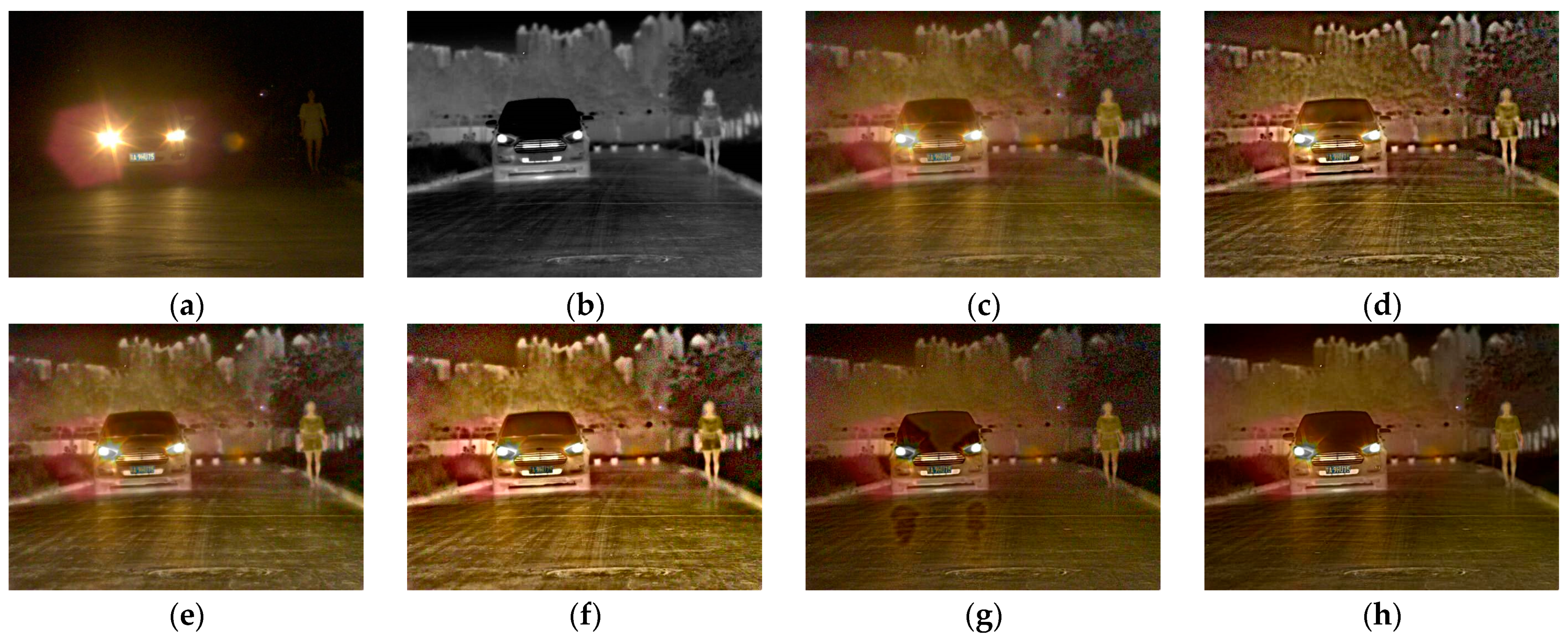

4.2. Residential Road

There is weak scattered light from buildings on the residential road. The overall road environment is dark, and the cars are running with low beams. When the cars are far away, the halation area is small in the visible image (Scene 3). There is little information about pedestrians and road conditions next to cars in the dark areas. The infrared image is not influenced by halation, and the outlines of vehicles and pedestrians are clear. However, the details of the background and both sides of the road are missing. When multiple oncoming cars are close, there are more halation parts in the visible image, and the halation area is larger (Scene 4). The license plate of the previous car and the road conditions ahead can be seen clearly. However, the large-area high-brightness halation makes the information in the dark area more difficult to observe, and it is almost impossible to see the pedestrians and road conditions near the cars. In the infrared image, cars and pedestrians can be seen clearly. The original images and the fusion images of each algorithm in Scene 3 and Scene 4 are shown in Figure 11 and Figure 12, respectively. Table 3 and Table 4 show the objective evaluation indexes of the fusion images in Scene 3 and Scene 4, respectively.

Figure 11.

The original images and the fusion images of different algorithms in Scene 3. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Figure 12.

The original images and the fusion images of different algorithms in Scene 4. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Table 3.

Objective indexes of fusion images in Scene 3.

Table 4.

Objective indexes of fusion images in Scene 4.

From Figure 11, the Wavelet fusion image has serious halation, a dim background, and blurred contours. In the Curvelet fusion image, the edges of vehicles and pedestrians are enhanced, but the halation is enlarged. The NSST algorithm reduces the halation range. However, the color information is severely lost, and the noise is obvious. The LPSR algorithm enhances background brightness, but the halation elimination is poor. The FNSCT algorithm eliminates most of the halation. However, the detailed information such as the buildings in the background and the trees along the road is lost. The proposed algorithm eliminates halation more thoroughly. The brightness of the non-halation area is improved significantly, and the features of important information such as vehicles and pedestrians are remarkable. The visual effect is more desirable.

As can be seen from Table 3, the CEFU-VI of the proposed algorithm is only slightly higher than that of FNSCT, but its CEFU-IR is much lower. The reason is that the proposed algorithm retains more details and textures from the infrared image in order to eliminate the halation of the visible image completely. The actual visual effect of the fusion image is better. The remaining indexes are better than those of the other algorithms.

From Figure 12, the Wavelet and Curvelet fusion images still have halation. The NSST and LPSR algorithms improve the color saliency and clarity of the fusion images, yet the halation is still obvious. The FNSCT fusion image has better halation elimination and enhanced details. However, the overall brightness is low. The proposed algorithm eliminates the halation completely. The license plate is clear, and information such as pedestrians and background buildings is enhanced moderately.

Table 4 shows that the µ of the proposed algorithm is lower than that of NSST and LPSR. This is because NSST and LPSR enlarge the halation range, which enhances the brightness of the non-halation area, resulting in the smallest DHE of them all. The remaining indexes are better than those of the other algorithms.

Comparing the results of Scene 3 and Scene 4 shows that, as the halation area of the visible image becomes larger and the halation degree more serious, the proposed algorithm achieves better halation elimination, more significant color and details, and a better overall visual effect.

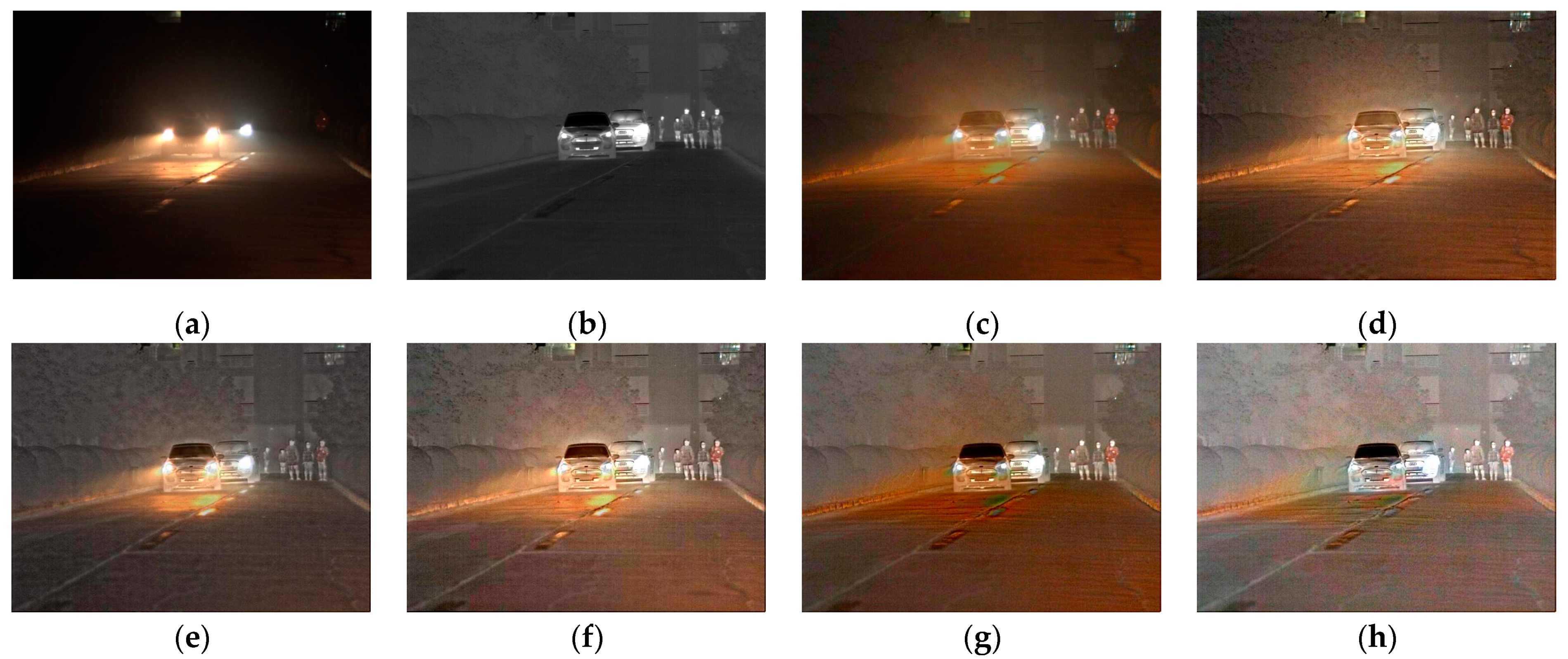

4.3. Suburban Road

There are almost no light sources on the suburban roads other than the headlights, and the overall illumination is very low. The car is running with high beams. When the car is far away, the halation area is small (Scene 5). When the car is close, the halation area is large and the brightness is high (Scene 6), which can easily blind the oncoming driver and lead to traffic accidents. In the infrared images, the edge contours of the car and pedestrians can be seen, yet the clarity is low.

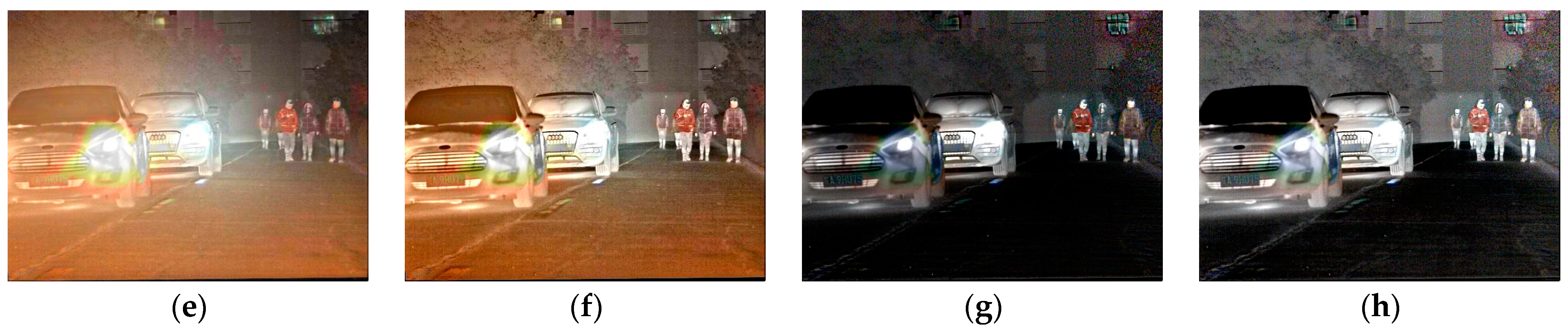

The original images and the fusion images of each algorithm in Scene 5 and Scene 6 are shown in Figure 13 and Figure 14, respectively. Table 5 and Table 6 show the objective evaluation indexes of the fusion images in Scene 5 and Scene 6, respectively.

Figure 13.

The original images and the fusion images of different algorithms in Scene 5. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Figure 14.

The original images and the fusion images of different algorithms in Scene 6. (a) Visible image. (b) Infrared image. (c) Wavelet. (d) Curvelet. (e) NSST. (f) LPSR. (g) FNSCT. (h) Ours.

Table 5.

Objective indexes of fusion images in Scene 5.

Table 6.

Objective indexes of fusion images in Scene 6.

From Figure 13 and Figure 14 and Table 5 and Table 6, the car and pedestrians in the Wavelet fusion image are blurred, and the halation is obvious in both scenes. In Scene 5, the Curvelet and LPSR fusion images have a great amount of background noise. The license plate is fuzzy in the NSST fusion image. The pedestrian details are missing in the FNSCT fusion image. The proposed algorithm eliminates halation thoroughly, and the details of the road surface and background are richer in the fusion image. In Scene 6, the Curvelet and NSST algorithms improve the clarity of the fusion images, yet the halation elimination is insufficient. The LPSR and FNSCT algorithms further eliminate the halation. However, the background of the LPSR fusion image is dark, and the FNSCT fusion image is blurred overall. The algorithm in this paper eliminates not only the strong halation of the headlights but also the reflected light of the road surface. The obtained fusion image has significant contour features, richer color and texture, and the best visual effect.

4.4. Discussion

From the above experimental results and analysis, it can be seen that there are significant differences in the evaluation indexes of the fusion images obtained by different algorithms, indicating that the adopted evaluation system can reflect the quality of night vision anti-halation fusion images from different aspects and the advantages and disadvantages of different algorithms.

To evaluate the quality of the fusion images with more confidence and validity, the eight indexes are normalized using Min-Max Normalization and then summed to obtain a comprehensive index for comparative evaluation. The normalized evaluation index I* is expressed as

$$I^{*} = \frac{I - I_{\min}}{I_{\max} - I_{\min}}$$

where I is the original evaluation index and Imax and Imin are the maximum and minimum of the same index for different algorithms. For the convenience of data analysis, Imin is taken as 0, so that I* reduces to I/Imax.
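The normalization and summation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `comprehensive_index`, the algorithm labels, and the score values are hypothetical, only three indexes are used instead of eight for brevity, and every index is assumed to be positive and oriented so that a larger value is better.

```python
import numpy as np

def comprehensive_index(scores):
    """Min-max normalize each index across algorithms, then sum per algorithm.

    scores: array of shape (n_algorithms, n_indexes) holding raw index values.
    Returns (normalized, comprehensive).
    Assumes every column has a positive maximum (hypothetical precondition).
    """
    scores = np.asarray(scores, dtype=float)
    i_max = scores.max(axis=0)      # maximum of each index over the algorithms
    i_min = np.zeros_like(i_max)    # I_min is taken as 0, as stated in the text
    normalized = (scores - i_min) / (i_max - i_min)
    return normalized, normalized.sum(axis=1)

# Hypothetical raw scores: rows are algorithms, columns are indexes.
algorithms = ["A", "B", "C"]
raw = np.array([
    [0.50, 4.0, 20.0],
    [0.80, 6.0, 25.0],
    [1.00, 8.0, 40.0],
])

normalized, comprehensive = comprehensive_index(raw)
best = algorithms[int(np.argmax(comprehensive))]
print(dict(zip(algorithms, comprehensive)), "best:", best)
```

Because Imin is fixed at 0 rather than the observed minimum, the worst algorithm on an index still receives a nonzero normalized score, so the summed index preserves relative magnitudes instead of only ranks.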

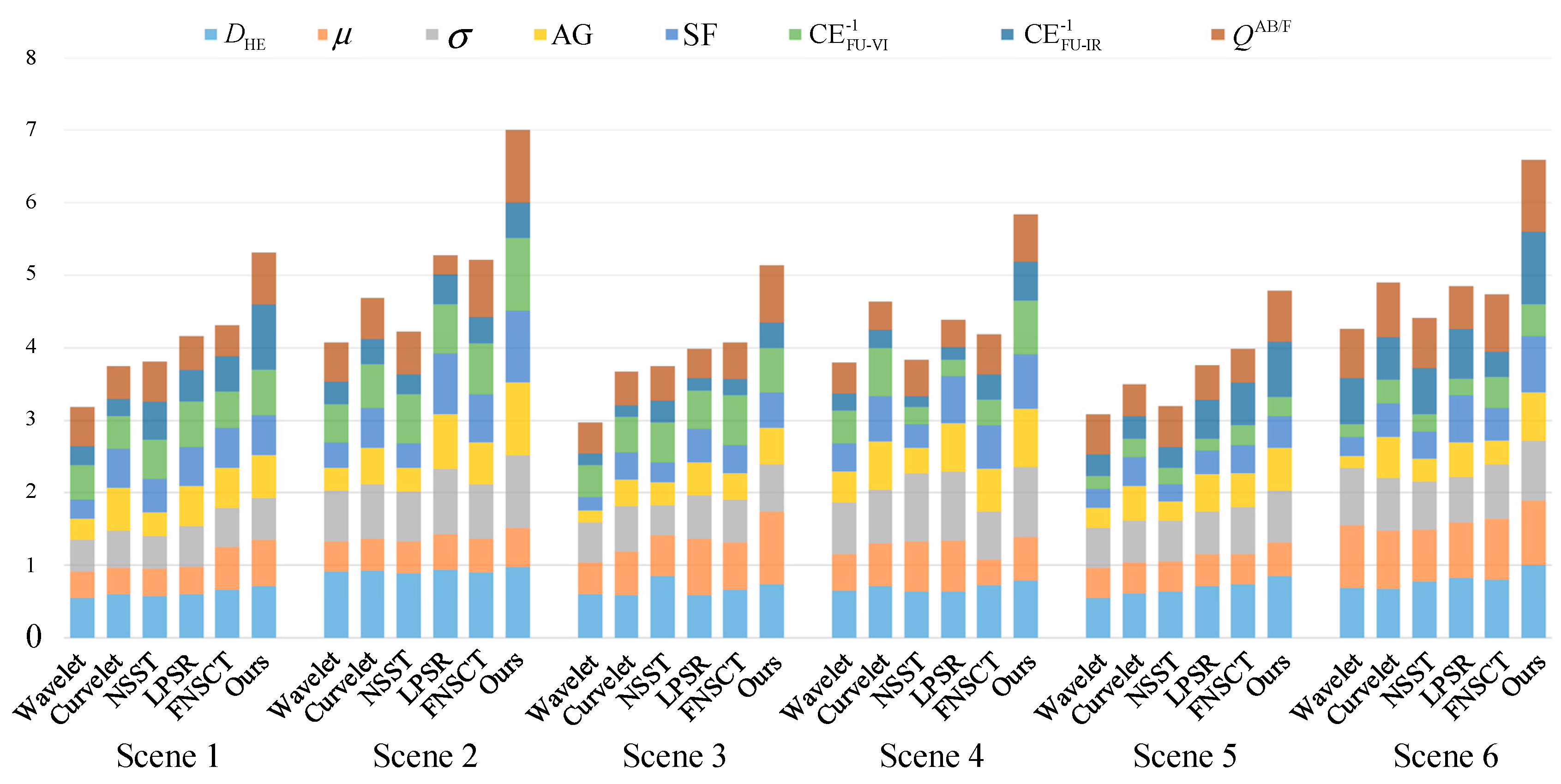

The histogram of normalized indexes of the fusion images in six scenes is shown in Figure 15.

Figure 15.

The comprehensive index of fusion images.

The comprehensive index reflects the overall quality of the fusion image. The higher the bars, the better the image fusion quality and the more consistent with human visual perception.

From Figure 15, Wavelet and NSST algorithms have a low ability to process halation images in each scene. The Curvelet algorithm is more suitable for processing images in a large halation scene. The LPSR and FNSCT algorithms have almost the same comprehensive index, indicating that the fusion effect of the two algorithms is similar. The algorithm in this paper has the best comprehensive index in each halation scene, indicating that the proposed algorithm has advantages in halation elimination and visual saliency and has good universality for different night vision halation scenes.

The urban trunk road has scattered light from streetlamps and surrounding buildings, which can enhance the visibility of the whole fusion image. So, the comprehensive index of the fusion image of the urban trunk road is higher than that of the other two roads. On the residential road, the road environment is dim. When the cars and pedestrians are far away, the halation area is small and the salient targets are inconspicuous, so the brightness is low on the non-halation area of the fusion image, and the comprehensive index of each algorithm is lower than that of the urban trunk road. The overall illumination is very low on the suburban road, and the comprehensive index of each algorithm is the lowest.

On the same road, as the oncoming cars approach, the halation area becomes larger, and the large halation scene can enhance visibility surrounding the halation. Therefore, the comprehensive index of the large halation scene is higher than that of the small halation scene.

In conclusion, both the nighttime ambient illumination and the degree of halation will affect the visual effect of the fusion image.

5. Conclusions

To solve the problem of drivers being blinded by nighttime halation, this paper proposes a night vision anti-halation algorithm based on different-source image fusion. The improved FT saliency detection algorithm is used to extract the salient information from infrared images, which effectively highlights the objects of interest in fusion images, such as cars and pedestrians. Only the luminance obtained by the YUV transform is involved in anti-halation fusion, which improves the efficiency of the algorithm and avoids color distortion of the fusion image. FNSCT decomposition and reconstruction improve clarity and details in the fusion image and reduce spectral distortion. The experimental results show that the fusion images of the proposed algorithm have complete halation elimination, rich color and details, and prominent saliency information. The proposed algorithm has good universality for different halation scenes and is helpful for night driving safety. It also has certain applicability to rainy, foggy, smoggy, and other complex weather.
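The luminance-only design summarized above can be illustrated with a short sketch. It is an assumption-laden outline rather than the paper's pipeline: the BT.601 analog YUV matrix is assumed, images are assumed to be floating-point arrays in [0, 1], the names `fuse_luminance_only` and `average_fusion` are hypothetical, and a plain average stands in for the FNSCT-based fusion of the luminance and the infrared saliency image.

```python
import numpy as np

# BT.601 analog RGB -> YUV matrix (assumed; the paper may use another variant).
RGB2YUV = np.array([
    [ 0.299,    0.587,    0.114  ],
    [-0.14713, -0.28886,  0.436  ],
    [ 0.615,   -0.51499, -0.10001],
])
YUV2RGB = np.linalg.inv(RGB2YUV)

def fuse_luminance_only(visible_rgb, infrared_gray, fuse_y):
    """Fuse only the Y channel of the visible image with the infrared image.

    visible_rgb:   (H, W, 3) float array in [0, 1]
    infrared_gray: (H, W) float array in [0, 1]
    fuse_y:        callable (y_visible, infrared) -> fused luminance (H, W)
    """
    yuv = visible_rgb @ RGB2YUV.T                 # per-pixel color transform
    fused_yuv = yuv.copy()
    fused_yuv[..., 0] = fuse_y(yuv[..., 0], infrared_gray)  # U, V untouched
    return np.clip(fused_yuv @ YUV2RGB.T, 0.0, 1.0)

def average_fusion(y_visible, infrared):
    """Placeholder fusion rule; stands in for the FNSCT-based strategy."""
    return 0.5 * (y_visible + infrared)

rng = np.random.default_rng(0)
visible = rng.random((4, 4, 3))
infrared = rng.random((4, 4))
fused = fuse_luminance_only(visible, infrared, average_fusion)
print(fused.shape)
```

Since the U and V channels pass through unchanged, the fusion rule operates on one channel instead of three, which is the source of the computational saving and of the color preservation noted in the text.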

Author Contributions

Conceptualization, Q.G.; methodology, Q.G. and F.Y.; software, Q.G. and F.Y.; validation, F.Y. and H.W.; writing—original draft preparation, Q.G.; writing—review and editing, F.Y. and H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by PROJECT OF THE NATIONAL NATURAL SCIENCE FOUNDATION OF CHINA, grant number 62073256 and THE KEY RESEARCH AND DEVELOPMENT PROJECT OF SHAANXI PROVINCE, grant number 2019GY-094.

Data Availability Statement

The datasets generated during this study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).