Credit Risk Prediction Model for Listed Companies Based on CNN-LSTM and Attention Mechanism

Abstract

1. Introduction

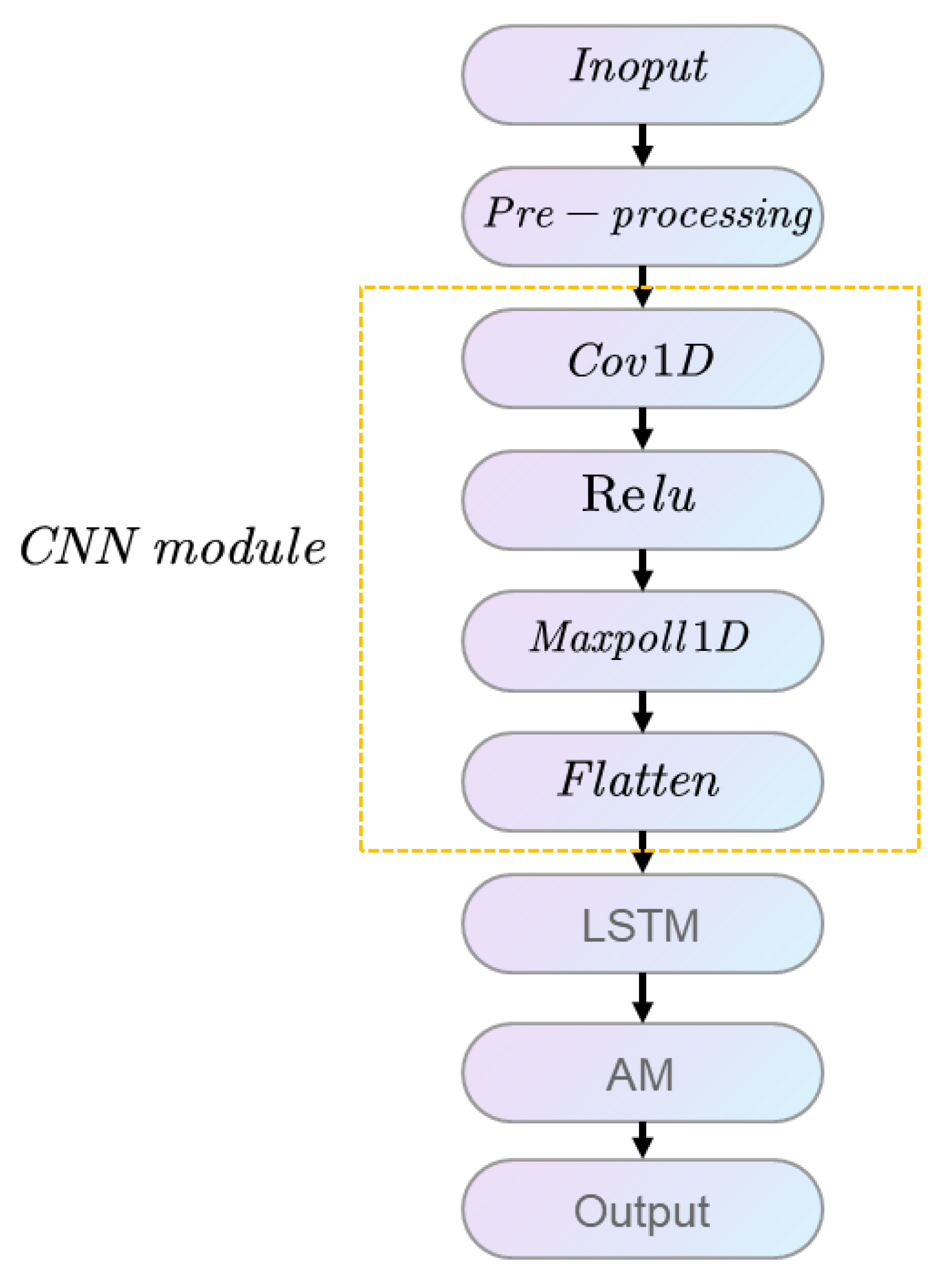

- Compared with the traditional Z-score and Logit models, the improved CNN-LSTM model used in this paper has stronger information-selection and time-series learning abilities and can make accurate predictions on time-series data. The attention module automatically learns the relative importance of the different credit indicators of listed companies, and of the relationships derived from them, and assigns weights accordingly. This significantly improves the LSTM model's predictions on long input sequences and, in turn, its predictions of the credit risk of listed companies.

- The proposed model can effectively handle the nonlinearity of credit-risk prediction, is more widely applicable than the Z-score, Logit and KMV models, and requires fewer samples than recent neural network models.

- It reflects the relationship between default and credit risk of listed companies, enabling commercial banks and investors to respond to companies' credit-risk problems in a reasonable and timely way.
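
The attention weighting described in the first contribution can be illustrated with a minimal pure-Python sketch. It assumes scaled dot-product scoring between a query vector q and the LSTM hidden state at each time step (the formulation of Vaswani et al. [28]); the paper may use a different scoring function, and the function name `attention_pool`, the query and the hidden-state values are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: shift by the maximum score before exp."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: List[Vector], q: Vector) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention of a query vector q over a sequence of
    hidden states (one per time step).

    Returns the attention distribution over time steps and the context
    vector, i.e. the attention-weighted sum of the hidden states.
    Assumes every hidden state has the same length as q.
    """
    d = len(q)
    scores = [sum(h_k * q_k for h_k, q_k in zip(h, q)) / math.sqrt(d)
              for h in hidden_states]
    weights = softmax(scores)
    context = [sum(w * h[k] for w, h in zip(weights, hidden_states))
               for k in range(d)]
    return weights, context


# Hypothetical LSTM outputs for three time steps (hidden size 2) and a
# hypothetical query vector; in a trained model q would be learned.
H = [[0.1, 0.3], [0.8, -0.2], [0.5, 0.5]]
q = [1.0, 0.0]
weights, context = attention_pool(H, q)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

The softmax turns the scores into an attention distribution that sums to one, so time steps whose hidden states align with q contribute more to the context vector that is passed on to the classifier.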

2. Related Work

2.1. Logit Model and the Z-Score

2.2. KMV Model

2.3. ANN

3. Methodology

3.1. Overview of Our Network

3.2. CNN Model

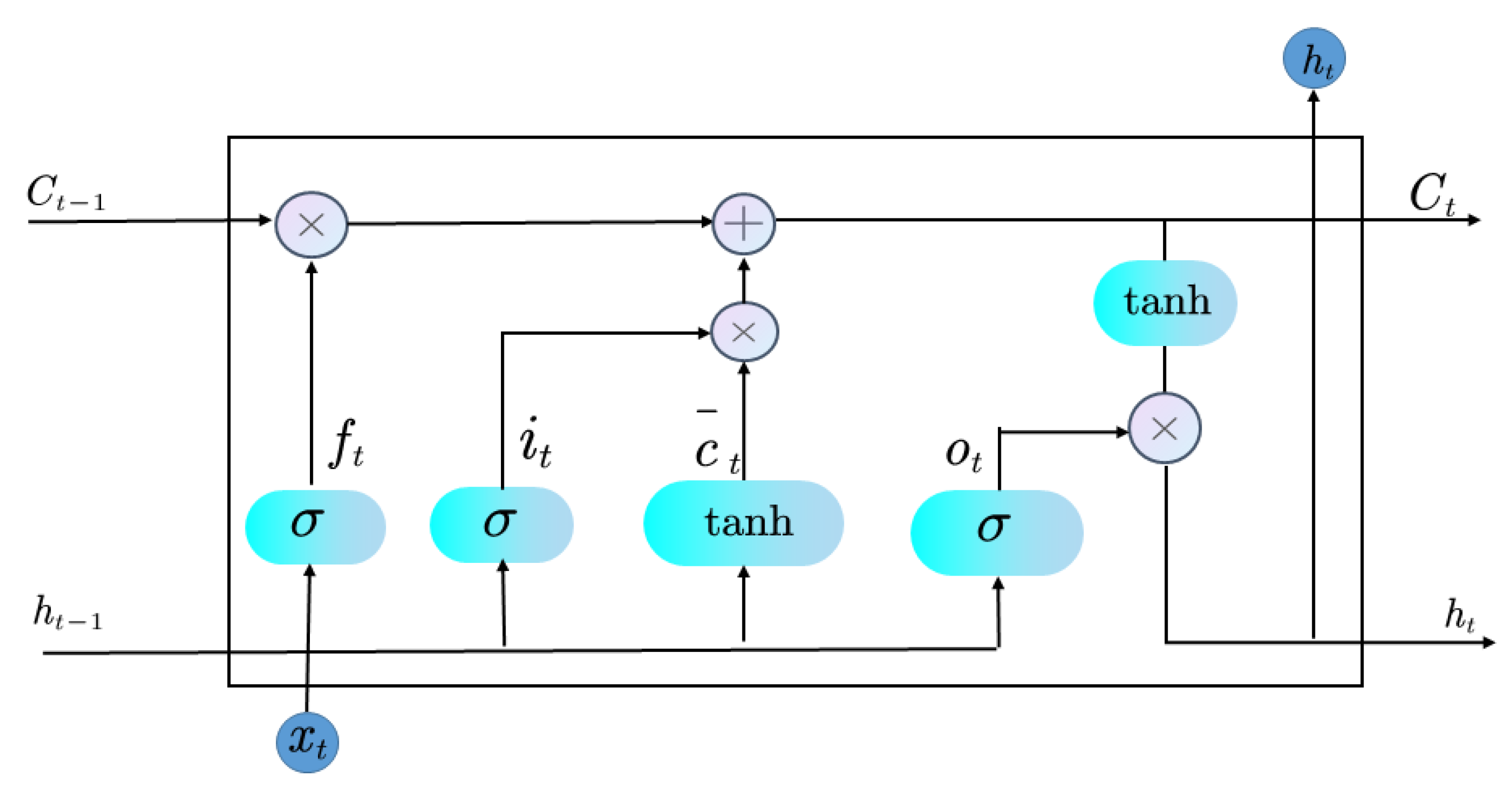

3.3. LSTM Model

4. Experiment

4.1. Datasets

4.2. Experimental Details

4.3. Experimental Results and Analysis

Algorithm 1: Algorithmic representation of the training process in this paper.

5. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest

References

1. Rostami, M.; Berahmand, K.; Nasiri, E.; Forouzandeh, S. Review of swarm intelligence-based feature selection methods. Eng. Appl. Artif. Intell. 2021, 100, 104210.

2. Ning, X.; Xu, S.; Nan, F.; Zeng, Q.; Wang, C.; Cai, W.; Li, W.; Jiang, Y. Face editing based on facial recognition features. IEEE Trans. Cogn. Dev. Syst. 2022. Available online: https://ieeexplore.ieee.org/document/9795907 (accessed on 22 March 2023).

3. Yoosefdoost, I.; Basirifard, M.; Álvarez-García, J. Reservoir Operation Management with New Multi-Objective (MOEPO) and Metaheuristic (EPO) Algorithms. Water 2022, 14, 2329.

4. Zhu, Y.; Xie, C.; Wang, G.J.; Yan, X.G. Predicting China’s SME credit risk in supply chain finance based on machine learning methods. Entropy 2016, 18, 195.

5. Ning, X.; Tian, W.; Yu, Z.; Li, W.; Bai, X.; Wang, Y. HCFNN: High-order coverage function neural network for image classification. Pattern Recognit. 2022, 131, 108873.

6. Cai, W.; Ning, X.; Zhou, G.; Bai, X.; Jiang, Y.; Li, W.; Qian, P. A Novel Hyperspectral Image Classification Model Using Bole Convolution with Three-Directions Attention Mechanism: Small sample and Unbalanced Learning. IEEE Trans. Geosci. Remote. Sens. 2022, 61, 5500917.

7. Manab, N.A.; Theng, N.Y.; Md-Rus, R. The determinants of credit risk in Malaysia. Procedia-Soc. Behav. Sci. 2015, 172, 301–308.

8. Ning, X.; Tian, W.; He, F.; Bai, X.; Sun, L.; Li, W. Hyper-sausage coverage function neuron model and learning algorithm for image classification. Pattern Recognit. 2023, 136, 109216.

9. Chen, Z.; Huang, J.; Ahn, H.; Ning, X. Costly features classification using monte carlo tree search. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

10. Zhibin, Z.; Liping, S.; Xuan, C. Labeled box-particle CPHD filter for multiple extended targets tracking. J. Syst. Eng. Electron. 2019, 30, 57–67.

11. Wei, X.; Saha, D. KNEW: Key Generation using NEural Networks from Wireless Channels. In Proceedings of the 2022 ACM Workshop on Wireless Security and Machine Learning, San Antonio, TX, USA, 19 May 2022; pp. 45–50.

12. Zou, Z.B.; Song, L.P.; Song, Z.L. Labeled box-particle PHD filter for multi-target tracking. In Proceedings of the 2017 third IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 13–16 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1725–1730.

13. Zou, Z.; Careem, M.; Dutta, A.; Thawdar, N. Joint spatio-temporal precoding for practical non-stationary wireless channels. IEEE Trans. Commun. 2023. Available online: https://ieeexplore.ieee.org/document/10034681 (accessed on 22 March 2023).

14. Zou, Z.; Wei, X.; Saha, D.; Dutta, A.; Hellbourg, G. SCISRS: Signal Cancellation using Intelligent Surfaces for Radio Astronomy Services. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 4238–4243.

15. Zou, Z.; Careem, M.; Dutta, A.; Thawdar, N. Unified characterization and precoding for non-stationary channels. In Proceedings of the ICC 2022-IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 5140–5146.

16. Peng, H.; Huang, S.; Chen, S.; Li, B.; Geng, T.; Li, A.; Jiang, W.; Wen, W.; Bi, J.; Liu, H.; et al. A length adaptive algorithm-hardware co-design of transformer on fpga through sparse attention and dynamic pipelining. In Proceedings of the 59th ACM/IEEE Design Automation Conference, San Francisco, CA, USA, 10–14 July 2022; pp. 1135–1140.

17. Zhang, Y.; Mu, L.; Shen, G.; Yu, Y.; Han, C. Fault diagnosis strategy of CNC machine tools based on cascading failure. J. Intell. Manuf. 2019, 30, 2193–2202.

18. Shen, G.; Zeng, W.; Han, C.; Liu, P.; Zhang, Y. Determination of the average maintenance time of CNC machine tools based on type II failure correlation. Eksploatacja i Niezawodność 2017, 19, 604–614.

19. Shen, G.; Han, C.; Chen, B.; Dong, L.; Cao, P. Fault analysis of machine tools based on grey relational analysis and main factor analysis. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2018; Volume 1069, p. 012112.

20. Shen, G.-X.; Zhao, X.Z.; Zhang, Y.-Z.; Han, C.-Y. Research on criticality analysis method of CNC machine tools components under fault rate correlation. In Proceedings of the IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2018; Volume 307, p. 012023.

21. Nazari, M.; Alidadi, M. Measuring credit risk of bank customers using artificial neural network. J. Manag. Res. 2013, 5, 17.

22. Song, Z.; Johnston, R.M.; Ng, C.P. Equitable Healthcare Access During the Pandemic: The Impact of Digital Divide and Other SocioDemographic and Systemic Factors. Appl. Res. Artif. Intell. Cloud Comput. 2021, 4, 19–33.

23. Song, Z.; Mellon, G.; Shen, Z. Relationship between Racial Bias Exposure, Financial Literacy, and Entrepreneurial Intention: An Empirical Investigation. J. Artif. Intell. Mach. Learn. Manag. 2020, 4, 42–55.

24. Teles, G.; Rodrigues, J.; Rabê, R.A.; Kozlov, S.A. Artificial neural network and Bayesian network models for credit risk prediction. J. Artif. Intell. Syst. 2020, 2, 118–132.

25. He, F.; Ye, Q. A bearing fault diagnosis method based on wavelet packet transform and convolutional neural network optimized by simulated annealing algorithm. Sensors 2022, 22, 1410.

26. Han, Y.; Wang, B. Investigation of listed companies credit risk assessment based on different learning schemes of BP neural network. Int. J. Bus. Manag. 2011, 6, 204–207.

27. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

28. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

29. Zhang, L. The Evaluation on the Credit Risk of Enterprises with the CNN-LSTM-ATT Model. Comput. Intell. Neurosci. CIN 2022, 2022, 6826573.

30. Han, C.; Lin, T. Reliability evaluation of electro spindle based on no-failure data. Highlights Sci. Eng. Technol. 2022, 16, 86–97.

31. Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306.

32. Xu, F.; Zheng, Y.; Hu, X. Real-time finger force prediction via parallel convolutional neural networks: A preliminary study. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3126–3129.

33. Yin, W.; Kann, K.; Yu, M.; Schütze, H. Comparative study of CNN and RNN for natural language processing. arXiv 2017, arXiv:1702.01923.

34. Elton, E.J.; Gruber, M.J.; Blake, C.R. A first look at the accuracy of the CRSP mutual fund database and a comparison of the CRSP and Morningstar mutual fund databases. J. Financ. 2001, 56, 2415–2430.

35. Berger, A.N.; Udell, G.F. A more complete conceptual framework for SME finance. J. Bank. Financ. 2006, 30, 2945–2966.

36. Zhang, B.T.; Joung, J.G. Time series prediction using committee machines of evolutionary neural trees. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Washington, DC, USA, 6–9 July 1999; IEEE: Piscataway, NJ, USA, 1999; Volume 1, pp. 281–286.

37. Yu, H.; Chen, R.; Zhang, G. A SVM stock selection model within PCA. Procedia Comput. Sci. 2014, 31, 406–412.

38. Zhou, Q.; Wang, L.; Juan, L.; Zhou, S.; Li, L. The study on credit risk warning of regional listed companies in China based on logistic model. Discret. Dyn. Nat. Soc. 2021, 2021, 1–8.

39. Halteh, K.; Kumar, K.; Gepp, A. Using cutting-edge tree-based stochastic models to predict credit risk. Risks 2018, 6, 55.

40. Su, E.D.; Huang, S.M. Comparing firm failure predictions between logit, KMV, and ZPP models: Evidence from Taiwan’s electronics industry. Asia-Pac. Financ. Mark. 2010, 17, 209–239.

41. Zhu, Y.; Zhou, L.; Xie, C.; Wang, G.J.; Nguyen, T.V. Forecasting SMEs’ credit risk in supply chain finance with an enhanced hybrid ensemble machine learning approach. Int. J. Prod. Econ. 2019, 211, 22–33.

42. Chen, Y.C.; Huang, W.C. Constructing a stock-price forecast CNN model with gold and crude oil indicators. Appl. Soft Comput. 2021, 112, 107760.

43. Li, M.; Zhang, Z.; Lu, M.; Jia, X.; Liu, R.; Zhou, X.; Zhang, Y. Internet Financial Credit Risk Assessment with Sliding Window and Attention Mechanism LSTM Model. Tehnički vjesnik 2023, 30, 1–7.

44. Vidal, A.; Kristjanpoller, W. Gold volatility prediction using a CNN-LSTM approach. Expert Syst. Appl. 2020, 157, 113481.

45. Chen, B.R.; Liu, Z.; Song, J.; Zeng, F.; Zhu, Z.; Bachu, S.P.K.; Hu, Y.C. FlowTele: Remotely shaping traffic on internet-scale networks. In Proceedings of the 18th International Conference on emerging Networking EXperiments and Technologies, Rome, Italy, 6–9 December 2022; pp. 349–368.

46. Zhang, R.; Zeng, F.; Cheng, X.; Yang, L. UAV-aided data dissemination protocol with dynamic trajectory scheduling in VANETs. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

47. Georgios, K. Credit risk evaluation and rating for SMES using statistical approaches: The case of European SMES manufacturing sector. J. Appl. Financ. Bank. 2019, 9, 59–83.

| Symbols | Meaning |
|---|---|
| q | the query vector |
| | the input vector |
| | the attention distribution |
| E | the activation function |
| | the input feature map |
| K | the convolution kernel corresponding to the feature map |
| | the bias unit of the layer |
| | downsampling |
| | the sigmoid function |
| W | the weight of the neuron |
| b | the bias of the neuron |
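
The symbols above belong to the convolution and pooling layers and to the LSTM gates. As a hedged illustration of these standard operations (not the authors' code), the sketch below implements a "valid" 1-D convolution of an input feature map with a kernel K plus a bias, a downsampling step, and one LSTM time step whose gates use the sigmoid function with weights W and biases b. ReLU as the activation, max pooling as the downsampling operator, the function names, and all sizes and numeric values are assumptions.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def conv1d(x: Vector, kernel: Vector, bias: float) -> Vector:
    """'Valid' 1-D convolution of the input feature map x with kernel K plus a
    bias, followed by ReLU (assumed activation). As in most deep-learning
    libraries, the kernel is not flipped (cross-correlation)."""
    k = len(kernel)
    return [max(0.0, sum(x[i + j] * kernel[j] for j in range(k)) + bias)
            for i in range(len(x) - k + 1)]


def downsample(x: Vector, size: int = 2) -> Vector:
    """Non-overlapping max pooling; an incomplete trailing window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, v: Vector, b: Vector) -> Vector:
    """Compute W v + b."""
    return [sum(w * vi for w, vi in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x: Vector, h: Vector, c: Vector,
              W: Dict[str, Matrix], b: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """One LSTM time step. The gates 'f', 'i', 'o' (sigmoid) and the candidate
    'c' (tanh) each apply W[g] to the concatenation [h_{t-1}; x_t]."""
    hx = h + x  # list concatenation [h_{t-1}; x_t]
    f = [sigmoid(z) for z in affine(W["f"], hx, b["f"])]    # forget gate
    i = [sigmoid(z) for z in affine(W["i"], hx, b["i"])]    # input gate
    o = [sigmoid(z) for z in affine(W["o"], hx, b["o"])]    # output gate
    g = [math.tanh(z) for z in affine(W["c"], hx, b["c"])]  # candidate cell state
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


# Hypothetical quarterly values of one credit indicator.
series = [1.2, 1.4, 1.1, 0.9, 1.3, 1.5, 1.0]
features = downsample(conv1d(series, kernel=[0.5, -0.5, 0.25], bias=0.0))

# Feed each pooled feature to a hidden-size-2 LSTM with hypothetical weights.
hidden = 2
W = {g: [[0.1] * (hidden + 1) for _ in range(hidden)] for g in "fioc"}
b = {g: [0.0] * hidden for g in "fioc"}
h, c = [0.0] * hidden, [0.0] * hidden
for value in features:
    h, c = lstm_step([value], h, c, W, b)
```

In a CNN-LSTM the convolution and pooling shorten and summarize the raw indicator series before the LSTM models its temporal dependence; the final hidden state (or all hidden states, when attention is used) then feeds the classifier.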

| Factors | Code | Variable | Categories |
|---|---|---|---|
| Applicant factors | | Current ratio | Liquidity |
| Applicant factors | | Quick ratio | Liquidity |
| Applicant factors | | Cash ratio | Liquidity |
| Applicant factors | | Working capital turnover | Liquidity |
| Applicant factors | | Return on equity | Leverage |
| Applicant factors | | Profit margin on sales | Profitability |
| Applicant factors | | Rate of Return on Total Assets | Leverage |
| Applicant factors | | Total Assets Growth Rate | Activity |
| Counterparty factors | | Credit rating | Non-finance |
| Counterparty factors | | Quick ratio | Liquidity |
| Counterparty factors | | Turnover of total capital | Liquidity |
| Counterparty factors | | Profit margin on sales | Profitability |
| Items’ characteristics factors | | Price rigidity, liquidation | Non-finance |
| Items’ characteristics factors | | Account receivable collection period | Leverage |
| Items’ characteristics factors | | Accounts receivable turnover ratio | Leverage |
| Operation condition factors | | Industry trends | Non-finance |
| Operation condition factors | | Transaction time and transaction frequency | Non-finance |
| Operation condition factors | | Credit rating of SME | Non-finance |
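
The first three applicant indicators in the table are standard liquidity ratios. Assuming the paper uses the usual textbook definitions (current assets, current assets less inventories, and cash plus marketable securities, each divided by current liabilities), they could be computed from balance-sheet items as sketched below. The `BalanceSheet` fields and the sample figures are hypothetical, and some texts use a narrower quick ratio that also excludes prepaid expenses.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BalanceSheet:
    """Hypothetical subset of current-asset and current-liability items,
    all in the same currency unit."""
    cash: float                   # cash and cash equivalents
    marketable_securities: float
    receivables: float
    inventories: float
    other_current_assets: float
    current_liabilities: float

    @property
    def current_assets(self) -> float:
        return (self.cash + self.marketable_securities + self.receivables
                + self.inventories + self.other_current_assets)


def liquidity_ratios(bs: BalanceSheet) -> Dict[str, float]:
    """Textbook current, quick and cash ratios."""
    if bs.current_liabilities <= 0:
        raise ValueError("current liabilities must be positive")
    cl = bs.current_liabilities
    return {
        "current_ratio": bs.current_assets / cl,
        "quick_ratio": (bs.current_assets - bs.inventories) / cl,
        "cash_ratio": (bs.cash + bs.marketable_securities) / cl,
    }


sample = BalanceSheet(cash=100.0, marketable_securities=50.0, receivables=150.0,
                      inventories=200.0, other_current_assets=0.0,
                      current_liabilities=250.0)
ratios = liquidity_ratios(sample)
```

Because each ratio removes progressively less liquid assets from the numerator, the current ratio is always at least as large as the quick ratio, which in turn is at least as large as the cash ratio when all items are non-negative.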

| Model | | |
|---|---|---|
| Ours | 2.38 | 3.34 |
| KMV | 2.58 | 3.65 |
| SVM | 2.81 | 2.94 |
| Tree | 2.41 | 3.5 |

| Index | Ours | SVM | KMV |
|---|---|---|---|
| Current ratio | 0.5653 | 0.5023 | 0.4613 |
| Quick ratio | 0.4904 | 0.4756 | 0.4653 |
| Cash ratio | 0.4545 | 0.5864 | 0.5656 |
| Credit rating | 0.8623 | 0.8523 | 0.8321 |
| Quick ratio | 0.9864 | 0.9654 | 0.9451 |
| Industry trends | 0.8746 | 0.8586 | 0.8321 |

| Model | Accuracy | FLOPs | Parameters (M) |
|---|---|---|---|
| Logistic [4] | 0.7762 | 212 | 27.03 |
| Tree [35] | 0.914 | 140 | 140.47 |
| KMV [38] | 0.8577 | 205 | 11.69 |
| ZPP [40] | 0.8454 | 180 | 15.79 |
| AM [41] | 0.790 | 125.77 | 169.99 |
| CNN [42] | 0.897 | 150.66 | 177.17 |
| LSTM [43] | 0.931 | 142.43 | 99.86 |
| CNN-LSTM [44] | 0.964 | 109 | 56.44 |
| SMV [47] | 0.9044 | 113.4 | 122.86 |
| Ours | 0.9843 | 102 | 14.14 |
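
The Parameters (M) column counts trainable weights in millions. As a rough illustration of how such counts arise for a CNN-LSTM with attention, the helper functions below apply the standard per-layer formulas (pooling layers have no parameters). The layer sizes are hypothetical (18 input channels, one per indicator in the variable table, 64 convolution filters, a 128-unit LSTM, a learned attention query and a single-output dense layer) and are not the authors' architecture, so the total is not meant to match the 14.14 M reported for the proposed model.

```python
def conv1d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights (in x out x kernel) plus one bias per output channel."""
    return in_channels * out_channels * kernel_size + out_channels


def lstm_params(input_size: int, hidden_size: int, bias_vectors: int = 1) -> int:
    """Four gate blocks, each with input weights (hidden x input), recurrent
    weights (hidden x hidden) and `bias_vectors` bias vectors of length hidden.
    Keras uses one bias vector per gate; PyTorch nn.LSTM uses two (b_ih, b_hh)."""
    return 4 * (hidden_size * (input_size + hidden_size) + bias_vectors * hidden_size)


def dense_params(in_features: int, out_features: int) -> int:
    """Weight matrix plus one bias per output unit."""
    return in_features * out_features + out_features


# Hypothetical layer sizes (see the lead-in); not the paper's configuration.
layers = {
    "conv1d": conv1d_params(18, 64, 3),
    "lstm": lstm_params(64, 128),
    "attention_query": 128,  # one learned query vector q of length 128
    "dense": dense_params(128, 1),
}
total = sum(layers.values())
print(f"{total / 1e6:.3f} M parameters")
```

The LSTM dominates the count because its four gates each carry a hidden-by-(input + hidden) weight matrix, which is why the recurrent layer width is usually the main lever on model size.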

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Xu, C.; Feng, B.; Zhao, H. Credit Risk Prediction Model for Listed Companies Based on CNN-LSTM and Attention Mechanism. Electronics 2023, 12, 1643. https://doi.org/10.3390/electronics12071643


Chicago/Turabian Style: Li, Jingyuan, Caosen Xu, Bing Feng, and Hanyu Zhao. 2023. "Credit Risk Prediction Model for Listed Companies Based on CNN-LSTM and Attention Mechanism" Electronics 12, no. 7: 1643. https://doi.org/10.3390/electronics12071643
