A Clustered Federated Learning Method of User Behavior Analysis Based on Non-IID Data

Abstract

1. Introduction

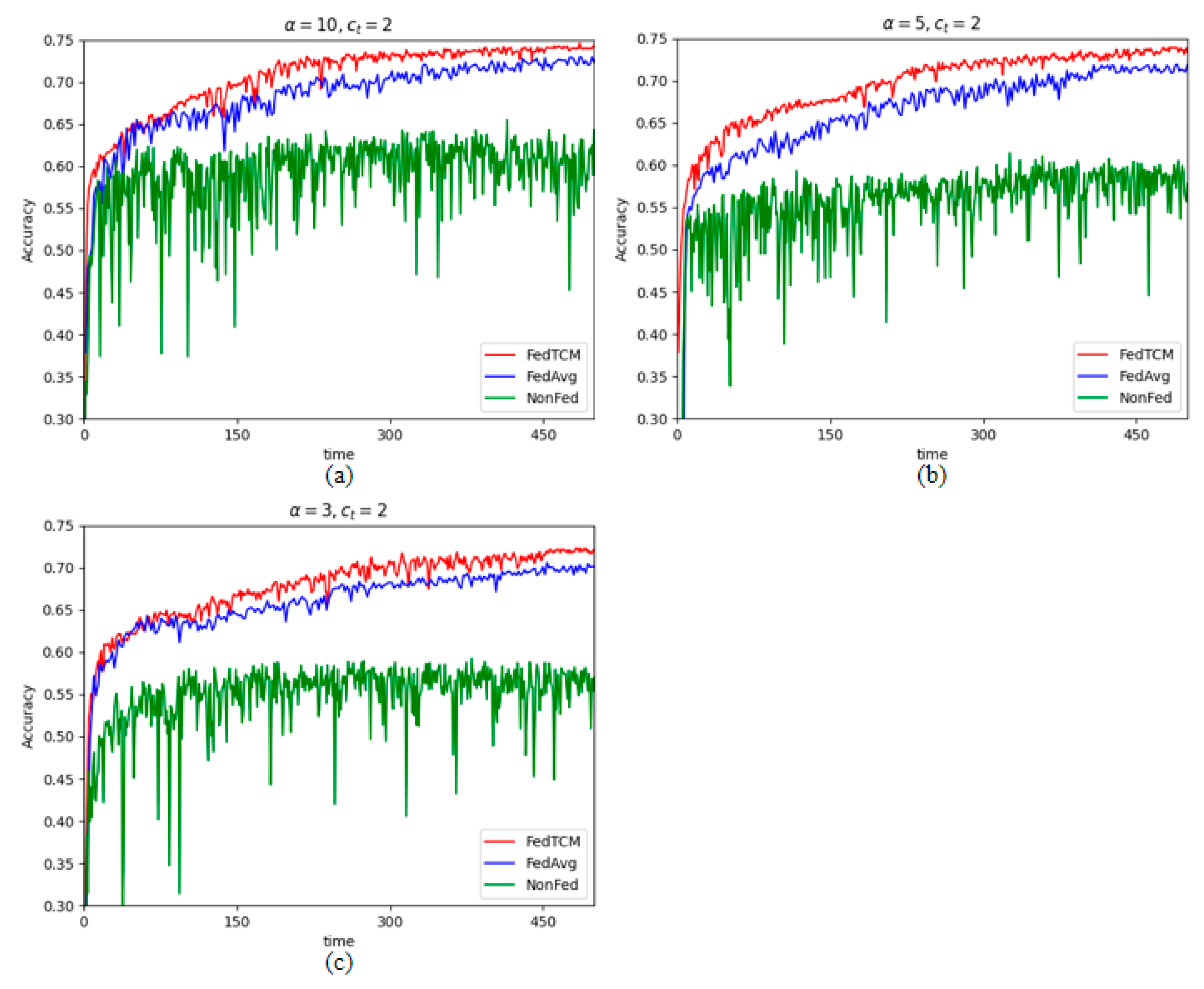

- To address the impact of non-IID data on the FL model and the difficulty of tuning parameters under asynchronous communication, FedTCM maintains a two-tier cache structure on the server, which improves accuracy in non-IID environments.

- Combining synchronous communication within each cluster with asynchronous communication between clusters mitigates the impact of heterogeneous client computation speeds and reduces the communication burden on the server.

- A performance evaluation on a user behavior dataset shows that FedTCM achieves higher accuracy than existing algorithms.
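The communication pattern in the second point can be illustrated with a small timing simulation. This is a sketch under stated assumptions, not the authors' code: the per-client computation times are hypothetical, a cluster's round is assumed to last as long as its slowest client (intra-cluster synchronization), and a cluster is assumed to start its next round as soon as it has uploaded (no barrier between clusters). The function names `async_cluster_arrivals` and `sync_global_rounds` are illustrative.

```python
import heapq

def async_cluster_arrivals(cluster_client_times, horizon):
    """Return (time, cluster_id) for every cluster update that reaches the server
    by `horizon`, assuming synchronous rounds inside each cluster and no
    barrier between clusters."""
    # A synchronous cluster round lasts as long as its slowest client.
    round_time = {cid: max(times) for cid, times in cluster_client_times.items()}
    # Min-heap of (next arrival time, cluster id).
    events = [(t, cid) for cid, t in round_time.items()]
    heapq.heapify(events)
    arrivals = []
    while events and events[0][0] <= horizon:
        t, cid = heapq.heappop(events)
        arrivals.append((t, cid))
        # The cluster immediately starts its next round; it never waits for others.
        heapq.heappush(events, (t + round_time[cid], cid))
    return arrivals

def sync_global_rounds(cluster_client_times, horizon):
    """Rounds a fully synchronous scheme completes by `horizon`:
    every global round waits for the slowest client of all clusters."""
    slowest = max(max(times) for times in cluster_client_times.values())
    return int(horizon // slowest)

if __name__ == "__main__":
    # Hypothetical per-client computation times (seconds), grouped by cluster.
    clusters = {"A": [1.0, 1.5, 2.0], "B": [4.0, 5.0]}
    print(async_cluster_arrivals(clusters, horizon=10.0))
    print(sync_global_rounds(clusters, horizon=10.0))
```

With these sample times, cluster A delivers five updates within 10 s and cluster B two, whereas a fully synchronous scheme completes only two global rounds because every round waits for the 5 s client.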

2. Related Work

2.1. Data Augmentation

2.2. Cluster-Based Multi-Model Learning

2.3. Adaptive Optimization

2.4. Personalized Federated Learning

3. The Principle of FedTCM

3.1. Clustering the Clients Based on the Distribution of Local Data
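Algorithm 1 takes a cosine similarity clustering algorithm as input and has the server collect each client's label count vector before training, and the notation table lists a cosine similarity threshold. The sketch below shows one plausible way to combine these: a greedy single pass in which a client joins a cluster if the cosine similarity between its label count vector and that of the cluster's seed client is at least `threshold`. The greedy seeding rule and the function names are assumptions for illustration; the paper's exact clustering procedure may differ.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two label count vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def threshold_clustering(label_counts, threshold):
    """Greedy grouping (hypothetical rule): the first unassigned client seeds a
    cluster, and every remaining unassigned client whose similarity to the seed
    is at least `threshold` joins it. Returns lists of client indices."""
    unassigned = list(range(len(label_counts)))
    clusters = []
    while unassigned:
        seed = unassigned.pop(0)
        members = [seed]
        for cid in unassigned[:]:  # iterate over a copy while removing
            if cosine_similarity(label_counts[seed], label_counts[cid]) >= threshold:
                members.append(cid)
                unassigned.remove(cid)
        clusters.append(members)
    return clusters
```

For example, `threshold_clustering([[90, 10, 0], [80, 20, 0], [0, 10, 90], [5, 5, 90]], 0.9)` returns `[[0, 1], [2, 3]]`: the first two clients are dominated by label 0 and the last two by label 2. Only label counts leave the clients here, not raw samples.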

3.2. The Process of Training on Clients

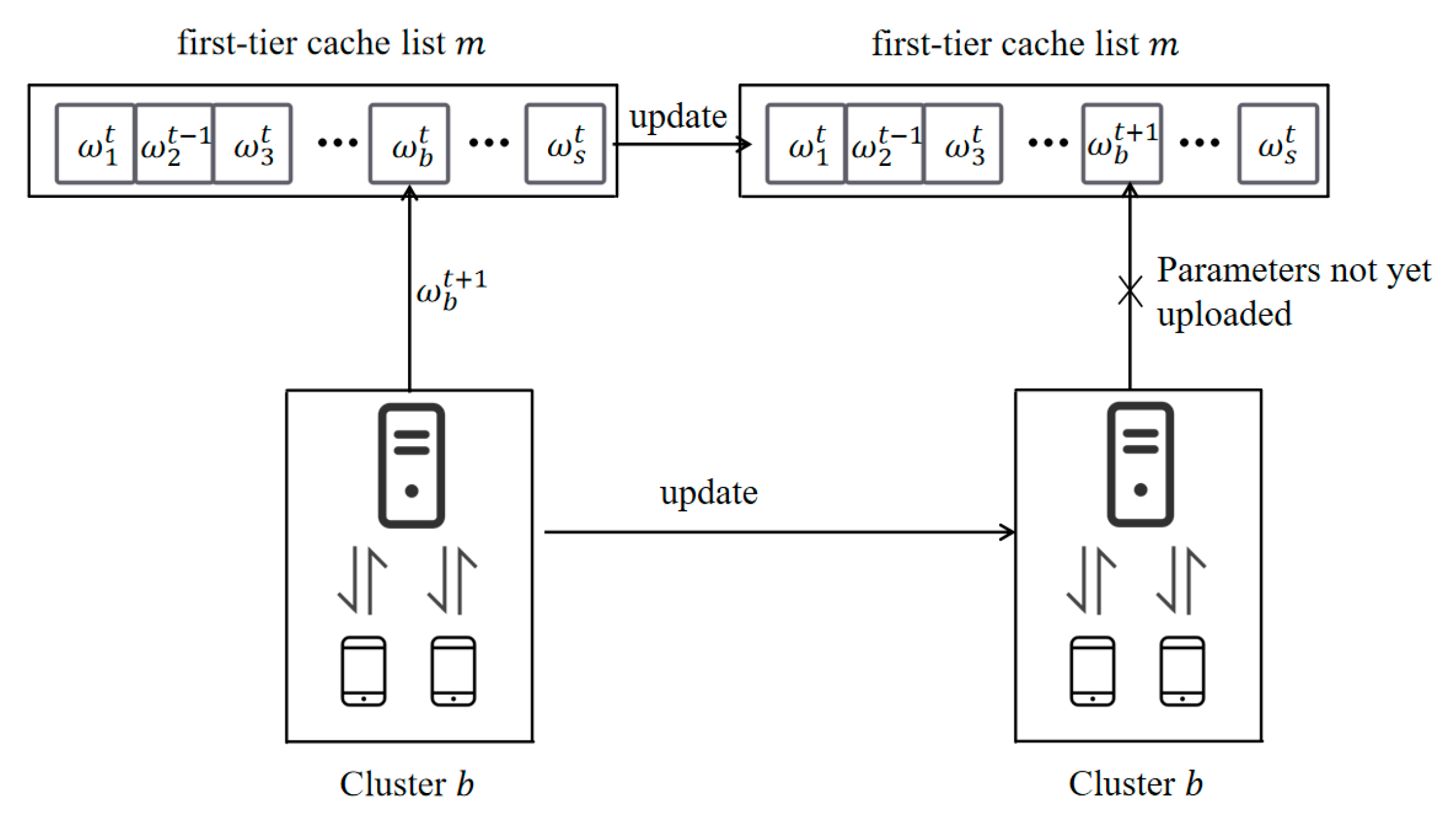

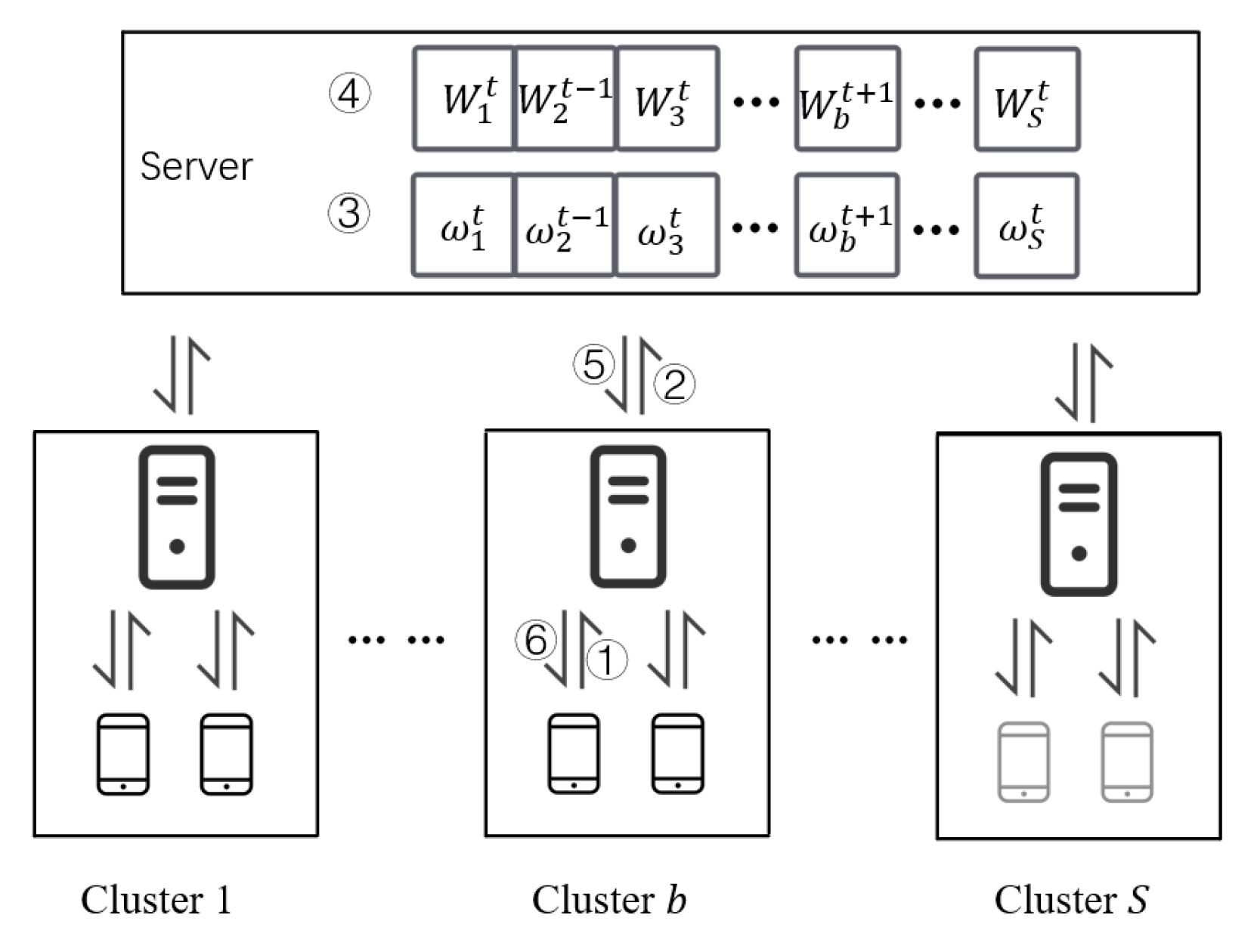
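Local training on a client minimizes its local empirical risk. The notation table defines the loss over the one-hot vector of the model output and the one-hot vector of the label, together with a learning rate, epochs, and a batch size. The sketch below assumes that loss is cross-entropy and uses softmax regression trained with mini-batch SGD as a stand-in model; the network actually used in the paper is not reproduced, and all function names are hypothetical.

```python
import math
import random

def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def predict(W, b, x):
    """Class probabilities of a linear softmax model (stand-in for the real network)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)])

def empirical_risk(W, b, data):
    """Mean cross-entropy -sum_c y_c * log(p_c); with one-hot y only the true-class term survives."""
    return -sum(math.log(predict(W, b, x)[label] + 1e-12) for x, label in data) / len(data)

def local_update(W, b, data, lr=0.1, epochs=5, batch_size=4, seed=0):
    """Mini-batch SGD on the local empirical risk; returns new parameters, inputs untouched."""
    rng = random.Random(seed)
    W = [row[:] for row in W]
    b = list(b)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            gW = [[0.0] * len(row) for row in W]
            gb = [0.0] * len(b)
            for x, label in batch:
                p = predict(W, b, x)
                for c in range(len(b)):
                    # Gradient of softmax + cross-entropy w.r.t. logit c is p_c - y_c.
                    err = p[c] - (1.0 if c == label else 0.0)
                    gb[c] += err
                    for j, xj in enumerate(x):
                        gW[c][j] += err * xj
            for c in range(len(b)):
                b[c] -= lr * gb[c] / len(batch)
                for j in range(len(W[c])):
                    W[c][j] -= lr * gW[c][j] / len(batch)
    return W, b
```

The returned parameters are what step 22 of Algorithm 1 would send to the cluster mediator.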

3.3. The Major Tasks of Cluster Mediator
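Step 15 of Algorithm 1 has the cluster mediator aggregate the parameters collected from the clients in its cluster, and the notation table defines the amount of data held by each client and by the whole cluster. A natural reading, assumed here rather than confirmed by the text, is a FedAvg-style average in which each client's weight is its share of the cluster's data. The function name is hypothetical.

```python
def aggregate_cluster(client_params, client_sizes):
    """Weighted average of client parameter vectors, weight = client data size / cluster data size.
    The mediator calls this only after all clients in the cluster have reported (synchronous)."""
    if len(client_params) != len(client_sizes) or not client_params:
        raise ValueError("need one data size per client parameter vector")
    total = sum(client_sizes)
    agg = [0.0] * len(client_params[0])
    for params, size in zip(client_params, client_sizes):
        weight = size / total
        for j, value in enumerate(params):
            agg[j] += weight * value
    return agg
```

For instance, `aggregate_cluster([[1.0, 2.0], [3.0, 6.0]], [1, 3])` returns `[2.5, 5.0]`, since the second client holds three quarters of the cluster's data.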

3.4. The Major Tasks of the Server
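How the server's two-tier cache combines cluster models is not recoverable from this text, so the sketch below substitutes a different, well-known rule: the staleness-weighted merge of FedAsync (Xie et al., asynchronous federated optimization), where a model computed from an older global version receives a smaller mixing weight, alpha·(staleness + 1)^(−a). It only illustrates why an asynchronous server has to account for stale cluster updates; it is not the FedTCM cache. The class name and the parameters `alpha` and `a` are assumptions, and although the notation table lists a delay time parameter, its role in FedTCM is not confirmed here.

```python
def staleness_weight(staleness, a=0.5):
    """Polynomial staleness function s(tau) = (tau + 1) ** (-a), as in FedAsync."""
    return (staleness + 1) ** (-a)

class AsyncServer:
    """Hypothetical asynchronous server that merges each cluster model on arrival."""

    def __init__(self, init_params, alpha=0.6, a=0.5):
        self.params = list(init_params)
        self.version = 0  # incremented on every merge
        self.alpha = alpha
        self.a = a

    def receive(self, cluster_params, base_version):
        """Merge a cluster model trained from global version `base_version`."""
        staleness = self.version - base_version
        mix = self.alpha * staleness_weight(staleness, self.a)
        self.params = [(1 - mix) * g + mix * c for g, c in zip(self.params, cluster_params)]
        self.version += 1
        return self.params, self.version
```

Starting from `[0.0]` with `alpha=0.5`, a fresh cluster model `[1.0]` moves the global model to `[0.5]`; a second `[1.0]` computed from version 0 (staleness 1) is weighted by 0.5/√2 ≈ 0.354 and moves it only to about 0.677.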

3.5. The Process of FedTCM

| Algorithm 1. FedTCM. |
|---|
| Input: the cosine similarity clustering algorithm |
| Output: global model |
| 1: server process: |
| 2: Before training starts, receive the label count vector of each client |
| 3: |
| 4: for each round 0, 1, … do: |
| 5: Receive a model from a cluster |
| 6: |
| 7: |
| 8: |
| 9: Send the updated model to the cluster |
| 10: end for |
| 11: |
| 12: cluster mediator: |
| 13: Receive the model from the server and send it to the clients in the cluster |
| 14: Receive the updated models from the clients in the cluster |
| 15: Aggregate the collected parameters: |
| 16: Send the aggregated model to the server |
| 17: client device: |
| 18: Receive the model from the cluster mediator |
| 19: for each local iteration do: |
| 20: local update |
| 21: end for |
| 22: Send the updated model to the cluster mediator |
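The three roles in Algorithm 1 can be tied together in a toy, single-process simulation. Everything below is an assumption for illustration: each "model" is a single number, each client's loss is the quadratic 0.5·(w − target)², the mediator averages by data size, and the server mixes each arriving cluster model into the global model with a fixed weight `mix`, standing in for the server steps whose equations are missing from the algorithm (steps 6 to 8). Client compute times and all names are hypothetical.

```python
import heapq

def client_update(w, target, lr=0.5, local_iters=3):
    """Client device (steps 18-22): gradient steps on the toy loss 0.5 * (w - target) ** 2."""
    for _ in range(local_iters):
        w -= lr * (w - target)
    return w

def mediator_round(w_start, clients):
    """Cluster mediator (steps 13-16): send w_start to every client, wait for all of
    them (synchronous), and return the data-size-weighted average."""
    total = sum(size for _, size, _ in clients)
    return sum(size / total * client_update(w_start, target) for target, size, _ in clients)

def run_fedtcm_toy(clusters, rounds_per_cluster, mix=0.5):
    """Server (steps 1-10): merge each cluster model as soon as it arrives,
    without waiting for other clusters (asynchronous between clusters)."""
    w_global = 0.0
    log = []
    events = []  # (finish time, cluster id, rounds done, global model the round started from)
    for cid, clients in enumerate(clusters):
        duration = max(t for _, _, t in clients)  # slowest client sets the round time
        heapq.heappush(events, (duration, cid, 0, w_global))
    while events:
        now, cid, done, w_start = heapq.heappop(events)
        w_cluster = mediator_round(w_start, clusters[cid])
        w_global = (1 - mix) * w_global + mix * w_cluster  # hypothetical fixed-weight merge
        log.append((now, cid, w_global))
        if done + 1 < rounds_per_cluster:
            duration = max(t for _, _, t in clusters[cid])
            heapq.heappush(events, (now + duration, cid, done + 1, w_global))
    return w_global, log

if __name__ == "__main__":
    # Hypothetical clients: (optimum of the local loss, local data size, compute time).
    clusters = [
        [(1.0, 100, 1.0), (1.2, 50, 2.0)],  # fast cluster
        [(3.0, 80, 5.0), (2.8, 120, 4.0)],  # slow cluster
    ]
    w, log = run_fedtcm_toy(clusters, rounds_per_cluster=3)
    for now, cid, w_now in log:
        print(f"t={now:4.1f} cluster={cid} global={w_now:.3f}")
```

With these times the fast cluster reports at t = 2, 4, 6 and the slow cluster at t = 5, 10, 15, so the server performs six merges without ever waiting for both clusters at once.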

4. Experiment and Results

4.1. Dataset and Pre-Processing

4.2. Federated Data Splitting
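The notation table includes a parameter for the degree of heterogeneity of the data distribution, but the splitting procedure itself is not given here. A common choice in non-IID FL experiments is to draw each class's allocation across clients from a symmetric Dirichlet distribution whose concentration parameter controls heterogeneity. The sketch below implements that scheme with the standard library only; whether FedTCM splits the user behavior data this way is an assumption, and `dirichlet_split` is a hypothetical name.

```python
import random

def dirichlet_split(labels, num_clients, alpha, seed=0):
    """Partition sample indices among clients. For every class, client proportions
    are drawn from Dirichlet(alpha, ..., alpha) and that class's samples are split
    accordingly; smaller alpha gives more skewed (more non-IID) label mixes."""
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    parts = [[] for _ in range(num_clients)]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # A Dirichlet sample is a vector of independent Gamma(alpha, 1) draws, normalized.
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(draws)
        props = [d / total for d in draws]
        # Convert cumulative proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for p in props[:-1]:
            acc += p
            cuts.append(int(round(acc * len(idxs))))
        start = 0
        for client, end in enumerate(cuts + [len(idxs)]):
            parts[client].extend(idxs[start:end])
            start = end
    return parts
```

Every sample is assigned to exactly one client; lowering `alpha` concentrates each class on fewer clients.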

4.3. Baseline Algorithm

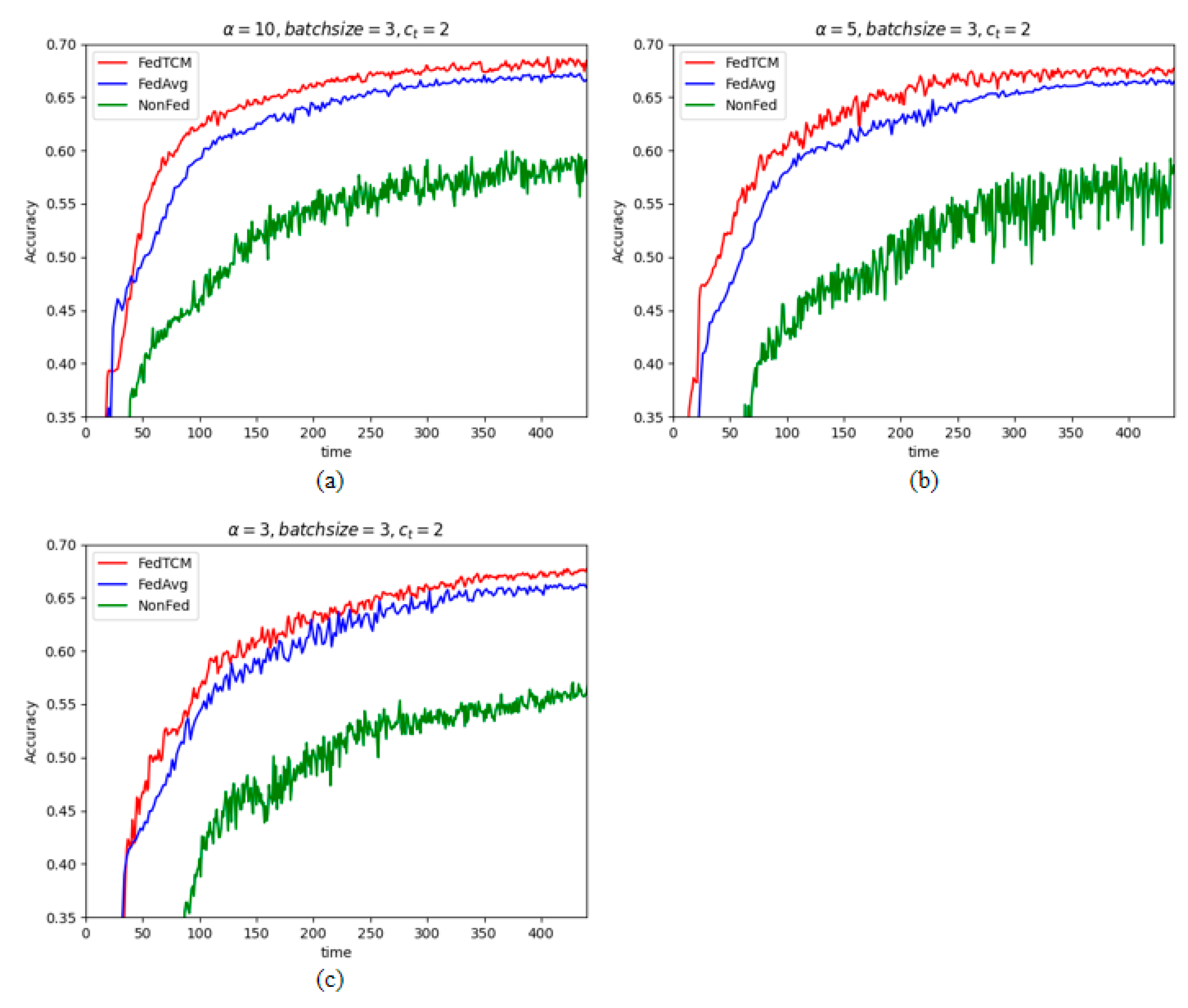

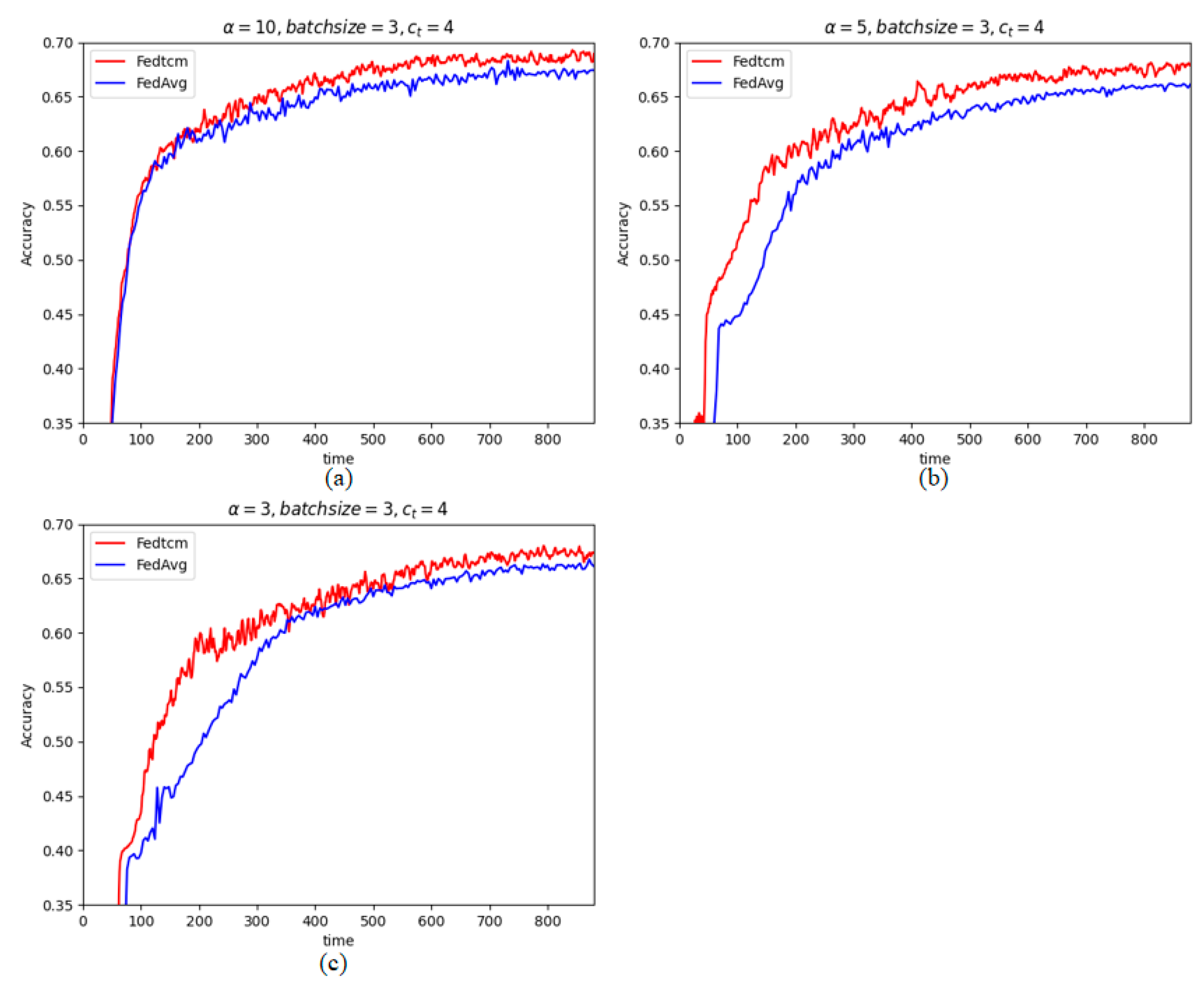

4.4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Notation | Definition |
|---|---|
| FL | federated learning |
| Non-IID | non-identically and non-independently distributed |
| | first-tier cache list |
| | position of list |
| | second-tier cache list |
| | position of list |
| | the label count vector of clients |
| | the components of the vectors |
| | the cosine similarity between clients |
| | loss function |
| | the number of dataset labels |
| | the one-hot vector of the model output |
| {} | the characteristics and label of the data sample |
| | the one-hot vector of |
| | the local empirical risk of the client |
| | the dataset of client in cluster |
| | the number of datasets in cluster |
| | the number of datasets of client in cluster |
| | the model parameters of client in cluster at |
| | the aggregation parameters of cluster at |
| | the aggregation parameters of first-tier cache at |
| | learning rate |
| | epoch |
| | the threshold value of cosine similarity |
| | all clusters |
| | the number of clusters |
| | the number of clients |
| | the degree of heterogeneity of the data distribution |
| | batch size |
| | delay time parameter |

| Device | | | |
|---|---|---|---|
| Central server | 1981 | 2294 | 2521 |
| Local client | 260 * | 287 * | 256 * |

| Device | | | |
|---|---|---|---|
| Central server | 2295 | 2690 | 3368 |
| Local client | 341 * | 338 * | 336 * |

| Device | FedAvg | | |
|---|---|---|---|
| Central server | 4400 | 2262 * | 2784 * |
| Local client | 220 | 268 * | 338 * |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Li, Z. A Clustered Federated Learning Method of User Behavior Analysis Based on Non-IID Data. Electronics 2023, 12, 1660. https://doi.org/10.3390/electronics12071660
