1. Introduction

High-Efficiency Video Coding (H265/HEVC) is the next generation of Advanced Video Coding (H.264/AVC) compression technology, aimed at improving compression efficiency. Compared to earlier standards, H265/HEVC saves twice as much bandwidth. The desire for streaming digital video or video storing services is unavoidable as the Internet grows. H265/HEVC helps boost speed and capacity with its highly efficient coding efficiency. Moreover, H265/HEVC also outperforms high-resolution videos (4K, 8K).

Most video coding standards use intra-prediction to eliminate spatial redundancies by generating a predicted block based on nearby pixels. In H265/HEVC, the intra-prediction unit has been updated to achieve efficient coding; intra-prediction now has 35 prediction modes, nine more than H.264/AVC currently provides, and supports prediction unit (PU) sizes ranging from to (sample × sample) in comparison to the largest PU size of in H.264/AVC. H265/HEVC offers more complex intra-prediction, which has a substantial impact on coding efficiency because of the enormous computational complexity.

Intra-prediction consumes significant processing time, motivating researchers to employ various strategies to reduce algorithm complexity. Nevertheless, the output FPS of software solutions cannot meet the daily needs of the majority of consumers. Currently, streaming high-quality video in real time is common, yet, encoding these videos at high FPS is difficult with the present software solutions. As a result, we can bypass software constraints by employing hardware acceleration.

Many researchers investigated implementing the intra-prediction algorithm on hardware to achieve higher output framerates and more energy efficiency than the software version. Using the data reuse strategy for decreasing the number of computations, the proposed [

1] solution helps to reduce 80% of the computation time to reach a framerate of 30 FPS for the FHD resolution. Nevertheless, it only supports PU sizes of

and

. Therefore, the authors deploy a completely pipelined solution constructed on the Field Programmable Gate Arrays (FPGA) in the architecture [

2], which supports all intra-prediction modes with all PU sizes to reach the framerate at 24 FPS for the 4K resolution.

Hasan Azgin and their colleagues proposed a Computation and Energy Reduction Method for HEVC Intra Prediction [

3] in 2017. It was demonstrated that using 24.63% less energy than the original H.265/HEVC intra-prediction equation, 40 FPS can be produced for the FHD resolution at 166MHz. In 2018, [

4] developed an effective FPGA implementation for the approximate intra-prediction algorithm using digital signal processing (DSP) blocks instead of adders and shifters. This design can handle 55 FPS for the FHD resolution while consuming less energy. These proposals aim to reduce computational complexity while increasing energy efficiency. However, with the continued demand for high-quality streaming video, the ability to read-time encode at the 4K resolution and high FPS is necessary.

The contributions of this paper are as follows:

- (1)

We propose a completely pipelined architecture for the intra-prediction module. By implementing DC, Planer, and Angular processing units in parallel, we effectively minimize execution time to increase the throughput. The predicted samples are generated for all PU sizes in one single clock cycle.

- (2)

A flexible partitioning of cell predictions was introduced for the angular prediction modes, which enhances parallelism up to 16 PUs with sizes of or each time. Our solutions provide a processing speed of 210 FPS for the FHD resolution and 52 FPS for the 4K resolution and support all prediction modes and PU sizes.

The rest of the paper is organized as follows.

Section 2 explains the H.265/HEVC coding structure and intra-prediction algorithm.

Section 3 explains the proposed hardware architecture for intra-prediction. Next,

Section 4 and

Section 5 show the functional verification and synthesis results of the proposed design. Finally,

Section 6 states our conclusions.

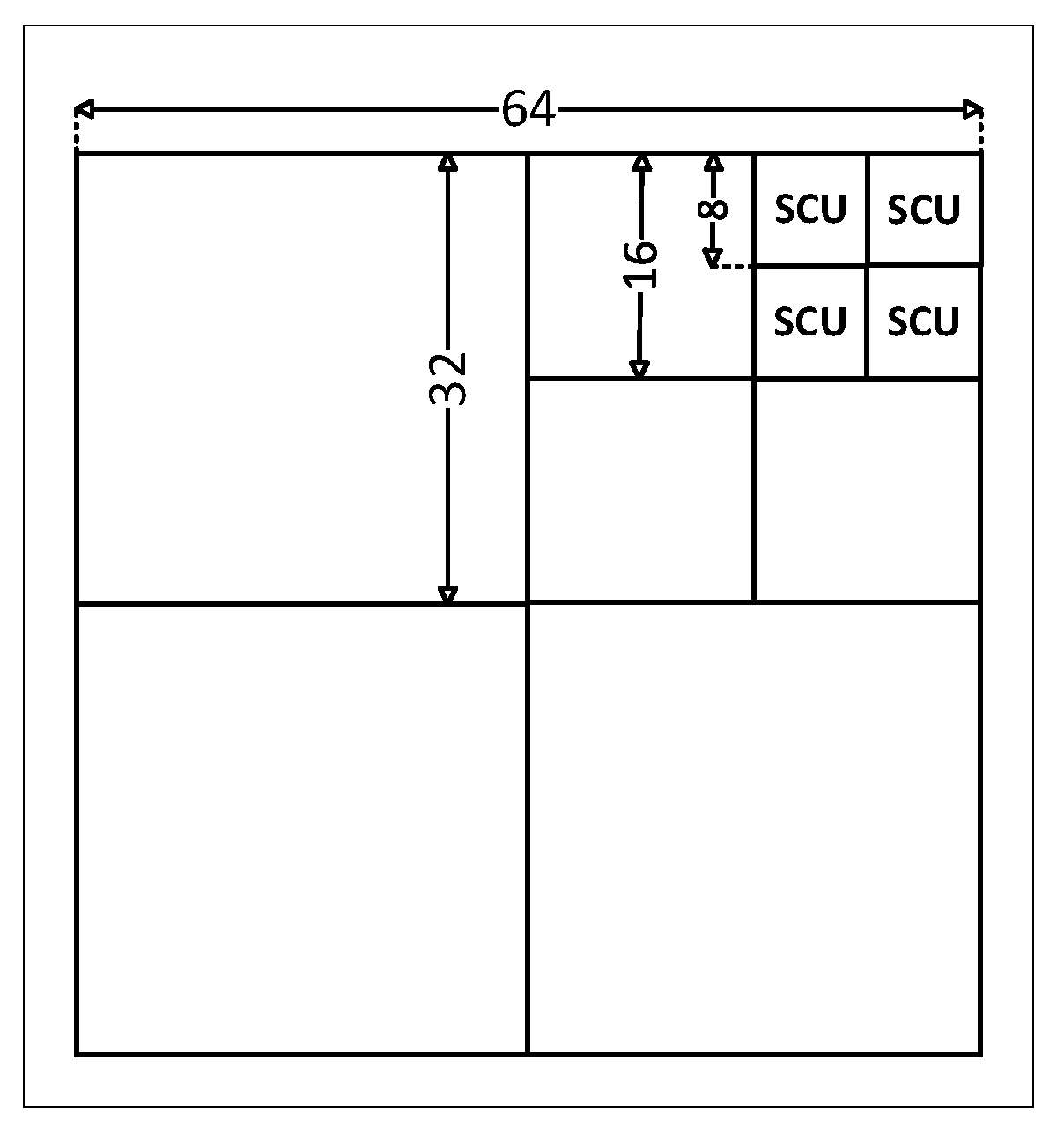

3. Hardware Implementation of H.265/HEVC Intra-Prediction

The proposed hardware architecture supports all intra-prediction modes, and all PU block sizes (

,

,

,

). According to

Figure 5, the input reference samples are conditionally filtered and divided into three main datapaths for DC, planar, and angular prediction modules, as shown in

Table 1, and the output predicted samples are fed to the SAD (Sum of Absolute Difference) module to calculate the cost for each prediction mode.

3.1. Reference Sample Filtering

In the Reference Samples Filtering stage, a three-tap filter is applied according to Equations (

1)–(

3);

Figure 6 depicts the hardware implementation of the three-tap filter. It has a pipeline behavior and requires three adders and two shifters.

We used a tree-tap filtering cell for each reference sample and a multiplexer to select the output-filtered references based on the size of the prediction block to filter all of the input reference samples. The configuration of three-tap filtering cells is depicted in

Figure 7. Depending on the size of the prediction block, a varied number of filtering cells is triggered to generate the output. All filtering cells are activated in the

block; however, only cells 0 to 8 are activated in the

block. It enables the Reference Samples Filter module to process reference samples of any size without duplicating hardware resources.

3.2. Angular Prediction

The angular prediction Equations (

8) and (

9) require reference samples and

i,

f values for each prediction; before delivering the predicted samples, they can be flipped or post-filtered if necessary.

Figure 8 depicts our proposed hardware architecture for the Angular prediction module.

The top and left references are chosen in our architecture to generate main and side reference samples for the next stage. The “REFERENCE SELECT” module processes the selection using multiplexers, as shown in

Figure 9.

The “NEGATIVE REFERENCE EXTEND” module processes the main and side references to generate the reference with the required negative index reference samples for prediction equations later. We have already calculated i and f for each mode and kept these values in memory as a look-up table to reduce the effort of finding i and f.

To implement Equations (

8) and (

9) without employing a multiplier, we use the PEA [

2] (Processing Element for Angular) concept. Equation (

9) can be rearranged as follows:

As shown in

Figure 10, a group of five 2to1 multiplexers and six adders is employed, and a three-stage pipeline is used to reduce propagation time and increase throughput.

Another technique to implement Equations (

8) and (

9) in FPGA is to use direct multiplication operations offered by the DSP block, as shown in

Figure 11. The DSP blocks are particularly efficient regarding power consumption and may be customized. Moreover, they work well with binary multipliers and accumulators. Therefore, Equation (

9) should be modified using the DSP as follows:

Then, Equation (

26) matches to the custom implementation

of DSP48 slice, where:

The biggest PU size

needs 1024 PEA units to predict 1024 samples for one mode in the angular prediction architecture. The PU size

needs 64 PEA units to predict 64 samples for one mode. Thus, we split those 1024 PEA units into 16 groups (64 PEA units/group), then each group is used to predict 64 samples for one mode. Therefore, 16 modes can be predicted in parallel. The parallelism for PU size

is achieved in the same manner. Using flexible PEAs, we can obtain a maximum throughput of 1024 predicted samples per clock cycle. The predicted block will be flipped at the “SAMPLES FLIP” module in the case of horizontal modes. The flip process is depicted in

Figure 12.

For modes 10 and 26, if the control signal

, then post-processing is required. The post-processing process applies Equations (

14) and (

15) to filter discontinuities in the predicted block boundary.

3.3. Planar Prediction

Module Planar prediction in Intra Prediction helps solve the image’s areas with countering and blockiness inside a PU block. Similar to Angular Prediction, we also transfer multiplications in Equations (

17) and (

18) into the adders and shifters module to reduce the complexity of multiplications. For example, in the case of PU size is

, with

, Equations (

17) and (

18) are as follows:

In the case of

and

, then the formula

is:

Two multiplications in (

36) and (

37) can be transformed into the shifter and adder modules according to the following formula:

Compared to employing multipliers, this transformation uses a set of shifters and adders instead of multipliers, as shown in

Figure 13, which reduces the resources required to calculate the values of

and

.

The module’s input is determined by whether the calculated value is or . If is the calculating value, the input value will be , left reference samples, value, and reversed. These two numbers will be calculated in parallel simultaneously to increase throughput.

Figure 13 depicts a module with a two-stage pipeline to calculate in the situation of a PU size

, in which the values of

and

shift from 0 (0 0 0) to 7 (1 1 1). As a result, it requires three bits to cover all cases, with these bits simultaneously acting as the input for three corresponding multiplexers. If

, the selected values will be identical to the value of the reference sample. If

, the input values will be either Top_Right (

) or Bottom_Left (

) depending on which value needs to be calculated. Then, these values are combined to produce the calculation-required value of

and

[

2].

The number of multiplexers and shifters used to decode the relevant bits varies depending on the PU size. A maximum of five multiplexer sets for PU and a minimum of 2 multiplexer sets for PU .

These modules are used as the sub-modules to operate Equation (

16), thus creating predicted samples for 8 × 8 size module, as shown in

Figure 14. The same method is used for

,

, and

PU sizes.

The Planar Prediction module’s output is the predicted sample values at the location that has to be predicted after using the Planar Prediction algorithm. Then, depending on the processing PU size, these values are chosen by employing a multiplexer. The selection signal input of this multiplexer is controlled by the value of

, as shown in

Figure 15.

3.4. DC Prediction

The architecture of the module DC prediction is relatively straightforward because the outcome of DC Prediction is simply a calculated dcVal value based on the sum of left and top reference samples, which are then attached to every single output sample location inside the PU block.

In this module, the values of the top and left reference samples are sequentially passed by the add module to be added. In the case of PU size , we employ a pipelined adder tree with 63 adder stages for adding at this step. It takes six clock cycles to add all of the left and top references.

The values of

,

, and

in DC prediction must pass through post-processing procedures, as indicated in

Section 3.4. Hence, after adding all of the left and top samples, the dcVal output is attached to a series of three parallel filter modules to determine the output value.

Figure 16 depicts a complete DC prediction module with the filters that have been added.

In special cases with predicted samples at positions , , and , when the dcVal value is ready, it is put inside three-taps () or two-taps filter module ( and ), a multiplexer set is added to these values with control signal is a variable. In the case , the output value equals after processing through filters. Vice versa, if , the final value is . The first output requires seven clock cycles to complete all pipeline registers, and the next projected value is ready at each cycle.

4. Functional Verification

To validate our solution, we create a Universal Verification Methodology (UVM) environment, as illustrated in

Figure 17. The Angular, DC, and Planar sequences are randomized and delivered to DUT via a virtual interface. The DUT output is gathered and monitored before being compared to the output of the H.265/HEVC intra-prediction software reference model and updated in the coverage report.

An open-source H.265/HEVC encoder [

11] is used as a reference model for the intra-prediction module in this research. The model was created in the C programming language and supports all intra-prediction modes and PU sizes. By employing the SystemVerilog Direct Programming Interface [

12] (DPI), which allows SystemVerilog to connect directly with functions written in C, we can eliminate errors in constructing our own software reference model because we reuse existing C functions from [

11].

Questasim 10.7c is used to operate our test environment. A test case is considered “PASSED” when the same input reference sample is used, and the prediction output of the DUT with the software model is the same. We set coverage checkpoints for all prediction modes to ensure that our design was covered during the simulation phase. The simulation results indicate that our design function was successful.

5. Synthesis Results

The proposed hardware architecture is described in SystemVerilog, with a synthesis target of Xilinx Virtex-7 (xc7vx485tffg1761-3) and a speed grade = −2.

The latencies of the PU modules taken to perform in a CU module are described in

Table 3. The parameters Latency of load reference samples, Latency of reconstruction loop, Latency of sample prediction, and Number PUs in 1 CU are labeled by (1), (2), (3), and (4). For the PU

, PU 8 × 8, PU

, and PU

, the latencies of loading reference samples are 1, 1, 2, and 4, respectively. As shown in

Figure 2, the latencies of the reconstruction loop of those PUs are the delay from the Sample Prediction unit, Subtraction, Transform, Quantization, Inverse Quantization, Inverse transform, and Summation modules. For the optimal pipelined design, the delay of the Subtraction, Transform, Quantization, Inverse Quantization, Inverse transform, and Summation modules can be estimated by 1, 2, 2, 2, 2, and 1 cycles, respectively. The latencies of the Sample Prediction unit are 3, 3, 36, and 36 clocks for the PU

, PU 8 × 8, PU

, and PU

, respectively. Therefore, the latencies of the reconstruction loop of those PUs are 13, 13, 46, and 46, respectively. For the worst-case prediction for one CU module, 1 PU

, 4 PUs

, 16 PUs 8 × 8, and 64 PUs

are performed. Then, the total latency to finish one CU module is 546 cycles. Therefore, as shown in

Table 4, if we do the intra-prediction for the FHD frame, there are 2020 CU modules to be executed. Then, the frame rate is 210 FPS. If we do the intra-prediction for the 4K frame, there are 8100 CU modules to be executed. Then, the frame rate is 52 FPS.

The synthesis comparison results are shown in

Table 5 and

Table 6. The slide LUTS utilization costs 73%, and the slice registers utilization costs 41% of the FPGA resources. The memory utilization of our design is high because all reconstructed samples are stored in register buffers, including the original buffer, reference buffer, and control buffer, as shown in

Figure 5.

Compared to earlier works, the proposed design [

13] accelerates the throughput of the most frequently used PU (PU

). This approach provides a frame rate of 4.38 FPS for the 4K resolution. To increase the throughput up to 7.5 FPS, the authors of [

14,

15] applied the pipelined TU coding and the paralleled intra-prediction architectures. By applying a fully parallel manner for the mode prediction, transformation, quantization, inverse quantization, inverse transformation, rate estimation, and reconstruction processes, [

16,

17] provided a frame rate of 11.25 FPS. To improve both throughput and hardware resources, ref. [

18] proposed a four-stage pipeline architecture. This approach provides a frame rate of 15 FPS with a high bit rate/area (47 Kbps/LUT). To reach real-time 4K video processing of 24 FPS, [

2] simplified the equations of all calculations for reference sample preparations and applied parallel computing. The authors of [

19,

22] investigated parallelization of Kvazaar-based intra-encoder on CPU and FPGA platforms to obtain the frame rate of 60 FPS for the 4K resolution with a bit rate/area of about 20 Kbps/LUT. The authors of [

20] studied the impact of a high-level synthesis (HLS) design method on the HEVC intra-prediction block decoder. Although this work provides a high bit rate/area (45.82 Kbps/LUT), the frame rate is only 2 FPS. To increase the frame rate of 15 FPS, [

21] proposed the computationally scalable algorithm and the architecture design for the H.265/HEVC intra-encoder. This design provides a bit rate/area of 32.04 Kbps/LUT. The designs in [

23,

24] provide a high frame rate of 30 FPS for the 4K resolution. However, the hardware resources of these works are not mentioned. To extremely reduce the hardware resources, some works applied approximation algorithms to simplify the designs [

3,

4,

25,

26]. The frame rates of those designs are 10, 13.75, 30, and 24 FPS, respectively. Although these approaches helped to increase the bit rate/area performance extremely, their peak signal-to-noise ratios (PSNRs) are affected. In addition to implementing on FPGA platforms, some works are designed and implemented on the ASIC platform [

27,

28] to provide the frame rate of 30 FPS for the 8K resolution. As shown in

Table 5 and

Table 6, our work provides a frame rate of 52 FPS with a high bit rate/area (48 Kbps/LUT). This throughput is high enough for the real-time processing of the 4K video frame.