Grid-Based Low Computation Image Processing Algorithm of Maritime Object Detection for Navigation Aids

Abstract

1. Introduction

2. Literature Review

2.1. Three Types of Object Detection

2.2. Comparison of GLC and Literature Review

3. Grid-Based Low Computation Algorithm

3.1. Pixel Clustering and Greyscale

3.2. Horizontal Line Detection

3.3. Maritime Object Detection

- GLC is able to detect a vessel when it floats on the horizon line: this is a practical assumption since maritime vessels typically move much more slowly than vehicles on land;

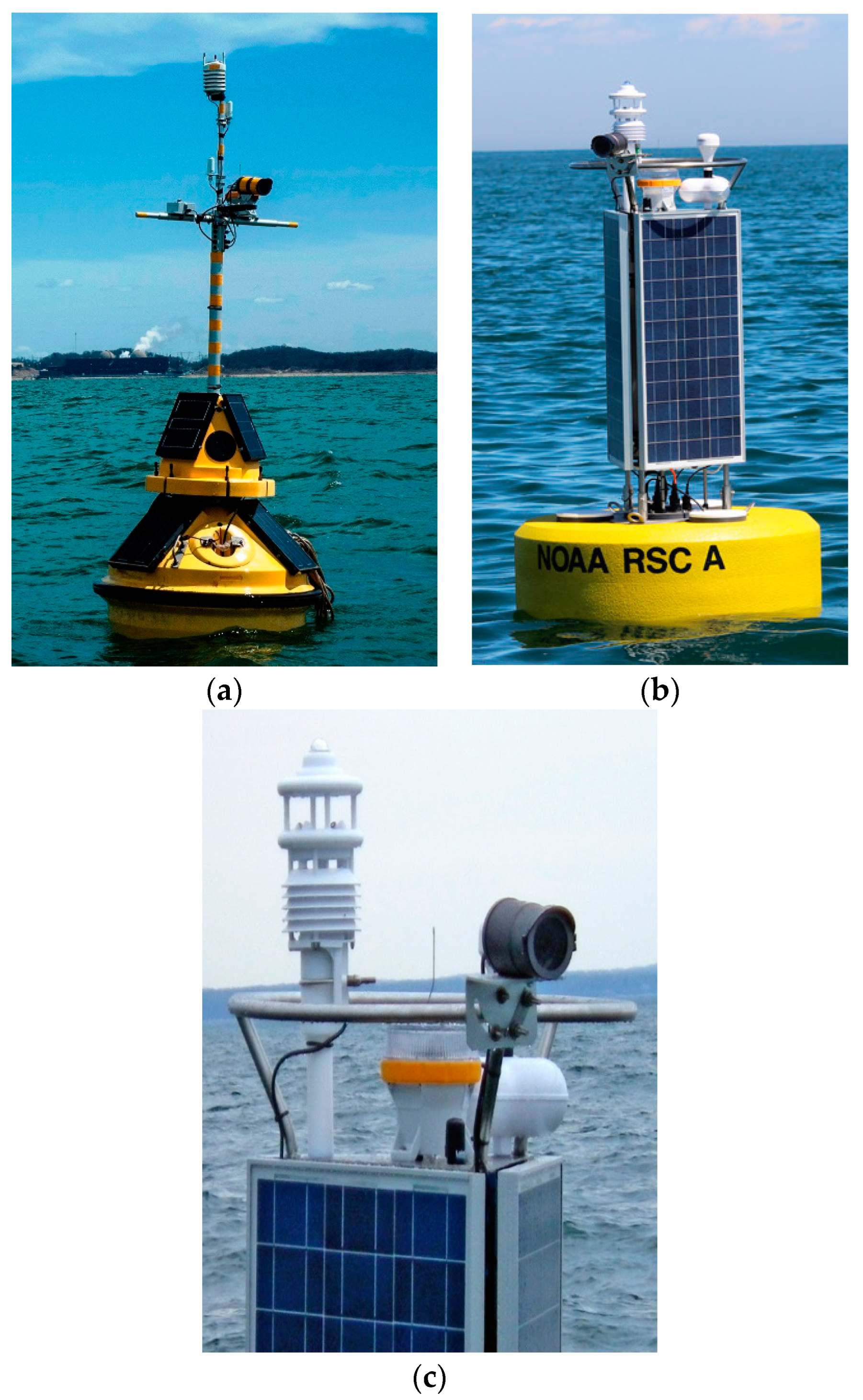

- Input images from mounted cameras must contain a horizon line: the target application of the GLC algorithm is accident prevention systems for navigation aids, which are assumed to be located at sea and surrounded by the horizon in all directions (360 degrees).
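Under these assumptions the horizon only has to be localized to a band of grid cells, not to an exact line. The body of Section 3.2 is not reproduced here, so the following is a minimal Python sketch of that grid-level idea under our own assumptions, not the authors' GLC procedure: the greyscale frame is split into `n × n` cells, each cell is scored by its pixel standard deviation (a hypothetical stand-in for whatever measure GLC actually uses), and the grid row with the highest average spread is taken as the row containing the sky/sea boundary. The function name `horizon_grid_row` and the default `n = 25` are illustrative.

```python
from statistics import pstdev


def horizon_grid_row(gray, n=25):
    """Return the index of the grid row most likely to contain the horizon.

    Hypothetical sketch, not GLC itself: `gray` is a greyscale frame given as
    a list of equal-length rows of pixel intensities. A row of cells that
    straddles the sky/sea boundary mixes bright and dark pixels, so its cells
    have a large intensity spread; rows of pure sky or pure sea do not.
    """
    h, w = len(gray), len(gray[0])
    if h < n or w < n:
        raise ValueError("image must be at least n x n pixels")

    row_scores = []
    for gi in range(n):
        r0, r1 = gi * h // n, (gi + 1) * h // n  # pixel rows of this grid row
        spreads = []
        for gj in range(n):
            c0, c1 = gj * w // n, (gj + 1) * w // n  # pixel columns of this cell
            pixels = [gray[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            spreads.append(pstdev(pixels))  # population std of the cell
        row_scores.append(sum(spreads) / n)  # average spread across the row

    # First grid row with the highest average cell spread.
    return max(range(n), key=lambda i: row_scores[i])


# Synthetic frame: bright sky in pixel rows 0-44, dark sea in rows 45-99.
frame = [[200] * 100 for _ in range(45)] + [[50] * 100 for _ in range(55)]
print(horizon_grid_row(frame, n=10))  # -> 4 (grid row 4 covers pixel rows 40-49)
```

Scoring whole cells rather than individual edge pixels is what makes the result a region of interest rather than an exact line; this toy score ignores waves, glare, and tilted horizons, which a real detector would have to handle.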

3.4. Overall Process Description

4. Experiments

4.1. Experiment Environments

4.2. Experiment Result Definitions

- Figure 11a: there is an object in the image, and GLC detects the horizon within a grid in which the actual horizon exists. Because the result of Equation (3) is larger than 20 in the grid where the object exists, GLC decides that there is an object in the image. This paper defines this case as ‘horizon decision success’ and ‘object decision success’.

- Figure 11b: there is an object in the image, and GLC detects the horizon within the grid in which the object exists. This error occasionally occurs with GLC, as well as with existing algorithms, when the object is large or its hue contrast is high. Even in this case, however, GLC can still detect the object successfully. Because the aim of GLC is to locate a region of interest (ROI) rather than to find the exact coordinates of a line, this paper defines this case as ‘horizon decision success’. GLC detects the object where it exists, so the object decision for this example image is ‘object decision success’.

- Figure 11c: there is no object in the image, and the result of Equation (3) is less than 20 in every grid. This paper therefore defines this case as ‘horizon decision success’ and ‘object decision success’.

- Figure 11d: there is no object in the image, and GLC successfully detects the horizon. However, GLC decides that there is an object because reflections on the sea surface create noise. This wrong decision would misinform the AI algorithm. This paper defines this case as ‘horizon decision success’ and ‘object decision fail’.
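The four cases above, together with the horizon and object decision criteria used in the experiments, amount to a small scoring rule. The Python sketch below encodes that rule as we read it; it is not code from the paper. Equation (3) is not reproduced in this excerpt, so its per-grid results are simply passed in as a dictionary `eq3_scores`. The `(row, col)` cell representation, the function name `score_frame`, and the reading of "one object where the object exists" as "every grid above the threshold lies on the object" are all assumptions.

```python
OBJECT_THRESHOLD = 20  # a grid counts as containing an object if Eq. (3) > 20


def score_frame(has_object, detected_horizon_cell, horizon_cells,
                object_cells, eq3_scores):
    """Return (horizon_decision, object_decision) as 'success' or 'fail'.

    has_object            -- ground truth: does the image contain an object?
    detected_horizon_cell -- (row, col) grid cell where GLC placed the
                             horizon, or None if no horizon was found
    horizon_cells         -- set of grid cells the true horizon passes through
    object_cells          -- set of grid cells the true object occupies
    eq3_scores            -- dict mapping grid cell -> Equation (3) result
    """
    # Horizon decision: the detected line must fall in a grid containing the
    # actual horizon; for images with an object, a grid containing the object
    # also counts (Figure 11b).
    if detected_horizon_cell is None:
        horizon_ok = False
    elif detected_horizon_cell in horizon_cells:
        horizon_ok = True
    else:
        horizon_ok = has_object and detected_horizon_cell in object_cells

    # Grids that would be flagged as containing an object.
    flagged = {cell for cell, value in eq3_scores.items()
               if value > OBJECT_THRESHOLD}

    if has_object:
        # Success only if something is flagged and all of it lies on the object.
        object_ok = bool(flagged) and flagged <= set(object_cells)
    else:
        # Success only if no grid crosses the threshold (Figure 11c vs. 11d).
        object_ok = not flagged

    return ("success" if horizon_ok else "fail",
            "success" if object_ok else "fail")


horizon = {(5, c) for c in range(10)}
# Figure 11d-style case: no object, correct horizon, glare pushes one grid over 20.
print(score_frame(False, (5, 0), horizon, set(), {(6, 2): 25.0}))
# -> ('success', 'fail')
```

A value exactly equal to 20 is treated here as "no object"; the text only specifies "larger than 20" and "less than 20", so that boundary choice is also an assumption.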

4.3. GLC Performance Evaluation

4.4. Horizon Detection Performance Comparison

4.5. Object Detection Performance Comparison

5. Conclusions

- This paper proposed a new image processing approach called GLC aiming for extremely low energy consumption for maritime object detection;

- The grid-based approach was optimized for maritime object detection since grids avoid errors caused by subtle changes in the moving background;

- This paper compared GLC with existing, well-known image processing algorithms and demonstrated that GLC significantly reduces image processing time and can also increase the detection rate;

- Using the GLC algorithm, navigation aids can extend their functions to long-term accident prevention systems using cameras mounted on them.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Images | Horizon Decision | Object Decision |
|---|---|---|
| 65 images having an object | Success: GLC decides a line within a grid where a horizon exists, or within a grid where an object exists; Fail: any other cases | Success: GLC decides one object where the object exists; Fail: any other cases |
| 35 images having no object | Success: GLC detects a line within a grid where a horizon exists; Fail: any other cases | Success: GLC decides no object; Fail: any other cases |

| Number of Grids | Horizon Detection Processing Time (per Frame) |
|---|---|
| 5 × 5 grids | 8.5 ms |
| 10 × 10 grids | 9.0 ms |
| 20 × 20 grids | 9.3 ms |
| 25 × 25 grids | 9.4 ms |
| 50 × 50 grids | 9.5 ms |
| 100 × 100 grids | 11 ms |
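To put the per-frame times in the table above in context, they can be converted into a maximum frame rate (1000 ms divided by the time per frame) and set against the number of grid cells processed. This short Python calculation uses only the numbers from the table; `frames_per_second` is an illustrative helper, and the resulting frame rate ignores any work done outside horizon detection.

```python
# Horizon-detection time per frame (ms), keyed by grid size n (n x n grids).
TIMES_MS = {5: 8.5, 10: 9.0, 20: 9.3, 25: 9.4, 50: 9.5, 100: 11.0}


def frames_per_second(ms_per_frame):
    """Upper bound on frame rate if horizon detection were the only work."""
    return 1000.0 / ms_per_frame


for n, ms in TIMES_MS.items():
    print(f"{n:>3} x {n:<3} grids: {n * n:>5} cells, "
          f"{ms:>4} ms/frame, about {frames_per_second(ms):.0f} frames/s")
```

Going from 5 × 5 to 100 × 100 grids multiplies the number of cells by 400, while the measured time grows by only about 29% (8.5 ms to 11 ms, roughly 118 to 91 frames per second).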

| Case | Reason for Horizon Detection Failure | GLC (25 × 25 Grids) | Canny + Hough | Canny + Hough + Otsu |
|---|---|---|---|---|
| With an object | R1: Horizon is detected within a grid where the object exists. | 23 | 2 | 2 |
| With an object | R2: Horizon is detected within a grid where neither the object nor the horizon exists. | 2 | 6 | 4 |
| With an object | R3: No horizon is detected. | 0 | 41 | 36 |
| With no object | R2: Horizon is detected within a grid where the horizon does not exist. | 8 | 0 | 3 |
| With no object | R3: No horizon is detected. | 0 | 22 | 14 |

| Algorithm | Canny + Hough | Canny + Hough + Otsu | GLC |
|---|---|---|---|
| Time | 51 ms | 47 ms | 8.5–11 ms |

| Result | Method | 65 Images with an Object | 35 Images with No Object |
|---|---|---|---|
| Horizon detection success | GLC | 63 images | 28 images |
| Horizon detection success | CE + HT + Otsu | 25 images | 18 images |
| Object detection success | GLC | 57 images | 27 images |
| Object detection success | CCL | 18 images | 11 images |

CE: Canny edge detection; HT: Hough transform; CCL: connected component labeling.
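The counts in the table above are easier to compare as rates. The following Python sketch divides each count by the size of its image set (65 images with an object, 35 without) and also pools the two sets into an overall rate over all 100 images; the pooled figure is our own summary, not one reported in the table.

```python
N_WITH_OBJECT, N_WITHOUT_OBJECT = 65, 35

# (task, method) -> (successes on images with an object, on images without)
COUNTS = {
    ("horizon", "GLC"): (63, 28),
    ("horizon", "CE + HT + Otsu"): (25, 18),
    ("object", "GLC"): (57, 27),
    ("object", "CCL"): (18, 11),
}


def success_rates(with_obj, without_obj):
    """Return (rate with object, rate without object, pooled rate)."""
    total = N_WITH_OBJECT + N_WITHOUT_OBJECT
    return (with_obj / N_WITH_OBJECT,
            without_obj / N_WITHOUT_OBJECT,
            (with_obj + without_obj) / total)


for (task, method), (a, b) in COUNTS.items():
    r1, r2, pooled = success_rates(a, b)
    print(f"{task:<8} {method:<15} {r1:6.1%} {r2:6.1%} pooled {pooled:6.1%}")
```

For example, GLC's pooled horizon detection rate is 91/100 = 91% against 43% for CE + HT + Otsu, and its pooled object detection rate is 84% against 29% for CCL.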

| Algorithm | Canny + Hough + Otsu + CCL | GLC (25 × 25 Grids) |
|---|---|---|
| Time | 71 ms (47 ms line detection + 24 ms object detection) | 9 ms (line and object detection) |
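The timing tables can likewise be summarized as speed-up factors, i.e. baseline time divided by GLC time. The helper below is a trivial illustration using the reported numbers; the horizon-only comparison gives a range because GLC's time depends on the grid count (8.5 ms to 11 ms).

```python
def speedup(baseline_ms, glc_ms):
    """How many times faster GLC is than a baseline, per frame."""
    return baseline_ms / glc_ms


# Line and object detection: Canny + Hough + Otsu + CCL vs. GLC (25 x 25 grids).
print(f"full pipeline: {speedup(71, 9):.1f}x")  # 71 ms vs. 9 ms -> 7.9x

# Horizon detection only: Canny + Hough + Otsu (47 ms) vs. GLC at 8.5-11 ms.
print(f"horizon only: {speedup(47, 11):.1f}x to {speedup(47, 8.5):.1f}x")
```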

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jeon, H.-S.; Park, S.-H.; Im, T.-H. Grid-Based Low Computation Image Processing Algorithm of Maritime Object Detection for Navigation Aids. Electronics 2023, 12, 2002. https://doi.org/10.3390/electronics12092002