Evaluation of Hand Washing Procedure Using Vision-Based Frame Level and Spatio-Temporal Level Data Models

Abstract

1. Introduction

The main contributions of this study are as follows:

- A robust method of acquiring and utilizing RGB and depth data in real time,

- Combination of different vision data models acquired from RGB and depth images for classification,

- An ideal camera position for hand-washing quality measurement,

- A custom dataset containing different vision-based data models,

- Comparison of 12 different neural network models making use of all the available data.
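The point-cloud (PCD) data models used with PointNet in the results are derived from the depth stream. The paper's exact conversion is not reproduced here. The following is only a minimal Python sketch of one standard approach, assuming an ideal pinhole camera, depth values in millimetres, and hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (placeholders, not the calibrated Kinect-2.0 values). The function name `depth_to_point_cloud` and the `max_depth_mm` near-range cut-off are illustrative assumptions as well.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    depth_mm: Sequence[Sequence[int]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_depth_mm: int = 1500,  # hypothetical cut-off, not from the paper
) -> List[Point]:
    """Back-project a depth image (millimetres) into 3D points (metres).

    Pinhole model (an assumption here): X = (u - cx) * Z / fx,
    Y = (v - cy) * Z / fy. Pixels with no reading (0) or farther than
    max_depth_mm are skipped, which crudely keeps only objects close to
    the camera, such as the hands.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_mm):          # v: pixel row index
        for u, d in enumerate(row):             # u: pixel column index
            if d <= 0 or d > max_depth_mm:
                continue
            z = d / 1000.0                      # millimetres -> metres
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

With a real sensor, the intrinsics would come from calibration or the SDK rather than being hard-coded, and the simple depth cut-off would be replaced by a proper hand-detection step.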

2. Related Works

2.1. Vision-Based Works

2.2. Sensor-Based Works

3. Materials and Methods

3.1. Camera and Positioning

3.2. Data Acquisition

3.3. Hand Detection

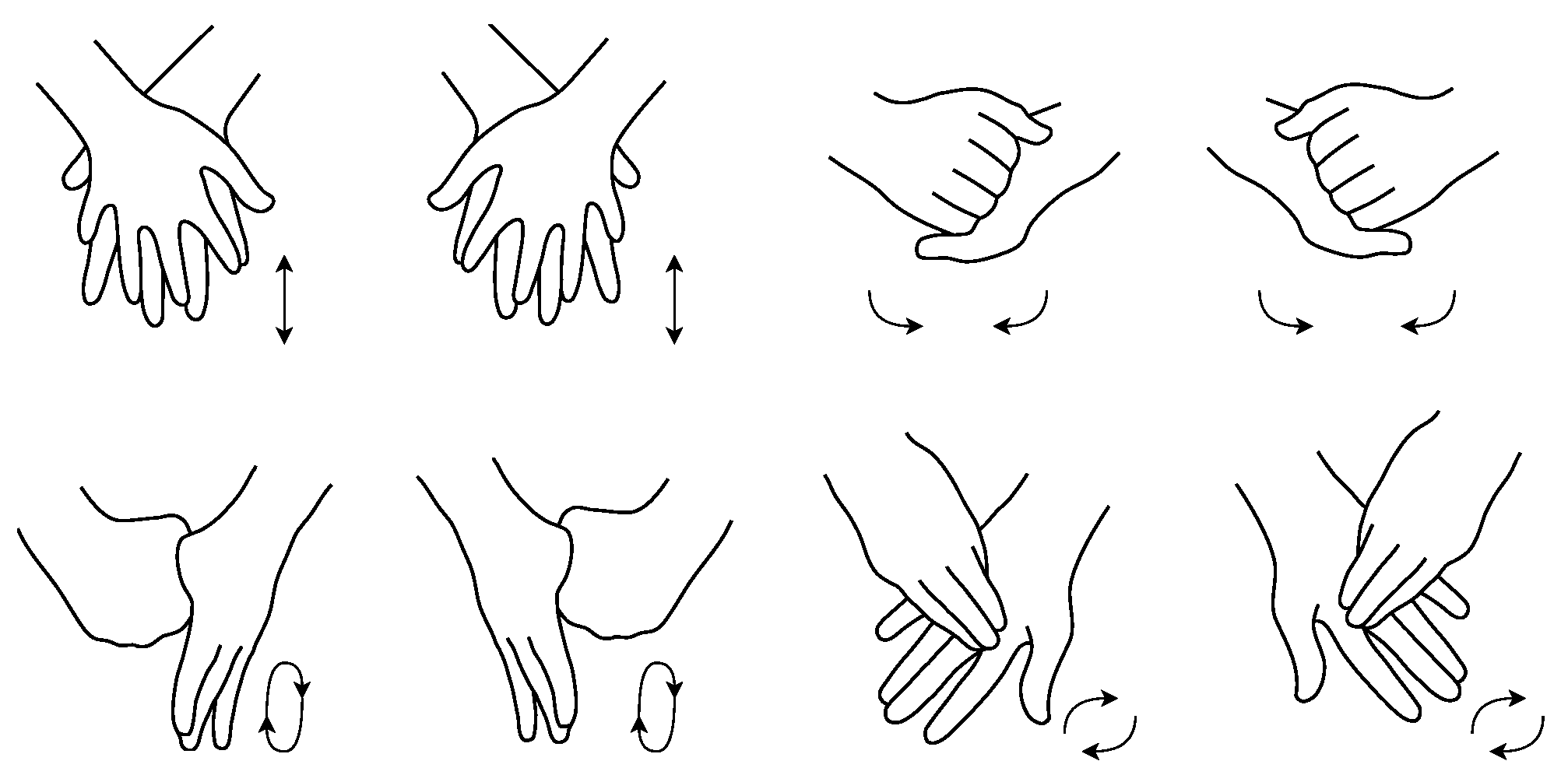

3.4. Gesture Classification

3.4.1. Frame Level Classification

3.4.2. Spatio-Temporal Level Classification

4. Results

5. Discussion

- It is possible to classify the gestures of the hand-washing procedure with high accuracy when both hands are captured in a single frame and treated as a single object rather than as separate objects.

- We demonstrated that the proposed use of projection images and PGMs is effective for hand-washing evaluation (average accuracies of up to 97.87% for projection images with VGG-16-LSTM and 87.25% for PGMs with PointNet).

- The suggested camera position enables efficient data acquisition and robust hand tracking.
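Projection images are 2D renderings of 3D hand data that standard image CNNs can consume. The paper's exact projection procedure is not given in this section, so the following is a minimal Python sketch under stated assumptions: orthographic projection of a point cloud onto the three axis-aligned planes (in the spirit of the view-projection idea of Liang et al. in the references), per-axis min-max normalisation, a hypothetical image `size`, and a binary occupancy encoding. The function name `project_point_cloud` is illustrative.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Image = List[List[int]]


def project_point_cloud(points: Sequence[Point], size: int = 64) -> Dict[str, Image]:
    """Orthographically project a point cloud onto the XY, XZ and YZ planes.

    Each view is a size x size binary occupancy image: a pixel is 1 if at
    least one point falls into it. Coordinates are min-max normalised per
    axis so that the cloud fills the image.
    """
    if not points:
        raise ValueError("empty point cloud")
    mins = [min(p[i] for p in points) for i in range(3)]
    maxs = [max(p[i] for p in points) for i in range(3)]

    def to_pixel(value: float, axis: int) -> int:
        span = maxs[axis] - mins[axis]
        if span == 0:
            return 0
        # Map [min, max] of this axis onto pixel indices [0, size - 1].
        return round((value - mins[axis]) / span * (size - 1))

    # (column axis, row axis) for each view.
    views = {"xy": (0, 1), "xz": (0, 2), "yz": (1, 2)}
    images: Dict[str, Image] = {}
    for name, (a, b) in views.items():
        img = [[0] * size for _ in range(size)]
        for p in points:
            img[to_pixel(p[b], b)][to_pixel(p[a], a)] = 1
        images[name] = img
    return images
```

A depth-valued encoding (storing the distance along the dropped axis instead of 1) is a common alternative to binary occupancy and keeps more shape information.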

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhou, Q.; Gao, Y.; Wang, X.; Liu, R.; Du, P.; Wang, X.; Zhang, X.; Lu, S.; Wang, Z.; Shi, Q.; et al. Nosocomial infections among patients with COVID-19, SARS and MERS: A rapid review and meta-analysis. Ann. Transl. Med. 2020, 8, 629.

- Wiener-Well, Y.; Galuty, M.; Rudensky, B.; Schlesinger, Y.; Attias, D.; Yinnon, A.M. Nursing and physician attire as possible source of nosocomial infections. Am. J. Infect. Control 2011, 39, 555–559.

- WHO’s Hand Washing Guidelines. Available online: https://www.who.int/multi-media/details/how-to-handwash-and-handrub (accessed on 15 February 2023).

- Llorca, D.F.; Parra, I.; Sotelo, M.Á.; Lacey, G. A vision-based system for automatic hand washing quality assessment. Mach. Vis. Appl. 2011, 22, 219–234.

- Xia, B.; Dahyot, R.; Ruttle, J.; Caulfield, D.; Lacey, G. Hand hygiene poses recognition with rgb-d videos. In Proceedings of the 17th Irish Machine Vision and Image Processing Conference, Dublin, Ireland, 26–28 August 2015; pp. 43–50.

- Ivanovs, M.; Kadikis, R.; Lulla, M.; Rutkovskis, A.; Elsts, A. Automated quality assessment of hand washing using deep learning. arXiv 2020, arXiv:2011.11383.

- Prakasa, E.; Sugiarto, B. Video Analysis on Handwashing Movement for the Completeness Evaluation. In Proceedings of the 2020 International Conference on Radar, Antenna, Microwave, Electronics, and Telecommunications (ICRAMET), Serpong, Indonesia, 18–20 November 2020; pp. 296–301.

- Vo, H.Q.; Do, T.; Pham, V.C.; Nguyen, D.; Duong, A.T.; Tran, Q.D. Fine-grained hand gesture recognition in multi-viewpoint hand hygiene. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 1443–1448.

- Zhong, C.; Reibman, A.R.; Mina, H.A.; Deering, A.J. Designing a Computer-Vision Application: A Case Study for Hand-Hygiene Assessment in an Open-Room Environment. J. Imaging 2021, 7, 170.

- Xie, T.; Tian, J.; Ma, L. A vision-based hand hygiene monitoring approach using self-attention convolutional neural network. Biomed. Signal Process. Control 2022, 76, 103651.

- Mondol, M.A.S.; Stankovic, J.A. Harmony: A hand wash monitoring and reminder system using smart watches. In Proceedings of the 12th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Coimbra, Portugal, 22–24 July 2015; pp. 11–20.

- Wang, C.; Sarsenbayeva, Z.; Chen, X.; Dingler, T.; Goncalves, J.; Kostakos, V. Accurate measurement of handwash quality using sensor armbands: Instrument validation study. JMIR mHealth uHealth 2020, 8, e17001.

- Samyoun, S.; Shubha, S.S.; Mondol, M.A.S.; Stankovic, J.A. iWash: A smartwatch handwashing quality assessment and reminder system with real-time feedback in the context of infectious disease. Smart Health 2021, 19, 100171.

- Santos-Gago, J.M.; Ramos-Merino, M.; Álvarez-Sabucedo, L.M. Identification of free and WHO-compliant handwashing moments using low cost wrist-worn wearables. IEEE Access 2021, 9, 133574–133593.

- Wang, F.; Wu, X.; Wang, X.; Chi, J.; Shi, J.; Huang, D. You Can Wash Better: Daily Handwashing Assessment with Smartwatches. arXiv 2021, arXiv:2112.06657.

- Zhang, Y.; Xue, T.; Liu, Z.; Chen, W.; Vanrumste, B. Detecting hand washing activity among activities of daily living and classification of WHO hand washing techniques using wearable devices and machine learning algorithms. Healthc. Technol. Lett. 2021, 8, 148.

- Microsoft’s Kinect-2.0 Camera. Available online: https://developer.microsoft.com/en-us/windows/kinect/ (accessed on 15 February 2023).

- Depth Cameras. Available online: https://rosindustrial.org/3d-camera-survey (accessed on 13 April 2023).

- Cheng, H.; Yang, L.; Liu, Z. Survey on 3D hand gesture recognition. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 1659–1673.

- Caputo, A.; Giachetti, A.; Soso, S.; Pintani, D.; D’Eusanio, A.; Pini, S.; Borghi, G.; Simoni, A.; Vezzani, R.; Cucchiara, R.; et al. SHREC 2021: Skeleton-based hand gesture recognition in the wild. Comput. Graph. 2021, 99, 201–211.

- Youtube Comparison of Depth Cameras. Available online: https://youtu.be/NrIgjK_PeQU (accessed on 15 February 2023).

- PyKinect2. Available online: https://github.com/Kinect/PyKinect2 (accessed on 15 February 2023).

- Oudah, M.; Al-Naji, A.; Chahl, J. Hand gesture recognition based on computer vision: A review of techniques. J. Imaging 2020, 6, 73.

- Jian, C.; Li, J. Real-time multi-trajectory matching for dynamic hand gesture recognition. IET Image Process. 2020, 14, 236–244.

- OpenCV Library. Available online: https://opencv.org/ (accessed on 15 February 2023).

- Open3d Library. Available online: http://www.open3d.org/ (accessed on 15 February 2023).

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- PointNet-Keras Implementation. Available online: https://keras.io/examples/vision/pointnet/ (accessed on 15 February 2023).

- Liang, C.; Song, Y.; Zhang, Y. Hand gesture recognition using view projection from point cloud. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4413–4417.

- Ge, L.; Liang, H.; Yuan, J.; Thalmann, D. Robust 3d hand pose estimation in single depth images: From single-view cnn to multi-view cnns. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3593–3601.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Liu, X.; Yan, M.; Bohg, J. Meteornet: Deep learning on dynamic 3d point cloud sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9246–9255.

- Chen, J.; Meng, J.; Wang, X.; Yuan, J. Dynamic graph cnn for event-camera based gesture recognition. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS), Virtual, 10–21 October 2020; pp. 1–5.

- Tensorflow-Keras Library. Available online: https://keras.io/about/ (accessed on 15 February 2023).

- Plaid-ML Compiler. Available online: https://github.com/plaidml/plaidml (accessed on 15 February 2023).

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

| Phase | Train/Val. (A) | Test (A) | Total (A) | Train/Val. (B) | Test (B) | Total (B) |
|---|---|---|---|---|---|---|
| phase1 | 17,589 | 3048 | 20,637 | 50,888 | 8995 | 59,883 |
| phase2 | 16,983 | 2848 | 19,831 | 50,575 | 8598 | 59,173 |
| phase3 | 16,546 | 2819 | 19,365 | 49,558 | 8554 | 58,112 |
| phase4 | 16,306 | 2783 | 19,089 | 49,208 | 8459 | 57,667 |
| phase5 | 16,358 | 2767 | 19,125 | 49,009 | 8374 | 57,383 |
| phase6 | 16,298 | 2808 | 19,106 | 48,974 | 8509 | 57,483 |
| phase7 | 16,232 | 2797 | 19,029 | 49,194 | 8557 | 57,751 |
| phase8 | 16,180 | 2880 | 19,060 | 49,118 | 8668 | 57,786 |
| Total | 132,492 | 22,750 | 155,242 | 396,524 | 68,714 | 465,238 |
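The per-phase counts in the dataset table can be cross-checked against its totals, and the held-out test share follows directly from them. A small Python sketch that recomputes both from the per-phase numbers copied from the table (the names `SPLIT_A`, `SPLIT_B` and `split_summary` are illustrative):

```python
from typing import Dict, Tuple

# (train/val, test) sample counts per phase, copied from the table above.
SPLIT_A: Dict[str, Tuple[int, int]] = {
    "phase1": (17_589, 3_048), "phase2": (16_983, 2_848),
    "phase3": (16_546, 2_819), "phase4": (16_306, 2_783),
    "phase5": (16_358, 2_767), "phase6": (16_298, 2_808),
    "phase7": (16_232, 2_797), "phase8": (16_180, 2_880),
}
SPLIT_B: Dict[str, Tuple[int, int]] = {
    "phase1": (50_888, 8_995), "phase2": (50_575, 8_598),
    "phase3": (49_558, 8_554), "phase4": (49_208, 8_459),
    "phase5": (49_009, 8_374), "phase6": (48_974, 8_509),
    "phase7": (49_194, 8_557), "phase8": (49_118, 8_668),
}


def split_summary(split: Dict[str, Tuple[int, int]]) -> Tuple[int, int, int, float]:
    """Return (train/val total, test total, grand total, test fraction)."""
    train = sum(tr for tr, _ in split.values())
    test = sum(te for _, te in split.values())
    total = train + test
    return train, test, total, test / total


for name, split in (("A", SPLIT_A), ("B", SPLIT_B)):
    train, test, total, frac = split_summary(split)
    print(f"{name}: train/val={train:,} test={test:,} total={total:,} test share={frac:.1%}")
```

Running it reproduces the table's totals (155,242 samples for A and 465,238 for B) and shows that about 14.7% (A) and 14.8% (B) of the samples are held out for testing, i.e., roughly an 85/15 split in both cases.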

| System | CPU | GPU | RAM | TensorFlow | Plaid-ML |
|---|---|---|---|---|---|
| 1 | AMD Ryzen-5 1600 | AMD RX 580 | 32 GB DDR4 | 2.4.1 | Yes |
| 2 | AMD Ryzen-5 2600X | AMD R9 270X | 32 GB DDR4 | 2.7.0 | No |
| 3 | Intel i3-8100 | NVIDIA GT 730 | 16 GB DDR4 | 2.7.0 | No |
| 4 | Intel i5-3230M | AMD HD 7500M/7600M | 16 GB DDR3 | 2.7.0 | No |

| Model | System | Epochs | Avg. Accuracy |
|---|---|---|---|
| Inception-V3 RGB | 1 | 10 | 99.87% |
| MobileNet-V1 RGB | 1 | 10 | 99.75% |
| ResNet-50 RGB | 1 | 10 | 99.62% |
| Inception-V3 Projection | 3 | 85 | 89.37% |
| MobileNet-V1 Projection | 3 | 85 | 83.75% |
| ResNet-50 Projection | 3 | 85 | 81.37% |
| PointNet PCD | 2 | 10 | 98.50% |
| PointNet PGM | 2 | 25 | 87.25% |
| VGG-16-LSTM RGB | 4 | 50 | 93.87% |
| VGG-16-LSTM Projection | 4 | 50 | 97.87% |
| PointNet-LSTM PCD | 4 | 50 | 100% |
| Fusion-Custom Layers | 4 | 50 | 99.62% |
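The results table lets frame-level and LSTM-based (spatio-temporal) models be compared for the same input data. A small Python sketch of that arithmetic, using accuracies copied from the table; the grouping by input type and the `temporal` flag are an editorial reading of the model names, and the fusion model is left out because the table does not state its input type. Note that the models were trained for different numbers of epochs on different systems, so the differences are indicative rather than a controlled comparison.

```python
from typing import Dict, List, Tuple

# (model, input data, temporal?, average accuracy %) from the results table.
# "temporal" marks the LSTM-based models (an editorial grouping).
RESULTS: List[Tuple[str, str, bool, float]] = [
    ("Inception-V3 RGB", "RGB", False, 99.87),
    ("MobileNet-V1 RGB", "RGB", False, 99.75),
    ("ResNet-50 RGB", "RGB", False, 99.62),
    ("Inception-V3 Projection", "Projection", False, 89.37),
    ("MobileNet-V1 Projection", "Projection", False, 83.75),
    ("ResNet-50 Projection", "Projection", False, 81.37),
    ("PointNet PCD", "PCD", False, 98.50),
    ("PointNet PGM", "PGM", False, 87.25),
    ("VGG-16-LSTM RGB", "RGB", True, 93.87),
    ("VGG-16-LSTM Projection", "Projection", True, 97.87),
    ("PointNet-LSTM PCD", "PCD", True, 100.0),
]


def temporal_gain(results: List[Tuple[str, str, bool, float]]) -> Dict[str, float]:
    """Best temporal accuracy minus best frame-level accuracy, per input type.

    Input types without both a frame-level and a temporal model are skipped.
    """
    best: Dict[Tuple[str, bool], float] = {}
    for _, data, temporal, acc in results:
        key = (data, temporal)
        best[key] = max(acc, best.get(key, 0.0))
    return {
        data: round(best[(data, True)] - best[(data, False)], 2)
        for data, temporal in best
        if temporal and (data, False) in best
    }


print(temporal_gain(RESULTS))
```

For these numbers the sketch reports a gain of 8.5 percentage points for projection images and 1.5 for point clouds, but a drop of 6.0 for RGB, where the frame-level CNNs were already near 100%.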

| Study | Gesture Amount | Approach | Accuracy * (%) |
|---|---|---|---|
| Llorca et al. [4] | 6 | Vision | 97.81 |
| Xia et al. [5] | 12 | Vision | 100 |
| Ivanovs et al. [6] | 7 | Vision | 67 |
| Prakasa and Sugiarto [7] | 6 | Vision | 98.81 |
| Vo et al. [8] | 7 | Vision | 84 |
| Zhong et al. [9] | 4 | Vision | 98.1 |
| Xie et al. [10] | 7 | Vision | 93.75 |
| Mondol and Stankovic [11] | 7 | Sensor | ≈60 |
| Wang et al. [12] | 10 | Sensor | 90.9 |
| Samyoun et al. [13] | 10 | Sensor | ≈99 |
| Santos-Gago et al. [14] | Not specified | Sensor | 100 |
| Wang et al. [15] | 9 | Sensor | 92.27 |
| Zhang et al. [16] | 11 | Sensor | 98 |
| Ours | 8 | Vision | 100 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Özakar, R.; Gedikli, E. Evaluation of Hand Washing Procedure Using Vision-Based Frame Level and Spatio-Temporal Level Data Models. Electronics 2023, 12, 2024. https://doi.org/10.3390/electronics12092024

Özakar R, Gedikli E. Evaluation of Hand Washing Procedure Using Vision-Based Frame Level and Spatio-Temporal Level Data Models. Electronics. 2023; 12(9):2024. https://doi.org/10.3390/electronics12092024

Chicago/Turabian Style: Özakar, Rüstem, and Eyüp Gedikli. 2023. "Evaluation of Hand Washing Procedure Using Vision-Based Frame Level and Spatio-Temporal Level Data Models" Electronics 12, no. 9: 2024. https://doi.org/10.3390/electronics12092024

APA Style: Özakar, R., & Gedikli, E. (2023). Evaluation of Hand Washing Procedure Using Vision-Based Frame Level and Spatio-Temporal Level Data Models. Electronics, 12(9), 2024. https://doi.org/10.3390/electronics12092024