Abstract

In high-mobility scenarios, a user’s media experience is severely constrained by the difficulty of network channel prediction, the instability of network quality, and other problems caused by the user’s fast movement, frequent base station handovers, the Doppler effect, etc. To this end, this paper proposes a video adaptive transmission architecture based on three-dimensional caching. In the temporal dimension, video data are cached to different base stations, and in the spatial dimension video data are cached to base stations, high-speed trains, and clients, thus constructing a multilevel caching architecture based on spatio-temporal attributes. Then, this paper mathematically models the media stream transmission process and summarizes the optimization problems that need to be solved. To solve the optimization problem, this paper proposes three optimization algorithms, namely, the placement algorithm based on three-dimensional caching, the video content selection algorithm for caching, and the bitrate selection algorithm. Finally, this paper builds a simulation system, which shows that the scheme proposed in this paper is more suitable for high-speed mobile networks, with better and more stable performance.

1. Introduction

With the development of network technology, the demand for streaming media is increasing. Video traffic accounts for a gradually increasing proportion of the total network traffic []. Nowadays, high-speed rail has become one of the most popular modes of intercity travel. In the high-speed rail network environment, users move fast, and frequent base station handovers cause frequent network channel quality jitter, frequent data retransmissions, increased media data transmission delay, and buffer overflow, which seriously reduce the user’s media experience.

Adaptive transmission technology can reduce the negative impact of network quality jitter on the user’s media experience []. It encodes the media data at several bitrates, stores them on the media server, and adaptively selects the appropriate bitrate for the user according to changes in the network bandwidth. Common transmission protocols include Dynamic Adaptive Streaming over HTTP (DASH) and HTTP Live Streaming (HLS). Many scholars have studied the transmission of variable-bitrate videos. Feng et al. [] studied the impact of video segment length on user experience and proposed an adaptive variable-length video segment scheme, which makes dynamic decisions based on real-time network data and user information and balances the accuracy and overhead of variable-length segmentation. Machine-learning prediction of client segment request bitrates at the network edge was studied in [], where the prediction was formulated as a supervised learning problem, i.e., predicting the bitrate of the client’s next segment request so that the segment can be prefetched at the mobile edge to improve the user’s experience. The above research results reduce the impact of channel quality jitter on the user’s media experience, but they are based on low-speed networks; more constraints need to be considered in high-speed networks.

Cache placement can reduce video transmission delay and the negative impact of network bandwidth jitter, and it is one of the techniques most commonly used by scholars for this purpose. Xu et al. [] proposed a user-assisted base station (BS) storage and cooperative prefetching scheme for high-speed railway (HSR) communication, which improves the system throughput. However, it does not consider the environmental factors of users watching videos. A mobile edge network streaming media optimization framework based on privacy-preserving learning was proposed in [], which included a user data privacy scheme and a dynamic video cache algorithm. However, that strategy targets low-mobility scenarios and does not consider the characteristics of the high-speed railway mobile scenario. In high-speed networks, users move very fast, so determining where and when to place cached data is also a critical challenge.

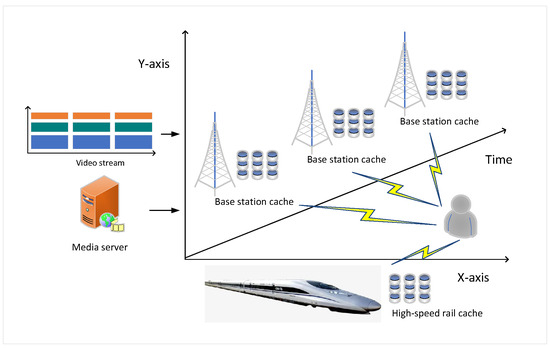

This paper considers the characteristics of the high-speed railway network scenario, such as high-speed mobility and severe network channel quality jitter. Under the above constraints, this paper proposes an adaptive streaming media transmission method based on three-dimensional cache and environment awareness (TDCEA). The three dimensions referred to in this paper are the time dimension, the space dimension, and multilevel caching from server to user. In the time dimension, the multimedia data need to be placed on different base station servers according to time. The space dimension refers to caches located at different locations, such as the base station, the high-speed rail, and the client. Multilevel caching refers to the multitier cache architecture formed by different base stations, high-speed rails, and clients based on their spatio-temporal attributes.

According to the user’s channel quality and the environmental parameters of the user watching the video, the transmission of streaming media based on the three-dimensional cache architecture is mathematically modeled, the optimization goal of this paper is summarized, and the corresponding solution algorithm is proposed, aiming to reduce the transmission delay and improve the user’s QoE. The solution proposed in this paper needs to solve the following problems: The first is which data to store. On the high-speed rail, a large number of users watch videos, and it is a challenge to choose which media data to store. The second is how to choose the cache location (at the base station or on the high-speed rail). The third is when to store the data. The user moves at a high speed and the cache has a short validity period, so the cache placement time is also very critical. The fourth is how to choose the media bitrate so as to improve the user’s media experience.

The rest of the paper is organized as follows. Section 2 introduces related research on video cache technology and adaptive bitrate technology. Section 3 presents the system architecture and system model. Section 4 proposes the algorithms that solve the optimization problem. Section 5 describes the simulation. Section 6 analyzes the experimental results, Section 7 discusses them, and Section 8 provides a conclusion.

2. Related Works

Scholars have carried out a great deal of research on cache technology in order to improve users’ media experience. In [], Mamduhi et al. proposed a cross-layer controller for handling file transfer at different levels and applied it to wireless network transmission, thus better managing network resources and improving the network transmission efficiency. In [], the evolution of 360-degree video view requests was predicted by a long short-term memory (LSTM) network, and the predicted content was prefetched to temporary storage. To further improve the transmitted video quality, users located in the overlapping areas of multiple small base stations were allowed to receive data from any of these small base stations, thereby enhancing the user’s video experience. With the development of edge computing technology, scholars have proposed streaming media transmission optimization strategies based on edge computing. In [], the user’s QoE was used as the key indicator to drive edge device offloading, and limited computing resources were used to provide better service for the user. Nguyen et al. [] used the cross-layer information of the network to dynamically allocate wireless resources and optimize the user’s QoE in LTE wireless cellular networks.

Placing caches along the streaming media transmission path is one of the effective methods to reduce video transmission delay and improve user QoE. Many researchers have carried out a large amount of research on placement problems. The technical challenges and development directions of mobile edge cache were analyzed in []. In [], Pham et al. stored media data on edge servers to reduce data transmission delay and proposed an algorithm to increase the data hit rate. Wang et al. [] analyzed the current state of research on caching in edge computing and proposed a collaborative strategy for streaming media content in a cloud-edge collaborative environment. For the cache data selection problem, researchers generally believe that storing popular content can minimize the energy consumption of data delivery. The caching data selection algorithm was studied in [], which used small base stations to store the most popular files. In [], Li et al. studied the relationship between multiple cache points and user service, and found that, in some cases, randomly caching files results in better performance than caching the most popular data.

Li et al. [] studied the system modeling, large-scale optimization, and framework design of hierarchical edge caching in device-to-device assisted mobile networks. A layer-based non-orthogonal transmission scalable media cache technology was proposed in [], which utilized the layer features in scalable video. In [], Zhang et al. studied the challenges brought by the different levels of content popularity and different viewing demands of scalable video, and proposed transmission schemes corresponding to different types of content. In [], Tran et al. proposed a multirate cache (MRC) scheme for video offloading in dense device-to-device (D2D) networks. A scalable video coding collaborative cache architecture for drones and clients was proposed in [], which provided high transmission rate content distribution and personalized viewing quality in hotspot areas. A reactive content cache strategy based on deep reinforcement learning was proposed in [], which made decisions for newly requested content. The main goal was to obtain higher cache gain with lower space cost. Xu et al. [] proposed a new secure edge cache scheme for video content in heterogeneous networks. In order to motivate the participation of edge devices, the Nash bargaining game was used to model the negotiation between content providers and edge storage, and the optimal request cache space of content providers and the optimal price of each edge device were analyzed together. In [], Kim et al. studied the caching of part of the content, rather than the entire content, to enrich users’ QoE. They also proposed a cache strategy for data blocks of different quality. A 360° video cache solution based on viewpoint reconstruction was proposed in [].

At present, many scholars have conducted in-depth research on the adaptive technology of streaming media. A novel AQM algorithm was proposed in [], which was based on the hotspot technology of deep learning, and used a long short-term memory (LSTM) neural network to enhance the users’ perception of video quality. Meanwhile, the optimization of the buffer was also presented in []. Santos et al. designed a new rate-adaptive algorithm, which aimed to minimize the amount of rebuffering and maximize the video quality. Gupta et al. [] proposed a data cache video adaptive bit-rate switching algorithm. The algorithm dynamically constructed a smooth factor based on the throughput and cache state, and performed exponential weighted averaging on the bandwidth to maintain the buffer balance and ensure the video quality.

The above literature studied the cache technology and variable-bitrate algorithm for streaming media, and proposed many valuable methods. However, the above research is based on low-speed mobile networks. In high-speed mobile networks, cache is not only related to space, but also to time, and frequent base station handovers cause severe jitter in user channel quality; these factors all cause the performance of the above methods to decline. Therefore, this paper proposes an adaptive streaming media transmission optimization method based on three-dimensional cache and environment awareness.

The main contributions of this paper are as follows:

- This paper proposes an adaptive streaming media transmission architecture based on three-dimensional cache and environment awareness, which considers the utility of the cache in time and space dimensions.

- This paper digitizes the adaptive streaming media transmission scheme based on the three-dimensional cache and environment awareness, and summarizes the problems to be optimized.

- This paper proposes algorithms to solve these problems, namely the selection of video data, the selection of cache location and time, and the selection of bitrate, and verifies the performance of the proposed method via simulation.

3. Architecture and Model

The adaptive streaming media transmission architecture based on a three-dimensional cache and environment awareness proposed in this paper is shown in Figure 1. First, the base station collects the users’ media requests, analyzes the popularity of the videos according to the request files of a large number of users, and then stores these data in the server of the base station or the high-speed rail. Then, the user selects the appropriate bitrate video according to the environmental parameters of watching the video, and first searches in the high-speed rail cache. If the required content is found, the current quality of the wireless channel does not need to be considered. If it is not found, the data request is reissued according to the current network channel quality and is searched in the base station cache. If it is found, it is directly sent to the user. If it is not found, the data are sent from the server or transcoded (if data at other bitrates are available).

Figure 1.

The architecture interactive model proposed in this article.

3.1. User’s QoE Model

Assume that the current time is t, n base stations are passed along the way, and the video is evenly divided into m segments of length . In the high-speed mobile scenario considered in this paper, the distance between the user equipment (UE) and the base station (BS), the train speed and other viewing-environment factors, and the objective quality of the video all have a great impact on the user’s QoE, which is defined by Equation (1):

where the function represents the objective quality. The objective quality can be expressed by the quality evaluation parameters PSNR and SSIM []. The function represents the impact of cache miss and retranscoding on the user’s QoE, and represents the impact of data retransmission delay after cache miss on the user’s QoE. The parameter represents the jitter of video quality, and the parameter represents the number of rebuffering instances. The parameters , , , , and represent the impact coefficients of each factor.

where denotes a monotonically increasing function of , where is the number of bitrate switches that user x has experienced in the previous segments. is the current bitrate of user. J is the video bitrate index.

Normalize the value of QoE:

3.2. Video Data Cache Model

Content popularity: Content popularity is used to describe the popularity of streaming media, reflecting the probability of customers requesting streaming media files at a certain time. In this paper, the content popularity of streaming media files can be calculated by Equation (4): Assume a streaming media file M

where represents the i-th video segment in M. Assign with a popularity level number, and the probability of accessing is , for all . Therefore, can be called the popularity of in the set M, and the popularity distribution in M is:

Starting from each time segment, the base station collects requests from users, and the cache content is divided into two types: (i) New content, i.e., requested video segments that have not been cached yet. (ii) New version, i.e., a new version of a currently cached video segment. Content popularity is modeled as a Zipf distribution. In this distribution, content of a higher popularity has a higher probability of being requested. According to [], can be obtained as follows:

where reflects the skewness of the popularity distribution.
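The Zipf popularity model can be illustrated with a short Python sketch. It uses the standard Zipf form, in which the request probability of the item at popularity rank i is proportional to 1/i raised to the skewness parameter; the function name `zipf_popularity` and the argument names are illustrative assumptions, not notation from this paper.

```python
def zipf_popularity(num_segments, skew):
    """Return request probabilities for popularity ranks 1..num_segments.

    Standard Zipf law: p_i is proportional to 1 / i**skew.
    Names are illustrative assumptions, not the paper's notation.
    """
    if num_segments <= 0:
        raise ValueError("num_segments must be positive")
    # Unnormalized weight of each rank (rank 1 is the most popular).
    weights = [1.0 / (rank ** skew) for rank in range(1, num_segments + 1)]
    total = sum(weights)
    # Normalize so that the probabilities sum to 1.
    return [w / total for w in weights]


probs = zipf_popularity(5, 0.8)
```

A larger skewness concentrates requests on the few most popular segments, while a skewness of 0 yields a uniform distribution.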

where means storing the video data in the base station cache, and means storing the video data in the high-speed rail cache. The parameters and represent the popularity thresholds, which can be defined by the user. When , the base station and the storage time of the cache data need to be calculated. Assume that the user connects to the base station at time t, the current speed of the user is , the distance to is , and the distance to is . Therefore, the cache base station selection function is

Assuming that the base station cache is selected at , the cache validity can be calculated by Equation (9)

3.3. Transmission Model

The objective quality of QoS can be calculated by Equation (10):

where f is the calculation function of video objective quality, and represents the bitrate selected.

When the user needs to obtain data from the base station, the video bitrate chosen by the user should be less than the current network bandwidth . The current network bandwidth is related to the user’s channel quality.

Due to multipath effects, the signal from the base station reaches the user through M different paths; therefore, the average signal-to-interference-plus-noise ratio (SINR) of a single user at a given point is expressed as []:

with

where M is the number of multipaths, is the propagation delay with base station i (for base station 0, ), is the additional delay that is added by path j, is the path loss from base station i, is a constant and a lognormal variable due to shadowing. is the average power that is associated with the j-th path, is the length of the cyclic prefix (CP), and is the length of the useful signal frame, and is the noise power.

The value of is related to the base station that the user connects to.

where is a function, and is the base station to which the user connects. The base station is related to the current viewing environment of the user, such as the user’s moving speed, the distance between the user and different base stations, and the size of the transmitted video data.

where w is a function, is the user’s moving speed at time t, is the distance between the user and the base station , is the distance between the user and the base station , and is the bitrate. When the user needs to obtain data from the high-speed rail cache, the bitrate is independent of the current network condition of the user.

where represents the data bitrate in the high-speed rail cache. When the video data requested by the user is not found in the base station, there are two choices: request the video data with the corresponding bitrate from the server, or transcode the media data based on the computing module of the base station. If transcoding is chosen, there is an additional cost, which has a negative impact on the user’s QoE.

where represents the playback time of a video segment, and represents the processor frequency of the edge service. The higher the frequency, the lower the cost. If the user chooses to retransmit the data, the data retransmission will cause delay .

The optimization goal of this paper is

4. Algorithm

4.1. Three-Dimensional Cache Placement

In this paper, the base station collects requests from user devices in periods and processes the corresponding data requests in parallel. According to the popularity of the media, some data will be cached in the base station. When the user sends a request for , it will first search the high-speed rail . If has the requested content, it will directly call from . If it does not, the BS will search for in . If found, it will directly call from . If none of the above is found, the content will be obtained from the video server. Algorithm 1 shows the three-dimensional cache access strategy. For and , when receiving the content , if there is content in the old version data, it will replace the old version with the new content. If the user has extra storage space, the content will be cached directly. If both storage spaces are full, the content in is deleted, and then the content in is deleted.

| Algorithm 1: Three-Dimensional Caching Access Policy (TDCAP) | |

| Require: | |

| System Initialization. | |

| 1: | for t = 1 to T-1 do |

| 2: | Construct which obey Zipf. collects . |

| 3: | for i = 1 to U do |

| 4: | |

| 5: | |

| 6: | for in do |

| 7: | if then |

| 8: | Get content in |

| 9: | t = t + 1 |

| 10: | continue |

| 11: | end if |

| 12: | if then |

| 13: | Get content in |

| 14: | t = t + 1 |

| 15: | continue |

| 16: | else |

| 17: | get content from . |

| 18: | end if |

| 19: | end for |

| 20: | for in do |

| 21: | Get content from media server |

| 22: | |

| 23: | end for |

| 24: | end for |

| 25: | end for |
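The lookup order of Algorithm 1 (high-speed rail cache first, then base station cache, then the video server) can be sketched in Python as follows. This is a minimal illustration under simplifying assumptions: each cache is a plain dictionary, and the names `fetch_segment`, `train_cache`, `bs_cache`, and `server` are hypothetical. Replacement of old versions and eviction are omitted.

```python
def fetch_segment(segment_id, train_cache, bs_cache, server):
    """Look up a segment tier by tier and report which tier served it.

    Order follows the text: train cache, then base station cache,
    then the origin video server. All names are illustrative.
    """
    if segment_id in train_cache:
        return train_cache[segment_id], "train"
    if segment_id in bs_cache:
        return bs_cache[segment_id], "base_station"
    # Cache miss in both tiers: fall back to the video server.
    return server[segment_id], "server"


server = {"s1": b"a", "s2": b"b", "s3": b"c"}
train_cache = {"s1": b"a"}
bs_cache = {"s2": b"b"}
sources = [fetch_segment(s, train_cache, bs_cache, server)[1]
           for s in ("s1", "s2", "s3")]
```

In this toy run, the three requests are served by the train cache, the base station cache, and the server, respectively.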

4.2. Cache Content Selection

Prestore content selection can be regarded as a multiple knapsack problem. The cache is regarded as a knapsack, and b bytes of space are allocated to in (the total capacity allocated to N users is less than or equal to the total capacity), so the capacity of is bB. Similarly, the capacity of the high-speed rail cache is . There are n that need to be downloaded to the cache, each occupies space, and the popularity of is the value of . The specific content selection strategy is shown in Algorithm 2. The algorithm can achieve the local optimal solution of the cache strategy, and compared with other algorithms, it can significantly reduce the delay caused by the user not finding the target file.

| Algorithm 2: Cache Content Selection Download Algorithm (CCSDA) | |

| Require: | |

| System Initialization ( represents the maximum value of the first i items, given that the knapsack capacity is j). | |

| 1: | while do |

| 2: | for to do |

| 3: | for to do |

| 4: | |

| 5: | if then |

| 6: | |

| 7: | end if |

| 8: | end for |

| 9: | end for |

| 10: | end while |

| 11: | Download M from to according to . |

| 12: | for the user request do |

| 13: | for to do |

| 14: | for to do |

| 15: | |

| 16: | if then |

| 17: | |

| 18: | end if |

| 19: | end for |

| 20: | end for |

| 21: | Download M from to according to |

| 22: | end for |
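The knapsack view of content selection can be made concrete with a standard 0/1 knapsack dynamic program for a single cache. The sketch below is a simplified illustration rather than the exact CCSDA procedure: it assumes integer segment sizes, treats popularity as the item value, and uses the hypothetical names `select_cache_content`, `sizes`, `popularities`, and `capacity`.

```python
def select_cache_content(sizes, popularities, capacity):
    """0/1 knapsack: maximize total popularity within the cache capacity.

    sizes are integer storage units. Returns (best_value, chosen_indices).
    Names are illustrative assumptions, not the paper's notation.
    """
    n = len(sizes)
    # best[i][j]: maximum popularity using the first i segments, capacity j.
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        size, value = sizes[i - 1], popularities[i - 1]
        for j in range(capacity + 1):
            best[i][j] = best[i - 1][j]  # skip segment i
            if size <= j and best[i - 1][j - size] + value > best[i][j]:
                best[i][j] = best[i - 1][j - size] + value  # cache segment i
    # Backtrack to recover which segments were selected.
    chosen, j = [], capacity
    for i in range(n, 0, -1):
        if best[i][j] != best[i - 1][j]:
            chosen.append(i - 1)
            j -= sizes[i - 1]
    chosen.reverse()
    return best[n][capacity], chosen


value, chosen = select_cache_content([2, 3, 4], [0.5, 0.3, 0.4], 5)
```

The same routine could be run once for the base station cache and once for the onboard cache, each with its own capacity.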

4.3. Bit Rate Adaptive Selection Algorithm

This paper adopts edge computing and cross-layer information for bit rate adaptive algorithm. It is assumed that the video segment has multiple fixed bitrates: . The value of Bc is calculated according to Equation (21)

where represents the bitrate of the highest quality level, and is initially set to . Then, the user’s QoE is calculated respectively, at the bitrates of and . If the bitrate of is chosen, the user’s QoE is denoted as , and if the bit rate of is chosen, the user’s QoE is denoted as . Compare the values of and : if , then ; otherwise, set . Repeat the above steps until . Then, the user’s when choosing the bitrate is compared with the user’s when choosing the video data stored in the high-speed rail. If , the data with the bit rate are chosen, and if , the video data are requested from the high-speed rail cache. The pseudocode of the algorithm is shown in Algorithm 3.

| Algorithm 3: Bitrate Adaptive Selection Algorithm | |

| Require: | |

| System Initialization bitrates , | |

| initial bitrate in the high-speed buffer is . | |

| , , ) | |

| 1: | while h != m do |

| 2: | Calculate |

| 3: | Calculate |

| 4: | if >= then |

| 5: | , |

| 6: | else |

| 7: | , |

| 8: | end if |

| 9: | end while |

| 10: | if then |

| 11: | Download video with bitrate |

| 12: | else |

| 13: | Download video with bitrate |

| 14: | end if |
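The final decision of the bitrate selection step, comparing the best bitrate obtainable over the network with the copy stored in the high-speed rail cache, can be sketched as follows. This is a simplified illustration under stated assumptions: it replaces the iterative pairwise comparison of Algorithm 3 with an exhaustive search over the bitrate ladder, and `qoe_fn` is a hypothetical callable standing in for the QoE model of Section 3.1. All names are illustrative.

```python
def select_bitrate(ladder, bandwidth, qoe_fn, cached_bitrate=None):
    """Pick the bitrate with the best estimated QoE.

    ladder: available bitrates; bandwidth: estimated throughput (same unit);
    qoe_fn: hypothetical callable mapping a bitrate to a QoE estimate;
    cached_bitrate: bitrate of the copy in the train cache, if any.
    Returns (bitrate, source).
    """
    # Only bitrates below the current bandwidth can be streamed smoothly.
    feasible = [b for b in ladder if b <= bandwidth]
    # If nothing fits, fall back to the lowest bitrate.
    network_choice = max(feasible, key=qoe_fn) if feasible else min(ladder)
    # The cached copy is not constrained by the wireless bandwidth.
    if cached_bitrate is not None and qoe_fn(cached_bitrate) > qoe_fn(network_choice):
        return cached_bitrate, "train_cache"
    return network_choice, "base_station"


ladder = [300, 750, 1500, 3000]
choice = select_bitrate(ladder, 1600, lambda b: b, cached_bitrate=3000)
```

With a QoE estimate that grows with bitrate, the cached 3000 copy is preferred over the 1500 copy that the bandwidth would allow.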

5. Simulation

In this section, we conducted a simulation experiment to verify the effectiveness of the proposed algorithm. First, we compared the cache performance, such as the average lifetime and the average hit probability of the cache data. Then, we compared the user’s QoE under the HLS, BSBC and BBA algorithms to verify the performance of the whole algorithm proposed in this paper.

Cache Performance Comparison Algorithm

In this paper, the average lifetime and the average hit probability of the cache data are defined as follows:

The average lifetime of the cache data:

This is used to describe the timeliness of the data collected by the system. The peak age of the content represents the maximum age of the content in the system; the age of the content is defined as follows:

where t represents the current time, and represents the generation time (also known as the generation time stamp) of the latest data packet received by the receiver. Therefore, the average information age is the sum of all instantaneous information ages divided by the amount of information:

where N represents the total number of streaming media files.
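As a small worked example of the age metric described above, the following Python sketch computes the instantaneous age of each file as the current time minus its generation time, then averages over all files. The function names and arguments are illustrative assumptions.

```python
def average_content_age(now, generation_times):
    """Mean of (now - generation_time) over all cached files."""
    if not generation_times:
        raise ValueError("need at least one file")
    ages = [now - g for g in generation_times]
    return sum(ages) / len(ages)


def peak_content_age(now, generation_times):
    """Largest instantaneous age among the cached files."""
    return max(now - g for g in generation_times)


avg_age = average_content_age(10, [4, 6, 8])
peak_age = peak_content_age(10, [4, 6, 8])
```

Here the three files have ages 6, 4, and 2, so the average age is 4 and the peak age is 6.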

Average hit probability:

The efficiency of cache is usually measured by the hit rate. In this paper, the hit rate refers to the probability that the file requested by the user is in the corresponding cache. The hit rate is defined as the ratio of the number of successful accesses to the total number of accesses. Specifically, it is the probability that a given access to a memory location will be satisfied by the cache. The hit rate can be expressed as follows:

where represents the number of times that the requested file matches, and represents the total number of user requests. The average hit probability is defined as follows:

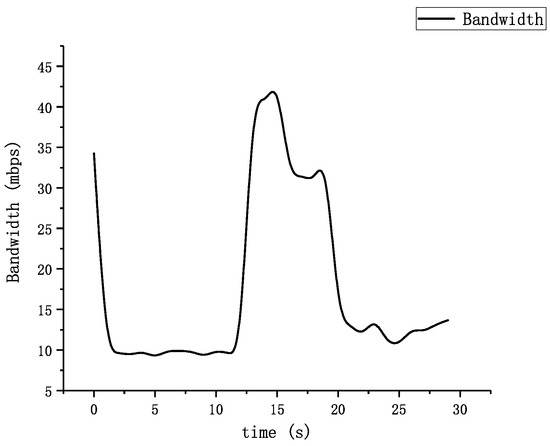

This paper uses Python to build a simulation environment, and the simulation parameters are shown in Table 1. The bandwidth data used in the simulation experiment are real bandwidth data collected in a real high-speed rail environment, and Figure 2 shows the network bandwidth in the high-speed rail environment used in this experiment. In order to compare the user’s QoE under different cache algorithms, the parameters in the QoE model are set as follows: , , , , . The transmission cost factor . In this paper, eight videos named Boat, FoodMarket, RitualDance, Crosswalk, TunnelFlag, Tango, Dancers, and DinnerScene are used as data for the streaming media experiment []. In the simulation experiment, each video is divided into 10 s segments, and the video is played in a loop, using the Joint Scalable Video Model (JSVM, version 9.19) [] to encode the data at different quality levels.

Table 1.

Simulation parameters.

Figure 2.

Bandwidth data in high-speed rail 5G environment.

The following are the cache algorithms compared in this paper:

Content-Oriented Strategy: This strategy focuses on optimizing the content distribution in the streaming media system. The core idea of this strategy is to cache the requested content as close as possible to the user’s location in order to reduce latency and improve user experience.

Version-Oriented Strategy: Version-Oriented Strategy focuses on optimizing version management in the streaming media system. The core idea of this strategy is to cache different versions of the same content on different nodes to meet the needs of different users.

The following are the bitrate selection algorithms compared in this paper:

BSBC []: BSBC is the abbreviation of buffer-based video bitrate adaptation, which aims to solve the problem of low average bitrate in the DASH standard. The algorithm analyzes the data in the video cache, calculates the average bitrate of the current frame, and dynamically adjusts the bitrate to improve the user experience. Specifically, the BSBC algorithm divides the video into multiple blocks, with each block containing multiple frames. For each block, the algorithm first calculates the total bitrate and total cache of the block, and then calculates the average bitrate of each frame according to these values. Finally, the bitrate is dynamically adjusted according to the average bitrate to improve the user experience.

HLS []: HTTP Live Streaming algorithm is a protocol and method for implementing the online streaming of audio and video content. This technology, developed by Apple, aims to use the HTTP protocol to transmit audio and video content efficiently and stably on the Internet, while automatically adjusting the bitrate to meet the needs of different network environments.

BBA []: The buffer-based adaptive (BBA) algorithm is a method of selecting bitrate based on buffer filling state. When the buffer is full, it chooses a higher bitrate; when the buffer is empty, it chooses a lower bitrate to avoid buffer overflow or playback interruption.
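To illustrate the buffer-based idea behind BBA, the sketch below maps buffer occupancy to a bitrate with a linear function between two thresholds. It is a simplified illustration, not necessarily the exact variant compared in this paper; the threshold names `reservoir_s` and `cushion_s` and their default values are hypothetical.

```python
def buffer_based_bitrate(buffer_s, ladder, reservoir_s=5.0, cushion_s=20.0):
    """Map buffer occupancy (seconds) to a bitrate from the ladder.

    Below the reservoir, pick the lowest bitrate; above reservoir + cushion,
    pick the highest; in between, interpolate linearly and round down to an
    available bitrate. Thresholds are hypothetical illustration values.
    """
    rates = sorted(ladder)
    if buffer_s <= reservoir_s:
        return rates[0]
    if buffer_s >= reservoir_s + cushion_s:
        return rates[-1]
    fraction = (buffer_s - reservoir_s) / cushion_s
    target = rates[0] + fraction * (rates[-1] - rates[0])
    # Round down to the highest available bitrate not exceeding the target.
    return max(r for r in rates if r <= target)


rates = [300, 750, 1500, 3000]
picked = [buffer_based_bitrate(b, rates) for b in (2, 15, 30)]
```

A nearly empty buffer selects the lowest bitrate to avoid playback interruption, and a well-filled buffer allows the highest bitrate.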

6. Results

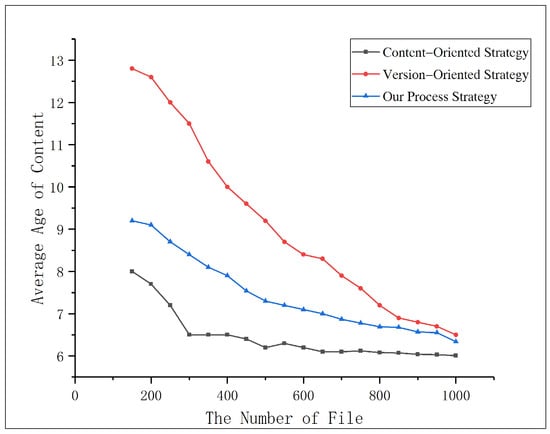

Figure 3 shows the average lifetime of the cache data of the three algorithms as the number of requested files increases. As can be seen, as the total number of files increases, the average lifetime of the content decreases, which means that the cached files become increasingly fresh. The fresher the files, the more stable the content obtained by the user, so our proposed algorithm is more robust.

Figure 3.

Average age of content with increasing number of files.

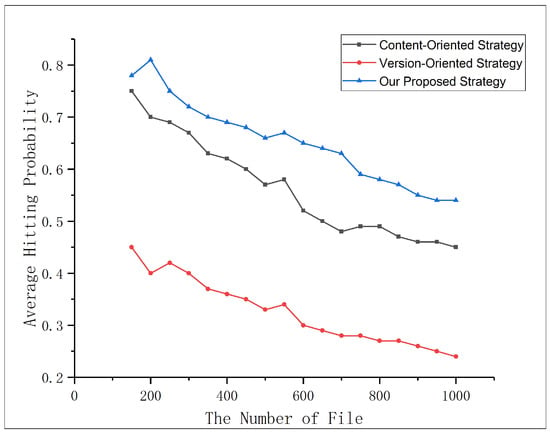

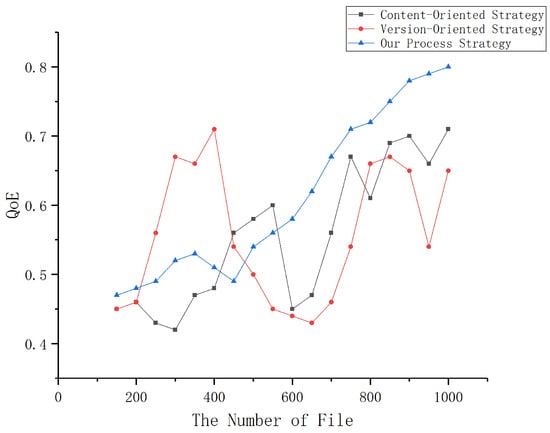

As shown in Figure 4, as the number of file requests increases, the cache hit rate rises because more content is cached, so the overall QoE rises. However, the performance of the other two strategies is unstable, because their content requests are more random, and their performance decreases when the file is not found in the cache. Therefore, the strategy proposed in this paper is better suited to streaming media transmission in high-speed rail. Figure 5 shows the average hit probability of the three algorithms as the number of requested files increases. As the number of requested files increases, the files become increasingly difficult to hit. Our strategy keeps the hit rate higher when the number of files is large.

Figure 4.

Average hitting probability with increasing number of files.

Figure 5.

QoE with increasing number of files.

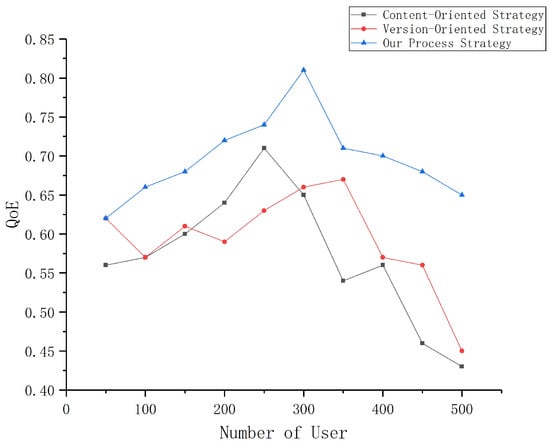

As shown in Figure 6, as the number of users increases, the user’s QoE decreases because the cache space occupied by each user decreases. At this time, when the user requests a file, the probability of finding the requested file in the server decreases, so the QoE decreases. However, the performance of the strategy proposed in this paper is still better than those of the other two strategies. The strategy proposed in this paper is more suitable for video transmission in high-speed mobile networks.

Figure 6.

QoE with increasing number of users.

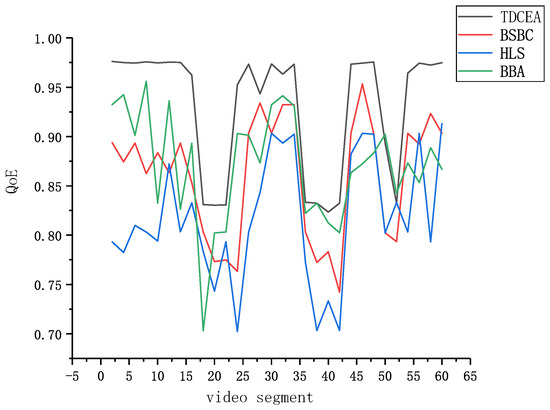

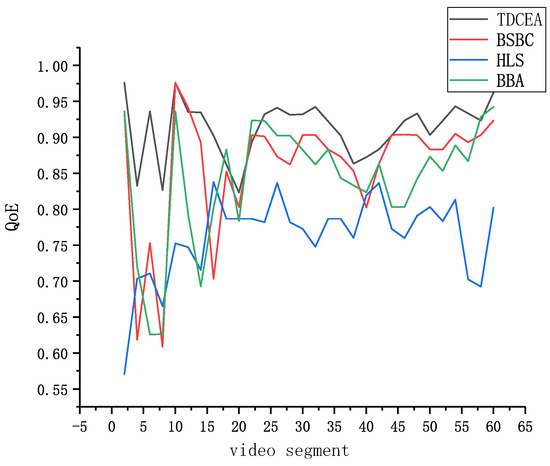

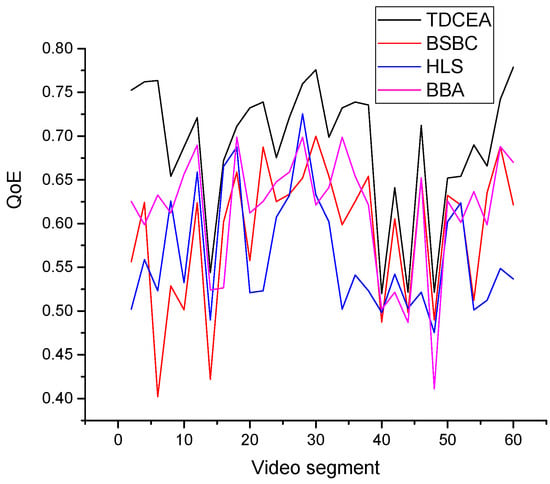

In Figure 7, the content movement of the video Boat is slow, so the encoded data are small, and the required bandwidth is small, resulting in the highest bitrate video playing smoothly for the user. The algorithm proposed in this paper can quickly find the local optimal solution, thus obtaining high QoE performance. In addition, in Figure 8, the QoE of the HLS algorithm is lower than that of other algorithms, especially at the beginning of the transmission, because the algorithm randomly selects the video bitrate at the beginning, making it more unstable. However, the algorithm proposed in this paper performs well in the initial bitrate selection. In Figure 9, watching the video RitualDance, because there are many elements in the video and the scene changes faster, the encoded data are larger, and thus users cannot watch the high bitrate video smoothly, resulting in lower QoE. However, the algorithm we proposed still maintains good performance, indicating that it can still perform well under high load. In general, the algorithm we proposed is relatively superior in high-speed environments.

Figure 7.

The users’ QoE when watching the video Boat.

Figure 8.

The users’ QoE when watching the video FoodMarket.

Figure 9.

The users’ QoE when watching the video RitualDance.

Figure 10 shows the QoE of high-speed rail users when each user randomly selects one of eight videos to watch under different algorithms. The results show that the TDCEA method proposed in this paper achieves the best performance. Compared to high-speed rail users watching a single video individually, the average user QoE obtained in this experiment is lower than that of users watching the same video. This is because the increase in the number of videos available occupies more cache space, thus reducing the cache hit rate and the average user QoE.

Figure 10.

The users’ QoE when watching the eight videos.
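The drop in cache hit rate with a larger video set can be sketched as follows. The example assumes, purely for illustration, that video popularity follows a Zipf distribution with exponent `alpha` and that the cache holds the most popular videos in full; the function name `zipf_hit_ratio` and the parameter values are hypothetical and do not reproduce the configuration of the simulation system.

```python
def zipf_hit_ratio(catalog_size: int, cached_items: int,
                   alpha: float = 1.0) -> float:
    """Hit ratio when the `cached_items` most popular videos are cached.

    Assumes request probability proportional to 1 / rank**alpha (Zipf).
    """
    if catalog_size <= 0:
        raise ValueError("catalog_size must be positive")
    cached = max(0, min(cached_items, catalog_size))
    weights = [1.0 / (rank ** alpha) for rank in range(1, catalog_size + 1)]
    return sum(weights[:cached]) / sum(weights)


if __name__ == "__main__":
    # Fixed cache that holds one full video; the catalog grows from 1 to 8.
    for catalog in (1, 2, 4, 8):
        ratio = zipf_hit_ratio(catalog, cached_items=1)
        print(f"{catalog} videos -> hit ratio {ratio:.3f}")
```

With a fixed cache, the hit ratio falls as the catalog grows, because the cached videos account for a smaller share of all requests.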

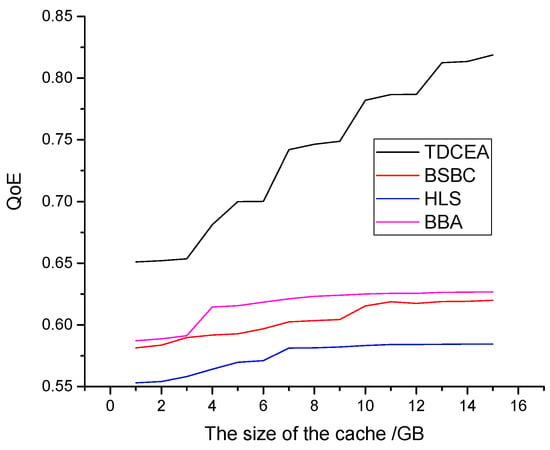

The results in Figure 11 are obtained from simulations in which high-speed rail users randomly select one of eight videos to watch. Both the base station cache and the onboard cache of the high-speed train are varied across several capacity levels, which shows the impact of cache capacity on the average user QoE. As illustrated, the average user QoE of the proposed TDCEA method increases significantly with larger cache sizes. This suggests that expanding the onboard cache capacity allows more video data to be cached on the train, thereby reducing the demand for wireless resources and improving the users' video experience. The QoE of the other methods also increases with the cache level, but to a lesser extent. This is because, in high-speed rail scenarios, the bottleneck for video transmission lies between the base station and the client side, and under the special networking conditions of high-speed rail it is difficult to store more data on the client side.

Figure 11.

The users’ QoE with different cache levels.

7. Discussion

In the high-speed rail network environment, frequent handovers of users between base stations cause the congestion control algorithm to intervene frequently, which makes network bandwidth estimation less stable and less accurate. This paper proposes a new transmission method called TDCEA, which constructs a three-dimensional cache architecture to prestore media data at suitable locations and times. The simulation results demonstrate that the proposed TDCEA achieves the best performance among the compared methods. The main reason is that TDCEA has been designed specifically for high-mobility scenarios and is thus more suitable for such environments. As shown in Figure 11, TDCEA performs better with increased cache capacity because prefetching data onto the train improves the communication quality between the train and the clients. Moreover, the users are stationary relative to the train, so the channel between the onboard cache and the users is more stable. Therefore, clients can receive video data from the onboard cache more smoothly. In summary, the proposed TDCEA delivers superior performance and robustness in highly mobile environments.

The method proposed in this paper requires cache servers to be deployed onboard high-speed trains. In addition, because of the high speed of the trains, the timeliness of cached content is reduced, which results in additional hardware costs. However, the trade-off between increased hardware expenses and improved user quality of experience has not been discussed in depth in this paper. In future research, we will incorporate caching costs into our model to enable a more comprehensive cost-benefit analysis.

8. Conclusions

This paper proposes a new transmission method called TDCEA to address video delivery challenges in high-mobility environments. It solves the following three problems: (1) when to prestore data; (2) where to prestore data; and (3) which data bitrate to select. Simulation experiments demonstrate that the proposed TDCEA delivers superior performance and robustness compared to existing methods in high-speed mobility scenarios.

Author Contributions

Conceptualization, J.G. and J.Z.; methodology, J.G.; software, Y.Z.; validation, Y.Z. and F.S.; formal analysis, Y.Z.; investigation, H.G.; resources, J.G.; data curation, Y.Z.; writing—original draft preparation, J.G.; writing—review and editing, J.G.; visualization, Y.Z.; supervision, Y.T.; project administration, Y.T.; funding acquisition, J.G., Y.Z., J.Z., F.S., H.G. and Y.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was substantially supported by the National Natural Science Foundation of China under Grant No. 62002263 and No. 62002025, in part by the Tianjin Municipal Education Commission Research Program Project under 2022KJ012, and in part by the Open Foundation of State Key Laboratory of Networking and Switching Technology (Beijing University of Posts and Telecommunications) (SKLNST-2021-1-20).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available because they are related to our subsequent research.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Symbol | Description |
| --- | --- |
| QoE | Quality of Experience |
|  | Objective Quality Function of Video |
|  | Negative Gain Function of QoE Due to Recoding |
|  | The Negative Gain Function of QoE Due to Retransmission Delay |
|  | The Negative Gain of QoE Due to Video Quality Jitter |
|  | The Negative Gain of QoE Due to Video Buffering Events |
|  | Trade-off Parameters |
|  | The Number of Bitrate Switches |
| x | The x-th User |
| SINR | Signal-to-Interference-plus-Noise Ratio |
| r | The Function for Computing SINR |
|  | Base Station for User Connection |
|  | The Bitrate of Cached Data in High-Speed Rail |
| Cost | The Cost of Transcoding |
|  | The Duration of Playback for a Video Segment |
|  | CPU Performance |
| M | Video File |
| N | The Total Number of Video Segments |
|  | Popularity Threshold |
|  | Boolean Function |
|  | Base Station |
| t | Time |
|  | User Velocity |
| dist | Distance Between User and Base Station |
|  | Cached Base Station |
| VS | Video Server |
| Timelines | Cache Lifetime |
| f | Objective Quality Function |
|  | Video Bitrate |
|  | Bandwidth |
|  | Retransmission Delay |
|  | Base Station Cache |
|  | High-Speed Rail Cache |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).