Overcoming Fear and Improving Public Speaking Skills through Adaptive VR Training

Abstract

1. Introduction

2. Materials and Methods

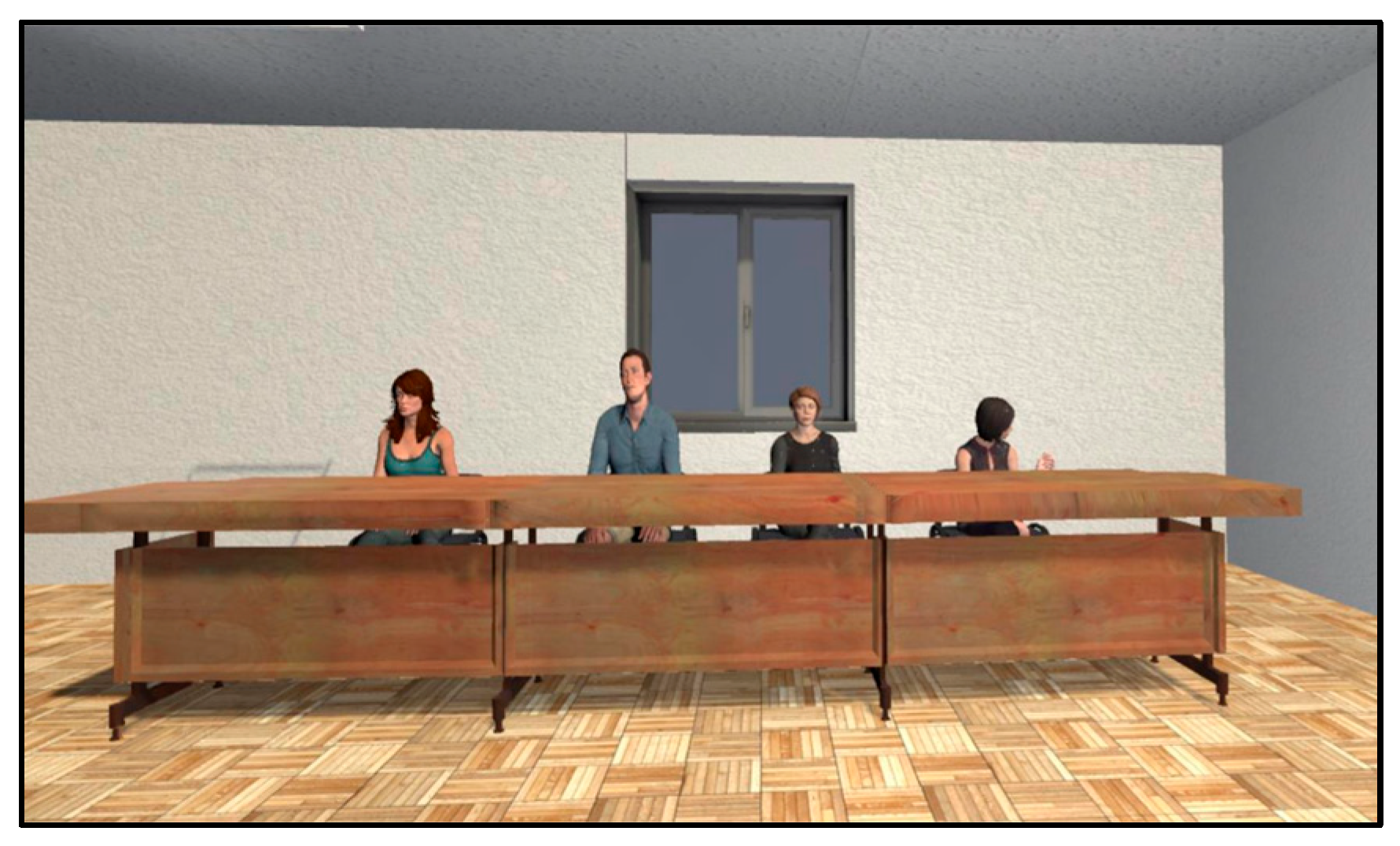

2.1. Overview of Virtual Reality for Public Speaking (VR4PS)

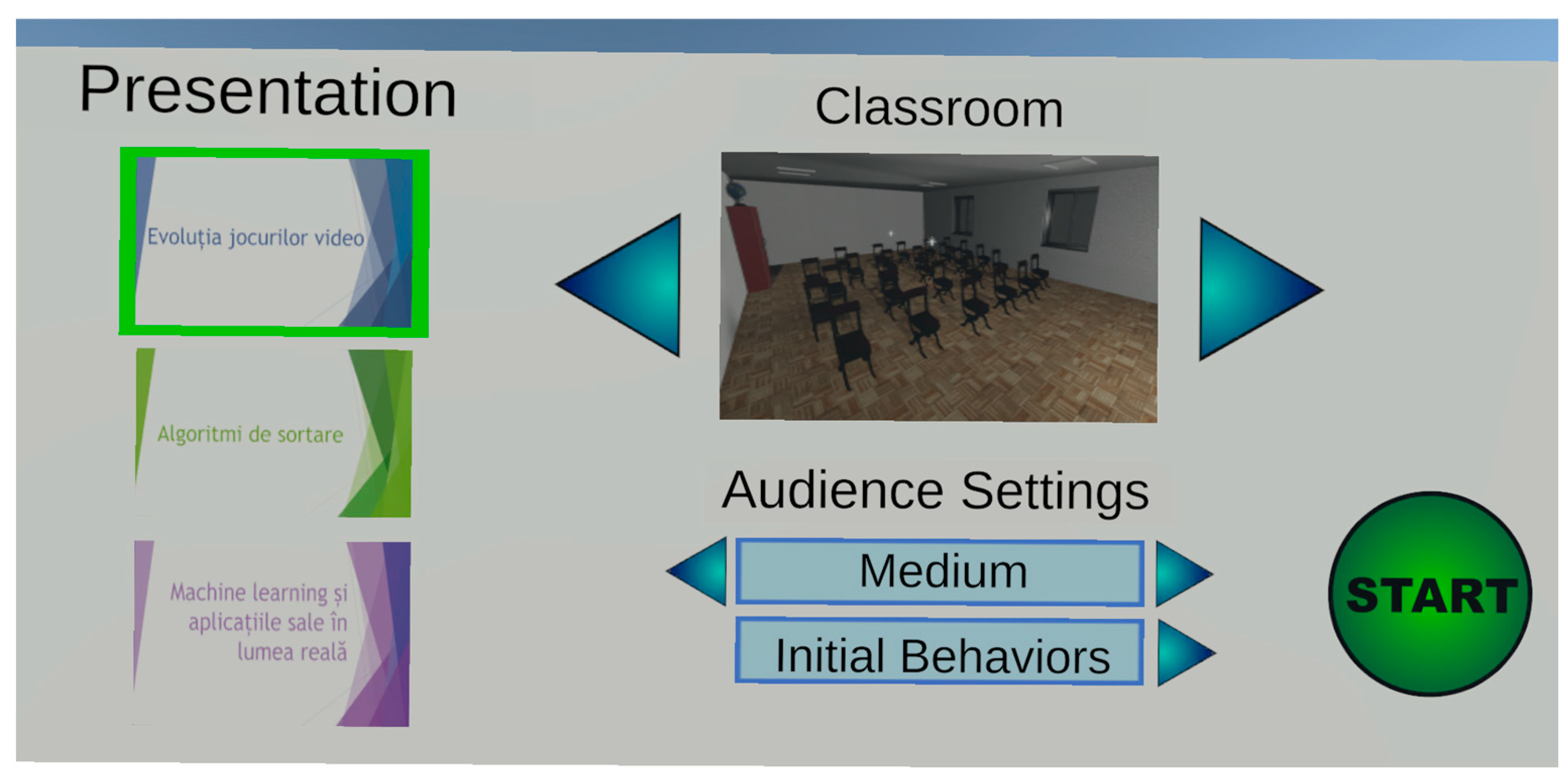

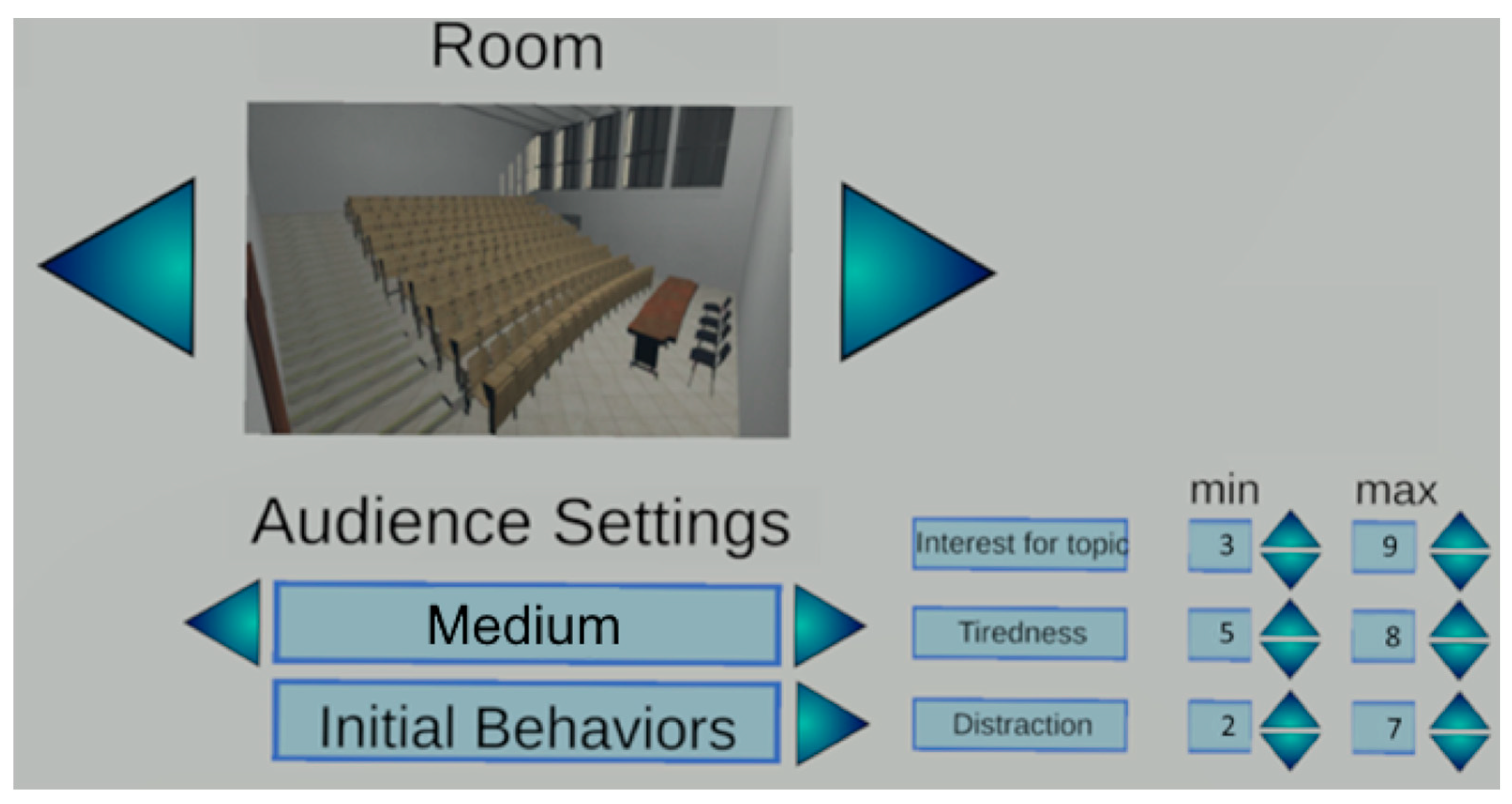

2.2. Customization of the Presentation Session in the Virtual Environment

- Empty room (0% seat occupancy);

- Small audience (25% of seats occupied);

- Medium-sized audience (50% of seats occupied);

- Large audience (75% of seats occupied);

- Full room (100% seat occupancy).

- Interest in the topic that will be presented;

- Fatigue levels;

- Susceptibility to distraction.
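The two lists above (audience size and initial audience traits) can be captured in a small configuration object. The following is a minimal sketch, not the VR4PS implementation: the names `SeatOccupancy`, `SessionConfig`, and `occupied_seats` are hypothetical, the 0 to 100 scale for the traits follows the interval given for the audience metrics, and rounding to the nearest seat is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class SeatOccupancy(Enum):
    """The five audience sizes, as a fraction of seats occupied."""
    EMPTY = 0.0
    SMALL = 0.25
    MEDIUM = 0.50
    LARGE = 0.75
    FULL = 1.0


@dataclass
class SessionConfig:
    occupancy: SeatOccupancy
    interest: float      # starting interest in the topic, 0..100
    fatigue: float       # starting fatigue level, 0..100
    distraction: float   # starting susceptibility to distraction, 0..100

    def __post_init__(self) -> None:
        # Reject values outside the assumed 0..100 scale.
        for name in ("interest", "fatigue", "distraction"):
            value = getattr(self, name)
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be in [0, 100], got {value}")

    def occupied_seats(self, total_seats: int) -> int:
        # Rounding to the nearest seat is an assumption of this sketch.
        return round(total_seats * self.occupancy.value)


config = SessionConfig(SeatOccupancy.MEDIUM, interest=70, fatigue=20, distraction=30)
print(config.occupied_seats(40))
```

Keeping the occupancy levels in an enumeration restricts a session to the five listed options, while the traits remain free numeric values that can evolve during the presentation.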

2.3. Metrics and Scoring

- Hand and head movement: The user's hand and head movements are captured in real time in the 3D virtual environment. The system measures the extent of movement as the distance between the previous and current positions, which indicates the user's range of motion and interaction with the virtual space;

- Head swings: Each time the user shifts focus between the PowerPoint presentation and the virtual audience, it is counted as a head swing, indicating how often the user disconnects from the audience;

- Presentation gaze: This metric is important for understanding user engagement. It measures how long the user looks at the presentation slides rather than at the virtual audience;

- Usage of presentation pointer: The application tracks how long the presentation pointer targets the slide content (Figure 6). This metric distinguishes moments when the presenter actively uses the pointer to highlight important slide content from moments when they speak without engaging with the audience. Analyzed together with gaze direction, it offers deeper insight into user–slide interactions. Pointer usage is tracked while the user is actively looking at the presentation, hence its strong correlation with presentation gaze;

- Voice volume: This is ascertained by measuring the amplitude of microphone-detected sound levels, providing a clear understanding of the user’s audibility;

- Advanced voice analysis: For metrics like voice clarity, rhythm, filler words, and long pauses, our system integrates [23] with Azure’s Speech-to-Text algorithm. This algorithm transcribes the recorded voice into textual content, facilitating subsequent text-based evaluations. For instance, consider the following:

  - Rhythm: assessed as the rate of spoken words per minute;

  - Clarity: defined by the algorithm’s confidence level in accurately transcribing spoken content;

  - Long pauses: evaluated by contrasting silent audio durations against a preset threshold;

  - Filler words: the system identifies these through a string-matching process, comparing the transcribed content against a predefined list of common filler words.
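The text-based voice metrics listed above can be sketched from a transcript with per-word timestamps. This is an illustrative sketch only: the `Word` record is a hypothetical stand-in for the transcription output (it is not the Azure Speech-to-Text response format), the filler list and the 2 s pause threshold are placeholder values, and averaging per-word confidence to obtain clarity is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical values: the actual filler list and pause threshold are not given here.
FILLER_WORDS = {"um", "uh", "like", "basically"}
PAUSE_THRESHOLD_S = 2.0


@dataclass
class Word:
    text: str
    start: float       # seconds from the start of the recording
    end: float
    confidence: float  # transcription confidence, 0..1


def words_per_minute(words: list[Word], elapsed_s: float) -> float:
    """Rhythm: spoken words per minute of presentation time."""
    return len(words) * 60.0 / elapsed_s if elapsed_s > 0 else 0.0


def clarity(words: list[Word]) -> float:
    """Clarity: mean transcription confidence, scaled to 0..100 (assumed)."""
    return 100.0 * sum(w.confidence for w in words) / len(words) if words else 0.0


def count_fillers(words: list[Word]) -> int:
    """Filler words: case-insensitive match against the predefined list."""
    return sum(1 for w in words if w.text.lower().strip(".,!?") in FILLER_WORDS)


def long_pauses(words: list[Word], threshold_s: float = PAUSE_THRESHOLD_S) -> list[float]:
    """Long pauses: gaps between consecutive words that exceed the threshold."""
    gaps = (b.start - a.end for a, b in zip(words, words[1:]))
    return [g for g in gaps if g > threshold_s]


transcript = [
    Word("Um", 0.0, 0.25, 0.5),
    Word("sorting", 0.5, 1.0, 0.5),
    Word("is", 1.25, 1.5, 0.5),
    Word("like", 4.0, 4.25, 0.5),
    Word("magic", 4.5, 5.0, 0.5),
]
print(words_per_minute(transcript, elapsed_s=6.0))
print(count_fillers(transcript), long_pauses(transcript))
```

Matching single tokens keeps the filler check simple, but it cannot detect multi-word fillers such as "you know"; those would require matching against the joined transcript text.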

2.4. Implementation Details

- Audience Proximity: affects the audience metrics proportionally to the distance from the user to each audience member;

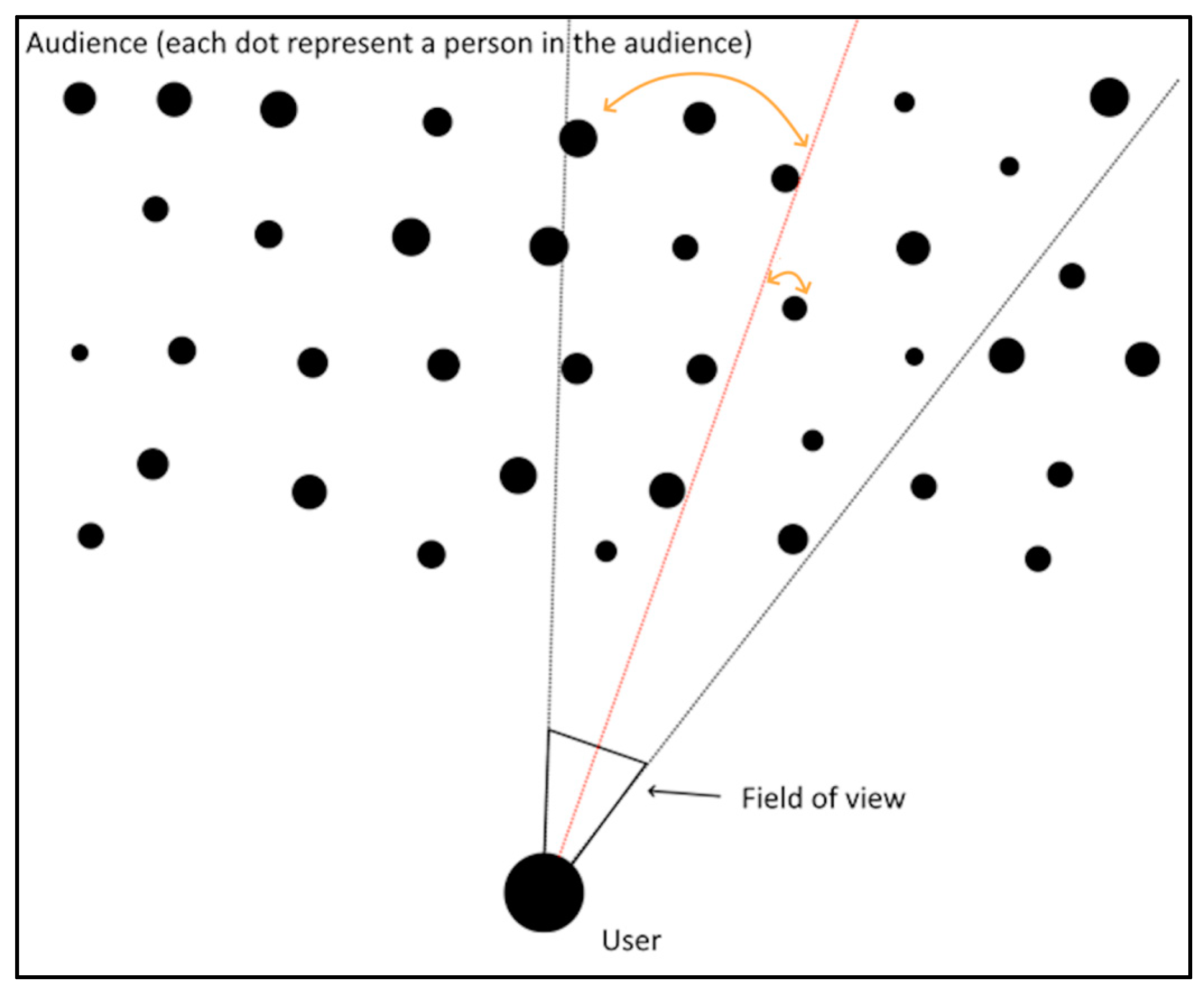

- Audience Gaze: affects the audience metrics proportionally to the viewing angle (Figure 8);

- Audience Interact: The user can point the controller at a specific audience member, simulating the action of addressing that individual directly. This targeted interaction improves that audience member’s metrics, and the effect spreads radially to raise the engagement levels of nearby individuals.
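The three audience effects above can be illustrated with simple falloff functions. The exact formulas are not given in this section, so everything below is an assumption made for illustration: the linear falloffs, the direction of the proximity effect (closer is stronger), the 45° half-angle, the 3-unit radius, and the names `Member`, `proximity_factor`, `gaze_factor`, and `interact` are all hypothetical. The boost of 25 mirrors the interest value listed for Audience Interact, read here as percentage points.

```python
import math
from dataclasses import dataclass


@dataclass
class Member:
    x: float
    z: float
    interest: float = 50.0  # 0..100


def clamp(value: float, low: float = 0.0, high: float = 100.0) -> float:
    return max(low, min(high, value))


def proximity_factor(distance: float, max_distance: float) -> float:
    """1.0 next to the member, falling linearly to 0.0 at max_distance (assumed)."""
    return max(0.0, 1.0 - distance / max_distance)


def gaze_factor(angle_deg: float, half_angle_deg: float = 45.0) -> float:
    """1.0 when looking straight at the member, 0.0 at the cone edge (assumed)."""
    return max(0.0, 1.0 - abs(angle_deg) / half_angle_deg)


def interact(members: list, target: Member, boost: float = 25.0, radius: float = 3.0) -> None:
    """Boost the targeted member and, with linear falloff, members within `radius`."""
    for member in members:
        distance = math.hypot(member.x - target.x, member.z - target.z)
        if distance <= radius:
            member.interest = clamp(member.interest + boost * (1.0 - distance / radius))


front = Member(0.0, 0.0)
neighbour = Member(1.5, 0.0)
far = Member(10.0, 0.0)
interact([front, neighbour, far], target=front)
print(front.interest, neighbour.interest, far.interest)
```

In this sketch the targeted member receives the full boost, a neighbour halfway to the radius receives half of it, and members beyond the radius are unaffected.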

2.5. Evaluation

- ‘The Evolution of Video Games’ (Presentation 1);

- ‘An Overview of Sorting Algorithms’ (Presentation 2);

- ‘Machine Learning and Its Practical Applications’ (Presentation 3).

3. Results and Discussion

- Most participants spent between 10 and 60 min preparing each presentation. The averages for Presentation 1, Presentation 2, and Presentation 3 were 29, 48, and 51 min, respectively;

- A high level of mastery over the presentation subject was indicated, with scores mainly falling between seven and nine;

- The physical well-being of participants varied: most felt well, but some reported feeling average;

- Expectations regarding their performance were generally optimistic, with most predicting a score of around seven.

Evaluation Limitations

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Anderson, P.L.; Price, M.S.; Edwards, S.M.; Obasaju, M.A.; Schmertz, S.K.; Zimand, E.; Calamaras, M.R. Virtual reality exposure therapy for social anxiety disorder: A randomized controlled trial. J. Consult. Clin. Psychol. 2013, 81, 751–760.

- Botella, C.; Gallego, M.; Garcia-Palacios, A.; Botella, V.; Baños, R.; Quero, S.; Alcañiz, M. An Internet-Based Self-Help Treatment for Fear of Public Speaking: A Controlled Trial. CyberPsychol. Behav. Soc. Netw. 2010, 13, 407–421.

- Harris, S.; Kemmerling, R.; North, M. Brief Virtual Reality Therapy for Public Speaking Anxiety. CyberPsychol. Behav. Impact Internet Multimed. Virtual Real. Behav. Soc. 2002, 5, 543–550.

- Kimani, E. A Sensor-based Framework for Real-time Detection and Alleviation of Public Speaking Anxiety. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), Cambridge, UK, 3–6 September 2019; pp. 55–59.

- Jinga, N. VR Training Systems for Public Speaking—A Qualitative Survey. In Proceedings of the International Scientific Conference eLearning and Software for Education, Bucharest, Romania, 27–28 April 2021; pp. 174–181.

- Palmas, F.; Reinelt, R.; Cichor, J.E.; Plecher, D.A.; Klinker, G. Virtual Reality Public Speaking Training: Experimental Evaluation of Direct Feedback Technology Acceptance. In Proceedings of the 2021 IEEE Virtual Reality and 3D User Interfaces (VR), Lisboa, Portugal, 27 March–1 April 2021; pp. 463–472.

- Palmas, F.; Cichor, J.; Plecher, D.A.; Klinker, G. Acceptance and Effectiveness of a Virtual Reality Public Speaking Training. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; pp. 363–371.

- Salkevicius, J.; Navickas, L. Battling the Fear of Public Speaking: Designing Software as a Service Solution for a Virtual Reality Therapy. In Proceedings of the 2018 6th International Conference on Future Internet of Things and Cloud Workshops (FiCloudW), Barcelona, Spain, 6–8 August 2018; pp. 209–213.

- El-Yamri, M.; Romero-Hernandez, A.; Gonzalez-Riojo, M.; Manero, B. Emotions-Responsive Audiences for VR Public Speaking Simulators Based on the Speakers’ Voice. In Proceedings of the 2019 IEEE 19th International Conference on Advanced Learning Technologies (ICALT), Maceió, Brazil, 15–18 July 2019; pp. 349–353.

- Tudor, A.D.; Poeschl, S.; Doering, N. Virtual audience customization for public speaking training procedures. In Proceedings of the 2013 IEEE Virtual Reality (VR), Lake Buena Vista, FL, USA, 18–20 March 2013; pp. 61–62.

- SalsabilaHadi, S.A.; Putri, M.S.; Ismiarti, F.; Santoso Gunawan, A.A.; Pramudya, F.S. A Systematic Literature Review: Virtual Reality’s in Decreasing Public Speaking Anxiety (PSA). In Proceedings of the 2023 International Conference on Information Technology and Computing (ICITCOM), Yogyakarta, Indonesia, 1–2 December 2023; pp. 1–5.

- Yadav, M.; Sakib, M.N.; Feng, K.; Chaspari, T.; Behzadan, A. Virtual reality interfaces and population-specific models to mitigate public speaking anxiety. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, UK, 3–6 September 2019; pp. 1–7.

- Yuen, E.K.; Goetter, E.M.; Stasio, M.J.; Ash, P.; Mansour, B.; McNally, E.; Sanchez, M.; Hobar, E.; Forte, S.; Zulaica, K.; et al. A pilot of acceptance and commitment therapy for public speaking anxiety delivered with group videoconferencing and virtual reality exposure. J. Context. Behav. Sci. 2019, 12, 47–54.

- Siddiqui, H.; Irfan, H.; Lakhani, A.S.; Ahmed, B.; Shaikh, S.; Movania, M.M.; Farhan, M. Manifest: Public Speaking Training Using Virtual Reality. In Proceedings of the 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Sydney, Australia, 16–20 October 2023; pp. 468–473.

- Arushi; Dillon, R.; Teoh, A.N. Real-time Stress Detection Model and Voice Analysis: An Integrated VR-based Game for Training Public Speaking Skills. In Proceedings of the 2021 IEEE Conference on Games (CoG), Copenhagen, Denmark, 17–20 August 2021; pp. 1–4.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Fear Level Classification Based on Emotional Dimensions and Machine Learning Techniques. Sensors 2019, 19, 1738.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. An Investigation of Various Machine and Deep Learning Techniques Applied in Automatic Fear Level Detection and Acrophobia Virtual Therapy. Sensors 2020, 20, 496.

- Bălan, O.; Moise, G.; Petrescu, L.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Symmetry 2019, 12, 21.

- Mitruț, O.; Moldoveanu, A.; Petrescu, L.; Petrescu, C.; Moldoveanu, F. A Review of Virtual Therapists in Anxiety and Phobias Alleviating Applications. In Virtual, Augmented and Mixed Reality; Chen, J.Y.C., Fragomeni, G., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 71–79.

- Petrescu, L.; Petrescu, C.; Mitruț, O.; Moise, G.; Moldoveanu, A.; Moldoveanu, F.; Leordeanu, M. Integrating Biosignals Measurement in Virtual Reality Environments for Anxiety Detection. Sensors 2020, 20, 7088.

- Petrescu, L.; Petrescu, C.; Oprea, A.; Mitruț, O.; Moise, G.; Moldoveanu, A.; Moldoveanu, F. Machine Learning Methods for Fear Classification Based on Physiological Features. Sensors 2021, 21, 4519.

- Stanica, I.; Dascalu, M.I.; Bodea, C.N.; Moldoveanu, A.D.B. VR Job Interview Simulator: Where Virtual Reality Meets Artificial Intelligence for Education. In Proceedings of the 2018 Zooming Innovation in Consumer Technologies Conference (ZINC), Novi Sad, Serbia, 30–31 May 2018; pp. 9–12.

- Jinga, N. Voice Metrics for Discourse Quality Analysis. UPB Sci. Bull. Ser. C 2023, 85, 3–12.

- Moldoveanu, A.; Mitrut, O.; Jinga, N.; Petrescu, C.; Moldoveanu, F.; Asavei, V.; Anghel, A.M.; Petrescu, L. Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback. Appl. Sci. 2023, 13, 10365.

| Metric Name | Metric Description | Metric Interval | Optimal Metric Values | Metric Weight |
|---|---|---|---|---|
| Volume | Obtained directly from microphone’s input. | [0, 100] | [40, 60] | +0.15 |
| Rhythm | Obtained by dividing “presentation elapsed time” by the “current number of spoken words”. | [0, 50] | [19, 21] | +0.20 |
| Clarity | Obtained directly from the Speech-to-Text algorithm “confidence” parameter. | [0, 100] | [85, 100] | +0.15 |
| Filler words | The number of user’s spoken words that match those from the filler words’ dictionary. | [0, word_count] | [0, 15% × word_count] | −0.10 |
| Long pauses | Computed by checking the timestamp difference of the current spoken word and the previous spoken word against a set threshold. | [0, presentation_elapsed_time] | [0, 10% × presentation_elapsed_time] | −0.05 |
| Head movement | Computed by subtracting the headset’s previous position from the headset’s current position (Unity unit distance). | [0, 25] | [5, 8] | +0.05 |
| Hand movement | Computed by subtracting the controller’s previous position from the current position (Unity unit distance). | [0, 40] | [10, 15] | +0.10 |
| Presentation gaze | The amount of time in seconds the user spent looking at the presentation. | [0, presentation_elapsed_time] | [0, 15% × presentation_elapsed_time] | −0.15 |
| Head swings | The number of times the user turned back and forth between the presentation slides and the audience. The maximum value is obtained if the user turned his head every second. | [0, presentation_elapsed_time] | [0, 15% × presentation_elapsed_time] | −0.15 |
| Presentation pointer | The amount of time in seconds the pointer was used in the direction of the slides while the user was looking at the presentation. | [0, presentation_elapsed_time] | [0, 25% × presentation_elapsed_time] | +0.25 |
| Audience interest in topic | Computed as an average from all the audience members. Each audience member has a starting value as set by the user (Figure 5). Each of these values can evolve over time and is influenced by the user metrics (the above metrics). These values are also influenced by other computations such as Audience Proximity, Audience Gaze, and Audience Interact (detailed below). | [0, 100] | 100 | +0.40 |
| Audience distraction | Same description as for audience interest in topic. | [0, 100] | 0 | −0.15 |
| Audience tiredness | Same description as for audience interest in topic. | [0, 100] | 0 | −0.15 |
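The movement and head-swing rows of the table above describe simple computations over tracked samples. The sketch below illustrates them under stated assumptions: positions are plain `(x, y, z)` tuples in Unity units, the gaze log is a hypothetical list of per-sample labels, and every change between slides and audience counts as one swing (the text does not say whether a swing is one transition or a full round trip). The function names are illustrative, not taken from VR4PS.

```python
from __future__ import annotations

import math


def path_length(positions: list[tuple[float, float, float]]) -> float:
    """Sum of distances between consecutive tracked positions (Unity units)."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def count_head_swings(gaze_targets: list[str]) -> int:
    """Count changes between 'slides' and 'audience' in a per-sample gaze log.

    Samples with any other label (for example, looking at the floor) are ignored.
    """
    relevant = [t for t in gaze_targets if t in ("slides", "audience")]
    return sum(1 for a, b in zip(relevant, relevant[1:]) if a != b)


def in_optimal_range(value: float, low: float, high: float) -> bool:
    """True if a metric value lies inside its optimal interval."""
    return low <= value <= high


head_positions = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
gaze_log = ["audience", "audience", "slides", "other", "slides", "audience"]

print(path_length(head_positions))
print(count_head_swings(gaze_log))
print(in_optimal_range(6.5, 5, 8))  # head movement against its optimal interval [5, 8]
```

Accumulating per-frame distances gives the total distance travelled rather than the net displacement, so a presenter who paces back and forth still registers movement.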

| Metric Name | Turning Point | Audience Interest in Topic | Audience Distraction | Audience Tiredness |
|---|---|---|---|---|
| Volume | 20 or 80 | 2% | −2% | - |
| Rhythm | 15 or 25 | 2% | −2% | - |
| Clarity | 75 | 2% | −2% | - |
| Filler words | 25% × word_count | - | −2% | −2% |
| Long pauses | 20% × word_count | - | −2% | −2% |
| Head movement | 3 or 10 | - | −3% | - |
| Hand movement | 5 or 20 | - | −3% | - |
| Presentation gaze | 25% × presentation_elapsed_time | - | −5% | −3% |
| Head swings | 25% × presentation_elapsed_time | 3% | −3% | - |
| Presentation pointer | 10% × presentation_elapsed_time | 5% | −3% | - |
| Audience Proximity | - | 1% | −1% | - |
| Audience Gaze | - | 1% | −1% | - |
| Audience Interact | - | 25% | −25% | −5% |

| Question | Presentation 1 | Presentation 2 | Presentation 3 | % Improvement from Presentation 1 to Presentation 3 |
|---|---|---|---|---|
| Time spent preparing (minutes) | 29 | 48 | 51 | 43.13% |
| Mastery of the presentation’s subject | 6.40 | 7.88 | 7.12 | 10.11% |
| Anticipated performance | 6.72 | 7.56 | 7.24 | 7.18% |
| Post-performance rating | 7.00 | 7.44 | 7.92 | 11.61% |
| How much the application helped | 8.60 | 8.52 | 9.12 | 5.70% |
| How intuitive was the application? | 9.27 | 9.32 | 9.30 | 0.32% |

| Metric | Presentation 1 | Presentation 2 | Presentation 3 | % Improvement from Presentation 1 to Presentation 3 |
|---|---|---|---|---|
| Time speaking (%) | 94.84 | 95.36 | 95.87 | 1.07 |
| Filler words (%) | 16.30 | 14.97 | 12.43 | 23.74 |
| Head swings (per minute) | 7.87 | 7.56 | 7.23 | 8.13 |
| Time looking at the presentation (%) | 58.96 | 68.13 | 55.76 | 5.42 |
| Pointer usage while looking at the presentation (%) | 24.60 | 29.92 | 30.62 | 19.66 |
| Hand movement (unit distance/min) | 13.92 | 12.86 | 14.94 | 6.82 |
| Head movement (unit distance/min) | 9.56 | 8.23 | 10.53 | 9.21 |
| Score (Formula (1)) | 7.57 | 8.31 | 8.56 | 11.56 |
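The formula behind the last column of the two results tables is not stated in this section. The reported values agree, to within 0.01, with the absolute change between Presentation 1 and Presentation 3 divided by the larger of the two values. This is an observation about the published numbers, not a formula taken from the paper, and `relative_change` is a hypothetical helper name.

```python
def relative_change(first: float, last: float) -> float:
    """Absolute change between two values, as a percentage of the larger one."""
    return abs(last - first) / max(first, last) * 100.0


# (Presentation 1, Presentation 3, reported % improvement), copied from the tables.
rows = {
    "Mastery of the presentation's subject": (6.40, 7.12, 10.11),
    "Filler words (%)": (16.30, 12.43, 23.74),
    "Pointer usage while looking at the presentation (%)": (24.60, 30.62, 19.66),
    "Score (Formula (1))": (7.57, 8.56, 11.56),
}

for name, (p1, p3, reported) in rows.items():
    computed = relative_change(p1, p3)
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Under this reading, the percentage is relative to whichever presentation had the higher value, so it applies equally to metrics where a decrease is the improvement (such as filler words) and to those where an increase is.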

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jinga, N.; Anghel, A.M.; Moldoveanu, F.; Moldoveanu, A.; Morar, A.; Petrescu, L. Overcoming Fear and Improving Public Speaking Skills through Adaptive VR Training. Electronics 2024, 13, 2042. https://doi.org/10.3390/electronics13112042
