EEG_DMNet: A Deep Multi-Scale Convolutional Neural Network for Electroencephalography-Based Driver Drowsiness Detection

Abstract

1. Introduction

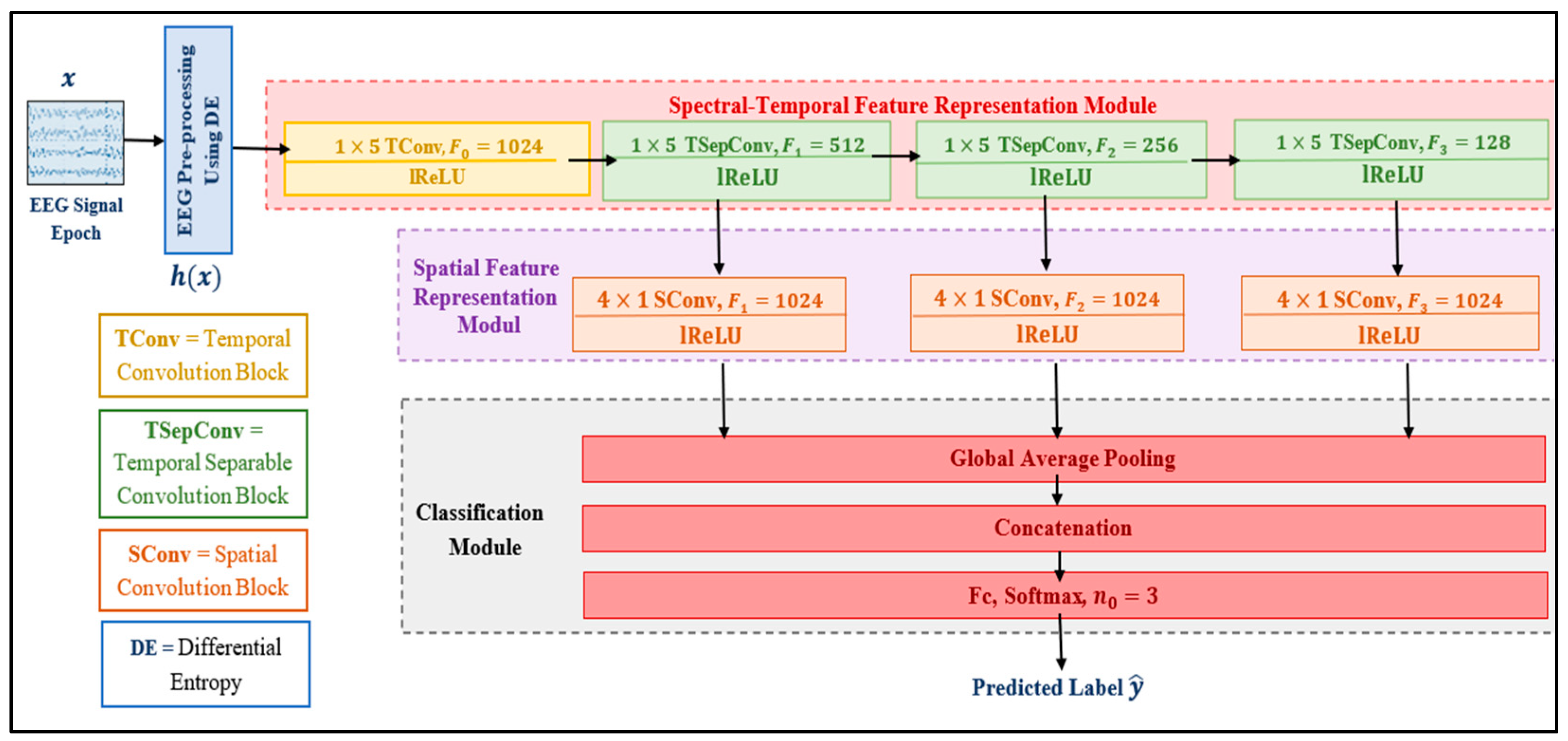

- We proposed a deep multi-scale CNN model (EEG_DMNet) for EEG-based driver drowsiness detection. The method takes an EEG trial as input and preprocesses it using differential entropy (DE). It then extracts multi-scale spectral-temporal features using 1D temporal convolutions and computes spatial patterns using 1D spatial convolutions.

- We conducted several experiments to evaluate the method for drowsiness detection and compared it with state-of-the-art methods. With 10-fold evaluation it reached a mean accuracy of 97.03% on the combined noon and night trials, higher than the accuracies reported for the compared methods.

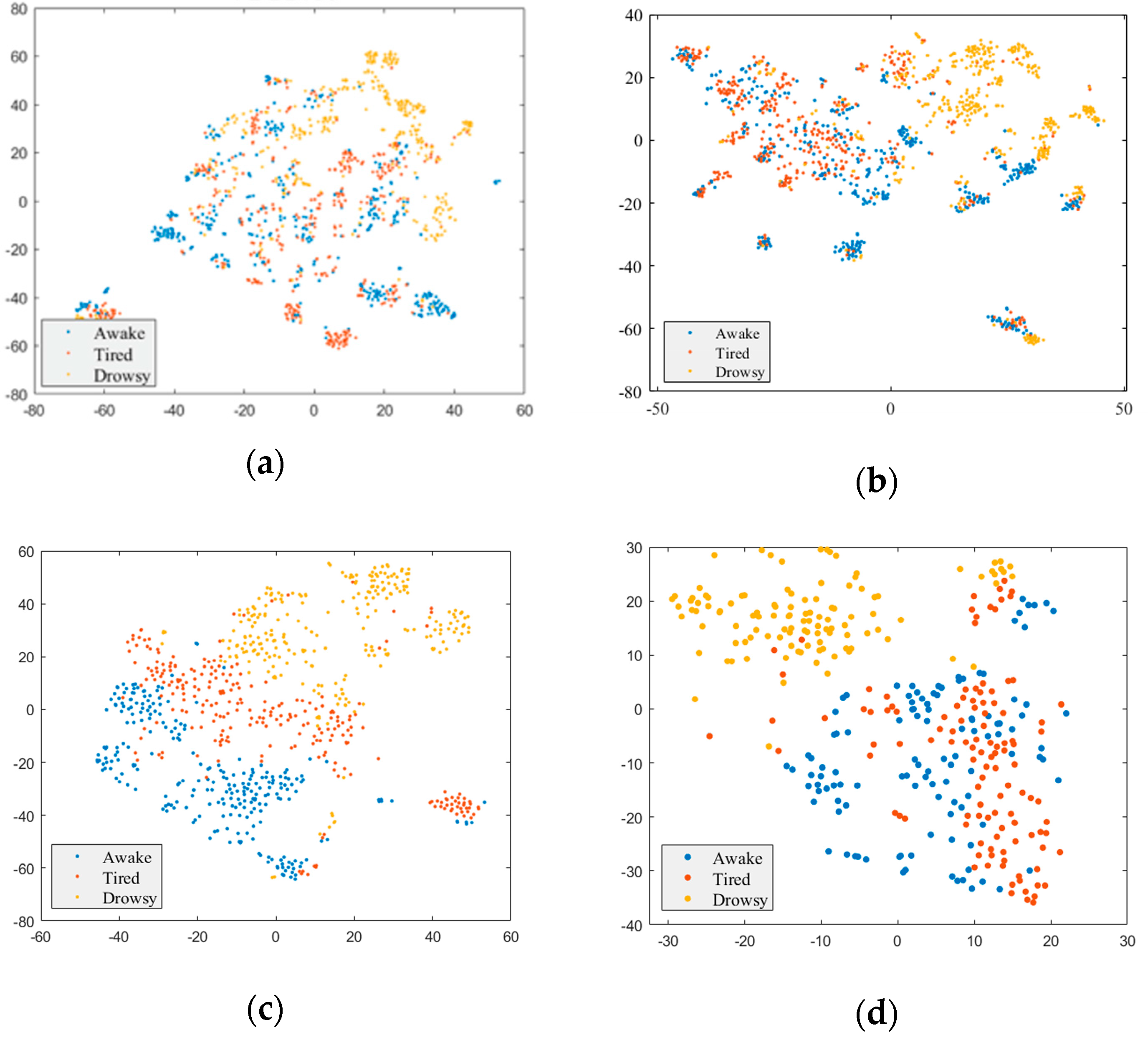

- We analyzed and visualized the features learned by EEG_DMNet; the analysis indicates that it learns more discriminative features than the state-of-the-art models.
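The contributions above name differential entropy (DE) as the preprocessing step, but the formula is not given in this text. The following is a minimal sketch that assumes the commonly used Gaussian closed form, DE = ½ ln(2πeσ²), applied to one band-filtered segment. The function name `differential_entropy` and the toy inputs are illustrative; band-pass filtering and windowing are omitted, and this is not claimed to be the authors' exact pipeline.

```python
import math
from statistics import pvariance


def differential_entropy(samples):
    """DE of a segment under a Gaussian assumption: 0.5 * ln(2 * pi * e * variance)."""
    variance = pvariance(samples)  # population variance of the segment
    if variance <= 0:
        raise ValueError("segment must have non-zero variance")
    return 0.5 * math.log(2 * math.pi * math.e * variance)


# Toy segments (hypothetical values): doubling the amplitude adds ln(2) to the DE.
de_small = differential_entropy([-1.0, 1.0])
de_large = differential_entropy([-2.0, 2.0])
```

Under this assumption, DE depends only on the logarithm of the signal variance in the chosen band, which compresses the dynamic range of band-power features.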

2. Literature Review

2.1. Hand-Engineered (HE) Feature-Based Methods

2.2. Deep Learning (DL)-Based Methods

3. Materials and Methods

3.1. Problem Formulation

3.2. Proposed Deep Multi-Scale CNN Model—EEG_DMNet

3.2.1. Spectral-Temporal Feature Extraction Mapping

3.2.2. Spatial Feature Extraction Mapping

3.2.3. Classification Function

4. Experiments and Results

4.1. Dataset Description

4.2. Training and Evaluation Setup

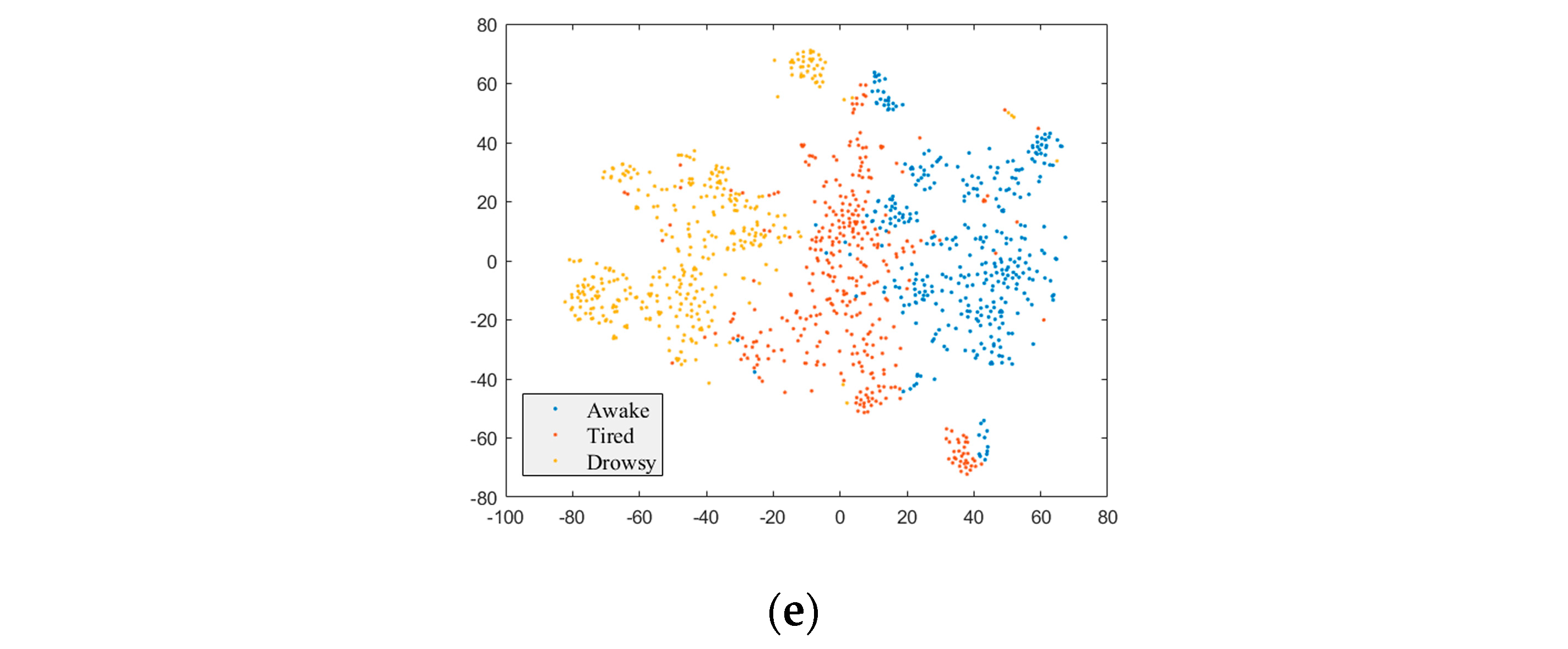

4.2.1. Implementation and Training

4.2.2. Evaluation Protocol

4.3. Ablation Study

4.3.1. Raw Data vs. DE Preprocessing

4.3.2. The Impact of the Number of Filters

4.3.3. The Impact of Activation Functions

4.3.4. The Impact of Spectral-Temporal and Spatial Blocks

4.3.5. The Impact of Scales

4.3.6. The Impact of RNN Layers

4.4. Experiment Results

4.5. Comparison with the State-of-the-Art Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Studying the Prevalence of Drowsiness among Car Drivers in Saudi Arabia and Its Impact on Accidents. University Sleep Disorders Center at King Saud University. Available online: https://news.ksu.edu.sa/ar/node/104565 (accessed on 24 May 2022).

- Tefft, B. The Prevalence and Impact of Drowsy Driving. AAA Foundation for Traffic Safety. Available online: https://aaafoundation.org/prevalence-impact-drowsy-driving/ (accessed on 30 April 2022).

- Akerstedt, T.; Bassetti, C.; Cirignotta, F.; García-Borreguero, D.; Gonçalves, M.; Horne, J.; Léger, D.; Partinen, M.; Penzel, T.; Philip, P.; et al. Sleepiness at the Wheel; The French Institute of Sleep and Vigilance: Paris, France, 2013.

- Ko, W.; Jeon, E.; Jeong, S.; Suk, H.I. Multi-scale Neural Network for EEG Representation Learning in BCI. IEEE Comput. Intell. Mag. 2021, 16, 31–45.

- Zhu, M.; Chen, J.; Li, H.; Liang, F.; Han, L.; Zhang, Z. Vehicle driver drowsiness detection method using wearable EEG based on convolution neural network. Neural Comput. Appl. 2021, 33, 13965–13980.

- Gharagozlou, F.; Saraji, G.N.; Mazloumi, A.; Nahvi, A.; Nasrabadi, A.M.; Foroushani, A.R.; Kheradmand, A.A.; Ashouri, M.; Samavati, M. Detecting Driver Mental Fatigue Based on EEG Alpha Power Changes during Simulated Driving. Iran. J. Public Health 2015, 44, 1693–1700.

- Lin, C.T.; Wu, R.C.; Liang, S.F.; Chao, W.H.; Chen, Y.J.; Jung, T.P. EEG-based drowsiness estimation for safety driving using independent component analysis. IEEE Trans. Circuits Syst. I Regul. Pap. 2005, 52, 2726–2738.

- Paulo, J.R.; Pires, G.; Nunes, U.J. Cross-Subject Zero Calibration Driver’s Drowsiness Detection: Exploring Spatiotemporal Image Encoding of EEG Signals for Convolutional Neural Network Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 905–915.

- Cui, Y.; Xu, Y.; Wu, D. EEG-Based Driver Drowsiness Estimation Using Feature Weighted Episodic Training. arXiv 2019, arXiv:1909.11456.

- Ko, W.; Yoon, J.; Kang, E.; Jun, E.; Choi, J.-S.; Suk, H.-I. Deep Recurrent Spatio-Temporal Neural Network for Motor Imagery based BCI. In Proceedings of the 6th International Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 15–17 January 2018; AAAI Press: Washington, DC, USA, 2018; pp. 1–3.

- Shen, M.; Zou, B.; Li, X.; Zheng, Y.; Zhang, L. Tensor-Based EEG Network Formation and Feature Extraction for Cross-Session Driving Drowsiness Detection. In Proceedings of the 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017, 38, 5391–5420.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A Compact Convolutional Network for EEG-based Brain-Computer Interfaces. J. Neural Eng. 2018, 15, 056013.

- Chen, J.; Wang, S.; He, E.; Wang, H.; Wang, L. Recognizing drowsiness in young men during real driving based on electroencephalography using an end-to-end deep learning approach. Biomed. Signal Process. Control 2021, 69, 102792.

- Cui, J.; Lan, Z.; Sourina, O.; Müller-Wittig, W. EEG-Based Cross-Subject Driver Drowsiness Recognition with an Interpretable Convolutional Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 7921–7933.

- Jayaram, V.; Alamgir, M.; Altun, Y.; Scholkopf, B.; Grosse-Wentrup, M. Transfer Learning in Brain-Computer Interfaces. IEEE Comput. Intell. Mag. 2016, 11, 20–31.

- Arico, P.; Borghini, G.; Di Flumeri, G.; Sciaraffa, N.; Babiloni, F. Passive BCI beyond the lab: Current trends and future directions. Physiol. Meas. 2018, 39, 08TR02.

- Haufe, S.; Meinecke, F.; Görgen, K.; Dähne, S.; Haynes, J.-D.; Blankertz, B.; Bießmann, F. On the interpretation of weight vectors of linear models in multivariate neuroimaging. Neuroimage 2014, 87, 96–110.

- Orrù, G.; Micheletto, M.; Terranova, F.; Marcialis, G.L. Electroencephalography Signal Processing Based on Textural Features for Monitoring the Driver’s State by a Brain-Computer Interface. arXiv 2020, arXiv:2010.06412.

- Khare, S.K.; Bajaj, V. Entropy-Based Drowsiness Detection Using Adaptive Variational Mode Decomposition. IEEE Sens. J. 2021, 21, 6421–6428.

- Khare, S.K.; Bajaj, V. Optimized Tunable Q Wavelet Transform Based Drowsiness Detection from Electroencephalogram Signals. IRBM 2022, 43, 13–21.

- Shen, M.; Zou, B.; Li, X.; Zheng, Y.; Li, L.; Zhang, L. Multi-source signal alignment and efficient multi-dimensional feature classification in the application of EEG-based subject-independent drowsiness detection. Biomed. Signal Process. Control 2021, 70, 103023.

- Min, J.; Xiong, C.; Zhang, Y.; Cai, M. Driver fatigue detection based on prefrontal EEG using multi-entropy measures and hybrid model. Biomed. Signal Process. Control 2021, 69, 102857.

- Chen, K.; Liu, Z.; Liu, Q.; Ai, Q.; Ma, L. EEG-based mental fatigue detection using linear prediction cepstral coefficients and Riemann spatial covariance matrix. J. Neural Eng. 2022, 19, 066021.

- Kim, K.J.; Lim, K.T.; Baek, J.W.; Shin, M. Low-Cost Real-Time Driver Drowsiness Detection based on Convergence of IR Images and EEG Signals. In Proceedings of the 3rd International Conference on Artificial Intelligence in Information and Communication, ICAIIC, Jeju Island, Republic of Korea, 13–16 April 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 438–443.

- Jia, H.; Xiao, Z.; Ji, P. End-to-end fatigue driving EEG signal detection model based on improved temporal-graph convolution network. Comput. Biol. Med. 2023, 152, 106431.

- Turkoglu, M.; Alcin, O.F.; Aslan, M.; Al-Zebari, A.; Sengur, A. Deep rhythm and long short term memory-based drowsiness detection. Biomed. Signal Process. Control 2021, 65, 102364.

- Tang, J.; Li, X.; Yang, Y.; Zhang, W. Euclidean space data alignment approach for multi-channel LSTM network in EEG based fatigue driving detection. Electron. Lett. 2021, 57, 836–838.

- Wang, Z.; Zhao, Y.; He, Y.; Zhang, J. Phase lag index-based graph attention networks for detecting driving fatigue. Rev. Sci. Instrum. 2021, 92, 094105.

- Budak, U.; Bajaj, V.; Akbulut, Y.; Atila, O.; Sengur, A. An effective hybrid model for EEG-based drowsiness detection. IEEE Sens. J. 2019, 19, 7624–7631.

- Ko, W.; Oh, K.; Jeon, E.; Suk, H.-I. VIGNet: A Deep Convolutional Neural Network for EEG-based Driver Vigilance Estimation. In Proceedings of the 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 26–28 February 2020; IOP Publishing Ltd.: Bristol, UK, 2020; pp. 1–3.

- Ullah, I.; Hussain, M.; Qazi, E.-U.; Aboalsamh, H. An automated system for epilepsy detection using EEG brain signals based on deep learning approach. Expert Syst. Appl. 2018, 107, 61–71.

- Huo, X.-Q.; Zheng, W.-L.; Lu, B.-L. Driving Fatigue Detection with Fusion of EEG and Forehead EOG. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 897–904.

- Shi, L.-C.; Lu, B.-L. Off-Line and On-Line Vigilance Estimation Based on Linear Dynamical System and Manifold Learning. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 6587–6590.

- Cui, J.; Lan, Z.; Liu, Y.; Li, R.; Li, F.; Sourina, O.; Müller-Wittig, W. A compact and interpretable convolutional neural network for cross-subject driver drowsiness detection from single-channel EEG. Methods 2021, 202, 173–184.

- Hwang, S.; Park, S.; Kim, D.; Lee, J.; Byun, H. Mitigating inter-subject brain signal variability for EEG-based driver fatigue state classification. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 990–994.

- Zheng, W.-L.; Lu, B.-L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.

- Zhang, N.; Zheng, W.L.; Liu, W.; Lu, B.L. Continuous vigilance estimation using LSTM neural networks. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2016; pp. 530–537.

| Module | Block | Input Size | Stride | Padding | Filter Size | Number of Filters | Output Size | No. of Parameters |
|---|---|---|---|---|---|---|---|---|
| Spectral-Temporal Feature Representation | TConv1 | 4 × 25 × 1 | 1 × 1 | 0 × 0 | 1 × 5 | F0 = 1024 | 4 × 21 × 1024 | 6144 |
| Spectral-Temporal Feature Representation | TSepConv1 | 4 × 21 × 1024 | 1 × 1 | 0 × 0 | 1 × 5 | F1 = 512 | 4 × 17 × 512 | 529,408 |
| Spectral-Temporal Feature Representation | TSepConv2 | 4 × 17 × 512 | 1 × 1 | 0 × 0 | 1 × 5 | F2 = 256 | 4 × 13 × 256 | 133,632 |
| Spectral-Temporal Feature Representation | TSepConv3 | 4 × 13 × 256 | 1 × 1 | 0 × 0 | 1 × 5 | F3 = 128 | 4 × 9 × 128 | 34,048 |
| Spatial Feature Representation | SConv1 | 4 × 17 × 512 | 1 × 1 | 0 × 0 | 4 × 1 | F1,4 = 1024 | 1 × 17 × 1024 | 2,098,176 |
| Spatial Feature Representation | SConv2 | 4 × 13 × 256 | 1 × 1 | 0 × 0 | 4 × 1 | F2,4 = 1024 | 1 × 13 × 1024 | 1,049,600 |
| Spatial Feature Representation | SConv3 | 4 × 9 × 128 | 1 × 1 | 0 × 0 | 4 × 1 | F3,4 = 1024 | 1 × 9 × 1024 | 525,312 |
| Classification | Global Average Pooling (scale 1) | 1 × 17 × 1024 | - | - | - | - | 1 × 1 × 1024 | NA |
| Classification | Global Average Pooling (scale 2) | 1 × 13 × 1024 | - | - | - | - | 1 × 1 × 1024 | NA |
| Classification | Global Average Pooling (scale 3) | 1 × 9 × 1024 | - | - | - | - | 1 × 1 × 1024 | NA |
| Classification | Concatenation | 3 inputs of 1 × 1 × 1024 | - | - | - | - | 1 × 1 × 3072 | NA |
| Classification | FC | 1 × 1 × 3072 | - | - | - | Number of classes = 3 (awake, tired, or drowsy) | 1 × 1 × 3 | 9219 |
| Total No. of parameters | | | | | | | | 4,385,539 |
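The output sizes and parameter counts in the table above can be cross-checked with simple arithmetic. The sketch below is an illustration, not the authors' code: the helper names are hypothetical, and it assumes stride 1, no padding, biased standard convolutions, and bias-free depthwise-separable convolutions. Those assumptions are inferred because they reproduce the listed numbers.

```python
def conv_out_len(length, kernel, stride=1, padding=0):
    """Output length of a 1D convolution along one axis."""
    return (length + 2 * padding - kernel) // stride + 1


def conv_params(kh, kw, in_ch, out_ch, bias=True):
    """Weights (plus optional biases) of a standard convolution."""
    return kh * kw * in_ch * out_ch + (out_ch if bias else 0)


def sep_conv_params(kh, kw, in_ch, out_ch):
    """Depthwise kernel plus 1 x 1 pointwise kernel, assumed bias-free."""
    return kh * kw * in_ch + in_ch * out_ch


filters = [1024, 512, 256, 128]      # F0..F3 from the table
n_channels, length = 4, 25           # input of size 4 x 25 x 1
spatial_filters, n_classes = 1024, 3

params = {"TConv1": conv_params(1, 5, 1, filters[0])}
length = conv_out_len(length, 5)     # 25 -> 21 after TConv1

scale_lengths = []
for i in range(1, 4):
    length = conv_out_len(length, 5)  # each temporal block shortens the axis by 4
    scale_lengths.append(length)
    params[f"TSepConv{i}"] = sep_conv_params(1, 5, filters[i - 1], filters[i])
    # The spatial convolution spans all 4 channels at this scale.
    params[f"SConv{i}"] = conv_params(n_channels, 1, filters[i], spatial_filters)

# Three pooled 1024-d vectors are concatenated before the FC layer.
params["FC"] = 3 * spatial_filters * n_classes + n_classes
total = sum(params.values())
```

Under these assumptions the three scales have temporal lengths 17, 13, and 9, and the per-layer counts sum to the 4,385,539 parameters listed in the table. The spatial convolutions account for most of that total.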

| Data Type | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| Raw EEG data | 78.82 | 68.23 | 84.12 | 67.98 | 68.71 |
| DE Preprocessing | 94.35 | 91.52 | 95.76 | 91.52 | 91.52 |
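The result tables report Acc, Sen, Spe, F1 Score, and Pre for a three-class problem (awake, tired, drowsy). The definitions are not given in this text. The reported values are consistent with macro-averaged one-vs-rest metrics over roughly balanced classes, which is an inference from the numbers rather than a stated fact. A minimal sketch under that assumption, with a hypothetical confusion matrix:

```python
def macro_metrics(cm):
    """Macro-averaged one-vs-rest metrics (in %) from a square confusion matrix.

    cm[i][j] = number of samples of true class i predicted as class j.
    Assumes every class has at least one true and one predicted sample.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    sums = {"Acc": 0.0, "Sen": 0.0, "Spe": 0.0, "F1": 0.0, "Pre": 0.0}
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(k)) - tp
        tn = total - tp - fn - fp
        recall = tp / (tp + fn)
        precision = tp / (tp + fp)
        sums["Acc"] += (tp + tn) / total
        sums["Sen"] += recall
        sums["Spe"] += tn / (tn + fp)
        sums["Pre"] += precision
        sums["F1"] += 2 * precision * recall / (precision + recall)
    return {name: 100 * value / k for name, value in sums.items()}


# Hypothetical balanced 3-class confusion matrix (100 samples per class).
cm = [[90, 6, 4],
      [5, 92, 3],
      [4, 4, 92]]
metrics = macro_metrics(cm)
```

With three balanced classes these definitions imply Acc = 1 − (2/3)(1 − Sen) and Spe = 1 − (1/2)(1 − Sen). For example, Sen = 91.52% gives Acc ≈ 94.35% and Spe = 95.76%, matching the DE Preprocessing row above.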

| Experiment | F0 | F1,4 | F2,4 | F3,4 | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 128 | 128 | 128 | 128 | 94.35 | 91.52 | 95.76 | 91.52 | 91.52 |
| 2 | 256 | 256 | 256 | 256 | 95.88 | 93.83 | 96.91 | 93.82 | 93.82 |
| 3 | 512 | 512 | 512 | 512 | 96.54 | 94.81 | 97.41 | 94.83 | 94.86 |
| 4 | 1024 | 1024 | 1024 | 1024 | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |
| 5 | 2048 | 2048 | 2048 | 2048 | 97.15 | 95.72 | 97.86 | 95.74 | 95.77 |

| Activation Function | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| ReLU | 97.09 | 95.63 | 97.81 | 95.63 | 95.63 |
| LeakyReLU | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |
| ELU | 96.98 | 95.47 | 97.73 | 95.46 | 95.46 |

| Block Type | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| Spectral-Temporal | 95.33 | 93.00 | 96.50 | 93.01 | 93.01 |
| Spectral-Temporal + Spatial | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |

| Number of Scales | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| Three Scales | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |
| Two Scales | 96.92 | 95.39 | 97.69 | 95.37 | 95.37 |
| Single Scale | 89.24 | 83.87 | 91.93 | 83.93 | 84.03 |

| Experiment | Model | Number of Learnable Parameters | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|---|---|
| 1 | EEG_DMNet | 4.3 M | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |
| 2 | EEG_DMNet + LSTM | 32.8 M | 96.60 | 94.90 | 97.45 | 94.91 | 94.94 |
| 3 | EEG_DMNet + BiLSTM | 30.2 M | 97.04 | 95.56 | 97.78 | 95.57 | 95.59 |
| 4 | EEG_DMNet + GRU | 22.2 M | 96.76 | 95.14 | 97.57 | 95.17 | 95.22 |

| Fold (only noon trials) | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| 1 | 97.32 | 95.98 | 97.99 | 95.00 | 96.03 |
| 2 | 97.16 | 95.74 | 97.87 | 95.72 | 95.75 |
| 3 | 97.79 | 96.69 | 98.35 | 96.70 | 96.72 |
| 4 | 97.01 | 95.51 | 97.75 | 95.48 | 95.50 |
| 5 | 98.11 | 97.16 | 98.58 | 97.16 | 97.16 |
| 6 | 97.79 | 96.69 | 98.35 | 96.69 | 96.69 |
| 7 | 97.08 | 95.63 | 97.81 | 95.61 | 95.62 |
| 8 | 97.79 | 96.69 | 98.35 | 96.68 | 96.68 |
| 9 | 96.61 | 94.92 | 97.46 | 94.91 | 94.91 |
| 10 | 97.95 | 96.93 | 98.46 | 96.94 | 96.97 |
| Mean | 97.46 ± 0.49 | 96.19 ± 0.74 | 98.10 ± 0.37 | 96.09 ± 0.83 | 96.20 ± 0.74 |
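The Mean row summarizes the ten folds as mean ± standard deviation. The sketch below recomputes the accuracy entry from the fold values in the table above. The helper name `summarize` is illustrative, and the use of the sample standard deviation (n − 1 denominator) is inferred because it reproduces the reported ± 0.49.

```python
from statistics import mean, stdev

# Per-fold accuracies (%) copied from the table above.
fold_acc = [97.32, 97.16, 97.79, 97.01, 98.11,
            97.79, 97.08, 97.79, 96.61, 97.95]


def summarize(values):
    """Return (mean, sample standard deviation), both rounded to 2 decimals."""
    return round(mean(values), 2), round(stdev(values), 2)


acc_mean, acc_std = summarize(fold_acc)  # (97.46, 0.49)
```

The population standard deviation (`statistics.pstdev`) gives 0.47 for the same values, so it does not match the reported figure.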

| Fold (only night trials) | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| 1 | 97.49 | 96.24 | 98.12 | 96.23 | 96.24 |
| 2 | 97.31 | 95.97 | 97.98 | 95.97 | 96.00 |
| 3 | 96.95 | 95.43 | 97.72 | 95.41 | 95.41 |
| 4 | 96.42 | 94.62 | 97.31 | 94.62 | 94.61 |
| 5 | 97.31 | 95.97 | 97.98 | 95.95 | 95.98 |
| 6 | 97.49 | 96.24 | 98.12 | 96.24 | 96.25 |
| 7 | 96.77 | 95.16 | 97.58 | 95.13 | 95.16 |
| 8 | 96.06 | 94.09 | 97.04 | 94.09 | 94.10 |
| 9 | 95.52 | 93.28 | 96.64 | 93.23 | 93.22 |
| 10 | 97.65 | 96.48 | 98.24 | 96.47 | 96.52 |
| Mean | 96.90 ± 0.70 | 95.35 ± 1.06 | 97.67 ± 0.53 | 95.33 ± 1.07 | 95.35 ± 1.08 |

| Fold (both noon and night trials) | Acc (%) | Sen (%) | Spe (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|
| 1 | 97.70 | 96.54 | 98.27 | 96.54 | 96.54 |
| 2 | 96.60 | 94.90 | 97.45 | 94.89 | 94.89 |
| 3 | 96.27 | 94.40 | 97.20 | 94.37 | 94.39 |
| 4 | 96.71 | 95.06 | 97.53 | 95.06 | 95.08 |
| 5 | 96.98 | 95.47 | 97.74 | 95.47 | 95.46 |
| 6 | 97.04 | 95.56 | 97.78 | 95.55 | 95.55 |
| 7 | 97.37 | 96.05 | 98.02 | 96.03 | 96.04 |
| 8 | 96.82 | 95.23 | 97.61 | 95.22 | 95.22 |
| 9 | 96.98 | 95.47 | 97.74 | 95.47 | 95.47 |
| 10 | 97.81 | 96.71 | 98.35 | 96.70 | 96.70 |
| Mean | 97.03 ± 0.48 | 95.54 ± 0.72 | 97.77 ± 0.36 | 95.53 ± 0.72 | 95.53 ± 0.72 |

| Model | No. of Subjects | No. of Channels | Trial Length | Acc (%) | Sen (%) | F1 Score (%) | Pre (%) |
|---|---|---|---|---|---|---|---|
| 1D-LBP by Orrù et al. [19], 2020 | 23 | 17 | 8 s | 77.89 | - | - | - |
| VIGNet by Ko et al. [38], 2020 | 23 | 17 | 8 s | 89.72 | 84.58 | 84.60 | 84.67 |
| MSNN by Ko et al. [4], 2021 | 23 | 17 | 8 s | 81.62 | 72.43 | 72.11 | 72.68 |
| IFDM by Hwang et al. [34], 2021 | 8 | 17 | 8 s | 92.09 | - | - | - |
| Multi-channel LSTM + ESDA by Tang et al. [31], 2021 | 23 | 17 | 8 s | 95.70 | - | - | - |
| LPCCs + R-SCM by Chen et al. [21], 2022 | 23 | 17 | 8 s | 87.10 | - | 86.75 | - |
| MATCN-GT by Jia et al. [28], 2023 | 23 | 17 | 8 s | 93.67 | - | - | - |
| EEG_DMNet, only noon trials (ours) | 23 | 4 | 8 s | 97.46 | 96.19 | 96.09 | 96.20 |
| EEG_DMNet, only night trials (ours) | 23 | 4 | 8 s | 96.90 | 95.35 | 95.33 | 95.35 |
| EEG_DMNet, both trials (ours) | 23 | 4 | 8 s | 97.03 | 95.54 | 95.53 | 95.53 |

| Model | p-Value (Accuracy) | p-Value (Sensitivity) | p-Value (Specificity) | p-Value (F1 Score) | p-Value (Precision) |
|---|---|---|---|---|---|
| VIGNet and EEG_DMNet (only noon trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |
| VIGNet and EEG_DMNet (only night trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |
| VIGNet and EEG_DMNet (both trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |
| MSNN and EEG_DMNet (only noon trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |
| MSNN and EEG_DMNet (only night trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |
| MSNN and EEG_DMNet (both trials) | h = 1 | h = 1 | h = 1 | h = 1 | h = 1 |

| Model | Number of Layers | Number of Learnable Parameters | Number of FLOPs |
|---|---|---|---|
| VIGNet | 10 | 5 K | 8 K |
| MSNN | 38 | 97.4 K | 235 M |
| EEG_DMNet (ours) | 38 | 4.3 M | 92 M |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Obaidan, H.B.; Hussain, M.; AlMajed, R. EEG_DMNet: A Deep Multi-Scale Convolutional Neural Network for Electroencephalography-Based Driver Drowsiness Detection. Electronics 2024, 13, 2084. https://doi.org/10.3390/electronics13112084
