Abstract

Ambient Intelligence (AMI) represents a significant advancement in information technology that is perceptive, adaptable, and finely attuned to human needs. It holds immense promise across diverse domains, with particular relevance to healthcare. The integration of Artificial Intelligence (AI) with the Internet of Medical Things (IoMT) to create an AMI environment in medical contexts further enriches this concept within healthcare. This survey provides invaluable insights for both researchers and practitioners in the healthcare sector by reviewing the incorporation of AMI techniques in the IoMT. This analysis encompasses essential infrastructure, including smart environments and spectrum for both wearable and non-wearable medical devices to realize the AMI vision in healthcare settings. Furthermore, this survey provides a comprehensive overview of cutting-edge AI methodologies employed in crafting IoMT systems tailored for healthcare applications and sheds light on existing research issues, with the aim of guiding and inspiring further advancements in this dynamic field.

Keywords:

internet of medical things; IoMT; ambient intelligence; AMI; artificial intelligence; AI; healthcare

1. Introduction

In recent years, healthcare management systems have encountered increasing strain. While healthcare services predominantly center around hospitals, chronic diseases remain a formidable challenge worldwide. Cardiovascular diseases notably rank as a leading cause of death in regions such as the EU [1]. This strain on healthcare has become particularly apparent during pandemics such as COVID-19 and swine flu, with healthcare centers sometimes stretched to their limits. Additionally, patients often require ongoing monitoring post-surgery until their health stabilizes; however, current practices often entail frequent and burdensome hospital visits, which can prove costly, inefficient, and inconvenient for patients in need of routine checkups. Despite technological advances, many industrialized nations are grappling with significant challenges regarding the quality and affordability of healthcare services. Consequently, the sustainability of the healthcare sector is increasingly questioned, necessitating the utilization of makeshift facilities and telehealth technologies to ensure the continued viability of healthcare systems.

Amid these healthcare challenges, the exploration of information and communication technology (ICT) has emerged as a promising avenue for the implementation of autonomous and proactive healthcare services. From consumer-driven healthcare services through web-based platforms to electronic health records, ICT solutions have significantly enhanced the accessibility and efficiency of healthcare delivery. Notably, smartphone applications such as Teladoc [2] offer online assessment services, connecting patients with doctors and showcasing remarkable performance; however, these solutions often provide only brief glimpses of physiological conditions, and lack continuous monitoring over extended periods.

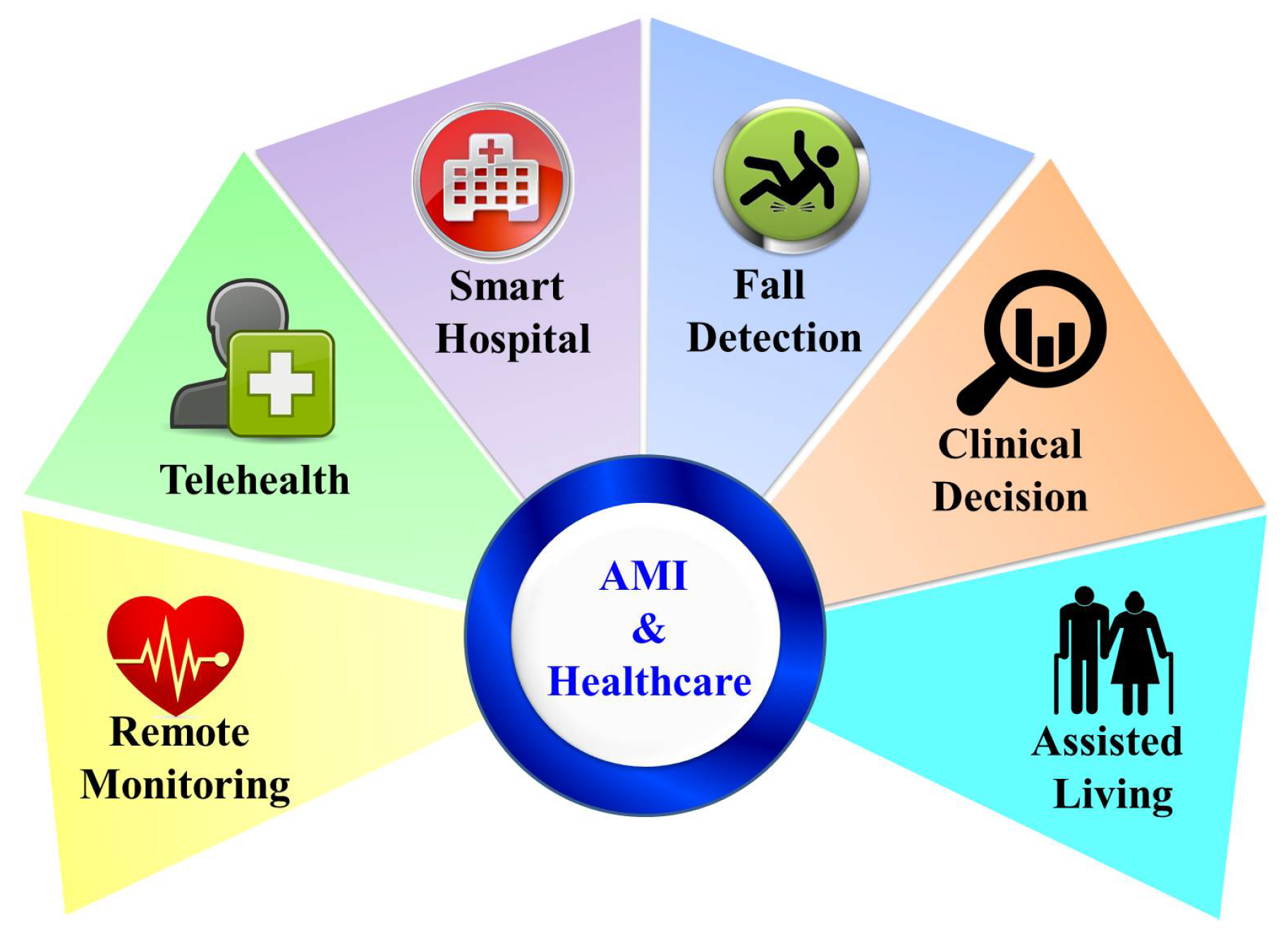

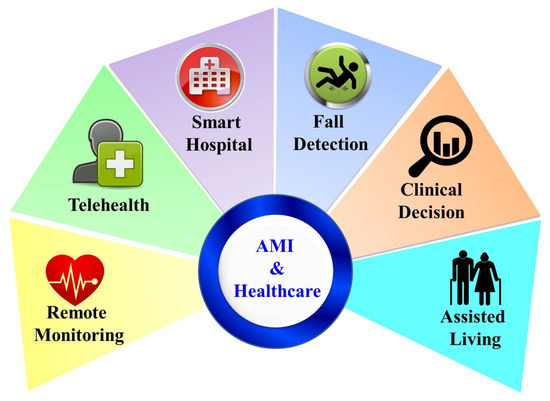

The limitations of contemporary healthcare systems have spurred interest in alternative approaches aimed at promoting effective personalized care. Among these approaches, Ambient Intelligence (AMI) and the Internet of Medical Things (IoMT) represent a paradigm shift towards intelligent healthcare. AMI, renowned for its capacity to cultivate adaptable and contextually aware environments, holds vast potential for revolutionizing healthcare facilities into intelligent ecosystems that cater to the unique needs of individual patients [3]. Simultaneously, IoMT technologies can facilitate the utilization of interconnected medical devices and sensors, providing unparalleled opportunities for real-time monitoring, data collection, and remote patient management [4]. The integration of Artificial Intelligence (AI) algorithms in AMI and IoMT systems enhances their capabilities by enabling advanced data analytics, predictive modeling, and decision support functionalities. The benefits of such integration can be briefly summarized as follows:

- Automated home monitoring services

- Reduced hospital and hospice occupancy

- Reduced healthcare cost

- Personalized healthcare services

- Predictive analysis for early disease detection

Collectively, these AI-powered technologies can usher in a new era of intelligent healthcare that prioritizes patient-centered care while optimizing operational efficiency. In response to these advancements, this paper endeavors to delve into the integration of AMI and AI in the healthcare environment through the IoMT. The focus is on exploring how the synergy between adaptive AMI environments and IoMT technologies, augmented by the analytical capability of AI, can effectively address the prevailing challenges encountered by healthcare systems with the goal of elevating the quality of patient care and ultimately enhancing healthcare outcomes. Through an exhaustive examination of the pertinent literature encompassing various components of AMI in communication, healthcare, and cutting-edge technological advancements in AI, this study aims to shed light on the myriad benefits, potential hurdles, and future trajectories associated with the utilization of AMI and AI in healthcare via the IoMT.

1.1. Recent Surveys on AMI and Diseases

Table 1 outlines previous surveys conducted on the applications of AMI in healthcare, highlighting their main objectives. The authors of [5] addressed the different sensors that can capture varied sensory information, including depth sensors, thermal sensors, radio sensors, and acoustic sensors, all of which can be deployed in hospitals or everyday spaces for monitoring human activities. However, they did not cover methods for identifying abnormal behavior, challenges in dataset acquisition, or the creation of artificial datasets when needed. The authors of [6], on the other hand, emphasized case studies through abstract experimentation without highlighting the technical information associated with data processing or model deployment. The impact of the Artificial Intelligence of Things (AIoT) on the healthcare domain was evaluated in [7]. The authors performed a detailed exploration of various facets of IoT advancements tailored to the medical field; however, their approach did not address specific diseases, and did not emphasize how AMI could handle emergency cases. Moreover, the authors of [8] provided a comprehensive review of the techniques used to profile activities of daily living (ADL) and detect abnormal behavior for healthcare purposes. They defined abnormal behaviors and provided examples of abnormal behaviors and activities in the case of elderly people. In addition, they described different ambient sensor types and deep learning (DL)-based methods for abnormal behavior detection.

Table 1. Surveys on AMI and healthcare.

This paper provides a comprehensive exploration of various sensors along with their usage, benefits and challenges. The contemporary literature addressing the accuracy limitations of these sensors is thoroughly reviewed. Additionally, it includes a brief overview of different communication protocols for the IoMT. A detailed explanation of various datasets is provided, along with an investigation of contemporary research on the fundamental AI principles of classification, segmentation, and detection within the context of AMI applications in healthcare. The primary aim is to offer a comprehensive overview that can facilitate broader integration between AI, AMI, and the IoMT.

1.2. Purpose of Study

The IoMT, together with its various components, encompasses a vast topic. This comprehensive work aims to serve as a one-stop solution for researchers. The purpose of this work can be summarized as follows:

- A comprehensive explanation of the AMI environment is provided, highlighting its key components, functionalities, and capabilities in healthcare settings.

- Various IoMT sensors, including body-centric and ambient sensors, are explored while discussing their invasive or non-invasive nature, usage, benefits, and applications in facilitating intelligent healthcare solutions.

- Various types of actuators are discussed based on their relationship to the body and environment, providing insights into their functions, challenges, and examples in healthcare contexts.

- The communication protocols and technologies used in IoMT systems are delved into, examining their role in facilitating seamless data exchange and interaction among sensors, actuators, and other components in healthcare environments.

- The integration of the IoMT with AI algorithms is analyzed, specifically focusing on image and video data as well as sensor data processing techniques.

- A comprehensive literature survey is presented in order to explore the diverse applications of AMI in healthcare, covering areas such as remote patient monitoring, personalized healthcare delivery, smart medical devices, and telemedicine.

- The challenges and limitations associated with the implementation of AMI in healthcare are analyzed, including issues related to data privacy, security, interoperability, scalability, and user acceptance.

The rest of this paper is organized as follows. Section 2 describes the fundamentals of AMI, including the key characteristics and environments. Section 3 provides brief information on the roles of sensors and actuators in the IoMT. Section 4 discusses the significance of communications in the IoMT, along with descriptions of different protocols and their limitations. Section 5 focuses on the utilization of AI in the IoMT, covering different datasets, preprocessing techniques, annotation methods, model training, and outcome prediction. Section 6 explores the application of AMI in healthcare through two specific case studies. Section 7 discusses the open challenges. Finally, concluding remarks are provided in Section 8.

2. Fundamentals of AMI

AMI refers to a computing paradigm in which the environment supports the occupant by adapting to their needs rather than inhibiting them. These intelligent environments can perceive, process, and respond to human interactions seamlessly and unobtrusively. Unlike traditional computing systems, which often require explicit inputs from users, AMI systems operate in the background using sensors, actuators, and intelligent algorithms to anticipate and adapt to users’ needs and preferences [10]. The key characteristics of AMI include:

- Context-aware computing: Aims to gain a deeper understanding of the significant contextual and situational information within ambient systems.

- Embedded systems: AMI applications primarily consist of embedded systems, intelligent sensing technologies, and actuators that can be deployed and operated autonomously.

- Intelligence: Different AI algorithms enhance the analytical capabilities of AMI systems to perceive, understand, and act.

- Personalized systems: Can be personalized and tailored to the needs and satisfaction of each user.

- Anticipatory: Can anticipate and fulfill the needs of an individual without requiring conscious intervention from the user.

- Ubiquitous: Enables the integration of invisible sensors into real-time environments, facilitated by the miniaturization of embedded components for enhanced mobility.

- Transparency: Seamlessly integrates into daily lives, subtly blending into the background without disruption.

- Compliance and flexibility: AMI systems are highly adaptable and flexible, and are capable of effortlessly adjusting to the diverse needs of individuals.

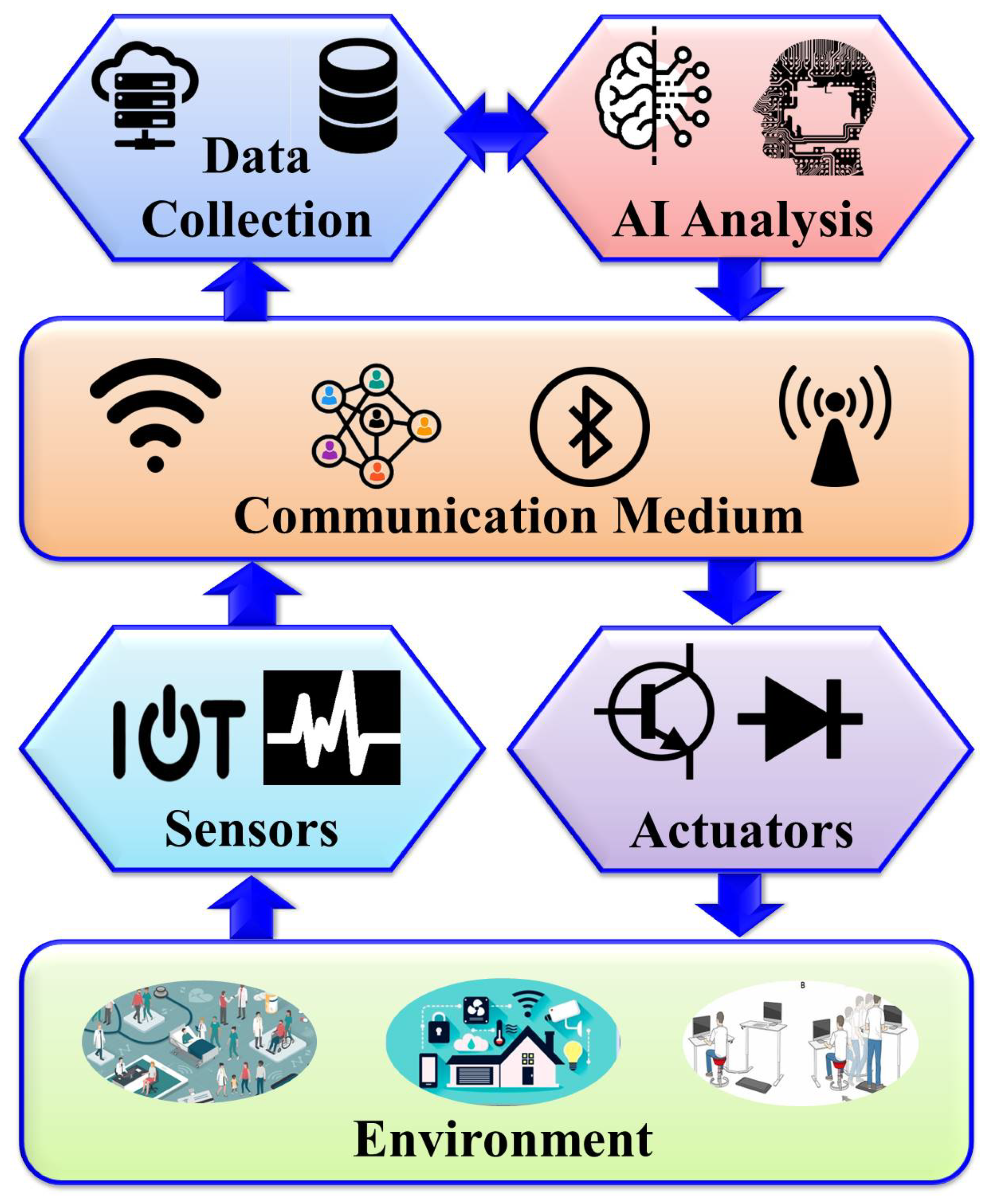

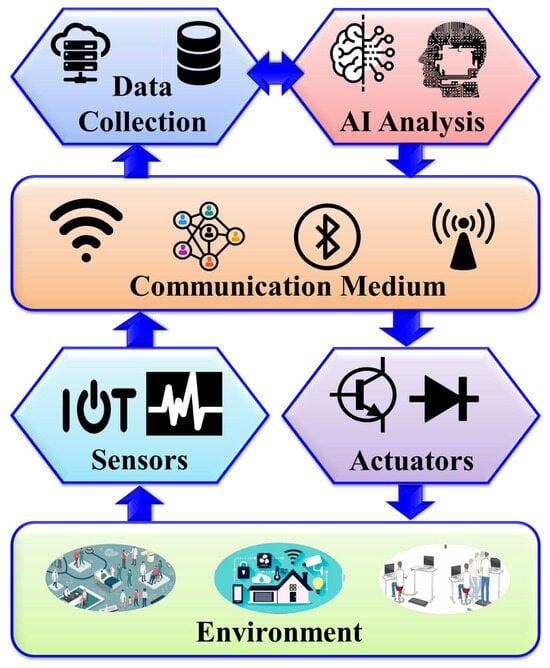

Within the realm of AMI, the creation of intelligent environments relies on synergy between various components working harmoniously to enhance user experiences and streamline everyday tasks. Figure 1 depicts the core components of AMI systems in healthcare, including the environment, intelligent sensing technologies, actuators, communication media, and advanced AI algorithms. These components form the foundation of AMI environments, enabling them to perceive, process, and respond to user interactions seamlessly and intuitively.

Figure 1. General architecture of AMI assisted living.

The AMI environment comprises a dynamic ecosystem embedded with various sensors, actuators, and a communication system that collaborates with AI algorithms to create intelligent and context-aware spaces. These spaces can respond to users’ needs and offer interactive functionalities without requiring explicit input or commands from them. Such environments can be established by integrating AMI into homes, workplaces, healthcare facilities, and public spaces to enhance user experiences, improve operational efficiency, and establish what is known as “Smart Living”. Consequently, individuals in AMI assisted living (AMIL) are surrounded by intelligent gadgets that can sense their conditions while predicting and potentially adapting to support them in their daily activities. In this paper, we primarily focus on those environments relevant to human healthcare.

In healthcare, AMIL is utilized in various intelligent environments to enhance patient care, optimize clinical workflows, and improve the healthcare system as a whole. The different AMIL environments in healthcare are described below:

- Hospital rooms: AMI is integrated into hospital rooms to establish intelligent and patient-centric environments. For example, ref. [11] demonstrated the utilization of AMI to track and monitor patients in intensive care units. Additionally, ref. [12] proposed the use of AMI to predict patients’ length of stay, thereby aiding in the prevention of emergency department overcrowding. Furthermore, AMI is able to monitor patient health metrics and adjust environmental conditions such as lighting and temperature within patient wards in order to ensure optimal comfort.

- Clinics and outpatient facilities: AMI deployed in clinics or outpatient facilities can streamline healthcare by minimizing clinical wait times [13]. Smart clinic environments utilize IoT sensors and AI algorithms to manage patient appointments, optimize waiting times, and personalize care pathways based on individual patient needs and preferences.

- Smart home: Smart home monitoring plays a pivotal role in determining the efficiency rate. For instance, daily activity monitoring could benefit healthy adults as well as children, individuals with disabilities, and the elderly. Moreover, these environments are effective when dealing with situations such as the COVID-19 pandemic [14]. Constant AMI-based monitoring systems can assist in daily living activities, monitor health conditions, and provide personalized care and support services.

- Rehabilitation centers: Smart rehabilitation could combine physical and cognitive activities to make rehabilitation more engaging while remaining effective in patient recovery. Moreover, AMI could aid in the treatment of individuals with Acquired Brain Injury (ABI) [15] by employing specialized devices to regulate patients’ movements and certain physiological responses, such as changes in heart rate, throughout the rehabilitation process.

- Mobile healthcare units: With the advancement of the Internet of Vehicles, it has become possible to integrate AMI into mobile healthcare units such as ambulances. This allows for the delivery of medical services and critical care interventions while on the move. Smart ambulances [16] can make real-time decisions based on traffic conditions or hospital loading conditions, helping to minimize travel time and select the best hospital for a specific medical emergency.

3. Sensors and Actuators in the IoMT

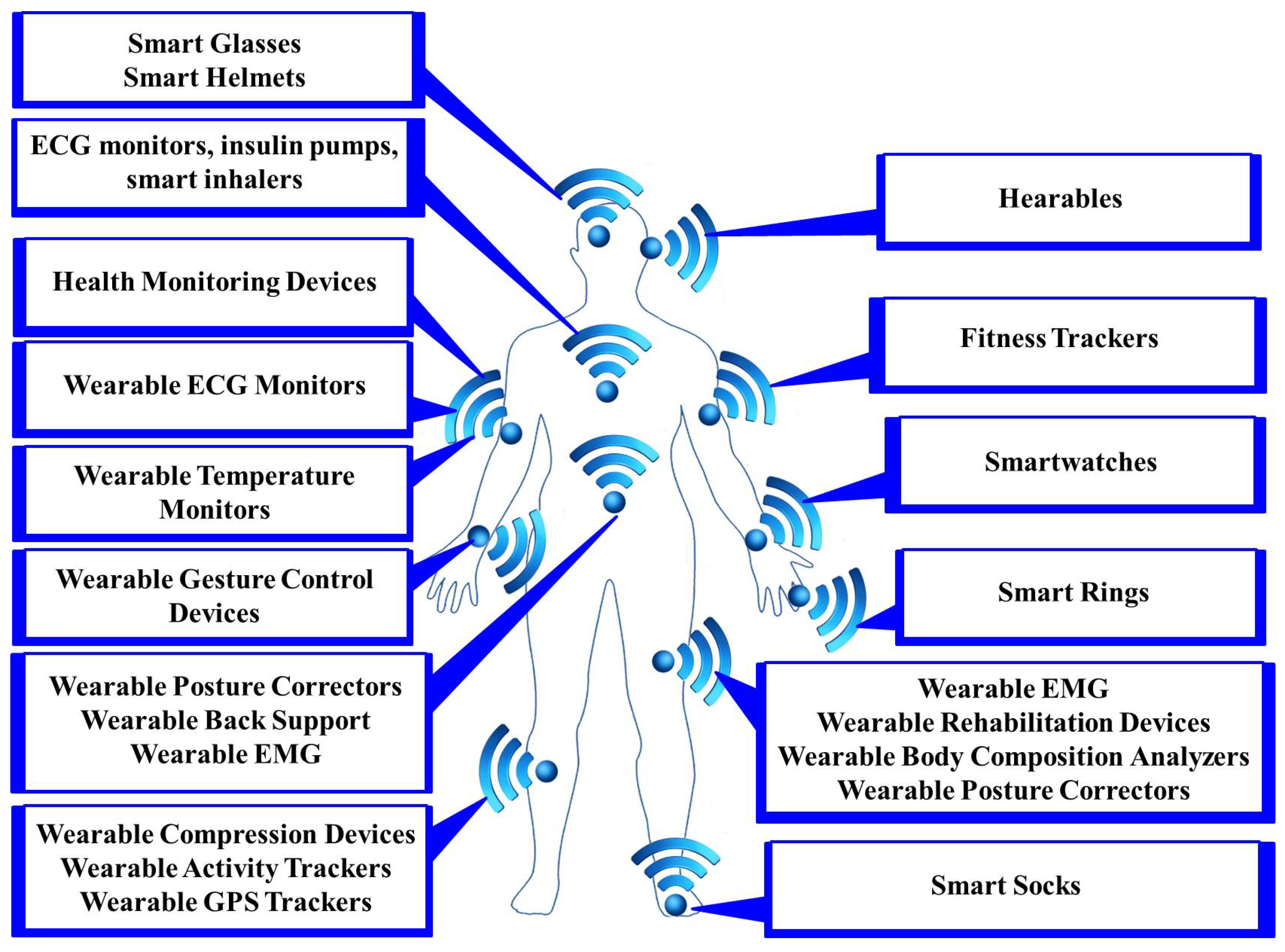

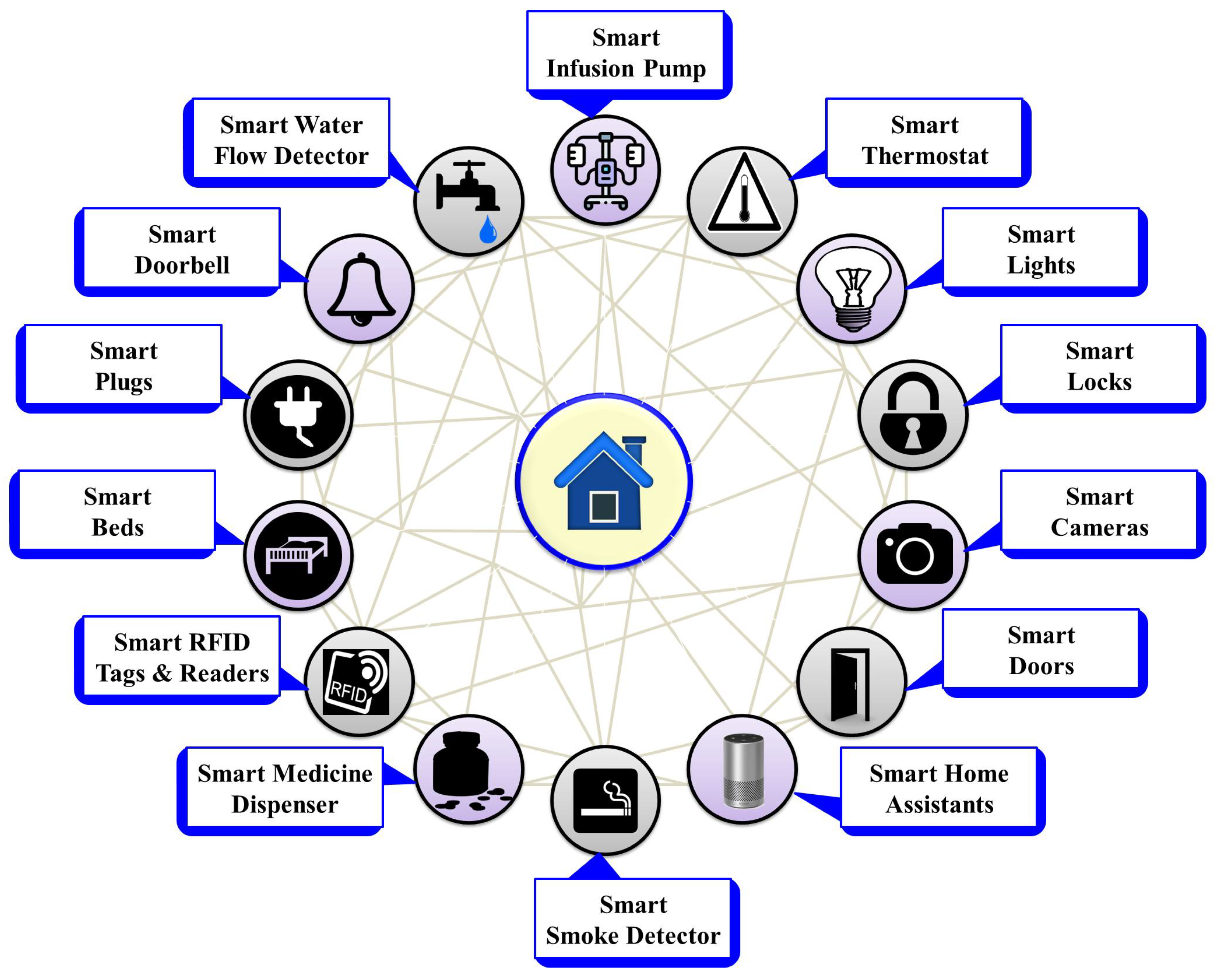

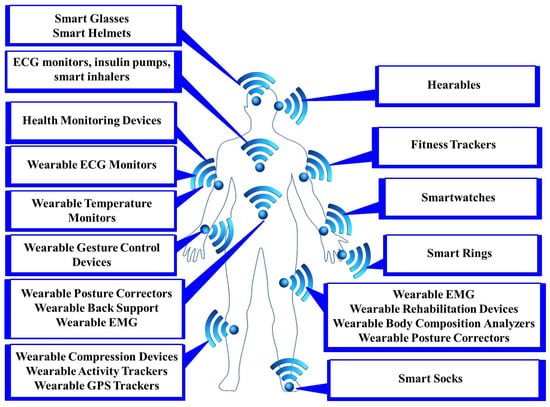

Sensors are the foundation of any IoMT environment. They collect data from the physical IoMT environment and transfer them to communication gateways for storage and analysis. Typically, these sensors can be broadly classified into two categories: body sensors (as shown in Figure 2) and ambient sensors (as shown in Figure 3) [17]. Body sensors collect physiological information such as heart rate, body temperature, blood pressure, blood glucose level, and oxygen saturation. By leveraging advanced computational methods, the collected data can be analyzed and interpreted to extract meaningful insights and identify patterns indicative of specific activities or health conditions. Ambient sensors, on the other hand, collect physical information such as gravity, room or outside temperature, humidity, and motion. Detailed information on different body sensors and ambient sensors, including sensor type, description, data type, benefits, challenges, and integrations, is provided in Table 2 and Table 3.

Figure 2. Different body-based IoMT devices.

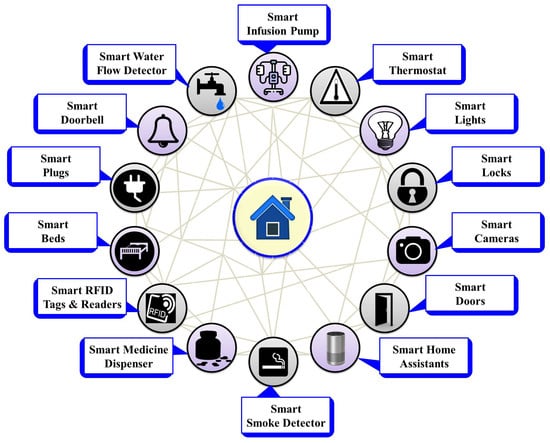

Figure 3. Various ambient IoMT devices.

Table 2. List of body sensors used in the IoMT.

Table 3. List of ambient sensors which can be used in the IoMT.

3.1. Role of Body Sensors in the IoMT

With the assistance of various algorithms, body sensors can be used to classify, detect, or recognize different activities or associated health problems. The body sensors are described as follows:

3.1.1. Heart Rate Monitors

Heart rate monitors [17] can measure the heart’s activity by detecting the electrical signals generated during each heartbeat. These sensors are commonly integrated into wearable devices such as smart watches and fitness trackers. They can be used to monitor heart rate variability, which provides insights into the autonomic nervous system’s function and can be indicative of cardiovascular health. Heart rate monitors are valuable tools for detecting abnormalities such as arrhythmias, which can indicate underlying heart conditions such as atrial fibrillation or heart failure that signal the need for urgent medical attention. Additionally, wrist-worn heart rate monitors can enhance the rehabilitation efforts of stroke patients by monitoring exercise intensity during physical therapy sessions. The Fitbit Charge 5 Fitness and Heart Rate Tracker, Wahoo Tickr X Heart Rate Monitor Chest Strap, Garmin Forerunner 945 GPS Running Smartwatch with Heart Rate Monitor, and Apple smart watches are among the commercially available devices for heart rate monitoring.
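As an illustration of how heart rate variability can be quantified, the following minimal sketch computes two widely used time-domain measures, SDNN and RMSSD, from a list of beat-to-beat (RR) intervals. The function name `hrv_metrics` and the sample intervals are hypothetical and are not taken from any of the cited devices or studies.

```python
import math

def hrv_metrics(rr_ms):
    """Return (mean heart rate in bpm, SDNN in ms, RMSSD in ms).

    rr_ms: successive RR intervals in milliseconds (illustrative input).
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    mean_rr = sum(rr_ms) / len(rr_ms)
    # Mean heart rate derived from the mean RR interval.
    mean_hr = 60000.0 / mean_rr
    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(sum((x - mean_rr) ** 2 for x in rr_ms) / (len(rr_ms) - 1))
    # RMSSD: root mean square of successive RR differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mean_hr, sdnn, rmssd

# Hypothetical RR intervals, for demonstration only.
hr, sdnn, rmssd = hrv_metrics([800, 810, 790, 800])
print(round(hr, 1), round(sdnn, 2), round(rmssd, 2))
```

In practice, RR intervals would first need artifact and ectopic-beat removal before such measures are interpreted.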

3.1.2. Blood Pressure Monitors

Blood pressure monitors [18] are sensors used to measure the force of blood against the walls of arteries as the heart pumps blood throughout the body. These sensors come in various forms, including wearable devices and home blood pressure cuffs. Monitoring blood pressure levels is crucial for managing hypertension, a significant risk factor for cardiovascular diseases such as stroke, heart attack, and kidney disease. Wearable sensors that continuously monitor blood pressure levels can provide valuable data for optimizing hypertension treatment and reducing the risk of associated complications. Additionally, a study has shown that a proportion of patients with ischemic stroke (IS) have a systolic blood pressure >139 mmHg, while a smaller proportion have a systolic blood pressure >185 mmHg [19]. Commercial devices include the Omron HeartGuide Wearable Blood Pressure Monitor, QardioArm Wireless Blood Pressure Monitor, iHealth Clear Wireless Blood Pressure Monitor, and several smartwatches.

3.1.3. Electrocardiogram (ECG) Sensors

ECG sensors [20] monitor and diagnose heart conditions by measuring the electrical activity of the heart. These sensors are widely used in clinical settings, ambulatory monitoring, and home healthcare. One prominent example of an ECG sensor is the AliveCor KardiaMobile EKG Monitor. This compact and portable device can be connected to a smartphone to record single-lead ECGs any time and anywhere. It detects arrhythmias, monitors heart health, and facilitates remote cardiac monitoring. The device has a user-friendly interface and seamless mobile integration, making it a convenient solution for proactive heart health monitoring.

3.1.4. Blood Glucose Monitors

Blood glucose monitoring is essential for individuals managing diabetes. Sensors can measure glucose levels in the blood, providing real-time data that can help to prevent dangerous fluctuations in blood sugar levels [18]. Continuous glucose monitoring (CGM) devices are vital for reducing the risk of diabetic complications such as hyperglycemia and hypoglycemia. These monitors empower individuals to make informed decisions about their diet, exercise, and insulin dosage, in turn improving their overall diabetes management and quality of life. Moreover, elevated glucose levels have been correlated with larger final infarct volume and poorer clinical outcomes. This association has been reported in various studies, including in patients undergoing acute reperfusion therapies such as intravenous thrombolysis and endovascular therapy for ischemic stroke.

3.1.5. Pulse Oximeter

Pulse oximeters [17] are used for monitoring oxygen saturation levels in the blood. They are particularly useful in assessing respiratory conditions such as chronic obstructive pulmonary disease (COPD), asthma, and sleep apnea. Moreover, pulse oximetry is commonly used during anesthesia and critical care to measure oxygen levels and ensure adequate oxygenation of tissues. By providing continuous readings of oxygen saturation, pulse oximeters aid healthcare professionals in promptly identifying and addressing respiratory abnormalities. These devices include the Masimo MightySat Fingertip Pulse Oximeter, Nonin Onyx Vantage 9590 Finger Pulse Oximeter, and ChoiceMMed Fingertip Pulse Oximeter, among others.

3.1.6. Temperature Sensor

Temperature sensors [17] play a crucial role in detecting changes in body temperature, which can signify the presence of fever, infection, or inflammatory conditions. The ability to continuously monitor temperature using wearable devices and medical-grade thermometers is critical for early detection and prompt intervention, which is key to managing infectious diseases and preventing complications. Furthermore, temperature sensors have a wide range of applications in environmental monitoring, food safety, and industrial processes where precise temperature control is essential. Examples include the Withings Thermo Smart Temporal Thermometer, iHealth No-Touch Forehead Thermometer, Kinsa Smart Ear Thermometer, etc.

3.1.7. Accelerometer

Accelerometers [21] are sensors that can measure changes in movement and orientation, including acceleration forces. These sensors have a wide range of applications in healthcare, where they are used for monitoring physical activity levels and detecting abnormal movement patterns. They are valuable in fall detection systems, especially in the elder care context, where sudden changes in acceleration can signal a fall and allow for timely assistance. Moreover, accelerometers play a crucial role in assessing motor function and gait abnormalities in individuals with neurological disorders. Examples include the Fitbit Charge 4 Fitness Tracker, Garmin Vivosmart 4 Activity Tracker, Xiaomi Mi Band 6 Fitness Tracker, etc.
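The idea that a sudden change in acceleration can signal a fall can be sketched with a simple two-phase rule: a brief free-fall phase (magnitude well below 1 g) followed shortly by an impact spike. The thresholds `free_fall_g` and `impact_g`, the `window` length, and the sample data below are illustrative assumptions rather than clinically validated values.

```python
import math

def detect_fall(samples, free_fall_g=0.5, impact_g=2.5, window=10):
    """Return the index of the impact sample if a fall pattern is found, else None.

    samples: iterable of (ax, ay, az) accelerations in units of g.
    A fall is assumed to be a free-fall sample followed by an impact
    within the next `window` samples (hypothetical thresholds).
    """
    mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in samples]
    for i, m in enumerate(mags):
        if m < free_fall_g:
            # Look ahead for an impact spike shortly after the free-fall phase.
            for j in range(i + 1, min(i + 1 + window, len(mags))):
                if mags[j] > impact_g:
                    return j
    return None

# Hypothetical trace: rest, short free fall, then an impact.
trace = [(0, 0, 1)] * 5 + [(0, 0, 0.2), (0, 0, 1), (1.5, 1.5, 2.0)] + [(0, 0, 1)] * 3
print(detect_fall(trace))
```

Threshold rules of this kind are easy to deploy on a wearable, but they tend to produce false alarms during vigorous activity, which is one reason learned classifiers are often layered on top.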

3.1.8. Gyroscope

Gyroscopes play a critical role in measuring orientation and angular velocity. They work in tandem with accelerometers to provide accurate motion tracking and spatial orientation. Wearable devices and smartphones make extensive use of these sensors, allowing features such as screen rotation and gesture recognition. In healthcare, gyroscopes help to diagnose balance disorders and monitor movement disorders such as Parkinson’s disease. Changes in orientation and movement patterns can be indicative of disease progression. Examples include Apple Watches, Samsung Galaxy Watches, and the Huawei Watch GT 3.

3.1.9. Electromyography (EMG)

EMG sensors [22] detect electrical activity in skeletal muscles, aiding in diagnosing and monitoring neuromuscular disorders such as muscular dystrophy and carpal tunnel syndrome. They are vital in rehabilitation, where they are used to assess muscle function and guide therapy. Commercial devices such as the MyoWare Muscle Sensor and Delsys Trigno Wireless EMG System are commonly used in these applications.

3.1.10. Electroencephalogram (EEG)

EEG sensors [22] are utilized to capture and analyze the electrical activity of the brain. These sensors come in various forms, from portable consumer-grade headsets to sophisticated clinical systems with multiple electrodes, enabling the monitoring and assessment of brain function in diverse applications. From diagnosing neurological disorders such as epilepsy and sleep disorders to advancing brain–computer interface technologies for assistive communication and rehabilitation, EEG sensors offer invaluable insights into the intricacies of brain activity, fostering innovation and progress in both research and healthcare realms. Examples include the NeuroSky MindWave Mobile EEG Headset, among others.

3.1.11. Respiratory Rate Monitor

Respiratory rate monitors [17] track the number of breaths taken per minute, offering crucial insights into respiratory health. In particular, they find applications in monitoring respiratory conditions such as asthma, chronic bronchitis, and pneumonia. These monitors detect changes in respiratory rate that can indicate symptom exacerbation or complications. Continuous monitoring enables healthcare professionals to evaluate respiratory status, make informed treatment decisions, and enhance patient care. Common examples of these devices include the ResMed AirSense 10 AutoSet CPAP Machine and the Philips Respironics DreamStation CPAP Machine.

3.1.12. Blood Gas Sensors

Blood gas sensors are devices designed to measure the levels of gases such as oxygen (O2) and carbon dioxide (CO2) in the blood, as well as the blood pH. These sensors play a crucial role in assessing the respiratory and metabolic status of patients in clinical settings such as hospitals, intensive care units (ICUs), and emergency departments. By providing real-time measurements of blood gas levels, these sensors help clinicians to diagnose and monitor conditions such as respiratory failure, metabolic acidosis or alkalosis, and shock. Examples include the Radiometer ABL90 FLEX PLUS Blood Gas Analyzer, among others.

3.1.13. Intraocular Pressure Sensors

Intraocular pressure monitors are designed to measure the pressure inside the eye. Elevated intraocular pressure is a significant risk factor for glaucoma, a group of eye conditions that can lead to optic nerve damage and vision loss if left untreated. These sensors are crucial for diagnosing and monitoring glaucoma as well as for assessing the effectiveness of treatment interventions. One example of an intraocular pressure sensor is the Sensimed Triggerfish.

Figure 2 illustrates popular IoMT wearable devices categorized by the region of the body where they are used. In the head region, devices such as hearables, smart glasses, and smart helmets can be worn. Hearables or smart earbuds provide functionalities such as real-time translation, biometric monitoring, and voice-activated controls. Smart glasses offer augmented reality (AR) experiences, navigation assistance, and hands-free notifications, while smart helmets are equipped with features such as collision detection, health monitoring, and communication systems for enhanced safety.

For the upper limbs, wearables include a variety of health monitoring devices, such as wearable ECG monitors that track heart activity and detect anomalies. Temperature monitors continuously measure body temperature, while gesture control devices allow users to control other devices through hand movements. Fitness trackers monitor physical activity, sleep patterns, and other health metrics, while smart watches offer multi-functional capabilities including notifications, health tracking, and mobile payments. Smart rings, though smaller in size, provide similar functionalities to smart watches, often focusing on health metrics and contactless payments.

In the lower limbs, specifically the legs, wearable devices include EMG monitors, which measure muscle activity to aid physical therapy and sports training. Rehabilitation devices assist in recovery from injuries by providing data and support for therapeutic exercises. Body composition analyzers measure metrics such as body fat and muscle mass, helping users to maintain their fitness goals, while posture correctors alert users to improper posture, promoting better spinal health. Compression devices improve circulation and reduce swelling, which is particularly useful for individuals with conditions such as varicose veins. Activity trackers and GPS trackers monitor movements and location, providing valuable data for fitness and safety. Additionally, smart socks enhance activity tracking and comfort, and are often equipped with sensors to monitor gait and balance.

Detailed information about body sensors, including sensor type, description, data type, benefits, challenges, and integrations, is provided in Table 2.

3.2. Accuracy Improvement in Body Sensors

Body sensors are indispensable for monitoring individual health and providing crucial feedback for overall wellbeing. However, achieving accurate measurements through wearable sensors is often hampered by deviations in accuracy during the automatic collection of health vitals stemming from the various factors outlined in the challenges section of Table 2. The application of AI offers powerful solutions to address these challenges, enhancing the accuracy of body sensors through a range of advanced techniques tailored to each specific sensor type.

However, prior to the application of AI algorithms, a common requirement is data preprocessing to address the noise prevalent in sensor data, which is a challenge for all sensors. For instance, in heart rate monitors, the impact of noise can be mitigated using noise removal or outlier detection algorithms such as first-order low-pass filters and wavelet transformation algorithms [23] or the spectral filter algorithm for motion artifact and pulse reconstruction (SpaMA) [24]. Two-stage algorithms can also be employed for noise artifact elimination and spectral analysis [24]. Similarly, readings from blood sugar monitoring devices based on photoplethysmography (PPG) are influenced by the testing site and environmental conditions. These issues can be mitigated through filtering techniques such as the Butterworth filter, applied with a passband of 0.5–8 Hz [25]. In continuous blood glucose monitoring (CBGM), noise removal approaches include adaptive iterative filtering and fast discrete lifting-based wavelet transform (LWT) [26] as well as multi-filtering augmentation [27]. Pulse oximetry sensor data are often processed with adaptive filtering techniques [28], while accelerometer and gyroscope sensors benefit from Butterworth high-pass filtering [29], complementary filters, and Kalman filters [30] for error assessment and enhanced accuracy. Artifacts can be effectively removed from EEG signals using graph signal processing [31]. Motion artifacts can be reduced using analytical software tools [32], enhanced peak and valley detection algorithms [33], or Fourier transformation [34].
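Two of the simpler preprocessing steps named above can be sketched in a few lines. The first function is a generic first-order low-pass filter, and the second is a generic complementary filter that fuses a gyroscope rate with an accelerometer-derived angle. Both are textbook formulations and are not the specific implementations of [23] or [30]; the function names, the cutoff frequency, and the fusion weight of 0.98 are assumptions chosen for illustration.

```python
import math

def low_pass(signal, cutoff_hz, sample_rate_hz):
    """First-order low-pass filter (discrete RC filter) over a list of samples."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor in (0, 1)
    out = []
    y = signal[0]  # initialise with the first sample to avoid a start-up jump
    for x in signal:
        y = y + alpha * (x - y)
        out.append(y)
    return out

def complementary_filter(gyro_rates, accel_angles, dt, weight=0.98):
    """Fuse gyroscope rates (deg/s) with accelerometer angles (deg).

    The integrated gyroscope term tracks fast motion, while the
    accelerometer term slowly corrects gyroscope drift.
    """
    angle = accel_angles[0]
    fused = []
    for rate, acc_angle in zip(gyro_rates, accel_angles):
        angle = weight * (angle + rate * dt) + (1.0 - weight) * acc_angle
        fused.append(angle)
    return fused

# Hypothetical noisy heart-rate-like trace with a single spike.
smoothed = low_pass([70, 70, 120, 70, 70], cutoff_hz=2.0, sample_rate_hz=50.0)
print([round(v, 1) for v in smoothed])
```

A higher `weight` trusts the gyroscope more, which suits short, fast movements, whereas a lower value leans on the accelerometer and suppresses drift at the cost of more noise.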

Among the most widely used ML algorithms for overcoming accuracy limitations in body sensors are Bayesian models such as Gaussian naive Bayes (NB), probabilistic approaches, and nonparametric methods such as exemplar-based techniques, kernel methods, decision trees (DT), random forests (RF), bagging, and boosting. Other commonly employed algorithms are logistic regression (LoR), linear regression (LR), linear discriminant analysis (LDA), k-nearest neighbor (k-NN), and support vector machine (SVM) [29,35,36,37,38,39]. Additionally, feature selection techniques such as ANOVA, chi-square, mutual information, and ReliefF are utilized to enhance accuracy [40]. In the case of blood gas sensors, accuracy can be increased through SMOTEENN sampling [41], kernel principal component analysis (KPCA), and adaptive boosting [38]. Additionally, the accuracy of respiratory rate monitoring can be enhanced through motion video magnification using the Hermite transform and an artificial hydrocarbon network (AHN) following classification of frames using Bayesian-optimized AHN [42].
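To make one of the listed algorithms concrete, the following minimal sketch implements k-NN classification by majority vote over the nearest training samples. The two-dimensional feature vectors (for example, mean and variance of acceleration magnitude) and the "rest" and "walk" labels are hypothetical and serve only to show the mechanics; the cited works use their own features and datasets.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples.

    train: list of (feature_vector, label) pairs.
    query: feature vector with the same length as the training vectors.
    """
    # Sort training samples by Euclidean distance to the query and keep k.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: (mean magnitude, variance) -> activity label.
train = [
    ((1.00, 0.10), "rest"), ((1.10, 0.20), "rest"), ((1.00, 0.15), "rest"),
    ((1.80, 0.90), "walk"), ((2.00, 1.10), "walk"), ((1.90, 1.00), "walk"),
]
print(knn_predict(train, (1.05, 0.12)))
```

Because k-NN relies on raw distances, features with different units would normally be scaled to a common range before classification.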

Furthermore, DL approaches are often applied to enhance accuracy. For instance, one-dimensional (1D) DL models such as GlucoNet are used for accurate blood glucose level measurement [25], while intraocular pressure sensors benefit from automated feature extraction using 1D CNNs [43]. DL-based algorithms, including deep neural networks (DNNs), graph neural networks (GNNs), deep belief networks (DBNs), generative adversarial networks (GANs), transformers, recurrent neural networks (RNNs), autoencoders, attention mechanisms, and CapsNets, as well as reinforcement learning (RL), are options for further improving accuracy [35,44]. Additionally, the accuracy of CBGM can be enhanced using the multilayer perceptron (MLP) approach. In this approach, data are categorized into classes such as hypoglycemia, non-diabetic, prediabetes, diabetes, severe diabetes, and critical diabetes. A patient-specific personalized model is then trained, with MLP used in the final stage for accurate prediction of blood glucose concentration [45]. Moreover, DL-based hybrid approaches have been applied for improving the accuracy of EMG by combining CNN and long short-term memory (LSTM) models [46]. Multiple DL models can be combined in an ensembling approach to increase the predictive accuracy of ECG-based sensor data [47].

3.3. Impact of Ambient Sensors in the IoMT

Ambient sensors play a critical role in monitoring the environment, enhancing safety, and automating processes across various industries. These sensors provide real-time data on environmental factors, enabling better decision-making, optimizing resource utilization, and improving the overall quality of life. For example, light sensors [48] can detect and measure the intensity of ambient light, allowing for automatic adjustments to lighting levels in response to changing daylight conditions. This enhances energy efficiency while contributing to the creation of a more comfortable and productive indoor environment. Temperature sensors can maintain optimal thermal comfort by monitoring indoor and outdoor temperatures, and can be utilized in heating, ventilation, and air conditioning systems to regulate temperature levels for maximum comfort and energy efficiency [48]. To control indoor humidity levels, humidity sensors can measure the moisture content in the air. This is particularly important for preventing mold growth, maintaining indoor air quality, and ensuring the longevity of sensitive equipment and materials [48].

Motion sensors detect movement within a specified area, triggering actions such as turning on lights or activating security alarms [49]. They are commonly used in security systems, occupancy detection systems, and home automation applications to enhance safety and convenience. The presence or absence of nearby objects can be identified through proximity sensors, facilitating touchless control and automation in various devices and systems [49]. They are employed in applications ranging from automatic door-opening systems to touchscreen devices and industrial machinery. Smoke sensors and gas sensors are critical for detecting potentially harmful substances in the air, including smoke, carbon monoxide, and other toxic gases. They are essential components of fire detection systems, home security systems, and industrial safety equipment, helping to prevent accidents and protect lives.

Sound sensors monitor ambient noise levels, generating valuable data for noise pollution monitoring, acoustic environment analysis, and sound-based applications such as voice-controlled devices and smart speakers [50]. Air quality sensors, CO2 sensors, and water quality sensors [48] measure pollutants and contaminants in the atmosphere and water sources, respectively. These sensors are essential for monitoring environmental pollution, assessing indoor air quality, and ensuring the safety of drinking water. Finally, magnetic switches offer detection capabilities for changes in magnetic fields, enabling diverse applications such as security systems, door/window sensors, and industrial automation. They provide reliable detection of opening and closing events, contributing to enhanced security and efficiency in various environments.

Figure 3 provides brief information on different ambient sensors used to create smart environments. These smart devices include smart infusion pumps and medicine dispensers, which transform patient dosage delivery systems by minimizing errors; additionally, smart thermostats, lights, locks, cameras, doors, home assistants, smoke detectors, beds, plugs, doorbells, and water flow detectors offer automated control, enhancing energy efficiency and ensuring security in homes, businesses, hospitals, and other settings. Detailed descriptions along with benefits, challenges, and integrations are provided in Table 3.

3.4. Influence of Actuators in the IoMT

Actuators translate electrical impulses into physical actions or movements, facilitating various functions that contribute to an individual’s wellbeing. In essence, actuators respond to instructions based on the data acquired by sensors, which are then processed and computed to execute the desired actions. For instance, sensors such as blood glucose monitors measure the body’s blood glucose levels, while actuators respond by regulating insulin levels. These actuators can be categorized into body-centric actuators [51] and environment-centric actuators. Body-centric actuators can be further classified into therapy (therapeutic), diagnosis (diagnostic), and treatment actuators [52]. Several body-centric actuators are described in Table 4. The main challenge for these body-centric actuators is to ensure safety and compatibility with the human body.

Table 4.

List of actuators used in healthcare.
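The sense, process, and actuate loop described at the start of this section can be sketched as follows. This is a toy illustration only and not a clinical algorithm: the function names, the qualitative commands, and the glucose thresholds are all hypothetical placeholders chosen for the example.

```python
def decide_pump_command(glucose_mg_dl, low=70, high=180):
    """Map a glucose reading to a qualitative actuator command.

    The thresholds are illustrative placeholders, not clinical guidance.
    """
    if glucose_mg_dl < low:
        return "suspend"
    if glucose_mg_dl > high:
        return "increase"
    return "hold"


def control_step(read_sensor, decide, actuate):
    """One pass of the loop: read the sensor, compute a command, drive the actuator."""
    reading = read_sensor()
    command = decide(reading)
    actuate(command)
    return reading, command


# Hypothetical stand-ins for a real sensor driver and actuator interface.
issued_commands = []
reading, command = control_step(
    read_sensor=lambda: 195,
    decide=decide_pump_command,
    actuate=issued_commands.append,
)
```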

In addition to body-centric actuators, which directly interact with the human body, other types of actuators play crucial roles in various healthcare applications. Surgical robot actuators, for instance, enable precise and controlled movements during surgical procedures, enhancing surgical outcomes and patient safety. Drug delivery pump actuators regulate the flow rate of medication administration, ensuring accurate dosage delivery to patients [51]. Similarly, infusion pump actuators facilitate the controlled infusion of fluids into patients’ circulatory systems, which is vital for administering medications and nutrients. Radiology table actuators enable precise positioning of patients during imaging procedures, ensuring optimal imaging quality while prioritizing patient comfort and safety. Rehabilitation robot actuators support physical therapy and rehabilitation exercises, providing varying levels of resistance and assistance to patients undergoing rehabilitation [51]. Despite their indispensable roles, these actuators pose challenges related to accuracy, compatibility, safety, and integration with existing healthcare systems. Addressing these challenges is paramount to harnessing the full potential of these actuators in improving healthcare delivery and patient outcomes.

These environment-centric actuators operate outside the human body and interact with features of the external environment such as medical devices, equipment, and facilities. They can be described as follows:

- Linear actuators: Linear actuators such as adjustable hospital beds, patient lifts, and wheelchair lifts are used in various healthcare applications. They enable smooth and precise linear motion, facilitating patient positioning and mobility assistance.

- Rotary actuators: Rotary actuators are employed in medical devices such as robotic surgical systems and diagnostic equipment. They provide rotational motion, allowing for precise positioning of medical instruments and imaging components [53].

- Pneumatic actuators: Pneumatic actuators utilize compressed air to generate motion and force. They are utilized in medical devices such as pneumatic compression sleeves for deep vein thrombosis prevention and pneumatic assistive devices for patient transfers and mobility aids [51].

- Electrical actuators: Electrical actuators are used in medical imaging equipment such as MRI machines and CT scanners. They enable precise control of moving parts within these devices, contributing to high-resolution imaging and diagnostic accuracy [53].

- Piezoelectric actuators: Piezoelectric actuators are employed in medical devices for precise positioning and manipulation at the microscale. They find applications in areas such as micromanipulation for surgical procedures, microfluidics for drug delivery, and nanotechnology for cellular manipulation [51].

- Hydraulic actuators: Hydraulic actuators use pressurized fluid to generate motion and force. They are utilized in medical devices such as hydraulic patient lifts, operating room tables, and hydraulic assistive devices for rehabilitation and physical therapy [53].

4. Significance of Communications in IoMT

The communication layer in IoMT systems serves as a foundation for enabling seamless connectivity, data exchange, and interaction between distributed devices, sensors, and systems within an ambient intelligent environment. This layer encompasses various communication technologies, protocols, and standards tailored to the specific requirements of IoMT applications. In other words, the communication medium layer is responsible for managing the underlying communication infrastructure, protocols, and technologies to ensure smooth connectivity, efficient data transmission, and reliable operation of IoMT systems.

4.1. Wireless Connectivity Services

The IoMT relies heavily on wireless connectivity technologies, with essential features including low latency, scalability, cost-effectiveness, flexible data transmission for varied data sizes, adaptable coverage areas, and optimal power consumption, along with the best possible network technologies [54]. In general, communication connectivity can be broadly categorized into short-range and long-range communication protocols based on the distance they serve [55]. Short-range communication technologies, including Wi-Fi (IEEE 802.11 [56]), Bluetooth (IEEE 802.15.1 [56]), Zigbee (IEEE 802.15.4 [56]), and Z-Wave, enable efficient communication over shorter distances within physical environments such as homes, offices, and industrial facilities. Long-range communication technologies, including cellular networks such as 4G LTE (Long-Term Evolution) and 5G, provide wide-area coverage for mobile devices, IoT applications, and virtual entities in the metaverse. Additionally, Wide-Area Networks (WANs), comprising satellite communication and terrestrial networks, extend communication coverage to connect devices, systems, and virtual entities across expansive geographic areas, including both physical and virtual spaces within the metaverse. The different networking interfaces in AMI are as follows:

- Wi-Fi: Wi-Fi is a wireless communication technology based on the IEEE 802.11 standard that enables devices to connect to a local area network (LAN) and communicate with each other and the Internet. Wi-Fi is commonly used in sensor networks for applications such as smart homes, smart cities, and industrial automation.

- WiMax: WiMax is a wireless broadband technology based on the IEEE 802.16 standard [57]. It supports data rates from a few Mbps to tens of Mbps, and enables point-to-multipoint and mesh network topologies for broadband access. WiMax finds applications in urban and rural internet access, cellular network backhaul, and last-mile connectivity.

- Bluetooth: Bluetooth is a short-range wireless communication technology that enables devices to connect and communicate over short distances. Bluetooth is commonly used in sensor networks for applications such as wearable devices, healthcare monitoring, and personal area networks (PANs).

- Zigbee: Zigbee is a low-power and low-data-rate wireless communication protocol based on the IEEE 802.15.4 standard. Zigbee is commonly used in sensor networks for applications such as smart homes, industrial automation, and smart cities due to its low power consumption and mesh networking capabilities.

- Z-Wave: Z-Wave is a wireless communication protocol designed for home automation and smart home applications. Z-Wave operates in the sub-GHz frequency range and is optimized for low-power devices and reliable communication in residential environments.

- Thread: Thread is a low-power mesh networking protocol designed for smart home applications. Thread operates on the IEEE 802.15.4 standard and provides IPv6 connectivity, enabling devices to communicate directly with each other and with the internet.

- LoRa (Long Range): LoRa is a long-range and low-power wireless communication technology designed for applications that require long-distance communication and low power consumption. LoRa is commonly used in sensor networks for applications such as smart agriculture, environmental monitoring, and asset tracking.

- Cellular Networks: Cellular networks such as 4G LTE and 5G are wide-area wireless communication networks that provide mobile connectivity and internet access to devices over large geographical areas. They are commonly used in sensor networks for applications such as smart cities, transportation systems, and remote monitoring.

- MBWA (Mobile Broadband Wireless Access): MBWA provides high-speed internet access to mobile devices using technologies such as 3G, 4G (LTE), and 5G. It supports high data rates and enables seamless mobility support for users. MBWA finds applications in mobile internet access, video streaming, online gaming, IoT connectivity, and other mobile applications.

- Optical Wireless Communications (OWC): OWC utilizes unguided visible, infrared (IR), or ultraviolet (UV) light to transmit signals, primarily for short-range communication purposes [58]. OWC systems operating within the visible band of 390–750 nm are referred to as visible light communications (VLC). VLC systems use light-emitting diodes (LEDs), and find applications in wireless local area networks, wireless personal area networks, and vehicular networks. Terrestrial point-to-point OWC systems, known as free space optical (FSO) systems, operate in the 750–1600 nm IR spectrum and provide high data rates. OWC systems operating in the UV spectrum function at wavelengths of 200–280 nm.

- Power Line Communication (PLC): PLC uses electrical wiring for data transmission. It enables communication over power lines with data rates ranging from a few hundred bps to tens of Mbps. PLC is used for point-to-point and point-to-multipoint communication in smart grid management, home automation, remote metering, and indoor networking.

4.2. Mesh Networking Technologies

Mesh networking technologies are indispensable for IoMT systems, as they facilitate the formation of self-configuring mesh networks among devices. In the IoMT context, mesh networks are characterized by a non-hierarchical topology in which multiple devices or nodes coexist, cooperate, and offer comprehensive coverage over a wider area. Protocols such as Zigbee and Thread are commonly employed to implement mesh networks, enabling direct communication between devices without the need for centralized infrastructure. Through mesh networking, devices can communicate with each other via multiple hops, leveraging dynamic routing and self-healing capabilities. This enhances network coverage, scalability, and reliability in IoMT environments [59]. Mesh networks typically adopt either full mesh or partial mesh topologies. In a full mesh topology, every node is directly connected to every other node, while in a partial mesh topology only certain nodes are directly connected. The choice between a fully or partially meshed network depends on factors such as overall network traffic. Although mesh networks offer increased stability, extended coverage range, and improved security, they also entail higher costs, complexity, scalability challenges, and potentially increased latency for the nodes.
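The multi-hop routing and self-healing behavior described above can be illustrated with a short sketch. It assumes a hypothetical partial mesh of five nodes and uses breadth-first search as a stand-in for a real routing protocol; Zigbee and Thread use their own routing mechanisms, which are not modeled here.

```python
from collections import deque


def full_mesh_links(node_count):
    """Number of direct links when every node connects to every other node."""
    return node_count * (node_count - 1) // 2


def shortest_route(links, source, target, failed=frozenset()):
    """Breadth-first search for a minimum-hop route that avoids failed nodes."""
    if source in failed or target in failed:
        return None
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in links.get(node, ()):
            if neighbor not in visited and neighbor not in failed:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no route exists


# Hypothetical partial mesh: only some node pairs are directly connected.
partial_mesh = {
    "sensor": ["relay_a", "relay_b"],
    "relay_a": ["sensor", "gateway"],
    "relay_b": ["sensor", "relay_c"],
    "relay_c": ["relay_b", "gateway"],
    "gateway": ["relay_a", "relay_c"],
}

normal_route = shortest_route(partial_mesh, "sensor", "gateway")
healed_route = shortest_route(partial_mesh, "sensor", "gateway", failed={"relay_a"})
```

When `relay_a` fails, traffic is rerouted through `relay_b` and `relay_c` at the cost of one extra hop, which illustrates the latency trade-off noted above. The link count of a full mesh grows quadratically with the number of nodes, which is one reason partial meshes are often preferred.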

4.3. Edge Computing/Fog Computing Technologies

Edge computing is seamlessly integrated into the communication layer of IoMT systems thanks to its ability to process data closer to the source, enabling faster response times, reduced latency, and enhanced overall system efficiency. Edge or fog computing supports real-time data processing and analysis, facilitating rapid decision-making and response to dynamic changes for both physical and virtual entities [60]. Additionally, edge computing reduces the need to transmit large volumes of raw data to centralized servers or cloud platforms, thereby alleviating network congestion and minimizing bandwidth usage. Fog computing, on the other hand, extends the capabilities of edge computing by providing a hierarchical architecture that includes intermediate fog nodes between edge devices and centralized cloud servers. Fog nodes act as intermediaries for data processing and storage, allowing for more efficient resource utilization and dynamic allocation of computational tasks based on local requirements.
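One way to picture the bandwidth saving described above is an edge node that forwards per-window summaries instead of raw samples. The sketch below is a simplified assumption of how such aggregation might look; the window size, alert threshold, and field names are hypothetical.

```python
from statistics import mean


def summarize_at_edge(samples, window=5, alert_threshold=120):
    """Reduce raw samples to one summary record per window.

    Only the summaries would be sent upstream; the raw samples stay local.
    """
    summaries = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        summaries.append({
            "mean": mean(chunk),
            "max": max(chunk),
            "alert": max(chunk) > alert_threshold,
        })
    return summaries


# Hypothetical heart-rate stream: ten raw samples become two summary records.
raw_stream = [70, 72, 74, 76, 78, 80, 85, 130, 90, 85]
upstream_records = summarize_at_edge(raw_stream)
```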

4.4. Metaverse

IoMT systems within the metaverse leverage advanced AI algorithms to analyze user behaviors, preferences, and interactions, enabling personalized experiences and intelligent decision-making. These AI-driven capabilities enhance user immersion and engagement within virtual environments while optimizing resource allocation and system performance. The metaverse serves as a virtual layer overlaying physical spaces, offering immersive experiences for work, play, and socialization. Within this virtual realm, users interact with digital representations of physical objects and environments, facilitated by XR technologies such as augmented reality (AR) and virtual reality (VR). Digital twins powered by real-world data enable predictive modeling and simulation of physical objects and spaces within the metaverse, enhancing realism and functionality [61].

4.5. Security and Privacy Mechanisms

Security and privacy mechanisms are essential for protecting data integrity, confidentiality, and privacy in both physical and virtual environments. Encryption, authentication, access control, and secure communication protocols (e.g., TLS/SSL) are implemented to mitigate risks associated with unauthorized access, data breaches, and cyberattacks in AMI systems, including those operating within the metaverse [61]. Cryptography plays a crucial role in ensuring data security by enabling secure communication despite potential threats. Encryption algorithms transform input (plaintext) into encrypted output (ciphertext) using keys. Symmetric key encryption methods include block ciphers, which split the message into fixed-size blocks and process each block with a cryptographic key, and stream ciphers, which encrypt and decrypt data as a continuous stream, typically one bit or byte at a time, using the same shared key. Asymmetric key encryption, also known as public-key encryption, uses two different keys, one for encryption and another for decryption, in contrast to symmetric key encryption, where the same key is used for both encryption and decryption.
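The symmetric stream cipher idea can be illustrated with a toy example in which the same key both encrypts and decrypts by XOR-ing the data with a key-derived keystream. This sketch is for illustration only and must not be used to protect real data: the keystream construction (SHA-256 over key, nonce, and counter) is an assumption made for the example, and production systems should rely on vetted cryptographic libraries and standardized ciphers.

```python
import hashlib


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from key, nonce, and a block counter."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        out.extend(block)
        counter += 1
    return bytes(out[:length])


def xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR with the keystream is its own inverse."""
    stream = keystream(key, nonce, len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


# Hypothetical key, nonce, and message.
shared_key = b"demo-shared-key"
nonce = b"unique-per-message"
plaintext = b"heart rate: 72 bpm"
ciphertext = xor_stream(shared_key, nonce, plaintext)
recovered = xor_stream(shared_key, nonce, ciphertext)
```

Because both parties need the same `shared_key`, distributing it safely is the main practical difficulty of symmetric schemes, which is the problem that asymmetric (public-key) encryption addresses.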

4.6. Communication Protocols

In general, communication protocols specify the rules and conventions for transmitting data over communication networks, thereby ensuring efficient and reliable communication. Communication protocols are crucial for modern interconnected systems, as they allow devices and applications to exchange data seamlessly. While there are many protocols available, a few stand out for their unique features. HTTP (Hypertext Transfer Protocol) is the foundation of the World Wide Web, and is used for client–server communication over the internet. For IoT and constrained environments, CoAP (Constrained Application Protocol) is lightweight and efficient, making it ideal for resource-constrained devices. AMQP (Advanced Message Queuing Protocol) and MQTT (Message Queuing Telemetry Transport) are messaging protocols, with AMQP prioritizing enterprise-grade reliability and MQTT focusing on lightweight publish–subscribe messaging. XMPP (Extensible Messaging and Presence Protocol) is used for real-time communication and fosters instant messaging and presence information exchange. CORBA (Common Object Request Broker Architecture) provides a framework for distributed object communication, while ZeroMQ offers a flexible socket-based messaging approach for high-performance applications. In industrial automation, DDS (Data Distribution Service) and OPC UA (Unified Architecture) ensure interoperability and real-time data exchange within distributed systems. Finally, DPWS (Devices Profile for Web Services) facilitates standardized communication for devices in a web services environment, helping to achieve interoperability in heterogeneous systems. Each of these protocols serves specific communication needs, collectively forming a diverse ecosystem essential for modern information exchange. These different communication protocols are explained briefly in Table 5:

Table 5.

List of communication protocols in the IoMT.

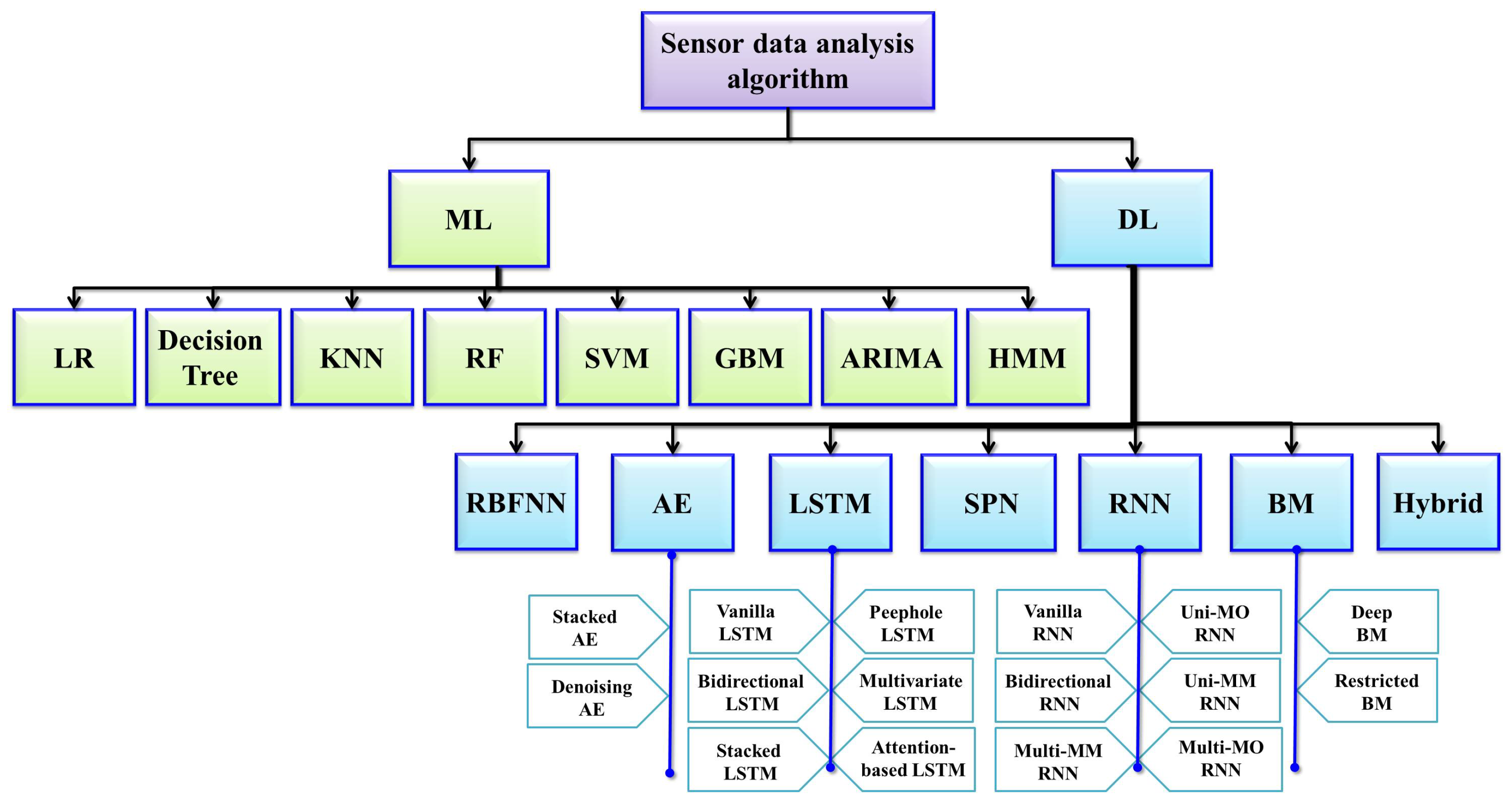

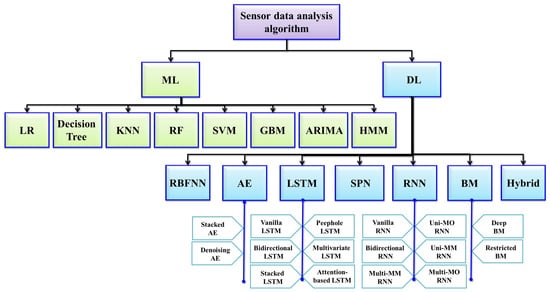

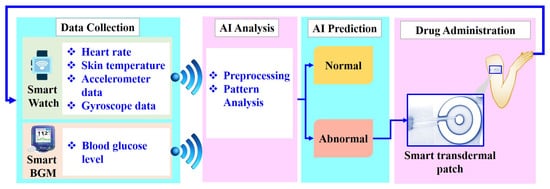
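The publish–subscribe pattern attributed to MQTT in Section 4.6 can be sketched with an in-memory broker. This is not an MQTT client and does not use any MQTT library; the class name, topic names, and payloads are hypothetical, and the topic matching is a simplified version of MQTT-style wildcards (`+` for one level, `#` for all remaining levels).

```python
def topic_matches(pattern, topic):
    """Simplified MQTT-style topic filter matching."""
    pattern_levels = pattern.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(pattern_levels):
        if level == "#":
            return True  # multi-level wildcard matches everything that remains
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(pattern_levels) == len(topic_levels)


class InMemoryBroker:
    """Toy broker that decouples publishers from subscribers."""

    def __init__(self):
        self._subscriptions = []

    def subscribe(self, pattern, callback):
        self._subscriptions.append((pattern, callback))

    def publish(self, topic, payload):
        delivered = 0
        for pattern, callback in self._subscriptions:
            if topic_matches(pattern, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


# Hypothetical ward monitor subscribing to heart-rate topics from any bed.
broker = InMemoryBroker()
received = []
broker.subscribe("ward/+/heart_rate", lambda topic, payload: received.append((topic, payload)))
delivered_count = broker.publish("ward/bed1/heart_rate", 72)
```

The publisher never needs to know which devices are listening, which is what makes the pattern attractive for lightweight, loosely coupled IoMT deployments.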

5. Utilization of AI Analysis in the IoMT

AI analysis techniques make up an important part of the foundation for the IoMT, enabling systems to become intelligent; AI-powered systems possess the ability to process, interpret, and extrapolate actionable insights from the extensive datasets acquired from both environmental and user interactions. These AI techniques use ML, DL, or RL algorithms to perform predictive analytics, pattern recognition for normal/anomaly detection, activity recognition, sentiment analysis, and behavior analysis. In turn, these techniques empower IoMT systems to discern user preferences, anticipate their needs, and provide personalized and context-aware services. By integrating the aforementioned AI analysis techniques, IoMT devices can deliver optimal user experiences, making AI a vital component of modern-day smart environments.

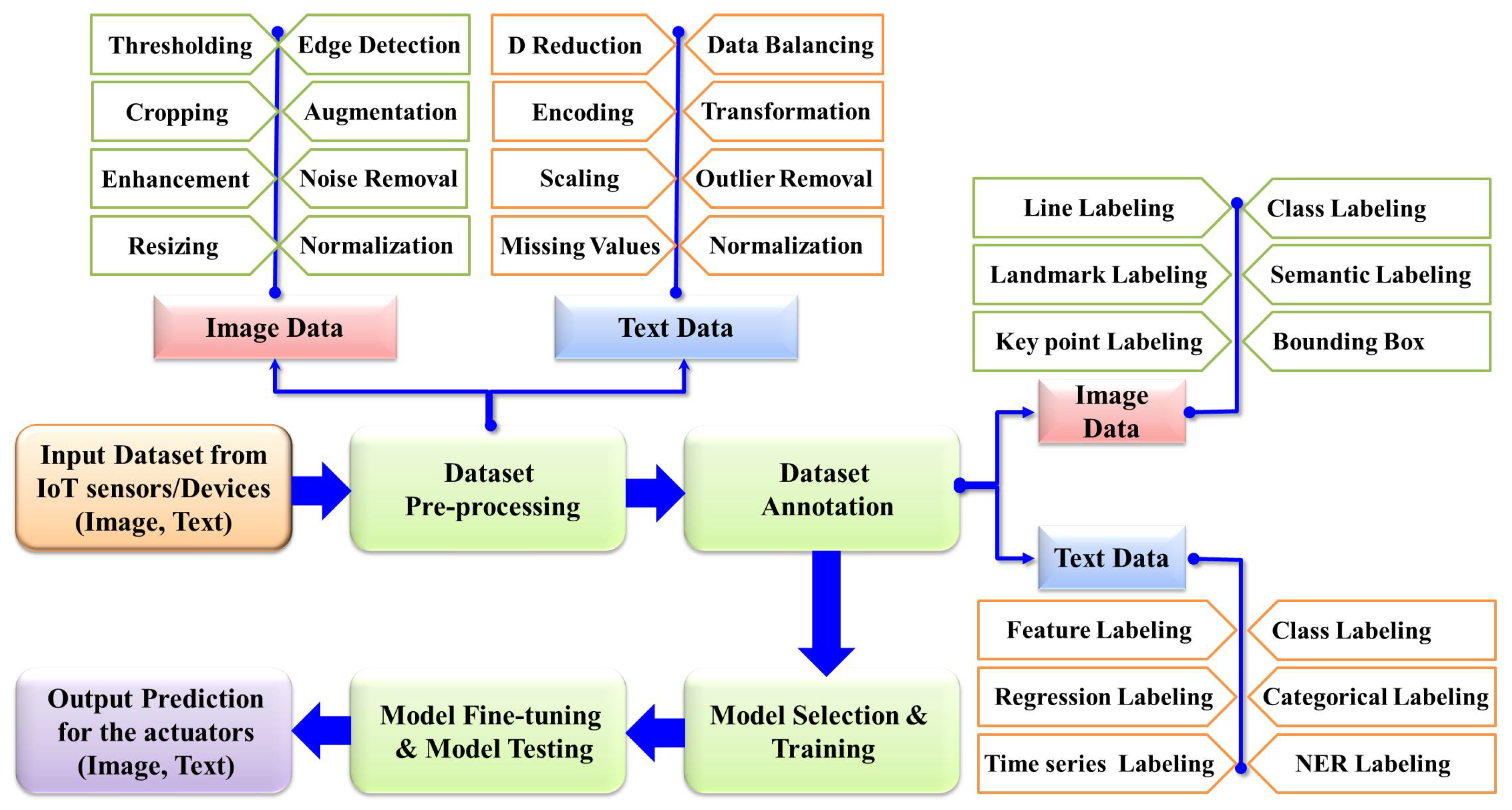

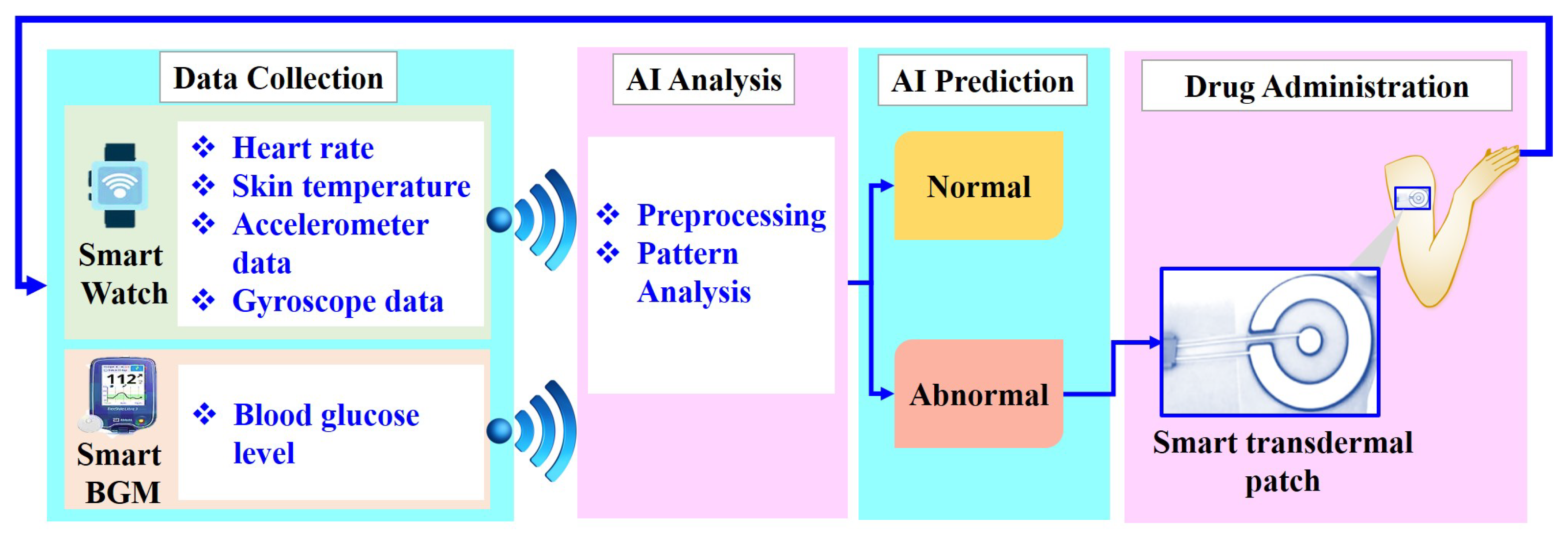

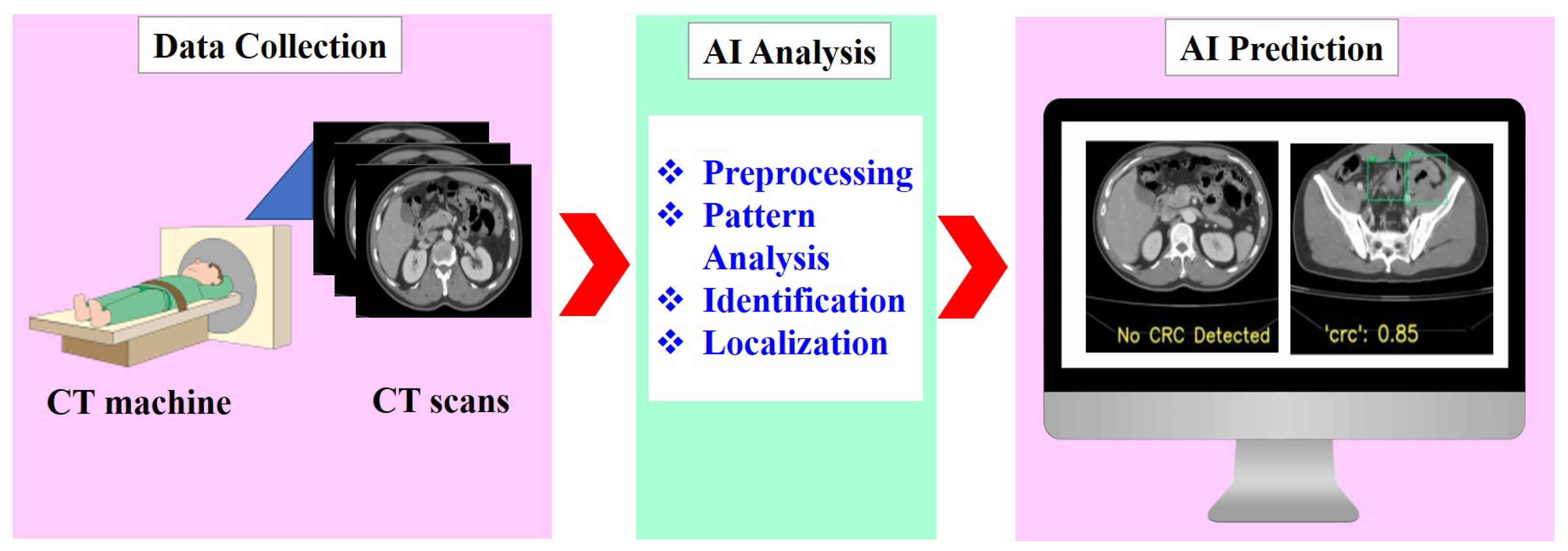

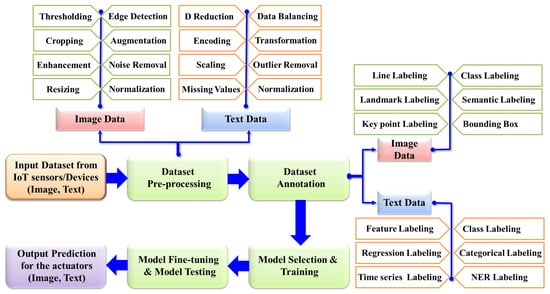

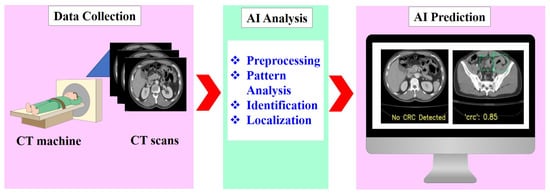

AI systems typically process data acquired from various sources, such as IoT sensors and devices, which may include images, videos, and text. However, these raw data often contain irrelevant information, noise, or missing values which must be addressed to prevent inaccuracies and misdiagnoses. Consequently, data preprocessing to enhance data quality and reliability is a critical initial step. In supervised learning paradigms, data annotation is a fundamental part of analysis. This process involves delineating the Region of Interest (RoI) to facilitate accurate interpretation of normal and abnormal conditions. Annotation is crucial during the model training phase, as it provides the ground truth necessary for learning. During the testing phase, the model’s performance is evaluated on preprocessed data, eliminating the need for further external labeling.

The rapid advancement of AI has led to the emergence of a wide array of models, each with distinct capabilities; therefore, selecting and training the most appropriate model is a meticulous process preceding final model derivation. After training, the model undergoes fine-tuning and cross-validation to enhance its accuracy further. Rigorous testing is then performed to ensure the model’s robustness and generalization. Upon validation, the final model is deployed in real-world scenarios to predict and detect abnormalities. For example, in neuroimaging analysis, AI models can directly identify abnormalities and present the results in image format on a monitor screen. In other applications, such as cardioversion devices, outputs are delivered through actuators that restore normal heart rhythm when arrhythmias are detected. This comprehensive AI analysis procedure is illustrated in Figure 4, with the details briefly described in the subsequent sections.

Figure 4.

General workflow of AI.
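The cross-validation step in this workflow can be sketched as a plain k-fold split. The function name and the sample counts below are illustrative assumptions; shuffling and stratification, which are commonly applied in practice, are deliberately omitted to keep the sketch short.

```python
def k_fold_indices(n_samples, k):
    """Split sample indices into k (training, validation) pairs.

    Each index appears in exactly one validation fold.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Distribute the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))
    folds = []
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        training = indices[:start] + indices[start + size:]
        folds.append((training, validation))
        start += size
    return folds


# Hypothetical dataset of 10 samples evaluated with 3 folds.
folds = k_fold_indices(10, 3)
```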

5.1. Dataset Description

IoMT and AI are both data-driven and therefore require large datasets for model training prior to deployment. These datasets serve as foundational resources for comprehending and leveraging the capabilities of interconnected devices and sensors. Acquiring such data is laborious and time-consuming; therefore, existing datasets can be leveraged to train initial models before deployment. In this section, various publicly available open datasets suitable for model training are presented. Table 6 lists popular sensor datasets together with the sensors from which they were collected and their application areas [70,71]. Moreover, some publicly available medical image datasets [72] are described in Table 7.

Table 6.

List of available sensor datasets.

Table 7.

List of available datasets for image/video processing in healthcare.

5.2. Data Preprocessing

An AI system initially preprocesses acquired image or text data using common techniques such as normalization and scaling. Image data often exhibit significant variations in pixel intensities due to factors such as lighting conditions or exposure settings, which can adversely affect model performance, particularly in medical imaging. Therefore, pixel intensities are normalized and scaled and edge detection techniques are applied to enhance or highlight object boundaries for better analysis.
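The intensity normalization mentioned above can be sketched as min-max scaling of a grayscale image stored as a list of rows. The function name and the tiny sample image are hypothetical; real pipelines would typically operate on array libraries rather than nested lists.

```python
def min_max_normalize(image, new_min=0.0, new_max=1.0):
    """Rescale pixel intensities linearly into [new_min, new_max]."""
    flat = [pixel for row in image for pixel in row]
    lowest, highest = min(flat), max(flat)
    if highest == lowest:
        # A constant image carries no contrast; map every pixel to new_min.
        return [[new_min for _ in row] for row in image]
    span = new_max - new_min
    return [
        [new_min + (pixel - lowest) / (highest - lowest) * span for pixel in row]
        for row in image
    ]


# Hypothetical 2 x 2 grayscale image with 8-bit intensities.
image = [[0, 128], [255, 64]]
normalized = min_max_normalize(image)
```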

Image enhancement methods include contrast enhancement techniques such as histogram equalization and contrast-limited adaptive histogram equalization (CLAHE), both of which improve contrast by redistributing pixel intensity values. Edge enhancement techniques emphasize object boundaries to make them more discernible, while unsharp masking and brightness correction through logarithmic or gamma adjustment are commonly used to improve visual quality. Noise removal techniques reduce unwanted random variations in pixel values. Common methods include Gaussian filtering, which smooths images, and median filtering, which effectively removes salt-and-pepper noise by replacing each pixel value with the median of the neighboring pixels. Advanced techniques such as bilateral filtering preserve edges while reducing noise, and wavelet-based denoising decomposes the image into different frequency components to remove noise selectively.
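As a small illustration of the median filtering described above, the following sketch replaces each pixel with the median of its neighborhood. It assumes a grayscale image stored as a list of rows and clips the window at the image borders; the function name and the sample image are hypothetical.

```python
from statistics import median_low


def median_filter(image, radius=1):
    """Replace each pixel with the median of its (2*radius+1)^2 neighborhood.

    The window is clipped at the borders, and median_low keeps the output
    restricted to intensity values that actually occur in the window.
    """
    height, width = len(image), len(image[0])
    result = []
    for r in range(height):
        new_row = []
        for c in range(width):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(height, r + radius + 1))
                for cc in range(max(0, c - radius), min(width, c + radius + 1))
            ]
            new_row.append(median_low(window))
        result.append(new_row)
    return result


# Hypothetical image with a single "salt" pixel in the center.
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
denoised = median_filter(noisy)
```

Unlike Gaussian smoothing, the isolated outlier is removed completely rather than being spread over its neighbors, which is why median filtering suits salt-and-pepper noise.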

The raw image data may be collected from different sensors, resulting in varied image resolutions. In the case of DL-based image analysis, models have specific image resolution requirements, which can range from 224 × 224 to 512 × 512 or larger depending on the model. Thus, all collected raw images must undergo resizing to ensure uniform resolution. Additionally, images may contain nonessential regions, especially in neuroimaging, where surrounding tissue areas may not be relevant to the analysis; removing these nonessential regions allows models to focus on the critical tissue parts, making automated cropping necessary. Moreover, in real-life situations abnormal data typically appear less frequently than normal data. To address this imbalance, data augmentation techniques are employed to increase the number of training samples and enhance data variability during training. This ensures that the model is exposed to a wider range of scenarios and can generalize better to unseen data.

Textual data preprocessing is essential for ensuring the accuracy and efficiency of AI models. Missing values in numerical or categorical data can be dealt with by replacing them with the mean, median, mode, or user-defined parameters. Categorical data may require encoding techniques such as one-hot encoding, label encoding, or developer-specific algorithms to transform them into a format suitable for analysis [106]. Dimensionality reduction techniques such as principal component analysis (PCA) or LDA are employed to mitigate the “curse of dimensionality”, enhancing computational efficiency. Outliers in text data are addressed using methods based on the Z-score or interquartile range (IQR). Additionally, windowing techniques can be applied to maintain time dependency in text datasets, with the size of the time window being either static or dynamic [8].
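Several of the steps in this paragraph can be sketched with the Python standard library alone. The function names and sample values are hypothetical, and the IQR factor of 1.5 is the conventional default rather than a value taken from the cited works.

```python
from statistics import mean, quantiles


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def one_hot(categories):
    """Encode each category as a 0/1 vector over the sorted vocabulary."""
    vocabulary = sorted(set(categories))
    return [[1 if c == v else 0 for v in vocabulary] for c in categories]


def iqr_outliers(values, factor=1.5):
    """Return values lying outside [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]


def sliding_windows(series, size, step=1):
    """Cut a time-ordered series into fixed-size (static) windows."""
    return [series[i:i + size] for i in range(0, len(series) - size + 1, step)]


# Hypothetical readings containing a missing value and an outlier.
filled = impute_mean([1.0, None, 3.0])
flagged = iqr_outliers([10, 11, 12, 13, 14, 15, 100])
windows = sliding_windows([1, 2, 3, 4, 5], size=3)
```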

Balancing text data is crucial for addressing class imbalances. One common approach is oversampling, where instances of the minority class are duplicated or synthetically generated using techniques such as SMOTE (Synthetic Minority Oversampling Technique). Undersampling involves reducing the number of instances in the majority class to match the minority class, though it risks losing valuable information. Data augmentation can also be employed, in which new text samples are created through paraphrasing, translating, or substituting words with their synonyms to increase the dataset’s diversity. Algorithmic approaches adjust class weights during training to penalize misclassification of the minority class more heavily, encouraging the model to learn from these instances. Combining these preprocessing methods can significantly enhance a model’s performance and robustness. By integrating these preprocessing techniques, AI models can better handle the complexities and variations inherent in textual data, leading to more accurate and reliable outcomes.
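Two of the balancing strategies above can be sketched briefly: inverse-frequency class weights and oversampling by duplication. Note that the oversampler below simply duplicates minority samples and is not SMOTE, which synthesizes new samples by interpolation. The function names, labels, and the weight formula n_samples / (n_classes × class_count) are assumptions chosen for illustration.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until all classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == label]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical imbalanced dataset: 8 normal records and 2 abnormal records.
labels = ["normal"] * 8 + ["abnormal"] * 2
samples = list(range(10))
weights = balanced_class_weights(labels)
balanced_samples, balanced_labels = random_oversample(samples, labels)
```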

5.3. Data Annotation

After preprocessing, dataset annotation is another important stage. In the annotation stage, the necessary labeled information is defined to enhance the understanding and usability of the data, which is particularly important for supervised learning. For image data, annotation involves labeling objects, regions, or attributes within images, enabling algorithms to recognize and interpret visual elements accurately for tasks such as object detection, segmentation, and classification. For instance, in brain tumor detection, bounding boxes or masks are generated, allowing the machine to learn the features associated with tumors and identify them during the testing phase. Similarly, annotation of text data involves labeling text segments with semantic or syntactic information, facilitating tasks such as sentiment analysis, named entity recognition, and document classification. For instance, the authors of [107] used labels such as the category-specific number of documents, number of documents that do not belong to any category, etc., to categorize text documents. Named entity recognition (NER) labeling identifies and categorizes entities within text, such as the names of persons, organizations, and locations. Annotation of the data enables more effective AI model training and evaluation, enhancing the accuracy, robustness, and generalization of the resulting model in real-world applications. Several popular data annotation methods and the related software are described in Table 8.

Table 8.

Different image annotation techniques and related software.
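The relationship between the two image annotation forms mentioned in Section 5.3, masks and bounding boxes, can be sketched as follows. The helper names, the inclusive pixel-coordinate convention, and the sample mask are assumptions made for this example; the intersection-over-union (IoU) helper is a common way to compare two box annotations.

```python
def mask_to_bbox(mask):
    """Tightest box (x_min, y_min, x_max, y_max), inclusive, around nonzero pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None  # empty mask: nothing annotated
    return (min(cols), min(rows), max(cols), max(rows))


def bbox_area(box):
    """Pixel area of a box with inclusive coordinates."""
    return (box[2] - box[0] + 1) * (box[3] - box[1] + 1)


def bbox_iou(a, b):
    """Intersection over union of two boxes with inclusive pixel coordinates."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]) + 1)
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]) + 1)
    intersection = inter_w * inter_h
    union = bbox_area(a) + bbox_area(b) - intersection
    return intersection / union


# Hypothetical 4 x 4 segmentation mask with a 2 x 2 annotated region.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
box = mask_to_bbox(mask)
```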

5.4. Model Selection and Training

Model selection is a critical aspect of IoMT systems, playing a pivotal role in optimizing performance and resource efficiency in terms of the total number of parameters, floating point operations, and generalization capabilities. The selected model must strike a balance between accuracy and computational efficiency, especially in resource-constrained settings such as smart homes and wearable devices. Additionally, the AI model must perform well in diverse conditions to ensure its robustness in practical applications. The interpretability of the model is critical in IoMT contexts, where the AI model’s decisions should be sufficiently understandable and explainable to establish user trust and acceptance. In essence, the choice of the model directly impacts the reliability, scalability, and effectiveness of AMI systems, making it a crucial factor to consider during the design and implementation of such systems.
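The parameter and operation counts used as selection criteria above can be estimated per layer with simple arithmetic. The sketch below assumes square kernels and counts multiply-accumulate operations (MACs) as a proxy for floating point operations; the function names and the example layer shapes are illustrative.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Trainable parameters of a 2D convolution with a square kernel."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def conv2d_macs(in_channels, out_channels, kernel_size, out_height, out_width):
    """Multiply-accumulate operations for one forward pass of the layer."""
    per_output_pixel = kernel_size * kernel_size * in_channels * out_channels
    return per_output_pixel * out_height * out_width


def dense_params(in_features, out_features, bias=True):
    """Trainable parameters of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)


# Example shapes: a 3 x 3 convolution from 3 to 64 channels on a 224 x 224
# output map, and a 4096-to-1000 fully connected layer.
first_conv_params = conv2d_params(3, 64, 3)
first_conv_macs = conv2d_macs(3, 64, 3, 224, 224)
classifier_params = dense_params(4096, 1000)
```

The example shows why both criteria matter: the convolution has few parameters but many operations, whereas the fully connected layer is the reverse, so a model that fits in memory on a wearable device may still be too slow, and vice versa.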

The analysis of images/videos is a multifaceted task, ranging from activity recognition, sentiment analysis, and behavior analysis to predictive analysis. These tasks rely on fundamental processes such as classification, segmentation, and detection. The integration of classification, detection, and segmentation techniques exhibits tremendous potential for augmenting the understanding and interaction of AI systems with their surroundings. Classification algorithms enable the identification and categorization of entities, events, or phenomena within the environment, providing important context for decision-making processes. By accurately classifying elements such as objects, sounds, and environmental conditions, AI systems can respond more intelligently to a wide range of situations. This in turn facilitates tasks such as smart hospital management, home automation, calorie intake monitoring, and personalized assistance.

5.4.1. Role of Classification Models

This section summarizes recent research on classification models, particularly in the healthcare context. A concise overview of these models is provided in Table 9, with areas for future exploration indicated as well.

Table 9.

Contemporary literature on the application of classification algorithms in healthcare.

Other popular classification models include AlexNet [121], which achieved significant success in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). This led to the development of other popular models such as the Visual Geometry Group Network (VGGNet) [121], which embraces simplicity through a uniform architecture characterized by small 3 × 3 convolutional filters, providing a sturdy framework for image recognition tasks. The VGGNet researchers experimented with the number of convolutional layers, resulting in variants such as VGG16 and VGG19, with 16 and 19 layers, respectively. CNN architectures have continued to evolve with the advent of Residual Networks (ResNets) [121], followed by inception-based models such as Inception V3 [121], Inception V4 [121], and InceptionResNetV2 [121]. Meanwhile, refined iterations of the ResNet architecture, including ResNet50V2 [122], ResNet101V2 [122], and ResNet152V2 [122], have emerged, incorporating enhancements such as identity mappings and bottleneck blocks that further improve their image classification performance. Feature reusability and feature propagation are further enhanced with the inclusion of densely connected blocks in DenseNet [121], where each layer receives inputs from all preceding layers. The inception architecture has been further extended by Xception, in which the standard convolutional layers are replaced by depthwise separable convolutional layers.
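The effect of replacing standard convolutions with depthwise separable ones, as described for Xception, can be illustrated by comparing weight counts. This is a minimal sketch under stated assumptions: the channel sizes (128 and 256) are chosen arbitrarily for the example, and biases are ignored.

```python
def standard_conv_params(c_in, c_out, kernel):
    """Weights of a standard convolution: every filter spans all input channels."""
    return kernel * kernel * c_in * c_out

def separable_conv_params(c_in, c_out, kernel):
    """Weights of a depthwise separable convolution:
    one k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise

# Arbitrary example sizes, not taken from any specific published model.
c_in, c_out, k = 128, 256, 3
std = standard_conv_params(c_in, c_out, k)
sep = separable_conv_params(c_in, c_out, k)
print(std, sep, round(std / sep, 2))  # 294912 33920 8.69
```

For 3 × 3 filters the saving approaches a factor of nine as the number of output channels grows, which helps explain why separable convolutions are attractive for the resource-constrained settings discussed earlier.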

5.4.2. Role of Segmentation Models

Segmentation plays a pivotal role in understanding complex environments. The process of segmentation can be broadly categorized into semantic segmentation, instance segmentation, and panoptic segmentation [123]. Semantic segmentation enables systems to recognize various elements within a scene, such as people, furniture, or vehicles, facilitating context-aware decision-making. In other words, semantic segmentation categorizes the contents of an image into ‘things’ or ‘stuff’. Instance segmentation enhances this by allowing systems to identify and track specific objects or individuals; that is, it highlights individual instances of ‘things’. Thus, instance segmentation enables personalized interactions and tailored responses. Panoptic segmentation further enriches this understanding by combining both semantic and instance-level information, empowering IoMT systems to interpret scenes comprehensively and respond adaptively to dynamic environments. This section provides a brief discussion of the application of semantic segmentation algorithms (Table 10), instance segmentation algorithms (Table 11), and panoptic segmentation algorithms (Table 12) in the healthcare context.
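The relationship between the three segmentation types can be sketched with small label maps. In the example below, a semantic map and an instance map are merged into a single panoptic map using the encoding `class_id * LABEL_DIVISOR + instance_id`. This encoding, the divisor value, and the toy class assignment (0 for floor as ‘stuff’, 1 for person as a ‘thing’) are assumptions made for illustration and are not prescribed by the works surveyed here.

```python
import numpy as np

LABEL_DIVISOR = 1000  # assumed encoding: class_id * LABEL_DIVISOR + instance_id

def to_panoptic(semantic, instance, thing_classes):
    """Merge per-pixel class labels and instance IDs into one panoptic map.
    'Stuff' pixels keep instance ID 0; 'thing' pixels keep their instance ID."""
    is_thing = np.isin(semantic, list(thing_classes))
    inst = np.where(is_thing, instance, 0)
    return semantic.astype(np.int64) * LABEL_DIVISOR + inst

# Toy scene: class 0 = floor ('stuff'), class 1 = person ('thing').
semantic = np.array([[0, 1, 1, 0],
                     [0, 1, 0, 1],
                     [0, 0, 0, 1]])
# Instance IDs distinguish the two people; floor pixels carry no instance.
instance = np.array([[0, 1, 1, 0],
                     [0, 1, 0, 2],
                     [0, 0, 0, 2]])

panoptic = to_panoptic(semantic, instance, thing_classes={1})
print(np.unique(panoptic))  # [   0 1001 1002]
```

The semantic map alone cannot tell the two people apart, and the instance map alone says nothing about the floor; the panoptic map carries both pieces of information for every pixel.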

Semantic segmentation models such as FCN [123], SegNet [123], UNet [123], and DeconvNet [123] have significantly advanced segmentation capabilities through innovative architectures and learning strategies. FCN, a pioneering architecture, demonstrated end-to-end learning for pixel-wise segmentation, utilizing both downsampling and upsampling paths to capture contextual information and recover spatial semantics. UNet, widely used in medical imaging, employs an encoder–decoder structure to extract features and derive semantically meaningful information. SegNet and DeconvNet also follow this architecture, emphasizing feature extraction and upsampling for effective segmentation. FusionNet [123], a variant of UNet, incorporates “summation-based skip connections” to create a deeper network, resulting in enhanced data abstraction capabilities. FuseNet [123], on the other hand, introduces cross-modal fusion to capture enhanced local and global characteristics from images, which is particularly beneficial in complex scenarios. E-Net [123] utilizes filter factorization to reduce network complexity while maintaining performance. These architectural innovations contribute to improved semantic segmentation performance across various applications.
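The difference between concatenation-based skip connections, as commonly used in UNet, and the summation-based skip connections attributed to FusionNet above can be sketched on feature-map shapes alone. The following is a simplified illustration without learned weights; the pooling and upsampling helpers and the tensor sizes are assumptions chosen for the example rather than details of the cited architectures.

```python
import numpy as np

def max_pool2x2(x):
    """Downsample a (C, H, W) feature map by 2 using max pooling (H, W even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2x(x):
    """Upsample a (C, H, W) feature map by 2 using nearest-neighbor repetition."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
enc = rng.random((8, 16, 16))      # encoder feature map (hypothetical size)
bottleneck = max_pool2x2(enc)      # downsampling path: (8, 8, 8)
dec = upsample2x(bottleneck)       # upsampling path:   (8, 16, 16)

concat_skip = np.concatenate([enc, dec], axis=0)  # channels double
sum_skip = enc + dec                              # channels unchanged

print(concat_skip.shape, sum_skip.shape)  # (16, 16, 16) (8, 16, 16)
```

Summation keeps the channel count fixed, so the decoder layers that follow need fewer weights than with concatenation, which is one plausible reason it suits deeper networks.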

Table 10.

Contemporary literature on the application of semantic segmentation in healthcare.

| Model Name | Brief Descriptions | Aspects to Address |
|---|---|---|
| FAM-U-Net [76] | Integrated a Multiscale Feature Extraction (MFE) module and a Deep Aggregation Pyramid Pooling Module (DAPPM) to extract the most pertinent features for fluid detection from optical coherence tomography (OCT) images. Additionally, a Convolutional Block Attention Module (CBAM) is utilized in the skip connection paths of UNet to enhance the segmentation. | Information related to model parameters or FLOPs is missing. |
| MEF-UNet [84] | Designed a selective feature extraction encoder with detail and structure extraction stages to capture lesion details and shape features accurately. Introduced a context information storage module in the skip connections to integrate adjacent feature maps and a multi-scale feature fusion module in the decoder section. | Information related to model parameters or FLOPs is missing. |
| MISSFormer [87] | Incorporated local and global context, along with global-local correlation of multi-scale features, into a position-free hierarchical U-shaped transformer architecture. | Incorporating enhanced local context for small RoI could enhance segmentation performance. Information related to model parameters or FLOPs is missing. |
| DRD-UNet [91] | UNet incorporating a pyramidal block with dilated convolution, residual connection, and dense layer, resulting in a total of 130 layers. | The model has high computational complexity (15.40 M), which exceeds that of the baseline UNet on the same dataset by 7.71 M. |
| Act-AttSegNet [93] | Incorporated an attention gate in SegNet’s skip connection and proposed a Fuzzy Energy-based ACM for vector-valued image segmentation, integrating the neural network with the ACM by utilizing predicted segmentation masks to remove manual contour initialization. | Information related to model parameters or FLOPs is missing. |
| Dense-PSP-UNet [124] | Using Dense-UNet as the backbone, incorporated a pyramid scene parsing module in the skip connections for extracting multiscale features and contextual associations. | Limited data size may lead to poor generalization of the model, increased risk of overfitting, and compromised performance when applied to larger datasets. |
| BowelNet [125] | Conducted joint localization of five bowel segments (duodenum, jejunum-ileum, colon, sigmoid, and rectum) with V-Net, and fine-tuned it using an ensemble multi-task segmentor to leverage meaningful geometric representations. | Segmentation quality can be enhanced by incorporating key points, anatomical information, attention mechanisms, and dilated convolutions. |
| SDNet [126] | Introduced two single-task branches to individually handle teeth and dental plaque segmentation, along with incorporating category-specific features through a contrastive and structural constraint module. | |
| M-Net [127] | Two SegNet models with distinct pooling strategies, namely max pooling and average pooling, are ensembled and concatenated using a shared softmax classifier. | The model has high computational and storage complexity due to the presence of two SegNet models. |
| MSFF Net [128] | Enabled multi-scale feature fusion, spatial feature extraction, channel-wise feature enhancement, refinement of segmentation borders, and focused attention. | Information related to model parameters or FLOPs is missing. |

ACM: Active Contour Model; SDNet: Semantic Decomposition Network; PSP: Pyramid Scene Parsing; DRD: Dilation, Residual, and Dense Block; FAM-U-Net: Multiscale Feature Aggregation and Double-Attention Mixed UNet; MSFF: Multi-Scale Feature Fusion Net; FLOPs: Floating Point Operations; WSI: Whole-Slide Imaging.

Table 11.

Contemporary literature on the application of instance segmentation in healthcare.

| Model Name | Brief Descriptions | Aspects to Address |
|---|---|---|
| IAFMSMB Net [92] | Utilized Instance Aware Filters and a multi-scale Mask Branch to generate a global mask and employed Conditional Encoding to enhance intermediate features. | The model struggled to separate small targets with unclear boundaries, reducing segmentation accuracy, especially when dealing with overlapping aggregations. Also, the denoising process often removed small objects, impacting segmentation quality. The multi-step denoising approach and the diffusion model resulted in slow inference times. |
| CoarseInst [104] | Weakly supervised framework with box annotations that includes coarse mask generation, self-training for instance segmentation, and a lightweight encoder with a cascade attention block for improved feature information. | Information related to model parameters or FLOPs is missing. |
| M-YOLACT++ [105] | Utilized ResNet-101 as the backbone network, followed by a multi-scale feature fusion (MSFF) module with criss-cross attention and convolutional block attention modules to aggregate contextual information from feature maps across all scales. | Information related to model parameters or FLOPs is missing. Incorporating prospective data could provide further insights into the model’s generalizability. |
| SibNet [129] | Utilized a seed map to reduce overlap between instances, aiding counting and generating an instance segmentation map that accurately depicts individual instances’ arbitrary shapes and sizes, even under occlusion. | The model can be extended to count fractional items and has been considered for homogeneous food counting, where each item corresponds to one food, preventing the identification of multi-dish foods. |
| SPR-Mask R-CNN [130] | Combined ResNet101 with a Feature Pyramid Network (FPN) to extract a multi-scale feature map, along with the RoIAlign method for processing features at different scales. | Improvement is required for the identification of small organs such as endothyroid vessels. It cannot differentiate anatomically symmetrical structures such as the left and right thyroid lobes. |
| MSS-WISN [131] | Incorporated a feature extraction network to enhance feature expression and a feature fusion network to emphasize salient features, mitigating the impact of scale variations. | Information related to model parameters or FLOPs is missing. |
| DSCA-Net [132] | Incorporated a hierarchical feature extraction module in the encoder, a feature attention mechanism in the decoder network, and deep scale feature fusion in both encoder and decoder. | The model has higher computational complexity (32.50 M) in comparison to ResUNet++ (20.42 M). |
| FoodMask [133] | Utilized FPN as a backbone for feature extraction, integrating clustering concepts for food instance counting, segmentation, and recognition. | The food counting technique is not class-agnostic, posing challenges for its application across a wide variety of foods. |

M-YOLACT++: Modified YOLACT++; MSS-WISN: Multi-Scale and Multi-Staining White Blood Cell Instance Segmentation Network; IAFMSMB Net: Instance-Aware Filters and Multi-Scale Mask Branch; DSCA-Net: Double-Stage Codec Attention Network.

Table 12.

Contemporary literature on the application of panoptic segmentation in healthcare.

| Model Name | Brief Descriptions | Aspects to Address |
|---|---|---|
| Hybrid-PA-Net [74] | Panoptic segmentation head integrates pixel-wise classification from GCNN-ResNet50 with instance output of PANet. | Incorporating prospective data could provide further insights into the model’s generalization. |
| PanoforTeeth [80] | Dual-path transformer block integrates various attention mechanisms including pixel-to-memory feedback attention, pixel-to-pixel self-attention, and memory-to-pixel and memory-to-memory self-attention. It also incorporated a stacked decoder block for aggregating multi-scale features across various decoding resolutions. | The model is computationally expensive and lacks generalization as it is trained on a single-center dataset. |
| ms-SP Net [81] | Utilized ms-SP along with connection between multi-scale features and spatial RoI characteristics | The model is parametric. |
| VertXNet [83] | Combined UNet and MaskRCNN with their own ensemble rule for the generation of unified segmentation results. | The method lacks an end-to-end approach as both UNet and MaskRCNN were trained separately. The ensemble rule was manually crafted, lacking generalization. Effective localization of ‘S1’ and ‘C2’ vertebrae directly influences vertebra localization. |

ms-SP: Multi-Scale Spatial Pooling.