Overview of AI-Models and Tools in Embedded IIoT Applications

Abstract

1. Introduction

1.1. Motivations and Contributions

- Reduced latency and communication overhead: Integrating AI models directly into embedded devices allows data to be processed and analyzed on-site, reducing the need to transmit large amounts of data to remote servers for processing. This significantly reduces latency and communication overhead, enabling faster and more responsive decisions [1,2,3].

- Energy savings and resource optimization: Processing data directly on embedded platforms reduces overall system power consumption, as it eliminates the need to transmit data over long distances and run complex artificial intelligence algorithms on remote servers. Furthermore, optimizing the computing and memory resources of embedded devices allows for efficient implementation of artificial intelligence models even in the presence of resource constraints [4,5,6,7].

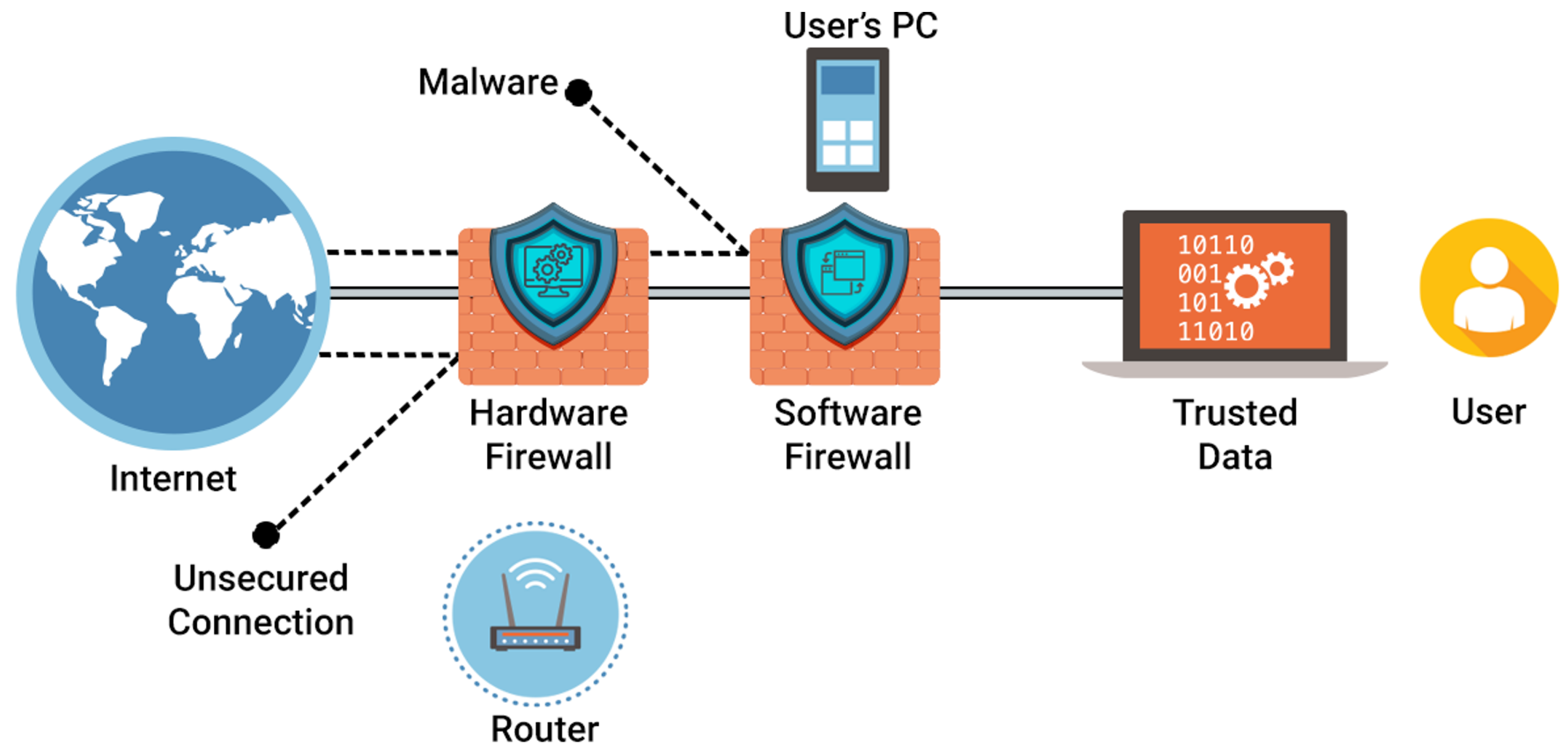

- Improved data security and privacy: Integrating AI models on embedded platforms helps to keep sensitive and critical data within the enterprise perimeter, reducing the risk of security breaches and improving data privacy. Furthermore, local data processing allows encryption and cybersecurity techniques to be applied directly on embedded devices, ensuring greater protection of sensitive information [8,9,10,11].

- Increased system resilience and availability: The integration of artificial intelligence models on embedded platforms makes IIoT systems more resilient and autonomous, capable of continuing to operate even in the absence of a network connection or in adverse environmental conditions. This is especially crucial in industrial environments where business continuity is essential for the safety and efficiency of operations [12,13,14].

1.2. Background on the IIoT

1.3. Background on AI for the IIoT

- Machine Learning: A sub-discipline of AI that focuses on training computers to learn from data without being explicitly programmed. Machine learning algorithms enable computers to identify patterns and relationships in data, allowing them to make predictions or decisions based on new unseen data. This capability makes machine learning particularly useful in tasks such as predictive modeling, classification, clustering, and anomaly detection. By iteratively learning from data, machine learning models can improve their performance over time and adapt to changing conditions [74,75,76,77].

- Artificial Neural Networks: These are mathematical models inspired by the functioning of the human brain, composed of interconnected artificial neurons organized in layers. Neural networks are capable of learning complex patterns and relationships in data through a process called training. During training, the network adjusts the weights of connections between neurons in order to minimize the difference between the predicted and actual outcomes. Neural networks have demonstrated remarkable performance in various tasks, including image recognition, natural language processing, speech recognition, and time series prediction. Their ability to automatically extract relevant features from raw data makes them a powerful tool in machine learning and AI applications [78,79,80].

- Supervised and Unsupervised Learning: In supervised machine learning, the model is trained on a set of labeled data, where each example is associated with a target variable or outcome. The model learns to map input features to the corresponding target values, enabling it to make predictions on new unseen data. Supervised learning algorithms include regression for predicting continuous outcomes and classification for predicting categorical outcomes. Unsupervised machine learning, on the other hand, trains the model on unlabeled data, with the goal of identifying patterns or structures in the data without explicit guidance. Unsupervised learning techniques include clustering, dimensionality reduction, and anomaly detection. These methods are valuable for exploring and understanding the underlying structure of data, uncovering hidden patterns, and generating insights without the need for labeled examples [81,82,83].

- Fault Prediction is crucial in preventing unplanned and costly downtime in the IIoT space. Using machine learning algorithms, sensor data can be analyzed to identify patterns and warning signals that may indicate imminent failures in industrial equipment. This allows for timely preventive interventions to avoid costly breakdowns, thereby extending the useful life of systems. Traditional preventive maintenance methods can be limited by a lack of real-time data and an inability to accurately predict failures. AI overcomes these limitations by enabling more accurate and timely predictive analytics [84,85,86,87].

- Process Optimization is essential to maximizing efficiency and reducing costs in industrial environments. AI can identify inefficiencies in manufacturing processes by analyzing data in real time and suggesting improvements. This can include production line optimizations, waste reduction, and optimization of processing times. Traditional methods of process optimization can be limited by difficulty in detecting inefficiencies and identifying areas for improvement. AI overcomes these limitations by offering deeper and more proactive analysis of data [88,89,90].

- Predictive Maintenance allows for the prediction of when a plant or machine will require maintenance, thereby avoiding unexpected and costly downtime. By analyzing sensor data in real time, it is possible to detect signs of impending failure and plan preventative interventions before a failure occurs. Traditional methods of scheduled maintenance can be ineffective and expensive, as they rely on fixed maintenance intervals rather than the actual needs of the facility. AI overcomes these limitations by enabling more targeted and data-driven maintenance [91,92,93,94].

- Product Quality Control is essential in order to ensure that manufacturing standards are met and that products meet customer expectations. AI can monitor product quality by identifying defects or anomalies during the manufacturing process and taking corrective measures in real time. This can improve customer satisfaction, reduce waste, and increase profitability. Traditional quality control methods can be limited by subjectivity and slowness in detecting defects. AI overcomes these limitations by offering objective and immediate analysis of production data [95,96,97].

- Cybersecurity is crucial in the IIoT to protect industrial systems and data from cyber threats and attacks. AI techniques such as anomaly detection and behavior analysis can help to identify suspicious activities and potential security breaches in real time, allowing for timely responses that mitigate risks. Traditional cybersecurity measures may not be sufficient to address the evolving nature of cyber threats in IIoT environments.

- Machine Control and Optimization: With the increasing connectivity of industrial machinery via the IIoT, AI is currently playing a crucial role in enhancing machine control and optimization. By leveraging AI algorithms, real-time data from interconnected machines can be analyzed to optimize machine performance, minimize downtime, and maximize production efficiency. AI-powered control systems can actively adjust machine parameters such as speed, temperature, and pressure to optimize production processes and ensure product quality. Additionally, predictive maintenance algorithms can anticipate machinery failures, allowing for proactive maintenance interventions to prevent costly breakdowns. AI-driven machine control and optimization contribute to overall operational excellence in industrial settings [105,106,107,108,109,110,111].
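As a minimal sketch of the supervised training process described above (weights adjusted to minimize the difference between predicted and actual outcomes), the following trains a single sigmoid neuron to flag faulty equipment from two sensor features. The feature names, the sample readings, the learning rate, and the helper functions `train_neuron` and `predict` are illustrative assumptions and are not taken from the works cited here.

```python
import math


def sigmoid(z):
    """Logistic activation mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, labels, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron with batch gradient descent on the log loss."""
    n_features = len(samples[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # difference between predicted and actual outcome
            for i in range(n_features):
                grad_w[i] += err * x[i]
            grad_b += err
        # Move the weights against the averaged gradient.
        w = [wi - lr * gw / m for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict(w, b, x):
    """Return 1 (fault expected) if the neuron output is at least 0.5, else 0."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


# Hypothetical, normalized readings: (vibration level, temperature).
healthy = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.30), (0.30, 0.25)]
faulty = [(0.80, 0.90), (0.90, 0.70), (0.75, 0.85), (0.95, 0.80)]
samples = healthy + faulty
labels = [0] * len(healthy) + [1] * len(faulty)

w, b = train_neuron(samples, labels)
print("low readings  ->", predict(w, b, (0.20, 0.20)))
print("high readings ->", predict(w, b, (0.85, 0.90)))
```

A deployed fault predictor would typically use a deeper network and far more data; the sketch only makes the weight-update loop concrete.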

2. Methodology of This Overview

2.1. Scope and Selection Criteria

- Relevance: Models and applications that are directly applicable to IIoT scenarios, particularly in areas such as predictive maintenance, quality control, supply chain management, and energy optimization.

- Impact: Research and case studies that demonstrate measurable improvements in efficiency, accuracy, or productivity due to the application of deep learning in the IIoT.

- Novelty: Inclusion of both well-established models (e.g., CNNs, RNNs, LSTMs) and emerging models (e.g., GANs, autoencoders) to provide a broad perspective on current trends and innovations.

- Publication Quality: Preference for peer-reviewed articles, high-impact conference papers, and reputable technical reports to ensure the reliability and validity of the information presented.

2.2. Evaluation Methods

- Quantitative Evaluation:

  - Performance Metrics: Analysis of key performance indicators (KPIs) such as accuracy, precision, recall, F1-score, and mean squared error (MSE) to evaluate the effectiveness of deep learning models.

  - Computational Efficiency: Assessment of the computational requirements, including training time, inference speed, and resource consumption, to gauge the practicality of deploying these models in industrial environments.

  - Scalability: Examination of the scalability of models, considering their ability to handle the large-scale data and real-time processing demands typical in IIoT applications.

- Qualitative Evaluation:

  - Applicability: Evaluation of the relevance and applicability of models to various IIoT domains through case studies and real-world implementations.

  - Adaptability: Consideration of the adaptability of models to different industrial contexts and their ability to integrate with existing IIoT infrastructure.

  - Innovative Contributions: Identification of novel contributions and advancements made by the models in improving industrial processes and addressing specific challenges in IIoT.
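The performance metrics named under the quantitative evaluation (accuracy, precision, recall, F1-score, and MSE) follow standard definitions, which can be sketched as below. The label vectors and the function names are hypothetical and serve only to show how each value is computed.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1-score as a dictionary."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical defect-detection labels (1 = defect) and model outputs.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)

# Hypothetical regression targets, e.g., a predicted temperature.
mse = mean_squared_error([2.0, 4.0, 6.0], [2.5, 3.5, 6.0])
print(mse)
```

Precision and recall are reported separately because, in fault or defect detection, a missed fault and a false alarm usually carry different costs.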

2.3. Analysis and Synthesis

- Comparative analysis of different deep learning models in terms of their performance and applicability in IIoT contexts.

- Synthesis of findings to highlight best practices, challenges, and future directions in the integration of deep learning with IIoT.

3. AI Models in IIoT Applications

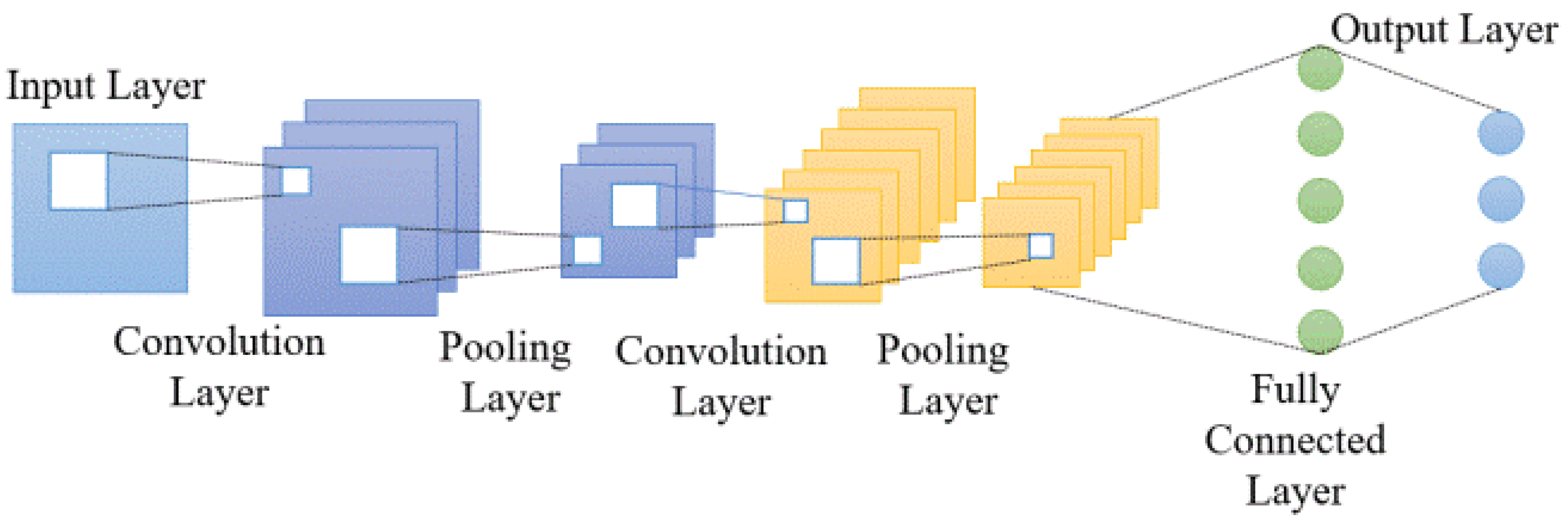

3.1. Convolutional Neural Networks

3.2. Recurrent Neural Networks

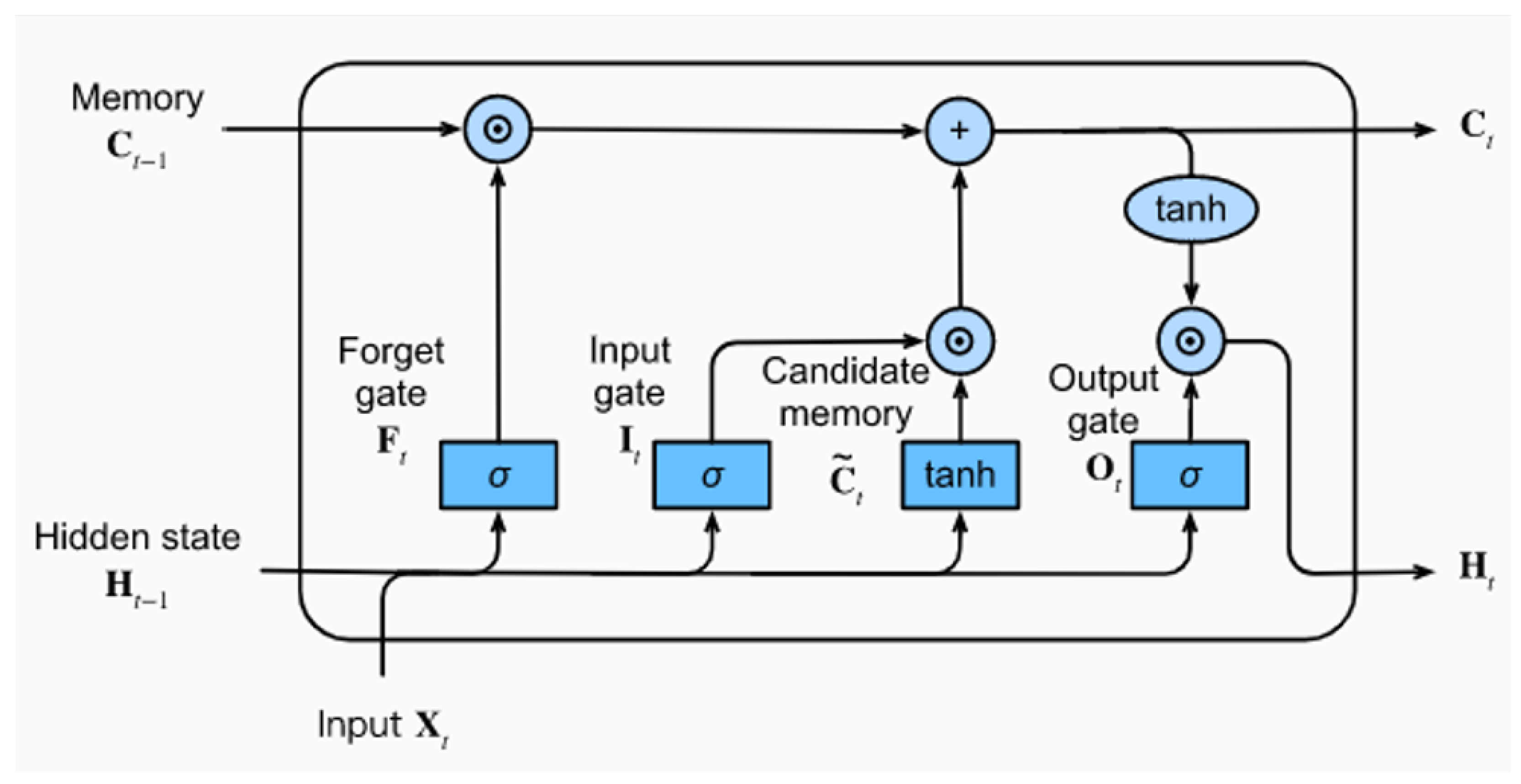

3.3. Long Short-Term Memory

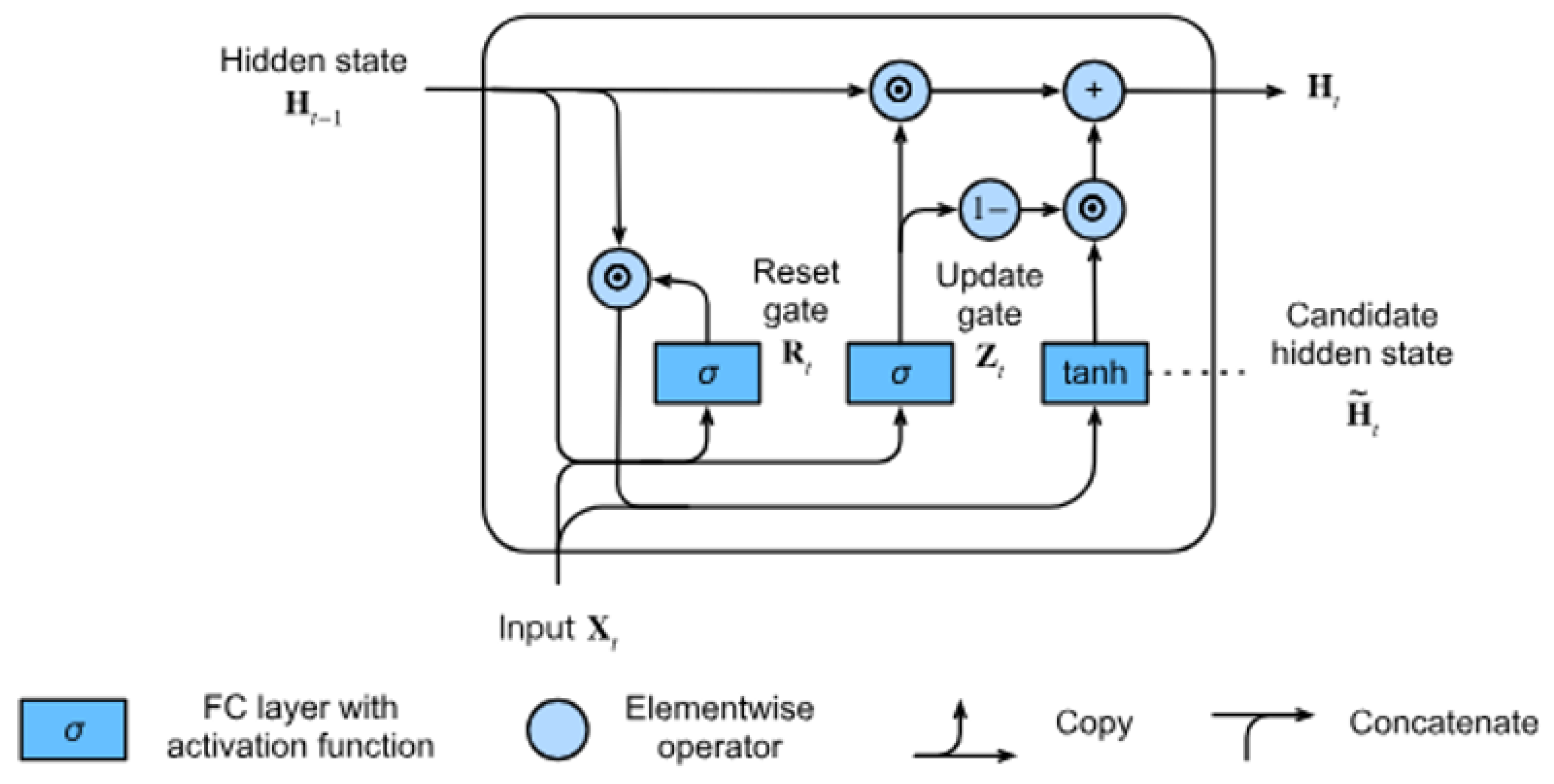

3.4. Gated Recurrent Unit

3.5. Generative Adversarial Networks

3.6. Autoencoder Neural Networks

3.7. Summary of AI Model Characteristics

3.8. IIoT Applications for Industry

4. Tools and Devices for Embedded AI in IIoT Applications

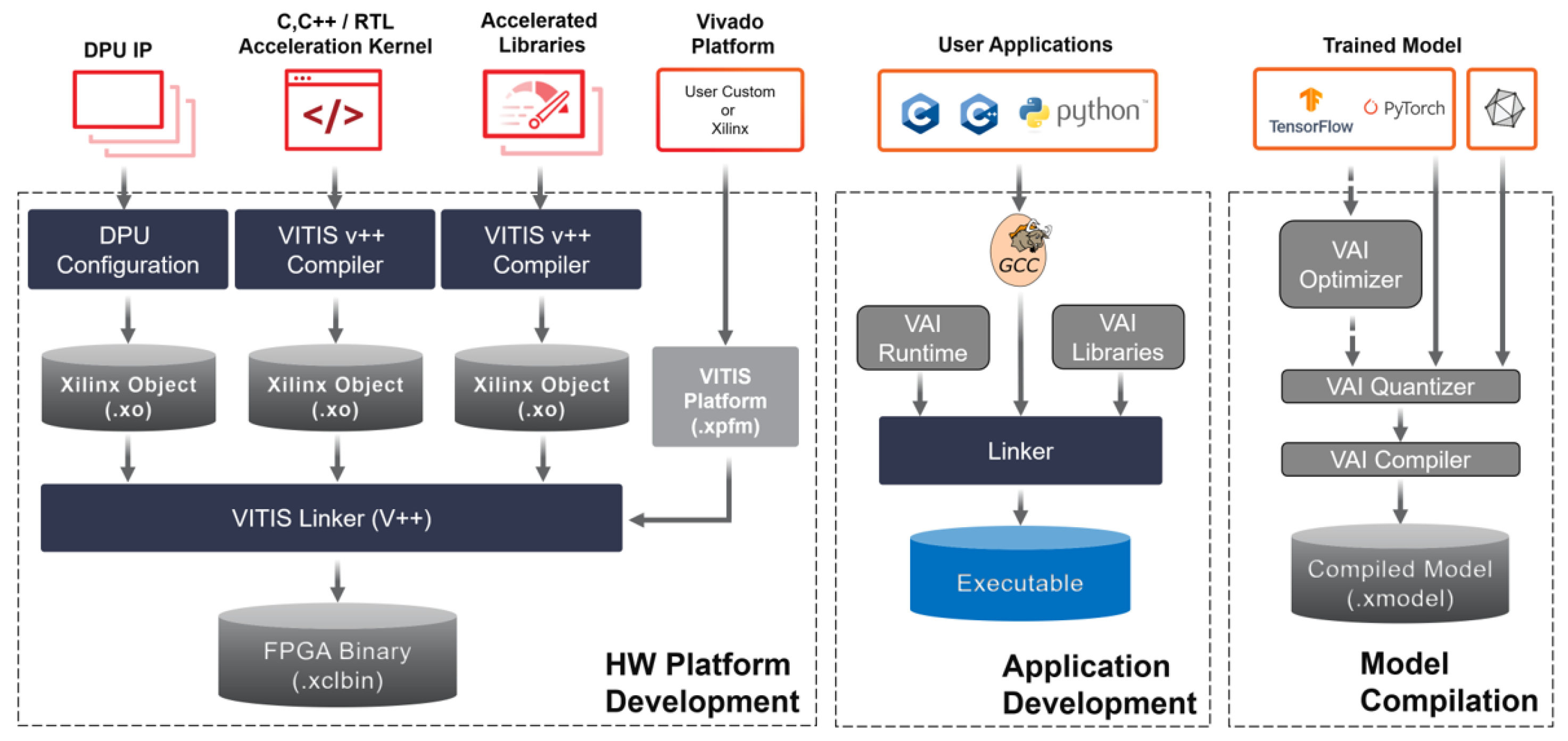

4.1. Vitis AI

4.2. TensorFlow Lite

4.3. TensorFlow Lite for Microcontrollers

4.4. STM32Cube.AI

4.5. ISPU

4.6. Renesas E-AI

4.7. Hailo-8

- A dataflow compiler tasked with generating a binary file tailored for the Hailo-8 processor from a pretrained model acquired from third-party high-level frameworks such as TensorFlow v2.15 or PyTorch v2.3. This software suite also incorporates optimizations, including quantization, to maximize the utilization of Hailo-8 hardware resources, alongside profiling information.

- HailoRT, providing C/C++ and Python APIs to enable seamless interaction between host-running applications and the Hailo-8 processor for executing compiled NN models. Additionally, it furnishes a GStreamer element to integrate NN inference seamlessly into a GStreamer pipeline.

- A model zoo [168] housing pretrained models tailored for computer vision tasks.

- A model explorer to assist users in selecting the most suitable models from the model zoo based on specific application requirements such as accuracy and frames per second (FPS).
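The quantization step mentioned for the dataflow compiler can be illustrated generically. The sketch below shows symmetric per-tensor INT8 quantization of a weight vector; it is an assumption-laden illustration of the general technique, not the scheme actually used by the Hailo toolchain, and the function names and weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale factor for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate floating-point values."""
    return [v * scale for v in q]


# Hypothetical FP32 weights of one layer.
weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("scale:", scale)
print("max reconstruction error:", max_error)
```

The rounding error per weight is bounded by half the scale factor, which is why tensors with a few large outliers tend to quantize poorly under a single per-tensor scale.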

4.8. Google Edge TPU

4.9. Nvidia Jetson Orin Nano

4.10. Intel Movidius Myriad X VPU

4.11. NXP SW and HW Solutions

4.12. Nordic Semiconductor HW Solution

- nRF52 and nRF53 Series Bluetooth SoCs: These SoCs are now capable of running AI and ML features through a partnership with Edge Impulse, a leading provider of “tiny ML” tools. This integration allows for easy-to-use AI and ML features on resource-constrained wireless IoT chips, making them accessible to a broader range of applications [190].

- Arm Total Access: Nordic Semiconductor has adopted Arm Total Access to advance AI and ML capabilities at the edge. This subscription provides advanced access to multiple Arm products, including Cortex CPUs, Ethos NPUs, and the CoreLink System IP. This integration enables Nordic to access greater ML capabilities and computing resources for advanced IoT applications [191].

- Atlazo Acquisition: Nordic Semiconductor has acquired the IP portfolio of Atlazo, a US-based technology leader in AI/ML processors, sensor interface design, and energy management for tiny edge devices. This acquisition enhances Nordic’s position in low-power products and solutions for IoT applications and accelerates its strategic development initiatives, particularly in health-related applications [192].

4.13. Infineon AURIX HW Solutions

| Name | Supported High-Level Frameworks | Supported Hardware | Weight Data Types | Typical Applications |
|---|---|---|---|---|
| Vitis AI | TensorFlow v2.15, PyTorch v2.3, ONNX v1.16.1 | Zynq™ UltraScale+™ MPSoC, Versal™ adaptive SoCs, and Alveo™ platforms | INT8 | FPGA-based accelerators implementation [196,197,198] |
| TensorFlow Lite Micro | TensorFlow Lite v2.15 | Microcontroller-based platforms (e.g., Cortex-M-based platform) | INT8 | Real-time compact ML/AI MCU Integration [199,200,201] |
| STM32Cube.AI | TensorFlow v2.15, ONNX v1.16.1 (e.g., PyTorch v2.3, Matlab v2023b, and Scikit-learn v1.15) | STM32 microcontrollers | FP32, INT8 | Tiny ML/AI for Edge IIoT [202,203,204] |
| ISPU | custom C-code algorithms | STM32 microcontrollers | full precision to 1-bit NNs | Integration of In-device ML/AI model for sensors [205,206] |
| Renesas e-AI | ONNX (e.g., TensorFlow, PyTorch, etc.) | Renesas RZ/V series | INT8 | AI-based Device Fingerprinting [207,208] |
| Hailo-8 | Keras v2.16, TensorFlow v2.15, TensorFlow Lite v2.15, PyTorch v2.3, and ONNX v1.16.1 | boards featuring the Hailo processor | INT4-8-16 bits | Accelerated AI-based IoT systems [209,210,211] |
| Edge TPU | TensorFlow Lite compiled for the Edge TPU | boards featuring the Edge TPU | INT8 | real-time high-speed computation for edge computing [212,213] |
| Jetson Orin Nano | Any framework compatible with Nvidia Ampere GPU | Nvidia Jetson Orin Nano w/o development kit | Any data type supported by the Ampere GPU | ML/AI-based Image and Video processing on embedded devices [214,215,216] |
| Myriad X | TensorFlow v2.15, Caffe v.2.10 | Intel Neural Compute Stick 2 and other boards featuring the Myriad X VPU | FP16, fixed point 8-bit | Accelerating AI and Computer Vision for Satellite Applications [180,217] |
| NXP eIQ | TensorFlow Lite & micro v2.15, Glow v10.9, CMSIS-NN v6.6.0 | NXP EdgeVerse MCU and microprocessors (i.e., i.MX RT crossover MCUs, and i.MX family) | FP32, INT8 | AI-based Automotive Cybersecurity [218,219] |
| Nordic Semiconductor | TensorFlow Lite for Microcontrollers v2.15 | Nordic Semiconductor nRF5340 and nRF9160 SoCs | INT8 | real-time embedded localization systems [220,221,222] |
| Aurix | TensorFlow Lite, PyTorch, ONNX | Aurix TC2xx and TC3xx microcontrollers | FP32, INT8 | real-time control/monitoring algorithm for automotive [223,224,225] |
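The weight data types listed in the table largely determine how much memory a model's parameters occupy on a target device. As a rough sketch, assuming a hypothetical model with 250,000 parameters, the storage needed for the weights alone can be estimated as follows; activations, runtime buffers, and quantization metadata are deliberately ignored, and the function name is illustrative.

```python
# Bit widths of the weight data types that appear in the table above.
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weight_storage_bytes(num_parameters, dtype):
    """Bytes needed to store the weights alone, rounded up to whole bytes."""
    bits = BITS_PER_WEIGHT[dtype]
    return (num_parameters * bits + 7) // 8


num_parameters = 250_000  # hypothetical model size, not taken from the source

for dtype in ("FP32", "FP16", "INT8", "INT4"):
    size = weight_storage_bytes(num_parameters, dtype)
    print(f"{dtype}: {size} bytes ({size / 1024:.1f} KiB)")
```

Under these assumptions, moving from FP32 to INT8 cuts weight storage by a factor of four, which is often the difference between fitting and not fitting in microcontroller flash.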

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Saha, R.; Misra, S.; Deb, P.K. FogFL: Fog-Assisted Federated Learning for Resource-Constrained IoT Devices. IEEE Internet Things J. 2021, 8, 8456–8463.

- Shao, J.; Zhang, J. Communication-Computation Trade-off in Resource-Constrained Edge Inference. IEEE Commun. Mag. 2020, 58, 20–26.

- Mayer, R.; Tariq, M.A.; Rothermel, K. Minimizing communication overhead in window-based parallel complex event processing. In Proceedings of the DEBS ’17: The 11th ACM International Conference on Distributed and Event-based Systems, Barcelona, Spain, 19–23 June 2017; pp. 54–65.

- Pimenov, D.Y.; Mia, M.; Gupta, M.K.; Machado, Á.R.; Pintaude, G.; Unune, D.R.; Khanna, N.; Khan, A.M.; Tomaz, Í.; Wojciechowski, S.; et al. Resource saving by optimization and machining environments for sustainable manufacturing: A review and future prospects. Renew. Sustain. Energy Rev. 2022, 166, 112660.

- Ahmed, Q.W.; Garg, S.; Rai, A.; Ramachandran, M.; Jhanjhi, N.Z.; Masud, M.; Baz, M. AI-based resource allocation techniques in wireless sensor internet of things networks in energy efficiency with data optimization. Electronics 2022, 11, 2071.

- Yao, C.; Yang, C.; Xiong, Z. Energy-Saving Predictive Resource Planning and Allocation. IEEE Trans. Commun. 2016, 64, 5078–5095.

- Hijji, M.; Ahmad, B.; Alam, G.; Alwakeel, A.; Alwakeel, M.; Abdulaziz Alharbi, L.; Aljarf, A.; Khan, M.U. Cloud servers: Resource optimization using different energy saving techniques. Sensors 2022, 22, 8384.

- Lee, C.; Ahmed, G. Improving IoT privacy, data protection and security concerns. Int. J. Technol. Innov. Manag. (IJTIM) 2021, 1, 18–33.

- Wang, F.; Diao, B.; Sun, T.; Xu, Y. Data Security and Privacy Challenges of Computing Offloading in FINs. IEEE Netw. 2020, 34, 14–20.

- Yang, P.; Xiong, N.; Ren, J. Data Security and Privacy Protection for Cloud Storage: A Survey. IEEE Access 2020, 8, 131723–131740.

- Xu, L.; Jiang, C.; Wang, J.; Yuan, J.; Ren, Y. Information Security in Big Data: Privacy and Data Mining. IEEE Access 2014, 2, 1149–1176.

- Abdelmalak, M.; Benidris, M. Proactive Generation Redispatch to Enhance Power System Resilience During Hurricanes Considering Unavailability of Renewable Energy Sources. IEEE Trans. Ind. Appl. 2022, 58, 3044–3053.

- Jasiūnas, J.; Lund, P.D.; Mikkola, J. Energy system resilience—A review. Renew. Sustain. Energy Rev. 2021, 150, 111476.

- Stanković, A.M.; Tomsovic, K.L.; De Caro, F.; Braun, M.; Chow, J.H.; Čukalevski, N.; Dobson, I.; Eto, J.; Fink, B.; Hachmann, C.; et al. Methods for Analysis and Quantification of Power System Resilience. IEEE Trans. Power Syst. 2023, 38, 4774–4787.

- Ud Din, I.; Bano, A.; Awan, K.A.; Almogren, A.; Altameem, A.; Guizani, M. LightTrust: Lightweight Trust Management for Edge Devices in Industrial Internet of Things. IEEE Internet Things J. 2023, 10, 2776–2783.

- Peniak, P.; Bubeníková, E.; Kanáliková, A. Validation of High-Availability Model for Edge Devices and IIoT. Sensors 2023, 23, 4871.

- Zhang, Y.; Sun, W.; Shi, Y. Architecture and Implementation of Industrial Internet of Things (IIoT) Gateway. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Weihai, China, 14–16 October 2020; pp. 114–120.

- Ghosh, A.; Mukherjee, A.; Misra, S. SEGA: Secured Edge Gateway Microservices Architecture for IIoT-Based Machine Monitoring. IEEE Trans. Ind. Inform. 2022, 18, 1949–1956.

- Aazam, M.; Zeadally, S.; Harras, K.A. Deploying Fog Computing in Industrial Internet of Things and Industry 4.0. IEEE Trans. Ind. Inform. 2018, 14, 4674–4682.

- Tange, K.; De Donno, M.; Fafoutis, X.; Dragoni, N. A Systematic Survey of Industrial Internet of Things Security: Requirements and Fog Computing Opportunities. IEEE Commun. Surv. Tutor. 2020, 22, 2489–2520.

- Chalapathi, G.S.S.; Chamola, V.; Vaish, A.; Buyya, R. Industrial internet of things (IIoT) applications of edge and fog computing: A review and future directions. In Fog/Edge Computing for Security, Privacy, and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 293–325.

- Vogel, B.; Dong, Y.; Emruli, B.; Davidsson, P.; Spalazzese, R. What is an open IoT platform? Insights from a systematic mapping study. Future Internet 2020, 12, 73.

- Fahmideh, M.; Zowghi, D. An exploration of IoT platform development. Inf. Syst. 2020, 87, 101409.

- Ali, Z.; Mahmood, A.; Khatoon, S.; Alhakami, W.; Ullah, S.S.; Iqbal, J.; Hussain, S. A generic Internet of Things (IoT) middleware for smart city applications. Sustainability 2022, 15, 743.

- Li, J.; Liang, W.; Xu, W.; Xu, Z.; Li, Y.; Jia, X. Service Home Identification of Multiple-Source IoT Applications in Edge Computing. IEEE Trans. Serv. Comput. 2023, 16, 1417–1430.

- Khanna, A.; Kaur, S. Internet of things (IoT), applications and challenges: A comprehensive review. Wirel. Pers. Commun. 2020, 114, 1687–1762.

- Bacco, M.; Boero, L.; Cassara, P.; Colucci, M.; Gotta, A.; Marchese, M.; Patrone, F. IoT Applications and Services in Space Information Networks. IEEE Wirel. Commun. 2019, 26, 31–37.

- Sharma, A.; Babbar, H.; Rani, S.; Sah, D.K.; Sehar, S.; Gianini, G. MHSEER: A meta-heuristic secure and energy-efficient routing protocol for wireless sensor network-based industrial IoT. Energies 2023, 16, 4198.

- Liu, D.; Liang, C.; Mo, H.; Chen, X.; Kong, D.; Chen, P. LEACH-D: A low-energy, low-delay data transmission method for industrial internet of things wireless sensors. Internet Things-Cyber-Phys. Syst. 2024, 4, 129–137.

- Zhang, J.; Yan, Q.; Zhu, X.; Yu, K. Smart industrial IoT empowered crowd sensing for safety monitoring in coal mine. Digit. Commun. Netw. 2023, 9, 296–305.

- Lu, J.; Shen, J.; Vijayakumar, P.; Gupta, B.B. Blockchain-Based Secure Data Storage Protocol for Sensors in the Industrial Internet of Things. IEEE Trans. Ind. Inform. 2022, 18, 5422–5431.

- Liu, Y.; Dillon, T.; Yu, W.; Rahayu, W.; Mostafa, F. Noise Removal in the Presence of Significant Anomalies for Industrial IoT Sensor Data in Manufacturing. IEEE Internet Things J. 2020, 7, 7084–7096.

- Liu, Y.; Dillon, T.; Yu, W.; Rahayu, W.; Mostafa, F. Missing Value Imputation for Industrial IoT Sensor Data With Large Gaps. IEEE Internet Things J. 2020, 7, 6855–6867.

- Gupta, D.; Juneja, S.; Nauman, A.; Hamid, Y.; Ullah, I.; Kim, T.; Tag eldin, E.M.; Ghamry, N.A. Energy Saving Implementation in Hydraulic Press Using Industrial Internet of Things (IIoT). Electronics 2022, 11, 4061.

- Meng, Y.; Li, J. Data sharing mechanism of sensors and actuators of industrial IoT based on blockchain-assisted identity-based cryptography. Sensors 2021, 21, 6084.

- Ma, Y.; Wang, Y.; Cairano, S.D.; Koike-Akino, T.; Guo, J.; Orlik, P.; Guan, X.; Lu, C. Smart Actuation for End-Edge Industrial Control Systems. IEEE Trans. Autom. Sci. Eng. 2024, 21, 269–283.

- Anes, H.; Pinto, T.; Lima, C.; Nogueira, P.; Reis, A. Wearable devices in Industry 4.0: A systematic literature review. In Proceedings of the International Symposium on Distributed Computing and Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2023; pp. 332–341.

- Jan, M.A.; Khan, F.; Khan, R.; Mastorakis, S.; Menon, V.G.; Alazab, M.; Watters, P. Lightweight Mutual Authentication and Privacy-Preservation Scheme for Intelligent Wearable Devices in Industrial-CPS. IEEE Trans. Ind. Inform. 2021, 17, 5829–5839.

- Ghafurian, M.; Wang, K.; Dhode, I.; Kapoor, M.; Morita, P.P.; Dautenhahn, K. Smart Home Devices for Supporting Older Adults: A Systematic Review. IEEE Access 2023, 11, 47137–47158.

- Yang, J.; Sun, L. A Comprehensive Survey of Security Issues of Smart Home System: “Spear” and “Shields”, Theory and Practice. IEEE Access 2022, 10, 124167–124192.

- Jmila, H.; Blanc, G.; Shahid, M.R.; Lazrag, M. A Survey of Smart Home IoT Device Classification Using Machine Learning-Based Network Traffic Analysis. IEEE Access 2022, 10, 97117–97141.

- Khan, M.; Silva, B.N.; Han, K. Internet of Things Based Energy Aware Smart Home Control System. IEEE Access 2016, 4, 7556–7566.

- Abbasi, M.; Abbasi, E.; Li, L.; Aguilera, R.P.; Lu, D.; Wang, F. Review on the microgrid concept, structures, components, communication systems, and control methods. Energies 2023, 16, 484.

- Singh, S.V.; Khursheed, A.; Alam, Z. Wired communication technologies and networks for smart grid—A review. In Cyber Security in Intelligent Computing and Communications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 183–195.

- Eid, M.M.; Sorathiya, V.; Lavadiya, S.; Shehata, E.; Rashed, A.N.Z. Free space and wired optics communication systems performance improvement for short-range applications with the signal power optimization. J. Opt. Commun. 2021, 000010151520200304.

- Crepaldi, M.; Barcellona, A.; Zini, G.; Ansaldo, A.; Ros, P.M.; Sanginario, A.; Cuccu, C.; Demarchi, D.; Brayda, L. Live Wire—A Low-Complexity Body Channel Communication System for Landmark Identification. IEEE Trans. Emerg. Top. Comput. 2021, 9, 1248–1264.

- Yang, D.; Mahmood, A.; Hassan, S.A.; Gidlund, M. Guest Editorial: Industrial IoT and Sensor Networks in 5G-and-Beyond Wireless Communication. IEEE Trans. Ind. Inform. 2022, 18, 4118–4121.

- Liu, W.; Nair, G.; Li, Y.; Nesic, D.; Vucetic, B.; Poor, H.V. On the Latency, Rate, and Reliability Tradeoff in Wireless Networked Control Systems for IIoT. IEEE Internet Things J. 2021, 8, 723–733.

- Du, R.; Zhen, L. Multiuser physical layer security mechanism in the wireless communication system of the IIOT. Comput. Secur. 2022, 113, 102559.

- Mohsan, S.A.H.; Khan, M.A.; Amjad, H. Hybrid FSO/RF networks: A review of practical constraints, applications and challenges. Opt. Switch. Netw. 2023, 47, 100697.

- Alexandropoulos, G.C.; Shlezinger, N.; Alamzadeh, I.; Imani, M.F.; Zhang, H.; Eldar, Y.C. Hybrid Reconfigurable Intelligent Metasurfaces: Enabling Simultaneous Tunable Reflections and Sensing for 6G Wireless Communications. IEEE Veh. Technol. Mag. 2024, 19, 75–84.

- Chowdhury, M.Z.; Hasan, M.K.; Shahjalal, M.; Hossan, M.T.; Jang, Y.M. Optical Wireless Hybrid Networks: Trends, Opportunities, Challenges, and Research Directions. IEEE Commun. Surv. Tutor. 2020, 22, 930–966.

- Giustina, D.D.; Rinaldi, S. Hybrid Communication Network for the Smart Grid: Validation of a Field Test Experience. IEEE Trans. Power Deliv. 2015, 30, 2492–2500.

- Shi, G.; Shen, X.; Xiao, F.; He, Y. DANTD: A Deep Abnormal Network Traffic Detection Model for Security of Industrial Internet of Things Using High-Order Features. IEEE Internet Things J. 2023, 10, 21143–21153.

- Hewa, T.; Braeken, A.; Liyanage, M.; Ylianttila, M. Fog Computing and Blockchain-Based Security Service Architecture for 5G Industrial IoT-Enabled Cloud Manufacturing. IEEE Trans. Ind. Inform. 2022, 18, 7174–7185.

- Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A New Comprehensive Realistic Cyber Security Dataset of IoT and IIoT Applications for Centralized and Federated Learning. IEEE Access 2022, 10, 40281–40306.

- Wang, J.; Chen, J.; Ren, Y.; Sharma, P.K.; Alfarraj, O.; Tolba, A. Data security storage mechanism based on blockchain industrial Internet of Things. Comput. Ind. Eng. 2022, 164, 107903.

- Xenofontos, C.; Zografopoulos, I.; Konstantinou, C.; Jolfaei, A.; Khan, M.K.; Choo, K.K.R. Consumer, Commercial, and Industrial IoT (In)Security: Attack Taxonomy and Case Studies. IEEE Internet Things J. 2022, 9, 199–221.

- Cai, X.; Geng, S.; Zhang, J.; Wu, D.; Cui, Z.; Zhang, W.; Chen, J. A Sharding Scheme-Based Many-Objective Optimization Algorithm for Enhancing Security in Blockchain-Enabled Industrial Internet of Things. IEEE Trans. Ind. Inform. 2021, 17, 7650–7658.

- Sengupta, J.; Ruj, S.; Bit, S.D. A comprehensive survey on attacks, security issues and blockchain solutions for IoT and IIoT. J. Netw. Comput. Appl. 2020, 149, 102481.

- Yu, X.; Guo, H. A Survey on IIoT Security. In Proceedings of the 2019 IEEE VTS Asia Pacific Wireless Communications Symposium (APWCS), Singapore, 28–30 August 2019; pp. 1–5.

- Panchal, A.C.; Khadse, V.M.; Mahalle, P.N. Security Issues in IIoT: A Comprehensive Survey of Attacks on IIoT and Its Countermeasures. In Proceedings of the 2018 IEEE Global Conference on Wireless Computing and Networking (GCWCN), Lonavala, India, 23–24 November 2018; pp. 124–130.

- Yu, K.; Tan, L.; Aloqaily, M.; Yang, H.; Jararweh, Y. Blockchain-Enhanced Data Sharing With Traceable and Direct Revocation in IIoT. IEEE Trans. Ind. Inform. 2021, 17, 7669–7678.

- Jia, B.; Zhang, X.; Liu, J.; Zhang, Y.; Huang, K.; Liang, Y. Blockchain-Enabled Federated Learning Data Protection Aggregation Scheme With Differential Privacy and Homomorphic Encryption in IIoT. IEEE Trans. Ind. Inform. 2022, 18, 4049–4058.

- Bader, J.; Michala, A.L. Searchable encryption with access control in industrial internet of things (IIoT). Wirel. Commun. Mob. Comput. 2021, 2021, 5555362.

- Mantravadi, S.; Schnyder, R.; Møller, C.; Brunoe, T.D. Securing IT/OT Links for Low Power IIoT Devices: Design Considerations for Industry 4.0. IEEE Access 2020, 8, 200305–200321.

- Astorga, J.; Barcelo, M.; Urbieta, A.; Jacob, E. Revisiting the feasibility of public key cryptography in light of IIoT communications. Sensors 2022, 22, 2561.

- Prasad, S.G.; Sharmila, V.C.; Badrinarayanan, M. Role of Artificial Intelligence based Chat Generative Pre-trained Transformer (ChatGPT) in Cyber Security. In Proceedings of the 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 4–6 May 2023; pp. 107–114.

- Uddin, R.; Kumar, S.A.P. SDN-Based Federated Learning Approach for Satellite-IoT Framework to Enhance Data Security and Privacy in Space Communication. IEEE J. Radio Freq. Identif. 2023, 7, 424–440.

- Ahmadi, S. Next Generation AI-Based Firewalls: A Comparative Study. Int. J. Comput. (IJC) 2023, 49, 245–262.

- Sun, P.; Garcia, L.; Salles-Loustau, G.; Zonouz, S. Hybrid Firmware Analysis for Known Mobile and IoT Security Vulnerabilities. In Proceedings of the 2020 50th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Valencia, Spain, 29 June–2 July 2020; pp. 373–384.

- Feng, X.; Zhu, X.; Han, Q.L.; Zhou, W.; Wen, S.; Xiang, Y. Detecting Vulnerability on IoT Device Firmware: A Survey. IEEE/CAA J. Autom. Sin. 2023, 10, 25–41.

- He, D.; Yu, X.; Li, T.; Chan, S.; Guizani, M. Firmware Vulnerabilities Homology Detection Based on Clonal Selection Algorithm for IoT Devices. IEEE Internet Things J. 2022, 9, 16438–16445.

- Dini, P.; Saponara, S. Analysis, design, and comparison of machine-learning techniques for networking intrusion detection. Designs 2021, 5, 9.

- Dini, P.; Colicelli, A.; Saponara, S. Review on Modeling and SOC/SOH Estimation of Batteries for Automotive Applications. Batteries 2024, 10, 34.

- Zhu, X.; Zheng, Q.; Tian, X.; Elhanashi, A.; Saponara, S.; Dini, P. Car Recognition Based on HOG Feature and SVM Classifier. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society; Springer: Berlin/Heidelberg, Germany, 2023; pp. 319–326.

- Dini, P.; Begni, A.; Ciavarella, S.; De Paoli, E.; Fiorelli, G.; Silvestro, C.; Saponara, S. Design and Testing Novel One-Class Classifier Based on Polynomial Interpolation With Application to Networking Security. IEEE Access 2022, 10, 67910–67924.

- Runge, J.; Zmeureanu, R. Forecasting energy use in buildings using artificial neural networks: A review. Energies 2019, 12, 3254.

- Abdolrasol, M.G.; Hussain, S.S.; Ustun, T.S.; Sarker, M.R.; Hannan, M.A.; Mohamed, R.; Ali, J.A.; Mekhilef, S.; Milad, A. Artificial neural networks based optimization techniques: A review. Electronics 2021, 10, 2689.

- Emambocus, B.A.S.; Jasser, M.B.; Amphawan, A. A Survey on the Optimization of Artificial Neural Networks Using Swarm Intelligence Algorithms. IEEE Access 2023, 11, 1280–1294.

- Jafari, F.; Dorafshan, S. Comparison between Supervised and Unsupervised Learning for Autonomous Delamination Detection Using Impact Echo. Remote Sens. 2022, 14, 6307.

- Chen, Y.; Mancini, M.; Zhu, X.; Akata, Z. Semi-Supervised and Unsupervised Deep Visual Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 1327–1347.

- Gwilliam, M.; Shrivastava, A. Beyond supervised vs. unsupervised: Representative benchmarking and analysis of image representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 9642–9652.

- Huang, H.; Ding, S.; Zhao, L.; Huang, H.; Chen, L.; Gao, H.; Ahmed, S.H. Real-Time Fault Detection for IIoT Facilities Using GBRBM-Based DNN. IEEE Internet Things J. 2020, 7, 5713–5722. [Google Scholar] [CrossRef]

- Jarwar, M.A.; Khowaja, S.A.; Dev, K.; Adhikari, M.; Hakak, S. NEAT: A Resilient Deep Representational Learning for Fault Detection Using Acoustic Signals in IIoT Environment. IEEE Internet Things J. 2023, 10, 2864–2871. [Google Scholar] [CrossRef]

- Lang, W.; Hu, Y.; Gong, C.; Zhang, X.; Xu, H.; Deng, J. Artificial Intelligence-Based Technique for Fault Detection and Diagnosis of EV Motors: A Review. IEEE Trans. Transp. Electrif. 2022, 8, 384–406. [Google Scholar] [CrossRef]

- Elhanashi, A.; Saponara, S.; Zheng, Q. Classification and Localization of Multi-Type Abnormalities on Chest X-Rays Images. IEEE Access 2023, 11, 83264–83277. [Google Scholar] [CrossRef]

- da Silva, A.; Gil, M.M. Industrial processes optimization in digital marketplace context: A case study in ornamental stone sector. Results Eng. 2020, 7, 100152. [Google Scholar] [CrossRef]

- Jiang, J.; Zu, Y.; Li, X.; Meng, Q.; Long, X. Recent progress towards industrial rhamnolipids fermentation: Process optimization and foam control. Bioresour. Technol. 2020, 298, 122394. [Google Scholar] [CrossRef] [PubMed]

- Liu, W.; Huang, G.; Zheng, A.; Liu, J. Research on the optimization of IIoT data processing latency. Comput. Commun. 2020, 151, 290–298. [Google Scholar] [CrossRef]

- Begni, A.; Dini, P.; Saponara, S. Design and test of an LSTM-based algorithm for Li-Ion batteries remaining useful life estimation. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society; Springer: Berlin/Heidelberg, Germany, 2022; pp. 373–379. [Google Scholar]

- Dini, P.; Basso, G.; Saponara, S.; Romano, C. Real-time monitoring and ageing detection algorithm design with application on SiC-based automotive power drive system. IET Power Electron. 2024. [Google Scholar] [CrossRef]

- Dini, P.; Ariaudo, G.; Botto, G.; Greca, F.L.; Saponara, S. Real-time electro-thermal modelling and predictive control design of resonant power converter in full electric vehicle applications. IET Power Electron. 2023, 16, 2045–2064. [Google Scholar] [CrossRef]

- Pacini, F.; Dini, P.; Fanucci, L. Design of an Assisted Driving System for Obstacle Avoidance Based on Reinforcement Learning Applied to Electrified Wheelchairs. Electronics 2024, 13, 1507. [Google Scholar] [CrossRef]

- Anderson, H.E.; Santos, I.C.; Hildenbrand, Z.L.; Schug, K.A. A review of the analytical methods used for beer ingredient and finished product analysis and quality control. Anal. Chim. Acta 2019, 1085, 1–20. [Google Scholar] [CrossRef] [PubMed]

- Pang, J.; Zhang, N.; Xiao, Q.; Qi, F.; Xue, X. A new intelligent and data-driven product quality control system of industrial valve manufacturing process in CPS. Comput. Commun. 2021, 175, 25–34. [Google Scholar] [CrossRef]

- Wang, T.; Chen, Y.; Qiao, M.; Snoussi, H. A fast and robust convolutional neural network-based defect detection model in product quality control. Int. J. Adv. Manuf. Technol. 2018, 94, 3465–3471. [Google Scholar] [CrossRef]

- Rosadini, C.; Chiarelli, S.; Nesci, W.; Saponara, S.; Gagliardi, A.; Dini, P. Method for Protection from Cyber Attacks to a Vehicle Based upon Time Analysis, and Corresponding Device. U.S. Patent 17/929,370, 16 March 2023. [Google Scholar]

- Rosadini, C.; Chiarelli, S.; Cornelio, A.; Nesci, W.; Saponara, S.; Dini, P.; Gagliardi, A. Method for Protection from Cyber Attacks to a Vehicle Based Upon Time Analysis, and Corresponding Device. U.S. Patent 18/163,488, 3 August 2023. [Google Scholar]

- Dini, P.; Saponara, S. Design and Experimental Assessment of Real-Time Anomaly Detection Techniques for Automotive Cybersecurity. Sensors 2023, 23, 9231. [Google Scholar] [CrossRef] [PubMed]

- Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Integration of Deep Learning into the IoT: A Survey of Techniques and Challenges for Real-World Applications. Electronics 2023, 12, 4925. [Google Scholar] [CrossRef]

- Elhanashi, A.; Saponara, S.; Dini, P.; Zheng, Q.; Morita, D.; Raytchev, B. An integrated and real-time social distancing, mask detection, and facial temperature video measurement system for pandemic monitoring. J. -Real-Time Image Process. 2023, 20, 95. [Google Scholar] [CrossRef]

- Elhanashi, A.; Gasmi, K.; Begni, A.; Dini, P.; Zheng, Q.; Saponara, S. Machine learning techniques for anomaly-based detection system on CSE-CIC-IDS2018 dataset. In Proceedings of the International Conference on Applications in Electronics Pervading Industry, Environment and Society; Springer: Berlin/Heidelberg, Germany, 2022; pp. 131–140. [Google Scholar]

- Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on Intrusion Detection Systems Design Exploiting Machine Learning for Networking Cybersecurity. Appl. Sci. 2023, 13, 7507. [Google Scholar] [CrossRef]

- Pacini, F.; Di Matteo, S.; Dini, P.; Fanucci, L.; Bucchi, F. Innovative Plug-and-Play System for Electrification of Wheel-Chairs. IEEE Access 2023, 11, 89038–89051. [Google Scholar] [CrossRef]

- Dini, P.; Saponara, S. Model-based design of an improved electric drive controller for high-precision applications based on feedback linearization technique. Electronics 2021, 10, 2954. [Google Scholar] [CrossRef]

- Dini, P.; Saponara, S. Design of an observer-based architecture and non-linear control algorithm for cogging torque reduction in synchronous motors. Energies 2020, 13, 2077. [Google Scholar] [CrossRef]

- Dini, P.; Saponara, S. Design of adaptive controller exploiting learning concepts applied to a BLDC-based drive system. Energies 2020, 13, 2512. [Google Scholar] [CrossRef]

- Dini, P.; Saponara, S. Processor-in-the-loop validation of a gradient descent-based model predictive control for assisted driving and obstacles avoidance applications. IEEE Access 2022, 10, 67958–67975. [Google Scholar] [CrossRef]

- Bernardeschi, C.; Dini, P.; Domenici, A.; Mouhagir, A.; Palmieri, M.; Saponara, S.; Sassolas, T.; Zaourar, L. Co-simulation of a model predictive control system for automotive applications. In Proceedings of the International Conference on Software Engineering and Formal Methods; Springer: Berlin/Heidelberg, Germany, 2021; pp. 204–220. [Google Scholar]

- Benedetti, D.; Agnelli, J.; Gagliardi, A.; Dini, P.; Saponara, S. Design of a digital dashboard on low-cost embedded platform in a fully electric vehicle. In Proceedings of the 2020 IEEE International Conference on Environment and Electrical Engineering and 2020 IEEE Industrial and Commercial Power Systems Europe (EEEIC/I&CPS Europe), Madrid, Spain, 9–12 June 2020; pp. 1–5. [Google Scholar]

- Xu, X.; Sun, J.; Wang, C.; Zou, B. A novel hybrid CNN-LSTM compensation model against DoS attacks in power system state estimation. Neural Process. Lett. 2022, 54, 1597–1621. [Google Scholar] [CrossRef]

- Abdallah, M.; An Le Khac, N.; Jahromi, H.; Delia Jurcut, A. A hybrid CNN-LSTM based approach for anomaly detection systems in SDNs. In Proceedings of the ARES 2021: The 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17–20 August 2021; pp. 1–7. [Google Scholar]

- Alkahtani, H.; Aldhyani, T.H. Botnet attack detection by using CNN-LSTM model for Internet of Things applications. Secur. Commun. Netw. 2021, 2021, 3806459. [Google Scholar] [CrossRef]

- Alabsi, B.A.; Anbar, M.; Rihan, S.D.A. CNN-CNN: Dual Convolutional Neural Network Approach for Feature Selection and Attack Detection on Internet of Things Networks. Sensors 2023, 23, 6507. [Google Scholar] [CrossRef] [PubMed]

- Alonazi, M.; Ansar, H.; Mudawi, N.A.; Alotaibi, S.S.; Almujally, N.A.; Alazeb, A.; Jalal, A.; Kim, J.; Min, M. Smart Healthcare Hand Gesture Recognition Using CNN-Based Detector and Deep Belief Network. IEEE Access 2023, 11, 84922–84933. [Google Scholar] [CrossRef]

- Latif, G.; Abdelhamid, S.E.; Mallouhy, R.E.; Alghazo, J.; Kazimi, Z.A. Deep learning utilization in agriculture: Detection of rice plant diseases using an improved CNN model. Plants 2022, 11, 2230. [Google Scholar] [CrossRef]

- Ullah, I.; Mahmoud, Q.H. Design and Development of RNN Anomaly Detection Model for IoT Networks. IEEE Access 2022, 10, 62722–62750. [Google Scholar] [CrossRef]

- Kim, Y.; Wang, P.; Mihaylova, L. Structural Recurrent Neural Network for Traffic Speed Prediction. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 12–17 May 2019; pp. 5207–5211. [Google Scholar] [CrossRef]

- Pech, M.; Vrchota, J.; Bednář, J. Predictive maintenance and intelligent sensors in smart factory. Sensors 2021, 21, 1470. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Yang, L.T.; Cao, E.; Guo, L.; Ren, L.; Deen, M.J. A Tensor-based t-SVD-LSTM Remaining Useful Life Prediction Model for Industrial Intelligence. IEEE Trans. Ind. Inform. 2022, 1–12. [Google Scholar] [CrossRef]

- Zhang, W.; Guo, W.; Liu, X.; Liu, Y.; Zhou, J.; Li, B.; Lu, Q.; Yang, S. LSTM-Based Analysis of Industrial IoT Equipment. IEEE Access 2018, 6, 23551–23560. [Google Scholar] [CrossRef]

- Ranjan, N.; Bhandari, S.; Zhao, H.P.; Kim, H.; Khan, P. City-Wide Traffic Congestion Prediction Based on CNN, LSTM and Transpose CNN. IEEE Access 2020, 8, 81606–81620. [Google Scholar] [CrossRef]

- Wu, D.; Jiang, Z.; Xie, X.; Wei, X.; Yu, W.; Li, R. LSTM Learning With Bayesian and Gaussian Processing for Anomaly Detection in Industrial IoT. IEEE Trans. Ind. Inform. 2020, 16, 5244–5253. [Google Scholar] [CrossRef]

- Wang, T.; Zhang, L.; Wang, X. Fault Detection for Motor Drive Control System of Industrial Robots Using CNN-LSTM-based Observers. CES Trans. Electr. Mach. Syst. 2023, 7, 144–152. [Google Scholar] [CrossRef]

- Kim, D.H.; Farhad, A.; Pyun, J.Y. UWB Positioning System Based on LSTM Classification With Mitigated NLOS Effects. IEEE Internet Things J. 2023, 10, 1822–1835. [Google Scholar] [CrossRef]

- Hu, L.; Miao, Y.; Yang, J.; Ghoneim, A.; Hossain, M.S.; Alrashoud, M. IF-RANs: Intelligent Traffic Prediction and Cognitive Caching toward Fog-Computing-Based Radio Access Networks. IEEE Wirel. Commun. 2020, 27, 29–35. [Google Scholar] [CrossRef]

- Wang, Y.; Liao, W.; Chang, Y. Gated recurrent unit network-based short-term photovoltaic forecasting. Energies 2018, 11, 2163. [Google Scholar] [CrossRef]

- Brandão Lent, D.M.; Novaes, M.P.; Carvalho, L.F.; Lloret, J.; Rodrigues, J.J.P.C.; Proença, M.L. A Gated Recurrent Unit Deep Learning Model to Detect and Mitigate Distributed Denial of Service and Portscan Attacks. IEEE Access 2022, 10, 73229–73242. [Google Scholar] [CrossRef]

- Ullah, S.; Boulila, W.; Koubâa, A.; Ahmad, J. MAGRU-IDS: A Multi-Head Attention-Based Gated Recurrent Unit for Intrusion Detection in IIoT Networks. IEEE Access 2023, 11, 114590–114601. [Google Scholar] [CrossRef]

- Hussain, B.Z.; Khan, I. Sequentially Integrated Convolutional-Gated Recurrent Unit Autoencoder for Enhanced Security in Industrial Control Systems. TechRxiv 2024. [Google Scholar] [CrossRef] [PubMed]

- Bellocchi, L.; Geroliminis, N. Unraveling reaction-diffusion-like dynamics in urban congestion propagation: Insights from a large-scale road network. Sci. Rep. 2020, 10, 4876. [Google Scholar] [CrossRef] [PubMed]

- Ma, X.; Dai, Z.; He, Z.; Ma, J.; Wang, Y.; Wang, Y. Learning traffic as images: A deep convolutional neural network for large-scale transportation network speed prediction. Sensors 2017, 17, 818. [Google Scholar] [CrossRef] [PubMed]

- Hong, K.; Pan, J.; Jin, M. Transformer Condition Monitoring Based on Load-Varied Vibration Response and GRU Neural Networks. IEEE Access 2020, 8, 178685–178694. [Google Scholar] [CrossRef]

- Su, X.; Shan, Y.; Li, C.; Mi, Y.; Fu, Y.; Dong, Z. Spatial-temporal attention and GRU based interpretable condition monitoring of offshore wind turbine gearboxes. IET Renew. Power Gener. 2022, 16, 402–415. [Google Scholar] [CrossRef]

- Liu, X.; Zhang, W.; Zhou, X.; Zhou, Q. MECGuard: GRU enhanced attack detection in Mobile Edge Computing environment. Comput. Commun. 2021, 172, 1–9. [Google Scholar] [CrossRef]

- Huang, X.; Yuan, Y.; Chang, C.; Gao, Y.; Zheng, C.; Yan, L. Human Activity Recognition Method Based on Edge Computing-Assisted and GRU Deep Learning Network. Appl. Sci. 2023, 13, 9059. [Google Scholar] [CrossRef]

- Chowdhary, A.; Jha, K.; Zhao, M. Generative Adversarial Network (GAN)-Based Autonomous Penetration Testing for Web Applications. Sensors 2023, 23, 8014. [Google Scholar] [CrossRef]

- Gan, C.; Lin, J.; Huang, D.W.; Zhu, Q.; Tian, L. Advanced persistent threats and their defense methods in industrial Internet of things: A survey. Mathematics 2023, 11, 3115. [Google Scholar] [CrossRef]

- Li, Y.; Dai, W.; Bai, J.; Gan, X.; Wang, J.; Wang, X. An Intelligence-Driven Security-Aware Defense Mechanism for Advanced Persistent Threats. IEEE Trans. Inf. Forensics Secur. 2019, 14, 646–661. [Google Scholar] [CrossRef]

- Yu, W.; Sun, Y.; Zhou, R.; Liu, X. GAN Based Method for Labeled Image Augmentation in Autonomous Driving. In Proceedings of the 2019 IEEE International Conference on Connected Vehicles and Expo (ICCVE), Graz, Austria, 4–8 November 2019; pp. 1–5. [Google Scholar] [CrossRef]

- Lee, J.; Shiotsuka, D.; Nishimori, T.; Nakao, K.; Kamijo, S. Gan-based lidar translation between sunny and adverse weather for autonomous driving and driving simulation. Sensors 2022, 22, 5287. [Google Scholar] [CrossRef] [PubMed]

- Zhang, M.; Zhang, Y.; Zhang, L.; Liu, C.; Khurshid, S. Deeproad: Gan-based metamorphic testing and input validation framework for autonomous driving systems. In Proceedings of the 33rd ACM/IEEE International Conference on Automated Software Engineering, Montpellier, France, 3–7 September 2018; pp. 132–142. [Google Scholar]

- Ma, C.T.; Gu, Z.H. Review of GaN HEMT applications in power converters over 500 W. Electronics 2019, 8, 1401. [Google Scholar] [CrossRef]

- Tien, C.W.; Huang, T.Y.; Chen, P.C.; Wang, J.H. Using autoencoders for anomaly detection and transfer learning in IoT. Computers 2021, 10, 88. [Google Scholar] [CrossRef]

- Torabi, H.; Mirtaheri, S.L.; Greco, S. Practical autoencoder based anomaly detection by using vector reconstruction error. Cybersecurity 2023, 6, 1. [Google Scholar] [CrossRef]

- Liu, T.; Wang, J.; Liu, Q.; Alibhai, S.; Lu, T.; He, X. High-ratio lossy compression: Exploring the autoencoder to compress scientific data. IEEE Trans. Big Data 2021, 9, 22–36. [Google Scholar] [CrossRef]

- Yang, L.; Zhang, Z. A Conditional Convolutional Autoencoder-Based Method for Monitoring Wind Turbine Blade Breakages. IEEE Trans. Ind. Inform. 2021, 17, 6390–6398. [Google Scholar] [CrossRef]

- Roy, M.; Bose, S.K.; Kar, B.; Gopalakrishnan, P.K.; Basu, A. A Stacked Autoencoder Neural Network based Automated Feature Extraction Method for Anomaly detection in On-line Condition Monitoring. In Proceedings of the 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Bangalore, India, 18–21 November 2018; pp. 1501–1507. [Google Scholar] [CrossRef]

- Lee, S.J.; Yoo, P.D.; Asyhari, A.T.; Jhi, Y.; Chermak, L.; Yeun, C.Y.; Taha, K. IMPACT: Impersonation Attack Detection via Edge Computing Using Deep Autoencoder and Feature Abstraction. IEEE Access 2020, 8, 65520–65529. [Google Scholar] [CrossRef]

- Yu, W.; Liu, Y.; Dillon, T.; Rahayu, W. Edge Computing-Assisted IoT Framework With an Autoencoder for Fault Detection in Manufacturing Predictive Maintenance. IEEE Trans. Ind. Inform. 2023, 19, 5701–5710. [Google Scholar] [CrossRef]

- Xilinx. Vitis AI. Available online: https://www.xilinx.com/products/design-tools/vitis/vitis-ai.html (accessed on 1 June 2024).

- Nannipieri, P.; Giuffrida, G.; Diana, L.; Panicacci, S.; Zulberti, L.; Fanucci, L.; Hernandez, H.G.M.; Hubner, M. Icu4sat: A general-purpose reconfigurable instrument control unit based on open source components. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–9. [Google Scholar]

- Pacini, T.; Rapuano, E.; Fanucci, L. Fpg-ai: A technology-independent framework for the automation of cnn deployment on fpgas. IEEE Access 2023, 11, 32759–32775. [Google Scholar] [CrossRef]

- Mittal, S. A survey of FPGA-based accelerators for convolutional neural networks. Neural Comput. Appl. 2020, 32, 1109–1139. [Google Scholar] [CrossRef]

- Google. TensorFlow Lite. Available online: https://www.tensorflow.org/lite (accessed on 1 June 2024).

- David, R.; Duke, J.; Jain, A.; Reddi, V.J.; Jeffries, N.; Li, J.; Kreeger, N.; Nappier, I.; Natraj, M.; Regev, S.; et al. Tensorflow lite micro: Embedded machine learning on tinyml systems. arXiv 2020, arXiv:2010.08678. [Google Scholar]

- Google. TensorFlow Lite for Microcontrollers. Available online: https://www.tensorflow.org/lite/microcontrollers (accessed on 1 June 2024).

- CMSIS. CMSIS-NN. Available online: https://arm-software.github.io/CMSIS_6/latest/NN/index.html (accessed on 1 June 2024).

- STMicroelectronics. STM32Cube.AI. Available online: https://stm32ai.st.com/stm32-cube-ai/ (accessed on 1 June 2024).

- STMicroelectronics. AI Model Zoo for STM32 Devices. Available online: https://github.com/STMicroelectronics/stm32ai-modelzoo/ (accessed on 1 June 2024).

- STMicroelectronics. IMUs with Intelligent Sensor Processing Unit. Available online: https://www.st.com/content/st_com/en/campaigns/ispu-ai-in-sensors.html (accessed on 1 June 2024).

- STMicroelectronics. X-NUCLEO-IKS4A1 Expansion Board for STM32 Nucleo. Available online: https://www.st.com/en/ecosystems/x-nucleo-iks4a1.html (accessed on 1 June 2024).

- Renesas. E-AI Solutions. Available online: https://www.renesas.com/us/en/key-technologies/artificial-intelligence/e-ai (accessed on 1 June 2024).

- Hailo. Hailo-8 for Edge Devices. Available online: https://hailo.ai/products/ai-accelerators/hailo-8-ai-accelerator/#hailo8-overview (accessed on 1 June 2024).

- Bahig, G.; El-Kadi, A. Formal verification of automotive design in compliance with ISO 26262 design verification guidelines. IEEE Access 2017, 5, 4505–4516. [Google Scholar] [CrossRef]

- Hailo. Hailo Software Suite for AI Applications. Available online: https://hailo.ai/products/hailo-software/hailo-ai-software-suite/#sw-overview (accessed on 1 June 2024).

- Hailo. Hailo Model Zoo. Available online: https://github.com/hailo-ai/hailo_model_zoo/tree/master (accessed on 1 June 2024).

- Google. Edge TPU. Available online: https://coral.ai/products/ (accessed on 1 June 2024).

- Google. TensorFlow Models on the Edge TPU. Available online: https://coral.ai/docs/edgetpu/models-intro (accessed on 1 June 2024).

- Google. Run Inference on the Edge TPU with Python. Available online: https://coral.ai/docs/edgetpu/tflite-python/ (accessed on 1 June 2024).

- Google. Models for Edge TPU. Available online: https://coral.ai/models/ (accessed on 1 June 2024).

- Ramaswami, D.P.; Hiemstra, D.M.; Yang, Z.W.; Shi, S.; Chen, L. Single event upset characterization of the intel movidius myriad x vpu and google edge tpu accelerators using proton irradiation. In Proceedings of the 2022 IEEE Radiation Effects Data Workshop (REDW) (in Conjunction with 2022 NSREC), Provo, UT, USA, 18–22 July 2022; pp. 1–3. [Google Scholar]

- Nvidia. Jetson Orin for Next-Gen Robotics. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-orin/ (accessed on 1 June 2024).

- Nvidia. Jetson Orin Nano Developer Kit Getting Started. Available online: https://developer.nvidia.com/embedded/learn/get-started-jetson-orin-nano-devkit (accessed on 1 June 2024).

- Nvidia. TensorRT SDK. Available online: https://developer.nvidia.com/tensorrt (accessed on 1 June 2024).

- Slater, W.S.; Tiwari, N.P.; Lovelly, T.M.; Mee, J.K. Total ionizing dose radiation testing of NVIDIA Jetson nano GPUs. In Proceedings of the 2020 IEEE High Performance Extreme Computing Conference (HPEC), Boston, MA, USA, 21–25 September 2020; pp. 1–3. [Google Scholar]

- Rad, I.O.; Alarcia, R.M.G.; Dengler, S.; Golkar, A.; Manfletti, C. Preliminary Evaluation of Commercial Off-The-Shelf GPUs for Machine Learning Applications in Space. Semester Thesis, Technical University of Munich, Munich, Germany, 6 September 2023. [Google Scholar]

- Giuffrida, G.; Diana, L.; de Gioia, F.; Benelli, G.; Meoni, G.; Donati, M.; Fanucci, L. CloudScout: A deep neural network for on-board cloud detection on hyperspectral images. Remote Sens. 2020, 12, 2205. [Google Scholar] [CrossRef]

- Dunkel, E.; Swope, J.; Towfic, Z.; Chien, S.; Russell, D.; Sauvageau, J.; Sheldon, D.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Buckley, L.; et al. Benchmarking deep learning inference of remote sensing imagery on the qualcomm snapdragon and intel movidius myriad x processors onboard the international space station. In Proceedings of the IGARSS 2022-2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 5301–5304. [Google Scholar]

- Dunkel, E.R.; Swope, J.; Candela, A.; West, L.; Chien, S.A.; Towfic, Z.; Buckley, L.; Romero-Cañas, J.; Espinosa-Aranda, J.L.; Hervas-Martin, E.; et al. Benchmarking Deep Learning Models on Myriad and Snapdragon Processors for Space Applications. J. Aerosp. Inf. Syst. 2023, 20, 660–674. [Google Scholar] [CrossRef]

- Furano, G.; Meoni, G.; Dunne, A.; Moloney, D.; Ferlet-Cavrois, V.; Tavoularis, A.; Byrne, J.; Buckley, L.; Psarakis, M.; Voss, K.O.; et al. Towards the Use of Artificial Intelligence on the Edge in Space Systems: Challenges and Opportunities. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 44–56. [Google Scholar] [CrossRef]

- Buckley, L.; Dunne, A.; Furano, G.; Tali, M. Radiation test and in orbit performance of mpsoc ai accelerator. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–9. [Google Scholar]

- Chappa, R.T.N.; El-Sharkawy, M. Deployment of SE-SqueezeNext on NXP BlueBox 2.0 and NXP i.MX RT1060 MCU. In Proceedings of the 2020 IEEE Midwest Industry Conference (MIC), Champaign, IL, USA, 7–8 August 2020; Volume 1, pp. 1–4. [Google Scholar] [CrossRef]

- Desai, S.R.; Sinha, D.; El-Sharkawy, M. Image Classification on NXP i.MX RT1060 using Ultra-thin MobileNet DNN. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 474–480. [Google Scholar] [CrossRef]

- Ayi, M.; El-Sharkawy, M. Real-time Implementation of RMNv2 Classifier in NXP Bluebox 2.0 and NXP i.MX RT1060. In Proceedings of the 2020 IEEE Midwest Industry Conference (MIC), Champaign, IL, USA, 7–8 August 2020; Volume 1, pp. 1–4. [Google Scholar] [CrossRef]

- NXP-eIQ ML Software Development Environment. Available online: https://www.nxp.com/design/design-center/software/eiq-ml-development-environment:EIQ (accessed on 12 June 2024).

- NXP. S32K1 Microcontrollers for Automotive General Purpose. 2020. Available online: https://www.nxp.com/products/processors-and-microcontrollers/s32-automotive-platform/s32k-auto-general-purpose-mcus/s32k1-microcontrollers-for-automotive-general-purpose:S32K1 (accessed on 5 June 2024).

- NXP. S33K1 Microcontrollers for Automotive General Purpose. 2022. Available online: https://www.nxp.com/products/processors-and-microcontrollers/s32-automotive-platform/s32k-auto-general-purpose-mcus/s32k3-microcontrollers-for-automotive-general-purpose:S32K3 (accessed on 5 June 2024).

- Semiconductor, N. Bluetooth Low Energy and Bluetooth Mesh Development Kit for the nRF52810 and nRF52832 SoCs. 2022. Available online: https://www.nordicsemi.com/Products/Development-hardware/nRF52-DK (accessed on 5 June 2024).

- ARM. Arm Total Access—Accelerate Development and Time-to-Market. 2022. Available online: https://www.arm.com/products/licensing/arm-total-access (accessed on 5 June 2024).

- Semiconductor, N. Nordic to Acquire AI/ML Technology in the US. 2022. Available online: https://www.nordicsemi.com/Nordic-news/2023/08/Nordic-to-acquire-AI-ML-technology-in-the-US (accessed on 5 June 2024).

- Infineon, A. 32-bit TriCoreTM AURIXTM—TC2xx. 2022. Available online: https://www.infineon.com/cms/en/product/microcontroller/32-bit-tricore-microcontroller/32-bit-tricore-aurix-tc2xx/ (accessed on 5 June 2024).

- Infineon, A. 32-bit TriCoreTM AURIXTM—TC3xx. 2022. Available online: https://www.infineon.com/cms/en/product/microcontroller/32-bit-tricore-microcontroller/32-bit-tricore-aurix-tc3xx/?_gl=1*16hlusy*_up*MQ..&gclid=CjwKCAjwmYCzBhA6EiwAxFwfgBRAswH3Tly-AZ6-ADyxjsCXa2yu8Dey2HkYe-zmKJidyyweXxgvghoCm4wQAvD_BwE&gclsrc=aw.ds (accessed on 5 June 2024).

- Infineon, A. 32-bit TriCoreTM AURIXTM—TC4xx. 2022. Available online: https://www.infineon.com/cms/en/product/microcontroller/32-bit-tricore-microcontroller/32-bit-tricore-aurix-tc4x/?_gl=1*yej4gb*_up*MQ..&gclid=CjwKCAjwmYCzBhA6EiwAxFwfgBRAswH3Tly-AZ6-ADyxjsCXa2yu8Dey2HkYe-zmKJidyyweXxgvghoCm4wQAvD_BwE&gclsrc=aw.ds (accessed on 5 June 2024).

- Wang, J.; Gu, S. FPGA Implementation of Object Detection Accelerator Based on Vitis-AI. In Proceedings of the 2021 11th International Conference on Information Science and Technology (ICIST), Chengdu, China, 21–23 May 2021; pp. 571–577. [Google Scholar] [CrossRef]

- Kathail, V. Xilinx vitis unified software platform. In Proceedings of the FPGA ’20: The 2020 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Seaside, CA, USA, 23–25 February 2020; pp. 173–174. [Google Scholar]

- Ushiroyama, A.; Watanabe, M.; Watanabe, N.; Nagoya, A. Convolutional neural network implementations using Vitis AI. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 26–29 January 2022; pp. 0365–0371. [Google Scholar] [CrossRef]

- Sallang, N.C.A.; Islam, M.T.; Islam, M.S.; Arshad, H. A CNN-Based Smart Waste Management System Using TensorFlow Lite and LoRa-GPS Shield in Internet of Things Environment. IEEE Access 2021, 9, 153560–153574. [Google Scholar] [CrossRef]

- Labrèche, G.; Evans, D.; Marszk, D.; Mladenov, T.; Shiradhonkar, V.; Soto, T.; Zelenevskiy, V. OPS-SAT Spacecraft Autonomy with TensorFlow Lite, Unsupervised Learning, and Online Machine Learning. In Proceedings of the 2022 IEEE Aerospace Conference (AERO), Big Sky, MT, USA, 5–12 March 2022; pp. 1–17. [Google Scholar] [CrossRef]

- Manor, E.; Greenberg, S. Custom Hardware Inference Accelerator for TensorFlow Lite for Microcontrollers. IEEE Access 2022, 10, 73484–73493. [Google Scholar] [CrossRef]

- De Vita, F.; Nocera, G.; Bruneo, D.; Tomaselli, V.; Falchetto, M. On-Device Training of Deep Learning Models on Edge Microcontrollers. In Proceedings of the 2022 IEEE International Conferences on Internet of Things (iThings) and IEEE Green Computing & Communications (GreenCom) and IEEE Cyber, Physical & Social Computing (CPSCom) and IEEE Smart Data (SmartData) and IEEE Congress on Cybermatics (Cybermatics), Espoo, Finland, 22–25 August 2022; pp. 62–69. [Google Scholar] [CrossRef]

- Akhtari, S.; Pickhardt, F.; Pau, D.; Pietro, A.D.; Tomarchio, G. Intelligent Embedded Load Detection at the Edge on Industry 4.0 Powertrains Applications. In Proceedings of the 2019 IEEE 5th International forum on Research and Technology for Society and Industry (RTSI), Florence, Italy, 9–12 September 2019; pp. 427–430. [Google Scholar] [CrossRef]

- Crocioni, G.; Pau, D.; Delorme, J.M.; Gruosso, G. Li-Ion Batteries Parameter Estimation With Tiny Neural Networks Embedded on Intelligent IoT Microcontrollers. IEEE Access 2020, 8, 122135–122146. [Google Scholar] [CrossRef]

- Pau, D.P.; Randriatsimiovalaza, M.D. Electromyography Gestures Sensing with Deeply Quantized Neural Networks. In Proceedings of the 2023 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE), Milano, Italy, 25–27 October 2023; pp. 711–716. [Google Scholar] [CrossRef]

- Ronco, A.; Schulthess, L.; Zehnder, D.; Magno, M. Machine Learning In-Sensors: Computation-enabled Intelligent Sensors For Next Generation of IoT. In Proceedings of the 2022 IEEE Sensors, Dallas, TX, USA, 30 October–2 November 2022; pp. 1–4. [Google Scholar] [CrossRef]

- Hung, C.W.; Wu, J.R.; Lee, C.H. Device Light Fingerprints Identification Using MCU-Based Deep Learning Approach. IEEE Access 2021, 9, 168134–168140. [Google Scholar] [CrossRef]

- Safi, M.; Dadkhah, S.; Shoeleh, F.; Mahdikhani, H.; Molyneaux, H.; Ghorbani, A.A. A survey on IoT profiling, fingerprinting, and identification. ACM Trans. Internet Things 2022, 3, 1–39. [Google Scholar] [CrossRef]

- Kim, R.; Kim, J.; Yoo, H.; Kim, S.C. Implementation of deep learning based intelligent image analysis on an edge AI platform using heterogeneous AI accelerators. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 11–13 October 2023; pp. 1347–1349. [Google Scholar]

- Mika, K.; Griessl, R.; Kucza, N.; Porrmann, F.; Kaiser, M.; Tigges, L.; Hagemeyer, J.; Trancoso, P.; Azhar, M.W.; Qararyah, F.; et al. VEDLIoT: Next generation accelerated AIoT systems and applications. In Proceedings of the CF ’23: 20th ACM International Conference on Computing Frontiers, Bologna, Italy, 9–11 May 2023; pp. 291–296. [Google Scholar]

- Griessl, R.; Porrmann, F.; Kucza, N.; Mika, K.; Hagemeyer, J.; Kaiser, M.; Porrmann, M.; Tassemeier, M.; Flottmann, M.; Qararyah, F.; et al. Evaluation of heterogeneous AIoT Accelerators within VEDLIoT. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Ingrid Verbauwhede, KU Leuven, Leuven, Belgium, 13 November 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Sengupta, J.; Kubendran, R.; Neftci, E.; Andreou, A. High-Speed, Real-Time, Spike-Based Object Tracking and Path Prediction on Google Edge TPU. In Proceedings of the 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Genova, Italy, 31 August–2 September 2020; pp. 134–135.

- Seshadri, K.; Akin, B.; Laudon, J.; Narayanaswami, R.; Yazdanbakhsh, A. An Evaluation of Edge TPU Accelerators for Convolutional Neural Networks. In Proceedings of the 2022 IEEE International Symposium on Workload Characterization (IISWC), Austin, TX, USA, 6–8 November 2022; pp. 79–91.

- Barnell, M.; Raymond, C.; Smiley, S.; Isereau, D.; Brown, D. Ultra Low-Power Deep Learning Applications at the Edge with Jetson Orin AGX Hardware. In Proceedings of the 2022 IEEE High Performance Extreme Computing Conference (HPEC), Virtually, 19–23 September 2022; pp. 1–4.

- Pham, H.V.; Tran, T.G.; Le, C.D.; Le, A.D.; Vo, H.B. Benchmarking Jetson Edge Devices with an End-to-end Video-based Anomaly Detection System. In Future of Information and Communication Conference; Springer: Berlin/Heidelberg, Germany, 2024; pp. 358–374.

- Alexey, G.; Klyachin, V.; Eldar, K.; Driaba, A. Autonomous mobile robot with AI based on Jetson Nano. In Future Technologies Conference (FTC) 2020; Springer: Berlin/Heidelberg, Germany, 2021; Volume 1, pp. 190–204.

- Leon, V.; Minaidis, P.; Lentaris, G.; Soudris, D. Accelerating AI and Computer Vision for Satellite Pose Estimation on the Intel Myriad X Embedded SoC. Microprocess. Microsyst. 2023, 103, 104947.

- Bajer, M. Securing and Hardening Embedded Linux Devices—Case study based on NXP i.MX6 Platform. In Proceedings of the 2022 9th International Conference on Future Internet of Things and Cloud (FiCloud), Rome, Italy (and Online), 22–24 August 2022; pp. 181–189.

- Pathak, D.; El-Sharkawy, M. Architecturally Compressed CNN: An Embedded Realtime Classifier (NXP Bluebox2.0 with RTMaps). In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 331–336.

- Cao, Y.F.; Cheung, S.W.; Yuk, T.I. A Multiband Slot Antenna for GPS/WiMAX/WLAN Systems. IEEE Trans. Antennas Propag. 2015, 63, 952–958.

- Bajaj, R.; Ranaweera, S.; Agrawal, D. GPS: Location-tracking technology. Computer 2002, 35, 92–94.

- Takai, M.; Martin, J.; Bagrodia, R.; Ren, A. Directional virtual carrier sensing for directional antennas in mobile ad hoc networks. In Proceedings of the MobiHoc02: ACM Symposium on Mobile Ad Hoc Networking and Computing, Lausanne, Switzerland, 9–11 June 2002; pp. 183–193.

- Díaz, E.; Mezzetti, E.; Kosmidis, L.; Abella, J.; Cazorla, F.J. Modelling multicore contention on the AURIX TC27x. In Proceedings of the DAC ’18: The 55th Annual Design Automation Conference 2018, San Francisco, CA, USA, 24–29 June 2018; pp. 1–6.

- Mezzetti, E.; Barbina, L.; Abella, J.; Botta, S.; Cazorla, F.J. AURIX TC277 Multicore Contention Model Integration for Automotive Applications. In Proceedings of the 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE), Florence, Italy, 25–29 March 2019; pp. 1202–1203.

- Azad, F.; Islam, Y.; Md Ruslan, C.Z.; Aye Mong Marma, C.; Kalpoma, K.A. Efficient Lane Detection and Keeping for Autonomous Vehicles in Real-World Scenarios. In Proceedings of the 2023 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 13–15 December 2023; pp. 1–6.

| Model | Pros | Cons |
|---|---|---|
| CNN | | |
| RNN | | |
| LSTM | | |
| GRU | | |
| GAN | | |
| Autoencoder | | |

| Application | Description | Benefits | Challenges | Examples |
|---|---|---|---|---|
| Predictive Maintenance | Utilizing IoT sensors to monitor the condition of machinery and equipment in real-time, enabling predictive maintenance to prevent costly breakdowns. | | | General Electric’s Predix, Siemens MindSphere, Schneider Electric’s EcoStruxure |
| Asset Tracking and Management | Tracking the location, status, and condition of assets (such as equipment, vehicles, or inventory) using IoT devices and sensors. | | | IBM Watson IoT Platform, Cisco Kinetic for Manufacturing, Microsoft Azure IoT Suite |
| Remote Monitoring and Control | Monitoring and controlling industrial processes, equipment, and systems remotely through IoT-enabled sensors and actuators. | | | Honeywell Sentience, ABB Ability, Emerson Plantweb |
| Quality Control and Assurance | Implementing IoT sensors to monitor and analyze product quality, identify defects, and ensure compliance with quality standards throughout the manufacturing process. | | | Bosch IoT Suite, PTC ThingWorx, Rockwell Automation FactoryTalk Analytics |
| Energy Management and Efficiency | Monitoring and optimizing energy consumption, usage patterns, and efficiency of industrial facilities and equipment through IoT sensors and analytics. | | | Sensital iBOTics, General Electric’s Advanced Energy Management System, ABB Energy Management solutions |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dini, P.; Diana, L.; Elhanashi, A.; Saponara, S. Overview of AI-Models and Tools in Embedded IIoT Applications. Electronics 2024, 13, 2322. https://doi.org/10.3390/electronics13122322
