An Analysis of Traditional Methods and Deep Learning Methods in SSVEP-Based BCI: A Survey

Abstract

1. Introduction

1.1. Literature Search and Inclusion Criteria

1.2. Contributions of This Survey

- (1) We provide a detailed overview of SSVEP progress, from paradigm through signal decoding to application, to help researchers better understand the state of SSVEP-BCI research.

- (2)

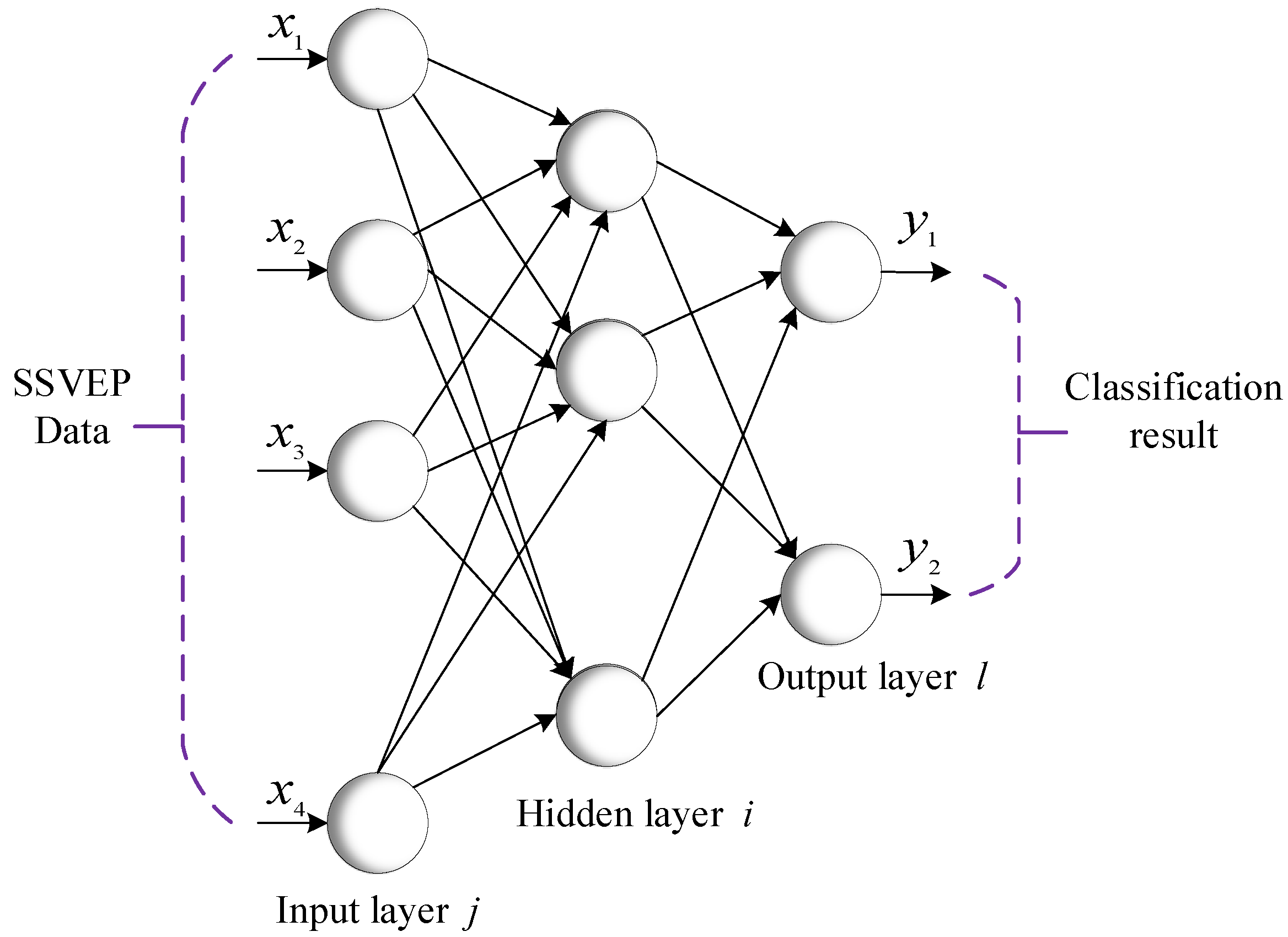

- We analyze and summarize the current mainstream research direction and research content of SSVEP-BCI based on deep learning, and outline the development history of deep learning. The traditional KNN, MLP, and SVM classification algorithms and neural-network-based deep learning classification algorithms are described in detail, comparing and analyzing the advantages, disadvantages, and adaptation scenarios to provide design guidelines for researchers.

- (3) We point out the deficiencies and difficulties of SSVEP-BCI, identify the main breakthrough points for future research, and provide an outlook on the future direction of SSVEP-BCI applications based on deep learning classification algorithms.

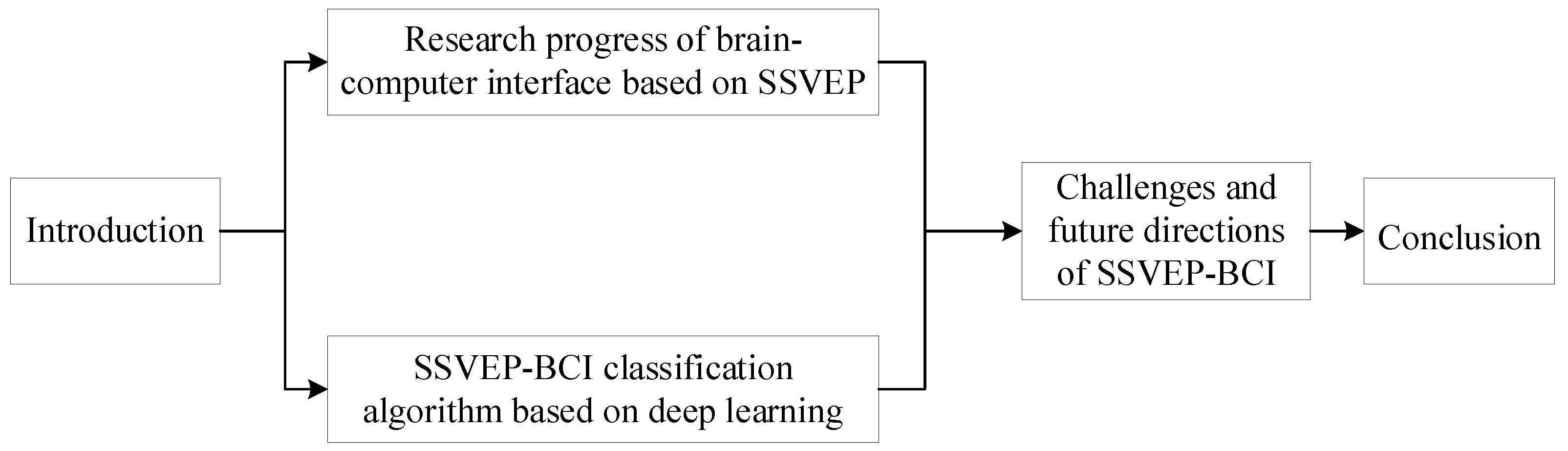

1.3. The Structure of This Survey

2. Research Progress of BCI Based on SSVEP

2.1. Research Progress of SSVEP Paradigm

2.2. Research Progress of Decoding Methods

2.3. Research Progress of System and Application

3. SSVEP-BCI Classification Algorithm Based on Deep Learning

3.1. The Development Process of Deep Learning

- Inception: from the 1980s, when the backpropagation (BP) algorithm was proposed, to 2006. The BP algorithm made the training of neural networks simple and feasible [65]. However, because of the shortcomings of neural network algorithms and the limits of computing power at the time, very few scientists stayed in the field. Shallow learning methods became the mainstream of the era, and algorithms such as KNN, MLP, and SVM received widespread attention [66,67,68]. These shallow learning methods achieved good results in practice, which left deep neural networks out of favor.

- Rapid development period: from 2006 to 2012. In 2006, Hinton and his team [69,70] published a paper in Science proposing a dimensionality reduction and layer-by-layer pretraining method that made the practical implementation of deep networks possible. In the same year, they also published an important paper that proposed a solution to the vanishing gradient problem in deep network training and introduced the concept of the “deep autoencoder”. From then on, neural network research entered the era of deep learning. In 2012, Hinton et al. [71] proposed the “dropout” technique, which effectively reduces overfitting in deep learning, improves generalization ability, and simplifies the design of neural networks; it helped set off the deep learning research boom.

- Explosion period: from 2012 to the present. In 2012, Krizhevsky et al. [72], working with Hinton, used a convolutional neural network for ImageNet image recognition and outperformed all traditional methods. From then on, convolutional neural networks began to shine in the field of computer vision. In 2014, Krizhevsky [73] proposed two parallelization methods, data parallelism and model parallelism, which significantly improved the training speed of convolutional neural networks and promoted the development of deep learning frameworks. In 2016, He et al. [74] proposed residual networks, which eased the difficulty of training very deep networks and provided important ideas and methods. In 2017, Vaswani et al. [75] proposed the Transformer model, which revolutionized the field of deep learning: it improved model performance and, by replacing recurrence with attention, made training far more parallelizable. In 2018, Devlin et al. [76] proposed Bidirectional Encoder Representations from Transformers (BERT), a new language model that has had a significant impact on the application of deep learning to natural language. In 2019, Rejer et al. [77] reduced the number of EEG acquisition channels to a minimum while maintaining high accuracy, which contributed greatly to research on SSVEP-BCI. In 2022, Du et al. [78] studied SSVEP in augmented reality (AR) in depth and provided a comparative analysis of the color selection of visual stimuli in AR-SSVEP.

3.2. Data Preprocessing

3.2.1. Frequency Filter

3.2.2. Time–Frequency Conversion

3.2.3. Filter Bank

3.3. Types and Layers of Network Architecture

3.3.1. Traditional Classification Algorithm

3.3.2. Deep Learning Classification Algorithms

3.3.3. Comparative Analysis of Classification Algorithms

4. Challenges and Future Directions of SSVEP-BCI

- SSVEP recordings carry relatively strong background noise: external sound, light sources, and magnetic and electric fields can all interfere with the data acquisition process.

- The information transfer rate (ITR) of SSVEP-BCI systems has room for improvement. When the number of stimulus targets is fixed, the ITR depends mainly on the length of the recognition window used by the classification algorithm, and there are still very few SSVEP recognition algorithms that achieve high accuracy with short time windows.

- Most current SSVEP-BCIs use low-frequency stimulus targets, but prolonged use of low-frequency SSVEP-BCIs can fatigue users, and low-frequency flicker increases the risk of inducing photosensitive epilepsy.
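The dependence of ITR on window length noted above can be made concrete with a small sketch. The survey does not state a formula here; the code assumes the Wolpaw definition that is widely used in the BCI literature, ITR = (60/T)·[log2 N + P·log2 P + (1 − P)·log2((1 − P)/(N − 1))] in bits per minute. The function name `itr_bits_per_min`, its parameter names, and the example values (40 targets, 90% accuracy) are illustrative assumptions, not figures taken from the survey.

```python
import math


def itr_bits_per_min(n_targets: int, accuracy: float, selection_time_s: float) -> float:
    """Wolpaw information transfer rate in bits per minute (assumed definition).

    n_targets        -- number of stimulus targets N (at least 2)
    accuracy         -- classification accuracy P, between 0 and 1
    selection_time_s -- time per selection T in seconds (recognition window
                        plus any gaze-shift or rest interval)
    """
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    if selection_time_s <= 0:
        raise ValueError("selection_time_s must be positive")

    # Bits conveyed by one selection.
    bits = math.log2(n_targets)
    if 0.0 < accuracy < 1.0:
        bits += accuracy * math.log2(accuracy)
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    elif accuracy == 0.0:
        # Limit of the formula as P -> 0 (the P*log2(P) term vanishes).
        bits += math.log2(1.0 / (n_targets - 1))
    # accuracy == 1.0: both correction terms vanish, bits = log2(N).

    return bits * 60.0 / selection_time_s


if __name__ == "__main__":
    # With N and P held fixed, a shorter selection time raises the ITR.
    for t in (2.0, 1.0, 0.5):
        print(f"T = {t:.1f} s -> {itr_bits_per_min(40, 0.9, t):.1f} bits/min")
```

At fixed accuracy the ITR scales inversely with the selection time, but in practice accuracy tends to fall as the window shrinks, which is why algorithms that stay accurate on short windows matter.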

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- He, B.; Yuan, H.; Meng, J.; Gao, S. Brain–computer interfaces. In Neural Engineering; Springer: Berlin/Heidelberg, Germany, 2020; pp. 131–183.

- Rezeika, A.; Benda, M.; Stawicki, P.; Gembler, F.; Saboor, A.; Volosyak, I. Brain–computer interface spellers: A review. Brain Sci. 2018, 8, 57.

- Revuelta Herrero, J.; Lozano Murciego, Á.; López Barriuso, A.; Hernández de la Iglesia, D.; Villarrubia González, G.; Corchado Rodríguez, J.M.; Carreira, R. Non intrusive load monitoring (NILM): A state of the art. In Trends in Cyber-Physical Multi-Agent Systems. The PAAMS Collection-15th International Conference, PAAMS, Porto, Portugal, 21–23 June 2017; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 125–138.

- Cohen, M.X. Where does EEG come from and what does it mean? Trends Neurosci. 2017, 40, 208–218.

- Zou, X.L.; Huang, T.J.; Wu, S. Towards a new paradigm for brain-inspired computer vision. Mach. Intell. Res. 2022, 19, 412–424.

- Liu, B.; Huang, X.; Wang, Y.; Chen, X.; Gao, X. BETA: A large benchmark database toward SSVEP-BCI application. Front. Neurosci. 2020, 14, 627.

- Chen, X.; Wang, Y.; Zhang, S.; Gao, S.; Hu, Y.; Gao, X. A novel stimulation method for multi-class SSVEP-BCI using intermodulation frequencies. J. Neural Eng. 2017, 14, 026013.

- Lee, H.W. The study of mechanical arm and intelligent robot. IEEE Access 2020, 8, 119624–119634.

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Leaman, J.; La, H.M. A comprehensive review of smart wheelchairs: Past, present, and future. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 486–499.

- Zerafa, R.; Camilleri, T.; Falzon, O.; Camilleri, K.P. To train or not to train? A survey on training of feature extraction methods for SSVEP-based BCIs. J. Neural Eng. 2018, 15, 051001.

- Xing, X.; Wang, Y.; Pei, W.; Guo, X.; Liu, Z.; Wang, F.; Chen, H. A high-speed SSVEP-based BCI using dry EEG electrodes. Sci. Rep. 2018, 8, 14708.

- Fernández-Rodríguez, Á.; Velasco-Álvarez, F.; Ron-Angevin, R. Review of real brain-controlled wheelchairs. J. Neural Eng. 2016, 13, 061001.

- Chen, X.; Liu, B.; Wang, Y.; Gao, X. A spectrally-dense encoding method for designing a high-speed SSVEP-BCI with 120 stimuli. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2764–2772.

- Chen, X.; Wang, Y.; Nakanishi, M.; Gao, X.; Jung, T.P.; Gao, S. High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, E6058–E6067.

- Chen, Y.; Yang, C.; Ye, X.; Chen, X.; Wang, Y.; Gao, X. Implementing a calibration-free SSVEP-based BCI system with 160 targets. J. Neural Eng. 2021, 18, 046094.

- Chen, Y.; Yang, R.; Huang, M.; Wang, Z.; Liu, X. Single-source to single-target cross-subject motor imagery classification based on multisubdomain adaptation network. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 1992–2002.

- Chen, J.; Wang, Y.; Maye, A.; Hong, B.; Gao, X.; Engel, A.K.; Zhang, D. A spatially-coded visual brain-computer interface for flexible visual spatial information decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 926–933.

- Zhao, X.; Wang, Z.; Zhang, M.; Hu, H. A comfortable steady state visual evoked potential stimulation paradigm using peripheral vision. J. Neural Eng. 2021, 18, 056021.

- Jiang, L.; Pei, W.; Wang, Y. A user-friendly SSVEP-based BCI using imperceptible phase-coded flickers at 60 Hz. China Commun. 2022, 19, 1–14.

- Zhou, Y.; He, S.; Huang, Q.; Li, Y. A hybrid asynchronous brain-computer interface combining SSVEP and EOG signals. IEEE Trans. Biomed. Eng. 2020, 67, 2881–2892.

- Jiang, L.; Li, X.; Pei, W.; Gao, X.; Wang, Y. A hybrid brain-computer interface based on visual evoked potential and pupillary response. Front. Hum. Neurosci. 2022, 16, 834959.

- Merel, J.; Carlson, D.; Paninski, L.; Cunningham, J.P. Neuroprosthetic decoder training as imitation learning. PLoS Comput. Biol. 2016, 12, e1004948.

- Zhang, P.; Li, W.; Ma, X.; He, J.; Huang, J.; Li, Q. Feature-selection-based transfer learning for intracortical brain–machine interface decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 29, 60–73.

- Dong, Y.; Wen, X.; Gao, F.; Gao, C.; Cao, R.; Xiang, J.; Cao, R. Subject-Independent EEG Classification of Motor Imagery Based on Dual-Branch Feature Fusion. Brain Sci. 2023, 13, 1109.

- Nakanishi, M.; Wang, Y.; Chen, X.; Wang, Y.T.; Gao, X.; Jung, T.P. Enhancing detection of SSVEPs for a high-speed brain speller using task-related component analysis. IEEE Trans. Biomed. Eng. 2017, 65, 104–112.

- Duan, F.; Jia, H.; Sun, Z.; Zhang, K.; Dai, Y.; Zhang, Y. Decoding premovement patterns with task-related component analysis. Cogn. Comput. 2021, 13, 1389–1405.

- Wong, C.M.; Wan, F.; Wang, B.; Wang, Z.; Nan, W.; Lao, K.F.; Rosa, A. Learning across multi-stimulus enhances target recognition methods in SSVEP-based BCIs. J. Neural Eng. 2020, 17, 016026.

- Yang, C.; Han, X.; Wang, Y.; Saab, R.; Gao, S.; Gao, X. A dynamic window recognition algorithm for SSVEP-based brain–computer interfaces using a spatio-temporal equalizer. Int. J. Neural Syst. 2018, 28, 1850028.

- Liu, B.; Chen, X.; Shi, N.; Wang, Y.; Gao, S.; Gao, X. Improving the performance of individually calibrated SSVEP-BCI by task-discriminant component analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1998–2007.

- Li, M.; Wu, L.; Xu, G.; Duan, F.; Zhu, C. A robust 3D-convolutional neural network-based electroencephalogram decoding model for the intra-individual difference. Int. J. Neural Syst. 2022, 32, 2250034.

- Guney, O.B.; Oblokulov, M.; Ozkan, H. A deep neural network for SSVEP-based brain-computer interfaces. IEEE Trans. Biomed. Eng. 2021, 69, 932–944.

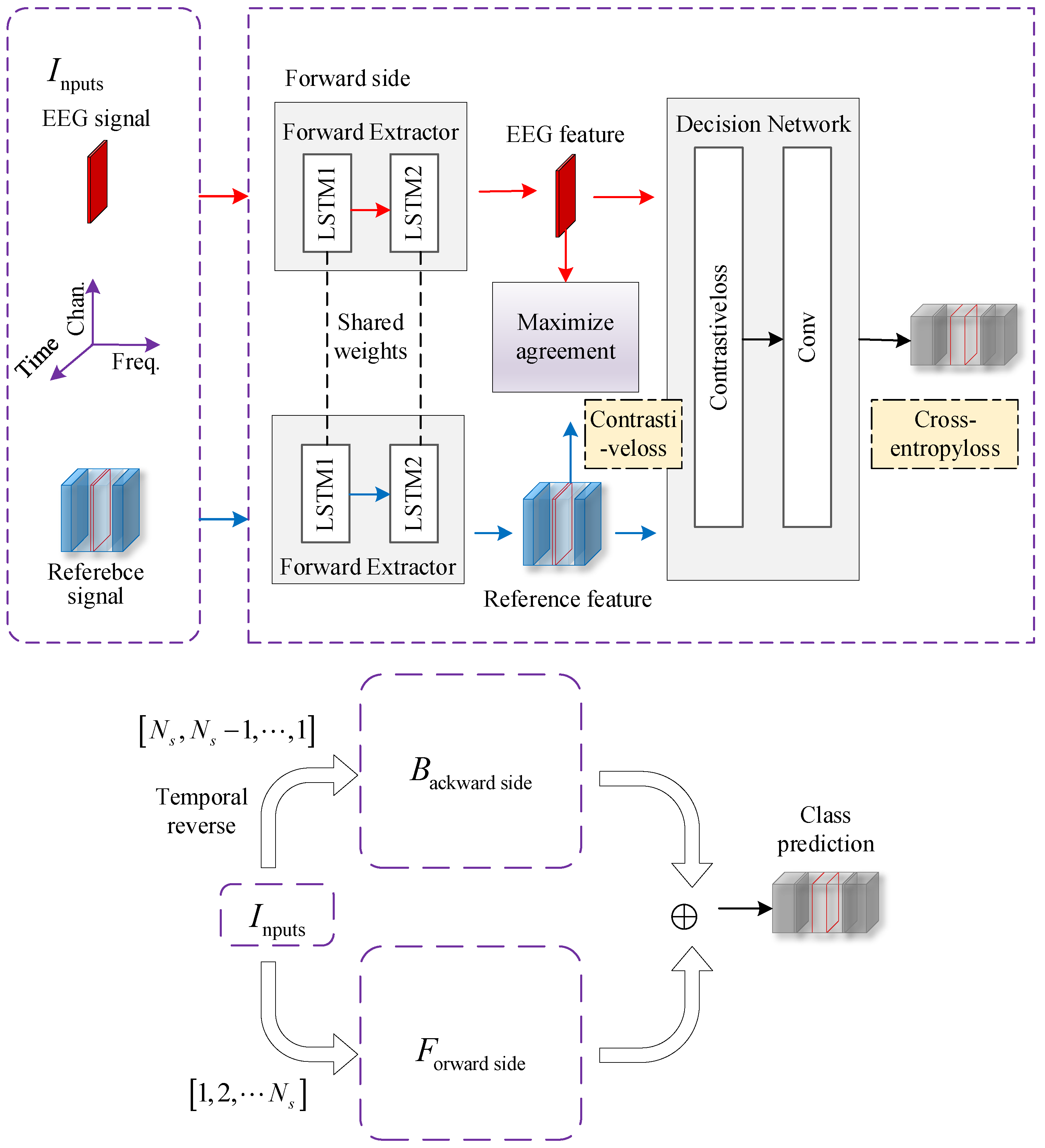

- Zhang, X.; Qiu, S.; Zhang, Y.; Wang, K.; Wang, Y.; He, H. Bidirectional Siamese correlation analysis method for enhancing the detection of SSVEPs. J. Neural Eng. 2022, 19, 046027.

- Liu, B.; Chen, X.; Li, X.; Wang, Y.; Gao, X.; Gao, S. Align and pool for EEG headset domain adaptation (ALPHA) to facilitate dry electrode based SSVEP-BCI. IEEE Trans. Biomed. Eng. 2021, 69, 795–806.

- Zhu, Y.; Li, Y.; Lu, J.; Li, P. EEGNet with ensemble learning to improve the cross-session classification of SSVEP based BCI from ear-EEG. IEEE Access 2021, 9, 15295–15303.

- Ding, W.; Shan, J.; Fang, B.; Wang, C.; Sun, F.; Li, X. Filter bank convolutional neural network for short time-window steady-state visual evoked potential classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2615–2624.

- Chen, J.; Zhang, Y.; Pan, Y.; Xu, P.; Guan, C. A Transformer-based deep neural network model for SSVEP classification. Neural Netw. 2023, 164, 521–534.

- Yan, W.; Wu, Y.; Du, C.; Xu, G. An improved cross-subject spatial filter transfer method for SSVEP-based BCI. J. Neural Eng. 2022, 19, 046028.

- Zhang, Y.; Shen, H.; Li, M.; Hu, D. Brain biometrics of steady state visual evoked potential functional networks. IEEE Trans. Cogn. Dev. Syst. 2022, 15, 1694–1701.

- Seymour, K.M.; Higginson, C.I.; DeGoede, K.M.; Bifano, M.K.; Orr, R.; Higginson, J.S. Cellular telephone dialing influences kinematic and spatiotemporal gait parameters in healthy adults. J. Mot. Behav. 2016, 48, 535–541.

- Allison, W. Unplanned obsolescence: Interpreting the automatic telephone dialing system after the smartphone epoch. Mich. L. Rev. 2020, 119, 147.

- Sakuntharaj, R.; Mahesan, S. A novel hybrid approach to detect and correct spelling in Tamil text. In Proceedings of the 2016 IEEE International Conference on Information and Automation for Sustainability (ICIAfS), Galle, Sri Lanka, 16–19 December 2016; pp. 1–6.

- Kasmaiee, S.; Kasmaiee, S.; Homayounpour, M. Correcting spelling mistakes in Persian texts with rules and deep learning methods. Sci. Rep. 2023, 13, 19945.

- Ciancio, A.L.; Cordella, F.; Barone, R.; Romeo, R.A.; Bellingegni, A.D.; Sacchetti, R.; Zollo, L. Control of prosthetic hands via the peripheral nervous system. Front. Neurosci. 2016, 10, 116.

- Liu, X.; Yao, L.; Ng, K.A.; Li, P.; Wang, W.; Je, M.; Xu, Y.P. A power-efficient current-mode neural/muscular stimulator design for peripheral nerve prosthesis. Int. J. Circuit Theory Appl. 2018, 46, 692–706.

- Wei, Y.; Li, W.; An, D.; Li, D.; Jiao, Y.; Wei, Q. Equipment and intelligent control system in aquaponics: A review. IEEE Access 2019, 7, 169306–169326.

- Zhang, H.; Li, Q.; Meng, S.; Xu, Z.; Lv, C.; Zhou, C. A safety fault diagnosis method on industrial intelligent control equipment. Wirel. Netw. 2024, 30, 4287–4299.

- Lee, Y.C.; Lin, W.C.; Cherng, F.Y.; Ko, L.W. A visual attention monitor based on steady-state visual evoked potential. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 399–408.

- Yang, Y.; Xiu, L.; Chen, X.; Yu, G. Do emotions conquer facts? A CCME model for the impact of emotional information on implicit attitudes in the post-truth era. Humanit. Soc. Sci. Commun. 2023, 10, 1–7.

- Kuo, Y.W.; Jung, T.P.; Shieh, H.P.D. Polychromatic SSVEP Stimuli with Subtle Flickering Adapted to Brain-Display Interactions. J. Neural Eng. 2017, 14, 016018.

- Yoon, H.S.; Park, K.R. CycleGAN-based deblurring for gaze tracking in vehicle environments. IEEE Access 2020, 8, 137418–137437.

- Nakanishi, M.; Wang, Y.T.; Jung, T.P. Detecting Glaucoma with a Portable Brain-Computer Interface for Objective Assessment of Visual Function Loss. JAMA Ophthalmol. 2017, 135, 550–557.

- Ouyang, R.; Jin, Z.; Tang, S.; Fan, C.; Wu, X. Low-quality training data detection method of EEG signals for motor imagery BCI system. J. Neurosci. Methods 2022, 376, 109607.

- Phothisonothai, M. An investigation of using SSVEP for EEG-based user authentication system. In Proceedings of the 2015 IEEE Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Hong Kong, China, 16–19 December 2015; pp. 923–926.

- Zhao, H.; Wang, Y.; Liu, Z.; Pei, W.; Chen, H. Individual identification based on code-modulated visual-evoked potentials. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3206–3216.

- Shi, N.; Wang, L.; Chen, Y.; Yan, X.; Yang, C.; Wang, Y.; Gao, X. Steady-state visual evoked potential (SSVEP)-based brain–computer interface (BCI) of Chinese speller for a patient with amyotrophic lateral sclerosis: A case report. J. Neurorestoratol. 2020, 8, 40–52.

- Yang, C.; Yan, X.; Wang, Y.; Chen, Y.; Zhang, H.; Gao, X. Spatio-temporal equalization multi-window algorithm for asynchronous SSVEP-based BCI. J. Neural Eng. 2021, 18, 0460b7.

- Wong, C.M.; Wang, Z.; Nakanishi, M.; Wang, B.; Rosa, A.; Chen, C.P.; Wan, F. Online adaptation boosts SSVEP-based BCI performance. IEEE Trans. Biomed. Eng. 2021, 69, 2018–2028.

- Marinou, A.; Saunders, R.; Casson, A.J. Flexible inkjet printed sensors for behind-the-ear SSVEP EEG monitoring. In Proceedings of the 2020 IEEE International Conference on Flexible and Printable Sensors and Systems (FLEPS), Manchester, UK, 16–19 August 2020; pp. 1–4.

- Liang, L.; Bin, G.; Chen, X.; Wang, Y.; Gao, S.; Gao, X. Optimizing a left and right visual field biphasic stimulation paradigm for SSVEP-based BCIs with hairless region behind the ear. J. Neural Eng. 2021, 18, 066040.

- Chen, X.; Huang, X.; Wang, Y.; Gao, X. Combination of augmented reality based brain-computer interface and computer vision for high-level control of a robotic arm. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3140–3147.

- Chen, L.; Chen, P.; Zhao, S.; Luo, Z.; Chen, W.; Pei, Y.; Yin, E. Adaptive asynchronous control system of robotic arm based on augmented reality-assisted brain–computer interface. J. Neural Eng. 2021, 18, 066005.

- Zhang, K.; Xu, G.; Du, C.; Wu, Y.; Zheng, X.; Zhang, S.; Chen, R. Weak feature extraction and strong noise suppression for SSVEP-EEG based on chaotic detection technology. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 862–871.

- Yang, D.; Nguyen, T.H.; Chung, W.Y. A bipolar-channel hybrid brain-computer interface system for home automation control utilizing steady-state visually evoked potential and eye-blink signals. Sensors 2020, 20, 5474.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Asikainen, A.; Kolehmainen, M.; Ruuskanen, J.; Tuppurainen, K. Structure-based classification of active and inactive estrogenic compounds by decision tree, LVQ and kNN methods. Chemosphere 2006, 62, 658–673.

- Bandyopadhyay, S.; Pal, S.K. Relation between VGA-classifier and MLP: Determination of network architecture. Fundam. Informaticae 1999, 37, 177–199.

- Wong, W.T.; Hsu, S.H. Application of SVM and ANN for image retrieval. Eur. J. Oper. Res. 2006, 173, 938–950.

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9.

- Krizhevsky, A. One weird trick for parallelizing convolutional neural networks. arXiv 2014, arXiv:1404.5997.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Rejer, I.; Cieszyński, Ł. Independent component analysis for a low-channel SSVEP-BCI. Pattern Anal. Appl. 2019, 22, 47–62.

- Du, Y.; Zhao, X. Visual stimulus color effect on SSVEP-BCI in augmented reality. Biomed. Signal Process. Control 2022, 78, 103906.

- Yan, W.; Du, C.; Wu, Y.; Zheng, X.; Xu, G. SSVEP-EEG denoising via image filtering methods. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1634–1643.

- Yan, W.; He, B.; Zhao, J.; Wu, Y.; Du, C.; Xu, G. Frequency domain filtering method for SSVEP-EEG preprocessing. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2079–2089.

- Kwak, N.S.; Müller, K.R.; Lee, S.W. A convolutional neural network for steady state visual evoked potential classification under ambulatory environment. PLoS ONE 2017, 12, e0172578.

- Zhang, X.; Xu, G.; Mou, X.; Ravi, A.; Li, M.; Wang, Y.; Jiang, N. A convolutional neural network for the detection of asynchronous steady state motion visual evoked potential. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1303–1311.

- Ravi, A.; Beni, N.H.; Manuel, J.; Jiang, N. Comparing user-dependent and user-independent training of CNN for SSVEP BCI. J. Neural Eng. 2020, 17, 026028.

- Islam, M.R.; Molla, M.K.I.; Nakanishi, M.; Tanaka, T. Unsupervised frequency-recognition method of SSVEPs using a filter bank implementation of binary subband CCA. J. Neural Eng. 2017, 14, 026007.

- Shao, X.; Lin, M. Filter bank temporally local canonical correlation analysis for short time window SSVEPs classification. Cogn. Neurodynamics 2020, 14, 689–696.

- Zhao, D.; Wang, T.; Tian, Y.; Jiang, X. Filter bank convolutional neural network for SSVEP classification. IEEE Access 2021, 9, 147129–147141.

- Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. FB-CCNN: A Filter Bank Complex Spectrum Convolutional Neural Network with Artificial Gradient Descent Optimization. Brain Sci. 2023, 13, 780.

- Li, R.; Principe, J.C. Blinking artifact removal in cognitive EEG data using ICA. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 5273–5276.

- Arab, M.R.; Suratgar, A.A.; Ashtiani, A.R. Electroencephalogram signals processing for topographic brain mapping and epilepsies classification. Comput. Biol. Med. 2010, 40, 733–739.

- Mannan, M.M.N.; Kim, S.; Jeong, M.Y.; Kamran, M.A. Hybrid EEG–Eye tracker: Automatic identification and removal of eye movement and blink artifacts from electroencephalographic signal. Sensors 2016, 16, 241.

- Shukla, P.K.; Roy, V.; Shukla, P.K.; Chaturvedi, A.K.; Saxena, A.K.; Maheshwari, M.; Pal, P.R. An advanced EEG motion artifacts eradication algorithm. Comput. J. 2023, 66, 429–440.

- Rizal, A.; Hadiyoso, S.; Ramdani, A.Z. FPGA-based implementation for real-time epileptic EEG classification using Hjorth descriptor KNN. Electronics 2022, 11, 3026.

- Yang, P.; Wang, J.; Zhao, H.; Li, R. MLP with Riemannian covariance for motor imagery based EEG analysis. IEEE Access 2020, 8, 139974–139982.

- Janapati, R.; Dalal, V.; Desai, U.; Sengupta, R.; Kulkarni, S.A.; Hemanth, D.J. Classification of Visually Evoked Potential EEG Using Hybrid Anchoring-based Particle Swarm Optimized Scaled Conjugate Gradient Multi-Layer Perceptron Classifier. Int. J. Artif. Intell. Tools 2023, 32, 2340016.

- Ma, P.; Dong, C.; Lin, R.; Ma, S.; Liu, H.; Lei, D.; Chen, X. Effect of Local Network Characteristics on the Performance of the SSVEP Brain-Computer Interface. IRBM 2023, 44, 100781.

- Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. An analysis of deep learning models in SSVEP-based BCI: A survey. Brain Sci. 2023, 13, 483.

- Wang, Z.; Wong, C.M.; Wang, B.; Feng, Z.; Cong, F.; Wan, F. Compact Artificial Neural Network Based on Task Attention for Individual SSVEP Recognition with Less Calibration. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2525–2534.

- Tarafdar, K.K.; Pradhan, B.K.; Nayak, S.K.; Khasnobish, A.; Chakravarty, S.; Ray, S.S.; Pal, K. Data mining based approach to study the effect of consumption of caffeinated coffee on the generation of the steady-state visual evoked potential signals. Comput. Biol. Med. 2019, 115, 103526.

- Hashemnia, S.; Grasse, L.; Soni, S.; Tata, M.S. Human EEG and recurrent neural networks exhibit common temporal dynamics during speech recognition. Front. Syst. Neurosci. 2021, 15, 617605.

- Samee, N.A.; Mahmoud, N.F.; Aldhahri, E.A.; Rafiq, A.; Muthanna, M.S.A.; Ahmad, I. RNN and BiLSTM fusion for accurate automatic epileptic seizure diagnosis using EEG signals. Life 2022, 12, 1946.

- Li, M.; Ma, C.; Dang, W.; Wang, R.; Liu, Y.; Gao, Z. DSCNN: Dilated shuffle CNN model for SSVEP signal classification. IEEE Sens. J. 2022, 22, 12036–12043.

- Rajalakshmi, A.; Sridhar, S.S. Classification of yoga, meditation, combined yoga–meditation EEG signals using L-SVM, KNN, and MLP classifiers. Soft Comput. 2024, 28, 4607–4619.

- Choubey, H.; Pandey, A. A combination of statistical parameters for the detection of epilepsy and EEG classification using ANN and KNN classifier. Signal Image Video Process. 2021, 15, 475–483.

- Joshi, S.; Joshi, F. Human Emotion Classification based on EEG Signals Using Recurrent Neural Network And KNN. arXiv 2022, arXiv:2205.08419.

- Al-Quraishi, M.S.; Tan, W.H.; Elamvazuthi, I.; Ooi, C.P.; Saad, N.M.; Al-Hiyali, M.I.; Ali, S.S.A. Cortical signals analysis to recognize intralimb mobility using modified RNN and various EEG quantities. Heliyon 2024, 10, e30406.

- Tocoglu, M.A.; Ozturkmenoglu, O.; Alpkocak, A. Emotion analysis from Turkish tweets using deep neural networks. IEEE Access 2019, 7, 183061–183069.

- Xiong, H.; Song, J.; Liu, J.; Han, Y. Deep transfer learning-based SSVEP frequency domain decoding method. Biomed. Signal Process. Control 2024, 89, 105931.

- Cui, H.; Chi, X.; Wang, L.; Chen, X. A High-Rate Hybrid BCI System Based on High-Frequency SSVEP and sEMG. IEEE J. Biomed. Health Inform. 2023, 27, 5688–5698.

- Wang, M.; Li, R.; Zhang, R.; Li, G.; Zhang, D. A wearable SSVEP-based BCI system for quadcopter control using head-mounted device. IEEE Access 2018, 6, 26789–26798.

- Veeranki, Y.R.; Diaz LR, M.; Swaminathan, R.; Posada-Quintero, H.F. Non-Linear Signal Processing Methods for Automatic Emotion Recognition using Electrodermal Activity. IEEE Sens. J. 2024, 24, 8079–8093.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, J.; Wang, J. An Analysis of Traditional Methods and Deep Learning Methods in SSVEP-Based BCI: A Survey. Electronics 2024, 13, 2767. https://doi.org/10.3390/electronics13142767