Benchmarking Time-Frequency Representations of Phonocardiogram Signals for Classification of Valvular Heart Diseases Using Deep Features and Machine Learning

Abstract

1. Introduction

- Applying image resizing techniques to the time-frequency representations (TFRs) to improve classification performance.

- Using the Boruta feature selector to retain only the most important features.

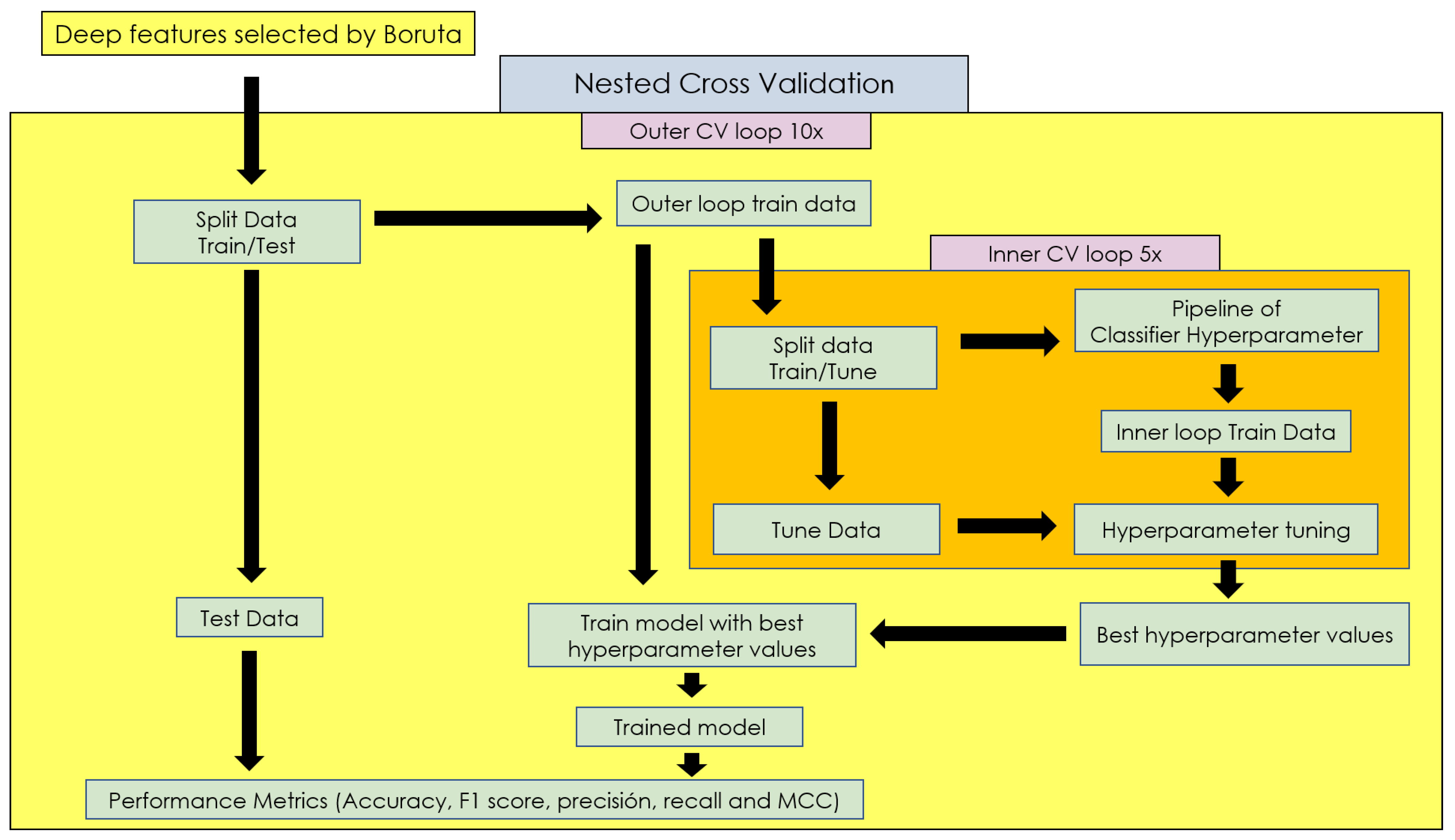

- Applying nested cross-validation (nCV), which wraps an inner model selection loop inside an outer cross-validation loop.

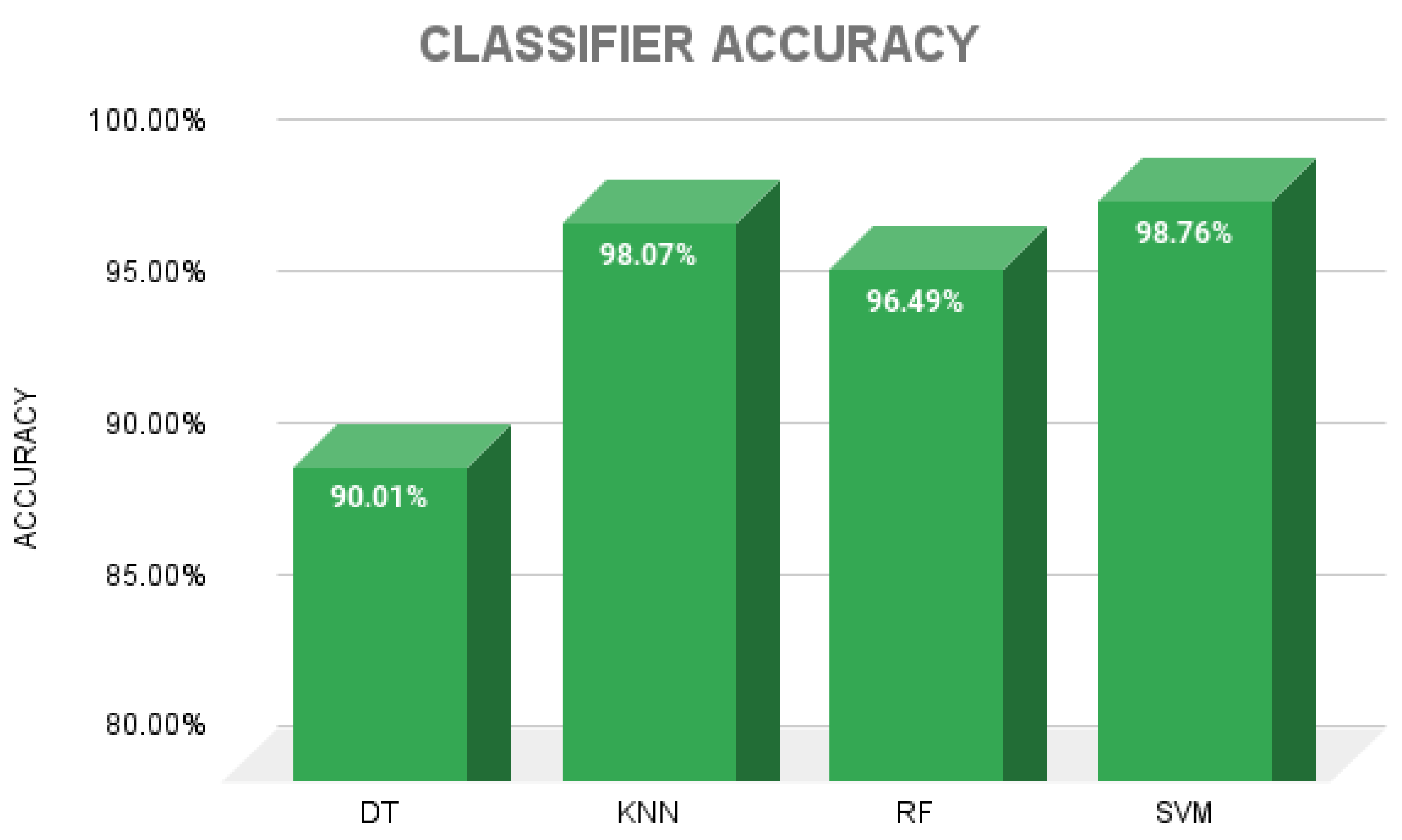

- Comparing the classification performance of four machine learning algorithms: decision trees (DT), K-nearest neighbors (KNN), random forests (RF), and support vector machines (SVM).

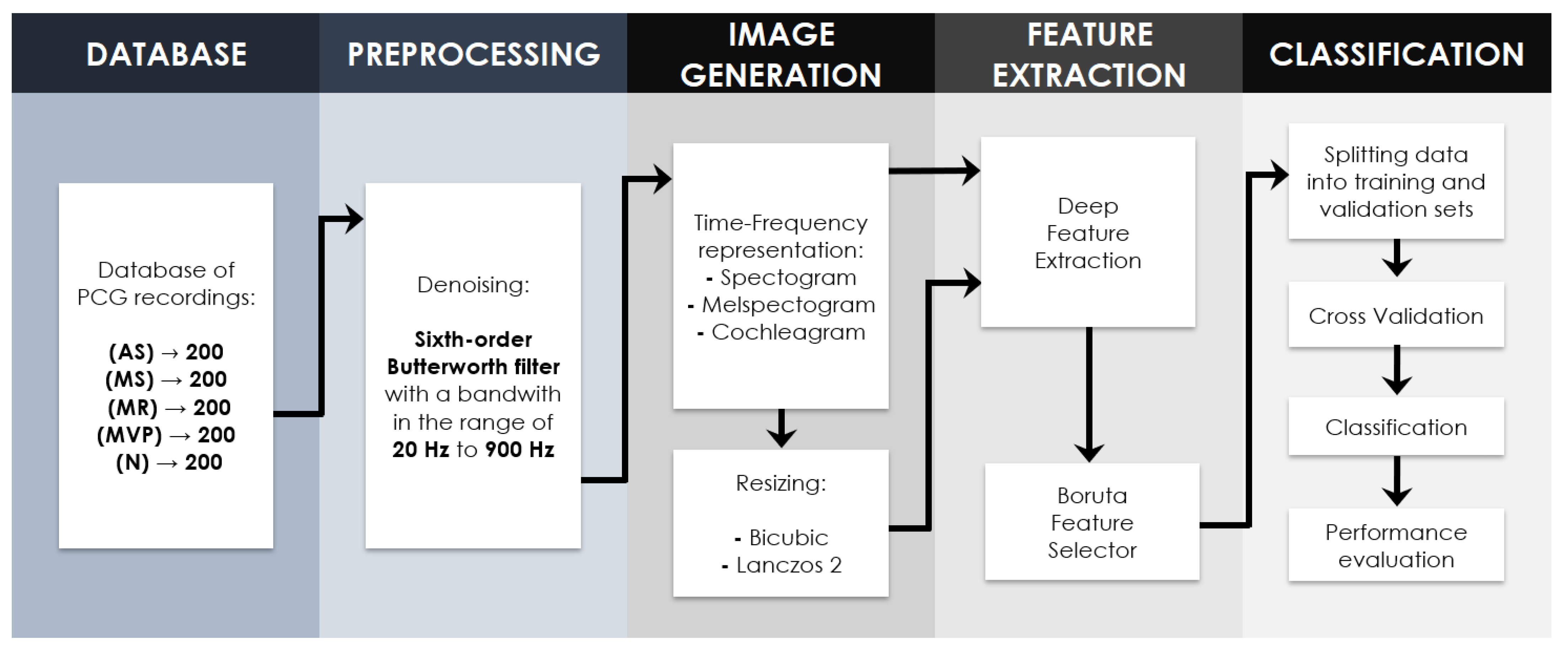
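The nested cross-validation idea listed above can be sketched as follows. This is a minimal, standard-library Python illustration and not the authors' pipeline: the toy two-cluster data, the helper names (`k_fold_indices`, `knn_predict`, `nested_cv`), the fold counts, and the grid of `k` values are all assumptions made for the example, and a plain KNN stands in for the four classifiers compared in the paper.

```python
import random
from collections import Counter

def k_fold_indices(n, k, rng):
    """Shuffle indices 0..n-1 and split them into k roughly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]

def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k nearest training points (squared Euclidean)."""
    order = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], x)),
    )
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def accuracy(X, y, train_idx, test_idx, k):
    """Fit-free KNN evaluation: fraction of test samples predicted correctly."""
    tX = [X[i] for i in train_idx]
    ty = [y[i] for i in train_idx]
    hits = sum(knn_predict(tX, ty, X[i], k) == y[i] for i in test_idx)
    return hits / len(test_idx)

def nested_cv(X, y, k_grid=(1, 3, 5), outer=5, inner=3, seed=0):
    """Outer loop estimates performance; inner loop selects the hyperparameter k."""
    rng = random.Random(seed)
    folds = k_fold_indices(len(X), outer, rng)
    outer_scores = []
    for f, test_idx in enumerate(folds):
        train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
        # Inner folds are positions into train_idx, so the outer test fold
        # never influences model selection.
        inner_folds = k_fold_indices(len(train_idx), inner, rng)

        def inner_score(k):
            scores = []
            for h, val_pos in enumerate(inner_folds):
                val = [train_idx[p] for p in val_pos]
                fit = [train_idx[p]
                       for g, fold in enumerate(inner_folds) if g != h
                       for p in fold]
                scores.append(accuracy(X, y, fit, val, k))
            return sum(scores) / len(scores)

        best_k = max(k_grid, key=inner_score)
        outer_scores.append(accuracy(X, y, train_idx, test_idx, best_k))
    return outer_scores

# Toy data (assumption): two well-separated 2D Gaussian clusters, 30 points each.
data_rng = random.Random(1)
X = [[data_rng.gauss(c * 3.0, 1.0), data_rng.gauss(c * 3.0, 1.0)]
     for c in (0, 1) for _ in range(30)]
y = [c for c in (0, 1) for _ in range(30)]

scores = nested_cv(X, y)
print("outer-fold accuracies:", scores)
```

The point of the structure is that the hyperparameter is chosen using only the outer-training samples, so the outer-fold accuracies are not biased by model selection.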

2. Materials and Methods

2.1. Dataset

2.2. Proposed Methodology

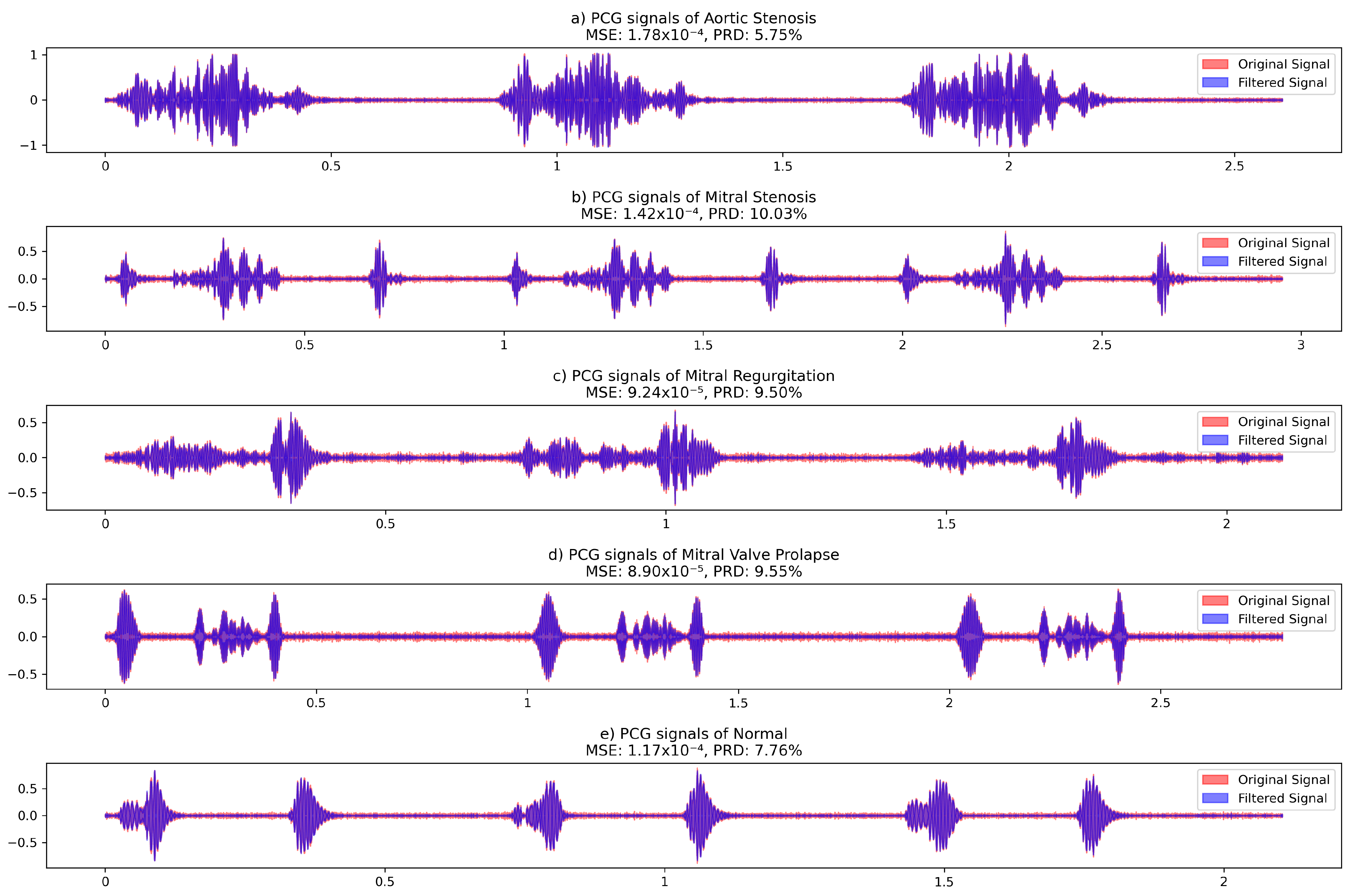

2.2.1. Signal Preprocessing

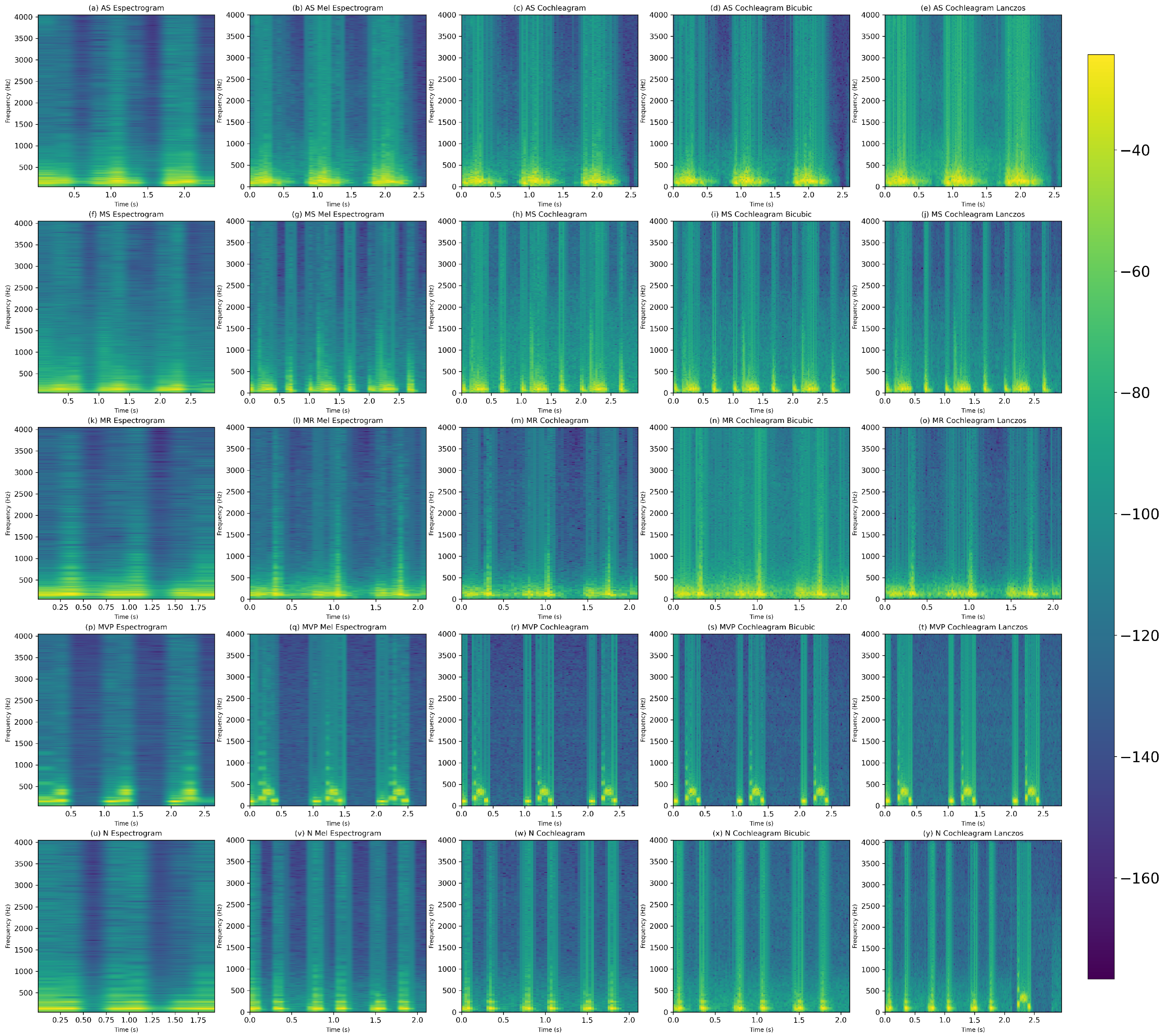

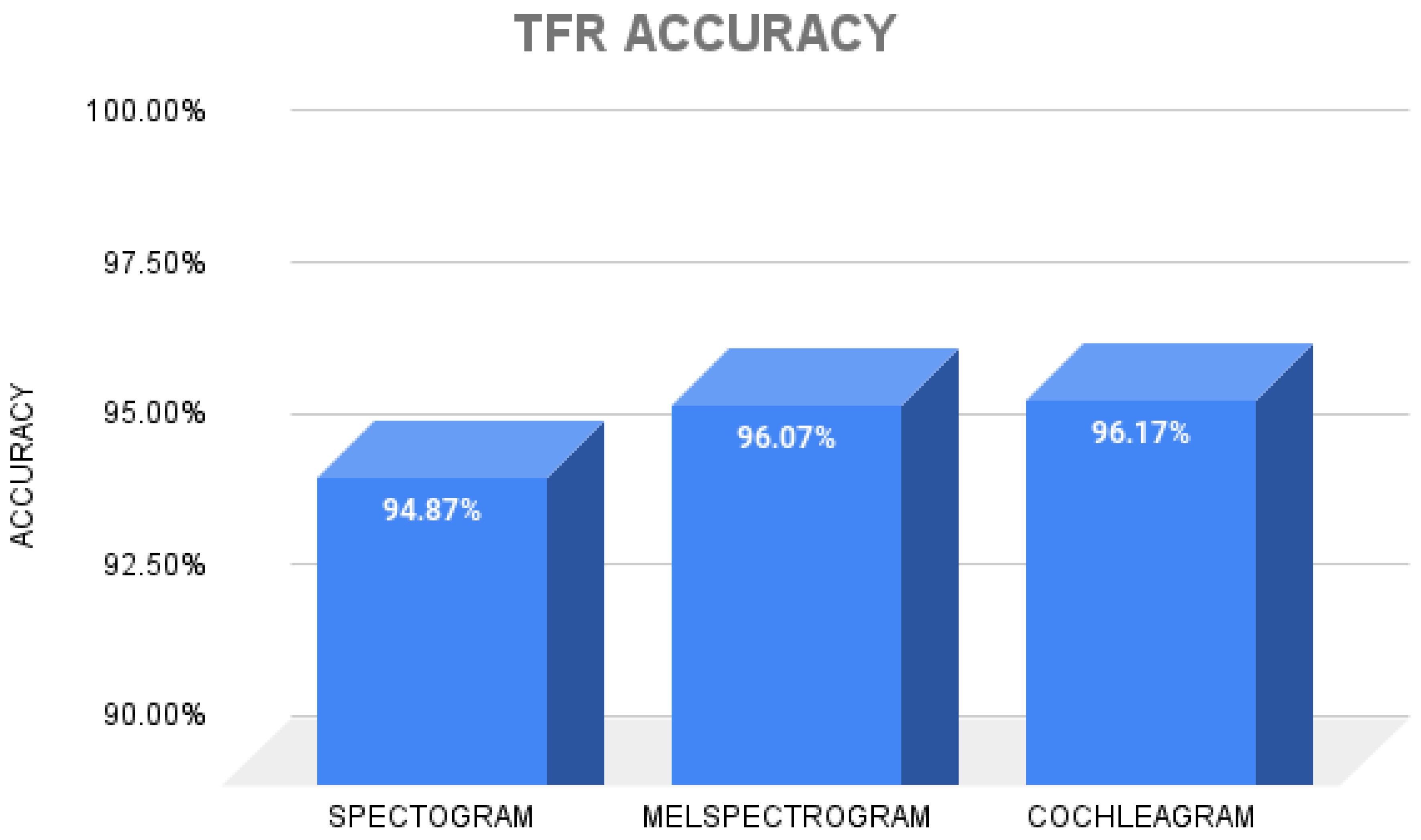

2.3. Time–Frequency Representations

2.3.1. Spectrogram

2.3.2. Mel-Spectrogram

2.3.3. Cochleagram

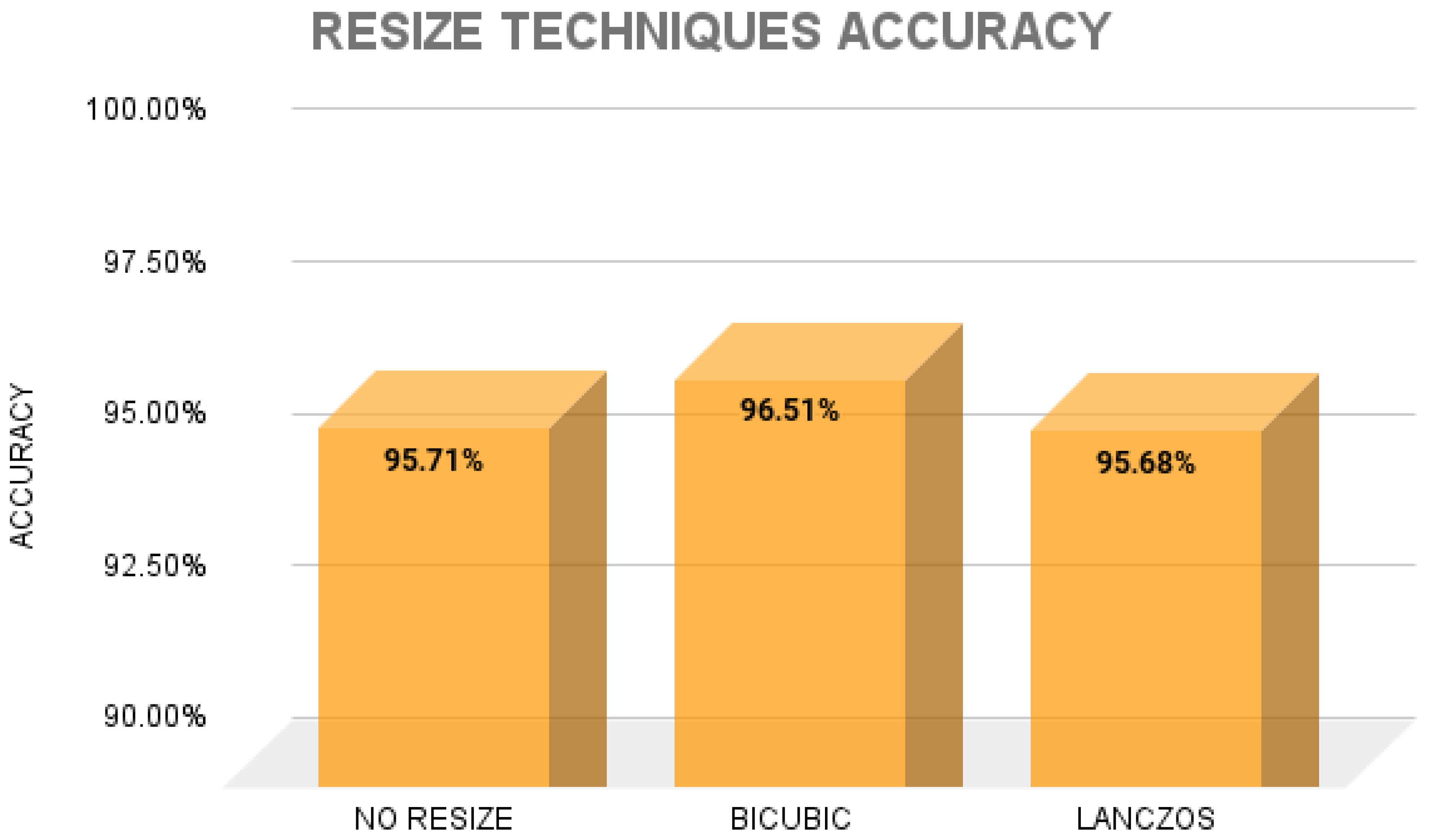

2.4. Image Resizing Techniques

2.4.1. Bicubic

2.4.2. Lanczos

2.4.3. Deep Feature Extraction

2.4.4. Boruta Feature Selection Algorithm

2.4.5. Nested Cross-Validation

2.4.6. Classifiers

2.4.7. Performance Evaluation Metrics

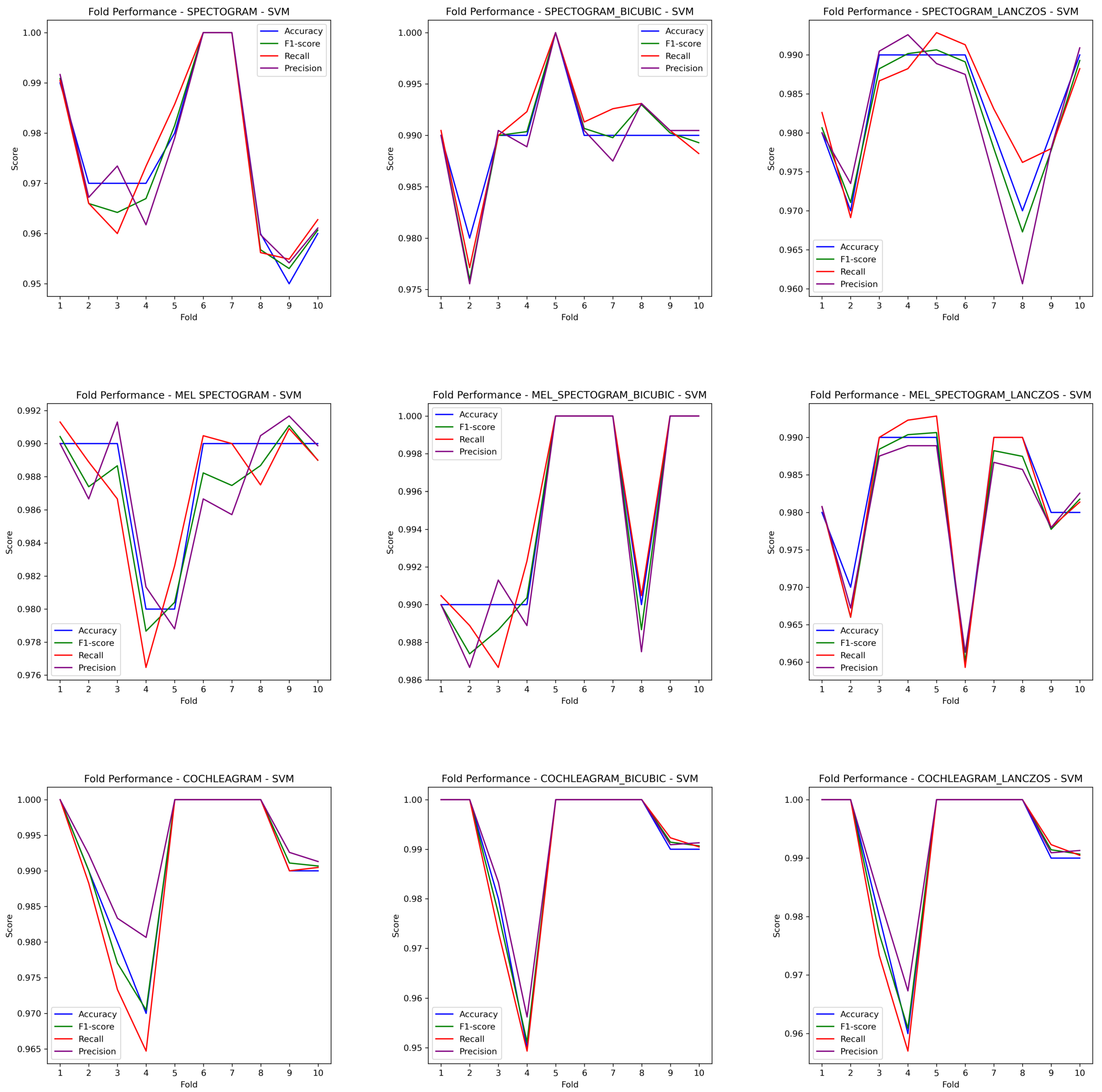

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CVD | Cardiovascular disorders |
| VHD | Valvular heart diseases |
| PCG | Phonocardiogram |
| AS | Aortic stenosis |
| MR | Mitral regurgitation |
| MS | Mitral stenosis |
| MVP | Mitral valve prolapse |
| MCC | Matthews correlation coefficient |

References

- Coffey, S.; Roberts-Thomson, R.; Brown, A.; Carapetis, J.; Chen, M.; Enriquez-Sarano, M.; Zühlke, L.; Prendergast, B.D. Global epidemiology of valvular heart disease. Nat. Rev. Cardiol. 2021, 18, 853–864.

- Milne-Ives, M.; de Cock, C.; Lim, E.; Shehadeh, M.H.; de Pennington, N.; Mole, G.; Normando, E.; Meinert, E. The Effectiveness of Artificial Intelligence Conversational Agents in Health Care: Systematic Review. J. Med. Internet Res. 2020, 22, e20346.

- Domenech, B.; Pomar, J.L.; Prat-González, S.; Vidal, B.; López-Soto, A.; Castella, M.; Sitges, M. Valvular heart disease epidemics. J. Heart Valve Dis. 2016, 25, 1–7.

- Aluru, J.S.; Barsouk, A.; Saginala, K.; Rawla, P.; Barsouk, A. Valvular Heart Disease Epidemiology. Med. Sci. 2022, 10, 32.

- Sharan, R.V.; Moir, T.J. Time-Frequency Image Resizing Using Interpolation for Acoustic Event Recognition with Convolutional Neural Networks. In Proceedings of the 2019 IEEE International Conference on Signals and Systems (ICSigSys), Bandung, Indonesia, 16–18 July 2019; pp. 8–11.

- Zhou, J.; Lee, S.; Liu, Y.; Chan, J.S.K.; Li, G.; Wong, W.T.; Jeevaratnam, K.; Cheng, S.H.; Liu, T.; Tse, G.; et al. Predicting Stroke and Mortality in Mitral Regurgitation: A Machine Learning Approach. Curr. Probl. Cardiol. 2023, 48, 101464.

- Shvartz, V.; Sokolskaya, M.; Petrosyan, A.; Ispiryan, A.; Donakanyan, S.; Bockeria, L.; Bockeria, O. Predictors of Mortality Following Aortic Valve Replacement in Aortic Stenosis Patients. Pathophysiology 2022, 29, 106–117.

- Ghosh, S.K.; Ponnalagu, R.; Tripathy, R.; Acharya, U.R. Automated detection of heart valve diseases using chirplet transform and multiclass composite classifier with PCG signals. Comput. Biol. Med. 2020, 118, 103632.

- Maknickas, V.; Maknickas, A. Recognition of normal–abnormal phonocardiographic signals using deep convolutional neural networks and mel-frequency spectral coefficients. Physiol. Meas. 2017, 38, 1671.

- Demir, F.; Şengür, A.; Bajaj, V.; Polat, K. Towards the classification of heart sounds based on convolutional deep neural network. Health Inf. Sci. Syst. 2019, 7, 16.

- Mutlu, A.Y. Detection of epileptic dysfunctions in EEG signals using Hilbert vibration decomposition. Biomed. Signal Process. Control 2018, 40, 33–40.

- Netto, A.N.; Abraham, L. Detection and Classification of Cardiovascular Disease from Phonocardiogram using Deep Learning Models. In Proceedings of the 2021 Second International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 4–6 August 2021; pp. 1646–1651.

- Sharan, R.V.; Moir, T.J. Acoustic event recognition using cochleagram image and convolutional neural networks. Appl. Acoust. 2019, 148, 62–66.

- Das, S.; Pal, S.; Mitra, M. Deep learning approach of murmur detection using Cochleagram. Biomed. Signal Process. Control 2022, 77, 103747.

- Moraes, T.; Amorim, P.; Da Silva, J.V.; Pedrini, H. Medical image interpolation based on 3D Lanczos filtering. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2020, 8, 294–300.

- Hu, Q.; Hu, J.; Yu, X.; Liu, Y. Automatic heart sound classification using one dimension deep neural network. In Proceedings of the Security, Privacy, and Anonymity in Computation, Communication, and Storage: SpaCCS 2020 International Workshops, Nanjing, China, 18–20 December 2020; Proceedings 13. Springer: Berlin/Heidelberg, Germany, 2021; pp. 200–208.

- Varghees, V.N.; Ramachandran, K. A novel heart sound activity detection framework for automated heart sound analysis. Biomed. Signal Process. Control 2014, 13, 174–188.

- Nogueira, D.M.; Ferreira, C.A.; Gomes, E.F.; Jorge, A.M. Classifying heart sounds using images of motifs, MFCC and temporal features. J. Med. Syst. 2019, 43, 168.

- Ibarra-Hernández, R.F.; Bertin, N.; Alonso-Arévalo, M.A.; Guillén-Ramírez, H.A. A benchmark of heart sound classification systems based on sparse decompositions. In Proceedings of the 14th International Symposium on Medical Information Processing and Analysis, Mazatlán, Mexico, 24–26 October 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10975, pp. 26–38.

- Khan, K.N.; Khan, F.A.; Abid, A.; Olmez, T.; Dokur, Z.; Khandakar, A.; Chowdhury, M.E.; Khan, M.S. Deep learning based classification of unsegmented phonocardiogram spectrograms leveraging transfer learning. Physiol. Meas. 2021, 42, 095003.

- Yaseen; Son, G.Y.; Kwon, S. Classification of heart sound signal using multiple features. Appl. Sci. 2018, 8, 2344.

- Abbas, Q.; Hussain, A.; Baig, A.R. Automatic detection and classification of cardiovascular disorders using phonocardiogram and convolutional vision transformers. Diagnostics 2022, 12, 3109.

- Arslan, Ö.; Karhan, M. Effect of Hilbert-Huang transform on classification of PCG signals using machine learning. J. King Saud-Univ.-Comput. Inf. Sci. 2022, 34, 9915–9925.

- Adiban, M.; BabaAli, B.; Shehnepoor, S. Statistical feature embedding for heart sound classification. J. Electr. Eng. 2019, 70, 259–272.

- Baghel, N.; Dutta, M.K.; Burget, R. Automatic diagnosis of multiple cardiac diseases from PCG signals using convolutional neural network. Comput. Methods Programs Biomed. 2020, 197, 105750.

- Alkhodari, M.; Fraiwan, L. Convolutional and recurrent neural networks for the detection of valvular heart diseases in phonocardiogram recordings. Comput. Methods Programs Biomed. 2021, 200, 105940.

- Khan, M.U.; Samer, S.; Alshehri, M.D.; Baloch, N.K.; Khan, H.; Hussain, F.; Kim, S.W.; Zikria, Y.B. Artificial neural network-based cardiovascular disease prediction using spectral features. Comput. Electr. Eng. 2022, 101, 108094.

- Jabari, M.; Rezaee, K.; Zakeri, M. Fusing handcrafted and deep features for multi-class cardiac diagnostic decision support model based on heart sound signals. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 2873–2885.

- Supo, E.; Galdos, J.; Rendulich, J.; Sulla, E. PRD as an indicator proposal in the evaluation of ECG signal acquisition prototypes in real patients. In Proceedings of the 2022 IEEE Andescon, Barranquilla, Colombia, 16–19 November 2022; pp. 1–4.

- Sulla, T.R.; Talavera, S.J.; Supo, C.E.; Montoya, A.A. Non-invasive glucose monitor based on electric bioimpedance using AFE4300. In Proceedings of the 2019 IEEE XXVI International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Lima, Peru, 12–14 August 2019; pp. 1–3.

- Talavera, J.R.; Mendoza, E.A.S.; Dávila, N.M.; Supo, E. Implementation of a real-time 60 Hz interference cancellation algorithm for ECG signals based on ARM cortex M4 and ADS1298. In Proceedings of the 2017 IEEE XXIV International Conference on Electronics, Electrical Engineering and Computing (INTERCON), Cusco, Peru, 15–18 August 2017; pp. 1–4.

- Huisa, C.M.; Elvis Supo, C.; Edward Figueroa, T.; Rendulich, J.; Sulla-Espinoza, E. PCG Heart Sounds Quality Classification Using Neural Networks and SMOTE Tomek Links for the Think Health Project. In Data Analytics and Management: Proceedings of ICDAM 2022; Springer: Berlin/Heidelberg, Germany, 2023; pp. 803–811.

- Arslan, Ö. Automated detection of heart valve disorders with time-frequency and deep features on PCG signals. Biomed. Signal Process. Control 2022, 78, 103929.

- Ismail, S.; Ismail, B.; Siddiqi, I.; Akram, U. PCG classification through spectrogram using transfer learning. Biomed. Signal Process. Control 2023, 79, 104075.

- Leo, J.; Loong, C.; Subari, K.S.; Abdullah, N.M.K.; Ahmad, N.; Besar, R. Comparison of MFCC and Cepstral Coefficients as a Feature Set for PCG Biometric Systems. World Acad. Sci. Eng. Technol. Int. J. Med. Health Biomed. Bioeng. Pharm. Eng. 2010, 4, 335–339.

- Bituin, R.C.; Antonio, R.B. Ensemble Model of Lanczos and Bicubic Interpolation with Neural Network and Resampling for Image Enhancement. In Proceedings of the International Conferences on Software Engineering and Information Management, Suva, Fiji, 23–25 January 2024.

- Triwijoyo, B.; Adil, A. Analysis of Medical Image Resizing Using Bicubic Interpolation Algorithm. J. Ilmu Komput. 2021, 14, 20–29.

- Bentbib, A.; El Guide, M.; Jbilou, K.; Reichel, L. A global Lanczos method for image restoration. J. Comput. Appl. Math. 2016, 300, 233–244.

- Qiao, Q.; Yunusa-Kaltungo, A.; Edwards, R.E. Developing a machine learning based building energy consumption prediction approach using limited data: Boruta feature selection and empirical mode decomposition. Energy Rep. 2023, 9, 3643–3660.

- Kumar, S.S.; Shaikh, T. Empirical evaluation of the performance of feature selection approaches on random forest. In Proceedings of the 2017 International Conference on Computer and Applications (ICCA), Doha, Qatar, 6–7 September 2017; pp. 227–231.

- Kursa, M.B.; Rudnicki, W.R. Feature selection with the Boruta package. J. Stat. Softw. 2010, 36, 1–13.

- Parvandeh, S.; Yeh, H.W.; Paulus, M.P.; McKinney, B.A. Consensus features nested cross-validation. Bioinformatics 2020, 36, 3093–3098.

- Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man, Cybern. 1991, 21, 660–674.

- Sun, S.; Huang, R. An adaptive k-nearest neighbor algorithm. In Proceedings of the 2010 Seventh International Conference on Fuzzy Systems and Knowledge Discovery, Yantai, China, 10–12 August 2010; Volume 1, pp. 91–94.

- Biau, G.; Scornet, E. A random forest guided tour. Test 2016, 25, 197–227.

- Lau, K.; Wu, Q. Online training of support vector classifier. Pattern Recognit. 2003, 36, 1913–1920.

| Authors | Database | Classes | Feature Extraction | Classifier | Accuracy |
|---|---|---|---|---|---|
| Yaseen et al. [18] | Yaseen Database | Five | MFCCs + DWT | SVM | 97.9% |
| O. Arslan [10] | Yaseen Database | Five | Features-based ML-ELM + RFE feature selection | Random forest | 98.9% |
| Q. Abbas et al. [19] | Yaseen Database | Five | CWT + spectrogram | CVT + ATTF | 99% |
| S. Das et al. [7] | PhysioNet/CinC Database | Two | Cochleagram | DNN | 98.3% |
| S.K. Ghosh et al. [3] | Yaseen Database | Five | LEN and LENT-based features using CT | Multiclass composite | 99.4% |

| Valvular Heart Disease | Number of .wav Files | Sampling Frequency (Hz) |
|---|---|---|
| Aortic Stenosis (AS) | 200 | 8000 |
| Mitral Regurgitation (MR) | 200 | 8000 |
| Mitral Stenosis (MS) | 200 | 8000 |
| Mitral Valve Prolapse (MVP) | 200 | 8000 |
| Normal (N) | 200 | 8000 |

| Signal | MSE | PRD (%) |
|---|---|---|
| Aortic Stenosis | 0.000282 | 5.75 |
| Mitral Stenosis | 0.000246 | 10.03 |
| Mitral Regurgitation | 0.000199 | 9.50 |
| Mitral Valve Prolapse | 0.000186 | 9.55 |
| Normal | 0.000218 | 7.76 |
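The two preprocessing quality measures in the table above, mean squared error (MSE) and percentage root-mean-square difference (PRD), can be sketched in a few lines. The PRD form used here, without mean subtraction, is one common variant and is an assumption: the paper's exact definition is not reproduced in this section, and the short `original` and `processed` sequences are made-up values, not PCG data.

```python
import math

def mse(x, y):
    """Mean squared error between a reference signal x and a processed signal y."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)

def prd(x, y):
    """Percentage root-mean-square difference (non-mean-subtracted variant):
    100 * sqrt( sum((x - y)^2) / sum(x^2) )."""
    num = sum((a - b) ** 2 for a, b in zip(x, y))
    den = sum(a ** 2 for a in x)
    return 100.0 * math.sqrt(num / den)

# Illustrative values only (assumption): a short reference and its processed version.
original = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
processed = [0.0, 0.45, 0.95, 0.5, 0.05, -0.5, -0.9, -0.55]

print(f"MSE = {mse(original, processed):.6f}")
print(f"PRD = {prd(original, processed):.2f} %")
```

MSE depends on the absolute scale of the signal, while PRD normalizes the error by the signal energy, which is why the two columns do not have to rank the classes in the same order.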

| Signal | Spect MSE | Spect PSNR | Mel Spect MSE | Mel Spect PSNR | Coch MSE | Coch PSNR | Coch-B MSE | Coch-B PSNR | Coch-L MSE | Coch-L PSNR |
|---|---|---|---|---|---|---|---|---|---|---|
| AS | 0.0012 | 49.50 | 0.0010 | 49.70 | 0.0009 | 49.80 | 0.0008 | 50.10 | 0.0007 | 50.20 |
| MS | 0.0011 | 49.60 | 0.0010 | 49.80 | 0.0009 | 49.90 | 0.0007 | 50.30 | 0.0006 | 50.40 |
| MR | 0.0008 | 49.90 | 0.0007 | 50.00 | 0.0006 | 50.10 | 0.0005 | 50.50 | 0.0004 | 50.60 |
| MVP | 0.0009 | 49.80 | 0.0008 | 49.90 | 0.0007 | 50.00 | 0.0006 | 50.40 | 0.0005 | 50.50 |
| N | 0.0007 | 50.00 | 0.0006 | 50.10 | 0.0005 | 50.20 | 0.0004 | 50.60 | 0.0004 | 50.50 |
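For readers unfamiliar with the image quality measures in the table above, the textbook relation between MSE and peak signal-to-noise ratio (PSNR) is sketched below. The peak value and image scaling used by the authors are not stated in this section, so the `peak` parameter and the tiny 2x2 "images" are assumptions, and the sketch is not expected to reproduce the tabulated numbers.

```python
import math

def image_mse(a, b):
    """MSE between two equally sized images given as nested lists of pixel values."""
    diffs = [(pa - pb) ** 2 for row_a, row_b in zip(a, b)
             for pa, pb in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def psnr(mse_value, peak=1.0):
    """Textbook PSNR in dB: 10 * log10(peak^2 / MSE); infinite for identical images."""
    if mse_value == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse_value)

# Illustrative 2x2 images with pixel values in [0, 1] (assumption).
reference = [[0.0, 0.5], [1.0, 0.25]]
resized = [[0.0, 0.4], [0.9, 0.25]]

m = image_mse(reference, resized)
print(f"MSE = {m:.4f}, PSNR = {psnr(m):.2f} dB")
```

Under this definition a lower MSE always gives a higher PSNR for a fixed peak value, which matches the direction of the trend in the table.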

| TFRs | Resize Technique | Confirmed | Tentative | Rejected |
|---|---|---|---|---|
| Spectrogram | - | 1028 | 272 | 2796 |
| Spectrogram | Bicubic | 1031 | 154 | 2911 |
| Spectrogram | Lanczos | 959 | 198 | 2939 |
| Mel-spectrogram | - | 1007 | 394 | 2695 |
| Mel-spectrogram | Bicubic | 936 | 337 | 2823 |
| Mel-spectrogram | Lanczos | 980 | 300 | 2816 |
| Cochleagram | - | 1130 | 400 | 2566 |
| Cochleagram | Bicubic | 1142 | 398 | 2556 |
| Cochleagram | Lanczos | 1124 | 410 | 2562 |

| Methods/Algorithm | Performances (%) for Confirmed Features | | | | |
|---|---|---|---|---|---|
| Spec/DT | 86.36 | 86.30 | 86.31 | 82.88 | 86.20 |
| Spec/KNN | 96.71 | 96.70 | 96.70 | 95.87 | 96.70 |
| Spec/RF | 94.95 | 94.90 | 94.88 | 93.64 | 94.90 |
| Spec/SVM | 97.51 | 97.50 | 97.49 | 96.88 | 97.50 |
| Mel/DT | 91.50 | 91.50 | 91.47 | 89.39 | 91.50 |
| Mel/KNN | 97.95 | 97.90 | 97.89 | 97.39 | 97.90 |
| Mel/RF | 95.55 | 95.55 | 95.47 | 94.40 | 95.50 |
| Mel/SVM | 98.90 | 98.90 | 98.89 | 98.62 | 98.90 |
| Coch/DT | 91.04 | 91.00 | 91.01 | 88.75 | 91.00 |
| Coch/KNN | 98.61 | 98.60 | 98.59 | 98.25 | 98.60 |
| Coch/RF | 97.52 | 97.50 | 97.49 | 96.88 | 97.50 |
| Coch/SVM | 99.20 | 99.20 | 99.19 | 99.00 | 99.20 |

| Methods/Algorithm | Performances (%) for Confirmed Features | | | | |
|---|---|---|---|---|---|
| Spec + Bic/DT | 91.59 | 91.60 | 91.59 | 89.50 | 91.60 |
| Spec + Bic/KNN | 98.90 | 98.90 | 98.89 | 98.62 | 98.90 |
| Spec + Bic/RF | 97.80 | 97.80 | 97.79 | 97.25 | 97.80 |
| Spec + Bic/SVM | 99.00 | 99.00 | 99.00 | 98.75 | 99.00 |
| Mel + Bic/DT | 93.83 | 93.70 | 93.72 | 92.14 | 93.70 |
| Mel + Bic/KNN | 99.30 | 99.30 | 99.29 | 99.12 | 99.30 |
| Mel + Bic/RF | 97.39 | 97.40 | 97.39 | 96.75 | 97.40 |
| Mel + Bic/SVM | 99.40 | 99.40 | 99.39 | 99.25 | 99.40 |
| Coch + Bic/DT | 91.81 | 91.80 | 91.80 | 89.75 | 91.80 |
| Coch + Bic/KNN | 98.51 | 98.50 | 98.49 | 98.13 | 98.50 |
| Coch + Bic/RF | 97.40 | 97.40 | 97.39 | 96.75 | 97.40 |
| Coch + Bic/SVM | 99.10 | 99.10 | 99.09 | 99.00 | 99.10 |

| Methods/Algorithm | Performances (%) for Confirmed Features | | | | |
|---|---|---|---|---|---|
| Spec + Lz/DT | 85.55 | 85.60 | 85.56 | 82.00 | 85.60 |
| Spec + Lz/KNN | 96.80 | 96.80 | 96.79 | 96.00 | 96.80 |
| Spec + Lz/RF | 95.09 | 95.10 | 95.06 | 93.88 | 95.10 |
| Spec + Lz/SVM | 98.31 | 98.30 | 98.29 | 97.87 | 98.30 |
| Mel + Lz/DT | 88.98 | 88.91 | 88.90 | 86.13 | 88.90 |
| Mel + Lz/KNN | 97.40 | 97.40 | 97.41 | 96.75 | 97.40 |
| Mel + Lz/RF | 95.48 | 95.50 | 95.48 | 94.37 | 95.50 |
| Mel + Lz/SVM | 98.20 | 98.20 | 98.19 | 97.75 | 98.20 |
| Coch + Lz/DT | 90.77 | 90.60 | 90.64 | 88.27 | 90.60 |
| Coch + Lz/KNN | 98.52 | 98.50 | 98.49 | 98.13 | 98.50 |
| Coch + Lz/RF | 97.31 | 97.30 | 97.29 | 96.63 | 97.30 |
| Coch + Lz/SVM | 99.20 | 99.20 | 99.19 | 99.00 | 99.20 |
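Because the abbreviations list includes the Matthews correlation coefficient (MCC), a short sketch of its multiclass form may help when reading the performance tables. The function name `multiclass_mcc` and the confusion matrices below are illustrative assumptions, not the authors' results. The balanced example mirrors the dataset layout (five classes, 200 recordings each) only for convenience.

```python
import math

def multiclass_mcc(cm):
    """Multiclass MCC from a confusion matrix.
    cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    s = sum(sum(row) for row in cm)                      # total samples
    c = sum(cm[i][i] for i in range(n))                  # correctly classified
    t = [sum(cm[i]) for i in range(n)]                   # true count per class
    p = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted count per class
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p))
                    * (s * s - sum(tk * tk for tk in t)))
    return num / den if den else 0.0

# Perfect classifier on 5 classes x 200 samples (illustrative).
perfect = [[200 if i == j else 0 for j in range(5)] for i in range(5)]

# 96% accuracy with errors spread evenly over the other four classes (illustrative).
balanced = [[192 if i == j else 2 for j in range(5)] for i in range(5)]

print(multiclass_mcc(perfect))   # 1.0
print(multiclass_mcc(balanced))  # 0.95
```

In the balanced case with evenly spread errors, MCC works out to (accuracy - 1/K) / (1 - 1/K) for K classes, so it sits slightly below accuracy and penalizes chance-level agreement.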

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chambi, E.M.; Cuela, J.; Zegarra, M.; Sulla, E.; Rendulich, J. Benchmarking Time-Frequency Representations of Phonocardiogram Signals for Classification of Valvular Heart Diseases Using Deep Features and Machine Learning. Electronics 2024, 13, 2912. https://doi.org/10.3390/electronics13152912
