PerFication: A Person Identifying Technique by Evaluating Gait with 2D LiDAR Data

Abstract

1. Introduction

2. Related Works

2.1. Recognition of Individuals through Facial Features

2.2. Gait-Based Person Recognition

2.3. Person Recognition Using LiDAR Data

3. Proposed Method

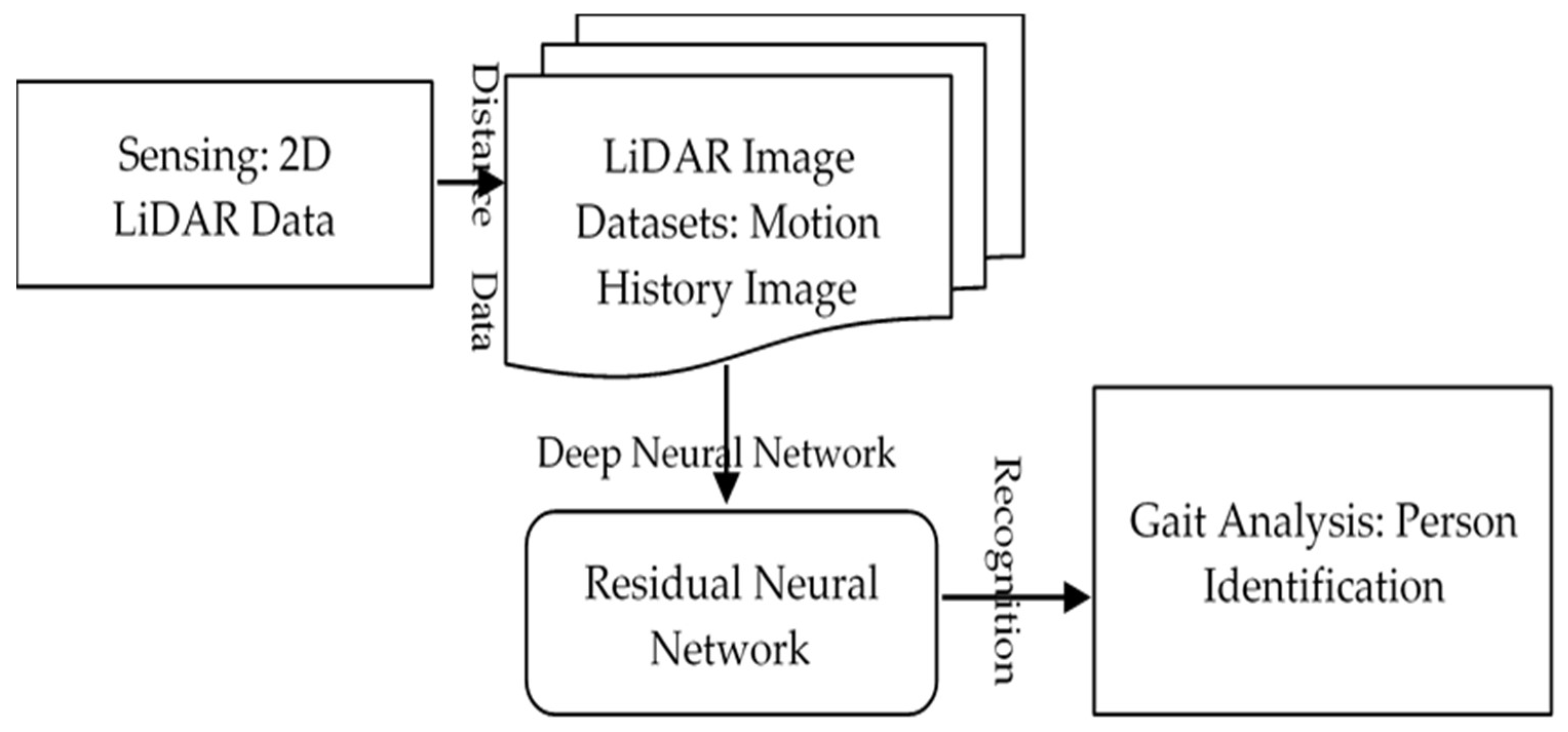

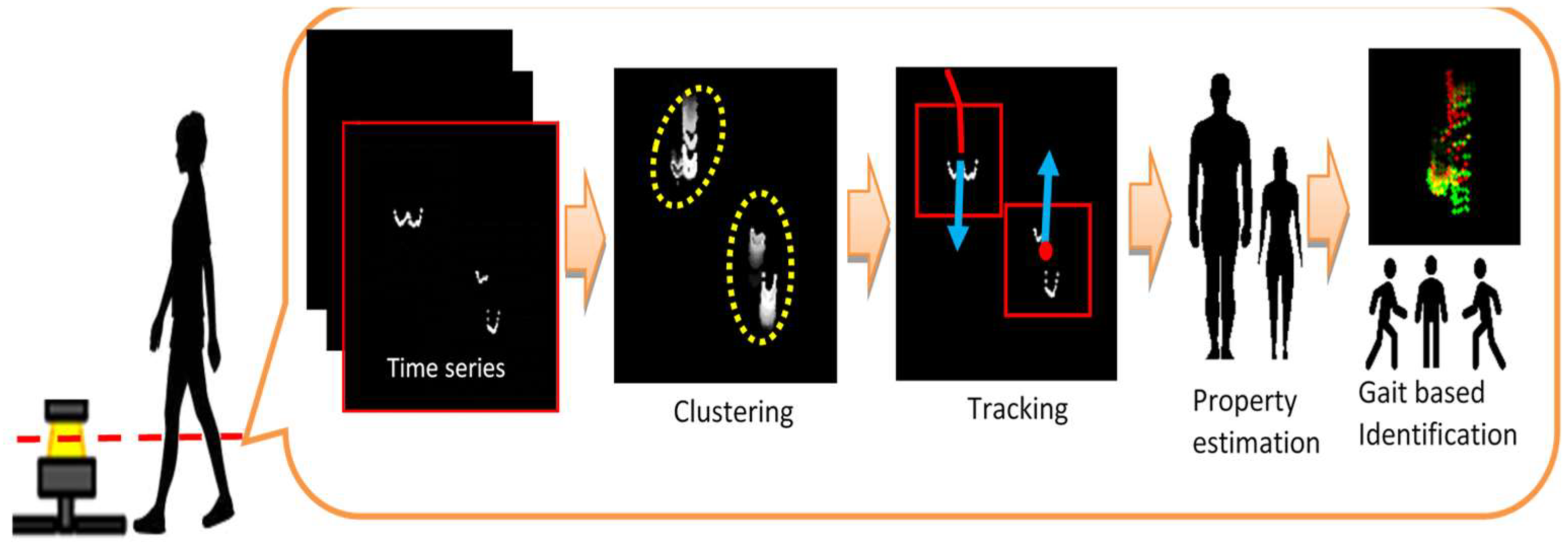

3.1. Overview of the Integrated System

3.2. Person Identification Based on Gait Data

3.2.1. Experimental Configuration

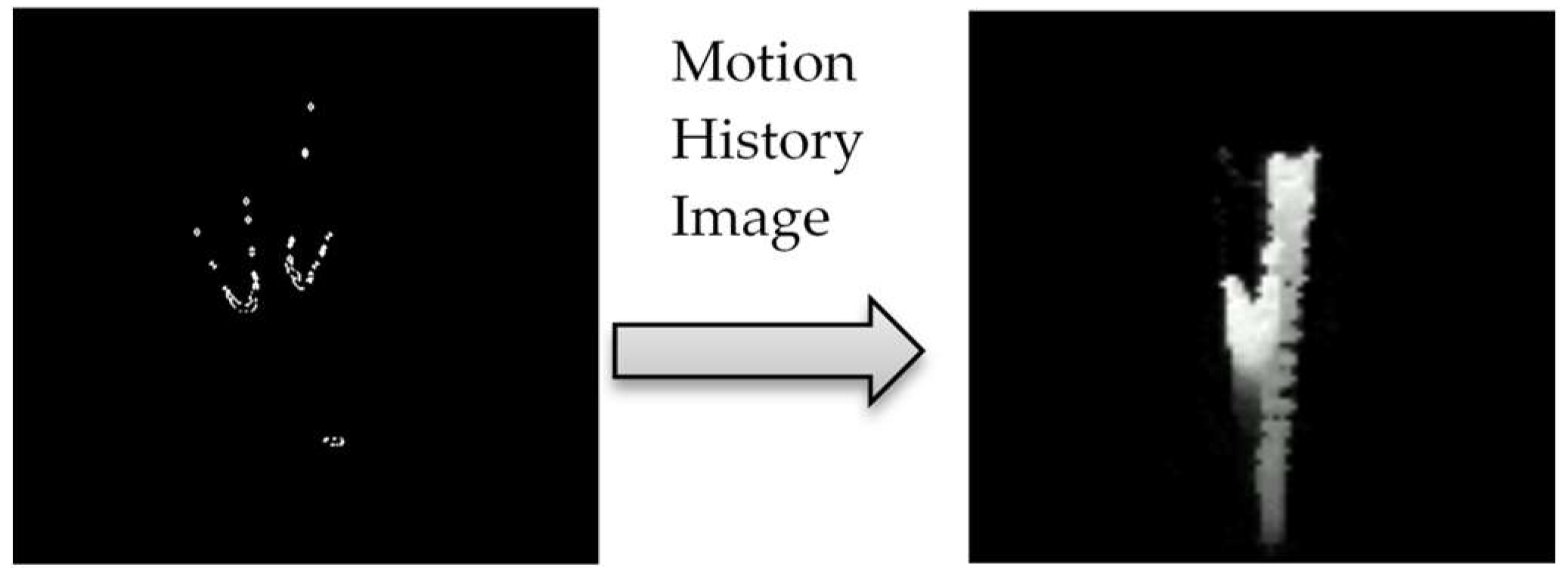

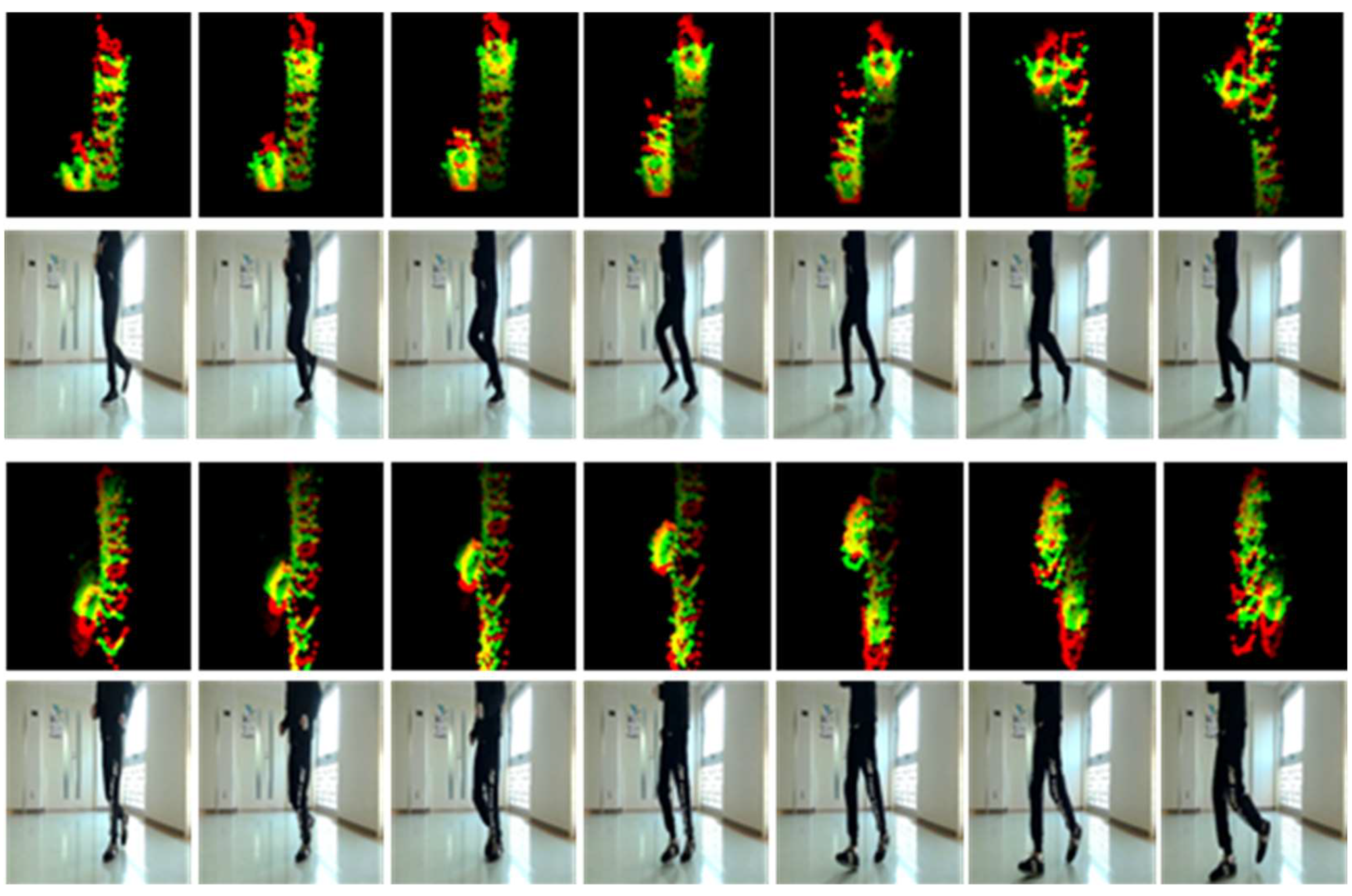

3.2.2. Preparing the Dataset

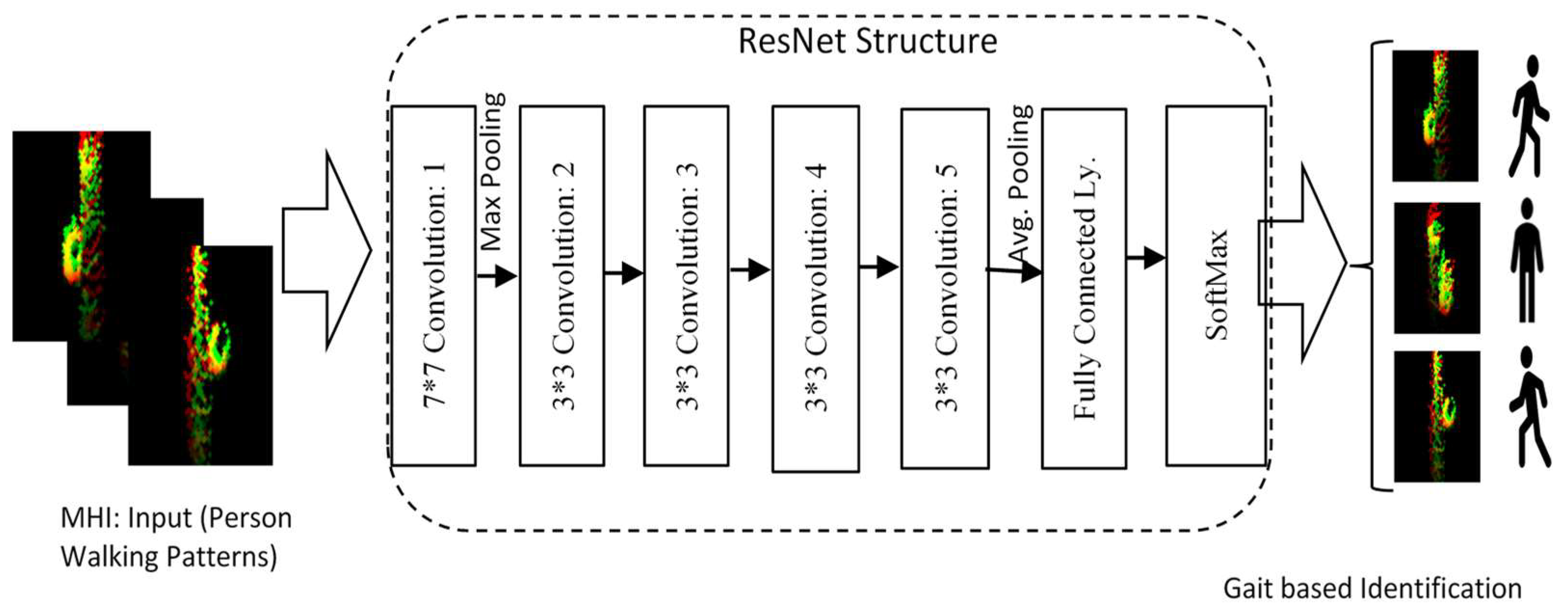

3.2.3. Identification with ResNet

4. Discussion and Technical Experiments

4.1. Identification of Individuals Based on Gait

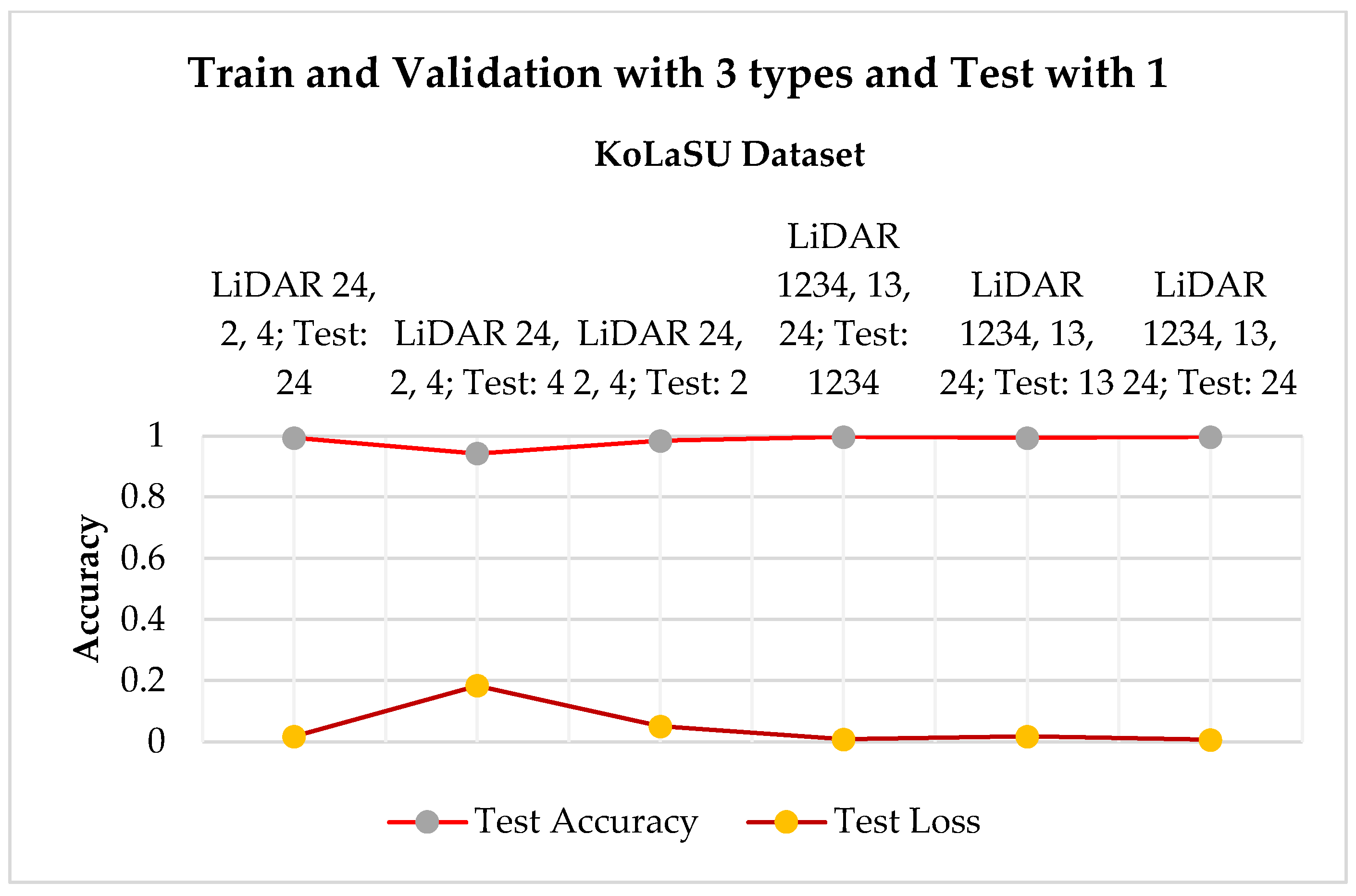

4.2. Comparison across Various Data Types

4.3. Comparison with Other Modern Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Data | Training Accuracy | Training F1 Score | Testing Accuracy | Testing F1 Score | Validation Accuracy | Validation F1 Score |
|---|---|---|---|---|---|---|
| KoLaSU LiDAR 1 | 0.9942 | 0.9937 | 0.9843 | 0.9838 | 0.9851 | 0.9846 |
| KoLaSU LiDAR 2 | 0.9932 | 0.9927 | 0.9846 | 0.9841 | 0.9850 | 0.9845 |
| KoLaSU LiDAR 3 | 0.9772 | 0.9767 | 0.9336 | 0.9331 | 0.9348 | 0.9343 |
| KoLaSU LiDAR 4 | 0.9804 | 0.9799 | 0.9479 | 0.9474 | 0.9471 | 0.9466 |
| KoLaSU LiDAR 13 | 0.9982 | 0.9977 | 0.9962 | 0.9957 | 0.9960 | 0.9955 |
| KoLaSU LiDAR 24 | 0.9983 | 0.9978 | 0.9962 | 0.9957 | 0.9961 | 0.9956 |
| KoLaSU LiDAR 12 | 0.9972 | 0.9967 | 0.9934 | 0.9929 | 0.9931 | 0.9926 |
| KoLaSU LiDAR 34 | 0.9982 | 0.9977 | 0.9934 | 0.9929 | 0.9942 | 0.9937 |
| KoLaSU LiDAR 1234 | 0.9987 | 0.9982 | 0.9966 | 0.9961 | 0.9971 | 0.9966 |

Accuracy and F1 score are reported as fractions of 1, not as percentages.
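The comparison across data types in the table above can be summarized with a few lines of arithmetic. The following is a minimal sketch, not part of the original study: it copies the testing accuracy column by hand and assumes that each digit in a configuration name (for example "13") stands for one LiDAR sensor whose data were combined. That reading of the names, and the helper `sensor_count`, are assumptions made for illustration only.

```python
# Testing accuracy copied from the table above (fractions of 1).
test_accuracy = {
    "KoLaSU LiDAR 1": 0.9843,
    "KoLaSU LiDAR 2": 0.9846,
    "KoLaSU LiDAR 3": 0.9336,
    "KoLaSU LiDAR 4": 0.9479,
    "KoLaSU LiDAR 13": 0.9962,
    "KoLaSU LiDAR 24": 0.9962,
    "KoLaSU LiDAR 12": 0.9934,
    "KoLaSU LiDAR 34": 0.9934,
    "KoLaSU LiDAR 1234": 0.9966,
}


def sensor_count(name: str) -> int:
    """Count the digits in the name suffix (assumption: one digit per sensor)."""
    return len(name.rsplit(" ", 1)[-1])


# Split the configurations into single-sensor and combined-sensor groups.
single = [acc for name, acc in test_accuracy.items() if sensor_count(name) == 1]
multi = [acc for name, acc in test_accuracy.items() if sensor_count(name) > 1]

mean_single = sum(single) / len(single)
mean_multi = sum(multi) / len(multi)
best_config = max(test_accuracy, key=test_accuracy.get)

print(f"mean testing accuracy, single sensor:    {mean_single:.4f}")
print(f"mean testing accuracy, combined sensors: {mean_multi:.4f}")
print(f"best configuration: {best_config}")
```

Under that assumption, the combined configurations average a higher testing accuracy than the single-sensor ones, and the four-sensor configuration scores highest.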

| Data | KoLaSU LiDAR 1234 TestCross24 | KoLaSU LiDAR 1234 TestCross24 |
|---|---|---|
| Experiment Type | 26 Individual Persons (60%, 20%, and 20%) | 26 Individual Persons (60%, 20%, and 20%) |
| Batch Size | 38 | 38 |
| Epochs | 25 | 40 |
| GPU | Yes | Yes |
| Model | ResNet18 | ResNet50_2 |
| Train Accuracy | 0.99864 | 0.99999 |
| Train Loss | 0.00589 | 0.000354 |
| Test Accuracy | 0.379 | 0.4007 |
| Test Loss | 4.2741 | 3.5852 |
| Validation Accuracy | 0.99721 | 0.99956 |
| Validation Loss | 0.00589 | 0.001578 |

Accuracy values are fractions of 1; loss values are unitless.
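The gap between validation and test performance in the TestCross24 table above is easier to see as a single number per model. The sketch below is an illustration rather than the authors' evaluation code: it copies the accuracy values from the table and subtracts test accuracy from validation accuracy. The dictionary layout and the function name `generalization_gap` are hypothetical.

```python
# Accuracy values copied from the TestCross24 table above (fractions of 1).
results = {
    "ResNet18": {"train": 0.99864, "validation": 0.99721, "test": 0.379},
    "ResNet50_2": {"train": 0.99999, "validation": 0.99956, "test": 0.4007},
}


def generalization_gap(scores: dict) -> float:
    """Return validation accuracy minus test accuracy for one model."""
    return scores["validation"] - scores["test"]


gaps = {model: generalization_gap(scores) for model, scores in results.items()}

for model, gap in gaps.items():
    print(f"{model}: validation - test accuracy = {gap:.5f}")
```

Both models lose roughly 0.6 in accuracy between the validation and test sets, and the deeper model narrows the gap only slightly.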

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hasan, M.; Uddin, M.K.; Suzuki, R.; Kuno, Y.; Kobayashi, Y. PerFication: A Person Identifying Technique by Evaluating Gait with 2D LiDAR Data. Electronics 2024, 13, 3137. https://doi.org/10.3390/electronics13163137
