DNN Adaptive Partitioning Strategy for Heterogeneous Online Inspection Systems of Substations

Abstract

1. Introduction

- (1) Edge devices have limited computing power and cannot complete complex computing tasks in time, which increases latency and delays data processing and analysis. To address this, we constructed layer-wise prediction models for task computation latency and energy consumption. Model training considered features such as drone power, network status, and task priority. The models maintain high prediction accuracy while reducing model size and prediction time, which improves the real-time performance of data processing.

- (2) Existing partitioning methods give insufficient consideration to nonlinear features, which leads to unreasonable model partitioning. To address this, the partitioning method is selected based on the resource utilization rate and communication status of each edge node in the substation, together with the task delay and energy consumption weights. Then, based on the predicted delay and energy consumption, the optimal partition points of the DNN are inferred, and the DNN is adaptively partitioned across terminal devices and multiple edge nodes for collaborative processing. This makes full use of the computing power of heterogeneous nodes and accelerates task processing.

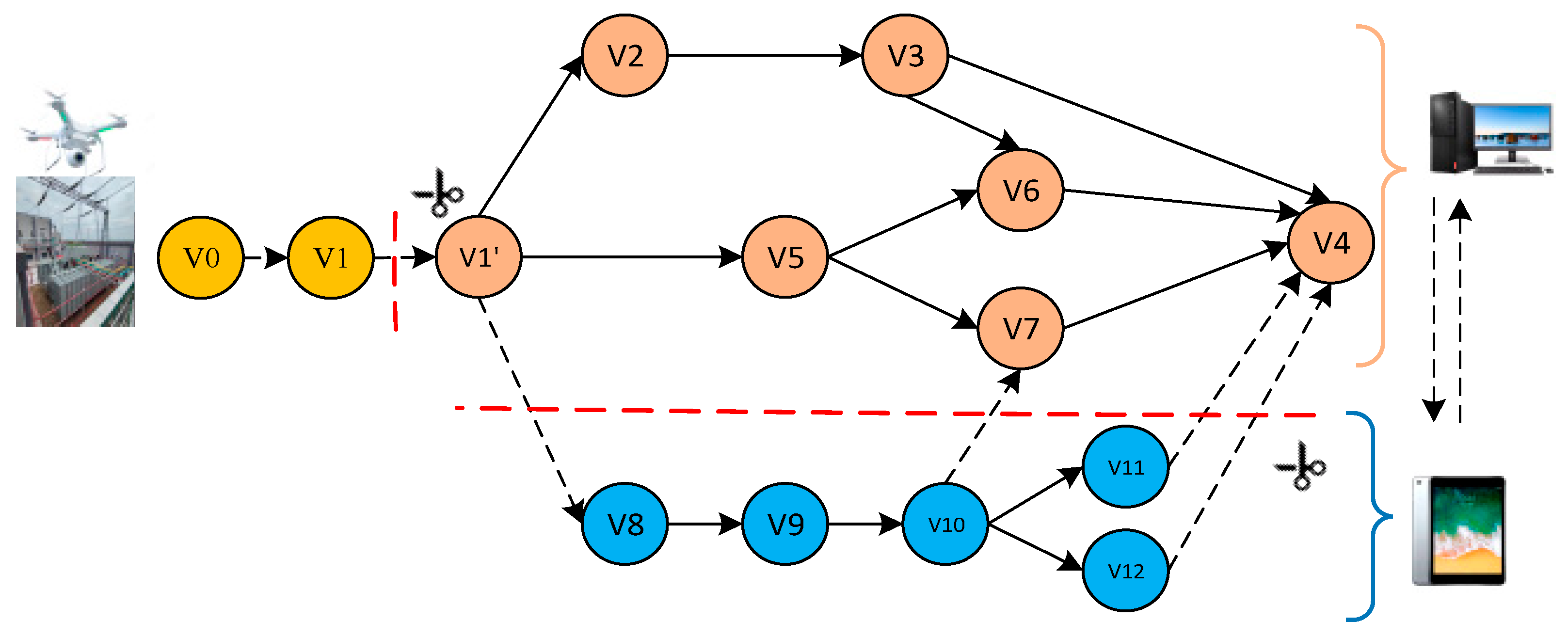

2. DNN Adaptive Hybrid Partitioning Architecture for a Heterogeneous System of Unmanned Aerial Vehicle Inspection in Substations

3. Prediction Stage

- (1) Calculate computation latency and energy consumption: This task predicts the computation latency and energy consumption of DNN layers on heterogeneous nodes. This article considers four types of DNN layers: the activation layer (ACT), convolutional layer (CONV), pooling layer (POOL), and fully connected layer (FC). The analysis covers computation latency, energy consumption, DNN layer type, heterogeneous node computing capability, etc.

- (2) Analyze data transmission latency and energy consumption: This task predicts the data transmission latency and energy consumption of neural network tasks allocated across heterogeneous nodes. This article considers the input and output data sizes of the different types of DNN layers, the network transmission rate, and the allocation scheme for synchronous collaborative processing, and additionally calculates the data exchange latency and energy consumption between heterogeneous nodes.

- (3) Train the inference delay and energy consumption prediction models: This task integrates the DNN computation delay and energy consumption with the data transmission delay and energy consumption, and constructs a neural-network prediction model that can better learn nonlinear features related to delay and energy consumption, such as task queuing, network status, data transmission distance, drone propulsion energy consumption, and task priority.
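
The three prediction tasks above can be illustrated with a minimal Python sketch. It is not the article's trained predictor: it only shows how first-order compute and transmission latencies could be derived and combined with nonlinear context features into an input vector for such a model. The names `LayerProfile`, `compute_latency`, `transmission_latency`, and `feature_vector`, and the choice of features, are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class LayerProfile:
    """Hypothetical per-layer profile; field names are illustrative."""
    kind: str           # one of "ACT", "CONV", "POOL", "FC"
    flops: float        # operations needed by the layer (assumed known)
    output_bits: float  # size of the layer's output in bits


def compute_latency(layer: LayerProfile, node_flops_per_s: float) -> float:
    """First-order estimate: work divided by the node's throughput."""
    return layer.flops / node_flops_per_s


def transmission_latency(data_bits: float, rate_bps: float) -> float:
    """First-order estimate: payload size divided by the link rate."""
    return data_bits / rate_bps


def feature_vector(layer: LayerProfile, node_flops_per_s: float,
                   rate_bps: float, queue_len: int, priority: int) -> list:
    """Assemble inputs that a learned predictor could consume."""
    return [
        compute_latency(layer, node_flops_per_s),
        transmission_latency(layer.output_bits, rate_bps),
        float(queue_len),   # task queuing state
        float(priority),    # task priority
    ]
```

A learned model would take such vectors as input and output the predicted delay and energy, so that effects the linear estimates miss (queuing, link variation) can be captured from measurements.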

3.1. Modeling Inference Delay

3.2. Modeling Inference Energy Consumption

- (1) Local computation energy consumption of the drone: when the i-th DNN vertex is assigned to the drone for execution (i.e., $S_i = 1$), the energy the drone requires to execute all layers in that vertex is defined as follows:

- (2) Drone data transmission energy consumption: when the computation after the i-th vertex is offloaded to the local server, energy is consumed to transmit the task data to the specified node. The signal power gain from the drone to the local server follows a free-space path loss model [30].

- (3)
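
Item (2) above refers to a free-space path loss model for the drone-to-server link. The following Python sketch shows one common way such a model is turned into a transmission energy estimate. The specific forms used here (gain inversely proportional to squared distance, a Shannon-type rate, and energy as transmit power times transmission time) are standard textbook assumptions, not equations recovered from the article, and all function and parameter names are hypothetical.

```python
import math


def free_space_gain(beta0: float, distance_m: float) -> float:
    """Channel power gain under free-space path loss (assumed form).

    beta0 is the gain at a reference distance of 1 m.
    """
    return beta0 / distance_m ** 2


def uplink_rate(bandwidth_hz: float, tx_power_w: float,
                gain: float, noise_power_w: float) -> float:
    """Achievable rate in bit/s from the Shannon capacity formula."""
    return bandwidth_hz * math.log2(1.0 + tx_power_w * gain / noise_power_w)


def transmission_energy(data_bits: float, tx_power_w: float,
                        rate_bps: float) -> float:
    """Energy in joules: transmit power times transmission time."""
    return tx_power_w * data_bits / rate_bps
```

Under these assumptions, doubling the drone-to-server distance reduces the gain by a factor of four, which lowers the rate and raises the energy needed to send the same intermediate data.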

3.3. Problem Description

4. Adaptive Partitioning Stage

- Analyze task delay and energy consumption weights: This task determines the task inference delay weight and energy consumption weight for the different terminal devices in the substation, such as drones, surveillance cameras, and inspection robots, in their different states. Because cameras and similar devices are not power-constrained, the energy consumption weight for their task processing is relatively low. Devices such as drones and inspection robots are readily constrained by battery power and equipment temperature, so setting the task inference delay weight and energy consumption weight carefully is particularly important for them.

- Determine the partitioning method and collaborative edge nodes: This task determines the DNN partitioning method and the collaborative inference nodes by monitoring the resource utilization of, and the communication status between, heterogeneous edge nodes.

- Search for partition points: Based on the outputs of the DNN inference delay prediction model and inference energy consumption prediction model, search for the optimal partition point on the heterogeneous substation inspection computing platform so as to minimize the weighted sum of the DNN's end-to-end inference delay and inference energy consumption. The DNN computation is then adaptively divided, in sequence, across terminal devices and heterogeneous nodes for collaborative processing.
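
The first two steps above (weight setting and collaborator selection) can be sketched in Python as follows. This is only an illustration: the utilization threshold, the ranking by link rate, and the numeric weight values are hypothetical choices, not values taken from the article.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    """Hypothetical monitoring record for one edge node."""
    name: str
    utilization: float  # fraction of compute in use, 0.0 to 1.0
    rate_bps: float     # measured link rate from the terminal device


def select_collaborators(nodes, max_utilization=0.8, k=2):
    """Keep nodes below the utilization threshold, fastest links first."""
    eligible = [n for n in nodes if n.utilization <= max_utilization]
    eligible.sort(key=lambda n: n.rate_bps, reverse=True)
    return eligible[:k]


def delay_energy_weights(battery_powered: bool):
    """Return (delay weight, energy weight); the values are illustrative.

    Mains-powered devices such as cameras get a low energy weight;
    battery-powered devices such as drones get a higher one.
    """
    return (0.5, 0.5) if battery_powered else (0.9, 0.1)
```

For example, with three monitored nodes of which one is overloaded, `select_collaborators` would return the two remaining nodes ordered by link rate, and `delay_energy_weights(True)` would give a drone a larger energy weight than a camera.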

A Hybrid Partition Strategy Based on DAG-Type DNN

5. Collaborative Reasoning Phase

| Algorithm 1: Collaborative Reasoning Framework (Adaptive DAG DNN Computing Partitioning Algorithm) |

| Input: Current Network Status |

| Output: |

| Set the end-to-end latency as = 0 |

| Set the first partition point as = 0 |

| = +∞ |

| for i = 0:N do |

| for j = 1:n do |

| Set = 0 |

| Set = 0 |

| Set = 0 |

| = + (i,j) |

| = + (i,j) |

| end |

| = + + |

| = α + β |

| for I = i:N do |

| for J = j + 1:n do |

| = + (i,j) |

| end |

| = + |

| = α |

| = + |

| if < then |

| = |

| = i |

| end |
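
Because the symbols of Algorithm 1 did not survive extraction, the following Python sketch does not reproduce it. It shows a simplified version of the same idea under stated assumptions: a chain-structured DNN, a single cut between one terminal device and one edge node, and a cost equal to α times end-to-end latency plus β times device-side energy. The function name and all argument names are hypothetical; the per-layer values would come from the prediction models.

```python
def find_partition_point(dev_lat, dev_en, edge_lat, tx_lat, tx_en,
                         alpha, beta):
    """Exhaustively search the cut that minimizes the weighted cost.

    Layers 0..p-1 run on the device and layers p..n-1 on the edge node.
    dev_lat[k], dev_en[k]: predicted device latency/energy of layer k.
    edge_lat[k]: predicted edge latency of layer k.
    tx_lat[p], tx_en[p]: latency/energy of sending the data that
    crosses cut p (p = 0 sends the raw input, p = n sends nothing).
    """
    n = len(dev_lat)
    best_cost, best_p = float("inf"), 0
    for p in range(n + 1):
        latency = sum(dev_lat[:p]) + tx_lat[p] + sum(edge_lat[p:])
        energy = sum(dev_en[:p]) + tx_en[p]
        cost = alpha * latency + beta * energy
        if cost < best_cost:
            best_cost, best_p = cost, p
    return best_p, best_cost
```

A DAG-type DNN with several edge nodes, as the article targets, would need the search extended over vertices and node assignments; the loop structure of tracking a running minimum cost and its partition point stays the same.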

6. Experimental and Simulation Verification

6.1. Experimental Platform and Simulation Parameters

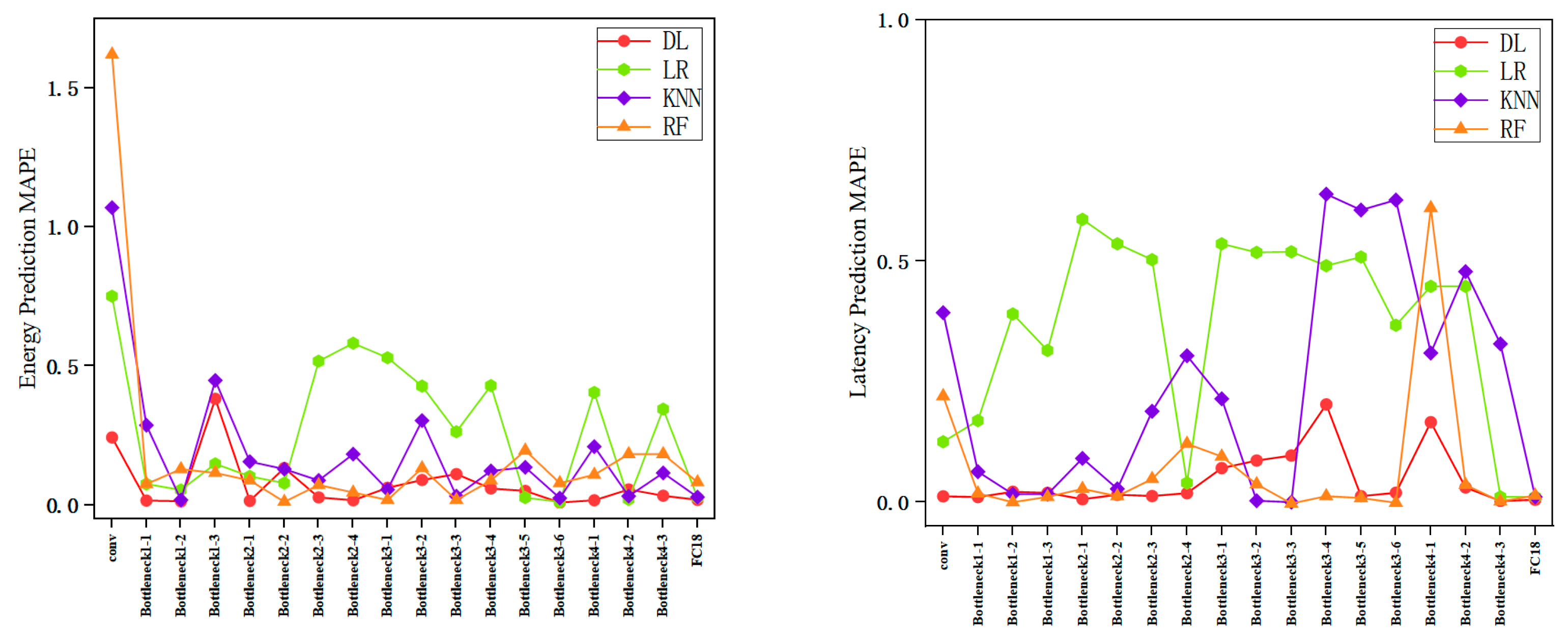

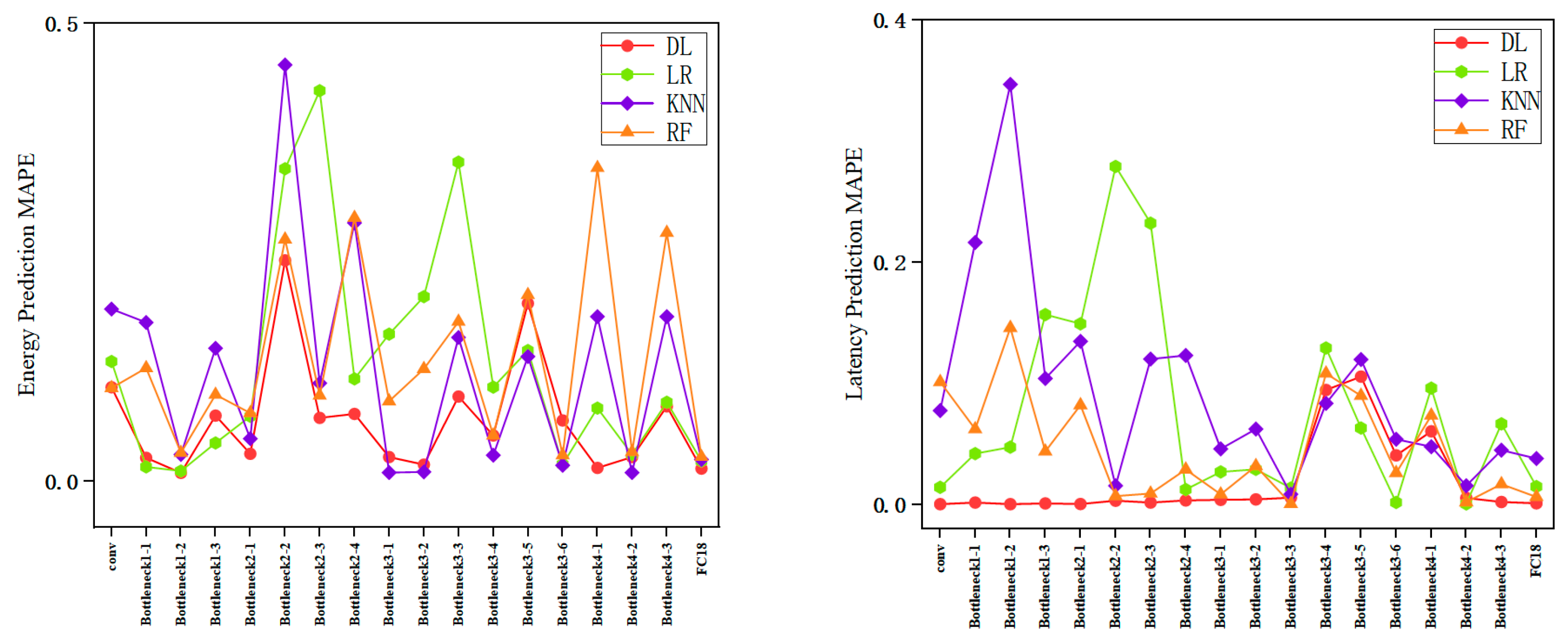

6.2. Prediction Model Experimental Results and Analysis

Prediction Model Accuracy Evaluation

6.3. Reasonability Analysis of Adaptive Hybrid Partitioning

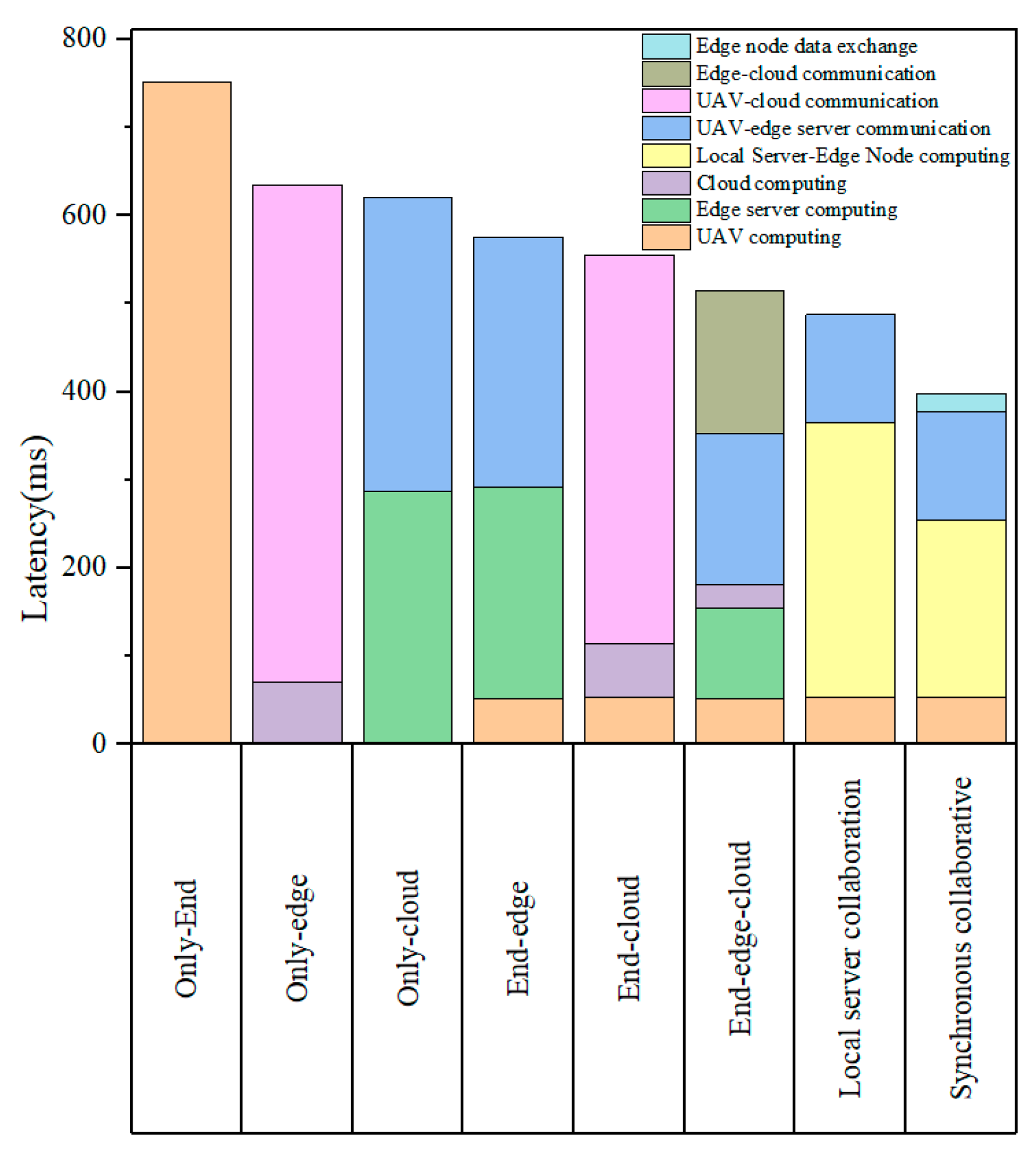

6.4. Comparative Analysis of Total Delay in Multi Partition Systems

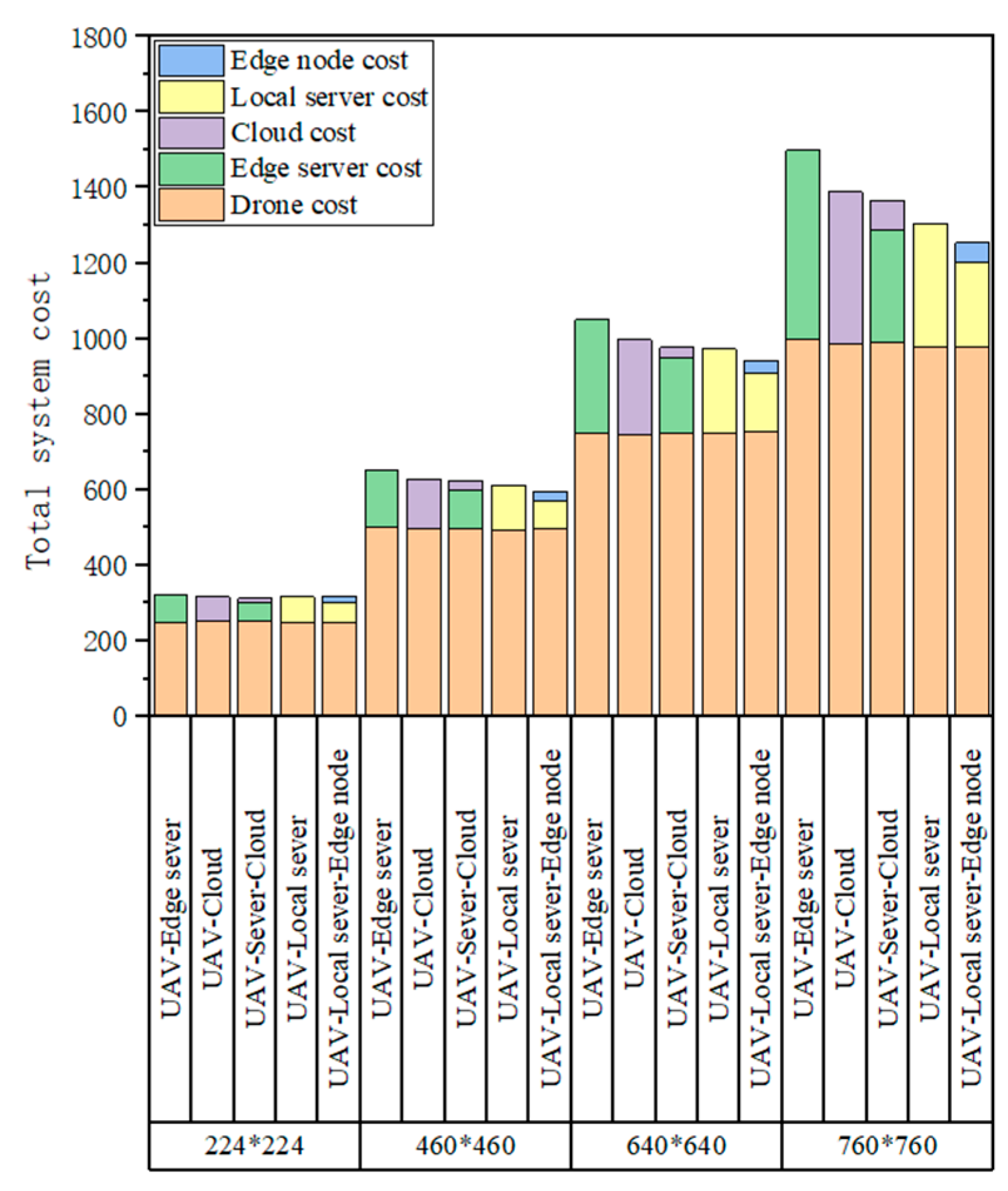

6.5. Analysis of the Impact of Image Size on the Total Cost of the System

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xia, C.; Zhao, J.; Cui, H.; Feng, X.; Xue, J. DNNTune: Automatic benchmarking DNN models for mobile-cloud computing. ACM Trans. Archit. Code Optim. 2019, 16, 49.

- Chen, Z.; Hu, J.; Chen, X.; Hu, J.; Zheng, X.; Min, G. Computation offloading and task scheduling for DNN-based applications in cloud-edge computing. IEEE Access 2020, 8, 115537–115547.

- Zhang, J.; Ma, S.; Yan, Z.; Huang, J. Joint DNN Partitioning and Task Offloading in Mobile Edge Computing via Deep Reinforcement Learning. J. Cloud Comput. 2023, 12, 116.

- Miao, W.; Zeng, Z.; Wei, L.; Li, S.; Jiang, C.; Zhang, Z. Adaptive DNN partition in edge computing environments. In Proceedings of the 2020 IEEE 26th International Conference on Parallel and Distributed Systems (ICPADS), Hong Kong, China, 2–4 December 2020; pp. 685–690.

- Li, X.; Li, W.; Yang, Q.; Yan, W.; Zomaya, A.Y. Edge-computing-enabled unmanned module defect detection and diagnosis system for large-scale photovoltaic plants. IEEE Internet Things J. 2020, 7, 9651–9663.

- Tang, W.; Yang, Q.; Hu, X.; Yan, W. Deep learning-based linear defects detection system for large-scale photovoltaic plants based on an edge-cloud computing infrastructure. Sol. Energy 2022, 231, 527–535.

- Xu, C.; Li, Q.; Zhou, Q.; Zhang, S.; Yu, D.; Ma, Y. Power line-guided automatic electric transmission line inspection system. IEEE Trans. Instrum. Meas. 2022, 71, 3512118.

- Zhou, H.; Zhang, Z.; Li, D.; Su, Z. Joint optimization of computing offloading and service caching in edge computing-based smart grid. IEEE Trans. Cloud Comput. 2022, 11, 1122–1132.

- Wu, Y.; Guo, H.; Chakraborty, C.; Khosravi, M.R.; Berretti, S.; Wan, S. Edge computing driven low-light image dynamic enhancement for object detection. IEEE Trans. Netw. Sci. Eng. 2022, 10, 3086–3098.

- Cheng, Q.; Wang, H.; Zhu, B.; Shi, Y.; Xie, B. A Real-Time UAV Target Detection Algorithm Based on Edge Computing. Drones 2023, 7, 95.

- Song, C.; Xu, W.; Han, G.; Zeng, P.; Wang, Z.; Yu, S. A cloud edge collaborative intelligence method of insulator string defect detection for power IIoT. IEEE Internet Things J. 2020, 8, 7510–7520.

- Shuang, F.; Chen, X.; Li, Y.; Wang, Y.; Miao, N.; Zhou, Z. PLE: Power Line Extraction Algorithm for UAV-Based Power Inspection. IEEE Sens. J. 2022, 22, 19941–19952.

- Ren, P.; Qiao, X.; Huang, Y.; Liu, L.; Pu, C.; Dustdar, S. Fine-grained elastic partitioning for distributed DNN towards mobile web AR services in the 5G era. IEEE Trans. Serv. Comput. 2021, 15, 3260–3274.

- Sharma, S.K.; Wang, X. Live data analytics with collaborative edge and cloud processing in wireless IoT networks. IEEE Access 2017, 5, 4621–4635.

- Kang, Y.; Hauswald, J.; Gao, C.; Rovinski, A.; Mudge, T.; Mars, J.; Tang, L. Neurosurgeon: Collaborative intelligence between the cloud and mobile edge. ACM SIGARCH Comput. Archit. News 2017, 45, 615–629.

- Chen, Y.Y.; Lin, Y.H.; Hu, Y.C.; Hsia, C.H.; Lian, Y.A.; Jhong, S.Y. Distributed real-time object detection based on edge-cloud collaboration for smart video surveillance applications. IEEE Access 2022, 10, 93745–93759.

- Ding, C.; Zhou, A.; Liu, Y.; Chang, R.N.; Hsu, C.-H.; Wang, S. A cloud-edge collaboration framework for cognitive service. IEEE Trans. Cloud Comput. 2020, 10, 1489–1499.

- Liu, G.; Dai, F.; Xu, X.; Fu, X.; Dou, W.; Kumar, N.; Bilal, M. An adaptive DNN inference acceleration framework with end–edge–cloud collaborative computing. Future Gener. Comput. Syst. 2023, 140, 422–435.

- Li, E.; Zeng, L.; Zhou, Z.; Chen, X. Edge AI: On-demand accelerating deep neural network inference via edge computing. IEEE Trans. Wireless Commun. 2019, 19, 447–457.

- Li, J.; Liang, W.; Li, Y.; Xu, Z.; Jia, X.; Guo, S. Throughput maximization of delay-aware DNN inference in edge computing by exploring DNN model partitioning and inference parallelism. IEEE Trans. Mob. Comput. 2021, 22, 3017–3030.

- Wei, Z.; Yu, X.; Zou, L. Multi-Resource Computing Offload Strategy for Energy Consumption Optimization in Mobile Edge Computing. Processes 2022, 10, 1762.

- Shao, S.; Liu, S.; Li, K.; You, S.; Qiu, H.; Yao, X.; Ji, Y. LBA-EC: Load Balancing Algorithm Based on Weighted Bipartite Graph for Edge Computing. Chin. J. Electron. 2023, 32, 313–324.

- Sun, Z.; Yang, H.; Li, C.; Yao, Q.; Wang, D.; Zhang, J.; Vasilakos, A.V. Cloud-edge collaboration in industrial internet of things: A joint offloading scheme based on resource prediction. IEEE Internet Things J. 2021, 9, 17014–17025.

- Nayyer, M.Z.; Raza, I.; Hussain, S.A.; Jamal, M.H.; Gillani, Z.; Hur, S.; Ashraf, I. LBRO: Load Balancing for Resource Optimization in Edge Computing. IEEE Access 2022, 10, 97439–97449.

- Deng, Y.; Wu, T.; Chen, X.; Ashrafzadeh, A.H. Multi-Classification and Distributed Reinforcement Learning-Based Inspection Swarm Offloading Strategy. Intell. Autom. Soft Comput. 2022, 34, 1157–1174.

- Yuan, H.; Zhou, M.C. Profit-maximized collaborative computation offloading and resource allocation in distributed cloud and edge computing systems. IEEE Trans. Autom. Sci. Eng. 2020, 18, 1277–1287.

- Xu, W.; Yin, Y.; Chen, N.; Tu, H. Collaborative inference acceleration integrating DNN partitioning and task offloading in mobile edge computing. Int. J. Softw. Eng. Knowl. Eng. 2023, 33, 1835–1863.

- Liang, H.; Sang, Q.; Hu, C.; Cheng, D.; Zhou, X.; Wang, D.; Bao, W.; Wang, Y. DNN Surgery: Accelerating DNN Inference on the Edge through Layer Partitioning. IEEE Trans. Cloud Comput. 2023, 11, 3111–3125.

- Liu, J.; Zhang, Q. Offloading schemes in mobile edge computing for ultra-reliable low latency communications. IEEE Access 2018, 6, 12825–12837.

- Shi, L.; Xu, Z.; Sun, Y.; Shi, Y.; Fan, Y.; Ding, X. A DNN inference acceleration algorithm combining model partition and task allocation in heterogeneous edge computing system. Peer Peer Netw. Appl. 2021, 14, 4031–4045.

- Ren, W.Q.; Qu, Y.B.; Dong, C.; Jing, Y.Q.; Sun, H.; Wu, Q.H.; Guo, S. A survey on collaborative DNN inference for edge intelligence. Mach. Intell. Res. 2023, 20, 370–395.

- Xu, J.; Ota, K.; Dong, M. Aerial edge computing: Flying attitude-aware collaboration for multi-UAV. IEEE Trans. Mob. Comput. 2022, 22, 5706–5718.

- Cheng, K.; Fang, X.; Wang, X. Energy efficient edge computing and data compression collaboration scheme for UAV-assisted network. IEEE Trans. Veh. Technol. 2023, 72, 16395–16408.

| Parameter | Description | Value |
| --- | --- | --- |
| $c_1$ | Drone wing area constant | 0.002 |
| $c_2$ | Drone load factor constant | 70.698 |
| $\eta$ | Drone chip structure constant | $10^{-11}$ |
| $F_{UAV}$ | Drone computing power | 1 GHz |
| $K_{UAV}$ | CPU cycles required by the drone to process one bit | 100 cycles/bit |

| Hardware | Specifications |
| --- | --- |
| System | Harmony OS 2.0.0 |
| CPU | HUAWEI Kirin 985 |
| Memory | 8 GB |
| HDD | 128 GB |

| Hardware | Specifications |
| --- | --- |
| System | Windows 10 |
| CPU | 12 × Intel(R) Xeon(R) CPU E5-2678 2.50 GHz |
| Memory | Samsung 64 GB 2400 MHz |
| HDD | 4 × WDC PC SN730 512 GB |
| GPU | 3 × NVIDIA GeForce GTX 1080 Ti |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fu, Q.; Deng, F.; Xue, X.; Zeng, J.; Wei, B. DNN Adaptive Partitioning Strategy for Heterogeneous Online Inspection Systems of Substations. Electronics 2024, 13, 3383. https://doi.org/10.3390/electronics13173383
