Research on Energy Management in Hydrogen–Electric Coupled Microgrids Based on Deep Reinforcement Learning

Abstract

1. Introduction

- Intelligent hydrogen–electric coupled microgrid energy management strategy: This paper proposes an energy management strategy based on the deep deterministic policy gradient (DDPG) algorithm. A deep neural network learns and optimizes the dispatch policy of the microgrid from forecast data of PV generation and load demand. The strategy copes with uncertainty in PV generation, EV charging loads, and hydrogen refueling loads, and keeps system supply and demand balanced throughout the dispatch cycle.

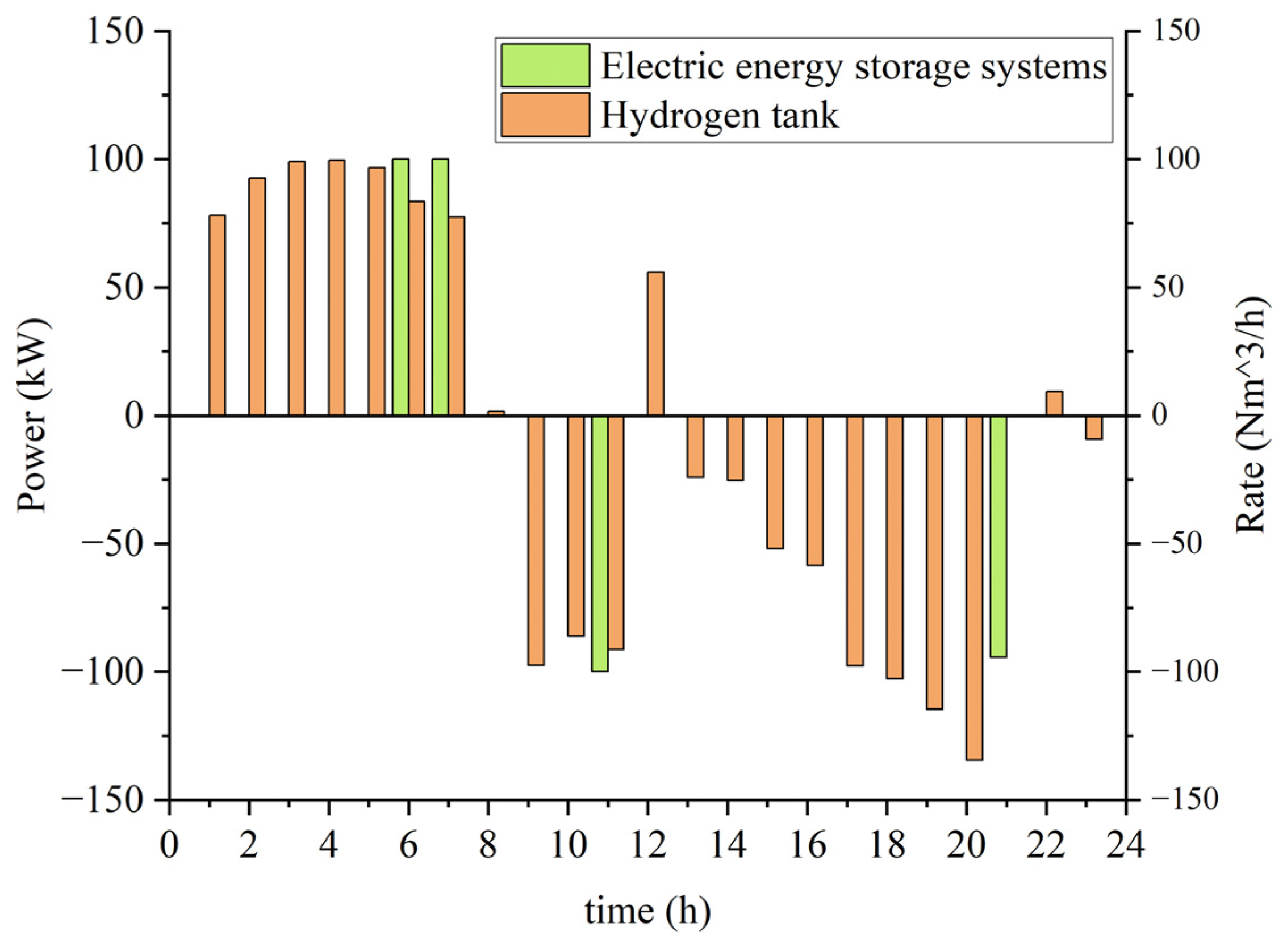

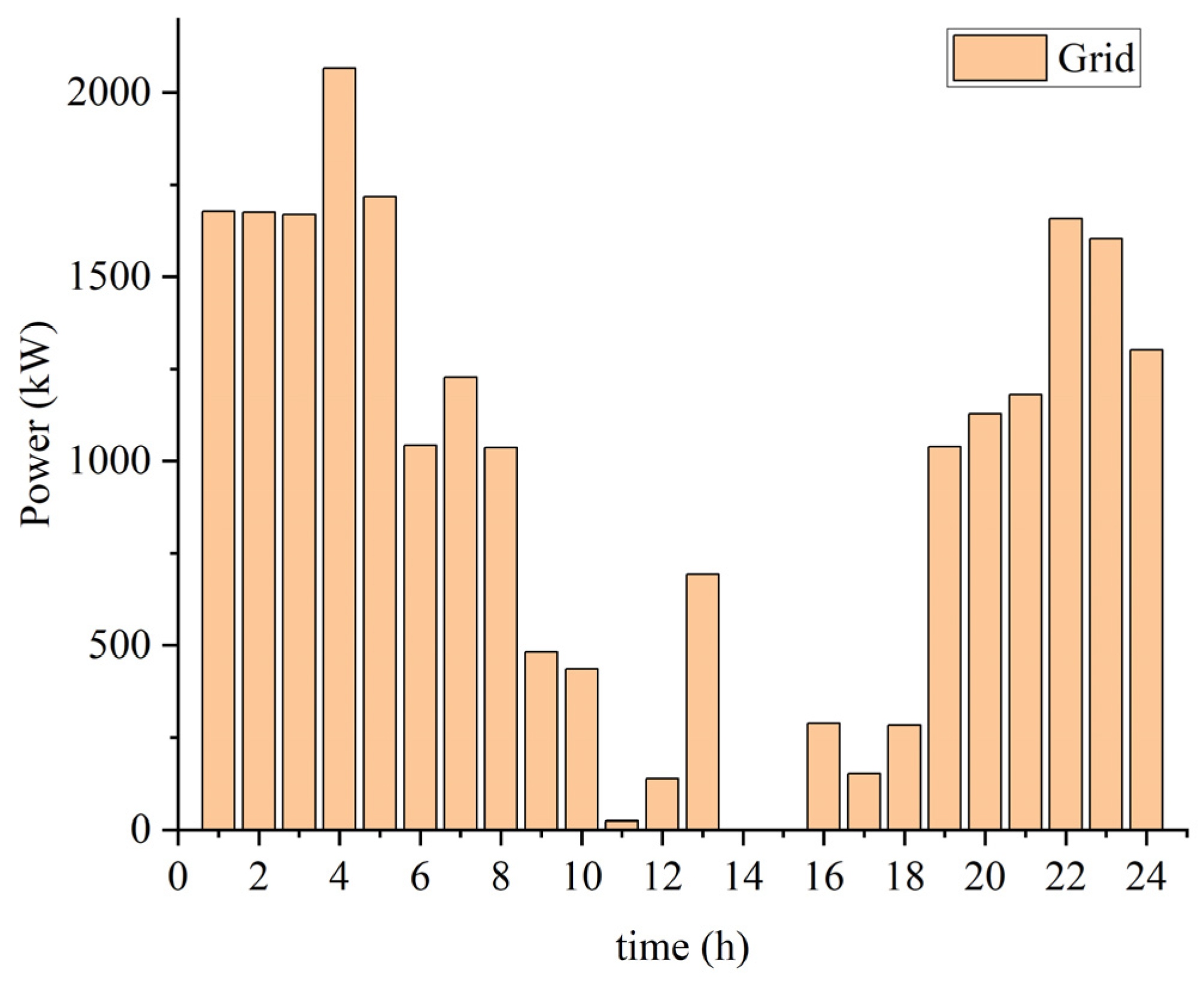

- Optimization of system operating economics and reduction in PV curtailment: Under the DDPG policy, surplus power during peak PV generation hours is used for hydrogen production, which makes full use of PV power and reduces curtailment. In addition, the method lowers the system's power purchase cost and improves overall economic efficiency by charging and producing hydrogen during low-price hours and by discharging and selling power during high-price hours.

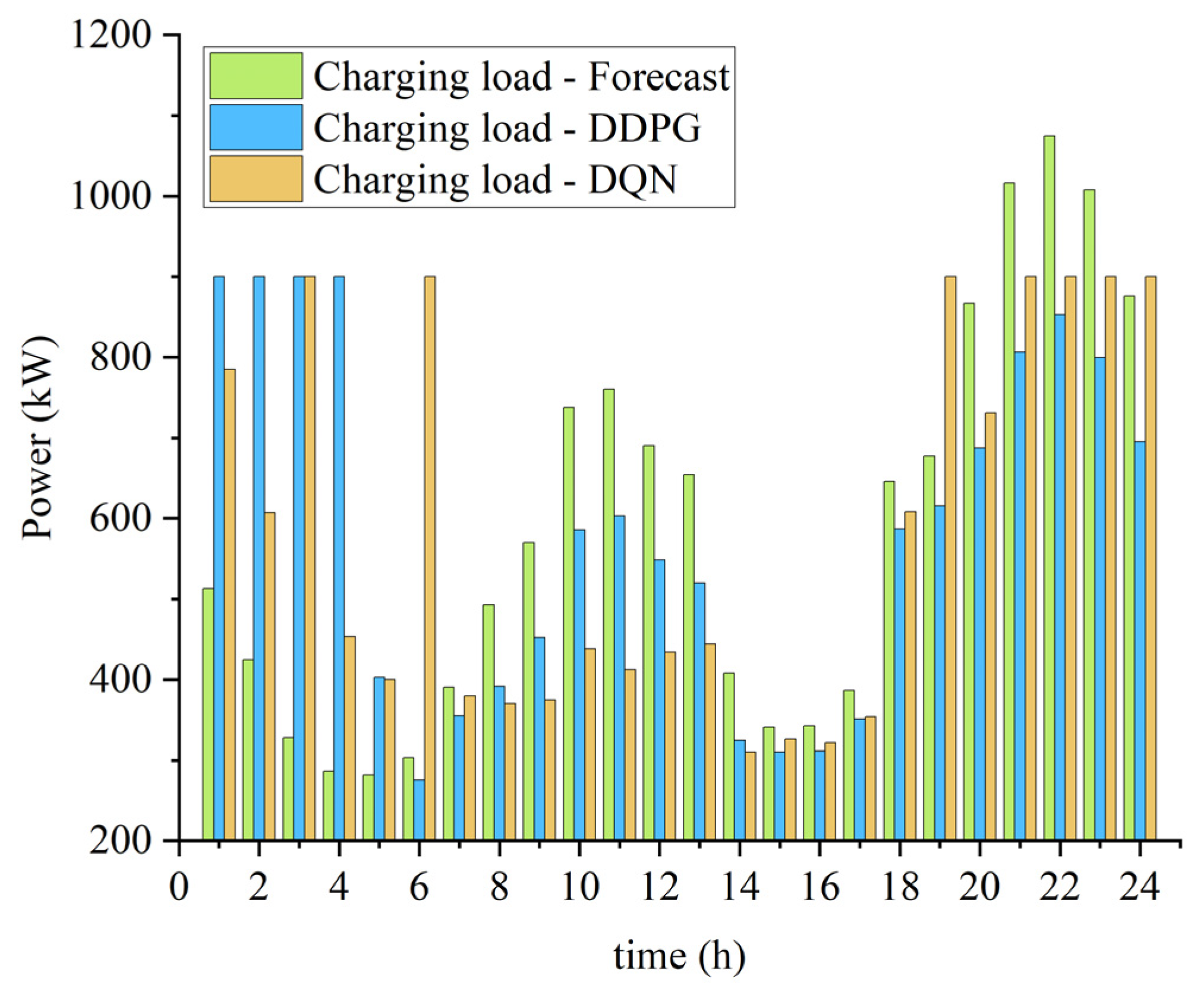

- Load smoothing and grid stability enhancement: Optimal scheduling of EV charging loads reduces the duration and magnitude of peak loads and yields a smoother charging load curve. This significantly narrows the gap between the peaks and valleys of the grid load and thus enhances the stability and operating efficiency of the grid.

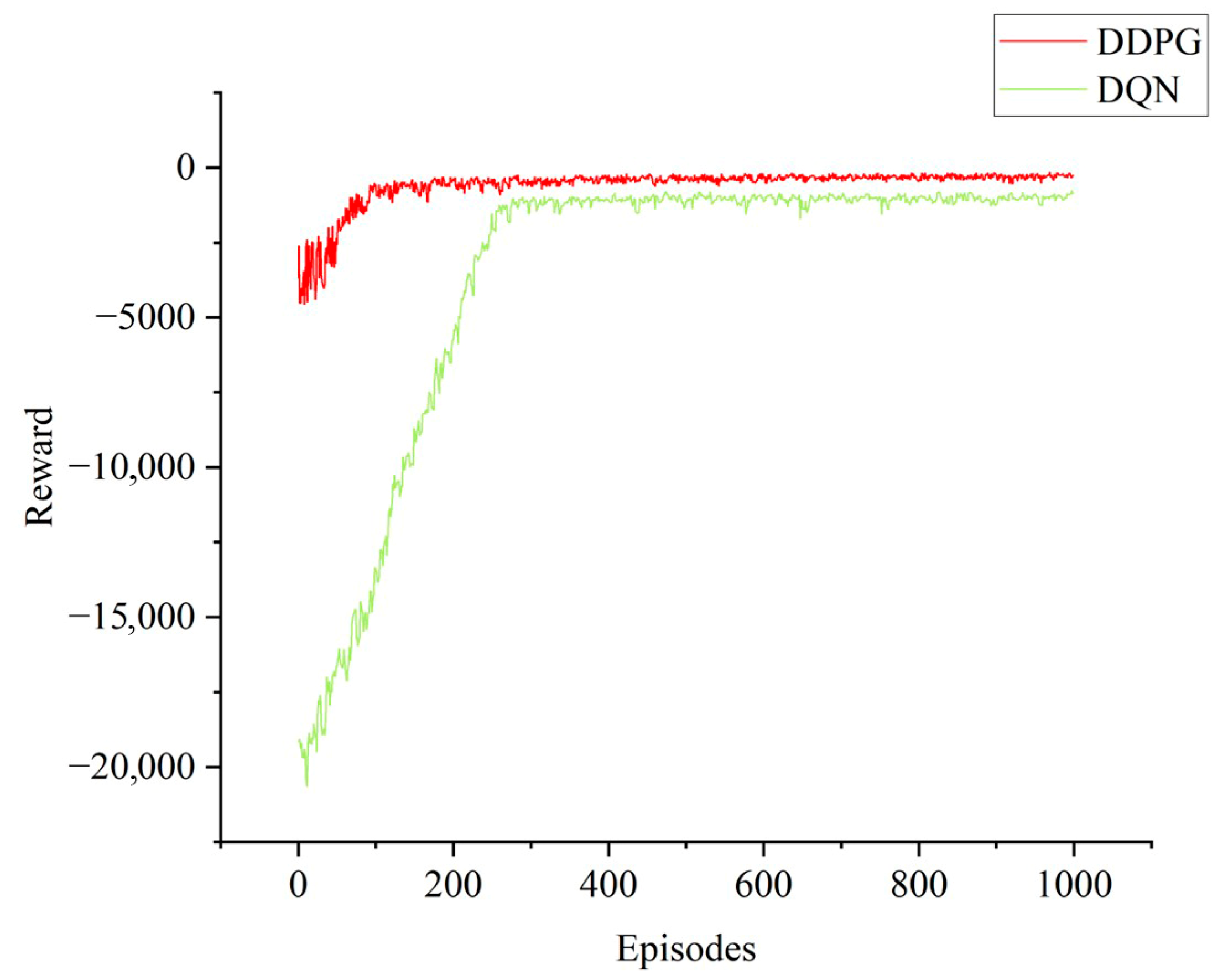

- Verification of the effectiveness and superiority of the DDPG algorithm: Case studies verify that the DDPG algorithm handles continuous-action decision problems more accurately and effectively than the traditional deep Q-network (DQN). The DDPG algorithm is better able to optimize microgrid energy management under complex constraints, which significantly reduces the operating cost of the microgrid.

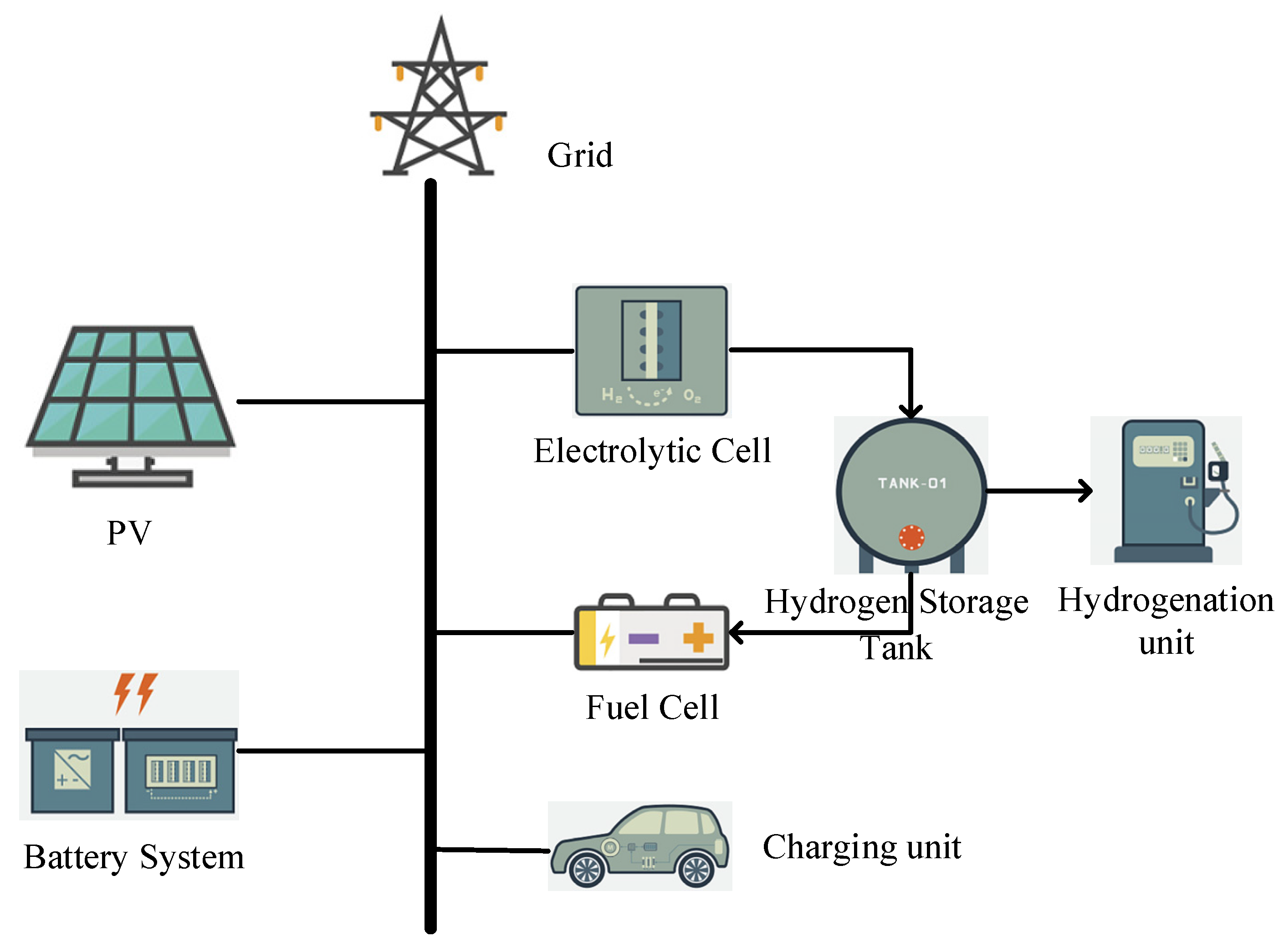
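The load-smoothing contribution above is stated in terms of the gap between peak and valley loads. As a minimal sketch of that metric, the snippet below computes the gap for a load profile before and after part of the charging demand is shifted to a low-load period. The function names and the six-step profile are hypothetical and are not data from the paper.

```python
def peak_valley_gap(load_kw):
    """Return the difference between the highest and lowest load in a profile."""
    return max(load_kw) - min(load_kw)


def shift_load(load_kw, from_step, to_step, amount_kw):
    """Move up to `amount_kw` of demand from one time step to another.

    The total over the profile is conserved: demand is only rescheduled.
    """
    shifted = list(load_kw)
    moved = min(amount_kw, shifted[from_step])
    shifted[from_step] -= moved
    shifted[to_step] += moved
    return shifted


# Hypothetical charging profile in kW (illustrative values only).
baseline = [100.0, 120.0, 400.0, 380.0, 150.0, 90.0]

# Reschedule 150 kW of charging from the peak step to the lowest-load step.
smoothed = shift_load(baseline, from_step=2, to_step=5, amount_kw=150.0)

print("gap before:", peak_valley_gap(baseline))
print("gap after: ", peak_valley_gap(smoothed))
```

A scheduling policy that lowers this gap while serving the same total demand is what the bullet describes as a smoother charging load curve.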

2. Hydrogen–Electric Coupled Microgrid Structure

3. Distributed Energy System Models

3.1. Photovoltaic Power Generation Model

3.2. Battery Energy Storage System Model

3.3. Electrolytic Hydrogen Production Model

3.4. Hydrogen Fuel Cell Model

3.5. Model of Hydrogen Storage Facilities

4. Decision-Making Model for Microgrid Energy Management

4.1. Objective Function

4.2. Constraints

4.2.1. Power and Energy Balance Constraints

4.2.2. Constraints on the Operation of Photovoltaic Power Generation Systems

4.2.3. Electrolytic Hydrogen Production System Operational Constraints

- Operational Constraints of Electrolytic Cells

- Constraints on Fuel Cell Operation

- Hydrogen Storage Tank Operational Constraints

4.2.4. Electrochemical Energy Storage Operational Constraints

4.2.5. Constraints on the Operation of Charging/Hydrogen Refueling Stations

- Charging Load Constraints

- Constraints on Charging/Hydrogen Stations

5. Optimization Algorithms for Deep Reinforcement Learning

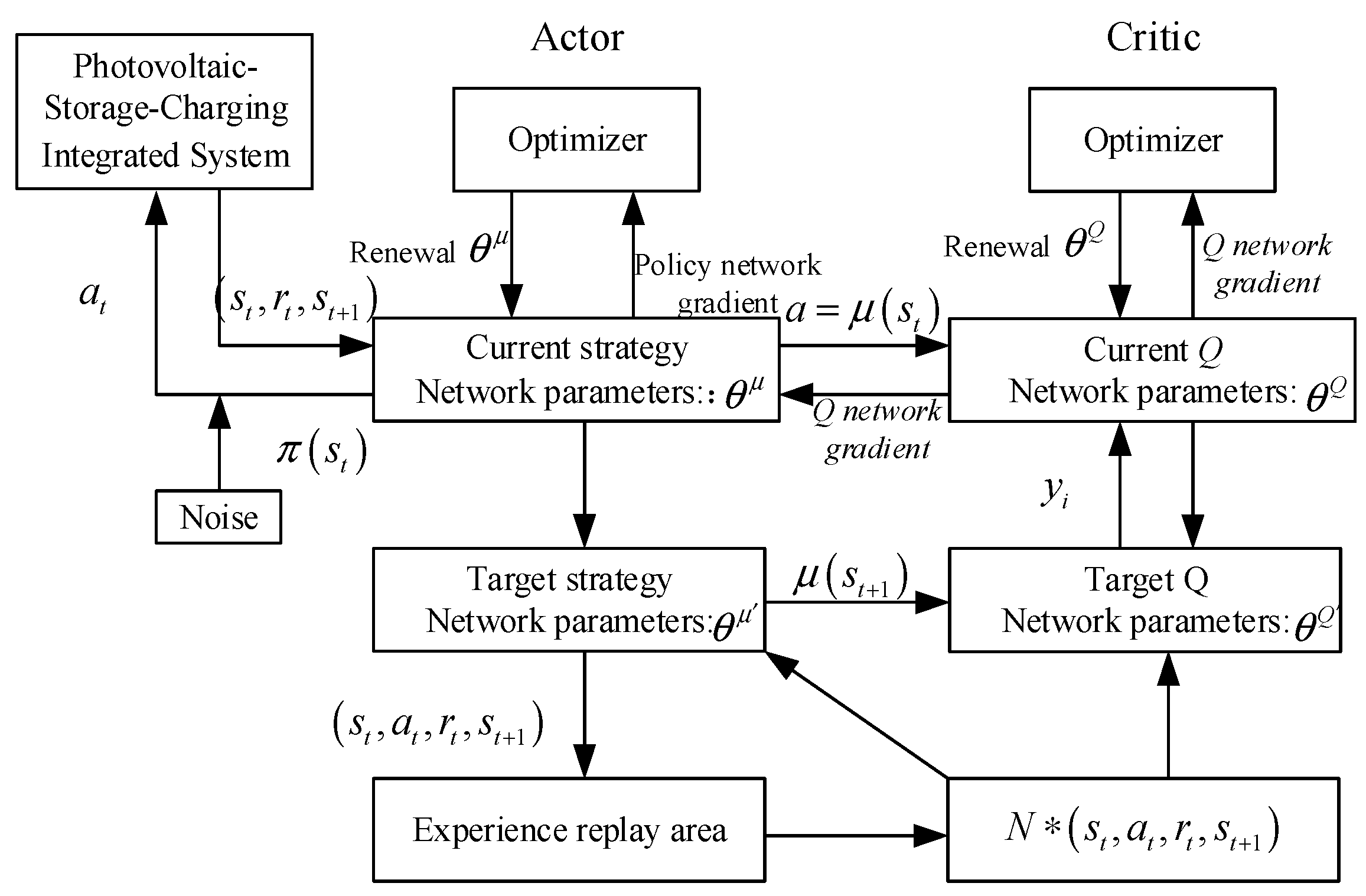

5.1. The Principles of the DDPG Algorithm

5.2. Implementation of the DDPG Algorithm

- Definition of the State Space

- Definition of the Action Space

- Definition of Reward and Penalty Functions

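| Algorithm 1: Energy Management Method for PV-Storage-Charging Integrated System Based on DDPG. | |
|---|---|
| 1: | Randomly initialize Critic network $Q(s, a \mid \theta^{Q})$ and Actor network $\mu(s \mid \theta^{\mu})$ with weights $\theta^{Q}$ and $\theta^{\mu}$ |
| 2: | Initialize target networks $Q'$ and $\mu'$ with weights $\theta^{Q'} \leftarrow \theta^{Q}$, $\theta^{\mu'} \leftarrow \theta^{\mu}$ |
| 3: | Initialize replay buffer $R$ |
| 4: | Set soft update coefficient $\tau$ and learning rate $\alpha$ |
| 5: | for episode = 1 to max_episodes do |
| 6: | Initialize random process $\mathcal{N}$ for action exploration |
| 7: | Receive initial observation state $s_1$ |
| 8: | for $t = 1$ to max_steps do |
| 9: | Select action $a_t = \mu(s_t \mid \theta^{\mu}) + \mathcal{N}_t$ based on the current policy and exploration noise |
| 10: | Execute action $a_t$; observe reward $r_t$ and new state $s_{t+1}$ |
| 11: | Store transition $(s_t, a_t, r_t, s_{t+1})$ in replay buffer $R$ |
| 12: | Sample a random minibatch of $N$ transitions $(s_i, a_i, r_i, s_{i+1})$ from $R$ |
| 13: | Set $y_i = r_i + \gamma Q'(s_{i+1}, \mu'(s_{i+1} \mid \theta^{\mu'}) \mid \theta^{Q'})$ |
| 14: | Update Critic network by minimizing the loss $L = \frac{1}{N} \sum_i \left(y_i - Q(s_i, a_i \mid \theta^{Q})\right)^2$ |
| 15: | Update Actor network using the sampled policy gradient: $\nabla_{\theta^{\mu}} J \approx \frac{1}{N} \sum_i \nabla_a Q(s, a \mid \theta^{Q})\big\rvert_{s=s_i,\, a=\mu(s_i)} \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\big\rvert_{s=s_i}$ |
| 16: | Soft update target networks: |
| 17: | $\theta^{Q'} \leftarrow \tau \theta^{Q} + (1 - \tau)\theta^{Q'}$, $\theta^{\mu'} \leftarrow \tau \theta^{\mu} + (1 - \tau)\theta^{\mu'}$ |
| 18: | end for |
| 19: | end for |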
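Three numerical pieces sit at the core of a standard DDPG loop such as Algorithm 1: the temporal-difference target for the Critic, the mean-squared Critic loss, and the soft update of the target networks. They can be sketched in plain Python as follows. This is an illustrative sketch of the generic DDPG update, not the authors' implementation; the function names, the `done` flag, and the example numbers are assumptions, and network weights are represented as flat lists of floats for simplicity.

```python
def td_target(reward, next_q, gamma=0.99, done=False):
    """Critic regression target: y = r + gamma * Q'(s', mu'(s')).

    `done` (an assumption, not in Algorithm 1) drops the bootstrap term
    at the end of an episode.
    """
    return reward if done else reward + gamma * next_q


def critic_loss(targets, q_values):
    """Mean squared error between TD targets and current Critic estimates."""
    return sum((y - q) ** 2 for y, q in zip(targets, q_values)) / len(targets)


def soft_update(target_params, online_params, tau):
    """Polyak averaging: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t
            for w, w_t in zip(online_params, target_params)]


# Illustrative numbers only.
y = td_target(reward=1.0, next_q=10.0, gamma=0.99)
loss = critic_loss(targets=[y, 2.0], q_values=[y, 0.0])
new_target = soft_update(target_params=[0.0, 1.0],
                         online_params=[1.0, 0.0],
                         tau=0.001)
print(y, loss, new_target)
```

With a small `tau`, the target weights move only slightly toward the online weights at each step, which keeps the regression target in step 13 from changing abruptly.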

6. Case Study Analysis

6.1. Case Description

6.2. Simulation Analysis

7. Conclusions

- In hydrogen–electric coupled microgrids, the energy management system can intelligently adjust charging and discharging strategies based on electricity price signals and photovoltaic generation through the DDPG algorithm, achieving “buy low, sell high” operations.

- The DDPG algorithm accounts for the volatility of photovoltaic generation and the uncertainty of charging and hydrogen refueling loads. It maintains the supply–demand balance among photovoltaic generation, electric vehicle charging and hydrogen refueling loads, and the energy storage system over the scheduling period, thus enhancing the reliability and stability of system operation.

- Through the DDPG algorithm, hydrogen–electric coupled microgrids can participate in flexible grid regulation based on electricity price incentive signals by adjusting charging loads and energy storage systems, reducing peak loads, and improving grid stability and economic efficiency.

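- Comparison with the DQN algorithm validates the DDPG algorithm on continuous-action problems: in the case study, DDPG achieves a net revenue of CNY 10,623.78, against CNY 10,094.9521 for DQN and CNY 9893.74 before optimization.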
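One way to see why a continuous-action method can outperform DQN here is that DQN must choose from a finite set of actions, so any set-point between two levels is rounded. The sketch below illustrates this rounding error for a storage power command bounded by the 100 kW rating listed in the case parameters. The five-level grid, the desired set-point, and the function names are hypothetical and chosen only for illustration.

```python
def uniform_levels(low, high, n):
    """Return n evenly spaced action levels between low and high."""
    step = (high - low) / (n - 1)
    return [low + i * step for i in range(n)]


def discretize(action_kw, levels):
    """Snap a continuous set-point to the nearest level a discrete agent can pick."""
    return min(levels, key=lambda level: abs(level - action_kw))


# Hypothetical grid: 5 charge/discharge levels within +/-100 kW.
levels = uniform_levels(-100.0, 100.0, 5)

desired = 37.0                       # set-point a continuous policy could output
nearest = discretize(desired, levels)
error = abs(nearest - desired)       # power the discrete agent cannot express

print(levels, nearest, error)
```

A finer grid shrinks this error but enlarges the action set the Q-network must evaluate, whereas a DDPG Actor outputs the set-point directly.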

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Photovoltaic array | 600 kW |
| Capacity of electrical energy storage system | 72–288 kW·h |
| Electrical energy storage power rating | 100 kW |
| Electrolyzer rated power | 750 kW |
| Capacity of hydrogen storage tank | 1000 Nm³ |
| Charging capacity | 30 × 30 kW |
| Total refueling rate | 30 × 5 Nm³/h |
| Batch size | 64 |
| Hydrogen refueling service price | 5.8 ¥/Nm³ |
| Carbon trading price | 0.07 ¥/kg |

| Parameter | Value |
|---|---|
| Hidden layer | [400, 300, 256, 128] |
| Actor network learning rate | 0.001 |
| Critic network learning rate | 0.001 |
| Target network learning rate | 0.001 |
| Discount factor | 0.99 |
| Episodes | 1000 |
| Step size | 100 |
| Batch size | 64 |
| Experience replay buffer capacity | 20,000 |
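As a minimal sketch, the training hyperparameters in the table above could be gathered into a single immutable configuration object. The class name `DDPGConfig`, its field names, and the reading of "Step size" as the number of steps per episode are assumptions made for illustration and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DDPGConfig:
    """Hyperparameters from the table; field names are hypothetical."""
    hidden_layers: tuple = (400, 300, 256, 128)
    actor_lr: float = 1e-3
    critic_lr: float = 1e-3
    target_lr: float = 1e-3          # listed as "Target network learning rate"
    gamma: float = 0.99              # discount factor
    episodes: int = 1000
    steps_per_episode: int = 100     # assumed meaning of "Step size"
    batch_size: int = 64
    buffer_capacity: int = 20_000

    def total_transitions(self):
        """Transitions generated over the whole training run."""
        return self.episodes * self.steps_per_episode

    def buffer_turnover(self):
        """How many times the replay buffer would be filled during training."""
        return self.total_transitions() / self.buffer_capacity


cfg = DDPGConfig()
print(cfg.total_transitions(), cfg.buffer_turnover())
```

Under the stated assumption about "Step size", the run produces more transitions than the buffer holds, so older experience would be overwritten as training proceeds.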

| Item (CNY) | Before Optimization | DQN | DDPG |
|---|---|---|---|
| Power purchase cost | 9625.27 | 9038.19 | 8677.2 |
| Charging income | 8056.33 | 7783.61 | 7838.3 |
| Hydrogen refueling income | 11,314.79 | 11,201.6421 | 11,314.79 |
| Carbon revenue | 147.89 | 147.89 | 147.89 |
| Net revenue | 9893.74 | 10,094.9521 | 10,623.78 |
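The net revenue row in the table above is consistent with the sum of the three income rows minus the power purchase cost. That relation is inferred here from the tabulated figures rather than quoted from the paper. The short check below recomputes it for each column and derives the relative gain over the unoptimized case; the dictionary keys and function names are illustrative.

```python
# Values in CNY, copied from the cost comparison table.
COSTS_CNY = {
    "before": {"purchase": 9625.27, "charging": 8056.33,
               "hydrogen": 11314.79, "carbon": 147.89},
    "dqn":    {"purchase": 9038.19, "charging": 7783.61,
               "hydrogen": 11201.6421, "carbon": 147.89},
    "ddpg":   {"purchase": 8677.2, "charging": 7838.3,
               "hydrogen": 11314.79, "carbon": 147.89},
}


def net_revenue(item):
    """Income from charging, hydrogen refueling, and carbon minus purchase cost."""
    return item["charging"] + item["hydrogen"] + item["carbon"] - item["purchase"]


def relative_gain(new, old):
    """Percentage change of `new` relative to `old`."""
    return (new - old) / old * 100.0


net = {name: net_revenue(item) for name, item in COSTS_CNY.items()}
gain_dqn = relative_gain(net["dqn"], net["before"])
gain_ddpg = relative_gain(net["ddpg"], net["before"])

for name, value in net.items():
    print(f"{name}: {value:.2f} CNY")
print(f"DQN gain: {gain_dqn:.2f} %, DDPG gain: {gain_ddpg:.2f} %")
```

The same helper can be applied to the power purchase cost row to compare how much each method reduces purchases from the grid.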

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shi, T.; Zhou, H.; Shi, T.; Zhang, M. Research on Energy Management in Hydrogen–Electric Coupled Microgrids Based on Deep Reinforcement Learning. Electronics 2024, 13, 3389. https://doi.org/10.3390/electronics13173389