Effects of Industrial Maintenance Task Complexity on Neck and Shoulder Muscle Activity During Augmented Reality Interactions

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

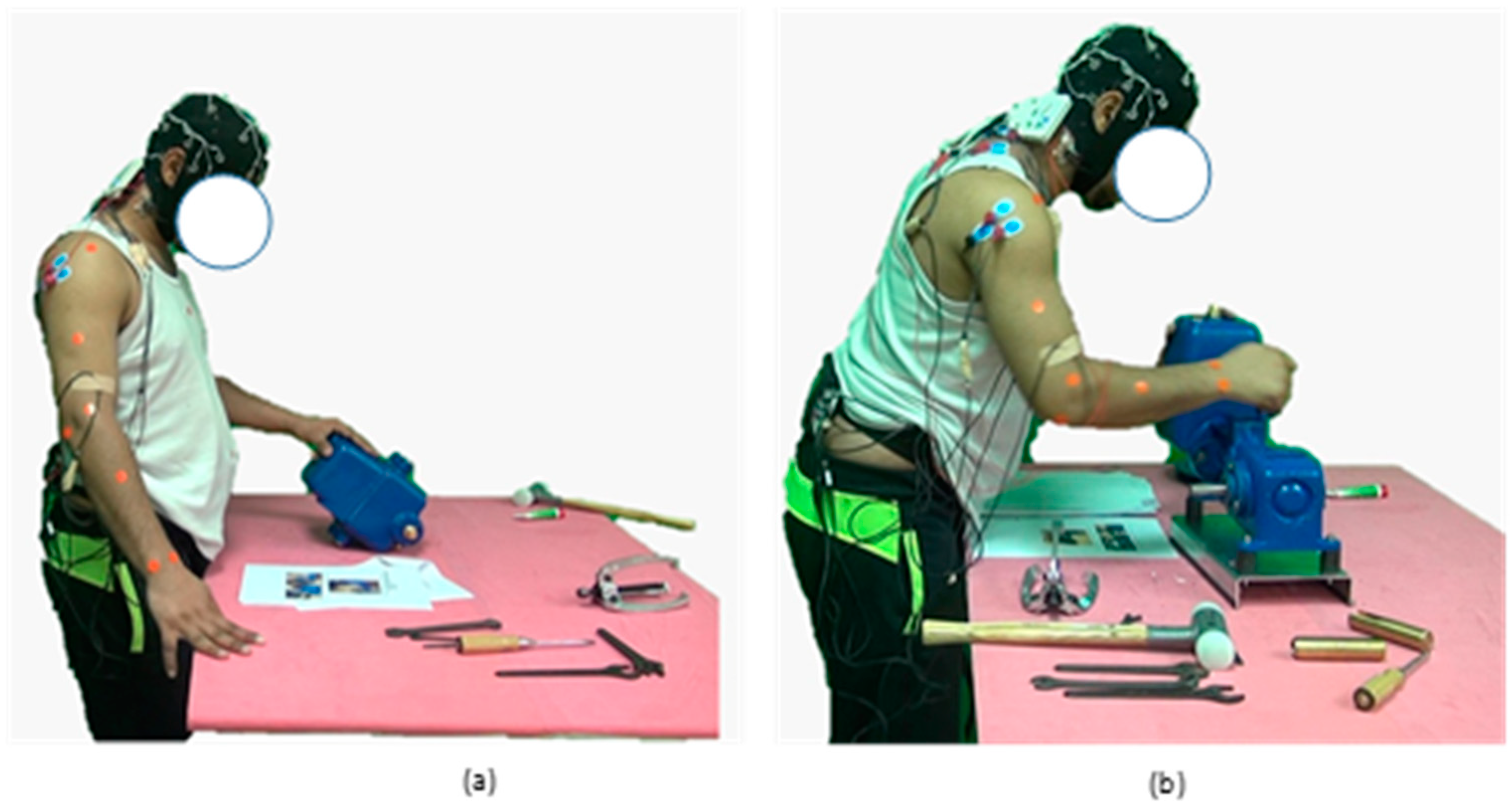

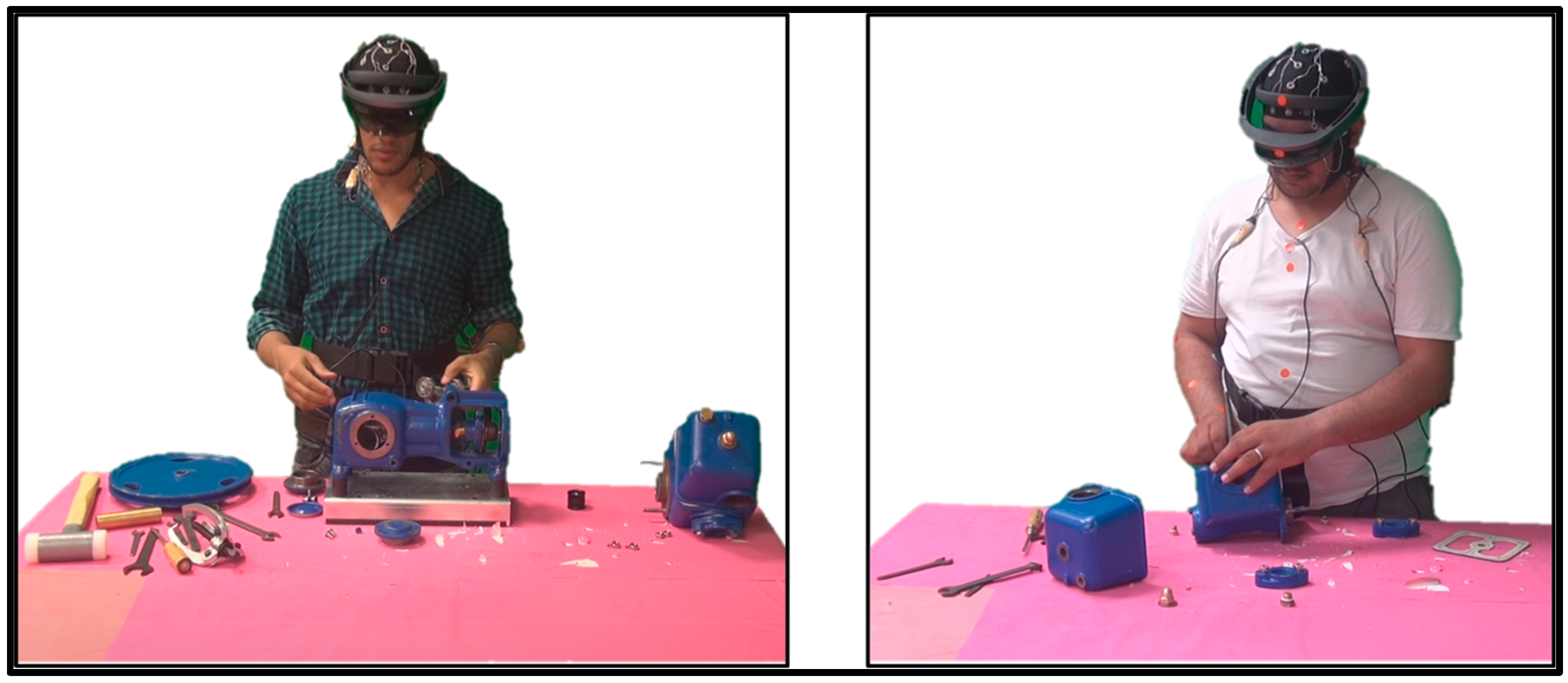

2.2. Experiment Variables

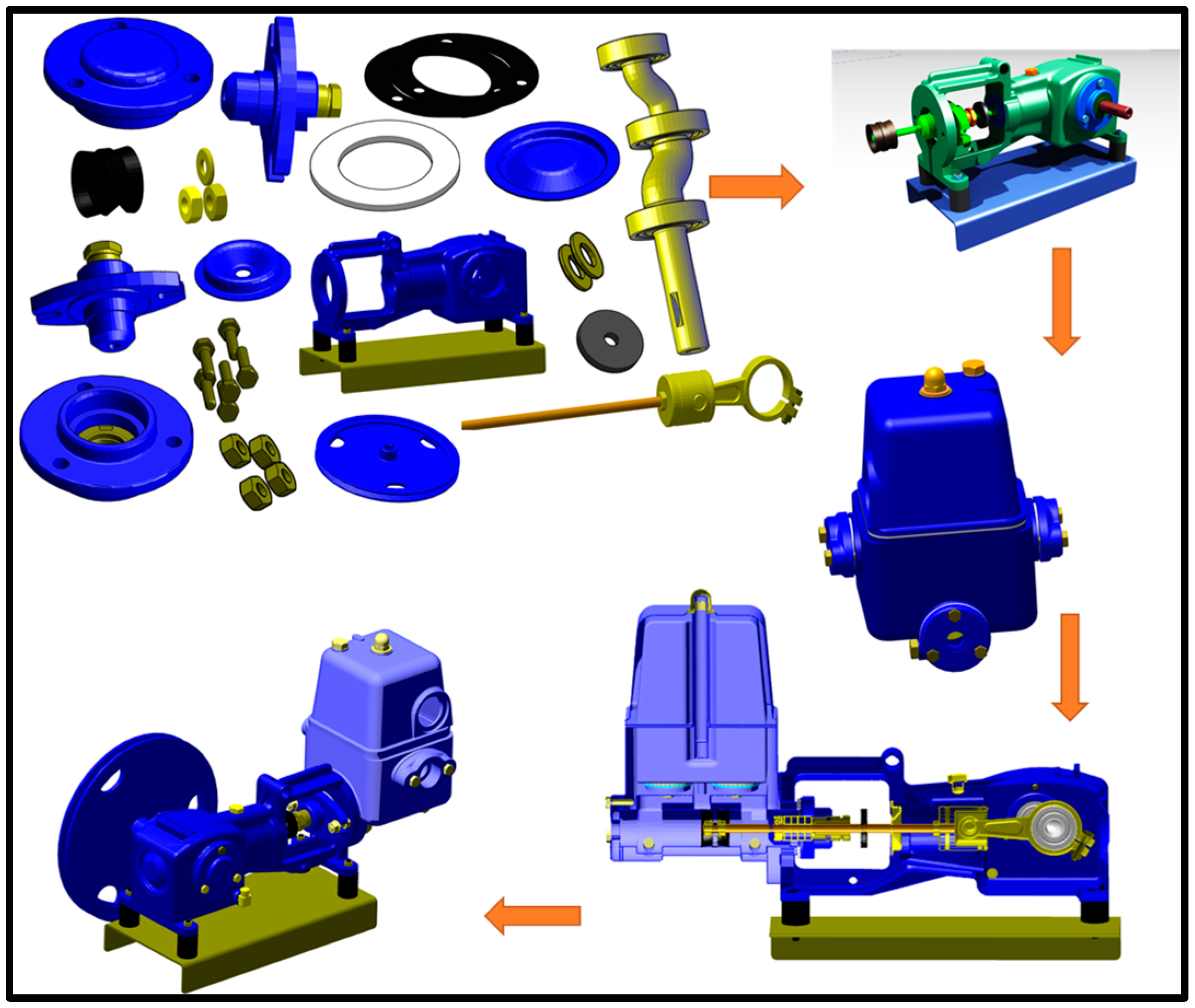

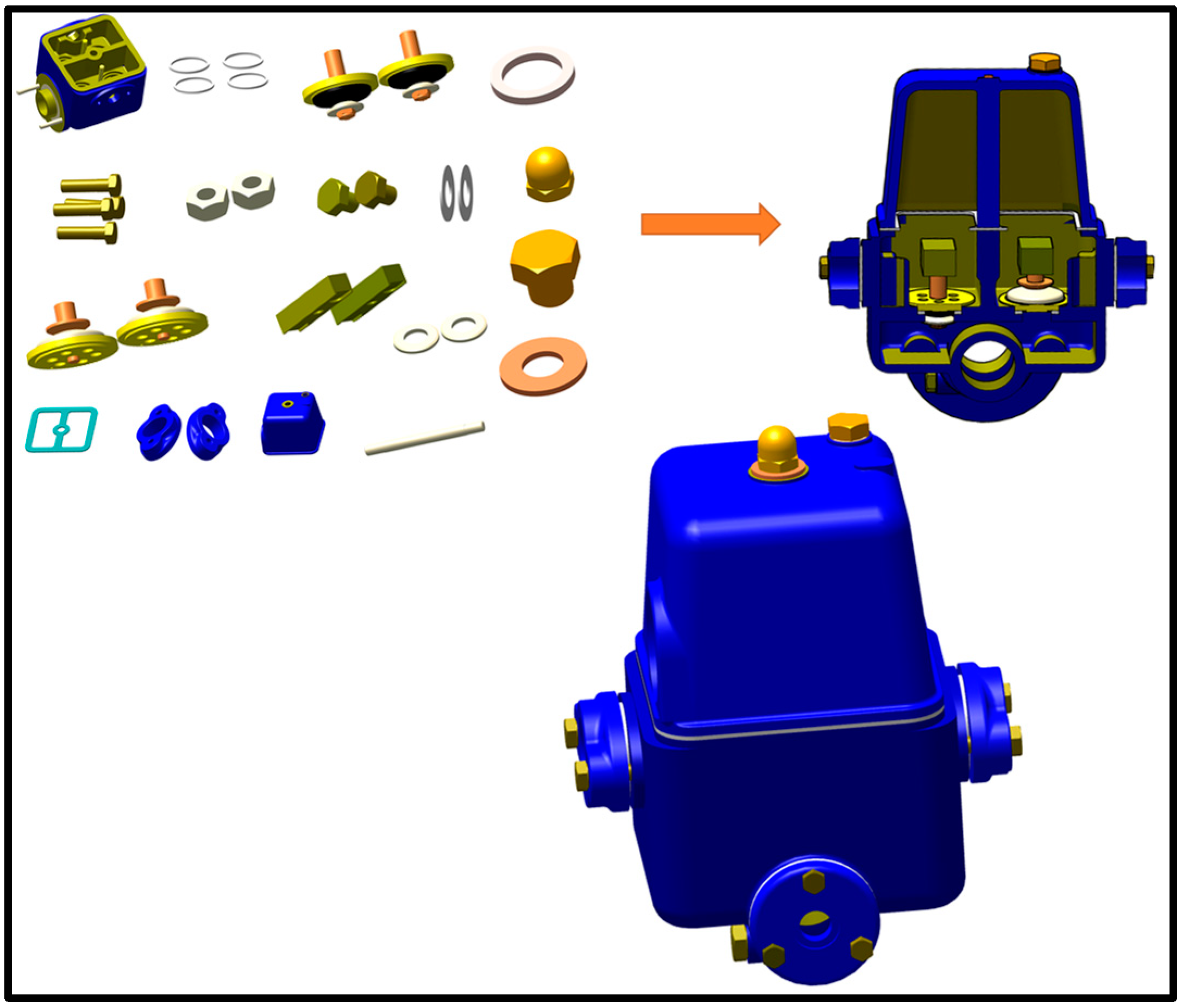

2.2.1. Maintenance Tasks

2.2.2. Instruction Methods

2.3. Experimental Design

2.4. Response Variables

2.4.1. EMG Responses

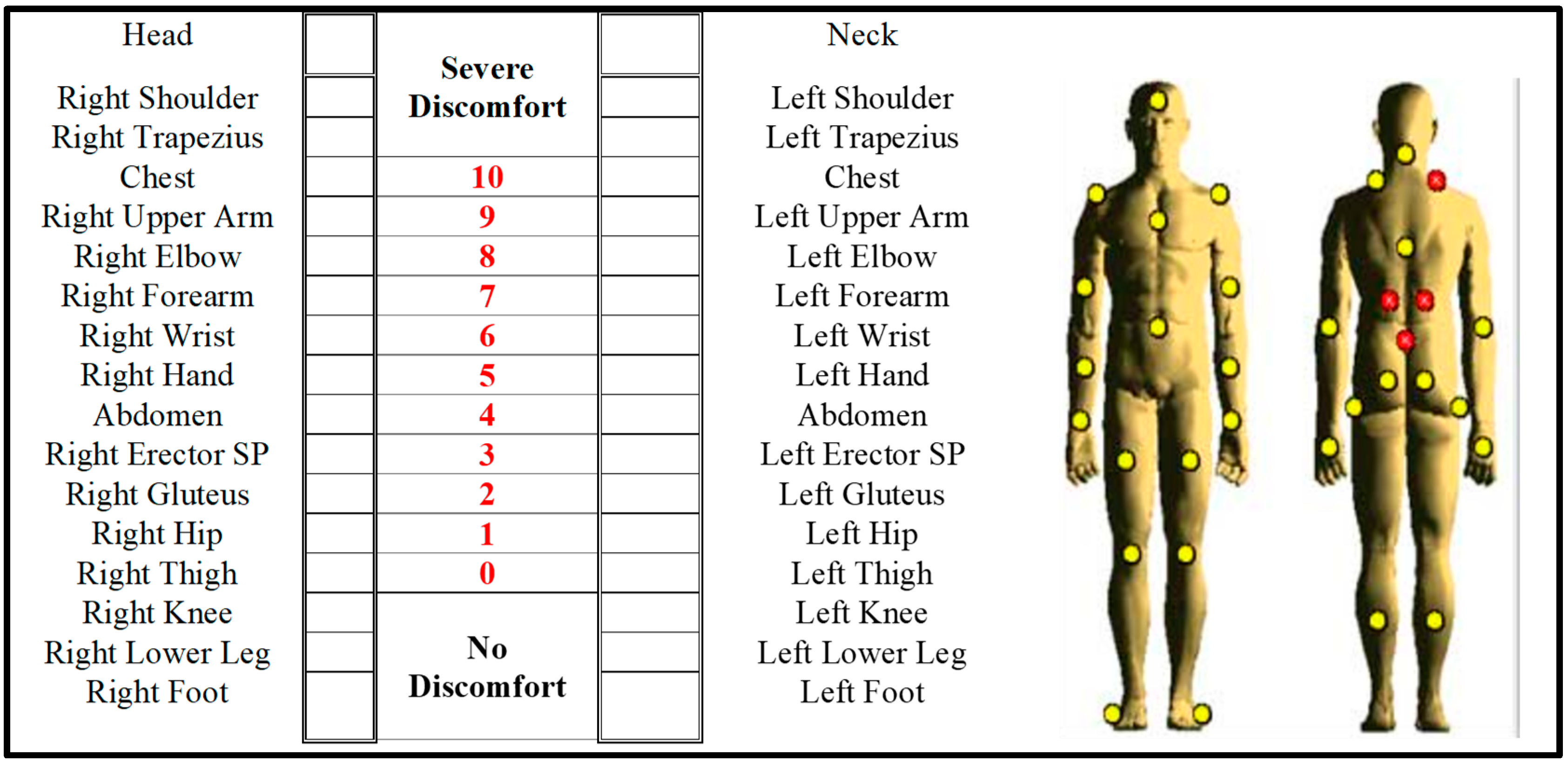

2.4.2. Body Discomfort Ratings

2.4.3. Mental Effort and Perceived Exertion Ratings

2.5. Experimental Procedure

2.6. Statistical Analysis

3. Results

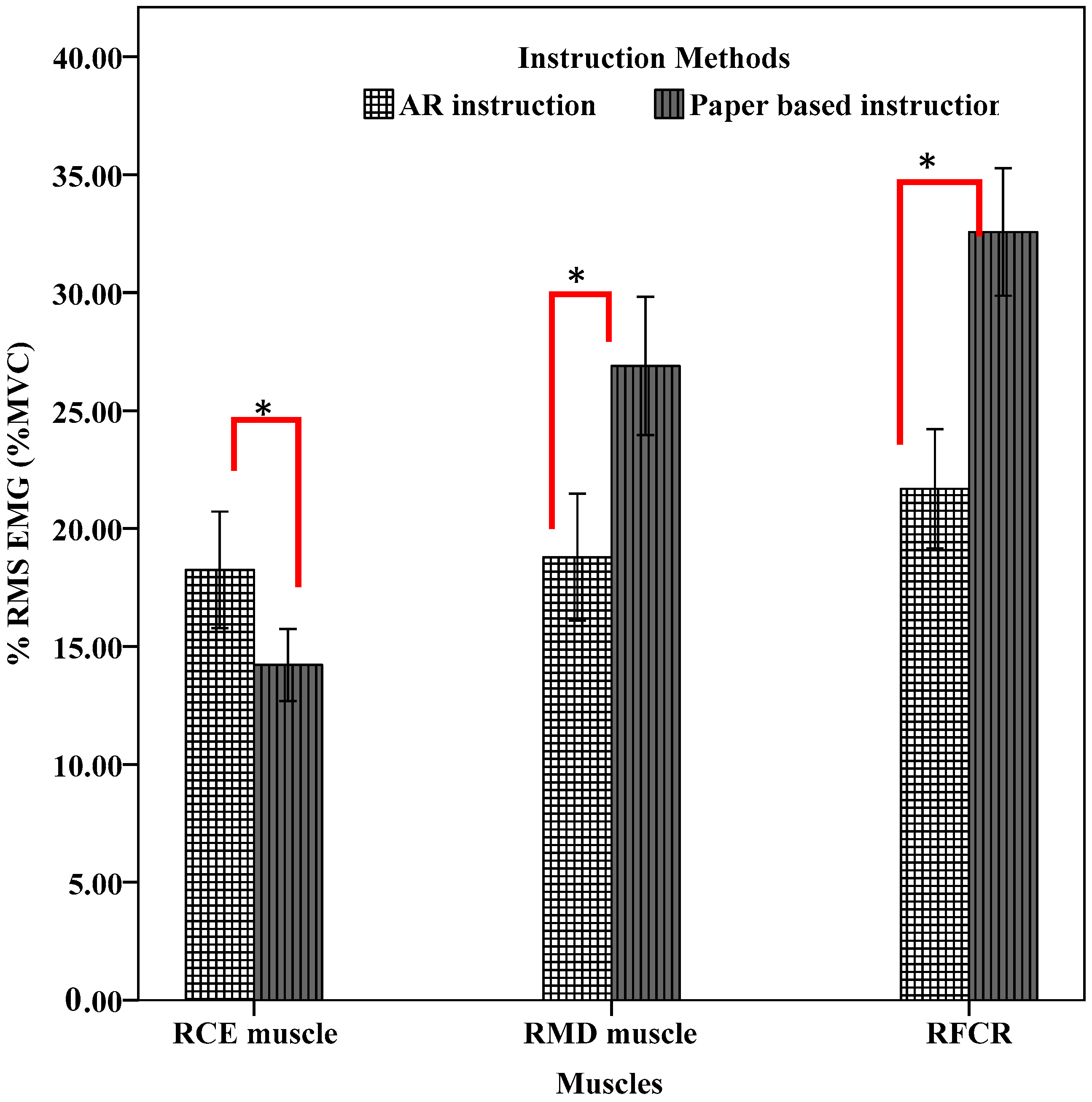

3.1. EMG Response

3.2. Body Discomfort Ratings

3.3. Perceived Exertion and Mental Workload

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Variable | Paper-Based, High Difficulty, Mean (SD) | Paper-Based, Low Difficulty, Mean (SD) | AR-Based, High Difficulty, Mean (SD) | AR-Based, Low Difficulty, Mean (SD) | Instruction, p (η²) | Complexity, p (η²) | Interaction, p (η²) |
|---|---|---|---|---|---|---|---|
| RSPL | 12.76 (2.36) | 9.86 (2.57) | 8.50 (2.88) | 7.21 (2.26) | 0.00 (0.36) | 0.00 (0.63) | 0.015 (0.21) |
| LSPL | 12.58 (4.53) | 9.07 (4.07) | 7.79 (1.48) | 6.93 (1.59) | 0.00 (0.30) | 0.00 (0.31) | 0.04 (0.14) |
| RCE | 15.50 (3.94) | 12.92 (3.61) | 19.36 (5.96) | 17.14 (6.78) | 0.04 (0.15) | 0.00 (0.369) | 0.77 (0.00) |
| RUT | 15.36 (3.56) | 10.64 (2.68) | 16.85 (4.82) | 16.36 (5.02) | 0.01 (0.21) | 0.00 (0.31) | 0.01 (0.23) |
| RMD | 29.57 (5.26) | 24.21 (8.69) | 21.57 (6.17) | 16.25 (6.69) | 0.00 (0.31) | 0.00 (0.54) | 0.92 (0.00) |
| RFCR | 35.53 (7.39) | 30.14 (5.82) | 24.71 (5.94) | 18.64 (5.76) | 0.00 (0.51) | 0.00 (0.51) | 0.57 (0.01) |
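The interaction column of the EMG table can be made more concrete by working through the 2 × 2 cell means directly. The sketch below is descriptive arithmetic on the published means only: for each muscle it takes the high-minus-low complexity difference under each instruction method and subtracts one from the other (the interaction contrast). It does not reproduce the ANOVA, which also depends on within-subject variability that the table does not report. The names `CELL_MEANS` and `simple_effects` are hypothetical helpers introduced for illustration.

```python
# Cell means copied from the EMG table, ordered as
# (paper_high, paper_low, ar_high, ar_low). Hypothetical container name.
CELL_MEANS = {
    "RSPL": (12.76, 9.86, 8.50, 7.21),
    "LSPL": (12.58, 9.07, 7.79, 6.93),
    "RCE": (15.50, 12.92, 19.36, 17.14),
    "RUT": (15.36, 10.64, 16.85, 16.36),
    "RMD": (29.57, 24.21, 21.57, 16.25),
    "RFCR": (35.53, 30.14, 24.71, 18.64),
}


def simple_effects(paper_high, paper_low, ar_high, ar_low):
    """Return the high-minus-low complexity difference within each
    instruction method, plus their difference (the 2 x 2 interaction contrast)."""
    paper_diff = paper_high - paper_low
    ar_diff = ar_high - ar_low
    return paper_diff, ar_diff, paper_diff - ar_diff


if __name__ == "__main__":
    for muscle, means in CELL_MEANS.items():
        paper_diff, ar_diff, contrast = simple_effects(*means)
        print(f"{muscle}: paper {paper_diff:+.2f}, AR {ar_diff:+.2f}, contrast {contrast:+.2f}")
```

Descriptively, the three largest contrasts (RUT 4.23, LSPL 2.65, RSPL 1.61) fall in the rows whose interaction p-value is below 0.05, while RCE, RMD, and RFCR have contrasts smaller than 0.7 in magnitude and non-significant interactions. Whether a given contrast reaches significance still depends on the error variance, so this is a reading aid rather than a test.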

| Body Part | Instruction Method: Wilcoxon Signed-Rank Test (Z, p) | Task Complexity: Mann–Whitney U Test (U₁ vs. U₂, Z₁ vs. Z₂, p₁ vs. p₂) |
|---|---|---|
| Overall body | −4.183, 0.0001 | 70.0 vs. 32.5, −1.308 vs. −3.521, 0.191 vs. 0.0001 |
| Head | −2.529, 0.011 | 80.0 vs. 42.0, −0.927 vs. −3.243, 0.354 vs. 0.009 |
| Neck | −1.944, 0.05 | 79.0 vs. 41.0, −0.951 vs. −2.987, 0.342 vs. 0.003 |
| Right and left shoulder | −2.256, 0.024; −2.514, 0.012 | 86 vs. 33, −0.568 vs. −3.2, 0.57 vs. 0.001; 97.5 vs. 49, −0.026 vs. −2.975, 0.98 vs. 0.003 |
| Right and left trapezius | −3.942, 0.0001; −2.392, 0.017 | 73 vs. 75, −1.172 vs. −1.208, 0.241 vs. 0.227; 78 vs. 56, −1.046 vs. −2.696, 0.296 vs. 0.007 |
| Right and left upper arm | −2.884, 0.004; −2.725, 0.006 | 86 vs. 95.5, −0.58 vs. −0.137, 0.562 vs. 0.891; 96.5 vs. 93.5, −0.74 vs. −0.254, 0.941 vs. 0.800 |
| Right and left elbow | −2.380, 0.017; −2.373, 0.018 | 76 vs. 93, −1.109 vs. −0.305, 0.268 vs. 0.761; 84 vs. 63, −0.756 vs. −2.423, 0.450 vs. 0.015 |
| Right and left forearm | −2.825, 0.005; −2.288, 0.022 | 63 vs. 64, −1.706 vs. −1.974, 0.088 vs. 0.048; 62 vs. 70, −1.863 vs. −2.121, 0.062 vs. 0.034 |
| Right and left wrist | −2.741, 0.006; −2.224, 0.026 | 49.5 vs. 65.5, −2.299 vs. −1.762, 0.022 vs. 0.78; 57 vs. 63, −2.160 vs. −2.415, 0.031 vs. 0.016 |
| Right and left hand | −3.210, 0.001; −3.017, 0.003 | 73 vs. 42, −1.245 vs. −3.266, 0.213 vs. 0.001; 64.5 vs. 70, −1.759 vs. −2.115, 0.079 vs. 0.034 |
| Right and left erector spinae | −2.980, 0.003; −2.461, 0.014 | 69 vs. 94, −1.362 vs. −0.205, 0.173 vs. 0.837; 85 vs. 92, −0.616 vs. −0.311, 0.538 vs. 0.756 |
| Right and left hip | −2.546, 0.011; −2.530, 0.011 | 51.5 vs. 70, −2.586 vs. −2.115, 0.010 vs. 0.034; 66 vs. 70, −1.934 vs. −2.115, 0.053 vs. 0.034 |
| Right and left thigh | −2.714, 0.007; −2.53, 0.011 | 67 vs. 84, −1.795 vs. −1.441, 0.073 vs. 0.15; 68 vs. 77, −1.742 vs. −1.80, 0.082 vs. 0.072 |
| Right and left knee | −2.701, 0.007; −2.714, 0.007 | 54 vs. 91, −2.368 vs. −1.00, 0.018 vs. 0.317; 58 vs. 91, −2.152 vs. −1.00, 0.031 vs. 0.317 |
| Right and left leg | −2.63, 0.009; −1.913, 0.056 | 60 vs. 63, −2.12 vs. −2.423, 0.034 vs. 0.015; 71 vs. 77, −1.563 vs. −1.8, 0.118 vs. 0.072 |
| Right and left foot | −2.598, 0.009; −1.768, 0.077 | 68 vs. 63, −1.742 vs. −2.423, 0.082 vs. 0.015; 69 vs. 63, −1.562 vs. −2.412, 0.118 vs. 0.016 |
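Both tests in the discomfort table report a Z statistic next to each p-value. Assuming these are the usual large-sample normal approximations (an assumption; the table does not say whether exact or asymptotic p-values were used), each two-tailed p can be recovered as p = 2(1 − Φ(|Z|)), which the sketch below evaluates through `math.erfc` for numerical accuracy. The function name `two_tailed_p` is a hypothetical helper, and the (Z, p) pairs are copied from the table.

```python
import math


def two_tailed_p(z: float) -> float:
    """Two-tailed p-value for a standard-normal statistic z.

    Uses 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    """
    return math.erfc(abs(z) / math.sqrt(2.0))


# (Z, reported p) pairs copied from the discomfort table.
ROWS = {
    "Head, Wilcoxon": (-2.529, 0.011),
    "Neck, Wilcoxon": (-1.944, 0.05),
    "Overall body, first Mann-Whitney value": (-1.308, 0.191),
    "Right wrist, second Mann-Whitney value": (-1.762, 0.78),
}

if __name__ == "__main__":
    for label, (z, reported) in ROWS.items():
        print(f"{label}: Z = {z:+.3f}, recomputed p = {two_tailed_p(z):.3f}, reported p = {reported}")
```

Under this approximation the head, neck, and overall-body entries agree with the table to the reported precision, while the right-wrist value Z = −1.762 corresponds to p ≈ 0.078. Small-sample exact tests can differ slightly from these asymptotic values.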

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alhaag, M.H.; Alessa, F.M.; Al-harkan, I.M.; Nasr, M.M.; Ramadan, M.Z.; AlSaleem, S.S. Effects of Industrial Maintenance Task Complexity on Neck and Shoulder Muscle Activity During Augmented Reality Interactions. Electronics 2024, 13, 4637. https://doi.org/10.3390/electronics13234637
