Abstract

Backdoor attacks aim to implant hidden backdoors into Deep Neural Networks (DNNs) so that the victim models perform well on clean images, whereas their predictions are maliciously changed on poisoned images. However, most existing backdoor attacks lack the invisibility and robustness required for real-world applications, especially the ability to resist image compression techniques such as JPEG and WEBP. To address these issues, in this paper, we propose a Backdoor Attack Method based on Trigger Generation (BATG). Specifically, a deep convolutional generative network is used as the trigger generation model to generate effective trigger images, and an Invertible Neural Network (INN) is used as the trigger injection model to embed the generated trigger images into clean images to create poisoned images. Furthermore, a noise layer is used to simulate image compression attacks for adversarial training, enhancing robustness against real-world image compression. Comprehensive experiments on benchmark datasets demonstrate the effectiveness, invisibility, and robustness of the proposed BATG.

1. Introduction

In recent years, Deep Neural Networks (DNNs) have been widely and successfully applied in an increasing variety of areas [1,2,3]. Training DNNs requires large amounts of data storage and substantial computational power. To reduce this overhead, users and researchers often rely on third-party data and third-party training servers, but they then no longer have full control over the training process, which introduces security threats. Among these threats, backdoor attacks have recently attracted widespread attention. Backdoor attacks maliciously manipulate the predictions of the attacked model through data poisoning. Specifically, the attacker embeds a specified backdoor trigger into clean images to create poisoned images and replaces their labels with a target label. Consequently, the victim model performs normally on clean images but behaves incorrectly on poisoned images containing the trigger. This threat can therefore cause serious damage to many mission-critical applications, such as image classification [4], speech recognition [5], and autonomous driving [6].

In general, backdoor attacks should possess two properties: invisibility and robustness. On the one hand, invisibility is required for launching an imperceptible attack: visible backdoor attacks are often too obvious to escape detection by human observers. On the other hand, robustness is required because poisoned images are often compressed during data transformation and preprocessing, and most existing backdoor attacks are not robust to such compression, which can corrupt the triggers embedded in the poisoned images. To address both invisibility and robustness, we propose a Backdoor Attack method based on Trigger Generation (BATG). BATG contains a trigger injection model, a trigger generation model, a surrogate victim model, and a noise layer. Specifically, we first use the trigger generation model to generate effective trigger images and then use the trigger injection model to embed the generated trigger images into clean images to create poisoned images. Both the clean images and the poisoned images are used to train the surrogate victim model. The trigger injection model is designed to address invisibility, the trigger generation model and the noise layer are designed to address robustness, and the surrogate victim model acts as a discriminator that evaluates the effectiveness of the generated trigger. Our purpose in proposing BATG is to expose vulnerabilities in DNNs and thereby guide future efforts to enhance their robustness.

The main contributions of our work can be summarized as follows:

- A novel Backdoor Attack method based on Trigger Generation called BATG is proposed, wherein the trigger can be automatically generated for launching an effective and invisible backdoor attack.

- A noise layer is applied in the proposed BATG to further enhance the robustness against real-world image compression.

- Comprehensive experiments are conducted. The results show that the proposed BATG achieves strong attack performance under different image compression methods.

The rest of this paper is organized as follows. In Section 2, we review related work on backdoor attacks, backdoor defenses, and image steganography. In Section 3, we introduce the proposed BATG in detail. In Section 4, we conduct extensive experiments to evaluate the performance of the proposed BATG. In Section 5, we draw conclusions.

2. Related Works

2.1. Backdoor Attack

Backdoor attacks against Deep Neural Networks have been intensively investigated. Gu et al. [7] proposed BadNets, the first backdoor attack method to stamp a trigger onto clean images to create poisoned images. Visible backdoor attacks, which use obvious triggers, can be easily detected by human observers; as a result, many researchers have started to study invisible backdoor attacks. Chen et al. [8] first explored the necessary conditions for invisible backdoor attacks and used small-amplitude random noise, superimposed on the pixel values of clean images, as a backdoor trigger to generate poisoned images. Zhong et al. [9] proposed generating backdoor triggers with two adversarial attacks while minimizing the perturbation distance to ensure the invisibility of the trigger. Li et al. [10] proposed using a perturbation increment obtained under a regularized distance constraint as the backdoor trigger. Nguyen and Tran proposed WaNet [11], which creates poisoned images through subtle warping-based deformations. Turner et al. [12] proposed an effective backdoor attack method for the clean-label setting. Inspired by image steganography, Li et al. [13] proposed ISSBA, which uses an encoder–decoder network to encode attacker-specified strings into clean images as backdoor triggers. Zhang et al. [14] proposed PoisonInk, which improves backdoor attack performance under data transformations by filling the structure of clean images with an attacker-specified color. Jiang et al. [15] proposed a backdoor attack that resists common preprocessing-based defenses by applying a uniform color space shift as the trigger. Yu et al. [16] proposed a frequency-based trigger injection model that can successfully inject multiple backdoors with corresponding triggers into a deep image compression model.

2.2. Backdoor Defenses

As backdoor attack techniques continue to evolve, researchers have also proposed defenses against them. Liu et al. [17] proposed Fine-Pruning, which eliminates possible backdoor neurons according to their average activation values. Wang et al. [18] proposed Neural Cleanse, which prunes the victim model’s neurons based on the difference in average neuron activations between clean and poisoned images. Selvaraju et al. [19] proposed Grad-CAM, which produces heat maps that can be used to detect the areas of an image where backdoor triggers are likely to be present. Gao et al. [20] proposed STRIP, which filters poisoned images by superimposing various image patterns onto potentially poisoned images. Tran et al. [21] proposed Spectral Signature, which distinguishes clean images from poisoned images using the covariance of latent space features. Xue et al. [22] systematically revealed that most existing backdoor attacks are susceptible to image compression.

2.3. Image Steganography

Deep image steganography uses advanced machine learning models, especially DNNs, to embed secret information within cover images while ensuring that the alterations are imperceptible to human eyes and resistant to detection algorithms. The original image before the secret information is hidden is called the cover image, and the image after hiding is called the stego image. Baluja [23] proposed the first deep image steganographic method for hiding images within images in the pixel domain. Wu et al. [24] proposed StegNet, which controls the noise distribution in stego images by designing a new loss function and minimizing the weighted loss. Duan et al. [25] leveraged the U-Net architecture [26] to improve the quality of both the stego images and the extracted secret images. Zhu et al. [27] proposed HiDDeN, which uses a noise layer for adversarial training to enhance the robustness of steganography. Tang et al. [28] proposed the SPAR-RL framework, a more secure image steganography strategy based on deep reinforcement learning. Tang et al. [29] proposed JEC-RL, a scheme that applies image steganography to JPEG images using an embedding action sampling mechanism. Ying et al. [30] proposed a method for robustly hiding images within images based on Generative Adversarial Networks (GANs) [31]. Jing et al. [32] proposed HiNet, which uses an INN [33,34] to hide secret images in the frequency domain of cover images rather than in the pixel domain. Tang et al. [35] proposed JoCoP, a joint cost learning framework for batch steganography. Cui et al. [36] introduced meta-learning into deep image steganography and proposed the INN-based MSM-DIH framework to enhance the anti-steganalysis capability of steganographic models.

3. Methodology

In this section, we discuss the objectives and necessary conditions for launching invisible and robust backdoor attacks. Following this discussion, we introduce the proposed BATG in detail.

3.1. Attack Requirements and Goals

In general, backdoor attackers aim to implant hidden backdoors into DNNs through data poisoning. In this work, we assume that the attacker can only contaminate a portion of the training data [37] and has no access to other critical training elements (e.g., loss functions, training schedules, and model design). The attacker can inject poisoned data only during the training phase. During the inference phase, the attacker queries the victim model with poisoned images to activate the malicious behavior, without needing to interfere with any other images.

As an invisible and robust backdoor attack, the proposed BATG should achieve the following three main goals:

- Effectiveness: the poisoned images containing the specified trigger image should be misclassified into the target label with a high probability.

- Invisibility: the poisoned images should appear identical to the original clean images in order to avoid detection by human observers during the inference phase.

- Robustness: the attack should still be effective when the poisoned images are processed by existing preprocessing operations, especially JPEG and WEBP image compression.

3.2. The Proposed BATG

First of all, we provide an overview of the proposed BATG. After that, we describe its components in detail. Finally, we present the loss functions used to train each model.

3.2.1. The Overview of BATG

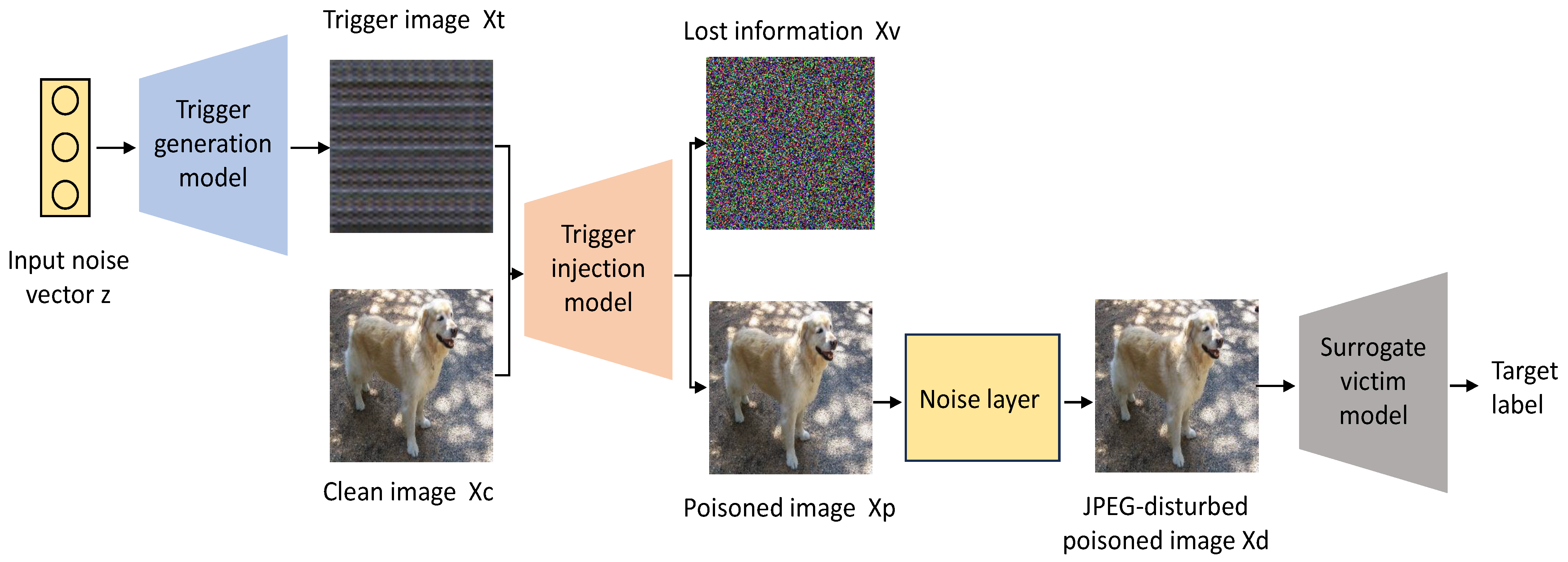

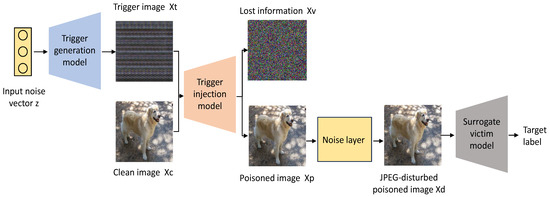

The schematic diagram of BATG is shown in Figure 1. The BATG framework comprises three models and a noise layer, namely the trigger generation model, the trigger injection model, the surrogate victim model, and the noise layer JPEG_Mask [27].

Figure 1.

The pipeline of the proposed BATG. It contains a trigger injection model, a trigger generation model, a surrogate victim model, and a noise layer.

The trigger generation model is a deep convolutional network for image generation. Its purpose is to generate effective trigger images: it takes an input noise vector z and produces a trigger image. The trigger injection model essentially performs image hiding. Its purpose is to embed trigger images into clean images in an invisible manner: it receives the generated trigger images and clean images, embeds the former into the latter to create poisoned images, and additionally outputs the lost information. The poisoned images are processed by the noise layer JPEG_Mask to obtain JPEG-distorted poisoned images. The role of the surrogate victim model is to detect any trigger patterns that remain in the JPEG-distorted poisoned images: it takes these images as input and returns probability vectors P representing its predictions.

In the noise layer, the JPEG_Mask is an approximation of real-world JPEG image compression, omitting the quantization step and instead directly setting some high-frequency coefficients to zero. Specifically, the JPEG_Mask employs a fixed mask that only keeps 25 low-frequency DCT coefficients in the luminance (Y) channel and 9 low-frequency coefficients in the chrominance (U, V) channels of the poisoned images. The noise layer is differentiable, allowing for the backpropagation of gradients and facilitating model training.
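The masking step can be illustrated on a single 8 × 8 block. The sketch below is a minimal pure-Python illustration, not the BATG implementation. It assumes the standard 8 × 8 JPEG block size, an orthonormal 2D DCT, and that the 25 (Y) and 9 (U, V) retained coefficients are the top-left 5 × 5 and 3 × 3 squares of each block, as in HiDDeN's JPEG-Mask. The RGB-to-YUV conversion is omitted, and all helper names are hypothetical.

```python
import math

N = 8  # assumed JPEG block size


def _c(k):
    """Orthonormal DCT-II scaling factor."""
    return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)


def dct2(block):
    """2D orthonormal DCT-II of an 8x8 block given as a list of lists."""
    return [[_c(u) * _c(v) * sum(
                block[x][y]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    """Inverse of dct2 (orthonormal DCT-III)."""
    return [[sum(
                _c(u) * _c(v) * coeffs[u][v]
                * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                * math.cos((2 * y + 1) * v * math.pi / (2 * N))
                for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def jpeg_mask_block(block, keep):
    """Keep only the top-left keep x keep DCT coefficients; zero the rest."""
    coeffs = dct2(block)
    masked = [[coeffs[u][v] if (u < keep and v < keep) else 0.0
               for v in range(N)] for u in range(N)]
    return idct2(masked)


# keep=5 -> 25 coefficients (Y channel); keep=3 -> 9 coefficients (U, V channels)
KEEP_Y, KEEP_UV = 5, 3
```

A flat block contains only the DC coefficient and passes through unchanged, whereas a block made purely of the highest-frequency basis function is erased. In a training pipeline the same operation would be written with tensor operations (for example, the DCT as a matrix product) so that gradients can flow through the mask, which is what makes the noise layer differentiable.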

3.2.2. The Structure of Trigger Generation Model

In the proposed BATG, we utilize the generator from a Deep Convolutional Generative Adversarial Network (DCGAN) [38] as the trigger generation model. The architecture of the trigger generation model is designed to progressively increase the spatial resolution of the generated image through a series of layers. It starts with an input noise vector z, which is sampled from a standard Gaussian distribution. The input noise vector z is then fed into a fully connected layer to obtain a low-resolution feature map. This initial feature map is subsequently passed through a sequence of transposed convolution layers. Each transposed convolution layer applies a filter that expands the spatial dimensions of the feature map. These layers are often paired with batch normalization [39] to stabilize the training process and improve the quality of the generated trigger image. Activation functions, including ReLU (Rectified Linear Unit) [40] for intermediate layers and Sigmoid for the final layer, are used to introduce nonlinearities and ensure that the output is scaled appropriately. The entire trigger generation process can be represented as

where is defined as the trigger generation process and denotes the parameters of the trigger generation model.
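To make the resolution progression concrete, the sketch below computes feature-map sizes with the output-size formula of a 2D transposed convolution as documented for PyTorch's `ConvTranspose2d`. The starting 7 × 7 feature map, kernel size 4, stride 2, padding 1, and five upsampling layers are illustrative assumptions chosen so that the output matches the 224 × 224 images used in the experiments; the paper does not state these values.

```python
def conv_transpose_out(size, kernel=4, stride=2, padding=1,
                       output_padding=0, dilation=1):
    """Spatial output size of a 2D transposed convolution (PyTorch formula)."""
    return ((size - 1) * stride - 2 * padding
            + dilation * (kernel - 1) + output_padding + 1)


def generator_resolutions(start=7, num_layers=5):
    """Feature-map sizes after the fully connected layer and each upsampling layer.

    start and num_layers are hypothetical; with kernel 4, stride 2, and
    padding 1, every transposed convolution exactly doubles the spatial size.
    """
    sizes = [start]
    for _ in range(num_layers):
        sizes.append(conv_transpose_out(sizes[-1]))
    return sizes


print(generator_resolutions())  # [7, 14, 28, 56, 112, 224]
```

In this hypothetical configuration, the fully connected layer would reshape z into a 7 × 7 feature map with many channels, and the last transposed convolution would output 3 channels passed through Sigmoid so that pixel values lie in [0, 1].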

3.2.3. The Structure of Trigger Injection Model

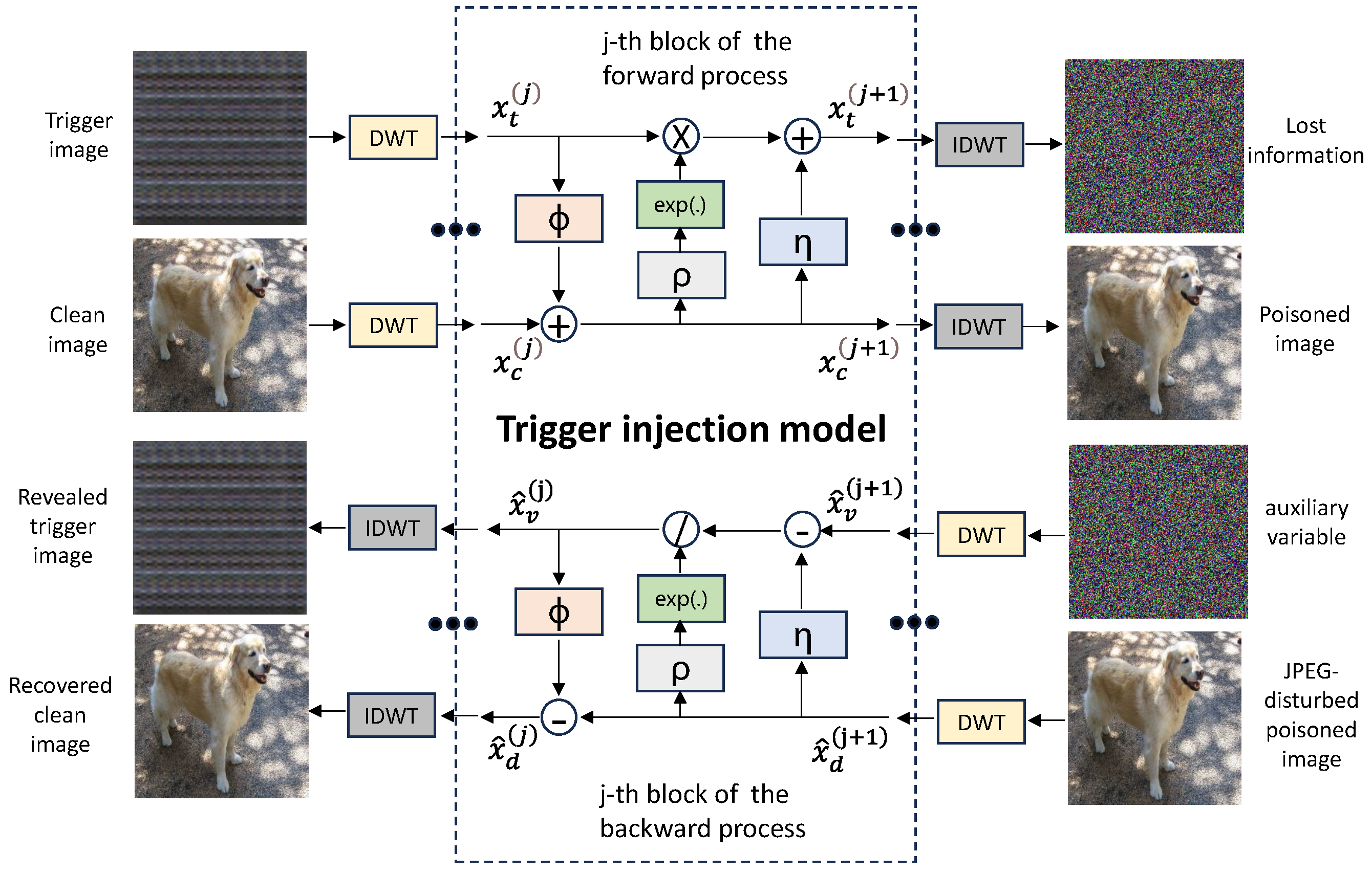

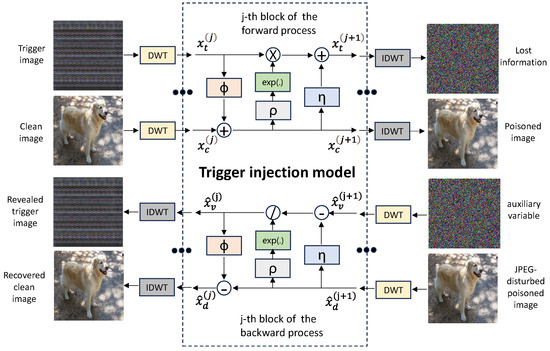

In the proposed BATG, we utilize an INN as the trigger injection model. The schematic diagram of the trigger injection model is shown in Figure 2. The architecture of the trigger injection model consists of 16 blocks designed to facilitate efficient and robust image embedding and revealing processes. These blocks typically include convolutional layers for feature extraction, normalization layers for maintaining stability across different inputs, and nonlinear activation functions to introduce complexity and enable the network to learn richer representations. The combination of these components allows the INN to integrate one image into another during the forward process and accurately reveal the embedded image during the backward process, thereby ensuring high fidelity in both embedding and revealing phases. When using the INN as the trigger injection model, the input first undergoes a Discrete Wavelet Transform (DWT), and the final output is processed through an Inverse Discrete Wavelet Transform (IDWT).

Figure 2.

The schematic diagram of the trigger injection model. The same block shares parameters in the forward process and the backward process.
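The DWT/IDWT wrapping step can be sketched with a one-level 2D Haar transform, which is the wavelet used by HiNet; we assume, without confirmation from the text, that BATG uses the same wavelet. Each 2 × 2 pixel patch is mapped to one coefficient in each of four sub-bands, and the LL sub-band is the kind of low-frequency component referred to in the loss function. The function names and sub-band naming convention are hypothetical.

```python
def haar_dwt2(img):
    """One-level orthonormal 2D Haar DWT of an H x W grid (H and W even).

    Returns the sub-bands (LL, LH, HL, HH), each of size (H/2) x (W/2).
    """
    h, w = len(img), len(img[0])

    def zeros():
        return [[0.0] * (w // 2) for _ in range(h // 2)]

    ll, lh, hl, hh = zeros(), zeros(), zeros(), zeros()
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            ll[i // 2][j // 2] = (a + b + c + d) / 2  # low-frequency average
            lh[i // 2][j // 2] = (a - b + c - d) / 2  # left minus right column
            hl[i // 2][j // 2] = (a + b - c - d) / 2  # top minus bottom row
            hh[i // 2][j // 2] = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    h, w = 2 * len(ll), 2 * len(ll[0])
    img = [[0.0] * w for _ in range(h)]
    for i in range(len(ll)):
        for j in range(len(ll[0])):
            s, x, y, z = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            img[2 * i][2 * j] = (s + x + y + z) / 2
            img[2 * i][2 * j + 1] = (s - x + y - z) / 2
            img[2 * i + 1][2 * j] = (s + x - y - z) / 2
            img[2 * i + 1][2 * j + 1] = (s - x - y + z) / 2
    return img
```

Because the transform is orthonormal and exactly invertible, wrapping the INN between a DWT and an IDWT loses no information by itself; only the coupling blocks and the noise layer change the content.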

During the forward process, the trigger injection model receives the generated triggers and clean images and outputs poisoned images and lost information as

where is defined as the forward process of the trigger injection model and denotes the parameters of the trigger injection model. The poisoned images are processed by the noise layer JPEG_Mask to obtain the JPEG-distorted poisoned images as

where is defined as the process of the noise layer JPEG_Mask. During the backward process, the trigger injection model receives the JPEG-distorted poisoned images and auxiliary variable and outputs revealed trigger images and recovered clean images as

where is defined as the backward process of the trigger injection model.

In the forward process of the trigger injection model, the affine transformation of the j-th block can be formulated as

where is equal to and is equal to , with , , and indicating dense blocks [41] of the same structure and different parameters. In the backward process of the trigger injection model, the affine transformation of the j-th block can be formulated as

where is equal to and is equal to .
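Because the equations for the j-th block did not survive extraction, the sketch below illustrates the three-dense-block affine coupling used in HiNet [32], which the surrounding text appears to follow. Under this assumption, the clean-image branch is updated additively by φ, the trigger branch is scaled by exp(α(ρ(·))) and shifted by η, and α is a sigmoid rescaled to (-clamp, clamp). The elementwise functions below are hypothetical stand-ins for the dense blocks. The point of the sketch is that the backward pass reuses the same functions (shared parameters) and inverts the forward pass exactly.

```python
import math

CLAMP = 2.0  # hypothetical clamp constant for the scaling term


def alpha(s):
    """Sigmoid rescaled to (-CLAMP, CLAMP) to keep exp(alpha) bounded."""
    return CLAMP * (2.0 / (1.0 + math.exp(-s)) - 1.0)


# Hypothetical elementwise stand-ins for the three dense blocks.
def phi(v):
    return [math.tanh(x) for x in v]


def rho(v):
    return [0.5 * x for x in v]


def eta(v):
    return [0.1 * x * x for x in v]


def coupling_forward(x_clean, x_trigger):
    """Forward affine coupling: returns the updated (clean, trigger) branches."""
    y_clean = [a + b for a, b in zip(x_clean, phi(x_trigger))]
    scale = [math.exp(alpha(s)) for s in rho(y_clean)]
    y_trigger = [a * s + t for a, s, t in zip(x_trigger, scale, eta(y_clean))]
    return y_clean, y_trigger


def coupling_backward(y_clean, y_trigger):
    """Backward pass with the same functions: undoes coupling_forward exactly."""
    scale = [math.exp(alpha(s)) for s in rho(y_clean)]
    x_trigger = [(a - t) / s for a, s, t in zip(y_trigger, scale, eta(y_clean))]
    x_clean = [a - b for a, b in zip(y_clean, phi(x_trigger))]
    return x_clean, x_trigger
```

In BATG's backward process, the second branch (the lost information) is not available at reveal time; the text states that an auxiliary variable is fed in its place, so the revealed trigger and recovered clean image are learned approximations rather than the exact inversion shown here.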

3.2.4. Loss Function

In the proposed BATG, training proceeds in three stages. First, a specific image is selected as the trigger to train the trigger injection model. Next, the trained trigger injection model is used to create poisoned images, and the surrogate victim model is trained following the standard backdoor attack procedure. Finally, the parameters of both the trigger injection model and the surrogate victim model are fixed, and the trigger generation model is trained.

- For the trigger injection model, which is an INN, the training loss is formulated as where indicates the operation of extracting low-frequency sub-bands after wavelet decomposition and measures the distance. In the training of the trigger injection model, hyperparameters , , and are set to 5.0, 1.0, and 10.0, respectively.

- For the trigger generation model, the training loss is formulated as where y denotes the original label of or the target label of and P denotes their probability vector predicted by the surrogate victim model. In the training of the trigger generation model, hyperparameters and are set to 10.0 and 1.0, respectively.

4. Experiment

In this section, we conduct extensive experiments to demonstrate the attack performance of the proposed BATG. The details of the adopted experimental setups are given in Section 4.1. The attack performance of the proposed BATG is presented in Section 4.2. The visual quality of the poisoned images is displayed in Section 4.3. The ablation study conducted to investigate the impact of different factors in the proposed BATG is reported in Section 4.4. The resistance of the proposed BATG against different backdoor defense techniques is evaluated in Section 4.5. The generalization test of the proposed BATG is shown in Section 4.6.

4.1. Experimental Setups

Datasets. We use the ImageNet [42] dataset. For simplicity, we randomly select a subset containing 200 classes, with 100,000 images for training (500 images per class) and 10,000 images for testing (50 images per class). The image size is 3 × 224 × 224.
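The subset construction can be sketched as follows. This is a hypothetical helper, not the authors' script: it assumes the per-class image lists are already available as dictionaries and uses a fixed seed for reproducibility; the paper does not say how the test images were drawn.

```python
import random


def sample_subset(train_by_class, test_by_class, num_classes=200,
                  train_per_class=500, test_per_class=50, seed=0):
    """Randomly pick classes, then a fixed number of images per class.

    train_by_class / test_by_class map a class id to a list of image paths.
    Returns the chosen classes and (path, label_index) pairs for each split.
    """
    rng = random.Random(seed)
    classes = sorted(rng.sample(sorted(train_by_class), num_classes))
    train, test = [], []
    for label, cls in enumerate(classes):
        train += [(p, label) for p in rng.sample(train_by_class[cls], train_per_class)]
        test += [(p, label) for p in rng.sample(test_by_class[cls], test_per_class)]
    return classes, train, test
```

With the default arguments, the subset has 200 × 500 = 100,000 training images and 200 × 50 = 10,000 test images, matching the counts stated above.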

Victim model. To perform evaluations, we consider two popular recognition networks as victim models: ResNet-18 [1] and VGG-19 [43]. Each victim model is trained using an SGD optimizer with a momentum of 0.9 and an initial learning rate of 0.001, which is decayed to 0.0001 at epoch 15 and to 0.00001 at epoch 25.
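The learning-rate schedule amounts to a step decay by a factor of 10 at epochs 15 and 25. The helper below is a minimal sketch; we assume zero-indexed epochs and that each new rate takes effect from the stated epoch onward.

```python
def learning_rate(epoch, base_lr=1e-3, milestones=(15, 25), gamma=0.1):
    """Step decay: multiply base_lr by gamma once for every milestone reached."""
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed


for e in (0, 14, 15, 24, 25, 30):
    print(e, learning_rate(e))
```

In PyTorch, the same schedule can be obtained with `torch.optim.SGD(params, lr=0.001, momentum=0.9)` combined with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[15, 25], gamma=0.1)`, calling `scheduler.step()` once per epoch.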

Backdoor attack methods. Without loss of generality, we compare the proposed BATG with the state-of-the-art robust backdoor attack method ISSBA [13]. The significant architectural differences between BATG and ISSBA are as follows:

- ISSBA utilizes a convolutional model [44] as the trigger injection model, whereas BATG utilizes an INN.

- ISSBA uses specified strings as triggers, while BATG uses generated trigger images.

Computational overhead. For the proposed BATG, the trigger injection model was trained for 40 epochs, and the trigger generation model was trained for 60 epochs. Training the trigger injection model took ten days, while training the trigger generation model took three days. The experiments were implemented in PyTorch 1.3.1 and run on an NVIDIA Tesla V100 GPU. When training the trigger injection model, the batch size was set to eight, which occupied 22 GB of GPU memory. When training the trigger generation model, the batch size was set to four, which occupied 17 GB of GPU memory.

Metrics. We use Clean Data Accuracy (CDA) and Attack Success Rate (ASR) to evaluate the influence of backdoor attacks on the original task and the effectiveness of backdoor attacks, respectively. Specifically, CDA is the performance on the clean test set, i.e., the ratio of trigger-free test images that are correctly predicted as their ground-truth labels, and ASR is the performance on the poisoned test set, i.e., the ratio of poisoned images that are classified as the target label. For invisibility evaluation, we compute the PSNR [45] between clean and poisoned images.
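Both metrics are simple ratios over the respective test sets. The functions below are a minimal sketch that operates on lists of predicted labels; the function names are hypothetical.

```python
def clean_data_accuracy(preds, labels):
    """CDA: share of clean test images predicted as their ground-truth label."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def attack_success_rate(poisoned_preds, target_label):
    """ASR: share of poisoned test images classified as the target label."""
    return sum(p == target_label for p in poisoned_preds) / len(poisoned_preds)


print(clean_data_accuracy([1, 2, 3, 4], [1, 2, 0, 4]))  # 0.75
print(attack_success_rate([7, 7, 3, 7], target_label=7))  # 0.75
```

Some evaluations additionally exclude test images whose ground-truth label already equals the target label when computing ASR; the text does not say whether this is done for BATG.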

4.2. Attack Performance Under Image Compression

In this part, the attack performance of BATG and ISSBA against different victim models on the ImageNet dataset is evaluated under different image compression methods. The default setup indicates that clean and poisoned images are not processed by any image compression operation. The JPEG and WEBP setups indicate that clean and poisoned images are processed by the corresponding image compression. JPEG is an image format that uses lossy compression to reduce file sizes; it is commonly used for photographs and web images and balances quality and file size effectively. WEBP is an image format developed by Google that supports both lossy and lossless compression. It typically offers smaller file sizes than traditional formats such as JPEG and PNG while maintaining high image quality, which makes it well suited to web use.
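The JPEG and WEBP setups can be reproduced by encoding each image with a lossy codec and decoding it again. The sketch below uses Pillow's `Image.save` with its `quality` option; the quality factor of 75 is a hypothetical placeholder, since the text does not state the compression settings used.

```python
import io

from PIL import Image


def compress(img, fmt="JPEG", quality=75):
    """Round-trip an image through lossy JPEG or WEBP encoding in memory."""
    buffer = io.BytesIO()
    img.convert("RGB").save(buffer, format=fmt, quality=quality)
    buffer.seek(0)
    # convert() forces the decoded pixels to be loaded before returning
    return Image.open(buffer).convert("RGB")


clean = Image.new("RGB", (224, 224), (120, 60, 200))
jpeg_version = compress(clean, "JPEG")
webp_version = compress(clean, "WEBP")
```

WEBP encoding requires a Pillow build with libwebp support, which the standard binary wheels typically include.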

Experimental results targeting the victim model ResNet-18 on the ImageNet dataset with poisoning rates of 10% and 1% are given in Table 1 and Table 2. Experimental results targeting the victim model VGG-19 on the ImageNet dataset with poisoning rates of 10% and 1% are given in Table 3 and Table 4.

Table 1.

The attack performance of different backdoor attack methods against victim model ResNet-18 on the ImageNet dataset with poisoning rate of 10%. The bold number indicates the best value within the column.

Table 2.

The attack performance of different backdoor attack methods against victim model ResNet-18 on the ImageNet dataset with poisoning rate of 1%. The bold number indicates the best value within the column.

Table 3.

The attack performance of different backdoor attack methods against victim model VGG-19 on the ImageNet dataset with poisoning rate of 10%. The bold number indicates the best value within the column.

Table 4.

The attack performance of different backdoor attack methods against victim model VGG-19 on the ImageNet dataset with poisoning rate of 1%. The bold number indicates the best value within the column.

- The proposed BATG outperforms the state-of-the-art robust backdoor attack method ISSBA, as shown in Table 1, Table 2, Table 3 and Table 4. When targeting the victim model ResNet-18 with a poisoning rate of 10%, BATG improves the ASR by 0.60%, 0.30%, and 0.23% for the default, JPEG, and WEBP setups, respectively. With a poisoning rate of 1%, the improvement in the ASR is 6.16%, 4.58%, and 0.28% for the default, JPEG, and WEBP setups, respectively. These results demonstrate that the proposed BATG is an effective backdoor attack that is robust to different image compression methods.

- The proposed BATG is also effective when targeting another victim model, VGG-19, as shown in Table 3 and Table 4, even though the surrogate victim model is fixed as ResNet-18. When targeting VGG-19 with a poisoning rate of 10%, the ASR reaches 99.89%, 99.01%, and 98.21% for the default, JPEG, and WEBP setups, respectively. These results demonstrate that the trigger images generated by the trigger generation model are effective and generalize across victim models.

- The proposed BATG still performs well with a very low poisoning rate (1%), as shown in Table 2 and Table 4, even though the trigger generation model was trained with a high poisoning rate (10%). When targeting ResNet-18 with a poisoning rate of 1%, the ASR reaches 99.69%, 98.21%, and 96.06% for the default, JPEG, and WEBP setups, respectively. These results demonstrate that the proposed BATG remains effective at a low poisoning rate.

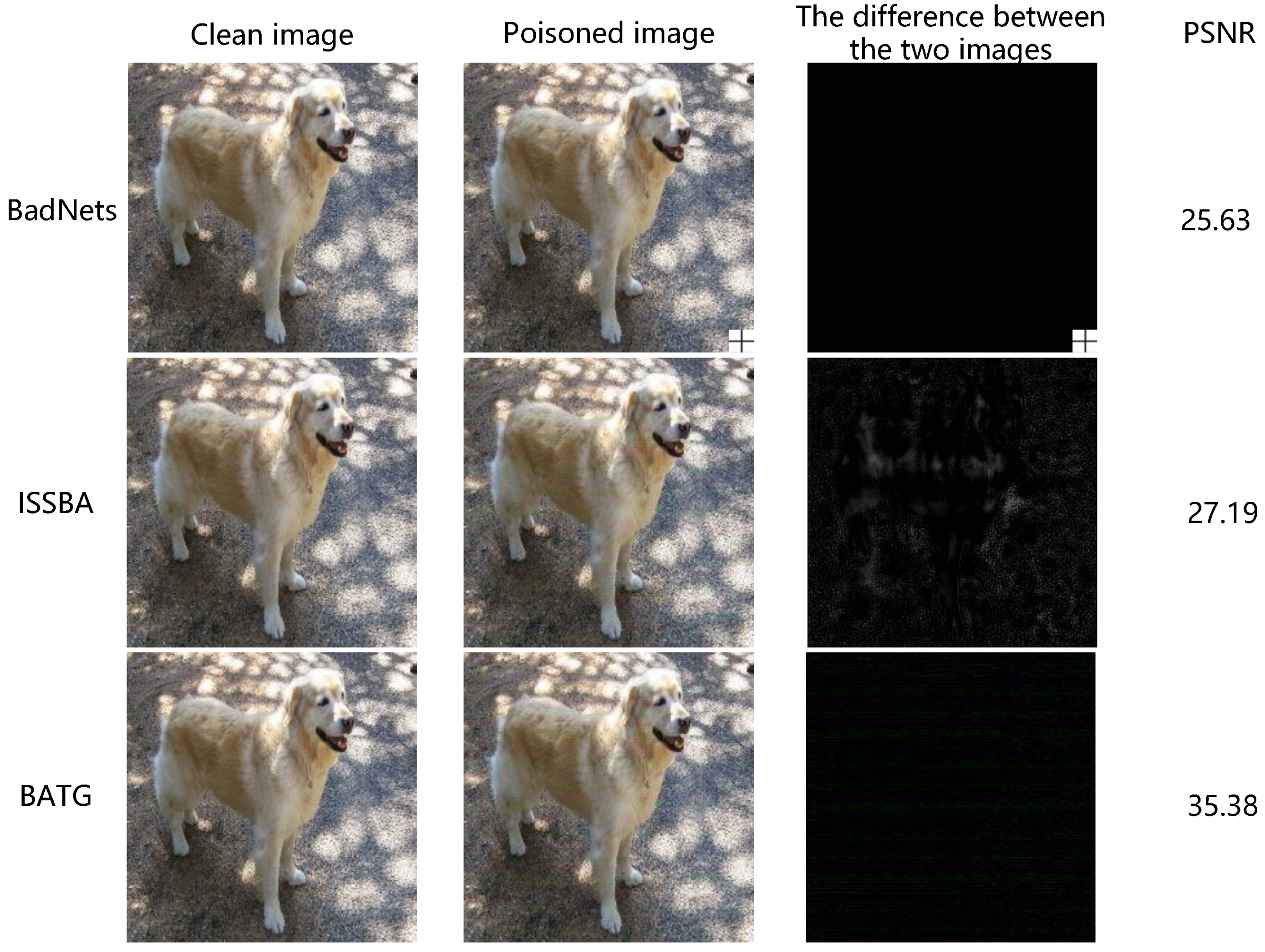

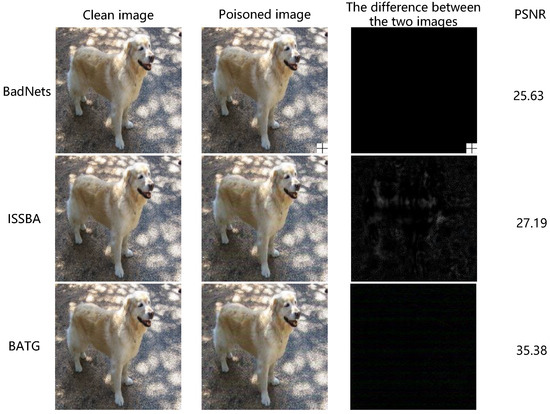

4.3. Visual Quality of Poisoned Images

In this part, the visual quality of the poisoned images of BATG, ISSBA, and the visible backdoor attack BadNets [7] is compared from two perspectives: subjective evaluation and objective evaluation. As shown in Figure 3, the following observations can be made:

Figure 3.

Visual quality of different methods on the ImageNet dataset. The difference between the two images is displayed by subtracting the pixel values of the corresponding clean image from the poisoned image. The PSNR value of each method is averaged over its pairs of clean and poisoned images.

- For subjective evaluation, the difference between the clean image and the poisoned image of the proposed BATG is barely visible. Subjectively, BATG is more imperceptible to human observers than the other methods.

- For objective evaluation, the PSNR values of BadNets, ISSBA, and BATG are 25.63 dB, 27.19 dB, and 35.38 dB, respectively. A higher PSNR value indicates a smaller difference between the clean image and the poisoned image. Objectively, therefore, the proposed BATG is stealthier than the other methods.
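PSNR is computed from the mean squared error (MSE) between the two images as PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images). A minimal sketch on flattened pixel lists follows; the function name is hypothetical, and averaging it over all clean/poisoned pairs would give per-method values like those above.

```python
import math


def psnr(clean, poisoned, max_val=255.0):
    """PSNR in dB between two equally sized images given as flat pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(clean, poisoned)) / len(clean)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


# Every pixel shifted by 10 gray levels -> MSE = 100
print(round(psnr([100] * 4, [110] * 4), 2))  # 28.13
```

Because PSNR is logarithmic, the 35.38 - 27.19 = 8.19 dB gap between BATG and ISSBA corresponds to an MSE that is roughly 10^0.819 ≈ 6.6 times smaller.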

4.4. Ablation Study

In this part, ablation studies are conducted to investigate the impact of different factors in the proposed BATG, including the trigger generation model, the trigger injection model, and the noise layer. The original BATG is taken as the baseline, and six variants are designed as follows.

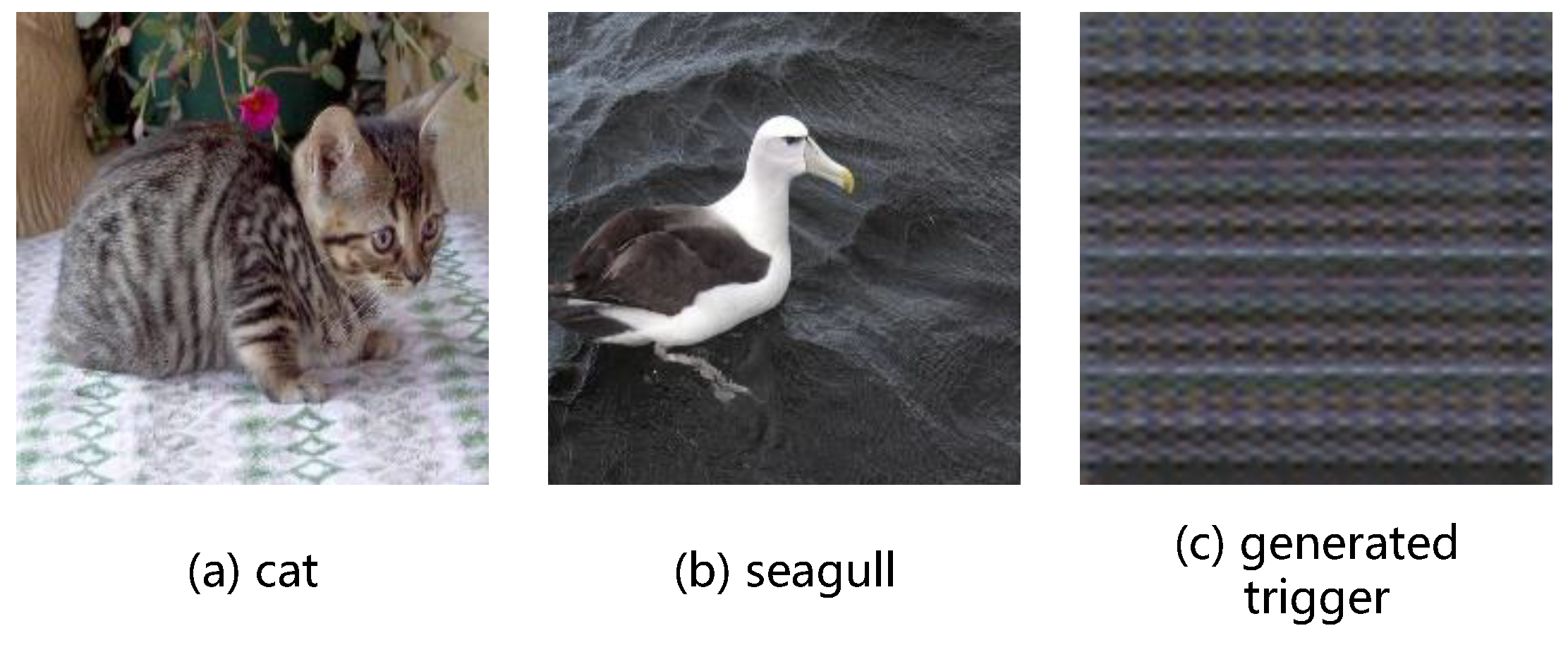

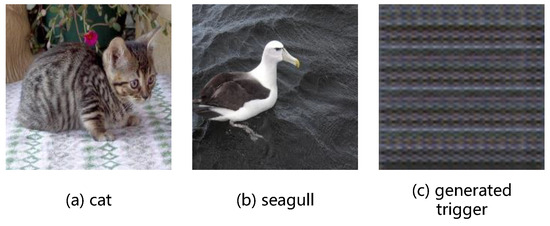

- Variant I removes the trigger generation model and uses a selected image of a cat as the trigger.

- Variant II removes the trigger generation model and the noise layer and uses a selected image of a cat as the trigger.

- Variant III removes the trigger generation model and uses a selected image of a seagull as the trigger.

- Variant IV removes the trigger generation model and the noise layer and uses a selected image of a seagull as the trigger.

- Variant V removes only the noise layer compared to the baseline BATG.

- Variant VI trains its trigger injection model for 200 epochs, whereas the baseline BATG trains its trigger injection model for 40 epochs.

The attack performance of all these variants and the baseline BATG is evaluated against the victim model ResNet-18 on the ImageNet dataset with a poisoning rate of 10% under the JPEG setup. The images selected by these variants and the generated trigger are shown in Figure 4. Based on Table 5, the following conclusions can be drawn:

Figure 4.

The images selected as triggers by the variants and the trigger image generated by BATG.

Table 5.

Clean Data Accuracy (CDA), Attack Success Rate (ASR), and average PSNR of the baseline BATG and its variants.

- By comparing Variant I and II, Variant III and IV, and Variant V with the baseline BATG, it can be observed that methods with the noise layer outperform their counterparts without the noise layer. These results demonstrate that the noise layer JPEG_Mask is essential to the proposed BATG.

- By comparing Variant I, Variant III, and the baseline BATG, it can be observed that the attack performance of backdoor attacks varies greatly when different images are used as triggers. These results demonstrate that the trigger generation model in the design of the proposed BATG is necessary and the generated triggers are the most effective among all the tested triggers.

- By comparing Variant VI with the baseline BATG, it can be observed that the attack performance of Variant VI under the JPEG setup is very poor. These results indicate that the trigger injection model should not be over-trained and that the 40-epoch setting adopted by BATG is more suitable than 200 epochs.

- By comparing Variant III and V, it can be observed that the trigger generation model contributes more significantly to BATG’s performance than the noise layer. These results demonstrate that the trigger generation method is more effective than the conventional adversarial training technique.

4.5. Resistance to Backdoor Defense

In this part, we evaluate the resistance of the proposed BATG against different backdoor defense techniques. In the testing phase, BATG resists state-of-the-art defense methods even though it uses no techniques specifically designed to evade them.

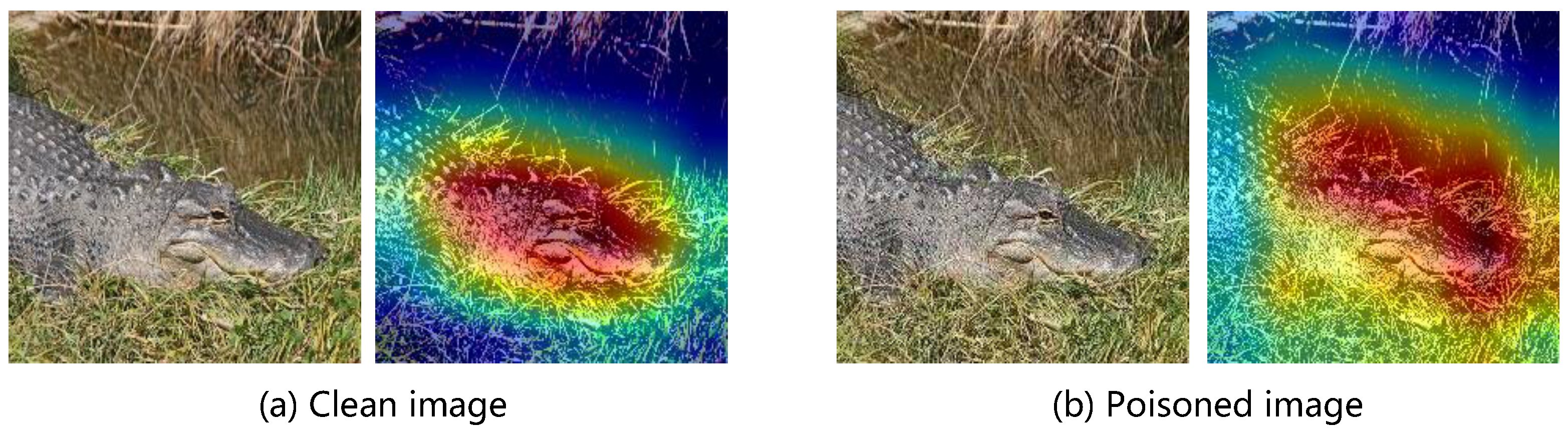

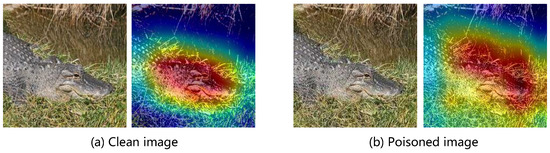

- Grad-CAM visualizes the attention map of a suspicious image and regards the area with the highest score as the trigger region; this region is then removed and restored with image inpainting techniques. As shown in Figure 5, the heat maps generated by Grad-CAM are similar for the clean image and the poisoned image, so the proposed BATG cannot be detected by Grad-CAM.

Figure 5. The Grad-CAM of clean image and poisoned image according to the victim model attacked by the proposed BATG. Areas with higher scores of the Grad-CAM are marked with red color.
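For reference, the Grad-CAM heat map itself is a ReLU of the feature maps of a convolutional layer, weighted by the spatially averaged gradients of the class score. The sketch below computes it for one layer from precomputed activations and gradients (obtaining those requires a deep learning framework and is omitted). Upsampling to the input resolution and normalization are also omitted, the trigger-removal and inpainting steps of the defense are not shown, and the function name is hypothetical.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map for one convolutional layer.

    activations, gradients: K channels, each an H x W grid (lists of lists);
    gradients are those of the class score w.r.t. the activations.
    """
    k = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of its gradient over spatial positions
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of feature maps followed by ReLU
    return [[max(0.0, sum(weights[c] * activations[c][i][j] for c in range(k)))
             for j in range(w)] for i in range(h)]


cam = grad_cam([[[1.0, 2.0]], [[3.0, 0.0]]], [[[1.0, 1.0]], [[-2.0, -2.0]]])
print(cam)  # [[0.0, 2.0]]
```

The defense described above would then treat the highest-scoring region of this map as the suspected trigger location.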

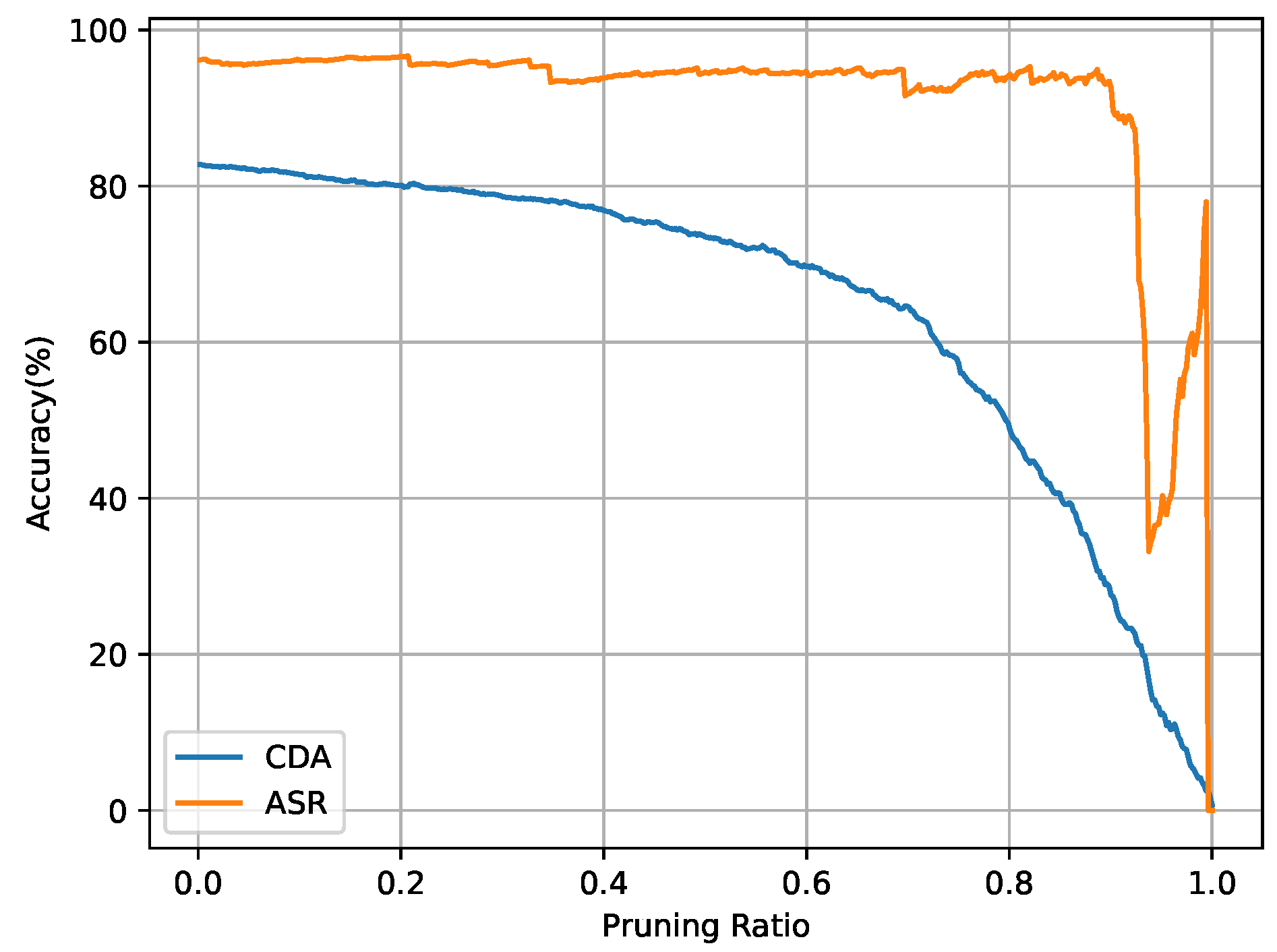

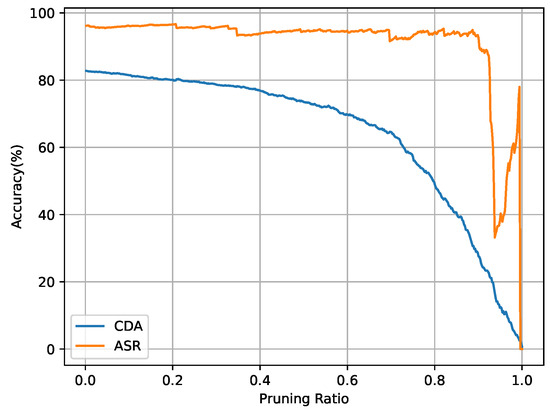

- Fine-Pruning prunes neurons according to their average activation values to mitigate backdoor behaviors. As shown in Figure 6, the ASR of the proposed BATG decreases only slightly as the fraction of pruned neurons increases, indicating that BATG is resistant to this pruning-based defense.

Figure 6. The ASR and CDA of the victim model attacked by the proposed BATG when pruning the neurons of the last fully connected layer of the victim model in Fine-Pruning.

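The pruning sweep behind Figure 6 can be sketched as follows: neurons feeding the last fully connected layer are ranked by their mean activation on clean data, and the least active fraction is masked out. This is a minimal illustration with hypothetical names, not the evaluation code; measuring ASR and CDA after applying each mask would require the trained victim model.

```python
def prune_order(activations):
    """Neuron indices sorted by mean activation on clean data (lowest first).

    activations: one row per clean sample, one column per neuron.
    """
    n = len(activations[0])
    means = [sum(row[j] for row in activations) / len(activations) for j in range(n)]
    return sorted(range(n), key=lambda j: means[j])


def pruning_mask(activations, fraction):
    """1/0 mask that zeroes the given fraction of least-active neurons."""
    order = prune_order(activations)
    n_prune = int(round(fraction * len(order)))
    pruned = set(order[:n_prune])
    return [0 if j in pruned else 1 for j in range(len(order))]


acts = [[0.0, 5.0, 1.0], [0.0, 3.0, 1.0]]  # mean activations: 0, 4, 1
print(pruning_mask(acts, 1 / 3))  # [0, 1, 1]
print(pruning_mask(acts, 2 / 3))  # [0, 1, 0]
```

Sweeping `fraction` from 0 to 1 and re-evaluating the masked model at each step yields curves of the kind plotted in Figure 6.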

4.6. Generalization Test

In this part, we investigate whether the proposed BATG, without any fine-tuning, can successfully implant a backdoor into a victim model trained on a different dataset. The BelgiumTS dataset [46] contains 13,773 images depicting 62 types of traffic signs. We train the trigger generation model on the ImageNet dataset and evaluate the attack performance on the BelgiumTS dataset.

As shown in Table 6, BATG also achieves a very high ASR on BelgiumTS while maintaining a high CDA. In other words, a trigger generation model trained on one dataset can be used to launch attacks on other datasets, indicating that BATG generalizes well. We attribute this to the design of the trigger generation model: a well-designed architecture can capture high-level abstract features in the data, which enables it to generate trigger images that remain effective.

Table 6.

The generalization test of the proposed BATG on the BelgiumTS dataset, which was not used to train the trigger generation model, compared with its original attack performance on the ImageNet dataset.

5. Conclusions

In this paper, we propose a novel method called BATG, which is based on trigger generation, for launching invisible and robust backdoor attacks. BATG contains a trigger injection model, a trigger generation model, a surrogate victim model, and a noise layer. Specifically, the trigger generation model generates effective trigger images, and the trigger injection model embeds the generated trigger images into clean images to create poisoned images in an invisible manner. Owing to adversarial training with the noise layer, BATG is robust to real-world image compression. Three aspects will be investigated in future work. First, additional loss functions could be added to train the trigger injection model to improve the visual quality of the poisoned images. Second, more attention should be paid to scenarios with relatively low poisoning rates. Third, BATG could be extended to the clean-label setting.

Author Contributions

Conceptualization, W.T.; methodology, H.X. and Y.R.; software, W.T. and H.X.; validation, T.Q. and Z.Z.; investigation, H.X. and M.L.; data curation, T.Q. and M.L.; writing—original draft, W.T. and H.X.; writing—review and editing, Y.R. and M.L.; supervision, Y.R. and M.L.; funding acquisition, W.T. and Y.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (NSFC) under grant 62302117, in part by the Guangdong Basic and Applied Basic Research Foundation under grants 2023A1515011428 and 2024A1515010666, in part by the Science and Technology Foundation of Guangzhou under grants 2023A04J1723 and 2024A04J4143, and in part by the Liaoning Collaboration Innovation Center For CSLE.

Data Availability Statement

The source code can be obtained from https://github.com/xiehaokeke/BATG.git (accessed on 11 November 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Graves, A.; Mohamed, A.-r.; Hinton, G.E. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.

- Wenger, E.; Passananti, J.; Bhagoji, A.N.; Yao, Y.; Zheng, H.; Zhao, B.Y. Backdoor attacks against deep learning systems in the physical world. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6206–6215.

- Chen, J.; Teo, T.H.; Kok, C.L.; Koh, Y.Y. A Novel Single-Word Speech Recognition on Embedded Systems Using a Convolution Neuron Network with Improved Out-of-Distribution Detection. Electronics 2024, 13, 530.

- Jiang, W.; Li, H.; Liu, S.; Luo, X.; Lu, R. Poisoning and evasion attacks against deep learning algorithms in autonomous vehicles. IEEE Trans. Veh. Technol. 2020, 69, 4439–4449.

- Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. BadNets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.

- Chen, X.; Liu, C.; Li, B.; Song, D.; Lu, K. Targeted Backdoor Attacks on Deep Learning Systems Using Data Poisoning. arXiv 2017, arXiv:1712.05526.

- Zhong, H.; Liao, C.; Squicciarini, A.C.; Zhu, S.; Miller, D. Backdoor Embedding in Convolutional Neural Network Models via Invisible Perturbation. In Proceedings of the Tenth ACM Conference on Data and Application Security and Privacy, New Orleans, LA, USA, 16–18 March 2020; pp. 97–108.

- Li, S.; Xue, M.; Zhao, B.Z.H.; Zhang, X. Invisible Backdoor Attacks on Deep Neural Networks via Steganography and Regularization. IEEE Trans. Dependable Secur. Comput. 2021, 18, 2088–2105.

- Nguyen, A.; Tran, A. WaNet—Imperceptible Warping-based Backdoor Attack. arXiv 2021, arXiv:2102.10369.

- Turner, A.; Tsipras, D.; Madry, A. Label-Consistent Backdoor Attacks. arXiv 2019, arXiv:1912.02771.

- Li, Y.; Li, Y.; Wu, B.; Li, L.; He, R.; Lyu, S. Invisible backdoor attack with sample-specific triggers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16463–16472.

- Zhang, J.; Chen, D.; Huang, Q.; Zhang, W.; Feng, H.; Liao, J. Poison Ink: Robust and Invisible Backdoor Attack. IEEE Trans. Image Process. 2022, 31, 5691–5705.

- Jiang, W.; Li, H.; Xu, G.; Zhang, T. Color Backdoor: A Robust Poisoning Attack in Color Space. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 8133–8142.

- Yu, Y.; Wang, Y.; Yang, W.; Lu, S.; Tan, Y.-P.; Kot, A.C. Backdoor Attacks Against Deep Image Compression via Adaptive Frequency Trigger. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12250–12259.

- Liu, K.; Dolan-Gavitt, B.; Garg, S. Fine-Pruning: Defending Against Backdooring Attacks on Deep Neural Networks. arXiv 2018, arXiv:1805.12185.

- Wang, B.; Yao, Y.; Shan, S.; Viswanath, B.; Zheng, H. Neural Cleanse: Identifying and Mitigating Backdoor Attacks in Neural Networks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 707–723.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE/CVF International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Gao, Y.; Xu, C.; Wang, D.; Chen, S.; Ranasinghe, D.C.; Nepal, S. STRIP: A defence against trojan attacks on deep neural networks. In Proceedings of the 35th Annual Computer Security Applications Conference, San Juan, PR, USA, 9–13 December 2019; pp. 113–125.

- Tran, B.; Li, J.; Madry, A. Spectral signatures in backdoor attacks. In Proceedings of the 32nd International Conference on Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 3–8 December 2018; pp. 8011–8021.

- Xue, M.; Wang, X.; Sun, S.; Zhang, Y.; Wang, J.; Liu, W. Compression-resistant backdoor attack against deep neural networks. arXiv 2022, arXiv:2201.00672.

- Baluja, S. Hiding images in plain sight: Deep steganography. In Proceedings of the 31st Conference on Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 2066–2076.

- Wu, P.; Yang, Y.; Li, X. StegNet: Mega Image Steganography Capacity with Deep Convolutional Network. Future Internet 2018, 10, 54.

- Duan, X.; Jia, K.; Li, B.; Guo, D.; Zhang, E.; Qin, C. Reversible Image Steganography Scheme Based on a U-Net Structure. IEEE Access 2019, 7, 9314–9323.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Zhu, J.; Kaplan, R.; Johnson, J.; Li, F.F. HiDDeN: Hiding Data With Deep Networks. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8 September 2018; pp. 682–697.

- Tang, W.; Li, B.; Barni, M.; Li, J.; Huang, J. An Automatic Cost Learning Framework for Image Steganography Using Deep Reinforcement Learning. IEEE Trans. Inf. Forensics Secur. 2021, 16, 952–967.

- Tang, W.; Li, B.; Barni, M.; Li, J.; Huang, J. Improving Cost Learning for JPEG Steganography by Exploiting JPEG Domain Knowledge. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 4081–4095.

- Ying, Q.; Zhou, H.; Zeng, X.; Xu, H.; Qian, Z.; Zhang, X. Hiding Images Into Images with Real-World Robustness. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 25–28 October 2022; pp. 111–115.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Volume 27, pp. 2672–2680.

- Jing, J.; Deng, X.; Xu, M.; Wang, J.; Guan, Z. HiNet: Deep Image Hiding by Invertible Network. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4713–4722.

- Dinh, L.; Krueger, D.; Bengio, Y. NICE: Non-linear Independent Components Estimation. arXiv 2014, arXiv:1410.8516.

- Dinh, L.; Sohl-Dickstein, J.; Bengio, S. Density estimation using Real NVP. arXiv 2017, arXiv:1605.08803.

- Tang, W.; Zhou, Z.; Li, B.; Choo, K.-K.; Huang, J. Joint Cost Learning and Payload Allocation With Image-Wise Attention for Batch Steganography. IEEE Trans. Inf. Forensics Secur. 2024, 19, 2826–2839.

- Cui, Q.; Tang, W.; Zhou, Z.; Meng, R.; Nan, G.; Shi, Y.-Q. Meta Security Metric Learning for Secure Deep Image Hiding. IEEE Trans. Dependable Secur. Comput. 2024, 21, 4907–4920.

- Lin, J.; Xu, L.; Liu, Y.; Zhang, X. Composite backdoor attack for deep neural network by mixing existing benign features. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual Event, USA, 9–13 November 2020; pp. 113–131.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the Computer Vision—ECCV 2018: 15th European Conference, Munich, Germany, 8 September 2018; pp. 63–79.

- Deng, J.; Wei, D.; Socher, R.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 18 August 2009; pp. 248–255.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Tancik, M.; Mildenhall, B.; Ng, R. StegaStamp: Invisible Hyperlinks in Physical Photographs. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 2114–2123.

- Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

- Mathias, M.; Timofte, R.; Benenson, R.; Van Gool, L. Traffic sign recognition—How far are we from the solution? In Proceedings of the 2013 International Joint Conference on Neural Networks (IJCNN), Dallas, TX, USA, 4–9 August 2013; pp. 1–8.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).