Synthetic Dataset Generation Using Photo-Realistic Simulation with Varied Time and Weather Axes

Abstract

1. Introduction

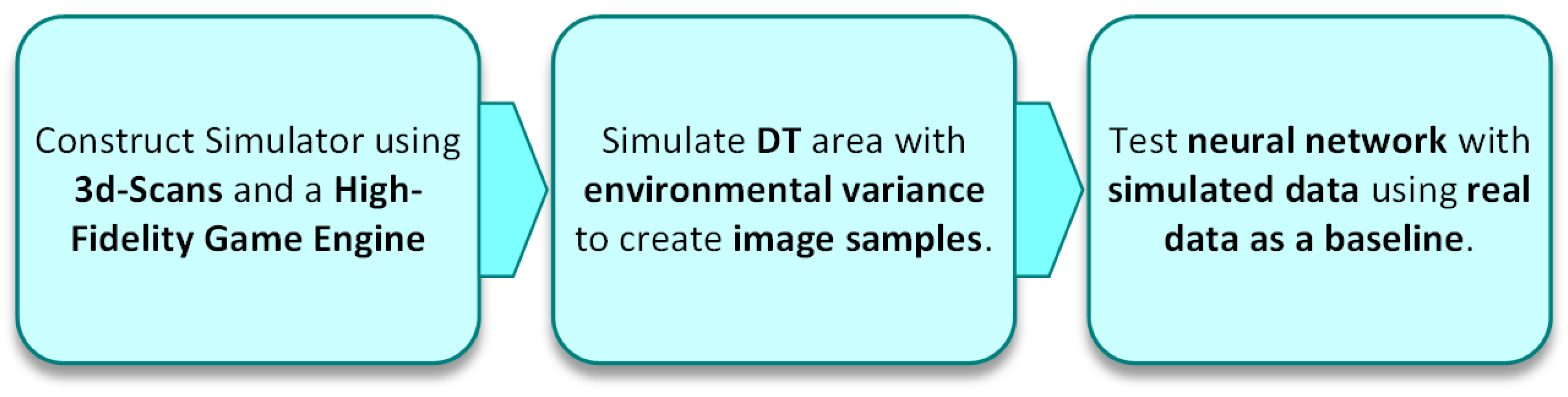

- It outlines a novel method for generating synthetic samples for image-based training using 3D scanning and a photo-realistic game engine.

- It simulates changes in weather and lighting to create a contextually varied image dataset that could be used to train and test image-based autonomous navigators.

- It evaluates the environmentally varied dataset with a commonly used, generic YOLOv3 object detection model and compares the results with a baseline of real images, to show that detection performance on the simulated images is consistent with performance on real image data.
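The third contribution compares detector performance across simulated conditions with a real-image baseline. A minimal sketch of that bookkeeping follows, assuming each test image has already been reduced to a `(condition, detected)` pair by running the detector. The function names, the condition labels, and the use of a simple detection rate as the metric are illustrative assumptions, not the paper's evaluation code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def detection_rates(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of images per condition in which the target object was detected.

    Each item is a hypothetical (condition_label, detected) pair.
    """
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for condition, detected in results:
        totals[condition] += 1
        hits[condition] += int(detected)
    return {c: hits[c] / totals[c] for c in totals}


def gap_to_baseline(rates: Dict[str, float], baseline: float) -> Dict[str, float]:
    """Signed difference between each simulated condition's rate and the real-image rate."""
    return {c: r - baseline for c, r in rates.items()}


if __name__ == "__main__":
    # Toy inputs for illustration only; these are not results from the paper.
    toy = [("clear", True), ("clear", True), ("heavy_rain", True), ("heavy_rain", False)]
    rates = detection_rates(toy)
    print(rates)                         # {'clear': 1.0, 'heavy_rain': 0.5}
    print(gap_to_baseline(rates, 0.75))  # {'clear': 0.25, 'heavy_rain': -0.25}
```

A small gap across conditions would indicate that simulated and real images behave similarly for the detector; a large negative gap for a condition flags where the simulation (or the detector) struggles.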

1.1. Autonomous Navigation

1.2. Specificity

1.3. Areas as Digital Twins

2. Background

2.1. Trained Autonomous Features

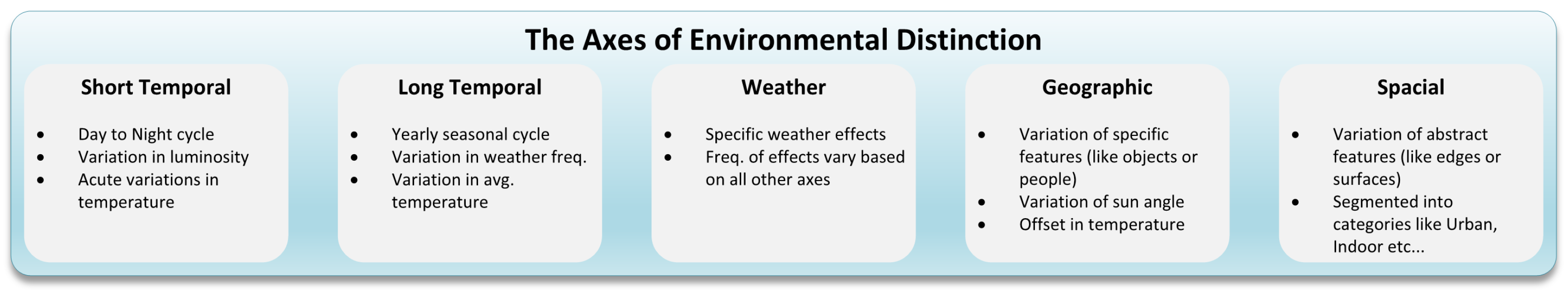

2.2. Environmental Variation

2.3. Simulating Data

3. Method

3.1. Three-Dimensional Area Scans

3.2. Scene Configuration

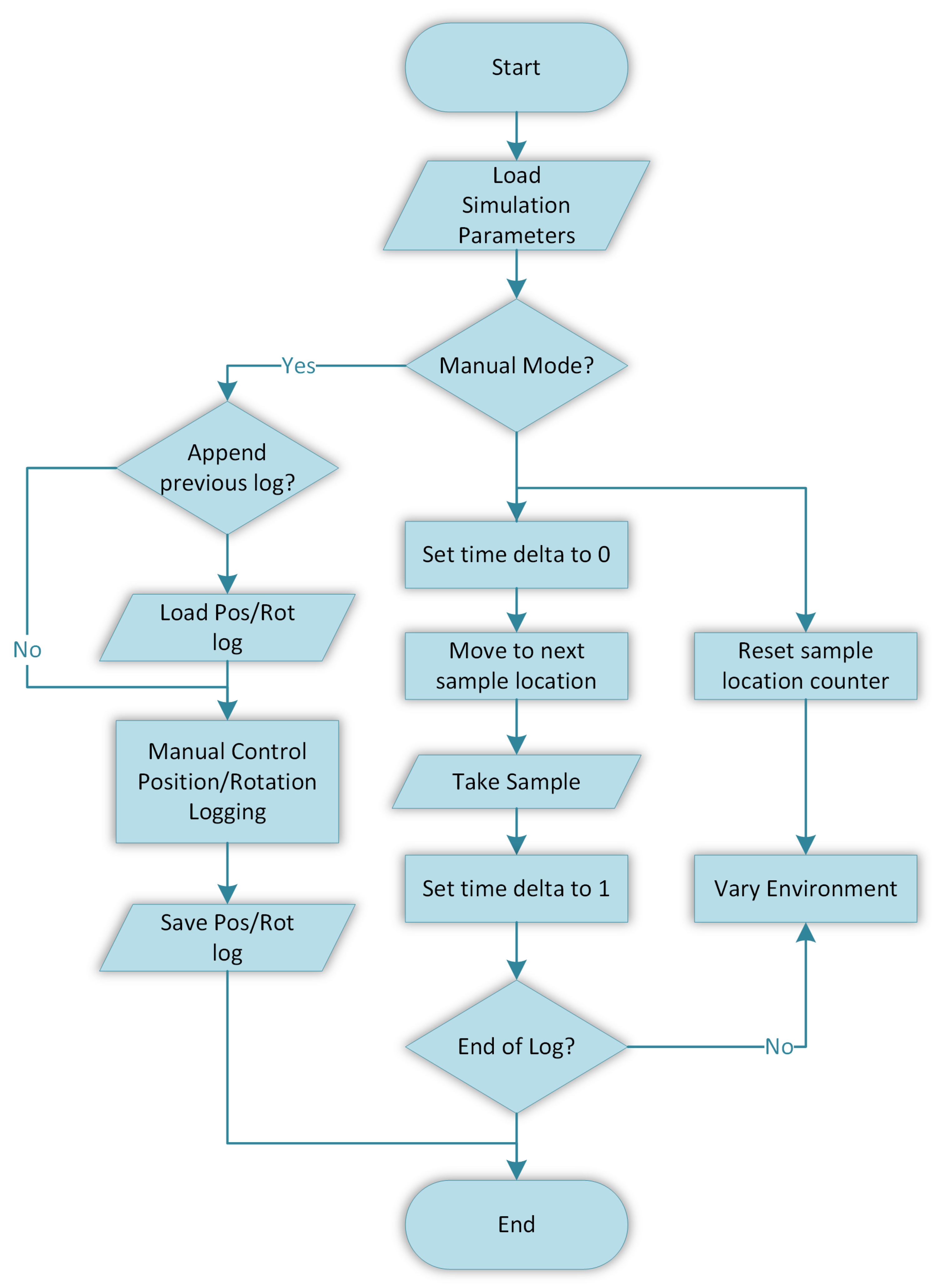

3.3. Sampling

4. Results

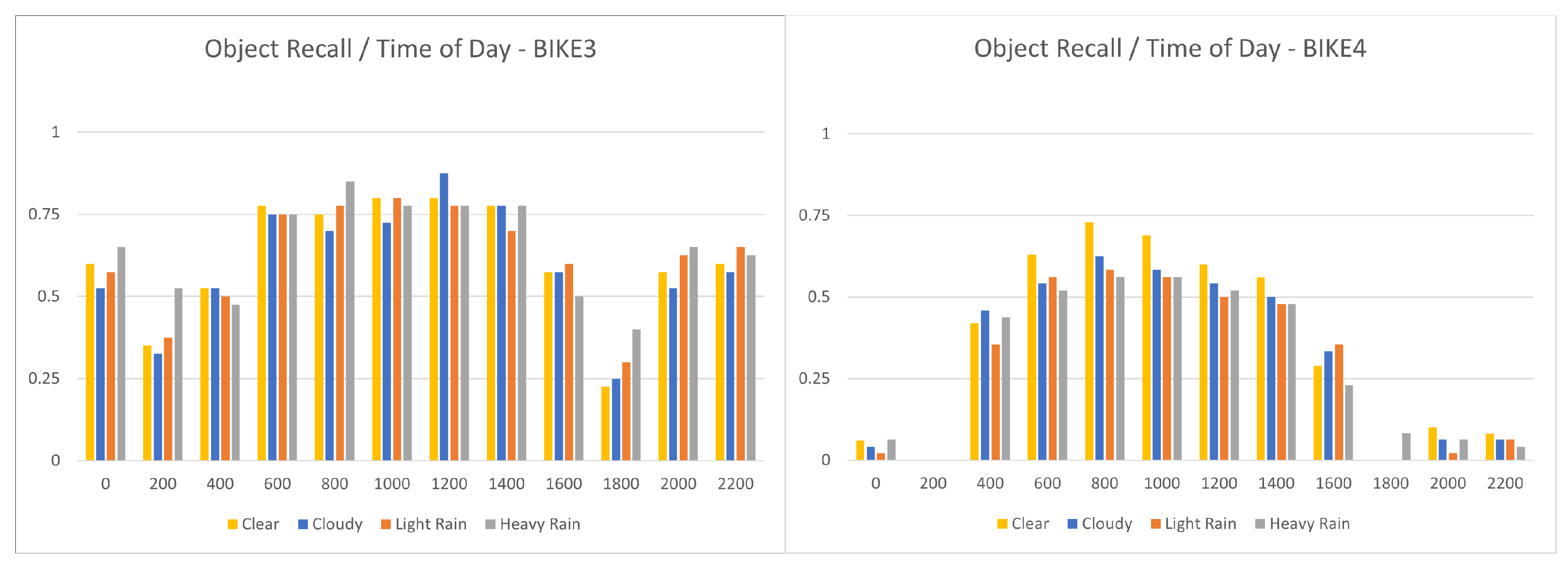

4.1. Weather/Time Variation

4.2. Issues

5. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DT | Digital Twin |
| UAV | Unmanned Aerial Vehicle |
| GPU | Graphics Processing Unit |
| GAN | Generative Adversarial Network |
| SFI | Science Foundation Ireland |

| Asset Label | Description |
|---|---|
| Bike 1 | Mountain bicycle with green frame, off-road tires |
| Bike 2 | Racing bicycle with white frame, thin racing tires |
| Bike 3 | Children’s bicycle with small green frame, white tires |
| Bike 4 | Children’s bicycle with small pink frame, white tires |
| Bike 5 | City bicycle with red frame, road tires |
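Because every asset in the table above is a bicycle, scoring a generic YOLOv3 model trained on COCO reduces to checking whether it reports COCO's `bicycle` class for an image. A minimal sketch follows, assuming the detector output has already been decoded into `(label, confidence, box)` records. The `Detection` type, the `ASSET_TO_COCO` mapping, the `asset_detected` helper, and the 0.5 confidence threshold are all hypothetical choices for illustration.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical decoded detection: class name, confidence, box (x1, y1, x2, y2) in pixels."""
    label: str
    confidence: float
    box: Tuple[float, float, float, float]


# All five scanned assets are bicycles, so each maps to the COCO "bicycle" class.
ASSET_TO_COCO = {f"Bike {i}": "bicycle" for i in range(1, 6)}


def asset_detected(asset: str, detections: List[Detection], min_conf: float = 0.5) -> bool:
    """True if any detection carries the asset's expected class at or above min_conf."""
    expected = ASSET_TO_COCO[asset]
    return any(d.label == expected and d.confidence >= min_conf for d in detections)


if __name__ == "__main__":
    dets = [Detection("person", 0.9, (0, 0, 10, 10)), Detection("bicycle", 0.62, (5, 5, 50, 40))]
    print(asset_detected("Bike 3", dets))                # True
    print(asset_detected("Bike 3", dets, min_conf=0.7))  # False
```

Only the class label and confidence are used here; a stricter variant could also require the box to overlap the asset's known screen position, which the simulation can supply as ground truth.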

| Parameter | Clear | Cloudy | Light Rain | Heavy Rain |
|---|---|---|---|---|
| Clouds | None | Light | Medium | Heavy |
| Sky | Visible | Partly Obscured | Obscured | Obscured |
| Rain | None | None | Light | Heavy |
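The weather table above amounts to a small configuration: each named weather state fixes three scene parameters, and the dataset is produced by crossing those states with a set of times of day. A minimal sketch of that configuration follows, assuming capture times are given as whole hours. The `WeatherPreset` type, the `capture_grid` helper, and the 3-hour spacing in the usage example are hypothetical; translating these qualitative labels into a particular sky and weather package's settings is not shown.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Tuple


@dataclass(frozen=True)
class WeatherPreset:
    clouds: str  # cloud cover level
    sky: str     # sky visibility
    rain: str    # rain intensity


# Direct encoding of the weather table; values keep the table's qualitative labels.
WEATHER_PRESETS = {
    "Clear": WeatherPreset(clouds="None", sky="Visible", rain="None"),
    "Cloudy": WeatherPreset(clouds="Light", sky="Partly Obscured", rain="None"),
    "Light Rain": WeatherPreset(clouds="Medium", sky="Obscured", rain="Light"),
    "Heavy Rain": WeatherPreset(clouds="Heavy", sky="Obscured", rain="Heavy"),
}


def capture_grid(hours: List[int]) -> List[Tuple[int, str, WeatherPreset]]:
    """Every (hour, weather name, preset) combination to render, ordered by hour first."""
    return [(h, name, WEATHER_PRESETS[name]) for h, name in product(hours, WEATHER_PRESETS)]


if __name__ == "__main__":
    grid = capture_grid(list(range(0, 24, 3)))  # hypothetical: every 3 hours
    print(len(grid))  # 32
```

Keeping time and weather as independent axes makes it straightforward to hold one fixed while varying the other when comparing detector behaviour.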

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, T.; Mckeever, S.; Courtney, J. Synthetic Dataset Generation Using Photo-Realistic Simulation with Varied Time and Weather Axes. Electronics 2024, 13, 1516. https://doi.org/10.3390/electronics13081516