Abstract

With the development of large language models (LLMs), they have demonstrated powerful capabilities across many downstream tasks. Existing Tibetan named entity recognition (NER) methods often suffer from a high degree of coupling between data and models, limiting them to identifying entities only within specific domain datasets and making cross-domain recognition difficult. Additionally, each dataset requires training a dedicated model, and when faced with new domains, retraining and redeployment are necessary. In practical applications, the ability to perform cross-domain NER is crucial to meeting real-world needs. To address this issue and decouple data from models, enabling cross-domain NER, this paper proposes a cross-domain joint learning approach based on large language models, which enhances model robustness by learning the shared underlying semantics across different domains. To reduce the significant computational costs incurred by LLMs during inference, we adopt an adaptive structured pruning method based on domain-dependent prompt, which effectively reduces the model’s memory requirements and improves the inference speed while minimizing the impact on performance. The experimental results show that our method significantly outperformed the baseline model across cross-domain Tibetan datasets. In the Tibetan medicine domain, our method achieved an F1 score improvement of up to 27.26% compared with the baseline model at its best. Our method achieved an average F1 score of 95.17% across domains, outperforming the baseline Llama2 + Prompt model by 5.12%. Furthermore, our method demonstrates strong generalization capabilities in NER tasks for other low-resource languages.

1. Introduction

The Tibetan named entity recognition (NER) task involves the automatic identification of entities with specific meanings in Tibetan text, including entity extraction and classification. Recently, large language models (LLMs) such as ChatGPT [1] and Llama [2] have demonstrated significant success across various fields, but their application in the Tibetan NER task is relatively infrequent.

Modern NER models typically require fine-tuning on domain-specific datasets to perform NER tasks within that domain. However, this approach has significant limitations, primarily due to the high coupling between the model and the dataset. This coupling restricts the model to performing well only within the training domain while lacking flexibility and cross-domain adaptability, making it ineffective for handling cross-domain NER tasks.

For instance, in NER tasks within the news domain, the model may focus on recognizing entities such as “person”, “location”, or “organization”. However, when applied to the Tibetan medical domain, the model may fail to correctly identify domain-specific entities such as “symptom”, “ medical examination”, or “drug” due to the significant differences in entity types and contextual environments. Moreover, the diversity of semantic expressions across domains can lead to misclassifications or reduced performance in new domains.

This strong domain dependency severely limits the scalability and generalization of the model. In practical applications, each new domain typically requires training and storing a new model, making this approach unsuitable for large-scale expansion.

As LLMs’ technology continues to evolve, they have demonstrated great potential for exploration and shown powerful capabilities across various fields [3,4,5,6,7]. LLMs have the ability to decouple datasets from a model, integrating label information from different domain datasets to handle various complex scenarios. By jointly training multiple domain datasets, a model can leverage shared underlying semantic information and learn greater semantic variability, further enhancing its flexibility and potentially outperforming models which are trained exclusively for single domains and highly coupled to their datasets. Specifically, when encountering polysemous words across different domains, LLMs can accurately interpret the meaning of the same word in different contexts, thereby potentially improving its robustness. LLMs offer a promising approach for achieving cross-domain Tibetan NER, leveraging their ability to generalize across diverse domains and languages.

Utilizing LLMs to achieve cross-domain Tibetan NER tasks comes at the cost of significant computational and memory demands. The model size is a critical factor, as the model layers are often wide and plentiful, posing challenges not only in terms of storage but also by affecting the inference speed. Without optimization, applying LLMs solely for Tibetan NER tasks can lead to resource inefficiency. LLMs exhibit sparse structures, particularly in feedforward (FF) blocks, where substantial computation is wasted on features with minimal impact on the final results. As FF blocks typically account for about two-thirds of LLM parameters, they represent a major bottleneck in memory and computation.

To address these challenges, we developed a model capable of handling cross-domain NER tasks and adopted a domain-dependent prompt based adaptive structured pruning method during inference. By leveraging the cross-domain joint learning approach, we decoupled the model from specific datasets, enabling the same model to generalize across domains and overcome the reliance of traditional NER models on domain-specific data. By explicitly incorporating domain-dependent prompt, the model effectively integrates domain-specific information, enhances its ability to capture domain-specific features, extracts shared semantic knowledge, reduces cross-domain interference, and achieves cross-domain NER without requiring additional training or large-scale annotated data. To conclude, our contributions are as follows:

- A Tibetan cross-domain NER model is proposed which is capable of learning shared knowledge across different domains and supports the extraction and recognition of entity types across various fields, achieving decoupling of the data and the model.

- Adaptive structured pruning based on domain-dependent prompt is proposed, which significantly reduces the model’s memory requirements and improves inference efficiency with minimal impact on model performance.

- The experimental results show that our cross-domain Tibetan NER model performs exceptionally well in Tibetan NER tasks across different domains, significantly surpassing existing baseline methods. Furthermore, our approach demonstrates excellent generalization capabilities, showing strong adaptability in handling NER tasks for other low-resource languages.

2. Related Work

2.1. Prompting-Based Approaches for NLP

The essence of prompt learning lies in fully leveraging the potential of pretrained language models by adding additional “hints” [8]. Inspired by this, numerous prompting methods have emerged, aiming to reframe downstream tasks as pretraining tasks to fully leverage the power of pretrained language models. These methods can be categorized based on their model architecture and pretraining tasks, including bidirectional encoder models like BERT [9], causal language decoder models like GPT-3 [10,11], and encoder-decoder models like T5 [12,13]. Additionally, some research has explored using prompts to unify various tasks, which aligns with our approach to using prompts. Such prompting-based explorations is relatively scarce in the field of Tibetan NER tasks.

2.2. Deep Learning Approaches for NER

Early NER methods [14,15] primarily relied on rule-based systems and machine learning-based deep learning models [16]. With the rise of pretrained language models, Transformer-based models [17] became mainstream, significantly improving NER performance through large-scale dataset pretraining [18,19]. Subsequently, the emergence of large language models such as GPT [11], BloomZ [20], and Llama [2] further advanced NER [21]. These models possess strong language generation and comprehension capabilities, enabling NER tasks through prompt learning [22], few-shot learning [23], and zero-shot learning [24].

Traditionally, most research treated NER as a sequence labeling task, where each token is assigned a predefined label (e.g., BIO scheme). However, with the advent of large language models, methods have emerged which redefine NER as a sequence generation task [23]. This approach not only enhances the applicability of large language models in NER tasks but also improves their ability to handle complex contextual challenges. Its excellent performance opens up new possibilities for low-resource NER tasks. Our method explores the potential of large language models in named entity recognition tasks for low-resource languages.

Research on cross-domain NER in the Tibetan language is still in its early stages. In the fields of Chinese and English NER, while many existing studies [25,26,27] have attempted to unify various NER subtasks across different domains, most approaches still face the challenge of strong coupling between datasets and models. Our work focuses on developing a general cross-domain model which not only accommodates diverse entity types across domains but also efficiently handles multiple datasets, making our approach both more appealing and practical.

2.3. Optimization Methods for Large Language Models

Pruning and mixture of experts (MoEs) are two important branches of large language model optimization methods. They leverage the sparsity of LLMs to address computational and memory bottlenecks. Pruning reduces storage requirements by removing low-impact pretrained weights, and using structured, hardware-friendly pruning methods [28,29,30,31,32] can significantly improve the speed, though this often results in greater performance degradation. MoEs, on the other hand, achieves sparse computation by adaptively selecting active model subsets based on the input, preserving the original performance more effectively. However, this requires the model to have been specifically trained using this approach [33,34]; otherwise, it will need to develop a cheap but effective gating function (expert selection mechanism) and sometimes even full fine-tuning.

3. Materials and Methods

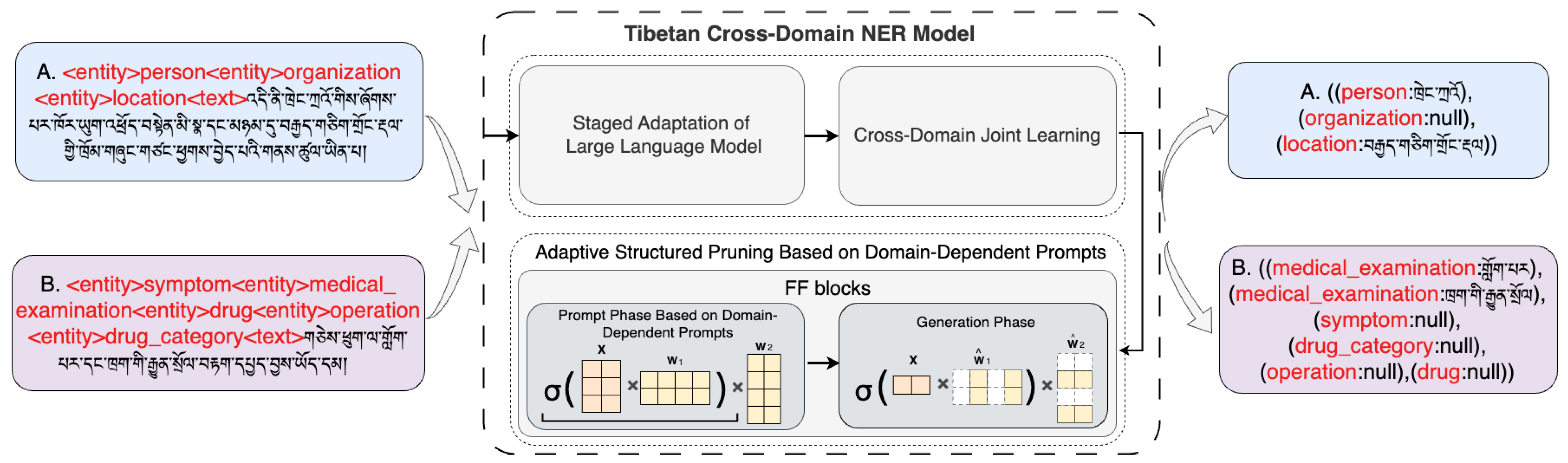

Our Tibetan cross-domain NER model is based on the latest autoregressive language model framework, Llama2, with the architecture shown in Figure 1. We first describe how we reformulate NER tasks from different domains into a unified seq2seq format with prompts. Then, we introduce the system architecture, followed by a detailed explanation of our system in three parts: a staged adaptation of a large language model, cross-domain joint learning, and adaptive structured pruning based on a domain-dependent prompt.

Figure 1.

This figure illustrates the proposed Tibetan cross-domain NER model, emphasizing the two-stage process and adaptive structured pruning based on the domain-dependent prompt process during the inference phase for cross-domain NER. The two-stage process involves a staged adaptation of a large language model and cross-domain joint learning. Additionally, the figure illustrates data examples from different domains in Tibetan NER. In the news domain, the sentence “In the morning in Changzhou, sanitation workers are cleaning the streets of Bayi Town” is used, where the model successfully identifies “Changzhou” and “Bayi Town”. In the Tibetan medicine domain, the sentence “Has the baby undergone a chest X-ray and routine urinalysis?” is used, and the model accurately extracts “chest X-ray” and “routine urinalysis”.

3.1. Task Redefining

As illustrated in Figure 1, we redefine NER as a seq2seq problem based on prompting. Specifically, given an initial text sequence x, we convert x into a source sequence by prepending it with a series of prompts as follows:

where is the model input; is a special token “<entity>”, indicating that the subsequent token is the entity type of interest; represents the entity type; and is the special token “<text>”, signifying that the following text sequence is the sentence from which entities should be extracted. Consequently, the target sequence is as follows:

where is the model output; are the entity types which match those specified in Equation (1); and represents the ground truth texts extracted from the input sequence.

This approach uses prompts as indicators of target entity types, helping guide the model in generating more accurate outputs. Unlike traditional methods which require training dedicated models for each domain, this approach leverages prefixed prompting to utilize shared knowledge across different datasets, effectively capturing shared representations between domains. This not only reduces storage and computational costs but also eliminates the redundancy and maintenance overhead associated with deploying multiple models.

3.2. Model Architecture

Our model is primarily constructed by reusing the pretrained Llama2 model. Compared with the original Transformer’s encoder-decoder architecture, Llama2 only uses the decoder, which simplifies the model structure and makes it more focused on generation and decoding tasks. Llama2 encodes the text and feeds it into multiple Transformer blocks. Then, the output layer is used for text prediction in an autoregressive manner. The Transformer block in Llama2 differs from the standard Transformer decoder by employing methods like RMSNorm, rotary position encoding (RoPE), and group query attention to enhance model performance.

3.3. Staged Adaptation of Large Language Model

Llama2 supports multiple languages, but Tibetan is not included. To adapt Llama2 to Tibetan linguistic characteristics and improve its performance in Tibetan NER tasks, we employed a staged adaptation of a large language model for incremental pretraining.

In the first stage of incremental pretraining, we focused on introducing a large-scale, domain-general Tibetan corpus to enhance the model’s language representation capabilities. Specifically, we utilized a large Tibetan corpus covering multiple domains, including Tibetan medicine, Buddhist studies, news, law, and daily life, with the goal of enriching the model’s understanding and processing of Tibetan linguistic features. The training objective was to optimize the model’s language generation abilities by maximizing the probability of generating the next word given the context. This approach helped the model capture Tibetan grammar, lexical relationships, and contextual semantics, laying a solid foundation for adapting the model to Tibetan-specific tasks.

In the second stage, we further extracted unlabeled text data from the Tibetan NER dataset and applied the same incremental pretraining method to better align the model with the domain requirements of the NER task. Unlike the first stage, the goal of this phase was to fine-tune the model parameters using Tibetan data which were more closely aligned with the NER task, reducing the domain gap. This process allowed the model to better learn domain-specific linguistic patterns and contextual features relevant to the Tibetan NER task. This stage of incremental pretraining not only allowed the model to retain domain-general language knowledge but also further enhanced its representation capabilities in the target domain, providing critical support for subsequent fine-tuning.

3.4. Cross-Domain Joint Learning

After the staged adaptation of a large language model, we adopted a simple yet effective cross-domain learning strategy for self-supervised fine-tuning of the model to shift the pretrained model to our downstream prompting-based seq2seq NER task in order to achieve decoupling between the datasets and the model. This approach leverages cross-domain shared knowledge by utilizing similar entities across different domains and diverse contextual information, enabling the model to deepen its understanding of entity semantics. This reduces the need for extensive labeled data in the target domain and enhances the model’s ability to capture semantic details. The cross-domain joint learning not only improves the granularity of knowledge acquisition, allowing for more fine-grained training, but also strengthens the model’s generalization ability, enabling it to better handle differences and similarities across domains while demonstrating greater robustness when dealing with rare entities. Samples for each batch are randomly selected from different datasets. We propose three prefixed prompt set-ups:

- Multi-Domain Prompt: The training uses all entities from all domains as prompts.

- Domain-Dependent Prompt + Exact Match: During the training phase, the original samples are prefixed in two different styles (resulting in two training samples). One approach uses the previously mentioned domain-dependent prompt, while the other employs exact entities from the ground truth as prefixed prompts (i.e., exact match). The purpose of introducing the “exact match” strategy in training is to incorporate precise information, helping the model narrow the entity space and thereby reduce the complexity of the training process.

- Domain-Dependent Prompt: The training utilizes all entities from the specific domain as prompts.

3.5. Adaptive Structured Pruning Based on Domain-Dependent Prompt

Although the above approach has successfully achieved decoupling of the data and model in the NER task, the large number of model parameters results in high computational costs and slow inference during deployment. The model still has significant optimization potential. If directly applied to a single downstream cross-domain NER task, it would lead to inefficiency.

The FF blocks of LLMs are the main bottleneck in terms of memory and computation. Additionally, there is significant sparsity within the FF blocks, leading to a large amount of computational resources being wasted on intermediate features which have little to no impact on the final output.

Since the FF blocks in Llama2 operate identically and independently for each token, we begin by defining the FF block [17] with a single column vector input :

where ⊙ signifies element-wise multiplication; ; and , where typically, .

To address the above issue, we propose using adaptive structured pruning based on domain-dependent prompt. To avoid the information leakage problem, we do not use exact match during the inference phase. By leveraging the flocking phenomenon, domain-dependent prompt guide the activation of neurons during inference, thereby reducing the number of active parameters in the FF block. Compression of the FF block ensures that when the FF block is reparameterized with matrices, the output value is preserved. Specifically, this involves finding , , and (as well as and if needed), where . Specifically, we have

“Flocking” [35] refers to the phenomenon in the FF blocks of LLMs where multiple tokens within the same input sequence activate the same or similar neurons, forming a consistent activation pattern. This indicates that tokens within the sequence share the same computational pathway, thus reducing redundant computations during inferencing. The flocking phenomenon is widespread and not limited to specific models or activation mechanisms, and it has broad applicability. However, despite the similarity in activation patterns within the same sequence, these patterns exhibit significant variation between different input sequences, indicating that each input sequence activates a distinct combination of neurons, enabling the model to adapt flexibly to different inputs.

We divide the efficient generation of each sample in the NER task into the prompt phase based on domain-dependent prompt and the generation phase. Tokens within the same sequence share activation patterns, and thus tokens in both the prompt phase based on domain-dependent prompt and the generation phases also share the same activation patterns. Therefore, neurons are selected during the prompt phase based on the domain-dependent prompt of each sample and used throughout the entire duration of the generation phase.

- Prompt phase based on domain-dependent prompt: Our expert neurons are selected at the sequence level. Thus, when choosing neurons, we need to consider the dynamics of the entire input sequence rather than just individual tokens. Consistent with the previously defined input format, domain-dependent prompt introduced during the prompting phase not only extend the input sequence length but also inject domain-specific information, providing clear and targeted guidance for the pruning process. This mechanism explicitly integrates domain knowledge into the input sequence, enabling the model to better understand contextual semantics, effectively reduce cross-domain semantic interference, and enhance its ability to capture domain-specific features.To select expert neurons, we use a statistic to inform the importance of each neuron. During the prompt phase, this is achieved by calculating the -norm of along the token axis:By selecting the first k indices from s, we can determine the neurons used for the sample generation phase and form the set E. Using the expert neurons in the set E, we can find the previously mentioned , , , , and by selecting corresponding rows and columns in , , , , and .

- Generation phase: When generating tokens, we utilize the pruned layers containing the expert neurons and to approximate for all subsequent tokens.

4. Results and Discussion

4.1. Experimental Settings

We used Llama2 7B [2] as the backbone of our pretrained language model and then performed staged adaptation on 4 GB of a Tibetan corpus to find the lowest validation loss as our subsequent backbone model. Our default prompt setting was the domain-dependent prompt. In the inference stage, the default batch size was set to one, with the FF sparsity fixed at 50%.

The Tibetan NER dataset we used is primarily divided into two domains—news and Tibetan medicine–sourced from Tibet University, with a total of 20,215 sentences. In the Tibetan medicine domain, the dataset includes five essential types of medically related entities: symptom, medical examination, drug, operation, and drug category, totaling 10,000 sentences. In the Tibetan news domain, the dataset covers three entity types—person, organization, and location—totaling 10,215 sentences.

We also evaluated our methods on the Thaiand and Vietnamese NER datasets. The Thai NER dataset [36] contains 14 entity types, including date, time, email, length, location, company or organization, person’s name, phone number, temperature, URL, zip code, amount, legislation, and percentage. As these entity types span multiple domains, we considered it a cross-domain dataset. PhoNER_COVID19 [37] is a Vietnamese NER dataset focused on extracting COVID-19-related information. It defines 10 entity types, including patient ID, name, age, gender, occupation, location, organization, symptoms and diseases, transportation, and date. This dataset is designed to support the extraction of COVID-19 patient information and related downstream applications, and we considered it a single-domain dataset. We used the F1 score to evaluate our experimental results. The F1 score [38], precision, and recall are key metrics for evaluating the performance of models in NER tasks. NER requires models to not only correctly identify entities but also accurately classify them, making a comprehensive evaluation of different aspects of model performance essential. The F1 score combines precision and recall, providing a balanced measure of the overall performance. It is defined as the harmonic mean of the precision and recall, which is calculated as follows:

Precision refers to the proportion of entities identified by the model which actually belong to the correct entity category. This reflects the model’s accuracy in predictions. Its formula is

Recall refers to the proportion of actual entities which the model correctly identifies. This reflects the model’s ability to cover the target entities. Its formula is

where TP (true positives) is the number of samples correctly predicted to be positive by the model; FP (false positives) is the number of samples incorrectly predicted to be positive by the model; and FN (false negatives) is the number of samples incorrectly predicted to be negative by the model.

4.2. Comparison to State-of-the-Art Approaches

In Table 1, we compare the performance of our model with the latest Tibetan NER methods, including Lattice LSTM, FLAT, SWS-FLAT, and Llama2 + prompt.

Table 1.

Comparison to recent state-of-the-art methods. All state-of-the-art methods are domain-specific models. TiMed represents Tibetan medicine domain dataset, while TiNews represents the Tibetan news domain dataset.

Lattice long short-term memory (LSTM) [39] incorporates lexical information into character sequences to enhance semantic representation, but it struggles with knowledge sharing and generalization across domains. Flat-lattice Transformer (FLAT) [40] improves upon lexical embedding by using a flat hierarchical structure to model the relationships between characters and words, achieving strong performance in high-resource domains but remaining limited in low-resource settings. Syllable-word-sentence embedding based on FLAT (SWS-FLAT) builds on FLAT by introducing BERT-based pretrained embeddings (syllable-word-sentence embedding), integrating multi-granularity information at the syllable, word, and sentence levels to improve generalization. Llama2 + prompt refers to fine-tuning the Llama2 model using task-defined prompts based on the staged adaptation of a large language model.

The experimental results show that our model outperformed the baseline models and achieved optimal performance across multiple Tibetan datasets. Our model achieved F1 scores of 93.12 and 97.21 on the TiMed and TiNews datasets, respectively, significantly outperforming the other models. Even compared with Llama2 with the prompt-based approach, our method demonstrated a clear advantage. Unlike traditional models, our approach is designed as a single model for cross-domain Tibetan NER tasks. Through cross-domain joint learning, it enables effective knowledge sharing across domains, avoiding the need for separate model training for each domain and thereby reducing computational and storage costs.

To eliminate the impact of the difference in data availability, we conducted mixed-domain training experiments on the relatively better-performing baseline model SWS-FLAT. The experimental results show that the F1 scores of SWS-FLAT in different domains dropped significantly when trained on cross-domain datasets, likely due to interference between the datasets. This issue was further exacerbated by the significant differences between the Tibetan news and medical domains. For instance, news texts are concise, straightforward, and information-dense, while medical texts are more specialized, featuring extensive medical terminology and complex sentence structures. These stylistic differences prevent effective feature transfer between domains, leading to performance degradation in both.

In contrast, our method successfully decoupled the model from the dataset, enabling accurate cross-domain entity recognition within a single framework. It effectively extracted shared features across domains while preserving domain-specific information, making it highly adaptable to diverse domain requirements.

4.3. Comparison to Single-Domain Performance

To verify that cross-domain joint learning can learn shared information between datasets and thus outperform seq2seq NER models trained on single-domain datasets, we selected the best-performing model on the validation set for single-domain training and reported its F1 score on the test set. For cross-domain joint learning, we selected the model with the highest average F1 score across all domain datasets and reported its F1 score on each dataset separately.

The experimental results are shown in Table 2. The results indicate that the cross-domain joint learning model can learn shared information across different domains, with an average improvement of 2.85 points, surpassing the models trained on a single domain and achieving the goal of decoupling the datasets from the model. Specifically, our model significantly outperformed the single-domain models in the TiNews (F1 93.12 versus 89.60) and TiMed (F1 97.21 versus 94.96) domain datasets.

Table 2.

Comparison to single-domain performance.

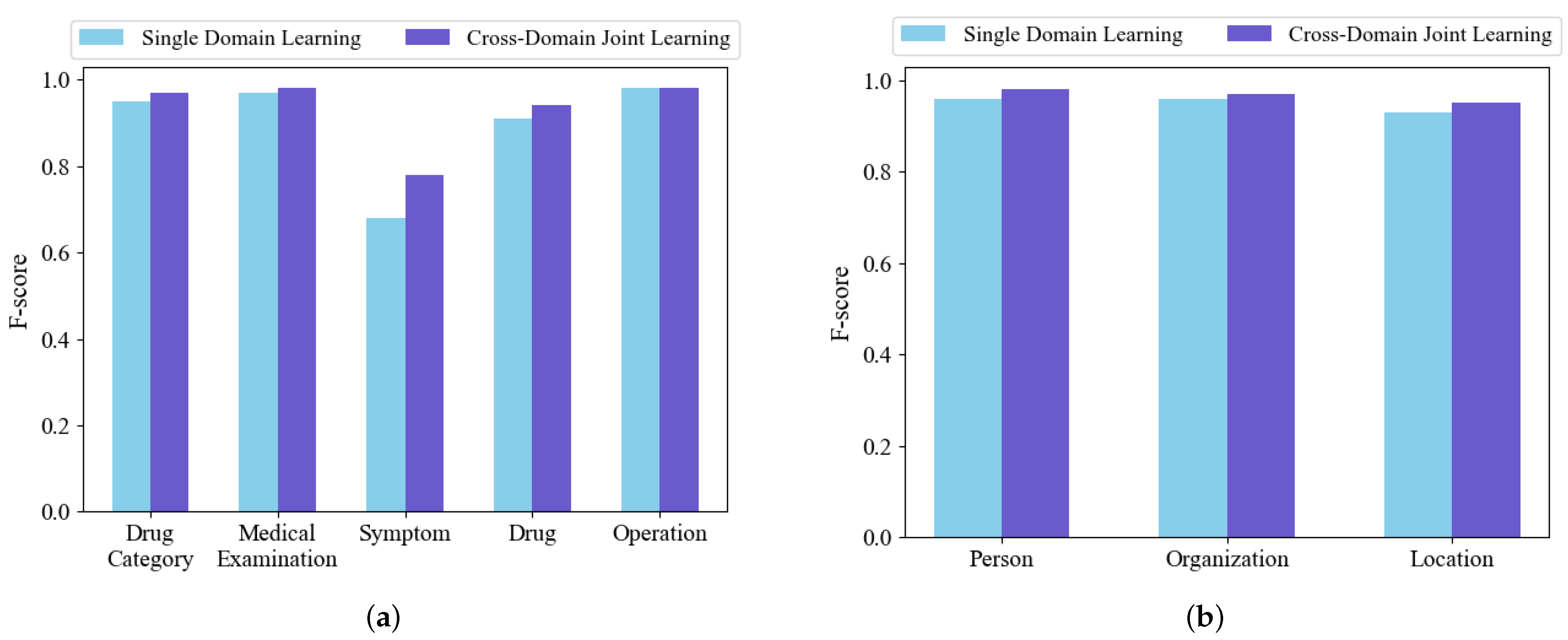

In Figure 2, we further report the F1 scores for each named entity type in the TiMed and TiNews domain datasets to provide a more detailed analysis. For these datasets, our model achieved higher F1 scores for all entity types compared with the models trained on single domains, demonstrating that cross-domain joint learning can leverage the shared knowledge across domains to improve its fine-grained learning capabilities. Additionally, the F1 score for the “symptom” entity in the TiMed domain dataset, as shown in Figure 2a, significantly surpassed that of the single-domain model, indicating that cross-domain joint learning can also provide additional discriminative ability for entity types which appear in only one domain dataset.

Figure 2.

Fine-grained named entity F-score of (a) TiMed (b) TiNews datasets.

4.4. Ablations on Prefixed Prompt Set-Ups During Cross-Domain Joint Learning

To verify our design choices for prefixed prompts during cross-domain joint learning, we conducted ablation experiments with different prefixed prompt settings and reported the results for the Tibetan news and Tibetan medicine datasets. The results are shown in Table 3. We also present the overall average performance in the rightmost column. Our default setting was the “domain-dependent prompt”. We compared this with two ablation settings: multi-domain prompt and domain-dependent prompt + exact match.

Table 3.

Ablations on prefixed prompt set-ups during cross-domain joint learning.

We observed that the “domain-dependent prompt” performed better compared with the multi-domain prompt (average F1 score of 95.17 versus 92.86) and the domain-dependent prompt + exact match (average F1 score of 95.17 versus 90.04). This was expected, as exact match can lead to information leakage during training, causing the model to learn less effectively and resulting in degraded performance during inference. On the other hand, the multi-domain prompt produced too many “NULL” examples, reducing the proportion of positive examples, and the model learned less about the actual labels. The “domain-dependent prompt” helped balance positive and “NULL” examples, reducing the risk of information leakage and improving learning efficiency.

Table 4 analyzes the impact of the cross-domain data training strategy and domain-dependent prompt on model performance. The results show that even with the same amount of cross-domain data, mixed-domain training without a domain-dependent prompt achieved an average F1 score of only 90.05. This indicates that the data volume and diversity alone do not directly lead to significant performance improvements, as the lack of domain-specific guidance significantly limits the model’s ability to capture domain-relevant features. In comparison, single-domain learning with a domain-dependent prompt improved the average F1 score to 92.28, a 2.23 point increase over mixed-domain training without a domain-dependent prompt. This indicates that even in single-domain training, a domain-dependent prompt can provide precise guidance to help the model achieve better performance. Our proposed method, cross-domain joint learning, which includes a domain-dependent prompt, further improved the average F1 score to 95.17, achieving a 2.89 point increase over single-domain learning and a 5.12 point increase over mixed-domain training without a domain-dependent prompt.

Table 4.

Impact of cross-domain data training strategy and domain-dependent prompt on performance.

These results strongly demonstrate that our method, cross-domain joint learning, is the key to performance improvement. It effectively leverages the advantages of cross-domain data and domain-dependent prompt, reduces data interference between domains, enhances the model’s ability to capture domain-specific information, extracts shared features across domains, facilitates knowledge sharing, and improves the model’s robustness and stability.

4.5. Ablations on Domain-Dependent Prompt Set-Ups During Inference

To verify our design choices for prefixed prompts during the inference phase, we conducted ablation experiments with different prefixed prompt settings and reported the results on the Tibetan news and Tibetan medicine datasets. The results are shown in Table 5. We also present the overall average performance in the rightmost column. Our default setting was the “domain-dependent prompt”. To avoid information leakage, we compared it with the ablation setting of the multi-domain prompt.

Table 5.

Ablations on domain-dependent prompt set-ups during inference.

We observed that the “domain-dependent prompt” outperformed the multi-domain prompt (average F1 score of 92.87 versus 21.30). Although the multi-domain prompt can increase the length of prompts during inference and reduce the difficulty of model generation, it results in an excessive number of “NULL” examples, leading to a decrease in the inference F1 score. At the same time, using adaptive structured pruning based on domain-dependent prompt can significantly increase the inference speed and reduce memory usage during the inference process, while the performance is nearly unaffected.

4.6. Applicability to Other Low-Resource Languages

To verify the generalization ability of our method, we conducted experiments on Thai and Vietnamese NER datasets. Since the original Llama2 model does not support these two languages, and the cost of continued pretraining is relatively high, we directly adopted cross-domain joint learning based on domain-dependent prompt. As shown in Table 6, our method still performed well, benefiting from the guidance of domain-dependent prompt, which enabled the model to accurately capture features related to entity types while mitigating the impact of data distribution differences on performance. If further pretraining on a large-scale corpus is possible, then this may further improve the F1 score.

Table 6.

The applicability to other low-resource languages.

4.7. Generation Phase Latency (s)

To validate the advantage of our model in terms of inference speed, we collected dataset samples of the same length and averaged the results. We measured the latency under different scenarios. “Prompt” represents the latency during the prompt phase based on domain-dependent prompt, and “latency (s)” refers to the latency during the generation phase.

As shown in Table 7, our method achieved a 1.07× improvement in latency, demonstrating that our approach can enhance the inference efficiency of large models while maintaining adaptability and preserving the accuracy of the full model.

Table 7.

Generation phase latency (s).

5. Conclusions

In this work, we transformed the Tibetan NER task into a sequence generation task and proposed cross-domain joint learning, enabling a single model to simultaneously recognize entities from different domains. This approach achieved decoupling of the model from datasets and significantly improved performance. Additionally, to reduce the computational cost of LLMs in the Tibetan NER task and improve the inference speed, we introduced an adaptive structured pruning method based on domain-dependent prompt during the inference phase. This approach significantly enhanced efficiency while minimally impacting the model’s performance. In the future, we will continue to expand and integrate more Tibetan NER datasets from various domains into the model’s training to further advance this field.

Author Contributions

Conceptualization, J.Z. and F.G.; methodology, J.Z.; software, J.Z. and X.W.; validation, J.Z. and F.G.; formal analysis, L.Y.; investigation, J.Z. and D.T.; resources, N.T. and G.L.; writing—original draft, J.Z.; writing—review and editing, J.Z. and G.L.; project administration, N.T. and G.L.; funding acquisition, N.T. and G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Key R&D Program of China (grant number 2022ZD0116100); in part by the National Natural Science Foundation of China Key Project (grant number 62436006); in part by the National Natural Science Foundation of China Youth Project (grant number 62406257); in part by the Tibet Autonomous Region Natural Science Foundation General Project (grant number XZ202401ZR0031); and in part by the Tibet University “High-Level Talent Training Program” Project (grant number 2022-GSP-S098).

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, X.; Zheng, Y.; Du, Z.; Ding, M.; Qian, Y.; Yang, Z.; Tang, J. GPT understands, too. AI Open 2024, 5, 208–215. [Google Scholar] [CrossRef]

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama2: Open foundation and fine-tuned chat models. arXiv 2023, arXiv:2307.09288. [Google Scholar]

- Su, D.; Xu, Y.; Winata, G.I.; Xu, P.; Kim, H.; Liu, Z.; Fung, P. Generalizing question answering system with pre-trained language model fine-tuning. In Proceedings of the 2nd Workshop on Machine Reading for Question Answering, Hong Kong, China, 4 November 2019; pp. 203–211. [Google Scholar]

- Wang, L.; Lyu, C.; Ji, T.; Zhang, Z.; Yu, D.; Shi, S.; Tu, Z. Document-level machine translation with large language models. arXiv 2023, arXiv:2304.02210. [Google Scholar]

- Sun, X.; Li, X.; Li, J.; Wu, F.; Guo, S.; Zhang, T.; Wang, G. Text classification via large language models. arXiv 2023, arXiv:2305.08377. [Google Scholar]

- Zhang, W.; Deng, Y.; Liu, B.; Pan, S.J.; Bing, L. Sentiment analysis in the era of large language models: A reality check. arXiv 2023, arXiv:2305.15005. [Google Scholar]

- Wei, X.; Cui, X.; Cheng, N.; Wang, X.; Zhang, X.; Huang, S.; Han, W. Chatie: Zero-shot information extraction via chatting with ChatGPT. arXiv 2023, arXiv:2302.10205. [Google Scholar]

- Wang, Y.; Mishra, S.; Alipoormolabashi, P.; Kordi, Y.; Mirzaei, A.; Arunkumar, A.; Khashabi, D. Super-naturalinstructions: Generalization via declarative instructions on 1600+ NLP tasks. arXiv 2022, arXiv:2204.07705. [Google Scholar]

- Lu, J.; Zhu, D.; Han, W.; Zhao, R.; Mac Namee, B.; Tan, F. What Makes Pre-trained Language Models Better Zero-shot Learners? arXiv 2022, arXiv:2209.15206. [Google Scholar]

- Xie, S.M.; Raghunathan, A.; Liang, P.; Ma, T. An explanation of in-context learning as implicit Bayesian inference. arXiv 2021, arXiv:2111.02080. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Khashabi, D.; Min, S.; Khot, T.; Sabharwal, A.; Tafjord, O.; Clark, P.; Hajishirzi, H. UNIFIEDQA: Crossing Format Boundaries with a Single QA System. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 1896–1907. [Google Scholar]

- Lester, B.; Al-Rfou, R.; Constant, N. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 3045–3059. [Google Scholar]

- Sekine, S.; Nobata, C. Definition, Dictionaries and Tagger for Extended Named Entity Hierarchy. In Proceedings of the LREC, Lisbon, Portugal, 26–28 May 2004; pp. 1977–1980. [Google Scholar]

- Rau, L.F. Extracting company names from text. In Proceedings of the Conference on Artificial Intelligence Application, Miami Beach, FL, USA, 24–28 February 1991; pp. 29–30. [Google Scholar]

- Collobert, R. Deep learning for efficient discriminative parsing. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 224–232. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Mehta, R.; Varma, V. LLM-RM at SemEval-2023 Task 2: Multilingual complex NER using XLM-RoBERTa. arXiv 2023, arXiv:2305.03300. [Google Scholar]

- Abadeer, M. Assessment of DistilBERT performance on named entity recognition task for the detection of protected health information and medical concepts. In Proceedings of the 3rd Clinical Natural Language Processing Workshop, Online, 19 November 2020; Association for Computational Linguistics: Kerrville, TX, USA, 2020; pp. 158–167. [Google Scholar]

- Muennighoff, N.; Wang, T.; Sutawika, L.; Roberts, A.; Biderman, S.; Le Scao, T.; Bari, M.S.; Shen, S.; Yong, Z.-X.; Schoelkopf, H.; et al. Crosslingual generalization through multitask finetuning. arXiv 2022, arXiv:2211.01786. [Google Scholar]

- Laskar, M.T.R.; Bari, M.S.; Rahman, M.; Bhuiyan, M.A.H.; Joty, S.; Huang, J.X. A systematic study and comprehensive evaluation of ChatGPT on benchmark datasets. arXiv 2023, arXiv:2305.18486. [Google Scholar]

- Ashok, D.; Lipton, Z.C. Promptner: Prompting for named entity recognition. arXiv 2023, arXiv:2305.15444. [Google Scholar]

- Wang, S.; Sun, X.; Li, X.; Ouyang, R.; Wu, F.; Zhang, T.; Li, J.; Wang, G. GPT-NER: Named entity recognition via large language models. arXiv 2023, arXiv:2304.10428. [Google Scholar]

- Hu, Y.; Ameer, I.; Zuo, X.; Peng, X.; Zhou, Y.; Li, Z.; Li, Y.; Li, J.; Jiang, X.; Xu, H. Zero-shot clinical entity recognition using ChatGPT. arXiv 2023, arXiv:2303.16416. [Google Scholar]

- Li, J.; Fei, H.; Liu, J.; Wu, S.; Zhang, M.; Teng, C.; Li, F. Unified Named Entity Recognition as Word-Word Relation Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22 February–1 March 2022; Volume 36, No. 10. pp. 10965–10973. [Google Scholar]

- Zhao, S.; Wang, C.; Hu, M.; Yan, T.; Wang, M. MCL: Multi-Granularity Contrastive Learning Framework for Chinese NER. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, No. 11. pp. 14011–14019. [Google Scholar]

- Zheng, J.; Chen, H.; Ma, Q. Cross-Domain Named Entity Recognition via Graph Matching. arXiv 2024, arXiv:2408.00981. [Google Scholar]

- Xia, M.; Zhong, Z.; Chen, D. Structured pruning learns compact and accurate models. arXiv 2022, arXiv:2204.00408. [Google Scholar]

- Santacroce, M.; Wen, Z.; Shen, Y.; Li, Y. What matters in the structured pruning of generative language models? arXiv 2023, arXiv:2302.03773. [Google Scholar]

- Ma, X.; Fang, G.; Wang, X. LLM-Pruner: On the Structural Pruning of Large Language Models. Adv. Neural Inf. Process. Syst. 2023, 36, 21702–21720. [Google Scholar]

- Li, Y.; Yu, Y.; Zhang, Q.; Liang, C.; He, P.; Chen, W.; Zhao, T. Losparse: Structured compression of large language models based on low-rank and sparse approximation. arXiv 2023, arXiv:2306.11222. [Google Scholar]

- Xia, M.; Gao, T.; Zeng, Z.; Chen, D. Sheared Llama: Accelerating language model pre-training via structured pruning. arXiv 2023, arXiv:2310.06694. [Google Scholar]

- Fedus, W.; Zoph, B.; Shazeer, N. Switch transformers: Scaling to trillion parameter models with simple and efficient sparsity. J. Mach. Learn. Res. 2022, 23, 5232–5270. [Google Scholar]

- Jiang, A.Q.; Sablayrolles, A.; Roux, A.; Mensch, A.; Savary, B.; Bamford, C.; Chaplot, D.S.; Casas, D.D.; Hanna, E.B.; Bress, F.; et al. Mixtral of experts. arXiv 2024, arXiv:2401.04088. [Google Scholar]

- Dong, H.; Chen, B.; Chi, Y. Prompt-Prompted Adaptive Structured Pruning for Efficient LLM Generation. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024. [Google Scholar]

- Available online: https://doi.org/10.5281/zenodo.10795907 (accessed on 30 November 2024).

- Truong, T.H.; Dao, M.H.; Nguyen, D.Q. COVID-19 Named Entity Recognition for Vietnamese. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; Association for Computational Linguistics: Kerrville, TX, USA, 2021; pp. 2146–2153. [Google Scholar]

- Chinchor, N.; Sundheim, B.M. MUC-5 Evaluation Metrics. In Proceedings of the Fifth Message Understanding Conference (MUC-5), Baltimore, MD, USA, 25–27 August 1993. [Google Scholar]

- Zhang, Y.; Yang, J. Chinese NER using lattice LSTM. arXiv 2018, arXiv:1805.02023. [Google Scholar]

- Li, X.; Yan, H.; Qiu, X.; Huang, X. FLAT: Chinese NER Using Flat-Lattice Transformer. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6836–6842. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).