Abstract

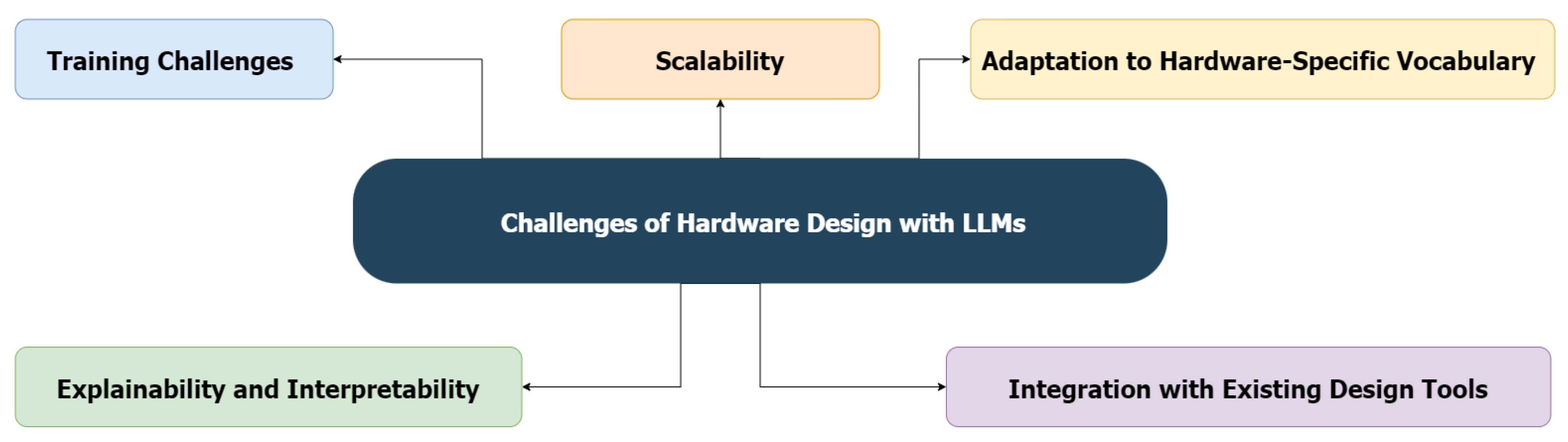

Background: Large Language Models (LLMs) are emerging as promising tools in hardware design and verification, with recent advancements suggesting they could fundamentally reshape conventional practices. Objective: This study examines the significance of LLMs in shaping the future of hardware design and verification. It offers an extensive literature review, addresses key challenges, and highlights open research questions in this field. Design: In this scoping review, we survey over 360 papers, most of them published between 2022 and 2024, including 71 that are directly relevant to the topic, to evaluate the current role of LLMs in advancing automation, optimization, and innovation in hardware design and verification workflows. Results: Our review highlights LLM applications across synthesis, simulation, and formal verification, emphasizing their potential to streamline development processes while upholding high standards of accuracy and performance. We identify critical challenges, such as scalability, model interpretability, and the alignment of LLMs with domain-specific languages and methodologies. Furthermore, we discuss open issues, including the necessity for tailored model fine-tuning, integration with existing Electronic Design Automation (EDA) tools, and effective handling of complex data structures typical of hardware projects. Conclusions: This survey not only consolidates existing knowledge but also outlines prospective research directions, underscoring the transformative role LLMs could play in the future of hardware design and verification.

1. Introduction

LLMs such as OpenAI’s GPT-4 (https://chatgpt.com, accessed on 29 December 2024), Google’s Gemini (https://gemini.google.com/app, accessed on 29 December 2024), Google’s Bidirectional Encoder Representations from Transformers (BERT) (https://github.com/google-research/bert, accessed on 29 December 2024), and Facebook’s Bidirectional and Auto-Regressive Transformers (BART) (https://huggingface.co/docs/transformers/model_doc/bart, accessed on 29 December 2024), a transformer-based denoising sequence-to-sequence model, are at the forefront of Artificial Intelligence (AI) research, revolutionizing how machines understand and generate human language. These models are trained on extensive datasets covering a wide spectrum of human discourse, enabling them to perform complex tasks including translation, summarization, conversation, and creative content generation [1,2,3,4,5]. Recent advancements in this field, driven by innovative model architectures, refined training methodologies, and expanded data processing capabilities, have significantly enhanced the ability of these models to deliver nuanced and contextually relevant outputs. This evolution reflects a growing sophistication in AI’s approach to Natural Language Processing (NLP), positioning LLMs as crucial tools in both academic research and practical applications and transforming interactions between humans and machines [5,6,7].

This evolution can be traced back to early statistical language models such as n-gram models, which predicted the next word from the frequencies of the preceding word sequences observed in a training corpus. Although these models provided a foundational approach to text prediction, their inability to capture context beyond a short, fixed window restricted them to basic tasks [8,9,10,11,12]. The advent of neural network-based models, especially Recurrent Neural Networks (RNNs), represented a significant advancement, offering the ability to retain information over longer text sequences and thus to handle more complex dialogues and text structures [13,14,15]. Despite these advances, RNNs continued to struggle with scalability and long-term dependencies, which led to the creation of transformer models. These models introduced a self-attention mechanism that processes all positions of a sequence simultaneously and weighs the relevance of each token to every other token, improving the contextual interpretation of text. This breakthrough underpins modern LLMs, which are pre-trained on extensive web-text data and subsequently fine-tuned for specific tasks, enabling them to generate nuanced, stylistically diverse, and seemingly authentic human text [16,17,18,19,20,21].
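As a minimal illustration of the n-gram idea, the sketch below builds a bigram (n = 2) model: it counts which word follows which in a tiny corpus and turns those counts into maximum-likelihood next-word probabilities. The three-sentence corpus and the function names are invented for illustration, and real n-gram models add smoothing for unseen word pairs, which is omitted here.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # Sentence boundary markers let the model predict the first and last word.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Maximum-likelihood estimate of P(next | prev); empty dict for an unseen word."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}


# Toy corpus (invented for illustration).
corpus = [
    "the register stores the value",
    "the register resets on reset",
    "the counter stores the count",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
# → {'register': 0.4, 'value': 0.2, 'counter': 0.2, 'count': 0.2}
```

Because the prediction after "the" depends only on that single preceding word, the model cannot distinguish "the" at the start of a sentence from "the" after "stores"; this fixed, short context is the limitation that RNNs and transformers were designed to overcome.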

Moreover, with the advent of highly sophisticated models, LLMs have become an indispensable subject of both academic research and practical applications. These models necessitate thorough evaluations to fully understand their potential risks and impacts, both at task-specific and societal levels. In recent years, significant efforts have been invested in assessing LLMs from multiple perspectives, enhancing their applicability and effectiveness. The adaptability and deep comprehension abilities of LLMs have led to their extensive deployment across numerous AI domains. They are utilized not only in fundamental NLP tasks but also in complex scenarios involving autonomous agents and multimodal systems that integrate textual data with other data forms [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39]. The utility of LLMs spans several domains, including healthcare [40,41,42,43,44,45], education [46,47,48,49,50], law [51,52,53,54,55,56,57,58], finance [59,60,61,62,63], and sciences [64,65,66,67,68,69], where they substantially improve data analysis and decision-making processes. This wide-ranging application underscores the transformative impact of LLMs on both technological innovation and societal functions.

In particular, within domains like hardware design and verification, LLMs enhance productivity and innovation by automating and optimizing various stages of the design process. These models can assist engineers in generating design specifications, suggesting improvements, and even creating initial design drafts. By leveraging vast amounts of data and advanced algorithms, LLMs can identify patterns and propose design optimizations that might not be immediately apparent to human designers. This capability helps in reducing time-to-market and ensuring that hardware designs are both efficient and innovative [70].

Verification is a critical step in the hardware development lifecycle: it ensures that the hardware performs as intended and meets all specified requirements before going into production. Traditionally, this process has been time-consuming and prone to human error. LLMs can help automate much of the verification process by generating test cases, simulating hardware behavior, and identifying potential faults or discrepancies. They can analyze large amounts of verification data to predict potential issues and suggest solutions, thus enhancing the reliability and accuracy of the hardware verification process. This not only speeds up verification but also improves the quality of the final product [71].

In addition to automation, LLMs facilitate better communication and collaboration among hardware design and verification teams. By providing a common platform where designers, engineers, and verification experts can interact with the model, LLMs help bridge the gap between different teams. This collaborative approach helps ensure that all aspects of the hardware design and verification are aligned and that issues are identified and addressed early in the process. Furthermore, LLMs can serve as knowledge repositories, offering solutions based on previous designs and verifications, thus helping ensure that best practices are followed and past mistakes are not repeated [72].

Another significant benefit of LLMs in hardware design and verification is their ability to handle complex and high-dimensional data. Modern hardware designs are increasingly complex with numerous components and interdependencies. LLMs can manage this complexity by analyzing and processing large datasets to extract meaningful insights. They can model intricate relationships between different hardware components and predict how changes in one part of the design could impact the overall system. This holistic understanding is crucial for creating robust and reliable hardware systems [73].

Overall, the integration of LLMs in hardware design and verification not only fosters innovation but also supports the development of cutting-edge hardware technologies [74,75]. This survey explores the transformative role of LLMs in this domain, highlighting key contributions, addressing challenges, and discussing open issues that continue to shape this dynamic landscape. The goal is to provide a comprehensive overview that informs and inspires continued research and application of LLMs to improve hardware design and verification processes, and thereby contributes to a better understanding and use of LLMs. As a scoping review, this study systematically maps the research conducted in this area and identifies gaps in existing knowledge. The contributions of this paper can be summarized as follows:

- Identification of core applications: we detail the fundamental ways in which LLMs are currently applied in hardware design, debugging, and verification, providing a solid foundation to understand their impact.

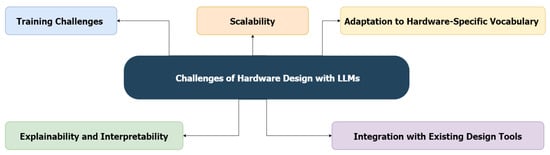

- Analysis of challenges: this paper presents a critical analysis of the inherent challenges in applying LLMs to hardware design, such as data scarcity, the need for specialized training, and integration with existing tools.

- Future directions and open issues: we outline potential future applications of LLMs in hardware design and verification and discuss methodological improvements to bridge the identified gaps.

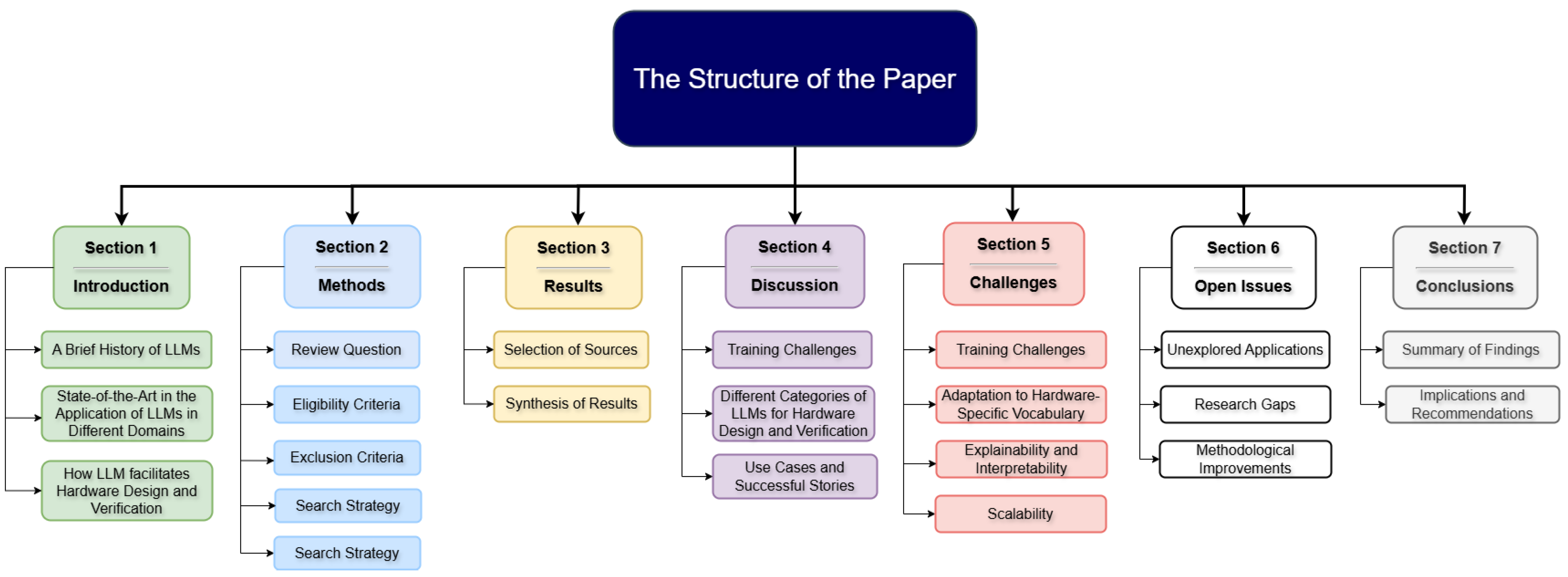

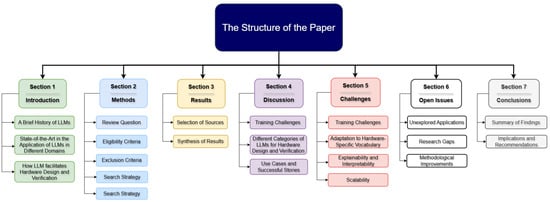

Figure 1 outlines the structure of the review paper, which is organized into seven sections. Section 1 serves as an introduction, offering an overview of LLMs, their historical development, related survey papers, and their role in facilitating hardware design and verification. Section 2 describes the methodology, which follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR), and Section 3 discusses the main results of applying this methodology. Section 4 provides a detailed literature review, discussing the application of LLMs in hardware design, categorizing different hardware design and verification methods, and highlighting significant use cases and success stories. Section 5 focuses on the challenges associated with applying LLMs in this field, such as training difficulties, adapting to hardware-specific terminology, ensuring explainability and interpretability, and integrating these models with existing design tools. Section 6 explores open issues, including unexplored applications, research gaps, and the need for methodological improvements. Finally, Section 7 concludes the paper with a summary of the findings and offers implications and recommendations for future research. This structured framework ensures a comprehensive exploration of the role of LLMs in hardware design and verification while addressing critical challenges and opportunities.

Figure 1.

Section organization of the paper.

1.1. A Brief History of LLMs

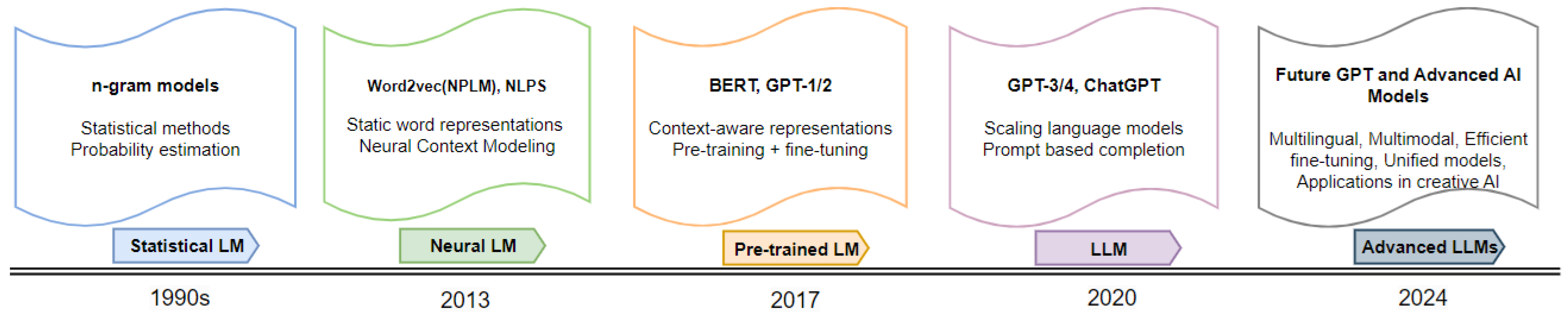

The evolution of LLMs represents a crucial aspect of the broader development in AI. This progression begins with the earliest models and extends through to the sophisticated systems that today significantly influence computational linguistics and AI applications. A brief history of LLMs is shown in Figure 2.

Figure 2.

Brief history of language models [5].

Initially, language processing relied on rule-based systems established in the mid-20th century, which were limited by strict linguistic rules and struggled to adapt to the variability of natural language. These systems, while foundational, offered limited utility for complex language tasks due to their inability to capture nuanced linguistic patterns [76]. The transition to statistical models like n-grams and Hidden Markov Models (HMMs) [77] in the late 20th century marked a pivotal enhancement. These models introduced a statistical approach to language processing, utilizing probabilities derived from large text corpora to predict language patterns. This shift allowed better handling of larger datasets, significantly improving real-world language processing capabilities. Despite these advancements, these models continued to struggle with deep contextual and semantic understanding, which later developments in algorithmic technology aimed to address [8,9,10,11,12].

By the early 2000s, the integration of neural networks, specifically RNNs [13,14,15] and Long Short-Term Memory (LSTM) networks [78], brought about substantial improvements in modeling sequential data. Additionally, the emergence of word embedding technologies like Word2Vec and GloVe advanced language modeling capabilities by mapping words into dense vector spaces, capturing complex semantic and syntactic relationships more effectively. Despite these innovations, the increasing complexity of neural networks raised new challenges, particularly in model interpretability and the computational resources required [14,79,80].

The early 2010s marked another significant advancement with the introduction of deep learning-based neural language models, notably the Recurrent Neural Network Language Model (RNNLM) in 2010, designed to effectively capture textual dependencies [81,82]. This development enabled the generation of more natural and contextually informed text. However, these models also faced limitations such as restricted memory capacity and extensive training demands [83]. In 2016, Google’s Neural Machine Translation (GNMT) system used deep learning to significantly enhance machine translation, moving away from traditional rule-based and statistical techniques towards a more robust neural approach. This development not only improved translation accuracy but also addressed complex NLP challenges with greater efficacy [84,85].

A major breakthrough occurred in 2017 with the development of the transformer model, which abandoned the sequential processing limitations of previous models in favor of self-attention mechanisms [16,17,86]. This innovation allowed for the parallel processing of words, drastically increasing efficiency and enhancing the model’s ability to manage long-range dependencies. The transformer architecture facilitated the creation of more sophisticated models such as BERT, which utilized bidirectional processing to achieve a deep understanding of text context, greatly improving performance across a multitude of NLP tasks [18,19,87]. Following BERT, models such as RoBERTa, T5, and DistilBERT have been tailored to meet the diverse requirements of various domains, illustrating the adaptability and expansiveness of LLM applications [88].
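The core of the self-attention mechanism is usually written as softmax(Q K^T / sqrt(d_k)) V, where the queries Q, keys K, and values V are linear projections of the token representations and d_k is the key dimension. The pure-Python sketch below implements a single attention head; it omits masking, multiple heads, and the rest of the transformer block, and the weight matrices in the usage lines are arbitrary placeholders rather than trained values.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention over the rows (tokens) of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    out = []
    for q_i in q:
        # Score token i against every token j, including itself.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        weights = softmax(scores)  # attention weights for token i sum to 1
        # Output for token i is the attention-weighted average of all value vectors.
        out.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return out


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-dimensional token vectors
print(self_attention(tokens, identity, identity, identity))
```

The loop visits tokens one at a time only for readability: each output row depends solely on Q, K, and V, not on the previous row's output, so all rows can be computed at once as matrix products. This independence is what allows transformers to process a sequence in parallel, unlike an RNN, whose hidden state must be updated step by step.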

Subsequently, the introduction of OpenAI’s GPT series further pushed the boundaries of what LLMs could achieve. Starting with GPT-1 and evolving through GPT-3, these models demonstrated exceptional capabilities in generating coherent and contextually relevant text across various applications. GPT-3, in particular, with its 175 billion parameters, showcased the potential of LLMs to perform complex language tasks such as translation, question answering, and creative writing with minimal task-specific tuning. The advent of GPT-4 further broadened these capabilities by accepting multimodal inputs that combine text and images, thus significantly expanding the scope of LLMs. Recent developments, including enhancements in GPT-4 and the introduction of models such as DALL-E 3, have continued this trend, emphasizing efficiency in fine-tuning and enhancing capabilities in creative AI fields, demonstrating the versatility and depth of current models [19,21,87,89,90,91,92].

This progression from statistical models to today’s advanced, multimodal, and domain-specific systems illustrates the dynamic and ongoing nature of LLM development. These continual innovations not only advance the technology but also significantly impact the fields of AI and computational linguistics. Innovations such as sparse attention mechanisms, more efficient training algorithms, and the use of specialized hardware like Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) have enabled researchers to build increasingly larger and more powerful models. Moreover, efforts to improve model interpretability, reduce bias, and ensure ethical use are becoming central to the field.

In summary, the history of LLMs is a story of rapid progress driven by breakthroughs in Machine Learning (ML) and neural network architectures. From early statistical models to the transformative impact of the transformer architecture and the rise of models like GPT-4, LLMs have evolved dramatically, reshaping our understanding of language and AI. As research continues, LLMs are poised to become even more integral to technological innovation and human–computer interaction in the years to come.

1.2. State-of-the-Art in the Application of LLMs in Different Domains

Due to the success of LLMs on various tasks and their increasing integration into AI research, reviewing the extensive survey literature on these models is essential. A significant number of surveys [5,93,94,95,96,97,98,99,100,101,102,103] provide detailed insights into the application of LLMs across different fields, demonstrating their advancements and wide-ranging uses. By analyzing research papers published primarily between 2022 and 2024, we aim to gain a deeper understanding of LLMs’ applications and evaluate their potential impact across various sectors.

In this context, the work by Huang et al. [93] presented a comprehensive survey of reasoning in LLMs, focusing on the current methodologies and techniques to enhance and evaluate reasoning capabilities in these models. The authors provided an in-depth review of various reasoning types, including deductive, inductive, and abductive reasoning, and discussed how these reasoning forms can be applied in LLMs. They also explored key methods used to elicit reasoning, such as fully supervised fine-tuning, prompting, and techniques like “chain of thought” prompting, which encourages models to generate reasoning steps explicitly. The authors reviewed benchmarks and evaluation methods to assess reasoning abilities and analyzed recent findings in this rapidly evolving field. Despite the advancements in reasoning with LLMs, the authors pointed out the limitations of current models, emphasizing that it remains unclear whether LLMs truly possess reasoning abilities or are merely following heuristics. Huang et al. concluded by offering insights into future directions, suggesting that better benchmarks and more robust reasoning techniques are needed to push the boundaries of LLMs’ reasoning capabilities. Their work serves as an essential resource for researchers seeking to understand the nuances of reasoning in LLMs and to guide future research in this critical area.

In the study by Xi et al. [94], the authors comprehensively surveyed the rise and potential of LLM-based agents. The authors traced the evolution of AI agents, with a particular focus on LLMs as foundational components. They explored the conceptual framework of LLM-based agents, which consists of three main parts: brain, perception, and action. This survey discussed how LLMs, particularly transformer models, have been leveraged to enhance various agent capabilities, such as knowledge processing, reasoning, and decision-making, allowing them to interact effectively with their environments. Furthermore, the study delved into the real-world applications of LLM-based agents across different sectors, including single-agent and multi-agent systems, as well as human-agent cooperation scenarios. It also highlighted how LLM-based agents can exhibit behaviors akin to social phenomena when placed in societies of multiple agents. In addition, the authors examined the ethical, security, and trustworthiness challenges posed by these agents, stressing the need for robust evaluation frameworks to ensure their responsible deployment. Finally, they presented future research directions, particularly around scaling LLM-based agents, improving their capabilities in real-world settings, and addressing open problems related to their generalization and adaptability.

In [95], the authors presented a detailed review of LLMs’ evolution, applications, and challenges. The authors highlighted the architecture and training methods of LLMs, particularly focusing on transformer-based models, and emphasized their significant contributions across a range of sectors, including medicine, education, finance, and engineering. They also explored both the potential and limitations of LLMs, addressing ethical concerns such as biases, the need for vast computational resources, and issues of model interpretability. Furthermore, the survey delved into emerging trends, including efforts to improve model robustness and fairness, while anticipating future directions for research and development in the field. This comprehensive analysis served as a valuable resource for researchers and practitioners, offering insights into the current state and future prospects of LLM technologies.

Naveed et al. [96] provided a comprehensive overview of LLMs, focusing on their architectural design, training methodologies, and diverse applications across various domains. The authors delved deeply into transformer models and their role in advancing NLP tasks. They also highlighted the challenges associated with LLM deployment, including ethical concerns, computational resource demands, and the complexity of training these models. Additionally, the survey explored the impact of LLMs on different sectors such as healthcare, engineering, and social sciences and identified potential research directions for the future. This review served as a key resource for researchers and practitioners looking to understand the current landscape of LLM development and deployment.

Fan et al. [97] presented a comprehensive bibliometric analysis of over 5000 publications on LLMs spanning from 2017 to 2023. The authors aimed to provide a detailed map of the progression and trends in LLM research, offering valuable insights for researchers, practitioners, and policymakers. The analysis delved into key developments in LLM algorithms and explored their applications across a wide range of fields, including NLP, medicine, engineering, and the social sciences. Additionally, the authors revealed the dynamic and fast-paced evolution of LLM research, highlighting the core algorithms that have driven advancements and examining how LLMs have been applied in diverse domains. By tracing these developments, the study underscored the substantial impact LLMs have had on both scientific research and technological innovation and provided a roadmap for future research in the field.

The study by Zhao et al. [5] offered an extensive survey of the evolution and impact of LLMs within AI and NLP. The authors traced the development from early statistical and neural language models to modern pre-trained language models (PLMs) with vast parameter sets. They highlighted the unique capabilities that emerge as LLMs scale, such as in-context learning and instruction-following, which distinguish them from smaller models. A significant portion of the survey was dedicated to the contributions of LLMs, including their role in advancing AI applications like ChatGPT. Organized around four key areas—pre-training, adaptation tuning, utilization, and capacity evaluation—the study provided a comprehensive analysis of current evaluation techniques and benchmarks while also identifying future research directions for enhancing LLMs and exploring their full potential.

In the study by Raiaan et al. [98], the authors conducted a comprehensive review of LLMs, focusing on their architecture, particularly transformer-based models, and their role in advancing NLP tasks such as text generation, translation, and question answering. The authors explored the historical development of LLMs, beginning with early neural network-based models, and examined the evolution of architectures like transformers, which have significantly enhanced the capabilities of LLMs. They discussed key aspects such as training methods, datasets, and the implementation of LLMs across various domains, including healthcare, education, and business.

In another study, Minaee et al. [99] surveyed the rise and development of LLMs, focusing on key models like GPT, LLaMA, and PaLM. The authors offered a comprehensive analysis of their architectures, training methodologies, and the scaling laws that underpin their performance in natural language tasks. Additionally, the survey examined key advancements in LLM development techniques, evaluated commonly used training datasets, and compared the effectiveness of different models through benchmark testing. Importantly, the study explored the emergent abilities of LLMs, such as in-context learning and multi-step reasoning, that differentiate them from smaller models while also addressing real-world applications, current limitations, and potential future research directions.

In [100], the authors provided an in-depth analysis of the methodologies and technological advancements in the training and inference phases of LLMs. The authors explored various aspects of LLM development, including data preprocessing, model architecture, pre-training tasks, and fine-tuning strategies. Additionally, they covered the deployment of LLMs, with a particular emphasis on cost-efficient training, model compression, and the optimization of computational resources. The review concluded by discussing future trends and potential developments in LLM technology, making it a valuable resource for understanding the current and future landscape of LLM research and deployment.

Cui et al. [101] presented a comprehensive survey on the role of Multimodal Large Language Models (MLLMs) in advancing autonomous driving technologies. The authors systematically explored the evolution and integration of LLMs with vision foundation models, focusing on their potential to enhance perception, decision-making, and control in autonomous vehicles. They reviewed current methodologies and real-world applications of MLLMs in the context of autonomous driving, including insights from the 1st WACV Workshop on Large Language and Vision Models for Autonomous Driving (LLVM-AD). The study highlighted emerging research trends, key challenges, and innovative approaches to improving autonomous driving systems through MLLM technology, emphasizing the importance of multimodal learning for the future of autonomous vehicles. Additionally, the authors stressed the need for further research to address critical issues like safety, data processing, and real-time decision-making in the deployment of these models.

Chang et al. [102] thoroughly explored the essential practices for assessing the performance and applicability of LLMs. The authors systematically reviewed evaluation methodologies focusing on what aspects of LLMs to evaluate, where these evaluations should occur, and the best practices on how to conduct them. They explored evaluations across various domains, including NLP, reasoning, medical applications, ethics, and education, among others. The study highlighted successful and unsuccessful case studies in LLM applications, providing critical insight into future challenges that might arise in LLM evaluation and stressing the need for a discipline-specific approach to effectively support the ongoing development of these models.

Kachris [103] provided a comprehensive analysis of the various hardware solutions designed to optimize the performance and efficiency of LLMs. The author explored a wide variety of accelerators, including GPUs, Field-Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), providing a detailed discussion of their architectures, performance, and energy efficiency metrics. The survey focused on the significant computational demands of LLMs in both training and inference and evaluated how these accelerators help meet these demands. It also highlighted the trade-offs between performance and energy consumption, making it a valuable resource for those seeking to optimize hardware solutions for LLM deployment in data centers and edge computing.

Table 1 provides a comparison between various review papers, categorizing them based on critical features such as LLM models, Application Programming Interfaces (APIs), datasets, domain-specific LLMs, ML-based comparisons, taxonomies, architectures, performance, hardware specifications for testing and training, and configurations.

Table 1.

Comparison of LLM review papers.

1.3. How Do LLMs Facilitate Hardware Design and Verification?

By automating repetitive tasks, providing intelligent suggestions, and facilitating better communication and documentation, LLMs can significantly improve the efficiency and effectiveness of hardware design and verification processes. They enable engineers to focus on higher-level problem-solving and innovation, thereby accelerating the development cycle and improving the quality of hardware products. A comprehensive list of these tasks is given in Table 2.

Table 2.

Tasks in hardware design and verification that can be done by LLMs.

Before diving into the literature survey, we explore the potential of advanced LLMs such as GPT-4 and GPT-4o in addressing the current limitations of Hardware Description Language (HDL) design and verification. As one of the latest advancements in LLMs, GPT-4o introduces several enhancements over its predecessors that can help tackle key challenges in hardware design and verification tasks [104,105].

- Improved Context Handling: GPT-4-class models can process significantly larger input contexts than GPT-3.5 (e.g., 32k tokens for the GPT-4-32k variant and 128k tokens for GPT-4o). This capability is critical for analyzing complex, multi-file HDL projects, where maintaining contextual relationships between modules, signals, and timing constraints is essential.

- Higher Code Accuracy and Reliability: GPT-4o demonstrates superior accuracy in generating and debugging HDL code. Its advanced reasoning capabilities reduce syntax errors and hallucinations, which are common issues in earlier models. In internal benchmarks (e.g., OpenAI Codex (https://openai.com/index/openai-codex/, accessed on 29 December 2024) vs. GPT-4o), GPT-4o achieved higher pass rates for code generation tasks across diverse programming languages, including Verilog and VHDL.

- Integration with Multi-Modal Systems: GPT-4o’s multi-modal capabilities allow it to process text and visual information simultaneously. In hardware verification, this means it can analyze waveforms, simulation results, and timing diagrams alongside textual descriptions, enhancing its ability to detect errors or inconsistencies.

- Applications in Code Verification: GPT-4o can enhance formal verification processes by assisting with property generation for assertions and test cases. For example, GPT-4o can translate specifications into formal properties expressed as SystemVerilog Assertions (SVAs).
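Pass rates for code generation are commonly reported with the pass@k metric, using the unbiased estimator introduced in the evaluation of OpenAI Codex: for each problem, n candidate programs are sampled, c of them pass the problem's tests, and pass@k estimates the probability that at least one of k samples passes. The comparison cited above does not state which metric it used, so the sketch below only illustrates the standard estimator, 1 - C(n-c, k)/C(n, k), averaged over problems; the sample counts in the usage lines are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct, budget k <= n."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every subset of k samples contains at least one correct one
    # Probability that a random size-k subset contains only incorrect samples.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three Verilog generation problems, 10 samples each.
results = [(10, 3), (10, 0), (10, 10)]
print(mean_pass_at_k(results, k=1), mean_pass_at_k(results, k=5))
```

Sampling n > k candidates and applying the estimator gives a lower-variance figure than literally drawing k samples once, which is why reported pass rates depend on both k and the sampling setup.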
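To make the SVA target format concrete, the sketch below turns a simple, structured handshake requirement ("whenever req is asserted, ack must follow within N clock cycles") into SVA text. A deterministic template stands in for the LLM here; the HandshakeSpec structure, the to_sva helper, and the active-high reset convention are hypothetical choices for illustration, and any property an LLM produces for a real design would still need to be checked with a simulator or formal verification tool.

```python
from dataclasses import dataclass


@dataclass
class HandshakeSpec:
    """Hypothetical structured form of: every <trigger> is followed by <response>
    within <max_cycles> clock cycles."""
    name: str
    clock: str
    reset: str  # assumed active-high: the property is disabled while reset is 1
    trigger: str
    response: str
    max_cycles: int


def to_sva(spec: HandshakeSpec) -> str:
    """Render the spec as a named SVA property plus a labeled assert statement."""
    if spec.max_cycles < 1:
        raise ValueError("max_cycles must be >= 1")
    return (
        f"property {spec.name};\n"
        f"  @(posedge {spec.clock}) disable iff ({spec.reset})\n"
        # |-> is overlapping implication; ##[1:N] allows the response 1..N cycles later.
        f"    {spec.trigger} |-> ##[1:{spec.max_cycles}] {spec.response};\n"
        f"endproperty\n"
        f"assert_{spec.name}: assert property ({spec.name});"
    )


print(to_sva(HandshakeSpec("p_req_ack", "clk", "rst", "req", "ack", 4)))
```

For the usage line above, the generated property reads `req |-> ##[1:4] ack`, sampled on the rising edge of `clk` and disabled while `rst` is high.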

2. Methods

The reporting process followed the PRISMA-ScR checklist (Supplementary Materials) to maintain transparency and completeness [106].

2.1. Review Question

The research question guiding this review is as follows: What does the literature reveal about the role of LLMs in the future of hardware design and verification, what are the primary challenges, and what open issues warrant further investigation?

2.2. Eligibility Criteria

Research articles, conference papers, related books, and arXiv papers that explicitly address the use of LLMs in hardware design, verification, or related domains were considered. Only works published in peer-reviewed journals or presented at reputable conferences were included. Furthermore, studies had to clearly articulate their methodologies and applications, focusing on at least one of the following areas: hardware design, hardware/software codesign, hardware accelerators, hardware security, hardware debugging, or hardware verification. The timeline for inclusion spanned publications from 2018 to the present, reflecting the rapid evolution of LLMs in recent years.

2.3. Exclusion Criteria

Studies were excluded if they did not directly relate to the application of LLMs in hardware-related domains. General discussions on artificial intelligence without hardware-specific contexts, theoretical works without practical implications, and duplicate publications were omitted. Additionally, non-peer-reviewed content, such as blog posts and opinion articles, as well as works lacking sufficient technical detail, were excluded. Papers written in languages other than English were also excluded unless an accurate translation was available to ensure consistent comprehension and analysis.

2.4. Search Strategy

A comprehensive search strategy was implemented to identify relevant literature. We searched multiple academic databases, including Google Scholar, IEEE Xplore, ACM Digital Library, Scopus, and Web of Science, using a combination of keywords and Boolean operators (“AND”, “OR”). Keywords included “Large Language Models”, “Hardware Design”, “Hardware-Software Codesign”, “Hardware Verification”, “Hardware Accelerators”, and “Hardware Debugging”, among others. Supplementary searches were conducted on arXiv (https://arxiv.org/, accessed on 29 December 2024) and other preprint servers to capture emerging trends. We also manually screened the reference lists of included studies to identify additional relevant works. Search results were imported into the Mendeley reference management tool (https://www.mendeley.com/, accessed on 29 December 2024) for deduplication and systematic screening.
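The exact query strings are not reported. A common way to combine such keywords, assumed here for illustration, is to OR the synonyms within a concept group and AND the groups together. The sketch builds a plain query string in that form; the grouping and the helper `build_query` are hypothetical, and each database (IEEE Xplore, Scopus, and so on) has its own field codes that would still need to be added.

```python
def build_query(groups: list[list[str]]) -> str:
    """OR the quoted terms inside each concept group, then AND the groups."""
    clauses = []
    for terms in groups:
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)

query = build_query([
    ["Large Language Models"],
    ["Hardware Design", "Hardware-Software Codesign", "Hardware Verification",
     "Hardware Accelerators", "Hardware Debugging"],
])
print(query)
```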

2.5. Data Extraction and Data Synthesis

Data extraction was performed using a standardized template to capture relevant information, including publication year, authorship, research objectives, methodologies, key findings, and implications. A thematic synthesis approach was used to organize the data into predefined categories aligned with the study focus areas: hardware design, hardware/software codesign, hardware accelerators, hardware security, hardware debugging, and hardware verification. The synthesis aimed to identify patterns, highlight challenges, and map open issues within these domains. Visual representations, such as tables and diagrams, were employed to summarize findings and facilitate comparative analysis across studies.

3. Results

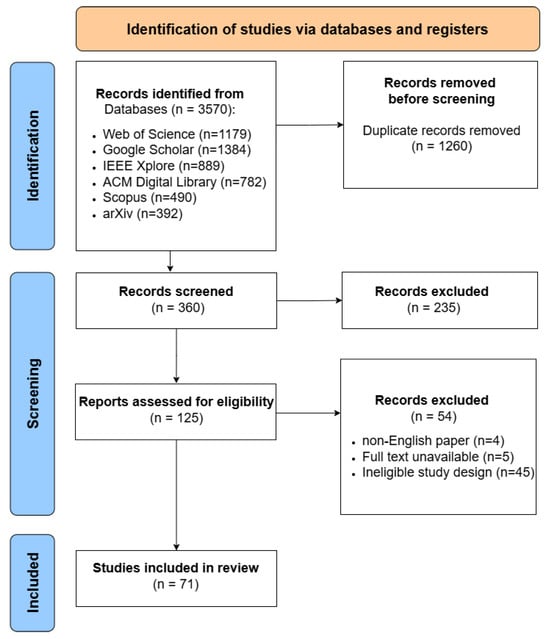

3.1. Selection of Sources

The literature search involved screening the titles and abstracts of studies for matches with the search keywords. A total of 3570 studies were initially identified based on these keywords. After duplicates and articles with unrelated content were removed, the remaining studies were retrieved in full text, screened, and evaluated for inclusion in the review. Figure 3 shows the PRISMA flow chart.

Figure 3.

PRISMA flow chart.

Initially, we reviewed 360 articles. After the first and second rounds of evaluation, which excluded papers irrelevant to the topic, papers not written in English, and papers whose full text was unavailable, 71 articles were selected for our survey. As stated in Section 1.2, we also reviewed several survey articles covering LLM applications outside the scope of hardware design and verification. The following subsection presents a statistical analysis of the selected studies.

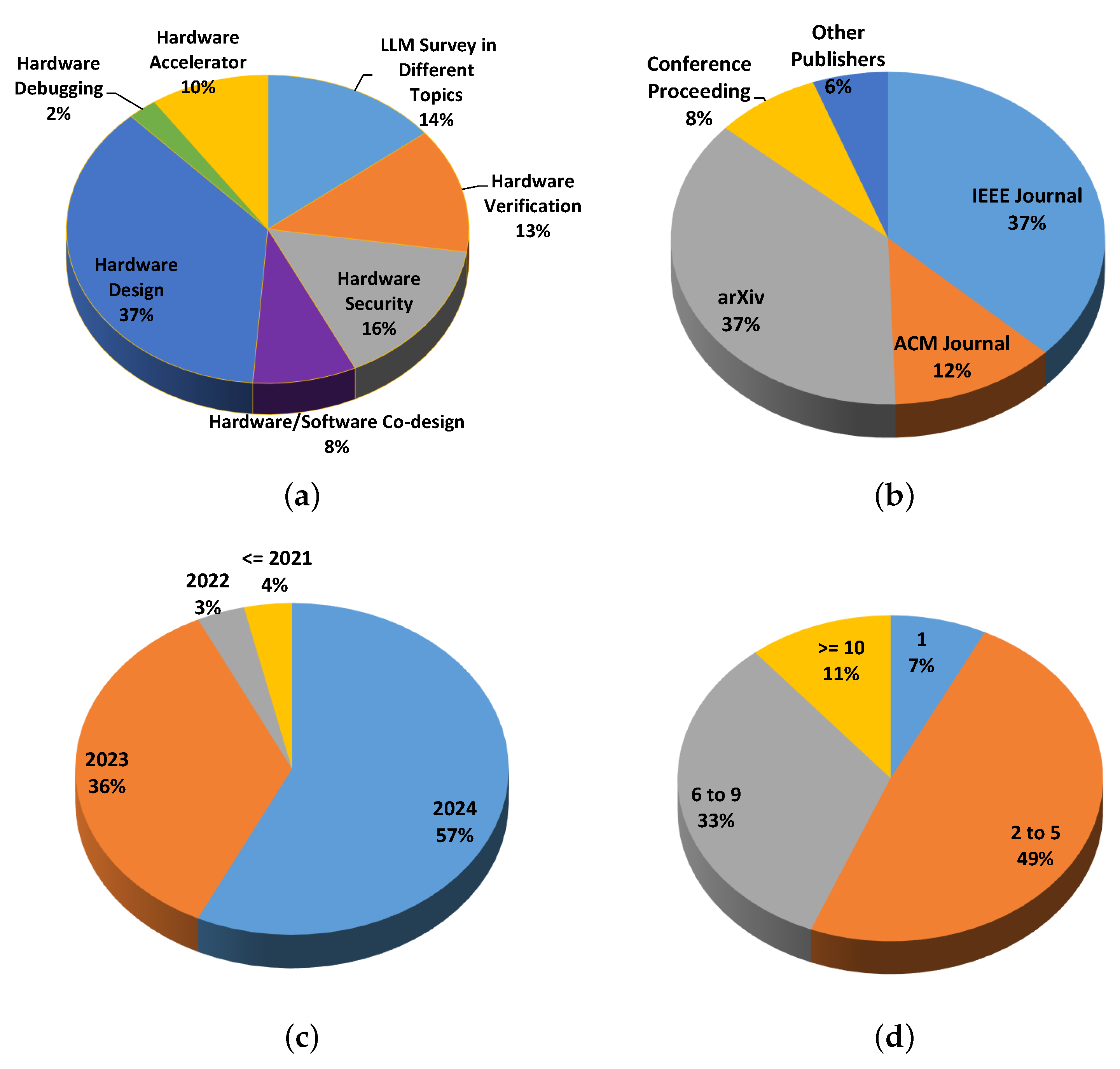

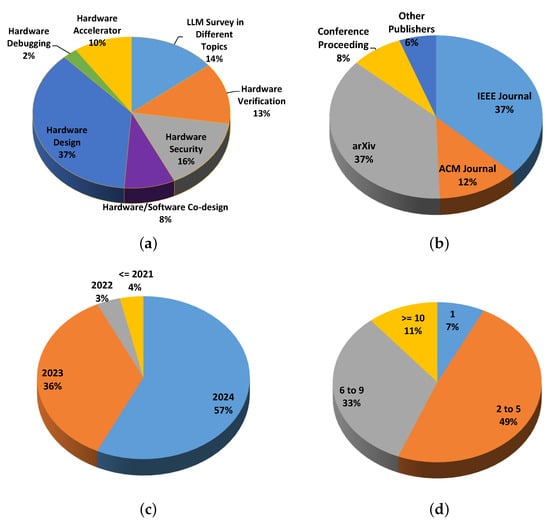

3.2. Synthesis of Results

Figure 4 breaks down the reviewed publications along four dimensions. In terms of research focus (Figure 4a), the largest share (37%) addresses hardware design, followed by hardware security (16%) and verification (13%), showing the wide applicability of LLMs across hardware domains. The distribution of publishers (Figure 4b) shows that arXiv and IEEE Journals dominate with 37%, followed by ACM Journals (12%), reflecting a mix of preprint and peer-reviewed dissemination. The publication timeline (Figure 4c) indicates a rapid increase in interest, with 91% of studies published in 2023–2024. Finally, the authorship data (Figure 4d) show that 49% of the works were authored by 2–5 contributors, indicating a collaborative trend, while 7% were single-authored and 11% involved large teams of 10 or more. Together, these trends illustrate both the growing engagement with LLMs in hardware domains and the diversity of publication venues.

Figure 4.

Statistical analysis of the papers reviewed in this survey. (a) Category; (b) publisher; (c) publishing year; (d) number of authors.
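The shares in Figure 4 are simple proportions over the included papers. A minimal sketch of such a tally, assuming each paper carries exactly one label (as with the single-category assignment used in this survey); the labels below are hypothetical and are not the survey's data.

```python
from collections import Counter

def percentage_breakdown(labels: list[str]) -> dict[str, int]:
    """Share of papers per label in whole percent (Python rounds ties to even)."""
    if not labels:
        raise ValueError("no papers to tally")
    counts = Counter(labels)
    total = len(labels)
    return {label: round(100 * n / total) for label, n in counts.most_common()}

# Hypothetical labels for ten papers, one category each.
papers = ["design"] * 5 + ["security"] * 2 + ["verification"] * 2 + ["debugging"]
print(percentage_breakdown(papers))
```

Because each share is rounded independently, the percentages in such a chart need not sum to exactly 100.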

4. Discussion

4.1. Overview of LLMs in Hardware Design

LLMs have become a transformative tool in hardware design and verification, bringing notable gains in efficiency and accuracy. Built on advances in AI and NLP, these models can analyze and interpret large volumes of documentation, code, and design specifications, which accelerates the early phases of hardware design. Engineers can use LLMs to automate the generation of design documents, improving consistency and reducing human error. This automation not only speeds up the design process but can also support the exploration of more complex and innovative designs, as the model can offer suggestions drawn from a wide range of previous designs and industry standards.

In hardware verification, LLMs can play an important role in improving the robustness and reliability of hardware systems. Verification is the step that ensures the designed hardware functions correctly under all specified conditions. LLMs can help generate comprehensive test cases and identify edge cases that human designers might overlook. They can also analyze test results efficiently, highlighting discrepancies and providing diagnostics that help pinpoint the root causes of failures. These capabilities can substantially reduce the time and resources required for verification, allowing quicker iterations and more reliable hardware products. As a result, integrating LLMs into hardware design and verification workflows is becoming increasingly important for staying competitive in the fast-paced technology industry.
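Edge-case generation is the easiest part of this workflow to make concrete. The sketch below enumerates directed corner values for a `width`-bit unsigned input and appends seeded random stimulus. It is a conventional baseline that an LLM-proposed test plan would be expected to cover or extend, not a method from the surveyed papers; the function names are hypothetical.

```python
import random

def edge_case_vectors(width: int) -> list[int]:
    """Directed corner values for a width-bit unsigned input, duplicates removed."""
    if width < 1:
        raise ValueError("width must be at least 1")
    mask = (1 << width) - 1                          # all ones
    alt = sum(1 << i for i in range(1, width, 2))    # alternating bits, LSB = 0
    candidates = [0, mask, 1, 1 << (width - 1), alt, alt ^ mask]
    return list(dict.fromkeys(candidates))           # keep first-occurrence order

def stimulus(width: int, n_random: int, seed: int = 0) -> list[int]:
    """Directed corner cases first, then reproducible random values."""
    rng = random.Random(seed)
    return edge_case_vectors(width) + [rng.getrandbits(width) for _ in range(n_random)]

print(edge_case_vectors(4))   # [0, 15, 1, 8, 10, 5]
print(edge_case_vectors(1))   # [0, 1]
```

For `width == 1` most corner values coincide, which is exactly the degenerate case that parameterized designs tend to mishandle.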

This survey [107] examines the applications, challenges, and potential of LLMs in hardware design, focusing on Verilog code generation and hardware security enhancement. It reviews existing techniques for automating HDL generation and highlights the need for robust LLM solutions to address verification, debugging, and security challenges in increasingly complex hardware. The paper identifies gaps in current research, such as limitations in dataset quality and the challenges of ensuring generated code correctness. Future directions include developing tailored benchmarks and datasets, enhancing interpretability, and improving LLM performance for domain-specific tasks.

Together, these works illustrate the potential of LLMs to transform hardware design and verification, offering new methodologies that enhance efficiency, accuracy, and innovation in the field. As the technology continues to evolve, further research will likely uncover additional applications and benefits, solidifying the role of LLMs as an important tool in modern hardware engineering.

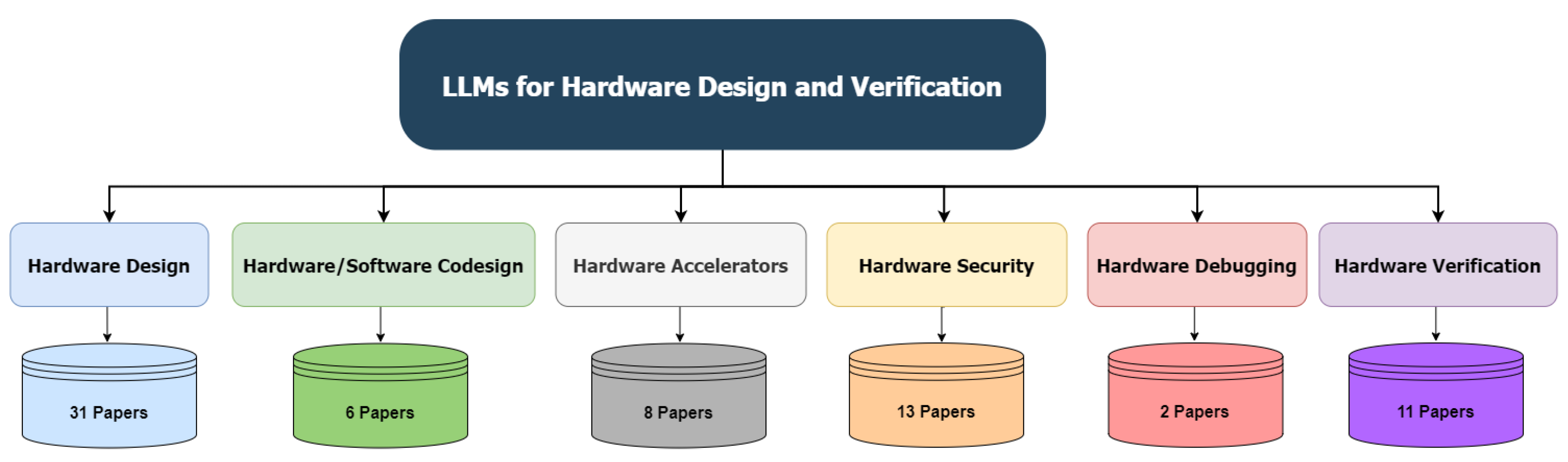

4.2. Different Categories of LLMs for Hardware Design and Verification

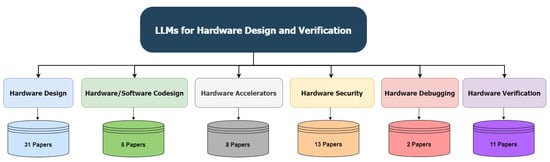

To the best of our knowledge, the surveyed articles can be grouped into six categories, as depicted in Figure 5. Although some papers could belong to two or more categories, we assigned each one to the category that most closely matches the majority of its content. The following subsections discuss each category together with the surveyed papers assigned to it.

Figure 5.

Different Categories of LLMs for hardware design and verification.

4.2.1. Hardware Design

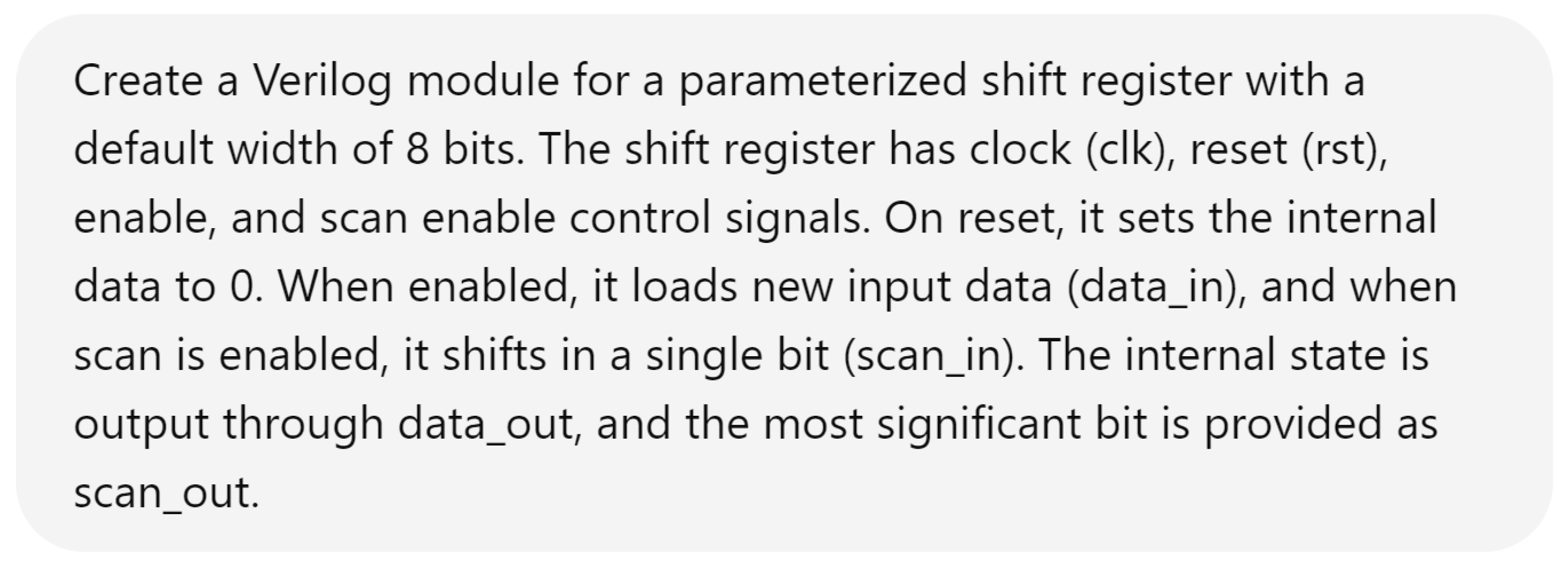

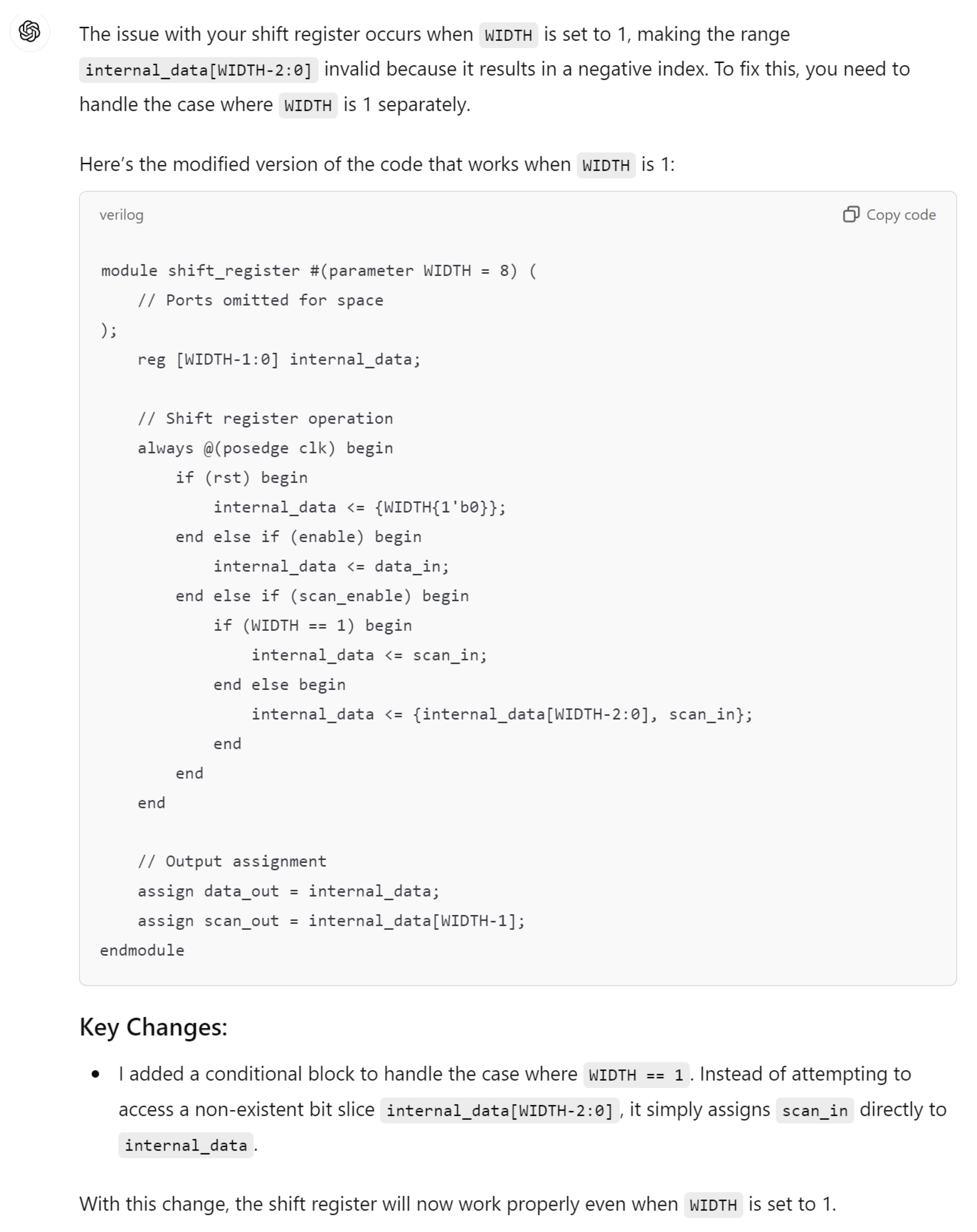

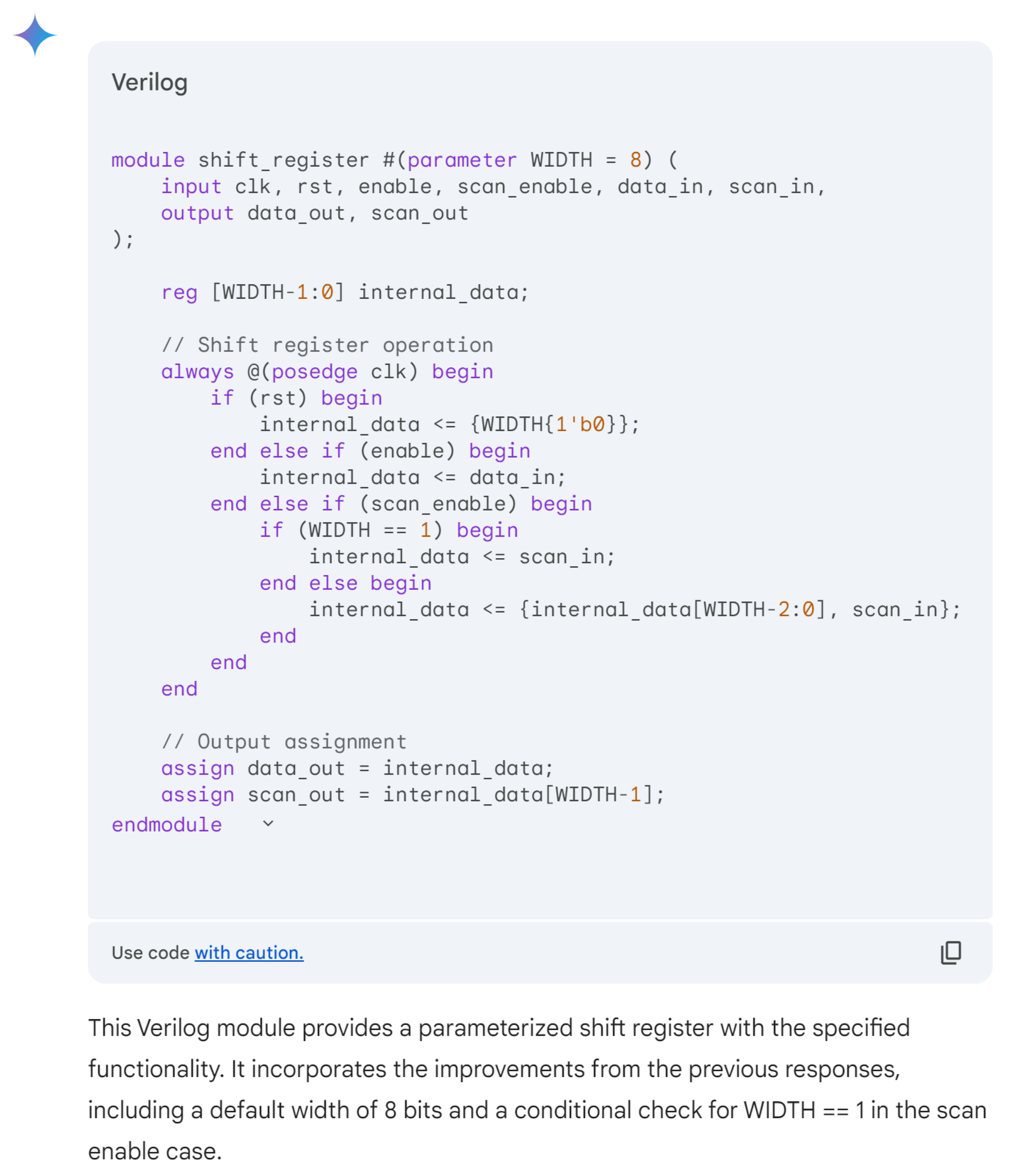

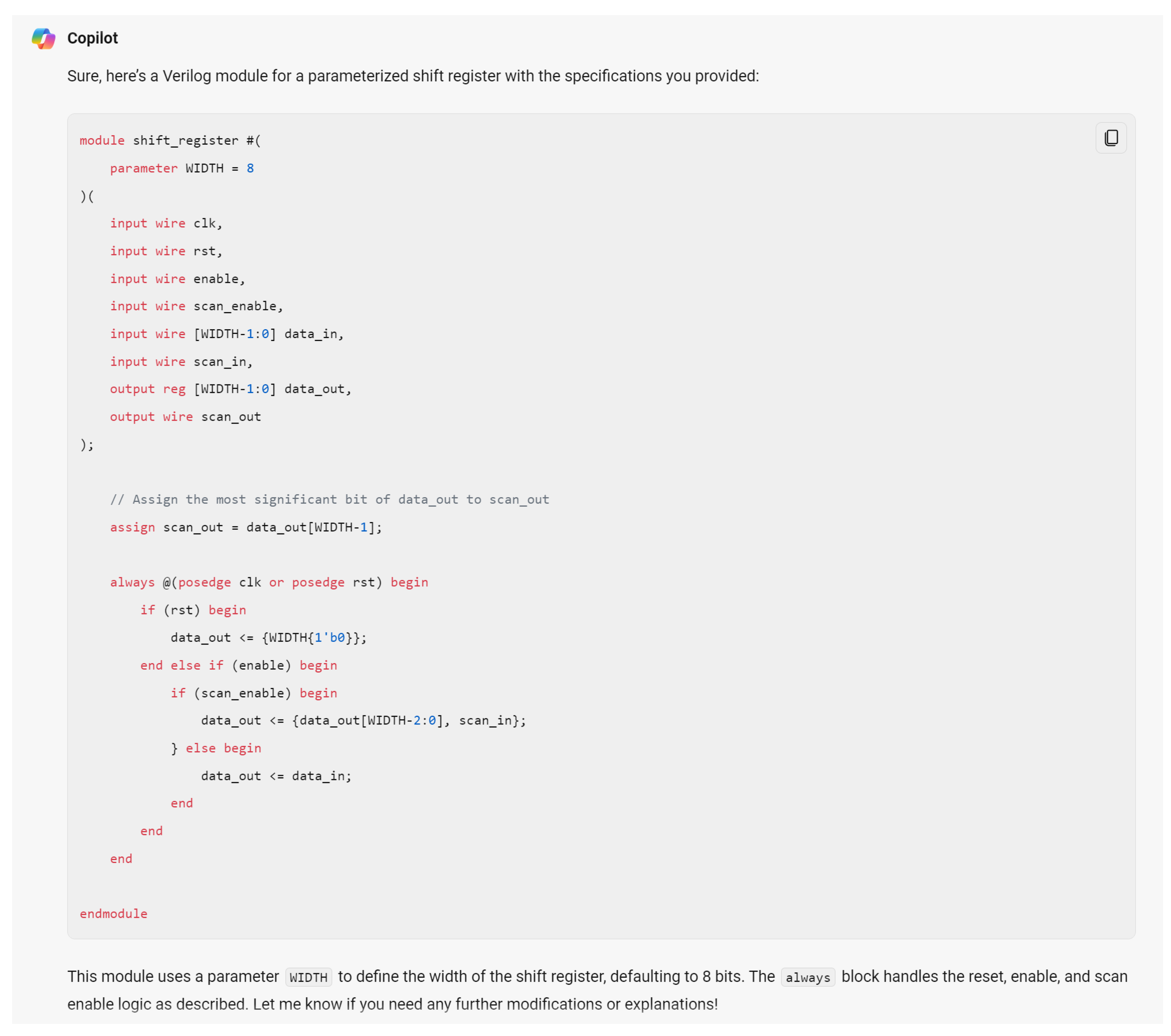

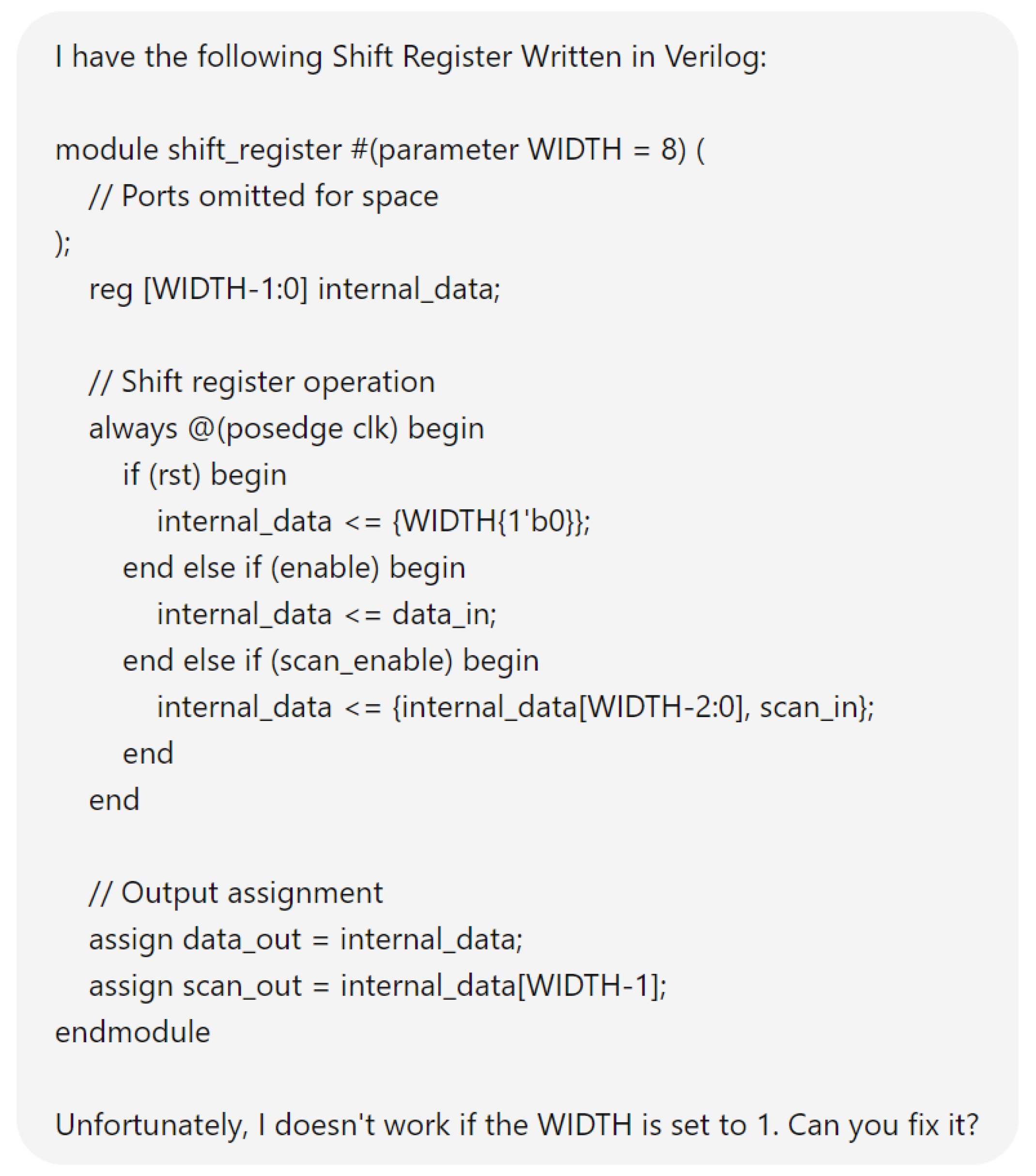

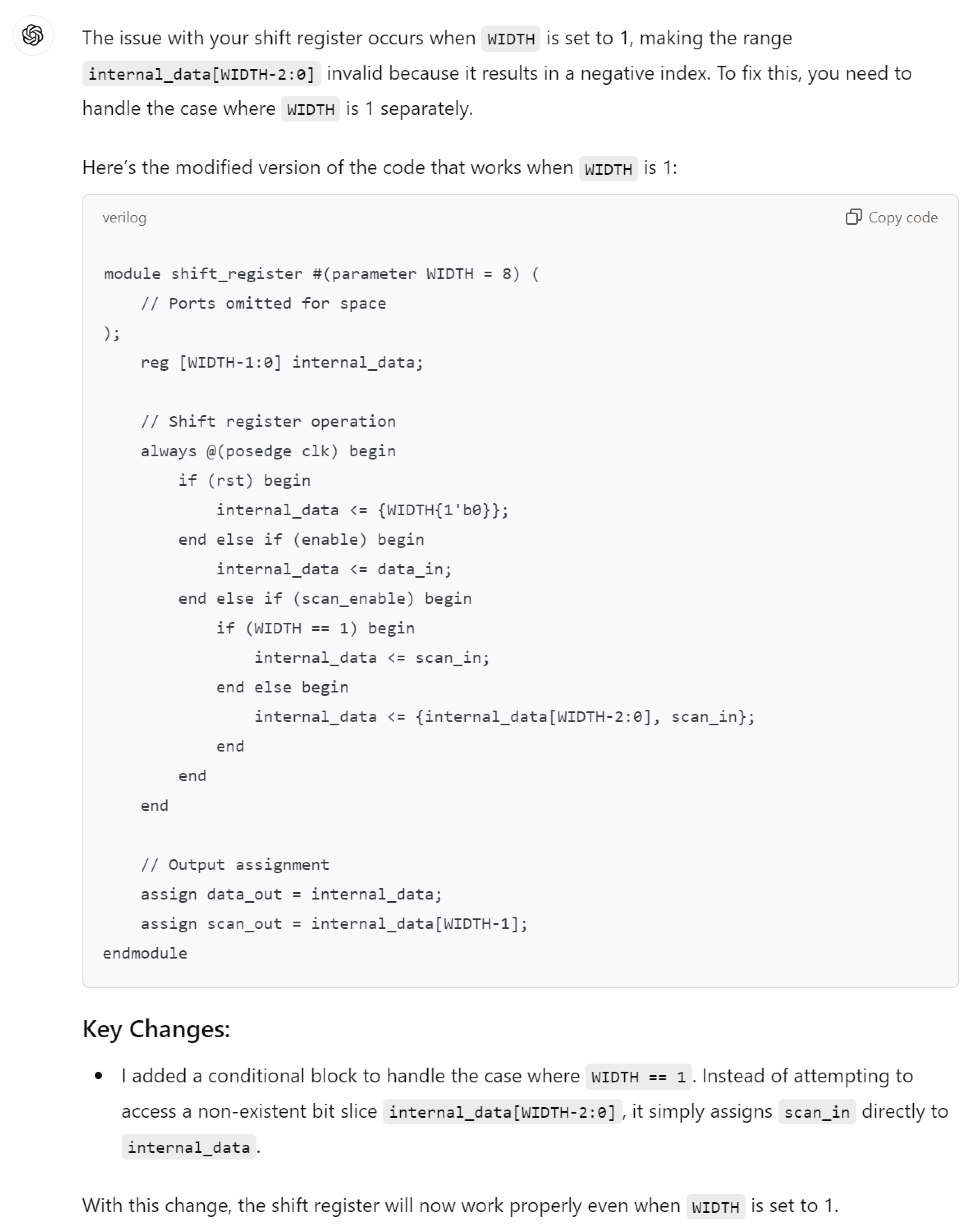

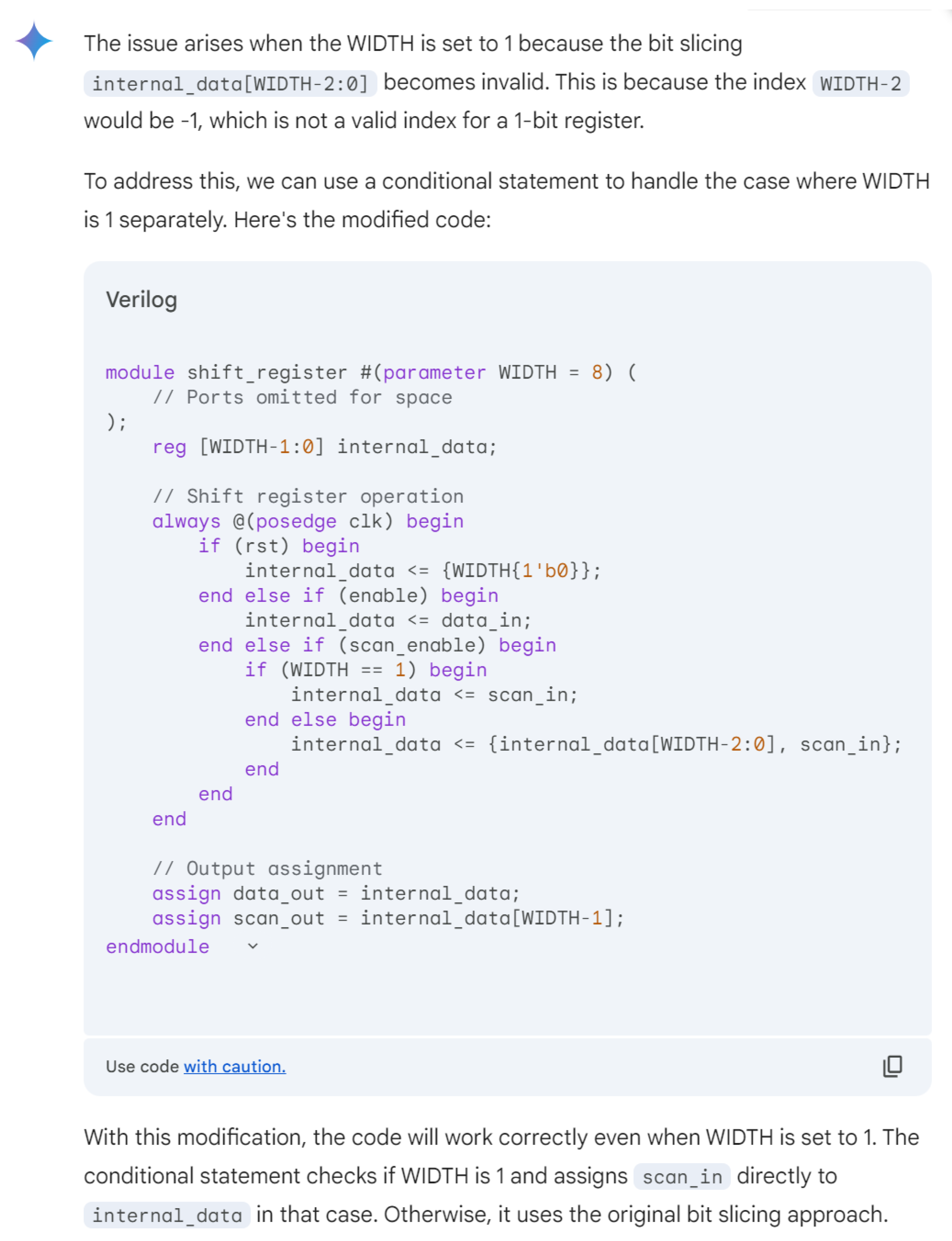

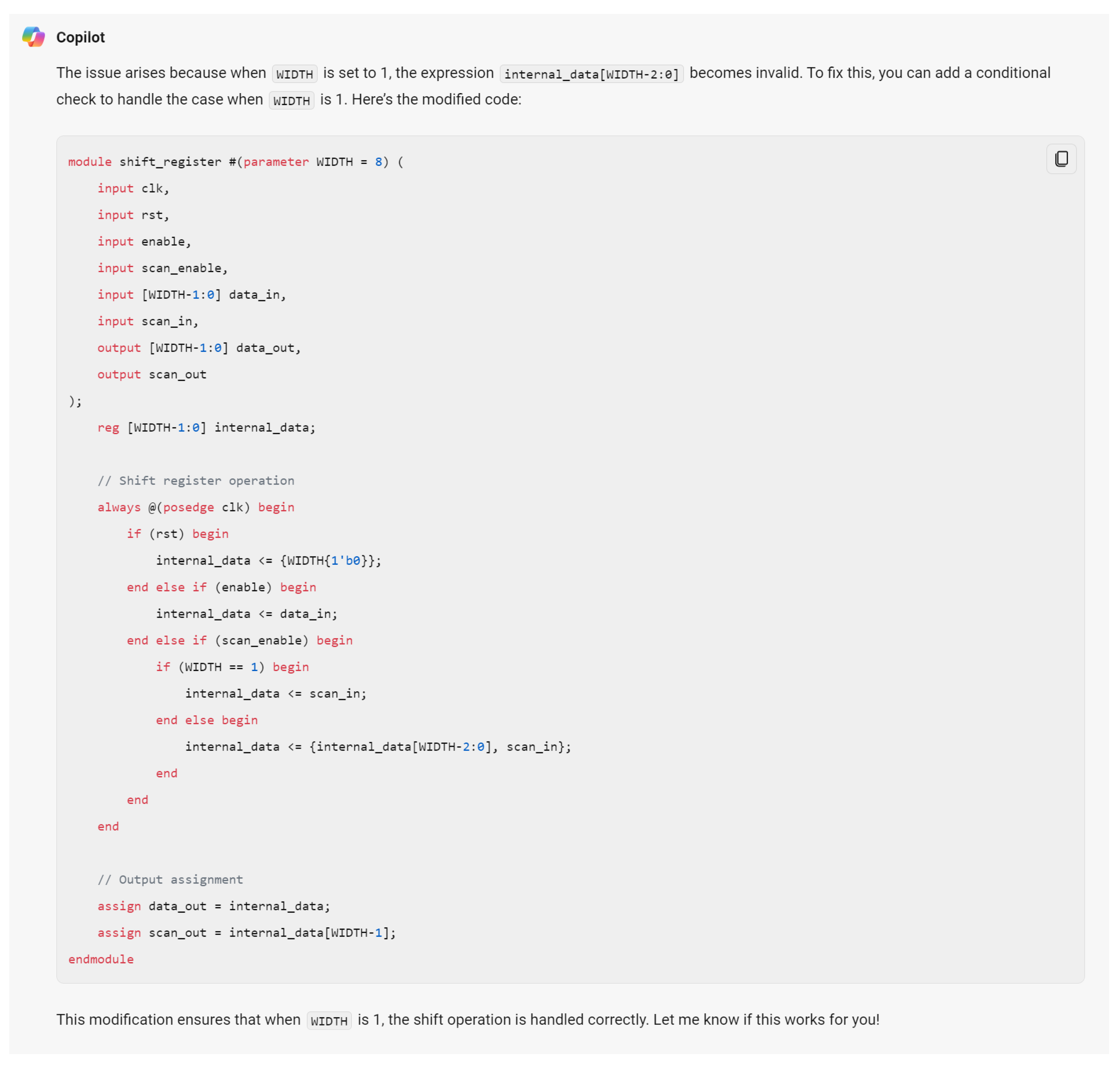

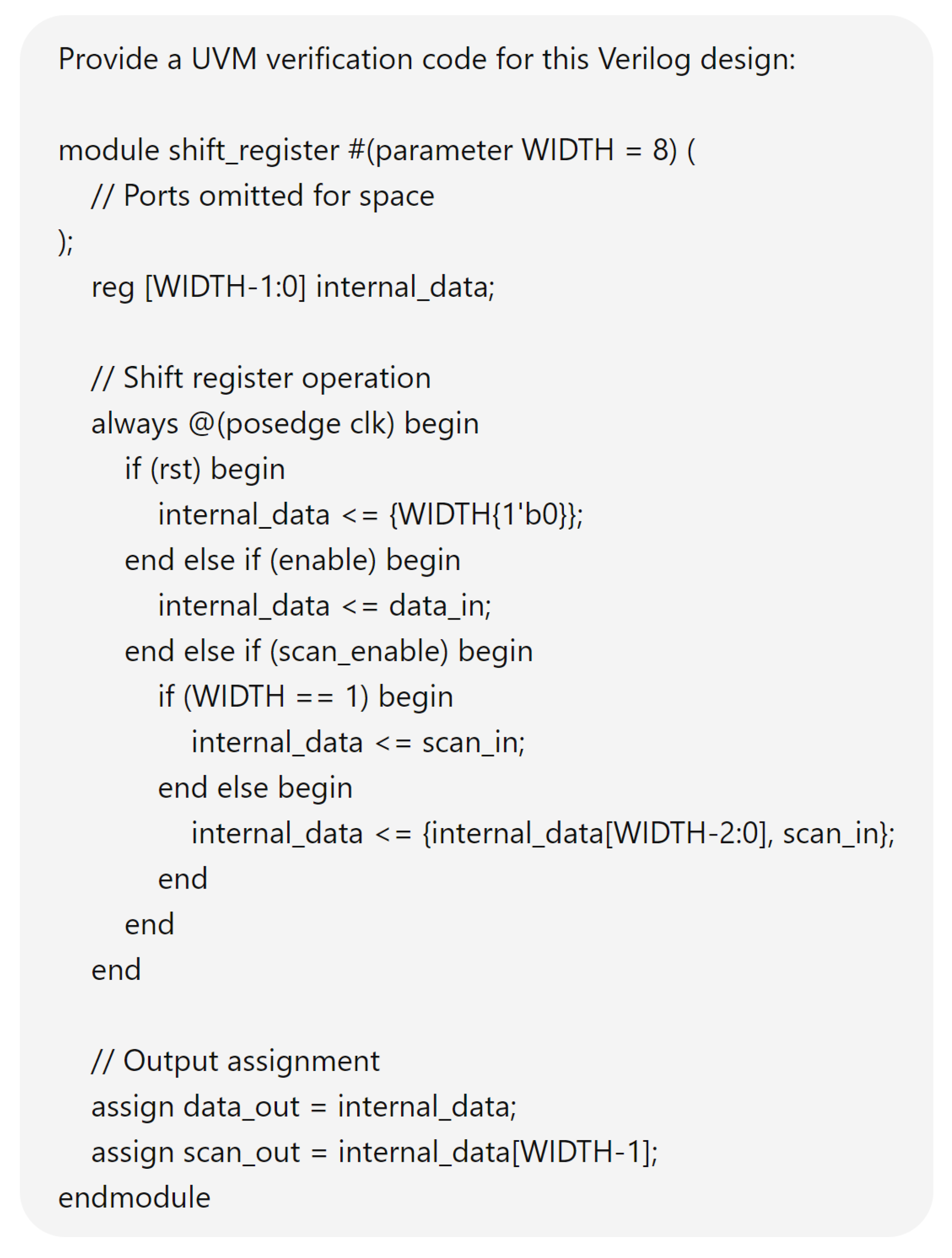

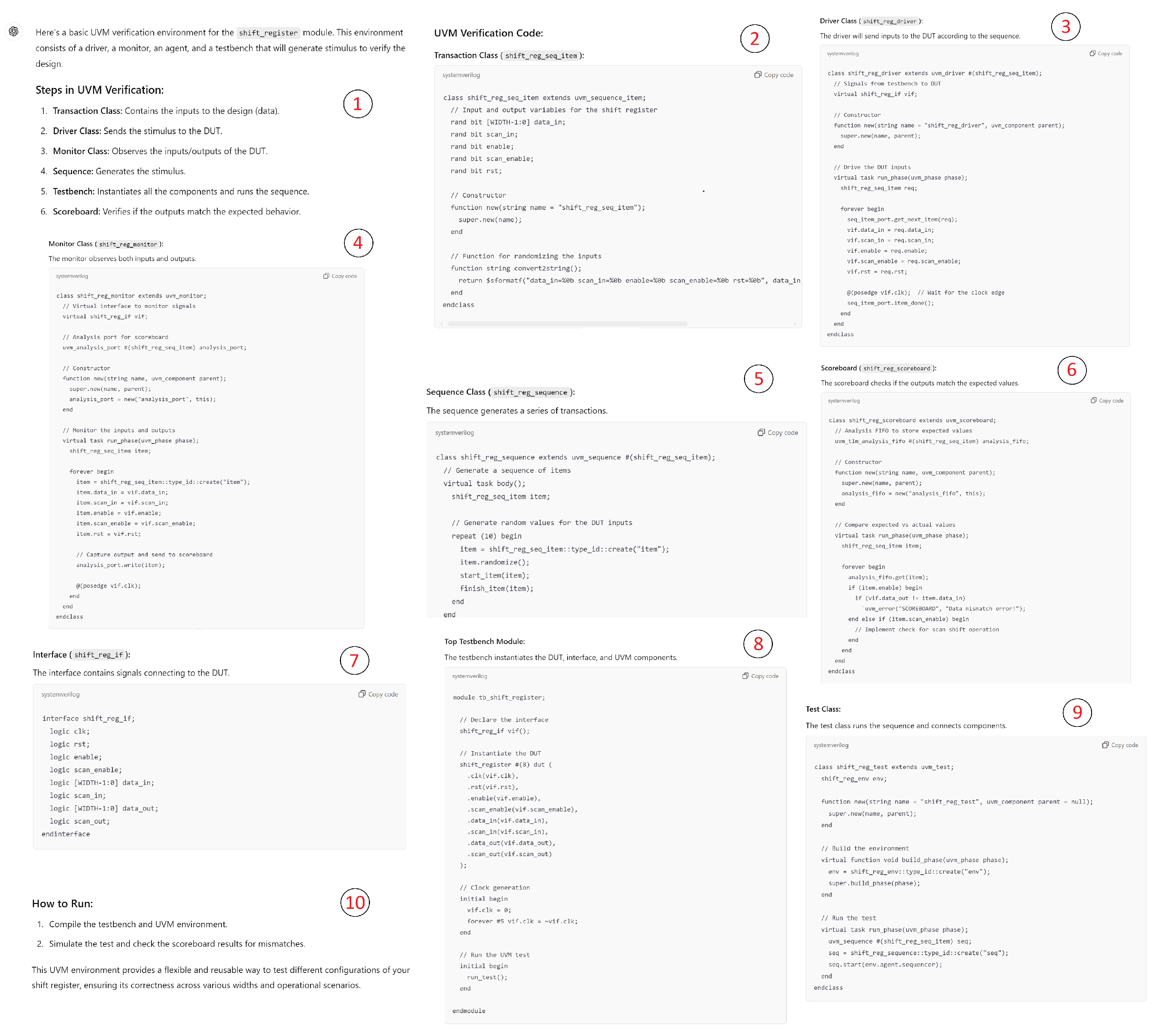

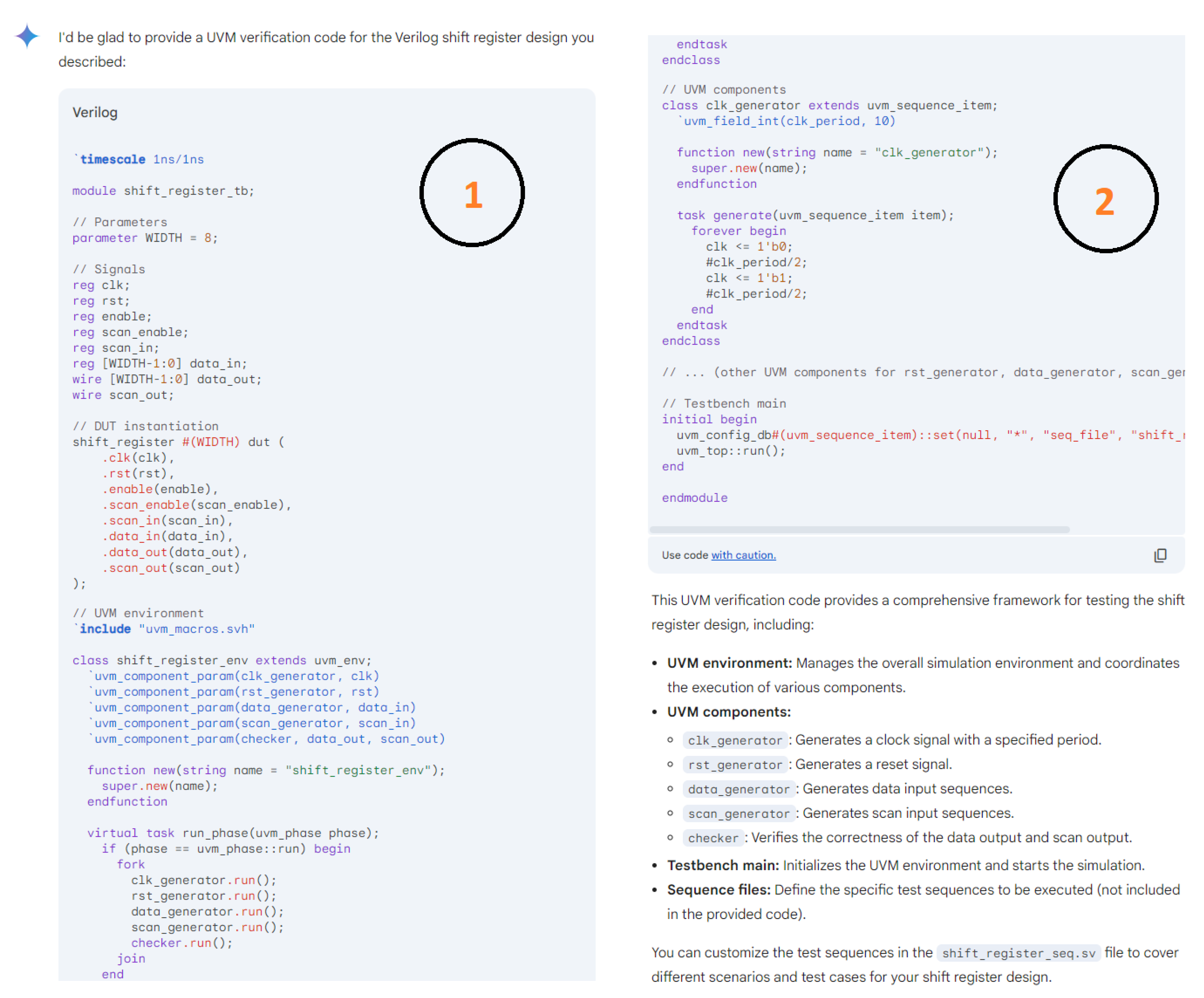

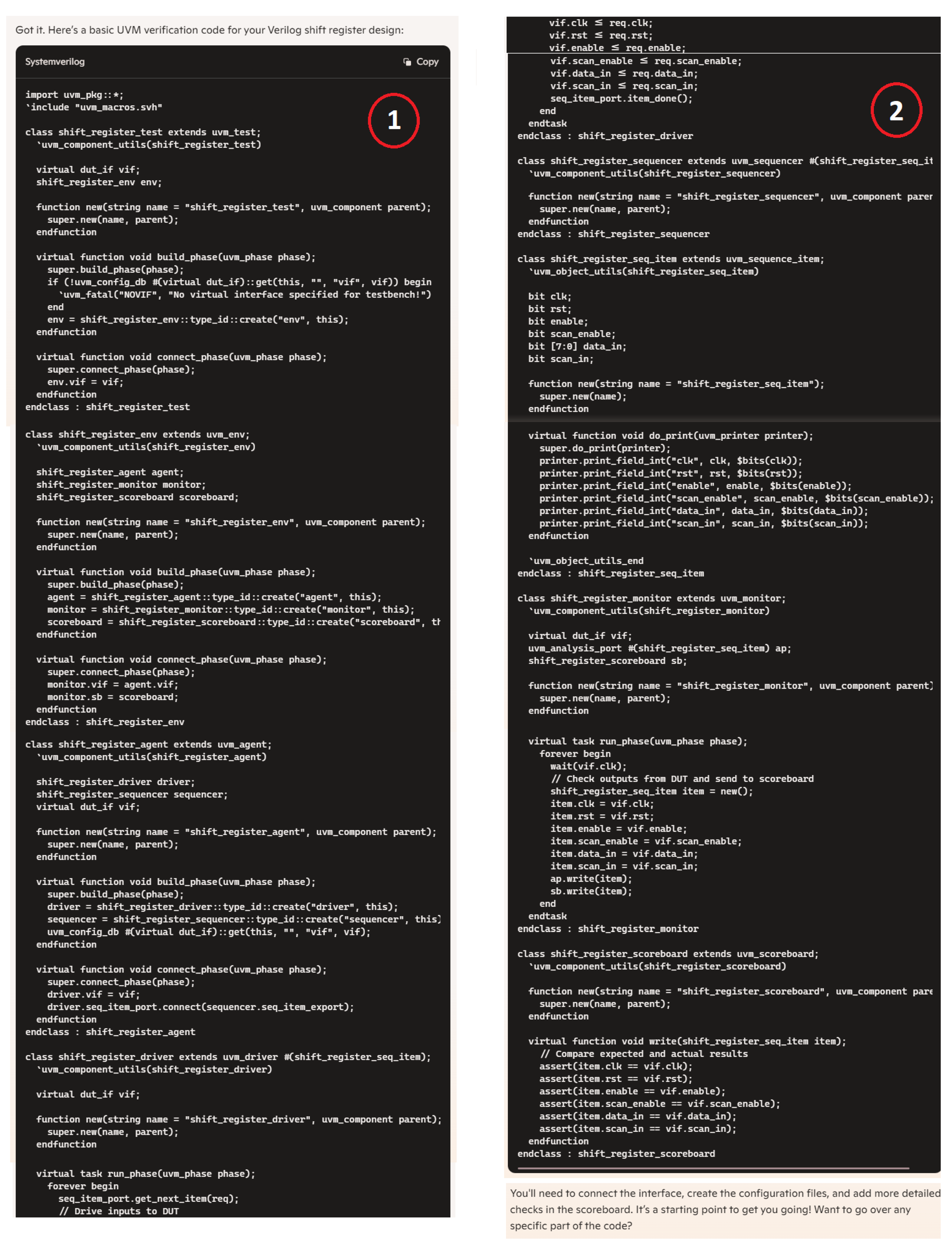

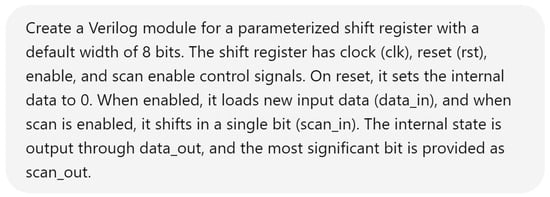

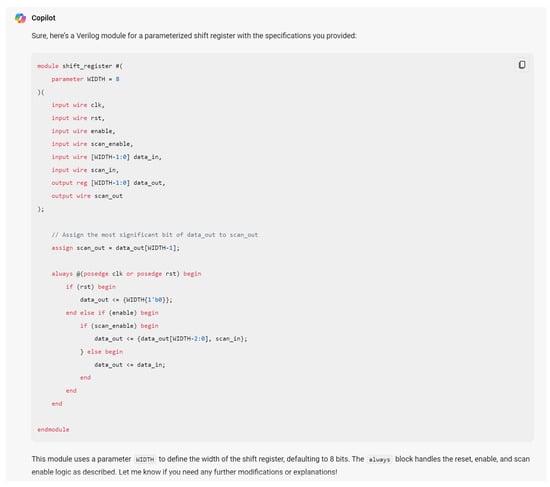

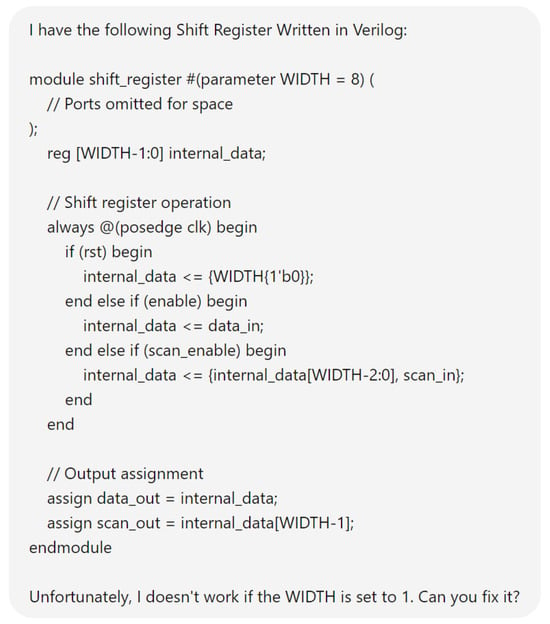

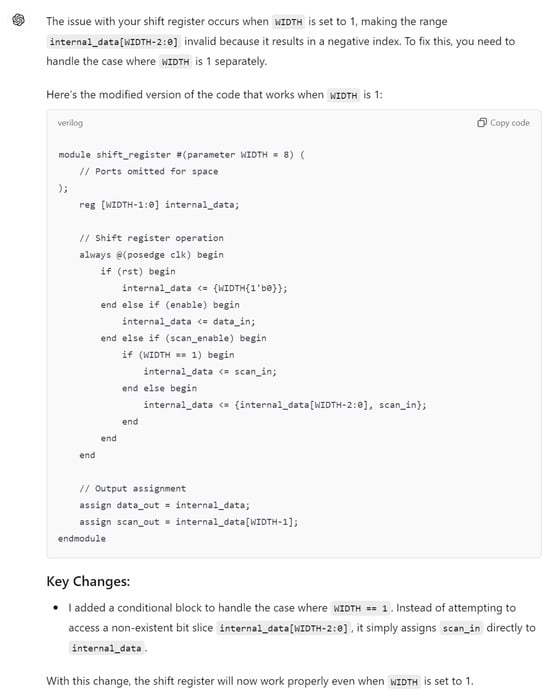

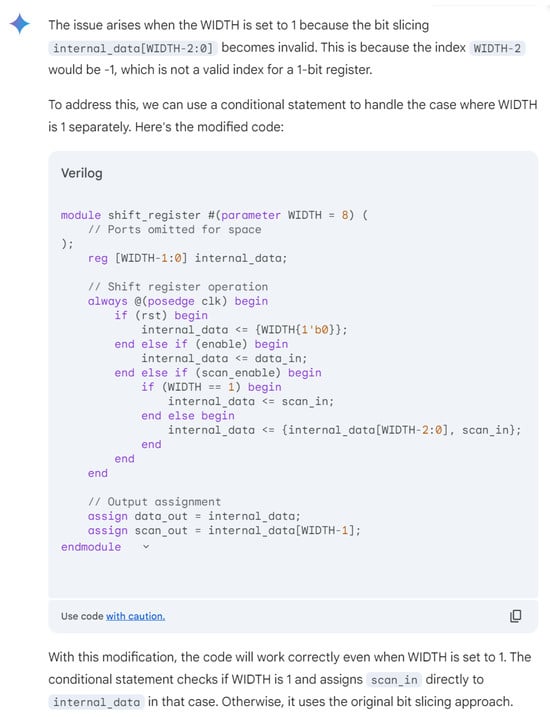

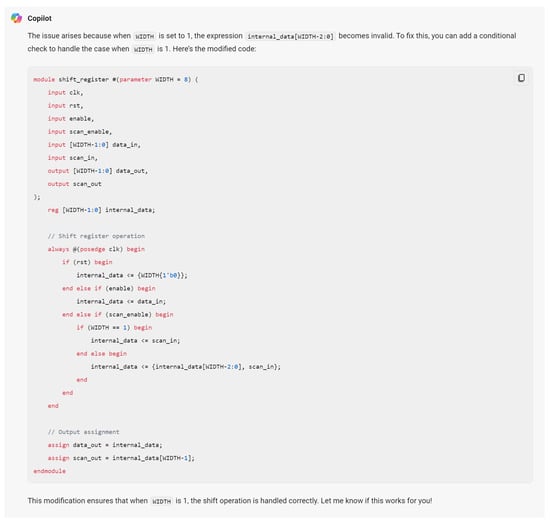

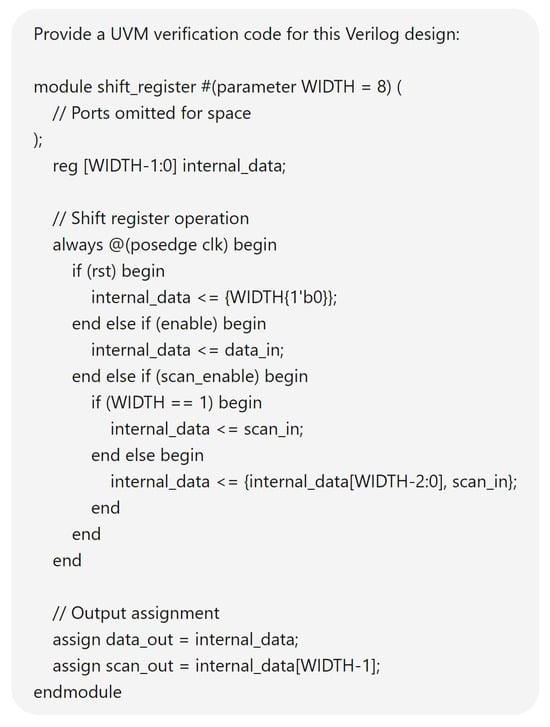

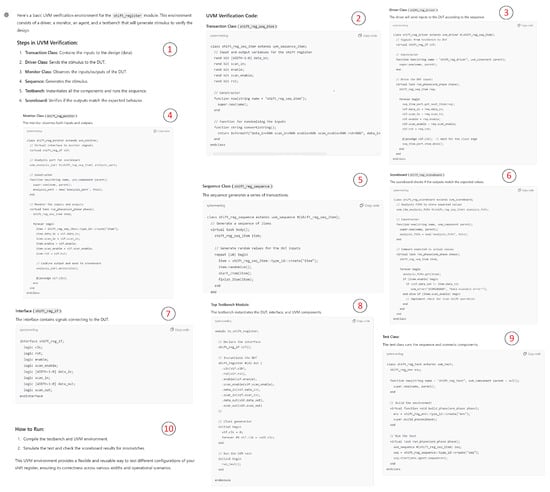

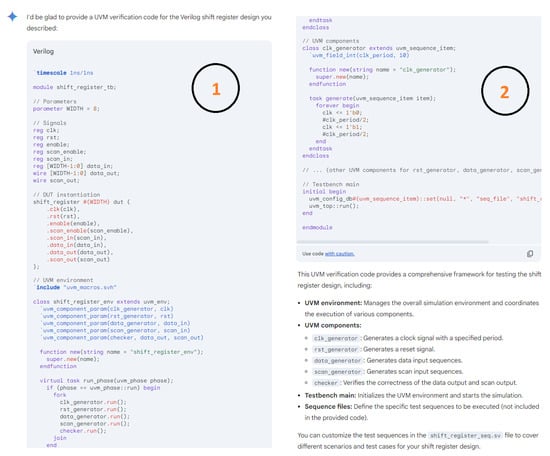

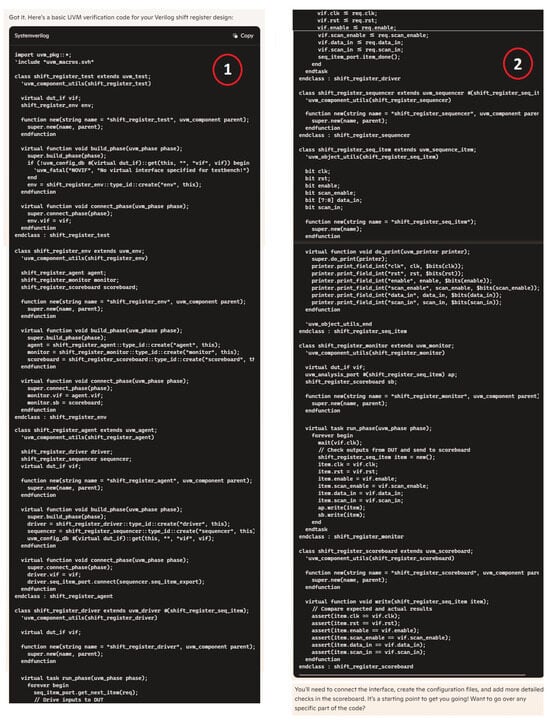

Hardware design using HDLs is evolving with the integration of LLMs such as OpenAI’s ChatGPT, Google Gemini, and Microsoft Copilot. These LLMs assist in generating and modifying HDL code by accepting design specifications as input prompts, streamlining the development process. Figure 6 shows an input prompt for a shift-register HDL design that describes the desired behavior and adjustments. The outputs of ChatGPT (GPT-4o), Gemini, and Copilot for this prompt are presented in Figure 7, Figure 8 and Figure 9, respectively. These figures illustrate the different ways in which each model interprets and modifies the HDL design.

Figure 6.

Input prompt for an HDL design.

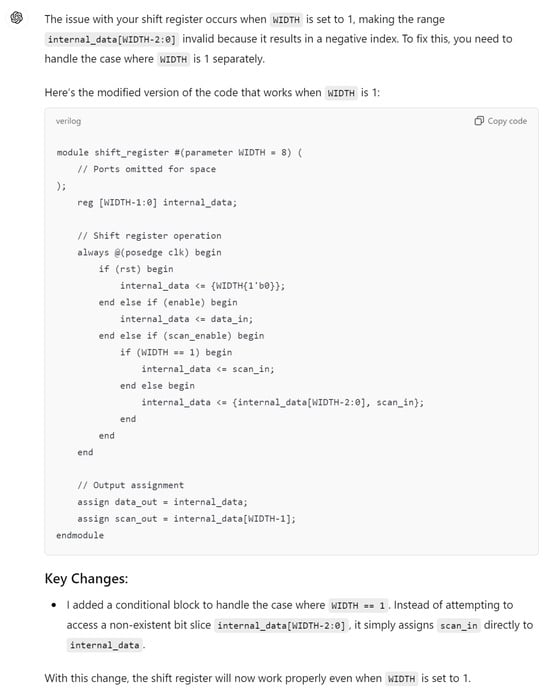

Figure 7.

ChatGPT output for the input prompt of the HDL design.

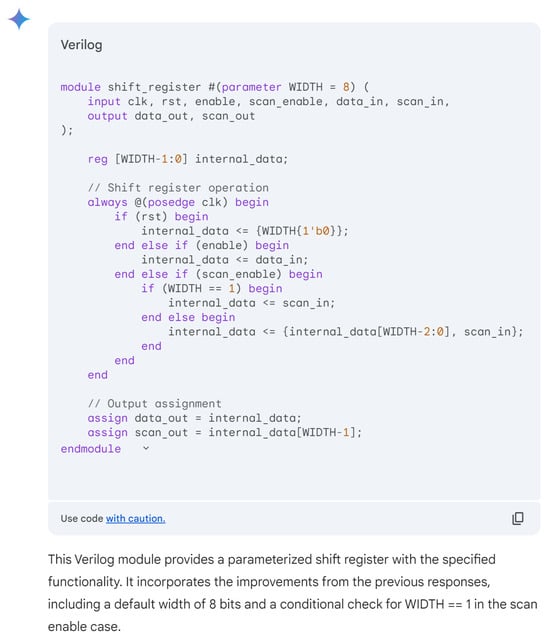

Figure 8.

Gemini output for the input prompt of the HDL design. Gemini uses various colors to distinguish between HDL keywords (purple), constants (red), variables and names (black).

Figure 9.

Copilot output for the input prompt of the HDL design. Copilot uses various colors to distinguish between HDL keywords (red), constants (blue), variables and names (black).

Table 3 summarizes the main differences among these responses. The comparison shows that ChatGPT’s design stands out for its comprehensive handling of both functionality and edge cases, including an explicit check for the critical case WIDTH == 1, which guards against potential errors. Its code is clearly structured, well commented, and adheres strictly to the given specifications, making it reliable for implementation. Copilot provides a parameterized design with basic functionality but lacks detailed handling of edge cases such as WIDTH == 1, which may lead to out-of-bounds indexing. Gemini’s implementation, though concise and readable, similarly misses critical edge-case handling and flexibility, focusing on general functionality without addressing all potential pitfalls. This comparison underscores the importance of thorough error handling and code clarity when designing robust parameterized hardware modules.

Table 3.

Comparing different LLM responses to the same design input prompt.
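The WIDTH == 1 case is where a common Verilog idiom breaks: a left shift written as `{q[WIDTH-2:0], din}` refers to `q[-1:0]` when WIDTH is 1, which lies outside the declared range `[0:0]`. A cycle-level reference model, against which LLM-generated RTL can be co-simulated, is one way to catch such cases. The sketch assumes a serial-in, shift-left register with an enable; the class name and port behavior are hypothetical and may differ from the prompt in Figure 6.

```python
class ShiftRegisterModel:
    """Cycle-level reference model of a WIDTH-bit serial-in, shift-left register.

    Assumed behavior (hypothetical): on each enabled clock edge the contents
    shift left by one and serial_in enters bit 0.
    """

    def __init__(self, width: int):
        if width < 1:
            raise ValueError("WIDTH must be at least 1")
        self.width = width
        self.mask = (1 << width) - 1
        self.q = 0

    def reset(self) -> None:
        self.q = 0

    def clock(self, serial_in: int, enable: bool = True) -> int:
        """Advance one clock edge and return the new register value."""
        if enable:
            # Masking treats WIDTH == 1 uniformly: only the new bit survives,
            # with no special-case slice such as q[WIDTH-2:0].
            self.q = ((self.q << 1) | (serial_in & 1)) & self.mask
        return self.q

reg = ShiftRegisterModel(4)
print([reg.clock(b) for b in (1, 0, 1, 1, 0)])   # [1, 2, 5, 11, 6]
```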

The authors in [108] present a comprehensive framework for evaluating the performance of hardware setups used in the inference of LLMs. Their research aims to measure critical performance metrics such as latency, throughput, energy efficiency, and resource utilization to provide a detailed assessment of hardware capabilities. By standardizing these measurements, the framework facilitates consistent and comparable evaluations across different hardware platforms, enabling researchers and engineers to identify the most efficient configurations for LLM inference. The authors’ proposed framework addresses the growing complexity and computational demands of LLMs by offering a robust tool for hardware benchmarking. It integrates various testing scenarios to capture the diverse workloads LLMs handle during inference. This allows for a nuanced understanding of how different hardware components contribute to overall performance. Ultimately, the framework aims to guide the development of more efficient hardware solutions tailored to the specific needs of LLMs, promoting advancements in both hardware design and LLM deployment strategies.
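The metrics named above reduce to simple arithmetic over per-request measurements. A minimal sketch, assuming a trace format (`RequestTrace`) and an externally measured total energy for the run; both are hypothetical and are not the framework of [108]. The p95 latency uses the nearest-rank definition.

```python
import math
from dataclasses import dataclass
from statistics import mean

@dataclass
class RequestTrace:
    start_s: float       # time the request was submitted
    end_s: float         # time the last output token was returned
    output_tokens: int

def summarize(traces: list[RequestTrace], energy_joules: float) -> dict[str, float]:
    """Latency, throughput, and energy per token for one benchmark run."""
    latencies = sorted(t.end_s - t.start_s for t in traces)
    wall_clock_s = max(t.end_s for t in traces) - min(t.start_s for t in traces)
    tokens = sum(t.output_tokens for t in traces)
    p95_index = math.ceil(0.95 * len(latencies)) - 1   # nearest-rank percentile
    return {
        "mean_latency_s": mean(latencies),
        "p95_latency_s": latencies[p95_index],
        "throughput_tok_per_s": tokens / wall_clock_s,
        "energy_j_per_token": energy_joules / tokens,
    }

run = [RequestTrace(0.0, 1.0, 100), RequestTrace(0.5, 2.0, 150), RequestTrace(1.0, 2.0, 50)]
print(summarize(run, energy_joules=600.0))
```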

In [109], the authors investigate the application of LLMs to optimizing and designing VHDL (VHSIC Hardware Description Language) code, a critical aspect of digital circuit design. Their research explores how LLMs can automate the generation of efficient VHDL code, potentially reducing the time and effort required in the design process. Through a series of experiments, the authors demonstrate the capability of LLMs to provide high-quality code suggestions and optimizations, which can enhance the performance and reliability of digital circuits. The research also addresses the challenges associated with integrating LLMs into the VHDL design workflow. It highlights issues such as the need for domain-specific training data and the importance of understanding the context and constraints of hardware design. By addressing these challenges, the study provides insights into how LLMs can be effectively utilized to support and streamline VHDL code development, paving the way for more automated and intelligent design processes in digital electronics.

The authors of AutoChip [110] introduce a novel method to automate the generation of HDL code by using feedback from LLMs. Their research involves an iterative process where LLMs provide suggestions and improvements on initial HDL code drafts, leading to refined and optimized final versions. This method significantly reduces the need for manual intervention, making the design process more efficient and accessible, especially for complex hardware projects. The authors outline the technical details of implementing AutoChip, including training LLMs on domain-specific datasets and the integration of feedback loops into the design workflow. Their case studies demonstrate the effectiveness of AutoChip in producing high-quality HDL code with minimal human oversight. By automating routine and complex coding tasks, AutoChip has the potential to revolutionize the field of hardware design, enabling faster prototyping and more innovative solutions in hardware development.
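The iterative refinement described above can be sketched as a loop in which tool diagnostics (for example, compiler or simulator messages) are fed into the next generation request. Here `generate` and `check` are hypothetical callables standing in for an LLM call and an HDL compiler or simulator; this illustrates the general generate-check-repair pattern, not AutoChip's actual code or prompts.

```python
from typing import Callable, Optional

def generate_with_feedback(
    spec: str,
    generate: Callable[[str, Optional[str]], str],
    check: Callable[[str], Optional[str]],
    max_iters: int = 5,
) -> tuple[Optional[str], int]:
    """Ask for HDL, run the checker, and feed its diagnostics into the next
    request until the checker reports no errors or the budget runs out."""
    feedback: Optional[str] = None
    for attempt in range(1, max_iters + 1):
        code = generate(spec, feedback)   # LLM call: spec plus last diagnostics
        feedback = check(code)            # tool run: None means all checks passed
        if feedback is None:
            return code, attempt
    return None, max_iters

# Hypothetical stand-ins so the sketch runs without an LLM or an HDL simulator.
def toy_generate(spec: str, feedback: Optional[str]) -> str:
    return "assign y = a & b" + (";" if feedback else "")

def toy_check(code: str) -> Optional[str]:
    return None if code.endswith(";") else "syntax error: missing ';'"

print(generate_with_feedback("2-input AND gate", toy_generate, toy_check))
```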

The authors in [111] focus on benchmarking various LLMs to evaluate their performance in generating Verilog Register Transfer Level (RTL) code. Their research compares the accuracy, efficiency, and ability to handle complexity of different models, providing a comprehensive assessment of their suitability for automated hardware design tasks. By establishing standardized benchmarks, the research offers valuable insight into the strengths and limitations of each model for Verilog RTL code generation. The findings highlight significant differences in model performance, emphasizing the importance of selecting the right LLM for specific hardware design tasks. The authors also discuss the potential for further improving LLMs by incorporating more specialized training data and refining model architectures. Through detailed comparisons and practical examples, their study contributes to the ongoing effort to enhance the role of LLMs in automating and optimizing the hardware design process.
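Many code-generation benchmarks, including Verilog ones, report functional correctness as pass@k, estimated from n sampled completions per problem of which c pass the testbench (the unbiased estimator introduced alongside the HumanEval benchmark). Whether [111] uses this exact metric is not stated here; the sketch is included only as a common reference point.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: probability that at least one of k completions drawn
    without replacement from n samples (c of them correct) passes."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0          # every size-k draw must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

print(pass_at_k(10, 3, 1))   # about 0.3
print(pass_at_k(10, 3, 5))   # about 0.917
```

Per-problem values are then averaged over the benchmark.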

The authors in Chip-Chat [112] explore the emerging field of using conversational AI, particularly LLMs, in hardware design. Their research discusses the potential benefits of natural language interfaces, such as increased accessibility and collaboration, by enabling designers to interact with design tools through simple conversational commands. This approach could democratize hardware design, making it more accessible to non-experts and fostering innovation through diverse contributions. However, the authors highlight significant challenges in this domain, including the current limitations of LLMs in understanding complex hardware design concepts and the need for precise and unambiguous communication in technical contexts. They propose potential solutions, such as improving model training with domain-specific data and developing more sophisticated interaction protocols. By addressing these challenges, their study aims to pave the way for more effective integration of conversational AI in hardware design, potentially transforming how engineers and designers approach complex projects.

The authors in ChipGPT [113] examine the current state of using LLMs for natural language-based hardware design, assessing their capabilities and identifying existing gaps. Their research evaluates various LLMs in terms of their ability to understand and generate hardware design code from natural language descriptions. The authors highlight the potential of these models to streamline the design process by enabling more intuitive and accessible interactions between designers and design tools. Despite the promising potential, the authors identify several challenges that need to be addressed to achieve seamless natural language hardware design. These include improving the models’ understanding of technical jargon and design constraints, enhancing the accuracy and efficiency of code generation, and ensuring robust handling of complex design scenarios. By outlining these challenges and suggesting areas for future research, their study provides a roadmap for advancing the integration of NLP in hardware design workflows.

Martínez et al. [114] explore the application of LLMs to detect code segments that can benefit from hardware acceleration. Their research presents techniques for identifying computation-intensive parts of code that, when offloaded to specialized hardware, can significantly improve overall system performance. The authors demonstrate the effectiveness of LLMs in pinpointing these critical segments and providing recommendations for hardware acceleration. The research also covers the practical implementation of this approach, describing how LLMs can be integrated into existing development workflows to automatically suggest and optimize code for hardware acceleration. The authors highlight the potential performance gains and efficiency improvements achievable through this method, making a strong case for the use of LLMs to optimize software for better hardware utilization. By leveraging LLMs for this purpose, developers can achieve more efficient and powerful computing solutions.
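Why locating the right segment matters can be quantified with Amdahl's law: if a fraction p of the runtime is offloaded to hardware that executes it s times faster, the overall speedup is 1 / ((1 − p) + p / s). This is standard background, not a formula taken from [114]; the function name is hypothetical.

```python
def amdahl_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Overall speedup when a fraction p of runtime is accelerated by factor s."""
    p, s = accelerated_fraction, kernel_speedup
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("need 0 <= p <= 1 and s > 0")
    return 1.0 / ((1.0 - p) + p / s)

print(amdahl_speedup(0.8, 10))     # about 3.57: the untouched 20% dominates
print(amdahl_speedup(0.5, 1000))   # about 2.0 even with a very fast kernel
```

The second case shows why detecting the truly dominant segments matters more than the raw speed of the accelerator.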

The authors in CreativEval [115] introduce a novel approach to evaluating the creativity of hardware design code generated by LLMs. The authors propose specific metrics and benchmarks to assess the originality, efficiency, and practicality of the generated code, emphasizing the importance of creativity in hardware design. By focusing on these aspects, their research aims to determine how well LLMs can innovate within the constraints of hardware development. The findings from the study suggest that while LLMs are capable of producing creative solutions, there are limitations to their ability to fully replicate human ingenuity in hardware design. The authors discuss the potential for improving LLMs through more diverse and comprehensive training data, as well as refining the evaluation metrics to better capture the nuances of creative design. Through this evaluation framework, CreativEval contributes to the understanding of LLM capabilities in generating novel and effective hardware design solutions.

The authors in Designing Silicon Brains using LLM [116] explore the use of ChatGPT, a type of LLM, for designing a spiking neuron array, which is a type of neuromorphic hardware that mimics the behavior of biological neurons. They demonstrate how ChatGPT can generate detailed and accurate descriptions of the neuron array, including its architecture and functionality, thereby aiding in the design process. This approach leverages the model’s ability to understand and articulate complex technical concepts in natural language. The authors showcase the potential of using LLMs for designing advanced neuromorphic systems, highlighting the benefits of automated description and specification generation. By providing a structured and comprehensive design output, ChatGPT can significantly streamline the development process of spiking neuron arrays. The study also discusses the challenges and future directions for integrating LLMs into neuromorphic hardware design, emphasizing the need for further refinement and domain-specific training to enhance the accuracy and utility of the generated descriptions.

The authors in Digital Application-Specific Integrated Circuit (ASIC) Design with Ongoing LLMs [117] explore the application of ongoing LLMs in the design of ASICs. They provide an overview of current methodologies and strategies for incorporating LLMs into various stages of the ASIC design process, from initial specification to final implementation. The authors highlight the potential of LLMs to automate routine tasks, enhance design accuracy, and reduce development time. They also discuss the prospects and challenges of using LLMs in digital ASIC design, including the need for specialized training data, the integration of LLMs into existing design workflows, and the importance of maintaining design integrity and performance. By addressing these issues, their study offers valuable insights into the future of ASIC design, suggesting that LLMs could play a significant role in advancing the field through increased automation and intelligent design support.

In [118], the authors review recent advancements in the development of efficient algorithms and hardware architectures specifically tailored for NLP tasks. They discuss various techniques for optimizing NLP algorithms to improve performance and reduce computational requirements. These optimizations are crucial for handling large-scale data and complex models typical of modern NLP applications, including LLMs. Their study also explores the design of specialized hardware that can support efficient NLP. This includes discussing hardware accelerators, such as GPUs and TPUs, and their role in enhancing the performance of NLP tasks. By combining algorithmic improvements with hardware advancements, the authors outline a comprehensive approach to achieving high efficiency and scalability in NLP applications. This integrated approach is essential for meeting the growing demands of NLP and leveraging the full potential of LLMs.

The authors in GPT4AIGChip [119] investigate the use of LLMs to automate the design of AI accelerators. They present methodologies for leveraging LLMs to generate design specifications and optimize the architecture of AI accelerators. By automating these processes, their study aims to streamline the development of specialized hardware for AI tasks, reducing both the time and costs associated with traditional design methods. The authors provide detailed case studies demonstrating the effectiveness of LLMs in producing high-quality design outputs for AI accelerators. They highlight the potential for LLMs to not only accelerate the design process but also to innovate and improve upon existing architectures. Their research discusses future directions, including the integration of more advanced LLMs and the development of more sophisticated automation tools. By advancing the use of LLMs in hardware design, GPT4AIGChip contributes to the ongoing evolution of AI hardware development.

The authors in Hardware Phi-1.5B [120] introduce an LLM trained specifically on hardware-related data, demonstrating its capability to encode domain-specific knowledge. In their research, the authors discuss the model’s architecture and training process, emphasizing the importance of specialized datasets for achieving high performance in hardware design tasks. The authors showcase various applications of Hardware Phi-1.5B, including code generation, optimization, and troubleshooting in hardware development. The study highlights the advantages of using domain-specific LLMs over general-purpose models, particularly in terms of the accuracy and relevance of the generated content. By tailoring the model to the hardware domain, Hardware Phi-1.5B is able to provide more precise and contextually appropriate outputs, which can significantly enhance the efficiency and effectiveness of hardware design processes. The authors conclude with a discussion of future research directions and potential improvements to further leverage domain-specific LLMs in hardware engineering.

The authors in Hardware-Aware Transformers (HAT) [121] introduce a novel approach to designing LLMs that consider hardware constraints during model training and inference. Their research details the development of transformers that are optimized for specific hardware configurations, aiming to improve performance and reduce resource consumption. This hardware-aware design is particularly important for deploying NLP tasks on various platforms, including edge devices and specialized accelerators. The study presents experimental results demonstrating the efficiency gains achieved by HAT models compared with traditional transformers. The authors highlight significant reductions in latency and energy usage, making these models more suitable for real-world applications where resource constraints are a critical factor. By focusing on the co-design of hardware and software, HAT offers a promising solution for enhancing the performance and scalability of NLP tasks in diverse deployment environments.

In the study by Chang et al. [122], the authors propose a post-processing technique to improve the quality of hardware design code generated by LLMs. Their approach involves applying search algorithms to refine and optimize the initial outputs from LLMs, ensuring higher quality and more reliable code generation. The authors detail the implementation of this technique and provide experimental results demonstrating its effectiveness in enhancing code quality. The study highlights the limitations of current LLM-generated code, such as inaccuracies and inefficiencies, and shows how post-LLM search can address these issues. By iteratively refining the code, the proposed method can significantly improve the final output, making it more suitable for practical hardware design applications. This approach underscores the potential for combining LLM capabilities with additional optimization techniques to achieve superior results in automated code generation.

The authors in OliVe [123] introduce a quantization technique designed to accelerate LLMs by focusing on hardware-friendly implementations. The concept of outlier–victim pair quantization is presented as a method to reduce the computational load and improve inference speed on hardware platforms. This technique targets specific outliers in the data, which typically require more resources, and optimizes their representation to enhance overall model efficiency. The authors provide a detailed analysis of the quantization process and its impact on model performance. Experimental results demonstrate significant improvements in inference speed and resource utilization without compromising the accuracy of the LLMs. By making LLMs more hardware-friendly, OliVe offers a practical solution for deploying these models in environments with limited computational resources, such as mobile devices and edge computing platforms.
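The pairing idea can be illustrated as follows: values are processed in adjacent pairs; ordinary pairs are quantized to 4-bit integers, while in a pair containing an outlier the neighbor (the victim) is set to zero so that its encoding space can be given to the outlier. In this simplified sketch the outlier is kept at full precision, standing in for OliVe's dedicated outlier data type; the threshold, scale, tie-breaking rule for two outliers, and function names are all assumptions.

```python
def quantize_int4(x: float, scale: float) -> float:
    """Symmetric 4-bit quantize-dequantize: clamp to [-8, 7] steps of `scale`."""
    q = max(-8, min(7, round(x / scale)))
    return q * scale

def outlier_victim_quantize(values: list[float], threshold: float, scale: float) -> list[float]:
    """Process adjacent pairs; in a pair containing an outlier, zero the other
    element (the victim) and keep the outlier at full precision."""
    if len(values) % 2:
        raise ValueError("expects an even number of values")
    out: list[float] = []
    for a, b in zip(values[0::2], values[1::2]):
        if abs(a) <= threshold and abs(b) <= threshold:
            out += [quantize_int4(a, scale), quantize_int4(b, scale)]
        elif abs(a) >= abs(b):
            out += [a, 0.0]        # a is the outlier (or the larger of two)
        else:
            out += [0.0, b]        # b is the outlier (or the larger of two)
    return out

print(outlier_victim_quantize([0.1, 0.2, 5.0, 0.3], threshold=1.0, scale=0.1))
```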

The authors in RTLCoder [124] present a specialized model that outperforms GPT-3.5 in generating RTL code. They highlight the use of an open-source dataset and a lightweight model architecture to achieve these results in RTL code generation tasks. The authors provide a comprehensive comparison of RTLCoder’s performance against GPT-3.5, demonstrating significant improvements in accuracy, efficiency, and code quality. Their study also discusses the advantages of using a focused dataset and a streamlined model for specific applications, such as hardware design. By tailoring the model to the unique requirements of RTL code generation, RTLCoder can provide more relevant and high-quality outputs, reducing the need for extensive manual corrections. This approach underscores the potential for developing specialized models that can outperform general-purpose LLMs in targeted tasks.

The authors in RTLLM [125] introduce an open-source benchmark specifically designed to evaluate the performance of LLMs in generating RTL code. Their benchmark provides a standardized set of tasks and metrics to facilitate consistent and comprehensive assessments of LLM capabilities in RTL generation. By offering a common evaluation framework, RTLLM aims to drive improvements and innovation in the use of LLMs for hardware design. The authors detail the creation of the benchmark, including the selection of tasks, the development of evaluation criteria, and the compilation of relevant datasets. They present initial results from using RTLLM to evaluate various LLMs, highlighting strengths and areas for improvement. By providing an open-source tool for benchmarking, RTLLM encourages collaboration and transparency in the development and assessment of LLMs for RTL code generation, fostering advancements in this emerging field.

In the study by Pandelea et al. [126], the authors discuss a co-design approach to selecting features for language models, balancing software and hardware requirements to achieve optimal performance. They highlight the importance of considering both aspects in the development of LLMs, as hardware constraints can significantly impact model efficiency and scalability. By integrating software and hardware design, their study aims to create more efficient and effective LLMs. The authors present case studies and experimental results demonstrating the benefits of the co-design approach. These examples show how tailored features can enhance model performance on specific hardware platforms, reducing latency and resource consumption. They argue that this integrated approach is essential for developing LLMs that can meet the growing demands of real-world applications, offering a path forward for more sustainable and scalable NLP solutions.

The authors in SlowLLM [127] investigate the feasibility and performance of running LLMs on consumer-grade hardware. They address the challenges and limitations of deploying LLMs on less powerful devices, such as personal computers and smartphones, which often lack the computational resources of specialized hardware. The authors propose solutions to enhance performance, such as model compression and optimization techniques tailored for consumer hardware. Their study provides experimental results showcasing the performance of various LLMs on consumer devices, highlighting both successes and areas for improvement. The findings demonstrate that, while there are significant challenges, it is possible to achieve acceptable performance levels with appropriate optimizations. By exploring these possibilities, SlowLLM contributes to making advanced NLP capabilities more accessible to a broader audience, potentially expanding the applications and impact of LLMs in everyday technology use.
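A back-of-the-envelope estimate shows why compression is central on consumer hardware: the memory needed for the weights alone is roughly the parameter count times the bits per parameter. The 7-billion-parameter size below is an illustrative assumption, not a model from [127], and the estimate ignores the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory for model weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(7_000_000_000, bits):.1f} GB")
# 16-bit weights: 14.0 GB
# 8-bit weights: 7.0 GB
# 4-bit weights: 3.5 GB
```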

The authors in SpecLLM [128] investigate the use of LLMs for generating and reviewing Very-Large Scale Integration (VLSI) design specifications. They evaluate the ability of LLMs to produce accurate and comprehensive specifications, which are crucial for the development of complex integrated circuits. The authors present methods for training LLMs on domain-specific data to enhance their understanding and performance in VLSI design tasks. Their study provides experimental results demonstrating the effectiveness of LLMs in generating VLSI design specifications, highlighting their potential to streamline the design process and reduce errors. The authors discuss the challenges and future directions for improving the integration of LLMs in VLSI design, including the need for more sophisticated training techniques and better handling of technical jargon. By exploring these possibilities, SpecLLM contributes to the ongoing efforts to enhance the role of LLMs in the field of hardware design.

The authors in ZipLM [129] introduce a structured pruning technique designed to improve the inference efficiency of LLMs. They detail the development of pruning methods that selectively remove less important components of the model, reducing its size and computational requirements without significantly impacting performance. This inference-aware approach ensures that the pruned models remain effective for their intended tasks while benefiting from enhanced efficiency. Their study presents experimental results demonstrating the effectiveness of ZipLM in reducing model size and improving inference speed. The authors highlight the potential applications of this technique in environments with limited computational resources, such as edge devices and mobile platforms. By focusing on structured pruning, ZipLM offers a practical solution for deploying LLMs more efficiently, enabling broader accessibility and application of these powerful models in various real-world scenarios.
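Structured pruning removes whole units (rows, attention heads, or layers) rather than scattered individual weights, so the remaining matrices stay dense and hardware-friendly. The sketch uses a deliberately simple magnitude criterion, dropping the rows with the smallest L2 norm; ZipLM's own criterion is inference-aware, as described above, and is not reproduced here. The function name and `keep_ratio` parameter are hypothetical.

```python
import math

def prune_rows(weights: list[list[float]], keep_ratio: float) -> tuple[list[list[float]], list[int]]:
    """Drop whole rows (e.g., output neurons) with the smallest L2 norm.

    Returns the pruned matrix and the kept row indices; in a real network the
    matching input columns of the next layer would have to be removed too.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(weights) * keep_ratio))
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:n_keep])        # preserve the original row order
    return [weights[i] for i in kept], kept

W = [[0.1, 0.0], [2.0, 1.0], [0.0, -0.2], [1.0, 1.0]]
pruned, kept = prune_rows(W, keep_ratio=0.5)
print(kept)     # [1, 3]
```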

This study by Thorat et al. [130] explores the application of advanced language models (ALMs), such as GPT-3.5 and GPT-4, in the realm of electronic hardware design, particularly focusing on Verilog programming. Verilog is an HDL used for designing and modeling digital systems. The authors introduce the VeriPPA framework, which utilizes ALMs to generate and refine Verilog code. This framework incorporates a two-stage refinement process to enhance both the syntactic and functional correctness of the generated code and to align it with key performance metrics: power, performance, and area (PPA). This iterative approach involves leveraging diagnostic feedback from simulators to identify and correct errors systematically, akin to human problem-solving techniques. The methodology begins with ALMs generating initial Verilog code, which is then refined through the VeriRectify process. This process uses error diagnostics from simulators to guide the correction of syntactic and functional issues, ensuring the generated code meets specific correctness criteria. Following this, the code undergoes a PPA optimization stage where its power consumption, performance, and area efficiency are evaluated and further refined if necessary. This dual-stage approach significantly improves the quality of Verilog code, achieving an 81.37% linguistic accuracy and a 62.0% operational efficacy in programming synthesis, surpassing existing techniques. The study highlights the potential of ALMs in automating and improving the hardware design process, making it more accessible and efficient for those with limited expertise in chip design.
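
The two-stage refinement described above can be sketched as a feedback loop. This is a minimal illustration under stated assumptions, not VeriPPA's implementation: `generate`, `simulate`, `evaluate_ppa`, and `ppa_ok` are hypothetical callables standing in for an LLM, a simulator with testbench, a synthesis/PPA report, and a PPA target check. Stage 1 repairs errors from simulator diagnostics; stage 2 asks for PPA improvements and only accepts candidates that still pass simulation.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class SimResult:
    passed: bool
    log: str  # compiler/simulator diagnostics fed back to the model

def refine(spec: str,
           generate: Callable[..., str],
           simulate: Callable[[str], SimResult],
           evaluate_ppa: Callable[[str], dict],
           ppa_ok: Callable[[dict], bool],
           max_fix_iters: int = 5,
           max_ppa_iters: int = 3) -> Optional[str]:
    # Stage 1: fix syntactic/functional errors using simulator feedback.
    code = generate(spec, feedback=None)
    result = simulate(code)
    fixes = 0
    while not result.passed:
        if fixes == max_fix_iters:
            return None  # give up: no correct design within the budget
        code = generate(spec, feedback=result.log)
        result = simulate(code)
        fixes += 1
    # Stage 2: improve PPA, never trading away functional correctness.
    for _ in range(max_ppa_iters):
        report = evaluate_ppa(code)
        if ppa_ok(report):
            break
        candidate = generate(spec, feedback=f"Correct, but improve PPA: {report}")
        if simulate(candidate).passed:
            code = candidate
    return code

# Toy stubs (hypothetical) standing in for an LLM, a simulator and a PPA report.
drafts = iter([
    "module add(input a, b, output y) assign y = a ^ b; endmodule",   # missing ';'
    "module add(input a, b, output y); assign y = a ^ b; endmodule",
])

def toy_llm(spec, feedback):
    return next(drafts)

def toy_sim(code):
    ok = "output y);" in code  # crude stand-in for compile + testbench
    return SimResult(ok, "" if ok else "toy diagnostic: expected ';' after port list")

def toy_ppa(code):
    return {"area": 1}

final = refine("1-bit sum (XOR)", toy_llm, toy_sim, toy_ppa, ppa_ok=lambda r: r["area"] <= 4)
print(final)
```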

This study by Huang et al. [131] reviews and proposes strategies to accelerate and optimize LLMs, addressing the computational and memory challenges of deploying them. The authors cover algorithmic improvements, including early exiting and parallel decoding, and introduce hardware-specific optimizations through LLM-hardware co-design. They present frameworks such as Medusa for parallel decoding, which achieves speedups of up to 2.8×, and SnapKV, which compresses the key-value (KV) cache to improve memory efficiency. Their research also explores High-Level Synthesis (HLS) frameworks such as ScaleHLS and HIDA, which convert LLM architectures into hardware accelerators. These advances improve LLM performance in real-time applications, such as NLP and EDA, while reducing energy consumption.
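
Parallel decoding schemes such as Medusa propose several future tokens cheaply and let the base model check all of them in one forward pass. Medusa itself uses extra decoding heads, tree attention over multiple candidates, and a relaxed acceptance rule; the sketch below shows only the simplest greedy exact-match check, assuming the drafted tokens and the base model's greedy predictions are already available as token ids. `accept_draft` is a hypothetical helper.

```python
def accept_draft(draft: list[int], verified: list[int]) -> list[int]:
    """Greedy verification for draft-then-verify decoding.

    draft:    k tokens proposed cheaply (e.g. by extra decoding heads).
    verified: the base model's greedy token at each of the k + 1 positions,
              obtained in a single forward pass over prefix + draft.
    Returns the tokens committed in this decoding step.
    """
    accepted = []
    for d, v in zip(draft, verified):
        if d != v:
            break  # first disagreement: discard this and all later drafts
        accepted.append(d)
    # The base model's own prediction at the stopping point is always valid,
    # so every step commits at least one token.
    accepted.append(verified[len(accepted)])
    return accepted

print(accept_draft([5, 9, 2], [5, 9, 7, 4]))
```

Here two drafts match, so three tokens are committed for one base-model pass; with no matches the step degrades gracefully to ordinary one-token decoding.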

From English to ASIC [132] explores the application of LLMs in automating hardware design using HDLs like Verilog for ASICs. The authors focus on improving the precision of LLM-generated HDL code by fine-tuning Mistral 7B and addressing challenges such as syntax errors and the scarcity of quality training datasets. By creating a labeled Verilog dataset and applying advanced optimization techniques such as LoRA and DeepSpeed ZeRO, their study demonstrates significant improvements in code generation accuracy, with up to a 20% increase in pass@1 metrics. The contributions of their research include optimizing memory usage and inference speed, making LLMs more practical for EDA in hardware development.
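
The source does not state how [132] computes pass@1. A common choice in code-generation benchmarks is the unbiased pass@k estimator introduced with the HumanEval benchmark: with n samples per problem of which c pass, pass@k = 1 - C(n-c, k)/C(n, k), averaged over problems. The sketch below assumes that estimator; the per-problem counts are made-up illustration values, not results from the paper.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical run: 10 samples per problem, with 3, 0 and 10 passing.
results = [(10, 3), (10, 0), (10, 10)]
print(round(mean_pass_at_k(results, k=1), 4))
```

For k = 1 the estimator reduces to c/n per problem, so this toy run scores (0.3 + 0.0 + 1.0)/3.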

LLMCompass [133] is a hardware evaluation framework designed for optimizing hardware used in LLM inference. To address the high computational and memory demands of LLMs, it provides an efficient, versatile tool for evaluating hardware designs, built on a comprehensive performance and cost model that lets architects assess trade-offs among hardware configurations. Validated against commercial hardware such as the NVIDIA A100 and Google TPUv3, LLMCompass achieves low error rates (4.1% for LLM inference) while keeping simulation times short. By exploring architectural implications such as memory bandwidth and compute capability, LLMCompass guides the development of cost-effective hardware designs, helping to democratize LLM deployment. For example, the proposed modifications to existing architectures yielded up to a 3.41× improvement in performance per cost. The tool is positioned as a key enabler for optimizing next-generation hardware for large-scale AI applications.
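
A first-order version of the compute-versus-bandwidth trade-off that LLMCompass explores can be captured with a roofline estimate. LLMCompass's own performance model is considerably more detailed; the sketch below only illustrates the idea, assuming illustrative accelerator figures (roughly A100-class FP16 peak and HBM bandwidth, not taken from [133]) and a matrix multiply in which each operand is read or written once.

```python
# Assumed, illustrative accelerator figures (not from [133]).
PEAK_FLOPS = 312e12  # FLOP/s
MEM_BW = 1.5e12      # bytes/s

def gemm_cost(m: int, n: int, k: int, bytes_per_elem: int = 2) -> tuple[int, int]:
    """FLOPs and bytes moved for C[m,n] = A[m,k] @ B[k,n], each matrix touched once."""
    flops = 2 * m * n * k
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops, bytes_moved

def roofline(flops: float, bytes_moved: float,
             peak_flops: float = PEAK_FLOPS, mem_bw: float = MEM_BW) -> tuple[float, str]:
    """Lower-bound latency: the slower of compute time and memory-transfer time."""
    t_compute = flops / peak_flops
    t_memory = bytes_moved / mem_bw
    return max(t_compute, t_memory), ("compute" if t_compute >= t_memory else "memory")

hidden = 4096
decode_t, decode_bound = roofline(*gemm_cost(1, hidden, hidden))       # one new token
prefill_t, prefill_bound = roofline(*gemm_cost(2048, hidden, hidden))  # 2048-token prompt
print(f"decode:  {decode_t * 1e6:.1f} us ({decode_bound}-bound)")
print(f"prefill: {prefill_t * 1e6:.1f} us ({prefill_bound}-bound)")
```

Under these assumptions, single-token decoding is memory-bound while prompt prefill is compute-bound, which is why memory bandwidth and compute capability have to be weighed separately when sizing LLM inference hardware.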

To address the scarcity of high-quality Verilog data for LLM fine-tuning, the authors of [134] introduce a design-data augmentation framework that automates dataset generation for chip design tasks. The framework aligns Verilog files with natural language descriptions, error feedback, and EDA scripts to build comprehensive datasets. Fine-tuning Llama 2 models on these datasets substantially improved Verilog code generation accuracy, surpassing prior state-of-the-art results. The authors argue that such automated augmentation methods are critical for developing domain-specific LLMs capable of agile and accurate chip design, paving the way for scalable hardware development.
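
The alignment step can be pictured as building one record per design that ties the RTL to its description, tool feedback, and flow script. The schema below is hypothetical (the field names are not from [134]), and the generated script uses the Yosys commands `read_verilog` and `synth -top` purely as an example of an EDA script derived automatically from the module name.

```python
import json
import re
from dataclasses import asdict, dataclass, field

MODULE_RE = re.compile(r"\bmodule\s+(\w+)")  # "endmodule" has no word boundary before "module"

@dataclass
class DesignSample:
    """One aligned training record (hypothetical schema)."""
    module: str
    description: str  # natural-language specification
    verilog: str      # reference RTL
    error_feedback: list[str] = field(default_factory=list)  # tool messages for buggy variants
    eda_script: str = ""  # script that runs the design through an EDA flow

def make_sample(verilog: str, description: str, errors=None) -> DesignSample:
    match = MODULE_RE.search(verilog)
    if match is None:
        raise ValueError("no module declaration found")
    name = match.group(1)
    # Example synthesis script derived from the module name (Yosys syntax)
    script = f"read_verilog {name}.v\nsynth -top {name}"
    return DesignSample(name, description, verilog, list(errors or []), script)

def to_jsonl(samples) -> str:
    """Serialize records as JSON Lines, a common format for fine-tuning data."""
    return "\n".join(json.dumps(asdict(s)) for s in samples)

rtl = "module counter(input clk, output reg [3:0] q);\n  always @(posedge clk) q <= q + 1;\nendmodule"
print(to_jsonl([make_sample(rtl, "4-bit free-running up-counter")]))
```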

The authors of [135] introduce a hierarchical prompting approach to improve HDL generation with LLMs. They highlight the limitations of standard “flat” prompting, especially for complex modules such as finite-state machines or multi-component processors. They propose an eight-stage pipeline, Recurrent Optimization via Machine Editing (ROME), which automates the hierarchical design process by dividing designs into smaller submodules. Evaluations on benchmarks and case studies, including a 32-bit Reduced Instruction Set Computer (RISC)-V processor, demonstrate that hierarchical prompting improves both the quality of HDL generation and its scalability to complex designs. The study also shows that smaller, fine-tuned open-source models can compete effectively with larger proprietary LLMs when hierarchical prompting is used.
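
The core idea of hierarchical prompting, generating submodules before the modules that instantiate them, amounts to a post-order traversal of the design tree. The sketch below does not reproduce ROME's eight stages; `generate` is a hypothetical callable standing in for an LLM prompt that receives a module's description plus the already-generated RTL of its children, and `toy_llm` is a stub for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ModuleSpec:
    name: str
    description: str
    children: list["ModuleSpec"] = field(default_factory=list)

def generate_hierarchy(top: ModuleSpec,
                       generate: Callable[[ModuleSpec, list[str]], str]) -> dict[str, str]:
    """Generate RTL bottom-up so each parent prompt can reference its children."""
    generated: dict[str, str] = {}

    def visit(node: ModuleSpec) -> None:
        if node.name in generated:  # shared submodule: generate only once
            return
        for child in node.children:
            visit(child)            # children first (post-order)
        child_rtl = [generated[c.name] for c in node.children]
        generated[node.name] = generate(node, child_rtl)

    visit(top)
    return generated

def toy_llm(node: ModuleSpec, child_rtl: list[str]) -> str:
    # Stand-in for an LLM call; a real prompt would include node.description and child_rtl.
    insts = "".join(f"  {c.name} u_{c.name}();\n" for c in node.children)
    return f"module {node.name}();\n{insts}endmodule"

adder = ModuleSpec("adder", "32-bit adder")
alu = ModuleSpec("alu", "ALU built around the adder", [adder])
regfile = ModuleSpec("regfile", "32 x 32-bit register file")
cpu = ModuleSpec("cpu", "single-cycle RISC-V core", [alu, regfile])
rtl = generate_hierarchy(cpu, toy_llm)
print(list(rtl))
```

Each prompt then only has to describe one small module and its immediate interfaces, which is what lets the approach scale to designs that a single flat prompt handles poorly.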

The authors of [136] explore the feasibility of using LLMs to design a RISC processor, focusing on generating VHDL code for components such as the ALU, control unit, and memory modules. The LLM-generated code was verified through simulation and implementation on an FPGA. The results reveal significant limitations, including frequent errors in the generated code and a reliance on human intervention for debugging and refinement. While LLMs show potential for automating initial design steps, the authors conclude that they currently serve better as complements to human designers than as standalone tools.

The authors of [137] present MG-Verilog, a dataset designed to improve LLM performance on Verilog generation tasks. The dataset pairs diverse code samples with multi-grained descriptions, enabling fine-tuning that generalizes better across hardware design tasks. The authors also propose a balanced fine-tuning scheme to exploit the dataset’s diversity effectively. Extensive evaluations show that fine-tuning on MG-Verilog significantly improves the quality of LLM-generated Verilog, supporting its use for both training and inference in hardware design workflows. Table 4 provides an overview of all the papers discussed in the hardware design subsection for comparison.

Table 4.

Comparison of papers focusing on hardware design with LLMs.

4.2.2. Hardware/Software Codesign

This study by Mudigere et al. [138] explores co-design strategies to improve training speed and scalability of deep learning recommendation models. The authors emphasize the integration of software and hardware design to achieve significant performance gains, addressing the computational intensity and resource demands of training large models. They present various techniques for optimizing algorithms and hardware architectures, ensuring efficient utilization of resources. Their study showcases experimental results demonstrating the effectiveness of co-design strategies in accelerating model training. These results highlight improvements in training times and scalability, making it feasible to handle larger datasets and more complex models. By focusing on the co-design approach, their study provides valuable insights into achieving faster and more scalable training processes, which are essential for the ongoing advancement of deep learning recommendation systems.

This study by Wan et al. [139] explores the integration of software and hardware design principles to optimize the performance of LLMs. The authors focus on the co-design approach, which synchronizes the development of software algorithms and hardware architecture to achieve efficient processing and better utilization of resources. This synergy is essential in managing the computational demands of LLMs, which require significant processing power and memory bandwidth. They discuss various strategies for optimizing both hardware (such as specialized accelerators and memory hierarchies) and software (such as algorithmic improvements and efficient coding practices) to enhance the performance and efficiency of LLMs. Their research further delves into the application of this co-design methodology in design verification processes. Design verification, a critical phase in the development of digital systems, benefits from the enhanced capabilities of co-designed LLMs. By leveraging optimized LLMs, verification tools can process complex datasets and simulations more effectively, leading to more accurate and faster verification results. The integration of co-designed LLMs into verification workflows helps in identifying design flaws early, reducing the time and cost associated with the design and development of hardware systems. Their study presents case studies and experimental results that demonstrate the practical benefits and improvements achieved through the software/hardware co-design approach in real-world verification scenarios.

This study by Yan et al. [140] investigates the potential of leveraging LLMs in the co-design process of software and hardware, specifically for designing Compute-in-Memory (CiM) Deep Neural Network (DNN) accelerators. The authors explore how LLMs can be utilized to enhance the co-design process by providing advanced capabilities in generating design solutions and optimizing both software and hardware components concurrently. They emphasize the importance of LLMs in automating and improving the design workflow, leading to more efficient and effective development of CiM DNN accelerators. Their study presents a detailed case study demonstrating the application of LLMs in the co-design of CiM DNN accelerators. Through this case study, the authors illustrate how LLMs can aid in identifying optimal design configurations and addressing complex design challenges. The study shows that LLMs can significantly reduce the time and effort required for design iterations and verification, thereby accelerating the overall development process. The findings suggest that integrating LLMs into the co-design framework can result in substantial performance gains and resource savings, highlighting the viability and benefits of using LLMs in the co-design of advanced hardware accelerators.

The authors of C2HLSC [141] investigate the ability of LLMs to refactor software code into forms compatible with HLS tools. Their case studies show an LLM transforming C code, including the QuickSort algorithm and AES-128 encryption, into HLS-synthesizable code. By iteratively rewriting constructs that HLS tools typically do not support, such as dynamic memory allocation and recursion, the LLM produces hardware-compatible code that adheres to HLS constraints. This approach significantly reduces the manual effort, and the errors, traditionally involved in preparing software for hardware synthesis. The findings highlight the potential of LLMs to bridge the gap between software and hardware design, enabling more seamless hardware development.
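
A typical rewrite of this kind replaces recursion with an explicit stack of fixed capacity, so that no call stack and no dynamic allocation are needed. The paper's actual transformed C code is not reproduced here; the sketch below illustrates the pattern in Python, assuming a statically sized stack (`MAX_STACK` is a hypothetical capacity, analogous to a fixed-size array in HLS C) and a Lomuto partition.

```python
MAX_STACK = 64  # fixed capacity, analogous to a statically sized array in HLS C

def quicksort_iterative(a: list[int]) -> list[int]:
    """In-place QuickSort with no recursion and a preallocated, fixed-size stack."""
    stack_lo = [0] * MAX_STACK  # allocated once; never grows
    stack_hi = [0] * MAX_STACK
    top = 0
    if len(a) > 1:
        stack_lo[0], stack_hi[0] = 0, len(a) - 1
        top = 1
    while top > 0:
        top -= 1
        lo, hi = stack_lo[top], stack_hi[top]
        # Lomuto partition around the last element
        pivot = a[hi]
        i = lo
        for j in range(lo, hi):
            if a[j] <= pivot:
                a[i], a[j] = a[j], a[i]
                i += 1
        a[i], a[hi] = a[hi], a[i]
        # Push the larger half first so the smaller half is processed next,
        # which keeps the stack depth O(log n).
        if (i - 1 - lo) > (hi - i - 1):
            segs = ((lo, i - 1), (i + 1, hi))
        else:
            segs = ((i + 1, hi), (lo, i - 1))
        for seg_lo, seg_hi in segs:
            if seg_lo < seg_hi:  # only segments with two or more elements need sorting
                if top == MAX_STACK:
                    raise OverflowError("fixed stack capacity exceeded")
                stack_lo[top], stack_hi[top] = seg_lo, seg_hi
                top += 1
    return a

print(quicksort_iterative([5, 3, 8, 1, 9, 2]))
```

Processing the smaller partition first is what makes a fixed bound on the stack safe: the pending entries shrink by at least half at each level, so 64 slots cover any array that fits in memory.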

The authors of [142] evaluate LLMs for both hardware design and testing by examining their ability to generate functional Verilog modules and testbenches from plain-language specifications. Benchmarks show that LLMs can automate significant portions of the design process, including functional verification, and successful tapeouts of generated designs demonstrate the feasibility of using LLMs for end-to-end hardware workflows. The findings highlight the potential of LLMs to reduce manual effort and accelerate the development pipeline for digital hardware.

PyHDL-Eval [143] is a framework for evaluating LLMs on specification-to-RTL tasks using Python-embedded DSLs. The framework includes 168 problems across 19 RTL design categories and supports testing on DSLs like PyMTL3, MyHDL, and Migen. Experiments with multiple LLMs show better performance on Verilog than Python-embedded DSLs, underscoring challenges in leveraging LLMs for high-level languages. PyHDL-Eval serves as a valuable tool for advancing research in Python-embedded DSLs and their intersection with LLM capabilities.

4.2.3. Hardware Accelerators

Nazzal et al. [144] present a comprehensive dataset designed to facilitate LLM-driven generation of AI accelerators. The dataset includes a diverse range of hardware design benchmarks, synthesis results, and performance metrics, with the goal of providing a robust foundation for training LLMs to understand and optimize hardware accelerators. By offering detailed annotations and a variety of design scenarios, the dataset aims to improve the ability of LLMs to generate efficient, optimized hardware designs. The authors detail the structure of the dataset, which covers various aspects of hardware accelerator design, including computational kernels, memory hierarchies, and interconnect architectures. They also discuss potential applications of the dataset in training LLMs for tasks such as design space exploration, performance prediction, and design optimization. Their study demonstrates the utility of the dataset through several case studies, showing how LLMs can leverage the provided data to generate and optimize hardware accelerators with significant improvements in performance and efficiency.