Multiple Minor Components Extraction in Parallel Based on Möller Algorithm

Abstract

1. Introduction

2. Problem Statement

3. Adaptive Extracting Algorithm

4. Convergence Analysis

4.1. The Fixed Point of the Proposed Algorithm

4.2. Stability Analysis of the Proposed Algorithm

5. Numerical Example

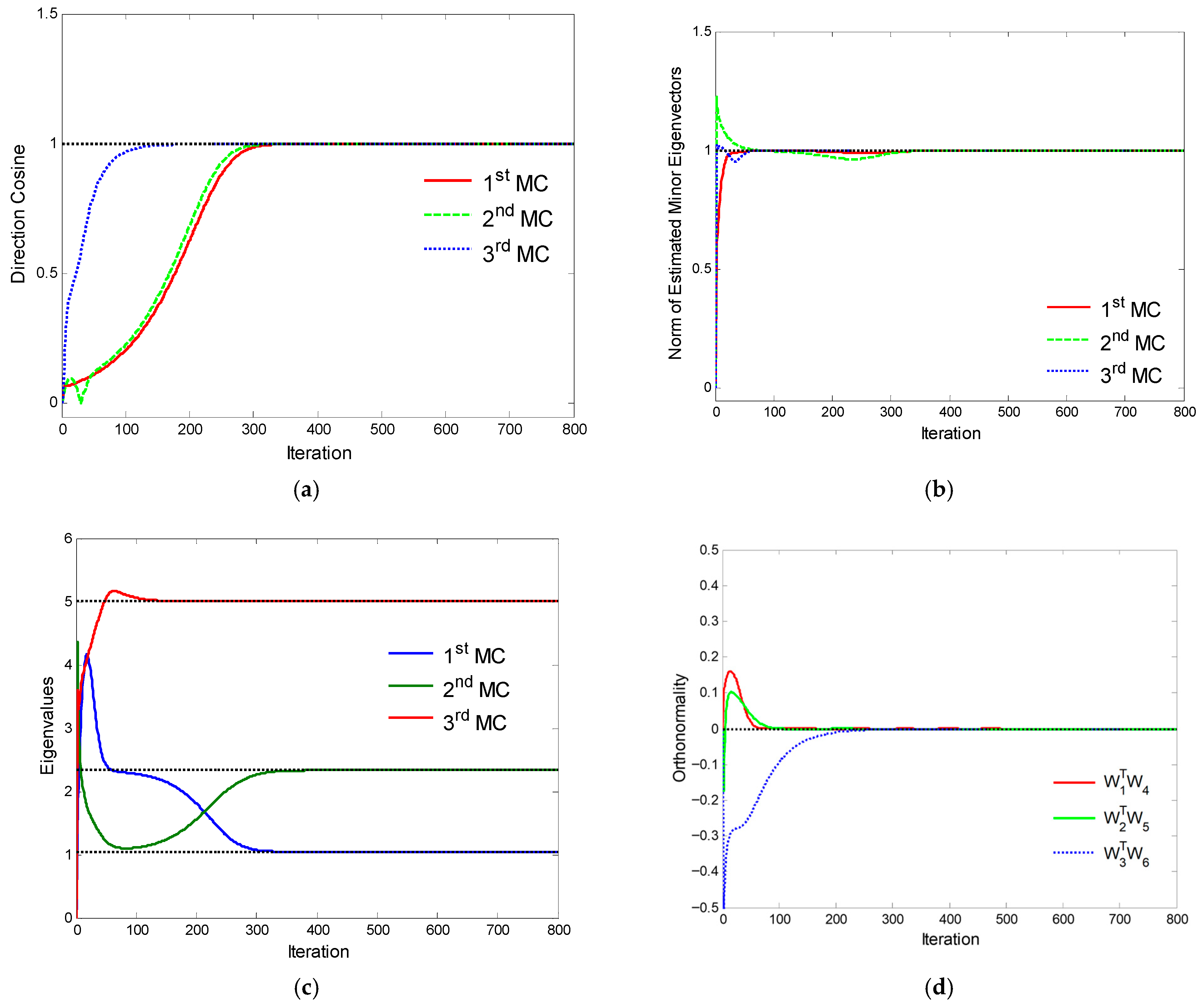

5.1. Transient Behavior

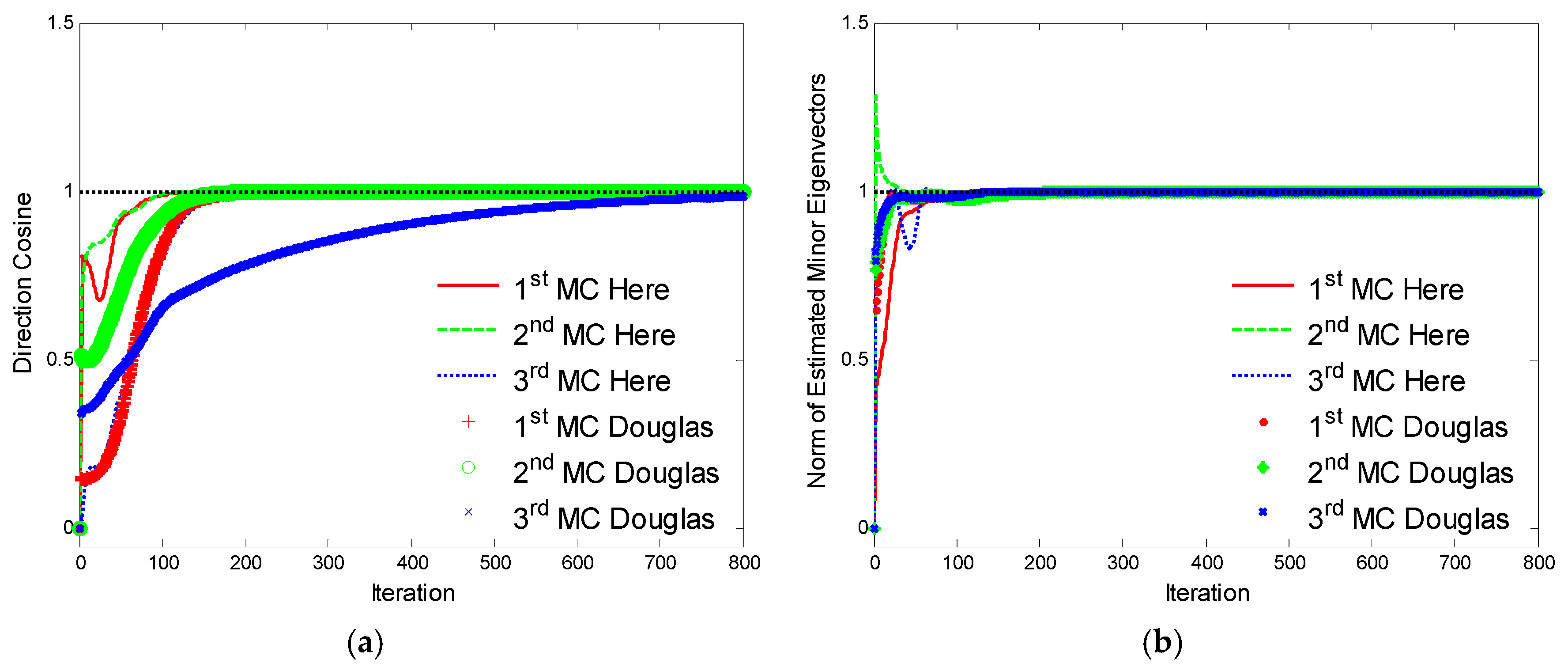

5.2. Comparison with the Douglas Algorithm

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameter | Proposed Algorithm | Douglas’s Algorithm |
|---|---|---|
| Learning rate | | |
| Other parameter | A = diag(1, 2, 3) | |

| Method | Proposed Algorithm | Douglas’s Algorithm |
|---|---|---|
| Time (ms) | 2.05 | 10.55 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, Y.; Dong, H.; Xu, Z.; Li, H.; Li, J.; Yuan, S. Multiple Minor Components Extraction in Parallel Based on Möller Algorithm. Electronics 2025, 14, 4073. https://doi.org/10.3390/electronics14204073
