Open-Access fNIRS Dataset for Classification of Unilateral Finger- and Foot-Tapping

Abstract

1. Introduction

2. fNIRS Dataset

2.1. Participants

2.2. Apparatus

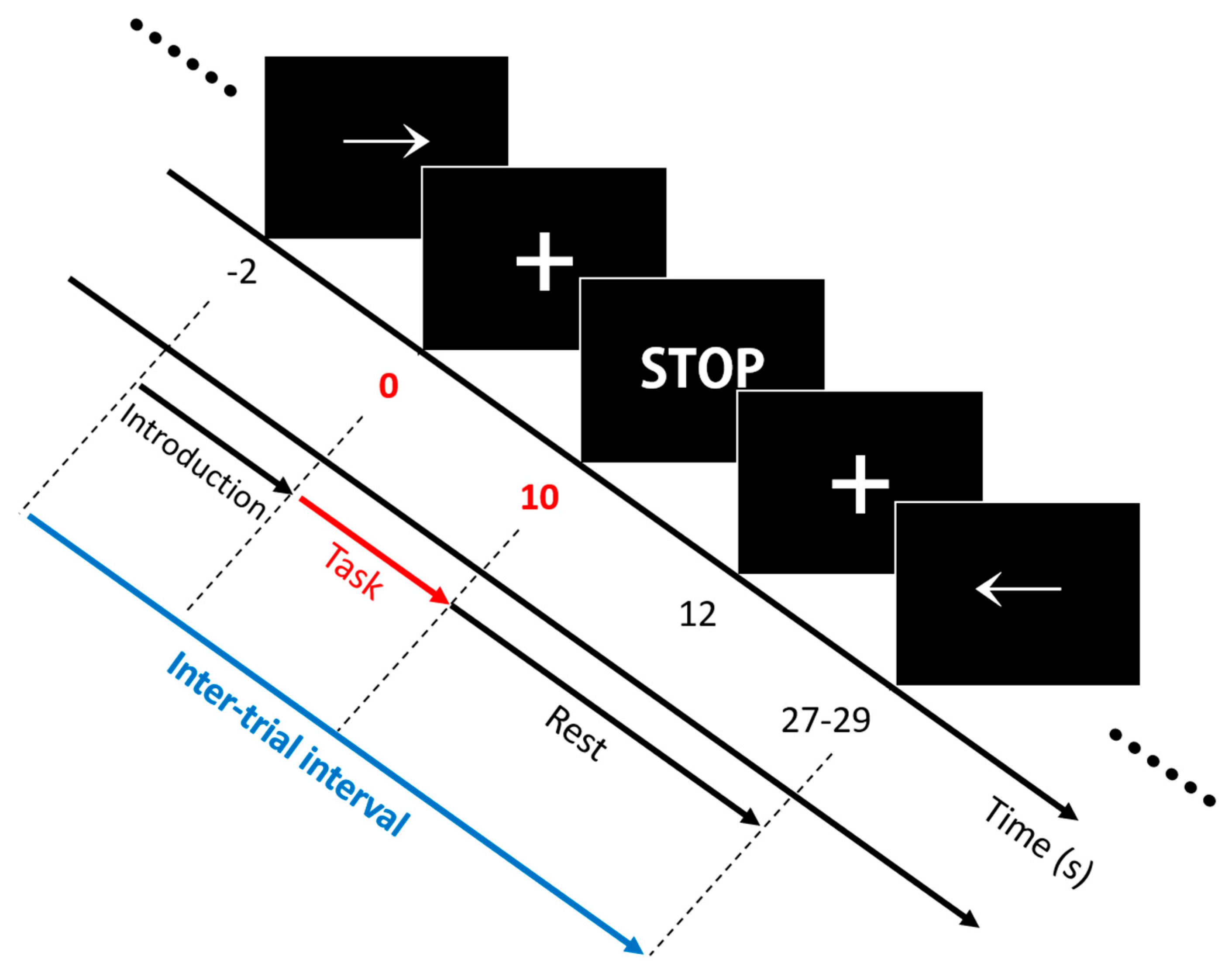

2.3. Experimental Paradigm

2.4. Dataset Description

3. Signal Processing

3.1. Preprocessing and Segmentation

3.2. Classification

4. Results

4.1. Temporal ΔHbO and ΔHbR

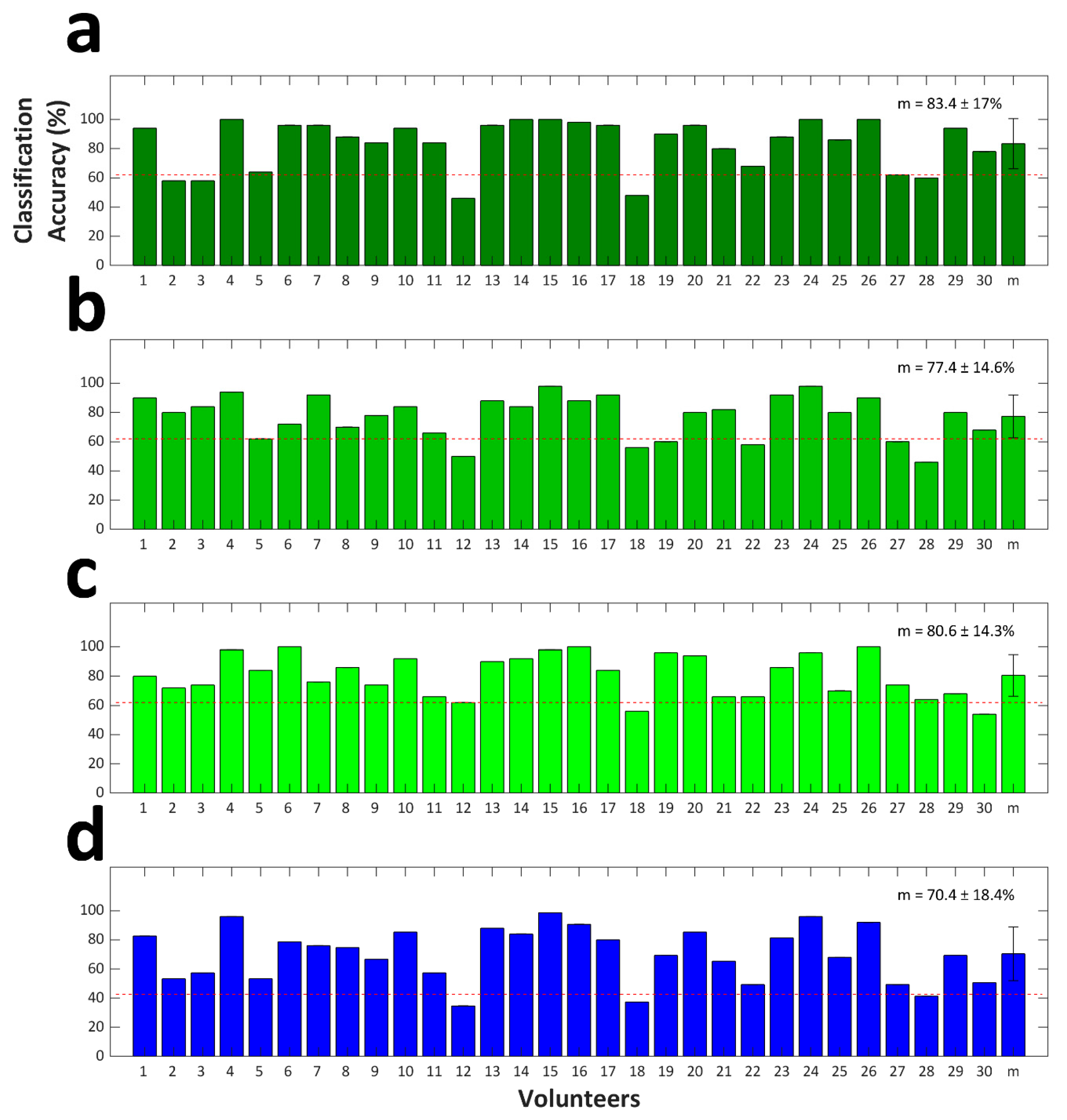

4.2. Classification Accuracy

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Coyle, S.; Ward, T.; Markham, C.; McDarby, G. On the suitability of near-infrared (NIR) systems for next-generation brain-computer interfaces. Physiol. Meas. 2004, 25, 815–822.

- Boas, D.A.; Elwell, C.E.; Ferrari, M.; Taga, G. Twenty years of functional near-infrared spectroscopy: Introduction for the special issue. Neuroimage 2014, 85, 1–5.

- Shin, J.; Kim, D.-W.; Müller, K.-R.; Hwang, H.-J. Improvement of information transfer rates using a hybrid EEG-NIRS brain-computer interface with a short trial length: Offline and pseudo-online analyses. Sensors 2018, 18, 1827.

- Hwang, H.-J.; Lim, J.-H.; Kim, D.-W.; Im, C.-H. Evaluation of various mental task combinations for near-infrared spectroscopy-based brain-computer interfaces. J. Biomed. Opt. 2014, 19, 077005.

- Rueckert, L.; Lange, N.; Partiot, A.; Appollonio, I.; Litvan, I.; Le Bihan, D.; Grafman, J. Visualizing Cortical Activation during Mental Calculation with Functional MRI. Neuroimage 1996, 3, 97–103.

- MacDonald, A.; Cohen, J.; Stenger, V.; Carter, C. Dissociating the Role of the Dorsolateral Prefrontal and Anterior Cingulate Cortex in Cognitive Control. Science 2000, 288, 1835–1838.

- Herrmann, M.J.; Ehlis, A.C.; Fallgatter, A.J. Prefrontal activation through task requirements of emotional induction measured with NIRS. Biol. Psychol. 2003, 64, 255–263.

- Rowe, J.B.; Stephan, K.E.; Friston, K.; Frackowiak, R.S.; Passingham, R.E. The prefrontal cortex shows context-specific changes in effective connectivity to motor or visual cortex during the selection of action or colour. Cereb. Cortex 2005, 15, 85–95.

- Nagamitsu, S.; Nagano, M.; Yamashita, Y.; Takashima, S.; Matsuishi, T. Prefrontal cerebral blood volume patterns while playing video games-a near-infrared spectroscopy study. Brain Dev. 2006, 28, 315–321.

- Yang, H.; Zhou, Z.; Liu, Y.; Ruan, Z.; Gong, H.; Luo, Q.; Lu, Z. Gender difference in hemodynamic responses of prefrontal area to emotional stress by near-infrared spectroscopy. Behav. Brain Res. 2007, 178, 172–176.

- Medvedev, A.V.; Kainerstorfer, J.M.; Borisov, S.V.; VanMeter, J. Functional connectivity in the prefrontal cortex measured by near-infrared spectroscopy during ultrarapid object recognition. J. Biomed. Opt. 2011, 16, 016008.

- Power, S.D.; Kushki, A.; Chau, T. Towards a system-paced near-infrared spectroscopy brain–computer interface: Differentiating prefrontal activity due to mental arithmetic and mental singing from the no-control state. J. Neural Eng. 2011, 8, 066004.

- Power, S.D.; Kushki, A.; Chau, T. Automatic single-trial discrimination of mental arithmetic, mental singing and the no-control state from prefrontal activity: Toward a three-state NIRS-BCI. BMC Res. Notes 2012, 5, 141.

- Chai, R.; Ling, S.H.; Hunter, G.P.; Nguyen, H.T. Mental task classifications using prefrontal cortex electroencephalograph signals. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 1831–1834.

- Herff, C.; Heger, D.; Fortmann, O.; Hennrich, J.; Putze, F.; Schultz, T. Mental workload during n-back task—quantified in the prefrontal cortex using fNIRS. Front. Hum. Neurosci. 2013, 7, 935.

- Naseer, N.; Hong, M.J.; Hong, K.-S. Online binary decision decoding using functional near-infrared spectroscopy for the development of brain–computer interface. Exp. Brain Res. 2014, 232, 555–564.

- Shin, J.; Müller, K.-R.; Hwang, H.-J. Near-infrared spectroscopy (NIRS) based eyes-closed brain-computer interface (BCI) using prefrontal cortex activation due to mental arithmetic. Sci. Rep. 2016, 6, 36203.

- Zafar, A.; Hong, K.S. Detection and classification of three-class initial dips from prefrontal cortex. Biomed. Opt. Express 2017, 8, 367–383.

- Shin, J.; Kwon, J.; Choi, J.; Im, C.H. Ternary near-infrared spectroscopy brain-computer interface with increased information transfer rate using prefrontal hemodynamic changes during mental arithmetic, breath-holding, and idle state. IEEE Access 2018, 6, 19491–19498.

- Shin, J.; Im, C.-H. Performance prediction for a near-infrared spectroscopy-brain–computer interface using resting-state functional connectivity of the prefrontal cortex. Int. J. Neural Syst. 2018, 28, 1850023.

- Zephaniah, P.V.; Kim, J.G. Recent functional near infrared spectroscopy based brain computer interface systems: Developments, applications and challenges. Biomed. Eng. Lett. 2014, 4, 223–230.

- Ferrari, M.; Quaresima, V. A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage 2012, 63, 921–935.

- Naseer, N.; Hong, K.-S. fNIRS-based brain-computer interfaces: A review. Front. Hum. Neurosci. 2015, 9, 00003.

- Dornhege, G.; Millán, J.R.; Hinterberger, T.; McFarland, D.; Müller, K.-R. Toward Brain-Computer Interfacing; MIT Press: Cambridge, MA, USA, 2007.

- Hoshi, Y.; Tamura, M. Dynamic multichannel near-infrared optical imaging of human brain activity. J. Appl. Physiol. 1993, 75, 1842–1846.

- Tomita, Y.; Vialatte, F.B.; Dreyfus, G.; Mitsukura, Y.; Bakardjian, H.; Cichocki, A. Bimodal BCI using simultaneously NIRS and EEG. IEEE Trans. Biomed. Eng. 2014, 61, 1274–1284.

- Shin, J.; Kwon, J.; Im, C.-H. A multi-class hybrid EEG-NIRS brain-computer interface for the classification of brain activation patterns during mental arithmetic, motor imagery, and idle state. Front. Neuroinform. 2018, 23, 5.

- Shin, J.; Kwon, J.; Im, C.-H. A ternary hybrid EEG-NIRS brain-computer interface for the classification of brain activation patterns during mental arithmetic, motor imagery, and idle state. Front. Neuroinform. 2018, 12, 5.

- Shin, J.; von Lühmann, A.; Kim, D.-W.; Mehnert, J.; Hwang, H.-J.; Müller, K.-R. Simultaneous acquisition of EEG and NIRS during cognitive tasks for an open access dataset. Sci. Data 2018, 5, 180003.

- Shin, J.; Müller, K.-R.; Hwang, H.-J. Eyes-closed hybrid brain-computer interface employing frontal brain activation. PLoS ONE 2018, 13, e0196359.

- Shin, J.; von Lühmann, A.; Blankertz, B.; Kim, D.-W.; Jeong, J.; Hwang, H.-J.; Müller, K.-R. Open access dataset for EEG+NIRS single-trial classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1735–1745.

- Zhang, Q.; Brown, E.; Strangman, G. Adaptive filtering for global interference cancellation and real-time recovery of evoked brain activity: A Monte Carlo simulation study. J. Biomed. Opt. 2007, 12, 044014.

- Dong, S.; Jeong, J. Improvement in recovery of hemodynamic responses by extended Kalman filter with non-linear state-space model and short separation measurement. IEEE Trans. Biomed. Eng. 2019, 66, 2152–2162.

- Abibullaev, B.; An, J. Classification of frontal cortex haemodynamic responses during cognitive tasks using wavelet transforms and machine learning algorithms. Med. Eng. Phys. 2012, 34, 1394–1410.

- Molavi, B.; Dumont, G.A. Wavelet-based motion artifact removal for functional near-infrared spectroscopy. Physiol. Meas. 2012, 33, 259–270.

- Gagnon, L.; Cooper, R.J.; Yücel, M.A.; Perdue, K.L.; Greve, D.N.; Boas, D.A. Short separation channel location impacts the performance of short channel regression in NIRS. Neuroimage 2012, 59, 2518–2528.

- Gagnon, L.; Yücel, M.A.; Boas, D.A.; Cooper, R.J. Further improvement in reducing superficial contamination in NIRS using double short separation measurements. Neuroimage 2014, 85, 127–135.

- Brigadoi, S.; Cooper, R.J. How short is short? Optimum source-detector distance for short-separation channels in functional near-infrared spectroscopy. Neurophotonics 2015, 2, 025005.

- Abibullaev, B.; An, J.; Lee, S.H.; Moon, J.I. Design and evaluation of action observation and motor imagery based BCIs using near-infrared spectroscopy. Measurement 2017, 98, 250–261.

- Virtanen, J.; Noponen, T.; Merilainen, P. Comparison of principal and independent component analysis in removing extracerebral interference from near-infrared spectroscopy signals. J. Biomed. Opt. 2009, 14, 054032.

- Luu, S.; Chau, T. Decoding subjective preference from single-trial near-infrared spectroscopy signals. J. Neural Eng. 2009, 6, 016003.

- Fazli, S.; Mehnert, J.; Steinbrink, J.; Curio, G.; Villringer, A.; Müller, K.-R.; Blankertz, B. Enhanced performance by a hybrid NIRS-EEG brain computer interface. Neuroimage 2012, 59, 519–529.

- Naseer, N.; Noori, F.M.; Qureshi, N.K.; Hong, K.S. Determining optimal feature-combination for LDA classification of functional near-infrared spectroscopy signals in brain-computer interface application. Front. Hum. Neurosci. 2016, 10, 237.

- Hong, K.S.; Bhutta, M.R.; Liu, X.L.; Shin, Y.I. Classification of somatosensory cortex activities using fNIRS. Behav. Brain Res. 2017, 333, 225–234.

- Sitaram, R.; Zhang, H.H.; Guan, C.T.; Thulasidas, M.; Hoshi, Y.; Ishikawa, A.; Shimizu, K.; Birbaumer, N. Temporal classification of multichannel near-infrared spectroscopy signals of motor imagery for developing a brain-computer interface. Neuroimage 2007, 34, 1416–1427.

- Power, S.D.; Falk, T.H.; Chau, T. Classification of prefrontal activity due to mental arithmetic and music imagery using hidden Markov models and frequency domain near-infrared spectroscopy. J. Neural Eng. 2010, 7, 026002.

- Hwang, H.J.; Choi, H.; Kim, J.Y.; Chang, W.D.; Kim, D.W.; Kim, K.W.; Jo, S.H.; Im, C.H. Toward more intuitive brain-computer interfacing: Classification of binary covert intentions using functional near-infrared spectroscopy. J. Biomed. Opt. 2016, 21, 091303.

- Schudlo, L.C.; Chau, T. Dynamic topographical pattern classification of multichannel prefrontal NIRS signals: II. Online differentiation of mental arithmetic and rest. J. Neural Eng. 2014, 11, 016003.

- Noori, F.M.; Naseer, N.; Qureshi, N.K.; Nazeer, H.; Khan, R.A. Optimal feature selection from fNIRS signals using genetic algorithms for BCI. Neurosci. Lett. 2017, 647, 61–66.

- Schudlo, L.C.; Chau, T. Development of a ternary near-infrared spectroscopy brain-computer interface: Online classification of verbal fluency task, stroop task and rest. Int. J. Neural Syst. 2018, 28, 1750052.

- Schudlo, L.C.; Chau, T. Towards a ternary NIRS-BCI: Single-trial classification of verbal fluency task, Stroop task and unconstrained rest. J. Neural Eng. 2015, 12, 066008.

- Molavi, B.; May, L.; Gervain, J.; Carreiras, M.; Werker, J.F.; Dumont, G.A. Analyzing the resting state functional connectivity in the human language system using near infrared spectroscopy. Front. Hum. Neurosci. 2014, 7, 921.

- Wallois, F.; Mahmoudzadeh, M.; Patil, A.; Grebe, R. Usefulness of simultaneous EEG-NIRS recording in language studies. Brain Lang. 2012, 121, 110–123.

- Kubota, Y.; Toichi, M.; Shimizu, M.; Mason, R.A.; Coconcea, C.M.; Findling, R.L.; Yamamoto, K.; Calabrese, J.R. Prefrontal activation during verbal fluency tests in schizophrenia—a near-infrared spectroscopy (NIRS) study. Schizophr. Res. 2005, 77, 65–73.

- Cui, X.; Bray, S.; Reiss, A.L. Speeded near infrared spectroscopy (NIRS) response detection. PLoS ONE 2010, 5, e15474.

- Nambu, I.; Osu, R.; Sato, M.-a.; Ando, S.; Kawato, M.; Naito, E. Single-trial reconstruction of finger-pinch forces from human motor-cortical activation measured by near-infrared spectroscopy (NIRS). Neuroimage 2009, 47, 628–637.

- Khan, M.J.; Hong, K.-S. Hybrid EEG–fNIRS-based eight-command decoding for BCI: Application to quadcopter control. Front. Neurorobot. 2017, 11, 6.

- Blankertz, B.; Acqualagna, L.; Dähne, S.; Haufe, S.; Schultze-Kraft, M.; Sturm, I.; Ušćumlić, M.; Wenzel, M.A.; Curio, G.; Müller, K.-R. The Berlin brain-computer interface: Progress beyond communication and control. Front. Neurosci. 2016, 10, 530.

- Bak, S.; Park, J.; Shin, J.; Jeong, J. Dataset: Open Access fNIRS Dataset for Classification of Unilateral Finger- and Foot-Tapping. Available online: https://doi.org/10.6084/m9.figshare.9783755.v1 (accessed on 23 October 2019).

- Bak, S.; Park, J.; Shin, J.; Jeong, J. Tutorials: Open Access fNIRS Dataset for Classification of Unilateral Finger- and Foot-Tapping. Available online: https://github.com/JaeyoungShin/fNIRS-dataset (accessed on 23 October 2019).

- Combrisson, E.; Jerbi, K. Exceeding chance level by chance: The caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J. Neurosci. Methods 2015, 250, 126–136.

| Structure | Field | Description |
|---|---|---|
| cntHb | .fs | Sampling rate (Hz) |
| | .clab | Channel labels |
| | .xUnit | X-axis unit |
| | .yUnit | Y-axis unit |
| | .snr | Signal-to-noise ratio |
| | .x | Concentration changes of oxygenated/reduced hemoglobin (ΔHbO/R) |
| mrk | .event.desc | Class labels’ descriptions |
| | .time | Event occurrence times ¹ |
| | .y | Class labels in vector form |
| mnt | .clab | Channel labels |
| | .box | Channel arrangement in Figure 3 and Figure 4 |
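To illustrate how the `cntHb` and `mrk` fields relate, the following is a minimal Python sketch, not the authors' tutorial code, that cuts fixed-length trial epochs from the continuous data and recovers integer class labels. It assumes the structures have already been loaded into NumPy arrays with a BBCI-toolbox-style layout: `cntHb.x` as a (samples × channels) array, `cntHb.fs` in Hz, `mrk.time` in milliseconds, and `mrk.y` as a one-hot (classes × trials) matrix. These shapes and units are assumptions not stated in the table, and the helper names `epoch_trials` and `labels_from_onehot` are hypothetical.

```python
import numpy as np


def labels_from_onehot(y):
    """Convert a one-hot (n_classes x n_trials) label matrix into class indices.

    Assumes mrk.y uses the BBCI-style layout (one column per trial).
    """
    y = np.asarray(y)
    # Each column is assumed to hold exactly one 1; argmax returns its row index.
    return np.argmax(y, axis=0)


def epoch_trials(x, fs, event_times_ms, t_start_s, t_end_s):
    """Cut fixed-length epochs around each event onset.

    x: (n_samples, n_channels) continuous data, e.g. ΔHbO or ΔHbR (assumed layout)
    fs: sampling rate in Hz (cntHb.fs)
    event_times_ms: event onsets in milliseconds (assumed unit of mrk.time)
    t_start_s, t_end_s: window relative to onset in seconds (end exclusive)
    Returns an array of shape (n_trials, n_epoch_samples, n_channels).
    """
    x = np.asarray(x)
    # Convert onset times from milliseconds to sample indices.
    onsets = np.round(np.asarray(event_times_ms) / 1000.0 * fs).astype(int)
    start = int(round(t_start_s * fs))
    stop = int(round(t_end_s * fs))
    epochs = []
    for onset in onsets:
        a, b = onset + start, onset + stop
        # Refuse windows that fall outside the recording instead of truncating,
        # so every epoch has the same length and can be stacked.
        if a < 0 or b > x.shape[0]:
            raise ValueError(f"epoch [{a}, {b}) exceeds recording bounds")
        epochs.append(x[a:b])
    return np.stack(epochs)
```

For example, with a hypothetical 10 Hz recording and an event at 5000 ms, a window from −1 s to 2 s maps to samples 40 to 69 (30 samples). Raising an error on out-of-range windows, rather than silently truncating them, keeps all epochs the same length so they can be stacked into one array for a classifier.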

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bak, S.; Park, J.; Shin, J.; Jeong, J. Open-Access fNIRS Dataset for Classification of Unilateral Finger- and Foot-Tapping. Electronics 2019, 8, 1486. https://doi.org/10.3390/electronics8121486
