1. Introduction

The evolution of optical communications in recent years has attracted the attention of the scientific community. Additionally, the rapid expansion of the Internet of Things, where the embedded systems in every kind of device must be connected, has made traditional algorithms for solving problems derived from the transportation of digital data obsolete. These problems are now a real challenge even for the most modern algorithms, mainly due to the vast increase in network sizes. Most of these problems can be classified into two different types: finding the optimal route for the signal and reducing the costs of the equipment required to deploy and maintain the network [1]. This paper focuses on solving one of the most widespread problems of the second type, the Band Collocation Problem [2].

The Band Collocation Problem cannot be defined without introducing the Bandpass Problem (BP), which was originally presented by Bell and Babayev [3]. One of the main objectives in the design of efficient networks is to minimize the cost of the equipment required to maintain the network without deteriorating its quality. To do so, it is necessary to optimize the traffic flow to reduce the hardware involved in the process.

A network is formed by a set $T$ of target stations, with $|T| = n$, that are connected with fiber-optic cables. A source station transmits data to these target stations, and the data can be transported on different wavelengths over a single fiber-optic cable using a technology named dense wavelength division multiplexing (DWDM) [4]. It is worth mentioning that not all the target stations need to receive the data from all the wavelengths. A device named Add/Drop Multiplexer (ADM) is responsible for requesting the appropriate data from the fiber-optic cables at each station. The ADM uses a special card that controls the wavelengths required in each station. Some cards are able to receive all the data in consecutive wavelengths, so it is useful to place in adjacent wavelengths all the data required by a single station. This structure formed by consecutive wavelengths is named a Bandpass, and the number of consecutive wavelengths in a Bandpass is named the Bandpass number. Then, the objective of the BP is to select the wavelength permutation that requires the minimum number of cards in the ADMs for a given Bandpass number.

The BP was analyzed in depth in [5], while the dataset of instances for this problem was originally presented in [6]. The problem was proven to be $\mathcal{NP}$-hard in [7] for any Bandpass number, using a reduction from the Hamilton Problem. A game for understanding the permutations performed in the BP was also presented [8], which implements a mathematical model for solving it. Some specific network configurations can be solved in linear time, such as those with just three columns [9]. Although the BP has attracted the focus of several works, see [10,11,12], the best results in the literature are obtained by [13,14].

The original BP formulation has become obsolete due to the continuous evolution of communication networks. Therefore, it is necessary to revise the original model to adapt it to the new advances. Notice that this is not a correction but an adaptation to the new technologies. In particular, the cards in the ADM can now filter all the data from consecutive wavelengths, not only the data requested for the station [15], whereas in the original problem only the data requested for the station can be filtered. In new ADMs, more than one card can be used, and the Bandpass number may be a power of two. Furthermore, the original BP does not evaluate the cost of the cards since it considers just a single type of card, while in real applications several different cards can be used. Thus, the cost must be taken into account when evaluating the quality of a solution.

The inclusion of all these new features results in a new problem called the Band Collocation Problem (BCP). In this problem, an ADM located at a target station can filter data even if it is transmitted to a different target station. The Bandpass number can vary within the same model, always being a power of two. Finally, each Bandpass number has a different cost.

The network is represented with a binary matrix $A = (a_{ij})$, with $1 \le i \le m$ and $1 \le j \le n$, where $n$ and $m$ are the number of target stations (columns) and the number of wavelengths (rows), respectively. If data fragment $i$ must be transmitted to target station $j$, the element $a_{ij}$ is set to 1; otherwise, $a_{ij} = 0$. Each band card is usually denoted with $B_k$, with length $2^k$ and cost $c_k$, where $k = 0, 1, \dots, \lfloor \log_2 m \rfloor$.

A solution of the BCP is usually represented as a permutation of the rows, $\pi = (\pi_1, \pi_2, \dots, \pi_m)$, where each $\pi_i$ indicates which wavelength is located at row $i$. Then, the aim of this optimization problem is to find the permutation of rows that minimizes the sum of the costs of all the $B_k$-Band cards used in the complete network. In mathematical terms:

$$\min \sum_{k=0}^{\lfloor \log_2 m \rfloor} \sum_{i=1}^{m} \sum_{j=1}^{n} c_k \, y_{ij}^{k}$$

where $1 \le i \le m$, $1 \le j \le n$, and $y_{ij}^{k}$ is a binary variable that is set to 1 if row $i$ is the first row of a $B_k$-Band in column $j$; otherwise, $y_{ij}^{k} = 0$. Notice that each data fragment in every target station must be covered by exactly one band. We refer the reader to [2] for a formal definition of the problem and a mathematical formulation.

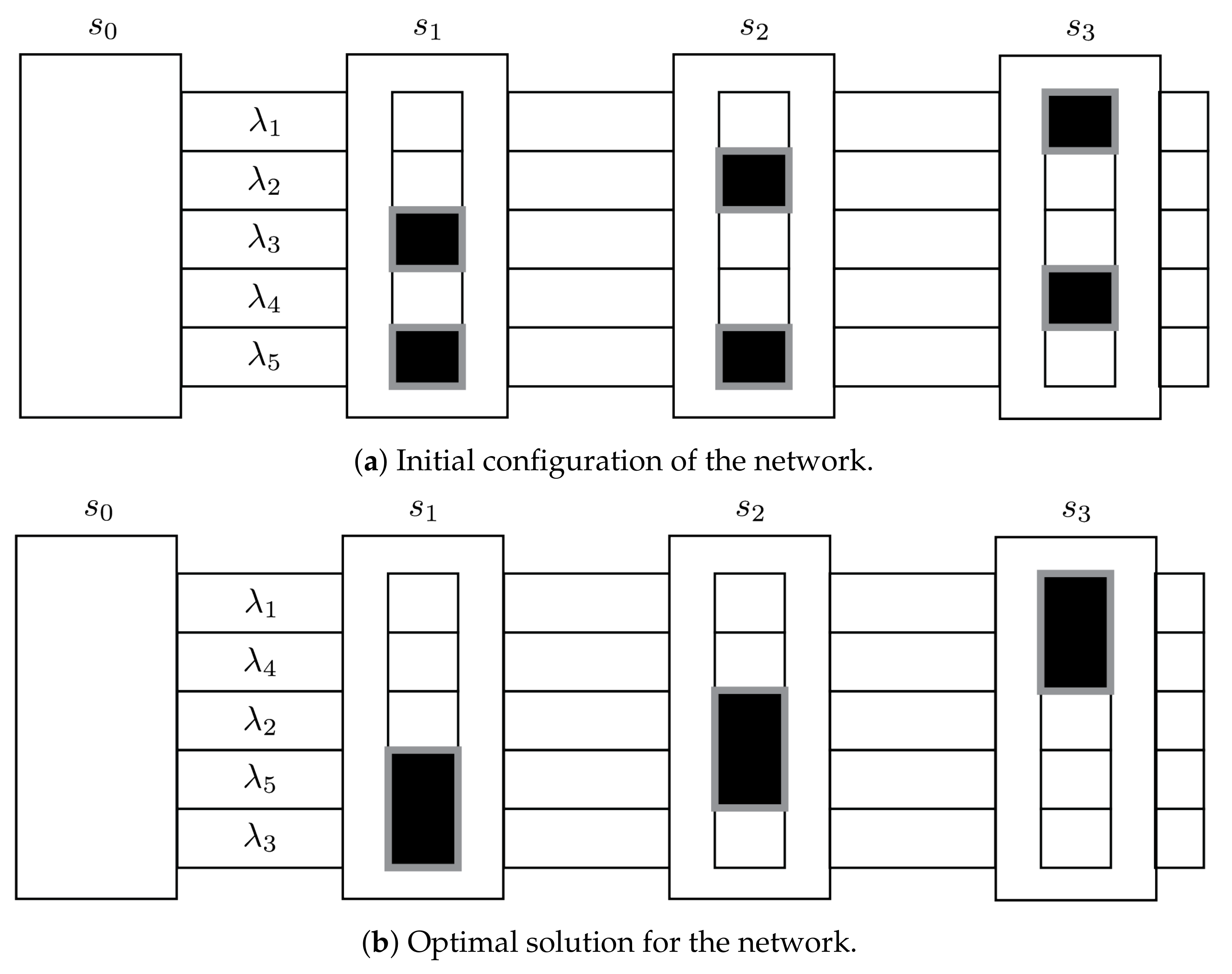

Figure 1 shows the representation of two different solutions for the BCP for a network with a single source station, three target stations, and five wavelengths. In each target station, the data fragment that is requested is colored in black. In this example, each target station requires data from two of the five wavelengths, and each type of card has its own cost.

Figure 1a depicts the initial configuration of the network, where the wavelengths have been included in lexicographical order. The wavelengths that have been grouped in the same card are highlighted with a thick grey border.

In Figure 1b, the wavelengths have been arranged in a different order. Due to this permutation, it is now possible to group more than one wavelength in the same card, so that three band cards cover the complete network at a lower total cost. Therefore, the ordering of Figure 1b results in a better solution than the initial configuration of Figure 1a.

Since the BCP has been recently proposed, it has not been widely studied yet. The first approach for solving the BCP is a fast heuristic algorithm [16], while the first exact algorithm is a binary integer programming model, which is solved with GAMS and CPLEX [17]. The first metaheuristic approach for the BCP is a classical Genetic Algorithm [18]. Then, the same authors developed several bioinspired algorithms to further improve those results [2], although the results obtained were not satisfactory. In particular, a new Genetic Algorithm (GA), a Simulated Annealing (SA) algorithm, and an Artificial Bee Colony (ABC) algorithm are proposed. Additionally, a 0–1 integer model for the BCP is presented for solving small instances to verify the quality of the heuristic proposals. The detailed comparison provided by the authors shows that the best method in the literature for the BCP is Simulated Annealing.

In this paper, we propose a novel approach based on the Variable Neighborhood Search (VNS) methodology [19,20] to deal with the BCP. First of all, we introduce an extremely efficient strategy (in both computing time and memory requirements) to evaluate the objective function. Then, we propose three different greedy constructive procedures to start the search from promising regions of the search space. Additionally, we define three different neighborhood structures and three local search methods (each one based on a different neighborhood). Finally, all these strategies are embedded within three VNS variants (i.e., Basic VNS, Variable Neighborhood Descent, and General VNS).

The main contributions of this work are:

- A new dynamic programming algorithm for evaluating the objective function value is proposed. This new method leverages the Least Recently Used cache structure to drastically reduce the complexity of the objective function evaluation, thus reducing the computational effort.
- Three Variable Neighborhood Search variants are proposed for analyzing the impact of intensification and diversification in the context of the Band Collocation Problem.
- Three constructive procedures are presented, each one of them using different properties of the solution structure to generate initial solutions.
- Three neighborhoods are defined, which allow us to explore the solution space through different approaches.

The remainder of the paper is structured as follows: Section 2 describes the optimization proposed to increase the performance of the objective function evaluation. Section 3 presents the algorithmic proposal of this work. Section 4 is devoted to selecting the best configuration for the proposed algorithm and analyzes the results obtained when comparing it with the best previous method found in the state of the art. Finally, Section 5 draws some conclusions derived from the research.

2. Evaluation of the Objective Function

Given a solution of the BCP, representing a permutation of wavelengths, selecting the optimal combination of cards that minimizes the cost associated with that permutation is a highly computationally demanding task. Specifically, it is necessary to test every possible combination of cards and select the one with the minimum cost. Indeed, it is mandatory to evaluate all card combinations since, otherwise, high-quality solutions or even the optimum can be missed.

In order to reduce the computational effort, the author of [21] proposes a Dynamic Programming (DP) algorithm that memorizes the already explored solutions to reduce the computing time for finding the minimum cost. In particular, each column is considered as a subproblem and solved with the DP method, memorizing the solutions found during the search. The complexity of the method is

.
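The column-wise evaluation described above can be illustrated with a minimal sketch. It is not the algorithm of [21] nor the implementation used in this work; it assumes that bands may start at any row, that bands in the same column do not overlap, that a band may cover rows whose data is not requested, and that `costs[k]` (a hypothetical layout) holds the cost of a band of length $2^k$. The names `column_cost` and `solution_cost` are illustrative.

```python
def column_cost(column, costs):
    """Minimum cost of covering every 1 of a single column with bands.

    column: sequence of 0/1 values, one per row in the current row order.
    costs:  costs[k] is the cost of a band of length 2**k (assumed layout).
    """
    m = len(column)
    best = [0] * (m + 1)  # best[i]: minimum cost of covering rows i..m-1
    for i in range(m - 1, -1, -1):
        # A row holding 0 may be left uncovered at no cost.
        options = [best[i + 1]] if column[i] == 0 else []
        for k, cost in enumerate(costs):
            length = 2 ** k
            if i + length <= m:  # the band must fit inside the column
                options.append(cost + best[i + length])
        best[i] = min(options)
    return best[0]


def solution_cost(matrix, order, costs):
    """Sum of the column costs after permuting the rows of matrix by order."""
    n = len(matrix[0])
    return sum(
        column_cost([matrix[row][j] for row in order], costs) for j in range(n)
    )


# Toy instance: 4 wavelengths (rows), 2 target stations (columns).
matrix = [[1, 0], [0, 1], [1, 0], [0, 1]]
costs = [10, 15, 25]  # hypothetical costs for bands of length 1, 2 and 4
initial = solution_cost(matrix, [0, 1, 2, 3], costs)
reordered = solution_cost(matrix, [0, 2, 1, 3], costs)
```

In this toy instance, placing the two wavelengths requested by the same station in adjacent rows lets a single band of length 2 replace two bands of length 1 in each column.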

In this work, we propose an improvement to this evaluation by increasing the number of elements memorized during the DP, which reduces the total computing time. However, this behavior leads the procedure to require a large amount of memory (more than 32 GB of RAM), and such hardware is not generally available. Therefore, we propose a new memory structure based on the Least Recently Used (LRU) algorithm, which allows low-overhead, high-performance buffer replacement [22]. This optimization strategy keeps in memory just the strictly necessary data, drastically reducing the required memory. It is also fully scalable, adapting the memory requirements to the memory available in the computer.

The algorithm stores the memorized information (in the Dynamic Programming procedure) in a table (implemented with a hash map). Each table entry additionally stores references to the entries used immediately less and more recently than itself. Therefore, when the table is full and a new element must be stored, the algorithm replaces the least recently used element. If the table is rather small, the cache will be updated frequently, degrading the performance of the objective function evaluation (i.e., the larger the table, the faster the algorithm).

Figure 2 shows a graphical example of the LRU cache structure.

The structure depicted in the upper side of the figure represents the table where the keys are stored. Similarly, the lower side depicts a doubly linked list, which stores the values in ascending order with respect to the last time they were accessed. In this example, three keys are linked with their corresponding entries in the list. For the sake of clarity, we have highlighted in grey these pairs of keys and values. Notice that, using the appropriate data structures, the complexity of inserting and deleting an element in the table is $O(1)$.
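The cache structure described above can be sketched in a few lines. This is an illustrative example rather than the implementation used in this work (which is written in Java); it relies on Python's `collections.OrderedDict`, which keeps its keys in an updatable order, and the class name `LRUCache` and its methods are assumptions made for the example.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity table that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._entries = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        """Return the stored value, or None when the key is not cached."""
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, key, value):
        """Store a value, evicting the least recently used entry if full."""
        if key in self._entries:
            self._entries.move_to_end(key)
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # drop the oldest entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used key
cache.put("c", 3)  # the table is full, so "b" is evicted
```

Both `get` and `put` run in constant expected time, which matches the complexity stated above. The capacity is the only parameter to tune: a larger table retains more memorized subproblems at the price of more memory.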

3. Variable Neighborhood Search

Variable Neighborhood Search (VNS) is a metaheuristic [23] that was originally proposed as a general framework for solving hard optimization problems. The main contribution of this methodology is to consider several neighborhoods during the search and to perform systematic changes in the neighborhood structures. Although it was originally presented as a simple metaheuristic, VNS has evolved considerably, resulting in several extensions and variants: Basic VNS, Reduced VNS, Variable Neighborhood Descent, General VNS, Skewed VNS, Variable Neighborhood Decomposition Search, or Variable Formulation Search, among others. See [19,20,24] for a deep analysis of each variant. In this work, we propose a comparison among the most widespread variants of VNS: Basic Variable Neighborhood Search (BVNS), Variable Neighborhood Descent (VND), and General Variable Neighborhood Search (GVNS).

3.1. Basic VNS

This variant combines deterministic and random changes of neighborhood structures in order to find a balance between diversification and intensification as presented in Algorithm 1.

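| Algorithm 1 BVNS($A$, $k_{\max}$) |
| --- |
| 1: $\pi \leftarrow \text{Constructive}(A)$ |
| 2: $\pi \leftarrow \text{LocalSearch}(\pi)$ |
| 3: $k \leftarrow 1$ |
| 4: while $k \le k_{\max}$ do |
| 5: $\pi' \leftarrow \text{Shake}(\pi, k)$ |
| 6: $\pi'' \leftarrow \text{LocalSearch}(\pi')$ |
| 7: $(\pi, k) \leftarrow \text{NeighborhoodChange}(\pi, \pi'', k)$ |
| 8: end while |
| 9: return $\pi$ |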

The algorithm receives as input parameters the matrix $A$ and the largest neighborhood to be explored, $k_{\max}$. In step 1, an initial solution $\pi$ is generated by considering one of the constructive procedures presented in Section 3.4. Then, the solution is locally improved with one of the local search methods described in Section 3.5 (step 2). Starting from the first predefined neighborhood (step 3), BVNS iterates until reaching the maximum considered neighborhood $k_{\max}$ (steps 4–8). For each iteration, the incumbent solution is perturbed with the shake method (step 5). This method is designed to escape from local optima by randomly exchanging the position of $k$ wavelengths, generating a solution $\pi'$ in the neighborhood under exploration. The local search method is then responsible for finding a local optimum $\pi''$ in the current neighborhood with respect to the perturbed solution $\pi'$ (step 6). Finally, the neighborhood change method selects the next neighborhood to be explored (step 7). In particular, if $\pi''$ outperforms $\pi$ in terms of the objective function value, then it is updated (i.e., $\pi \leftarrow \pi''$), and the search starts again from the first neighborhood (i.e., $k \leftarrow 1$). Otherwise, the search continues in the next neighborhood (i.e., $k \leftarrow k + 1$). The algorithm stops when reaching the largest considered neighborhood $k_{\max}$, returning the best solution found during the search (step 9).
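The loop described above can be sketched as follows. This is a generic illustration and not the authors' implementation: the shake is assumed to perform $k$ random position swaps (one possible reading of "exchanging the position of $k$ wavelengths"), and the objective and local search are passed in as callables. The toy objective `misplaced` and the identity local search are placeholders, not part of the BCP.

```python
import random


def shake(solution, k, rng):
    """Perturb a permutation with k random swaps (assumed interpretation)."""
    perturbed = list(solution)
    for _ in range(k):
        i, j = rng.sample(range(len(perturbed)), 2)
        perturbed[i], perturbed[j] = perturbed[j], perturbed[i]
    return perturbed


def bvns(initial, objective, local_search, k_max, rng):
    """Basic VNS for a minimization problem over permutations."""
    incumbent = local_search(initial)
    k = 1
    while k <= k_max:
        candidate = local_search(shake(incumbent, k, rng))
        if objective(candidate) < objective(incumbent):
            incumbent, k = candidate, 1  # improvement: restart from k = 1
        else:
            k += 1                       # no improvement: next neighborhood
    return incumbent


def misplaced(solution):
    """Toy objective: number of elements not located at their own index."""
    return sum(1 for position, value in enumerate(solution) if position != value)


start = [3, 1, 0, 2]
result = bvns(start, misplaced, lambda s: list(s), k_max=3, rng=random.Random(0))
```

Because the incumbent is replaced only by strictly better candidates, the returned solution is never worse than the locally improved starting point. Any improvement procedure can be supplied as `local_search`.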

3.2. Variable Neighborhood Descent

This variant performs the changes in the neighborhood structure in a totally deterministic manner. Specifically, the diversification part of VNS is completely removed, focusing on the intensification phase. Algorithm 2 shows the pseudocode of the VND algorithm.

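| Algorithm 2 VND($\pi$, $\mathcal{N} = \{N_1, \dots, N_{k_{\max}}\}$) |
| --- |
| 1: $k \leftarrow 1$ |
| 2: while $k \le k_{\max}$ do |
| 3: $\pi' \leftarrow \text{LocalSearch}(\pi, N_k)$ |
| 4: $(\pi, k) \leftarrow \text{NeighborhoodChange}(\pi, \pi', k)$ |
| 5: end while |
| 6: return $\pi$ |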

The algorithm receives an input solution $\pi$ and a set of neighborhoods $\mathcal{N} = \{N_1, \dots, N_{k_{\max}}\}$ to be explored. The proposed VND follows the sequential Basic VND scheme described in [25]. Starting from the first neighborhood (step 1), the algorithm explores $\mathcal{N}$ following a sequential ordering. In particular, for each neighborhood $N_k$, VND finds a local optimum with respect to the neighborhood under exploration (step 3). Then, the neighborhood change method (step 4) returns to the first predefined neighborhood if an improvement is found ($k \leftarrow 1$), otherwise continuing with the next neighborhood ($k \leftarrow k + 1$). The method ends when no improvement is found in any of the considered neighborhoods, returning the best solution found.

Notice that the final solution is a local minimum with respect to all the considered neighborhoods in $\mathcal{N}$. Therefore, reaching a global optimum is more probable than when considering a single neighborhood structure.
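The sequential scheme described above can be sketched as a short generic routine. This is an illustrative example under stated assumptions, not the authors' code: each element of `local_searches` is assumed to be a callable that returns a local optimum of one neighborhood, and the two toy procedures used in the example (`reverse_all` and `sort_prefix`) are hypothetical stand-ins for real local searches.

```python
def vnd(solution, objective, local_searches):
    """Sequential VND: restart from the first neighborhood on improvement."""
    current = solution
    k = 0
    while k < len(local_searches):
        candidate = local_searches[k](current)
        if objective(candidate) < objective(current):
            current, k = candidate, 0  # improvement: back to the first one
        else:
            k += 1                     # no improvement: try the next one
    return current


def misplaced(solution):
    """Toy objective: number of elements not located at their own index."""
    return sum(1 for position, value in enumerate(solution) if position != value)


def reverse_all(solution):
    """Toy improvement procedure: reverse the whole permutation."""
    return list(reversed(solution))


def sort_prefix(solution):
    """Toy improvement procedure: sort the first two elements."""
    return sorted(solution[:2]) + list(solution[2:])


refined = vnd([3, 2, 1, 0], misplaced, [sort_prefix, reverse_all])
```

The loop ends only after every procedure in the list fails to improve the current solution, which is the termination condition stated above.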

3.3. General VNS

This variant combines BVNS and VND with the aim of balancing intensification and diversification for providing even better solutions. Specifically, the General VNS replaces the local search phase of BVNS with a complete VND algorithm. This modification allows GVNS to find better solutions in the improvement phase, thus increasing the probability of reaching a global optimum.

The main drawback of GVNS lies in the computing time required by the VND phase, which can eventually lead to a very computationally demanding algorithm. However, the efficient implementation of the objective function evaluation described in Section 2 counteracts that disadvantage of GVNS (see Section 4 for a thorough analysis of the performance).

For the sake of brevity, we do not provide the pseudocode for this variant since it consists of replacing step 6 from Algorithm 1 with the following statement: $\pi'' \leftarrow \text{VND}(\pi', \mathcal{N})$, with VND the variant described in Algorithm 2. Furthermore, the input parameter of GVNS is the set of neighborhoods $\mathcal{N}$ instead of the maximum neighborhood to be explored $k_{\max}$.

3.4. Constructive Procedure

In the context of VNS, the initial solution can be generated at random, but several recent works have concluded that using a high-quality initial solution leads the algorithm to converge faster than when starting from a random initial solution (see [26,27,28,29,30,31] for some successful results).

This work presents three different greedy constructive procedures that are able to find a high-quality solution in negligible computing times. Section 4 will discuss the results obtained with each constructive procedure to select the most adequate one for the complete VNS framework. The three constructive procedures follow the same greedy scheme but vary the greedy function used to select the next wavelength to be included in the solution.

The first constructive procedure is based on the idea that wavelengths that are required in similar target stations should be located consecutively. In particular, the method starts from an empty solution and builds a solution starting from a given wavelength. Once the first wavelength has been selected, the next wavelength to be included is the one with the maximum number of target stations in common with the previous one. Let $\pi$ be a partial solution of the BCP, $\pi_i$ be the wavelength located in row $i$, and $j$ be a target station. The score of a candidate wavelength with respect to $j$ indicates whether both the candidate and the last wavelength included in $\pi$ must be transmitted to $j$, recalling that $a_{ij} = 1$ if and only if the wavelength $i$ must be transmitted to target station $j$. The greedy function value of a candidate wavelength is the sum of its scores over all the target stations, and the next wavelength to be included in the solution under construction is the candidate with the maximum greedy function value.

The method iterates until all the wavelengths have been included in the solution. Since the first wavelength selected is a key part of the constructive procedure, a solution is generated starting from each available wavelength (i.e., $m$ solutions are constructed), and the best one in terms of objective function value is returned.
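The greedy scheme of the first constructive procedure can be sketched as follows. This is a minimal illustration based only on the textual description above; the function names, the tie-breaking rule (lowest wavelength index first), and the `evaluate` callable standing in for the objective function are assumptions.

```python
def common_stations(matrix, first, second):
    """Number of target stations requested by both wavelengths (rows)."""
    return sum(1 for a, b in zip(matrix[first], matrix[second]) if a == 1 and b == 1)


def greedy_order(matrix, start):
    """Build a row order by repeatedly appending the wavelength that shares
    the most target stations with the last wavelength added."""
    order = [start]
    remaining = set(range(len(matrix))) - {start}
    while remaining:
        last = order[-1]
        # sorted() makes ties deterministic: the lowest index wins (assumption).
        chosen = max(sorted(remaining), key=lambda w: common_stations(matrix, last, w))
        order.append(chosen)
        remaining.remove(chosen)
    return order


def construct(matrix, evaluate):
    """Try every wavelength as starting point and keep the cheapest order."""
    candidates = [greedy_order(matrix, start) for start in range(len(matrix))]
    return min(candidates, key=evaluate)


# Toy instance: 4 wavelengths (rows), 3 target stations (columns).
matrix = [[1, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1]]
order_from_first = greedy_order(matrix, 0)
```

Note that the objective function is evaluated only once per constructed solution, inside `construct`, and never during the greedy selection itself.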

The second constructive method modifies the definition of the score. In this case, the score evaluates the number of target stations for which the wavelength under consideration is not necessary. The greedy function value and the next wavelength to be included are computed in the same way as in the first method, but replacing the original score with this new one.

Finally, the last constructive method considers both the number of target stations that two wavelengths have in common and those for which the wavelength is not required, combining them in a single score. The greedy function value and the next wavelength to be included are computed similarly. Notice that the proposed constructive procedures are extremely fast since they do not need to evaluate the objective function during the construction, but require only a single evaluation after constructing the solution.

3.5. Neighborhood Structures

A neighborhood $N$ for a given solution $\pi$ is defined as the set of solutions that can be reached from $\pi$ by performing a single movement. Therefore, before defining a neighborhood structure it is necessary to introduce the movements that are considered in this work.

The first movement consists in exchanging the positions of two different wavelengths. Given a solution $\pi$, the swap move results in a new solution in which the two selected wavelengths have exchanged their original positions.

The second movement is based on insertions. In this case, a wavelength is extracted from its original position in solution $\pi$ and inserted in the position of a second wavelength, displacing the wavelengths located in the range given by both positions.

The third and last movement is based on the 2-opt move, which is a widely used operator in vehicle routing problems [32]. In that context, given a route, the 2-opt move reverses a certain part of the route. The adaptation to the BCP is performed as follows. Given a solution $\pi$, the movement reverses all the wavelengths located between two given positions, resulting in a new solution $\pi'$.
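The three movements described above can be sketched on a permutation stored as a list. This is an illustrative example only: it works with 0-based positions rather than wavelength identifiers, and the function names are assumptions made for the example.

```python
def swap(solution, i, j):
    """Exchange the elements located at positions i and j."""
    result = list(solution)
    result[i], result[j] = result[j], result[i]
    return result


def insert(solution, i, j):
    """Remove the element at position i and reinsert it at position j,
    shifting the elements in between by one place."""
    result = list(solution)
    result.insert(j, result.pop(i))
    return result


def two_opt(solution, i, j):
    """Reverse the segment between positions i and j (both included)."""
    low, high = min(i, j), max(i, j)
    result = list(solution)
    result[low:high + 1] = reversed(result[low:high + 1])
    return result


base = [0, 1, 2, 3, 4]
swapped = swap(base, 1, 3)       # [0, 3, 2, 1, 4]
moved = insert(base, 1, 3)       # [0, 2, 3, 1, 4]
reversed_part = two_opt(base, 1, 4)  # [0, 4, 3, 2, 1]
```

Each function returns a new list and leaves its input untouched, which keeps the incumbent solution intact while neighbors are being evaluated.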

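Once the movements have been defined, we can now define the neighborhoods considered in this work. Specifically, we define three neighborhoods, based on swaps, insertions, and 2-opt, respectively. Each neighborhood contains all the solutions that can be reached from a given solution $\pi$ by performing a single movement of the corresponding type.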

For each neighborhood structure, we propose a local search method, which locally improves the input solution. A local search is defined by the neighborhood to be explored and the order in which the neighbor solutions are traversed. In particular, we propose three local search methods, one for each neighborhood. All of them follow the same ordering when exploring the associated neighborhood. The search starts by performing the movement over the wavelengths located at the first and second positions of the solution, continuing in ascending order until the complete neighborhood is explored. It is worth mentioning that the three proposed local search methods follow a first improvement approach, which usually leads to better results [33]. In this scheme, the first movement that leads to a better solution is performed, restarting the search. The search stops when no improvement is found after exploring the complete neighborhood.
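The first improvement scheme described above can be sketched as follows. This is a generic illustration rather than the authors' implementation: the neighborhood is supplied as a generator, only the swap neighborhood is shown, and the toy objective `misplaced` is a placeholder for the BCP objective function.

```python
from itertools import combinations


def swap_neighbors(solution):
    """Yield every solution reachable with one swap, scanning position
    pairs in ascending order: (0, 1), (0, 2), ..., (1, 2), ..."""
    for i, j in combinations(range(len(solution)), 2):
        neighbor = list(solution)
        neighbor[i], neighbor[j] = neighbor[j], neighbor[i]
        yield neighbor


def first_improvement(solution, objective, neighbors):
    """Apply the first improving move found and restart the scan, until a
    complete pass over the neighborhood finds no improvement."""
    current = list(solution)
    current_value = objective(current)
    improved = True
    while improved:
        improved = False
        for neighbor in neighbors(current):
            value = objective(neighbor)
            if value < current_value:
                current, current_value = neighbor, value
                improved = True
                break  # restart the exploration from the new solution
    return current


def misplaced(solution):
    """Toy objective: number of elements not located at their own index."""
    return sum(1 for position, value in enumerate(solution) if position != value)


local_optimum = first_improvement([2, 0, 1], misplaced, swap_neighbors)
```

Swapping in a different generator (for insertions or segment reversals) yields the other two local searches without changing `first_improvement`.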

4. Computational Results

This section has two main objectives: to select the best combination of parameter values for the proposed algorithms, and to perform an in-depth comparison of the proposed algorithm and the best method found in the state of the art. All the algorithms have been implemented in Java 11 and the experiments have been performed on an AMD Ryzen 5 3600 (2.2 GHz) with 16 GB RAM.

Since the BCP has been recently proposed, there are not many research works on this problem. In particular, the best method found in the literature is the Simulated Annealing (SA) algorithm proposed in [2], which is able to outperform the results obtained with the Genetic Algorithm and the Artificial Bee Colony algorithm also presented in that work. Therefore, we will compare our best proposal with the SA procedure.

In order to have a fair comparison, we have considered the same set of instances as the one used in the previous work. It is called the BPLIB and it is publicly available at http://grafo.etsii.urjc.es/bcp. We select the same set of instances used in [2], which consists of 72 matrices with $m$ ranging from 12 to 96 and $n$ ranging from 6 to 28. In these instances the optimal value is not known and the best known value is the one obtained in [2].

This section is divided into two types of experiments. On the one hand, the preliminary experimentation is designed to find the best values for the input parameters of the proposed algorithms. In particular, it is required to perform the following analysis:

- Selection of the best constructive procedure among the three presented in Section 3.4.
- Selection of the best local search method among the three presented in Section 3.5.
- Selection of the neighborhood exploration order within VND, testing all possibilities.
- Selection of the best $k_{\max}$ value for the Basic VNS algorithm.
- Selection of the best $k_{\max}$ value for the General VNS algorithm.
- Selection of the best VNS algorithm for the BCP among Basic VNS, VND, and General VNS.

On the other hand, the competitive testing aims to analyze the performance of the final version of the algorithm by comparing its results with the ones reported for the best method found in the literature. A representative subset of 15 out of the 72 instances (20%) is used in the preliminary experimentation to avoid overfitting. Then, in the competitive testing, the complete set of 72 instances is considered.

The metrics reported in all the experiments are: Avg., the average objective function value; Time(s), the computing time required by the algorithm to finish in seconds; Dev(%), the average deviation with respect to the best solution found in the experiment; and #Best, the number of times that the algorithm reaches the best solution of the experiment. For the sake of clarity, the best value of each metric is highlighted with bold font.
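The metrics listed above can be computed as in the following sketch. It is an illustration under stated assumptions: the deviation is taken as the usual relative gap $100 \cdot (v - b) / b$ between a value $v$ and the best value $b$ of the experiment for that instance (the text does not give the exact formula), the best values are assumed to be nonzero, and the function name and data layout are hypothetical.

```python
def summarize(results):
    """Compute Avg., Dev(%) and #Best for each algorithm.

    results: {algorithm name: [objective value per instance]}, where all
    lists follow the same instance order and lower values are better.
    """
    names = list(results)
    instances = len(results[names[0]])
    # Best value found in the experiment for each instance.
    best = [min(results[name][i] for name in names) for i in range(instances)]
    summary = {}
    for name in names:
        values = results[name]
        deviations = [100.0 * (v - b) / b for v, b in zip(values, best)]
        summary[name] = {
            "avg": sum(values) / instances,
            "dev": sum(deviations) / instances,
            "best": sum(1 for v, b in zip(values, best) if v == b),
        }
    return summary


# Made-up values for two hypothetical algorithms on two instances.
table = summarize({"A": [100, 200], "B": [110, 200]})
```

With these made-up values, algorithm "A" matches the best solution in both instances, while "B" matches it in one and deviates by 10% in the other, giving an average deviation of 5%.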

4.1. Preliminary Experimentation

The first preliminary experiment is intended to select the best constructive procedure among the ones presented in Section 3.4. Table 1 shows the results obtained when executing each constructive procedure over the preliminary set of instances.

As it can be seen, the computing time is negligible in all cases, being considerably smaller than 1 s. Hence, in terms of quality, emerges as the best constructive method with the largest number of best solutions found, missing the best solution in only 5 out of 15 instances. Notice that, in those cases in which the best solution is not found, remains close to it, as it can be seen in the obtained average deviation of 0.12%. From this experiment we can derive that it is interesting to locate the wavelengths that must be delivered to the same target stations in close rows. Therefore, we select as the best constructive method and it will be used in the final competitive testing.

The next experiment is designed to evaluate the influence of each local search method when coupling it with the best constructive procedure. Table 2 shows the results obtained in this experiment.

It is worth mentioning that the efficient evaluation of the objective function allows the local search to be executed also in small computing times. In this case, the superiority of is clear, reaching the best solution in every instance, with a deviation of 0.00%. Then, is the local search selected for BVNS.

The aim of the third experiment is to find the best ordering of the neighborhood structures in the context of VND. Although it is usually recommended to explore the neighborhoods from the smallest to the largest [34], we perform an empirical evaluation of all possibilities in order to select the best one. In this experiment, each variant of VND has been applied to the solution generated by the best constructive procedure. Figure 3 shows a graph comparing the computing time (X-axis) with the average deviation (Y-axis) of the six possibilities for the local search order within VND.

As it can be derived from the graph, the two best options are and , with the same average deviation of 0.06%. Since the computing time of is twice the time of , we select the last one for the VND and GVNS algorithm.

The next experiment analyzes the influence of the parameter $k_{\max}$ in the context of BVNS. The VNS literature [19] recommends using small values for this parameter. This is mainly because large values of $k_{\max}$ will explore solutions that are very different from the incumbent one, which is similar to constructing an entirely new solution. Therefore, only small values are considered in this experiment. Notice that, to favor scalability, the value of the parameter is dependent on the number of wavelengths $m$ of the input instance. Table 3 shows the results obtained in this experiment.

As it can be observed in this experiment, the quality of the solutions increase with the value of . However, when reaching , the search seems to be stagnated, but increasing the computing time required. Therefore, we select for the final BVNS algorithm.

The next experiment is designed to identify the best $k_{\max}$ value for the GVNS algorithm. Following the same reasoning as in the previous experiment, we have tested the same values. Table 4 shows the results of this experiment.

Again, the larger the value of , the better the quality. However, the differences in quality (deviation and number of best solutions) between and are negligible, while the latter requires a larger computing time. Therefore, we select as the best value for GVNS.

The last preliminary experiment is intended to analyze which VNS variant is the most promising one. Table 5 shows the results obtained by the best configuration of each VNS variant: BVNS, VND, and GVNS.

As it can be derived from the data, the worst variant is BVNS, with a deviation of 2.04%. However, it is the fastest algorithm, so it would be an interesting selection when requiring small computing times. Analyzing the results of VND and GVNS, the former is considerably faster, as expected, since GVNS executes a complete VND in each iteration. However, it is worth mentioning that VND is able to reach a small deviation of 0.50%, which makes it a relevant candidate if the computing time is one of the main requisites, although it only reaches 2 out of 15 best solutions. Finally, GVNS emerges as the best variant reaching all the best solutions found, requiring a larger, but reasonable, computing time.

4.2. Competitive Testing

Once the parameters of the proposed algorithm have been tested, this competitive testing is devoted to comparing the best variant, which is GVNS, with the best previous method found in the state of the art, which is based on a Simulated Annealing (SA) framework. In this case, the experiment is performed over the complete set of 72 instances. The results of the previous method are directly imported from the original work [2]. Table 6 shows the summary table with the results obtained by both algorithms. To facilitate future comparisons, we report in Appendix A (see Table A1) individual results per instance.

In order to have a fair comparison, we have followed the same experimental methodology as the one used in the previous paper. In particular, the algorithm has been executed 50 independent times, reporting the average objective function value and time in the first main row, and the best values in the second main row.

If we firstly analyze the average results, we can clearly see that GVNS is able to obtain the largest number of best solutions (65 vs. 7) and a smaller deviation (0.04% vs. 1.10%). This small deviation indicates that, in the seven instances in which GVNS does not reach the best solution, it remains very close to it. Regarding the best results obtained with both algorithms, GVNS is still the best option, with the smallest deviation (0.19% vs. 0.57%) and the largest number of best solutions found (44 vs. 38). Notice that the computing times are equivalent in both cases. It is worth mentioning that it is always a difficult task to compare execution times of different algorithms implemented in different programming languages but, in this case, the execution environment could be considered equivalent.

In order to confirm that there are statistically significant differences between both algorithms, we have conducted a non-parametric pairwise Wilcoxon test. The resulting p-value smaller than 0.05 confirms the superiority of our proposal.

5. Conclusions

This paper presents three Variable Neighborhood Search variants for dealing with the Band Collocation Problem. This problem arose in the context of telecommunication networks to solve some concerns with the original Bandpass Problem with respect to its practical application.

The evaluation of the objective function is very computationally demanding. The proposed LRU cache optimization method makes it possible to evaluate solutions efficiently, without requiring large computing times. This optimization allows the VNS to perform a deeper analysis of the search space.

Three neighborhood structures are explored, as well as their combination in a Variable Neighborhood Descent scheme. Additionally, a Basic VNS, which considers the best neighborhood structure in its local search phase, is presented, as well as a General VNS algorithm, which increases the diversification of VND. The experimental results show that the combination of several neighborhood structures in the GVNS scheme allows the algorithm to explore a wider portion of the search space, leading to better results.

Finally, the General VNS algorithm is able to outperform the state-of-the-art method, based on Simulated Annealing, in similar computing times, so GVNS emerges as a competitive method for the Band Collocation Problem.