DeepHandsVR: Hand Interface Using Deep Learning in Immersive Virtual Reality

Abstract

1. Introduction

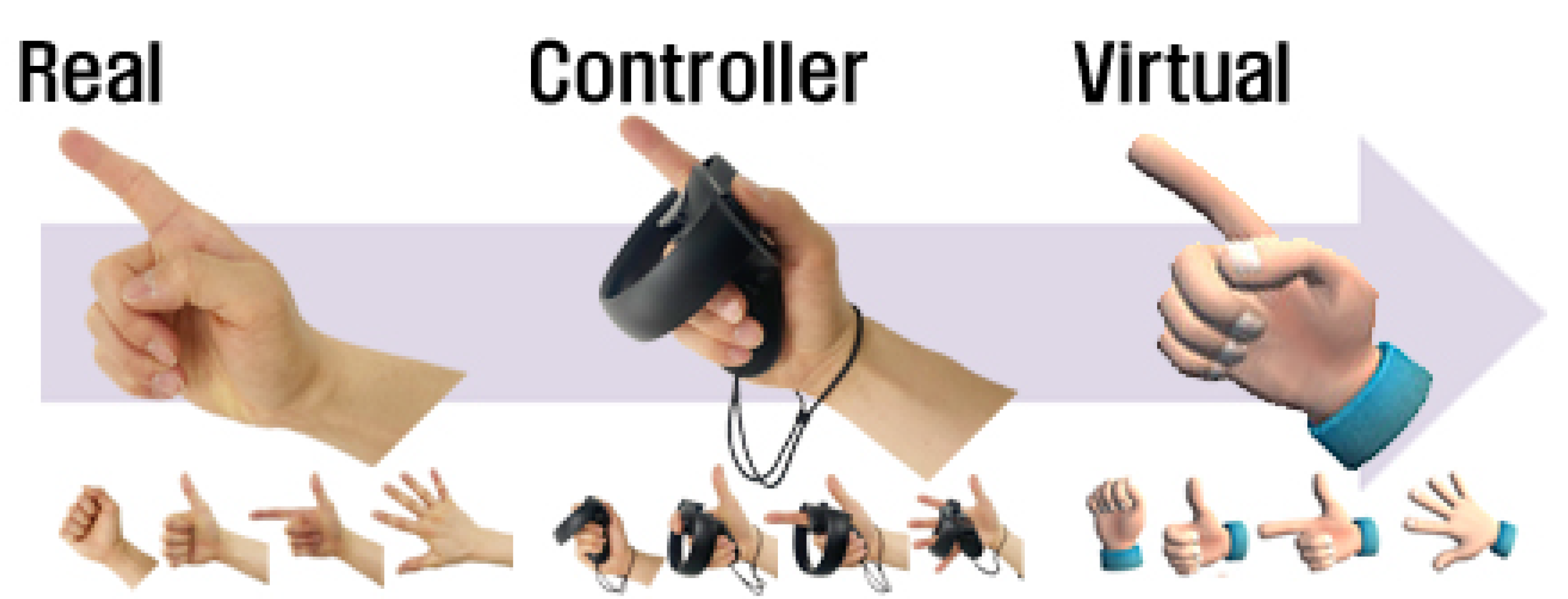

- A controller-based hand interface that directly maps the gestures made with the user's real hands onto virtual hands

- A real-time interface that uses a deep learning model (a convolutional neural network, CNN) to express the gesture-to-action process intuitively, without GUIs

2. Related Work

3. DeepHandsVR

3.1. Real to Virtual Direct Hand Interface

Algorithm 1. Process of the real-to-virtual direct hand interface using a controller.

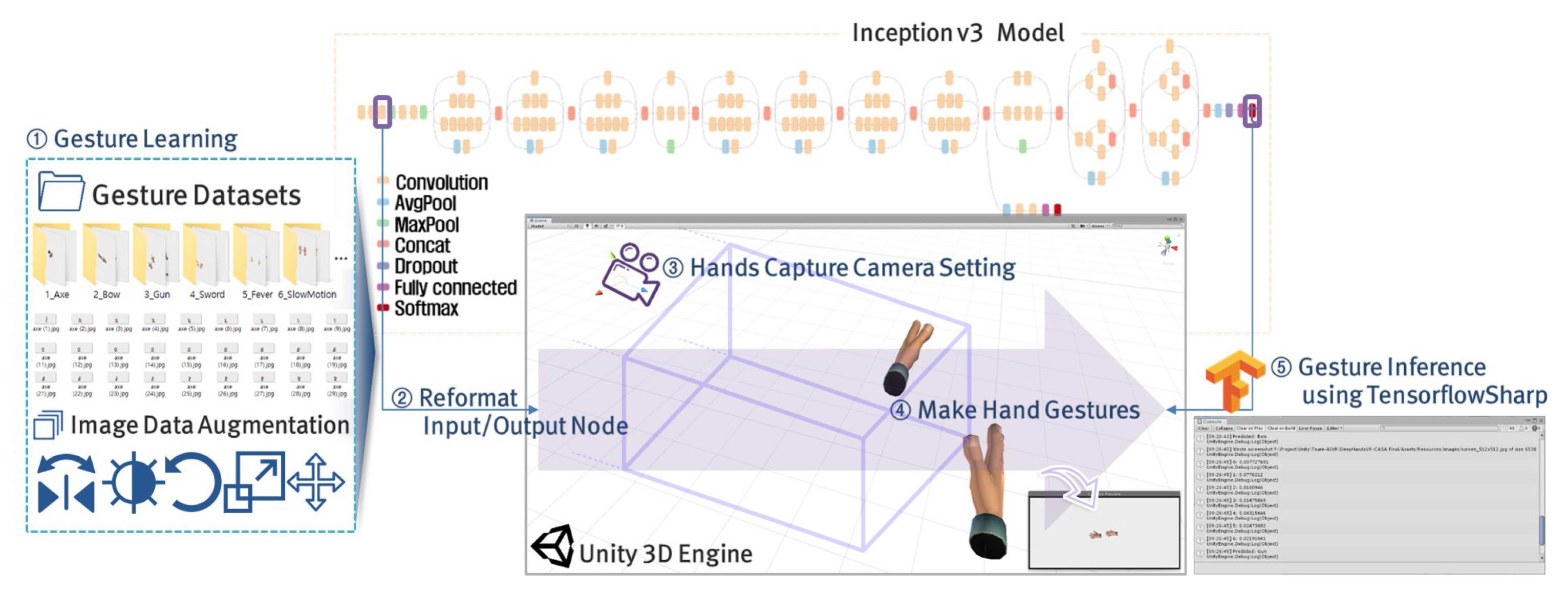
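The body of Algorithm 1 is not reproduced here. As a rough illustration of a real-to-virtual mapping, the sketch below assumes the controller reports an analog index trigger and an analog grip value in [0, 1] plus a capacitive thumb-touch flag, and it bends each virtual finger linearly between an open pose and a fist pose. The `ControllerState` fields, the 0° and 90° limits, and the choice to drive the middle, ring, and little fingers from the grip value are all hypothetical; this is not the authors' algorithm.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical curl limits (degrees) for an open hand and a closed fist.
OPEN_DEG = 0.0
FIST_DEG = 90.0


@dataclass
class ControllerState:
    trigger: float      # analog index trigger, expected in [0, 1] (assumption)
    grip: float         # analog grip button, expected in [0, 1] (assumption)
    thumb_touch: bool   # capacitive touch on the thumb rest (assumption)


def clamp01(x: float) -> float:
    """Clamp noisy sensor readings into [0, 1]."""
    return max(0.0, min(1.0, x))


def curl(t: float) -> float:
    """Linearly interpolate between the open pose and the fist pose."""
    return OPEN_DEG + (FIST_DEG - OPEN_DEG) * clamp01(t)


def finger_curls(state: ControllerState) -> Dict[str, float]:
    """Map one controller sample to per-finger curl angles for the virtual hand."""
    grip_curl = curl(state.grip)
    return {
        "thumb": curl(1.0 if state.thumb_touch else 0.0),
        "index": curl(state.trigger),
        "middle": grip_curl,  # hypothetical: grip drives the remaining fingers
        "ring": grip_curl,
        "little": grip_curl,
    }
```

In a real-time loop, these angles would be recomputed every frame and applied to the virtual hand's finger joints; whether to smooth them between frames is a separate design choice.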

3.2. Gesture-to-Action Real-Time Interface

- (a) Generate and train the graph object (TFGraph), and load the label data.

- (b) Convert the gesture image into the format expected by the configured input node, then feed it to the graph.

- (c) Compute the probability of each label from the values inferred at the output node.
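Steps (b) and (c) can be illustrated with a short sketch. It assumes the gesture image arrives as 8-bit RGB pixels, that the input node expects a batched [1, H, W, 3] float tensor normalized as (value - 128) / 128, and that the output node returns one raw score (logit) per label. None of these details, nor the helper names, the confidence threshold, or the example labels, come from the source. If the trained graph already ends in a softmax layer, step (c) reduces to reading its output directly.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical normalization constants (not stated in the source).
INPUT_MEAN = 128.0
INPUT_STD = 128.0

Pixel = Tuple[int, int, int]


def to_input_tensor(pixels: Sequence[Sequence[Pixel]],
                    mean: float = INPUT_MEAN,
                    std: float = INPUT_STD) -> List[List[List[List[float]]]]:
    """Step (b): turn an H x W grid of 8-bit RGB pixels into a
    [1, H, W, 3] nested-list tensor with normalized float channels."""
    image = [[[(channel - mean) / std for channel in px] for px in row]
             for row in pixels]
    return [image]  # leading batch dimension of size 1


def label_probabilities(logits: Sequence[float],
                        labels: Sequence[str]) -> Dict[str, float]:
    """Step (c): numerically stable softmax over the output-node scores."""
    if len(logits) != len(labels):
        raise ValueError("expected one score per label")
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract max to avoid overflow
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


def recognize(probs: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Return the most probable label, or None if it falls below the
    (hypothetical) confidence threshold."""
    label, p = max(probs.items(), key=lambda item: item[1])
    return label if p >= threshold else None


if __name__ == "__main__":
    labels = ["grab", "pinch", "point"]  # hypothetical gesture labels
    probs = label_probabilities([2.0, 0.5, 0.1], labels)
    print({k: round(v, 3) for k, v in probs.items()})
    print(recognize(probs))
```

The threshold lets the interface ignore ambiguous frames instead of triggering an action on every inference.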

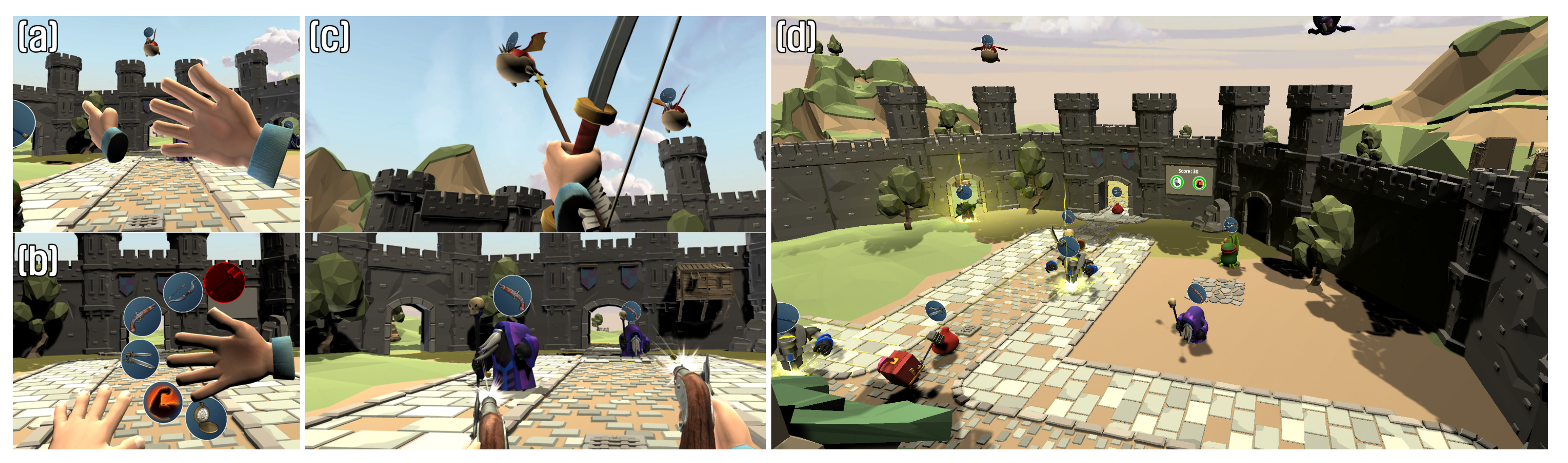

3.3. Immersive Interaction

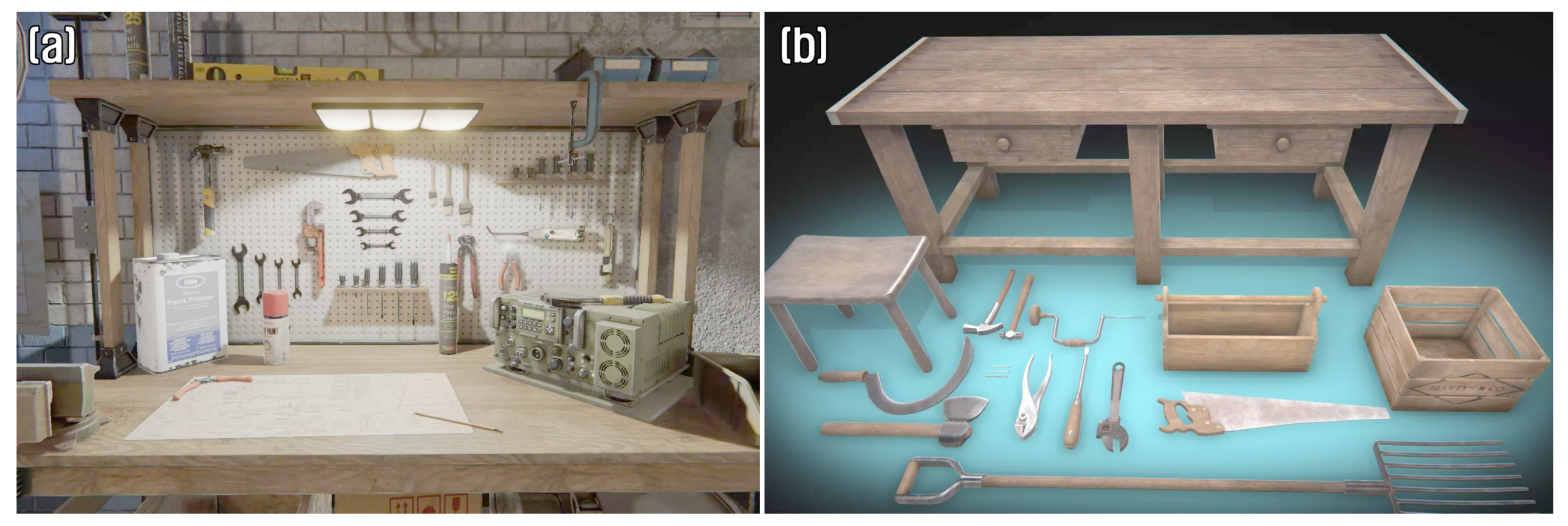

4. Application

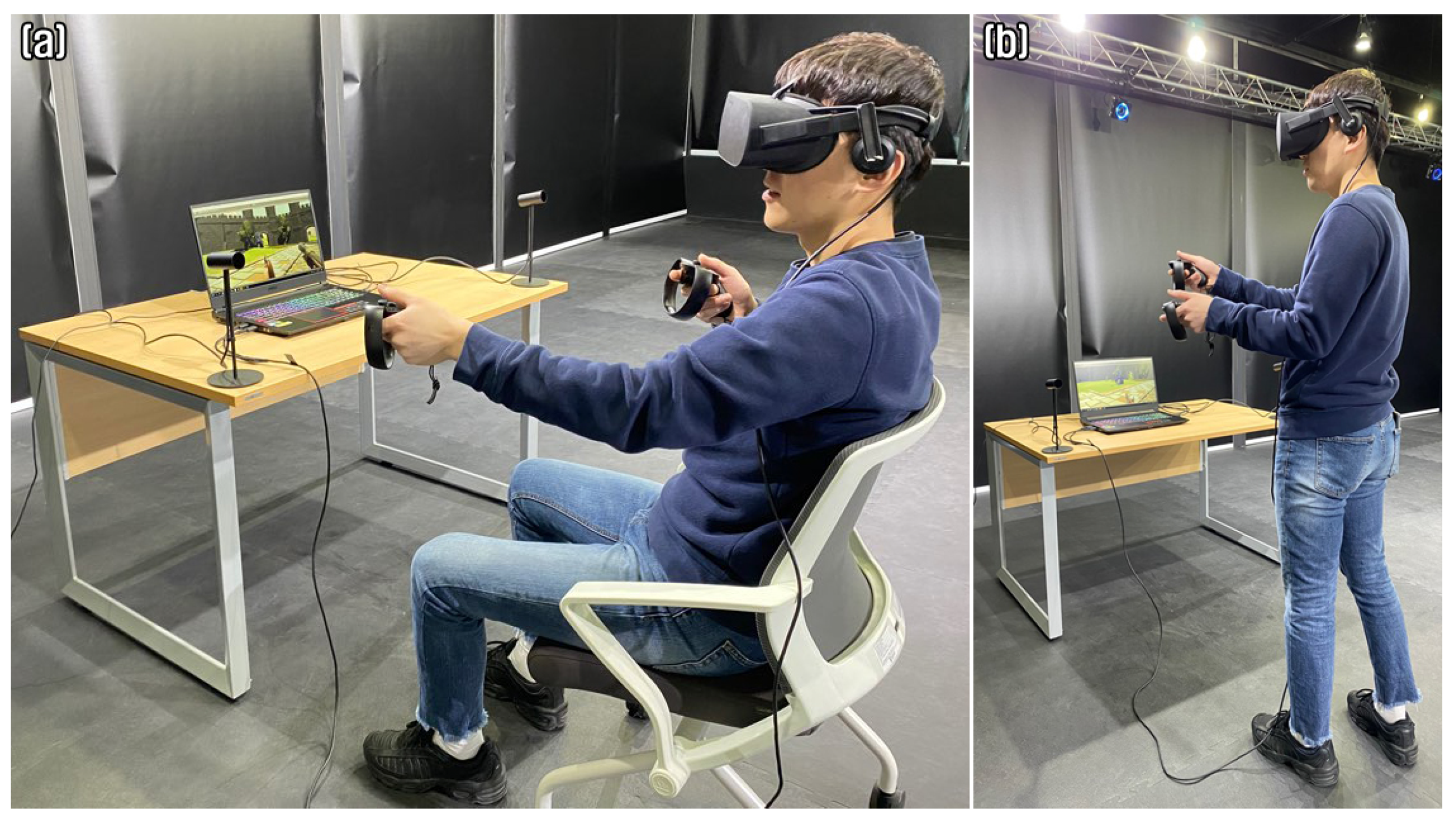

5. Experimental Results and Analysis

6. Limitation and Discussion

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest


| Factor | DeepHandsVR, Mean (SD) | Existing GUI, Mean (SD) | Pairwise comparison |
|---|---|---|---|
| Usefulness | 5.625 (1.048) | 4.610 (1.467) | F(1,30) = 4.761, p < 0.05 * |
| Ease of use | 5.511 (1.291) | 4.614 (1.308) | F(1,30) = 4.512, p < 0.05 * |
| Ease of learning | 5.234 (1.726) | 5.172 (1.374) | F(1,30) = 0.012, p = 0.913 |
| Satisfaction | 6.188 (0.910) | 4.696 (1.667) | F(1,30) = 9.242, p < 0.01 * |

| Factor | DeepHandsVR, Mean (SD) | Existing GUI, Mean (SD) | Pairwise comparison |
|---|---|---|---|
| Total | 6.138 (0.542) | 5.102 (1.108) | F(1,30) = 10.594, p < 0.01 * |
| Realism | 6.308 (0.596) | 5.174 (1.088) | F(1,30) = 12.534, p < 0.001 * |
| Possibility to act | 6.101 (0.631) | 5.109 (1.019) | F(1,30) = 10.438, p < 0.01 * |
| Quality of interface | 5.938 (0.757) | 4.833 (1.958) | F(1,30) = 4.150, p < 0.05 * |
| Possibility to examine | 6.198 (0.757) | 5.115 (0.839) | F(1,30) = 13.779, p < 0.001 * |
| Self-evaluation of performance | 5.813 (1.579) | 5.219 (1.262) | F(1,30) = 1.293, p = 0.264 |
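Both tables report F(1,30). Those degrees of freedom are what a one-way ANOVA yields for two conditions and 32 scores in total (df_between = 2 - 1 = 1, df_within = 32 - 2 = 30). The source does not describe the analysis, so the sketch below only shows how such an F statistic is computed from raw scores. It does not reproduce the authors' analysis, and any scores passed to it here are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = mean([x for g in groups for x in g])
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

With two groups, F equals the square of the pooled-variance two-sample t statistic. The p-value then comes from the F distribution with (1, 30) degrees of freedom, for example via `scipy.stats.f.sf(F, 1, 30)` if SciPy is available.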


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kang, T.; Chae, M.; Seo, E.; Kim, M.; Kim, J. DeepHandsVR: Hand Interface Using Deep Learning in Immersive Virtual Reality. Electronics 2020, 9, 1863. https://doi.org/10.3390/electronics9111863
