Calibration Venus: An Interactive Camera Calibration Method Based on Search Algorithm and Pose Decomposition

Abstract

1. Introduction

2. Basic Theory

2.1. Camera Imaging Principle

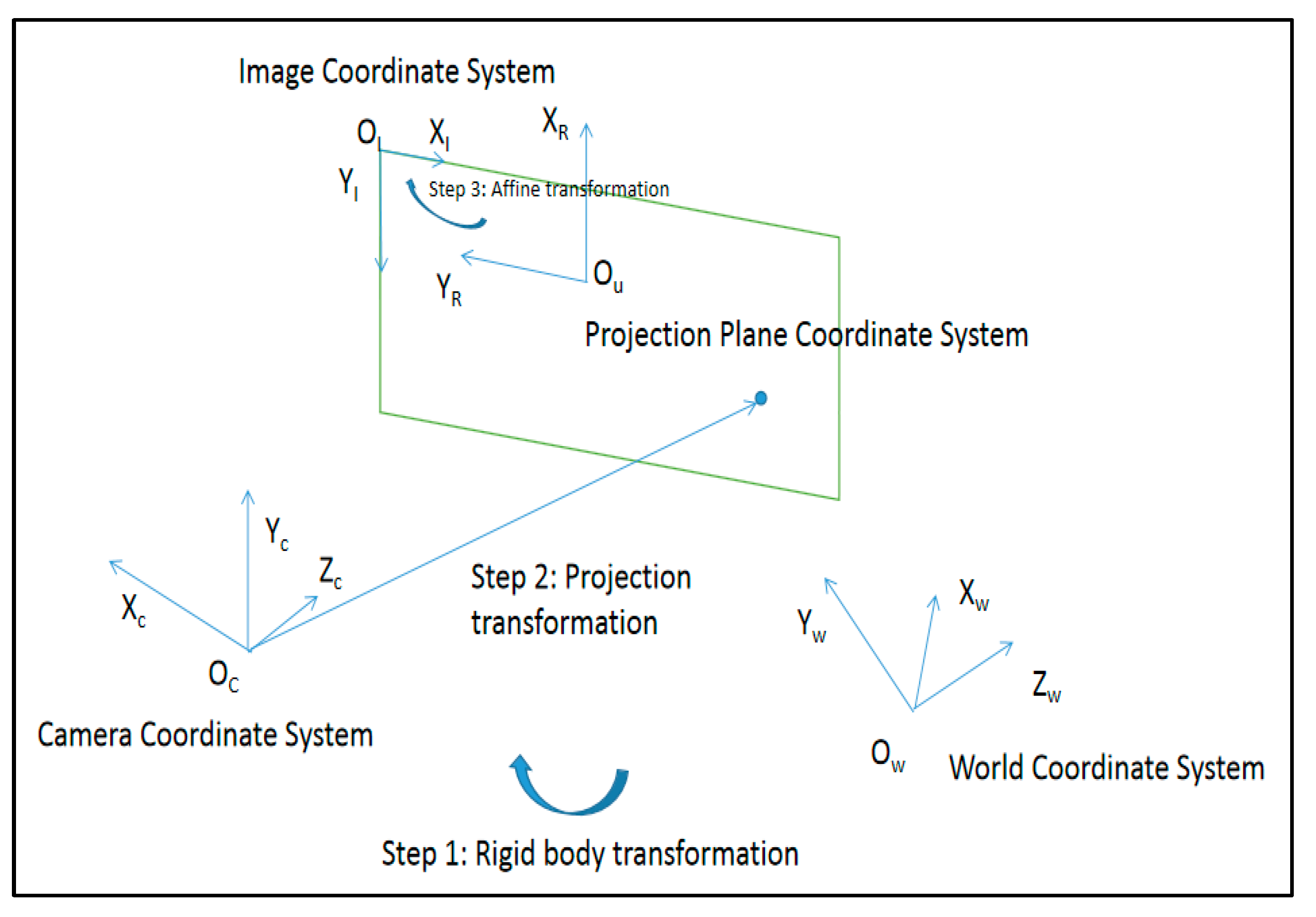

2.1.1. Coordinate System Definition

- World coordinate system: An absolute coordinate system used to measure the position of a camera or object.

- Camera coordinate system: A 3D rectangular coordinate system with the optical center of the camera as the origin and the optical axis as the positive Z-axis. It describes objects as measured from the camera’s own point of view.

- Projection plane coordinate system: A coordinate system with the intersection of the camera’s optical axis and the projection plane as the origin, used to express the physical position of a pixel.

- Image coordinate system: A two-dimensional coordinate system with the upper-left corner of the digital image as the origin.
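The chain of coordinate systems listed above can be illustrated with a minimal pinhole projection sketch. It assumes an ideal, distortion-free camera. The function name `project_point` and all parameter names and values (`f`, `dx`, `dy`, `u0`, `v0`) are hypothetical and chosen only for illustration.

```python
import numpy as np

def project_point(p_world, R, t, f, dx, dy, u0, v0):
    """Map a 3D world point to pixel coordinates (ideal pinhole, no distortion)."""
    # World -> camera: rigid transform with rotation R and translation t.
    p_cam = R @ p_world + t
    # Camera -> projection plane: perspective division scaled by the focal length f.
    x = f * p_cam[0] / p_cam[2]
    y = f * p_cam[1] / p_cam[2]
    # Projection plane -> image: convert metres to pixels (pixel size dx, dy)
    # and move the origin from the principal point to the upper-left corner.
    u = x / dx + u0
    v = y / dy + v0
    return np.array([u, v])

# Hypothetical values: camera aligned with the world frame, 4 mm lens, 2 um pixels.
R = np.eye(3)
t = np.zeros(3)
pixel = project_point(np.array([0.1, -0.05, 2.0]), R, t,
                      f=0.004, dx=2e-6, dy=2e-6, u0=320.0, v0=240.0)
print(pixel)  # approximately [420. 190.]
```

Each step corresponds to one transition in the list: world to camera, camera to projection plane, and projection plane to image.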

2.1.2. Camera Imaging Process

2.1.3. Distortion

2.2. Zhang’s Calibration Method

2.2.1. Calculation of Initial Value

2.2.2. Maximum Likelihood Estimation

3. Calibration Process

3.1. Bootstrapping

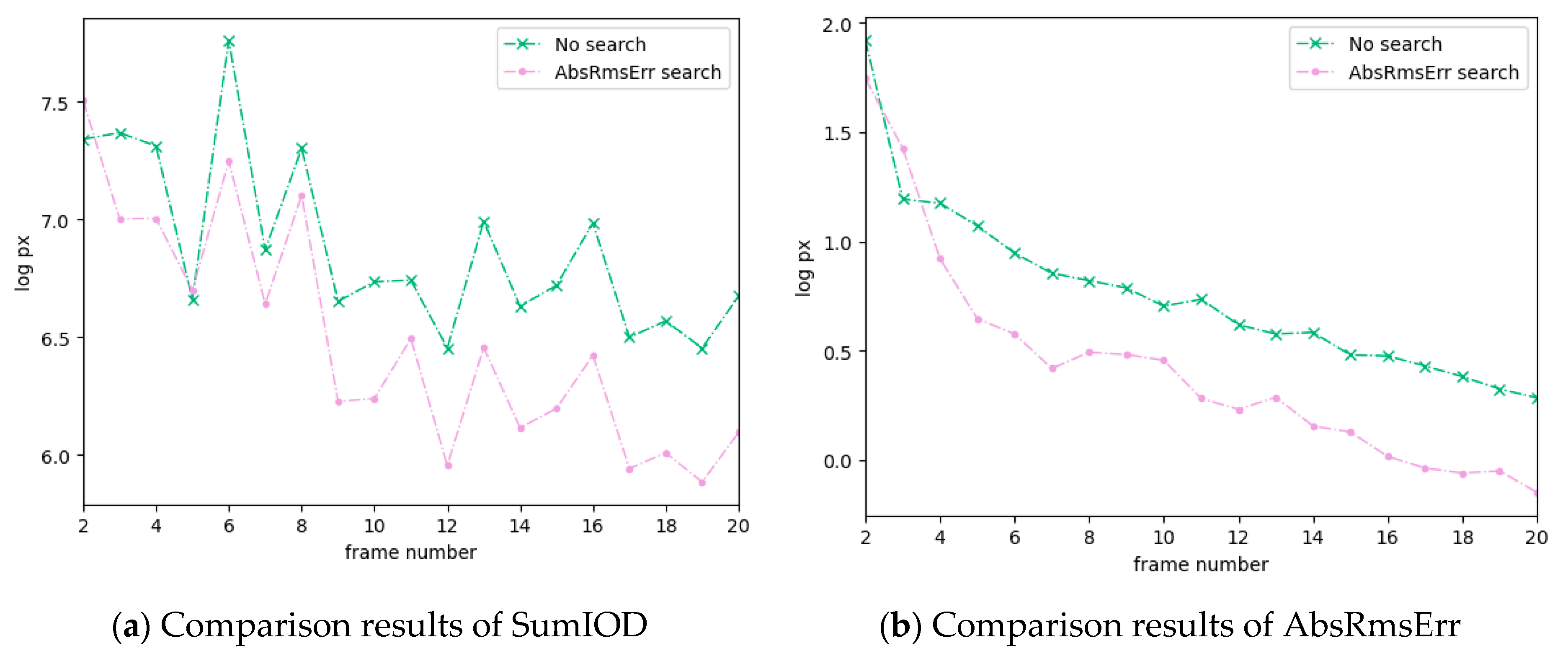

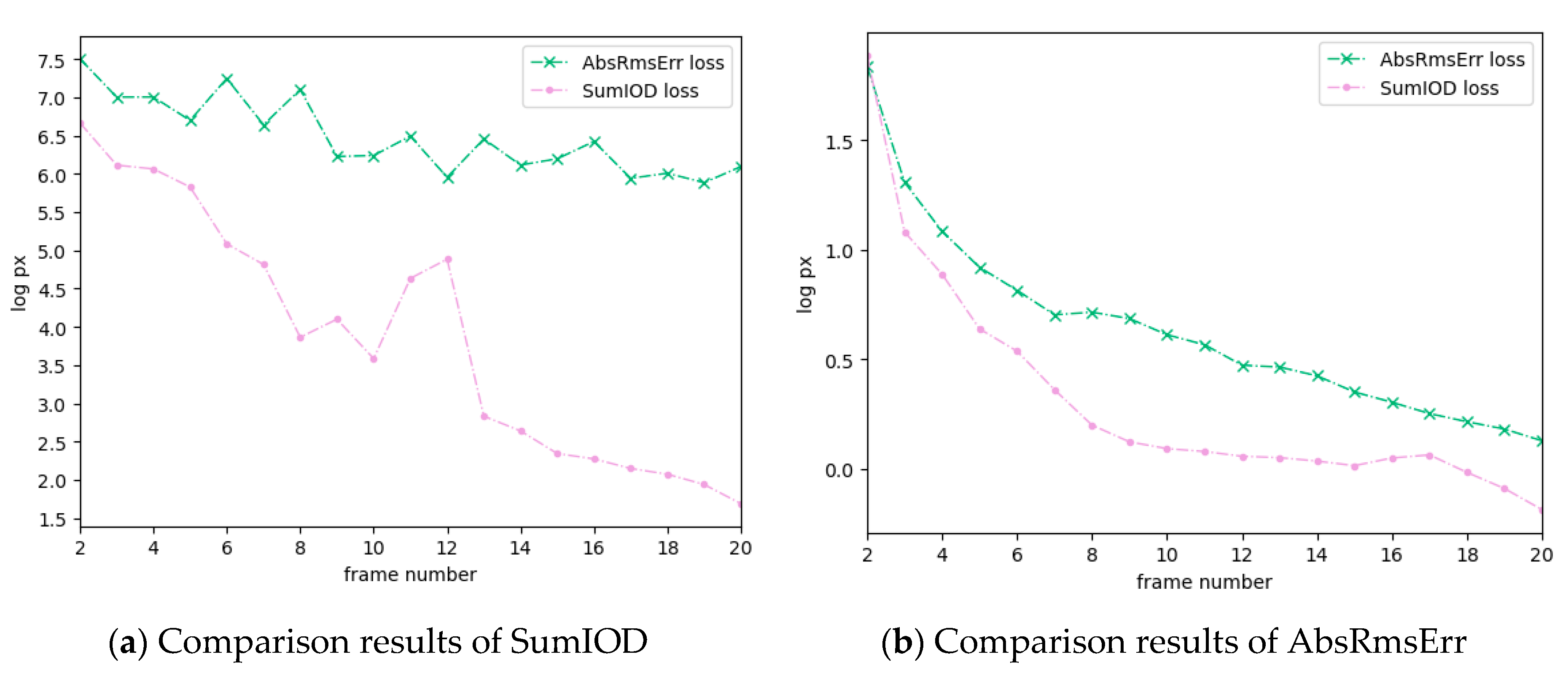

3.2. Pose Search

3.2.1. Algorithm Definition

- Solution: One solution is a pose, which represents the transformation from the coordinate system of the calibration board to the camera coordinate system. It consists of the rotation angles about each coordinate axis and the translations along each coordinate axis.

- Solution space: Feature points are difficult to extract when the rotation angle is too large, so the rotation angles are constrained to a limited range.

- Initial solution: Take the pose generated by the method in Section 3.2.2 as the initial solution.

- Adjacent solution: One element of the current solution is selected at random, and a value drawn from a uniform distribution whose mean is 0.01 times that element’s value is added to it. Replacing the element with the result gives the adjacent solution.

- Loss function: Perform a hypothetical calibration based on the system state and the solution to obtain the hypothetical estimated value and variance of each internal parameter. The loss value of the current solution is the sum of the index of dispersion (IOD) [14] values of all internal parameters, where the IOD of a parameter is its variance divided by its estimated value.

- Solution update method: After the loss values of the current and adjacent solutions are calculated, the solution is updated according to Equation (12), which depends on the difference between the two loss values.
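The adjacent-solution rule and the IOD-based loss defined above can be sketched as follows. This is a minimal illustration, not the authors' implementation. The function names `neighbor` and `iod_loss` are hypothetical, the pose is assumed to be a flat list of six numbers, and the uniform interval is assumed to be [0, 2 × 0.01 × value] so that its mean is 0.01 times the value, since the text only specifies the mean. The hypothetical calibration that yields the estimates and variances is not shown.

```python
import random

def neighbor(solution, rng=random):
    """Return an adjacent solution by perturbing one randomly chosen element."""
    candidate = list(solution)
    i = rng.randrange(len(candidate))
    mean = 0.01 * candidate[i]
    # Assumption: sample uniformly between 0 and 2 * mean, so the mean is 0.01 * value.
    low, high = sorted((0.0, 2.0 * mean))
    candidate[i] += rng.uniform(low, high)
    return candidate

def iod_loss(estimates, variances):
    """Sum of index-of-dispersion values (variance / estimate) over all parameters.

    Assumes every estimate is positive, as for focal lengths and principal point.
    """
    return sum(var / est for est, var in zip(estimates, variances))

# Hypothetical pose (three rotation angles, three translations) and intrinsics.
pose = [0.1, -0.2, 0.05, 10.0, 5.0, 300.0]
new_pose = neighbor(pose, random.Random(0))
loss = iod_loss([800.0, 800.0, 320.0, 240.0], [4.0, 4.0, 1.6, 1.2])
```

Under this rule the perturbation scales with the element's magnitude, so an element that is exactly zero is left unchanged.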

3.2.2. Initial Solution Method—Pose Generation

- Generate a distortion map based on the current calibration result (the value at each position represents the deviation caused by the distortion coefficient acting on that point).

- Find the rectangular area with the largest distortion in the image using a sliding window.

- Perform pose estimation on the area to get the pose.
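The first two steps above can be sketched with NumPy. The sketch assumes the common radial-tangential distortion model with coefficients `k1`, `k2`, `p1`, `p2`, and it scores a window by the sum of the distortion values inside it. Both choices, as well as the function names and the numeric values, are assumptions made for illustration. The final pose estimation step is not covered.

```python
import numpy as np

def distortion_map(width, height, fx, fy, cx, cy, k1, k2, p1, p2):
    """Per-pixel displacement magnitude (in pixels) caused by the distortion coefficients."""
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    x = (u - cx) / fx  # normalized image coordinates
    y = (v - cy) / fy
    r2 = x * x + y * y
    radial = k1 * r2 + k2 * r2 * r2
    dx = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    dy = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.hypot(dx * fx, dy * fy)

def max_distortion_window(dist_map, win_w, win_h):
    """Upper-left corner (u, v) of the win_w x win_h window with the largest summed distortion."""
    # An integral image turns every window sum into four lookups.
    integral = np.pad(dist_map, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    sums = (integral[win_h:, win_w:] - integral[:-win_h, win_w:]
            - integral[win_h:, :-win_w] + integral[:-win_h, :-win_w])
    v, u = np.unravel_index(np.argmax(sums), sums.shape)
    return int(u), int(v)

# Hypothetical intrinsics and coefficients for a 640 x 480 image.
dist = distortion_map(640, 480, 800.0, 800.0, 320.0, 240.0, -0.2, 0.05, 0.0, 0.0)
corner = max_distortion_window(dist, 160, 120)
```

The integral image keeps the search linear in the number of pixels regardless of the window size, which matters if the map is regenerated after every calibration update.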

3.2.3. Search Process

| Algorithm 1 Simulated Annealing |
|---|
| 1: Function SA() |
| 2: Initialize … |
| 3: while … do |
| 4: … |
| 5: while … do |
| 6: … |
| 7: … |
| 8: … |
| 9: … |
| 10: end while |
| 11: … |
| 12: end while |
| 13: return … |
| 14: end Function |
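The nested-loop structure of Algorithm 1 matches a standard simulated annealing search, which can be sketched as below. This is a generic sketch and not a reconstruction of the authors' exact statements. The Metropolis acceptance rule, the geometric cooling schedule, and every parameter name and default (`t_start`, `t_min`, `cooling`, `steps_per_temp`) are assumptions. A toy one-dimensional loss stands in for the calibration loss.

```python
import math
import random

def simulated_annealing(initial, loss, neighbor, t_start=1.0, t_min=1e-3,
                        cooling=0.9, steps_per_temp=20, rng=random):
    """Generic simulated annealing; returns the best solution found and its loss."""
    current, current_loss = initial, loss(initial)
    best, best_loss = current, current_loss
    t = t_start
    while t > t_min:                      # outer loop: cooling schedule
        for _ in range(steps_per_temp):   # inner loop: search at a fixed temperature
            candidate = neighbor(current, rng)
            candidate_loss = loss(candidate)
            delta = candidate_loss - current_loss
            # Assumed Metropolis rule: always accept improvements,
            # accept worse solutions with probability exp(-delta / t).
            if delta < 0 or rng.random() < math.exp(-delta / t):
                current, current_loss = candidate, candidate_loss
                if current_loss < best_loss:
                    best, best_loss = current, current_loss
        t *= cooling                      # assumed geometric cooling
    return best, best_loss

# Toy usage: minimise (x - 3)^2 starting from x = 0.
best, best_loss = simulated_annealing(
    initial=0.0,
    loss=lambda x: (x - 3.0) ** 2,
    neighbor=lambda x, r: x + r.uniform(-0.5, 0.5),
    rng=random.Random(42),
)
```

Accepting some worse solutions at high temperature lets the search leave local minima, while the falling temperature makes it increasingly greedy toward the end.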

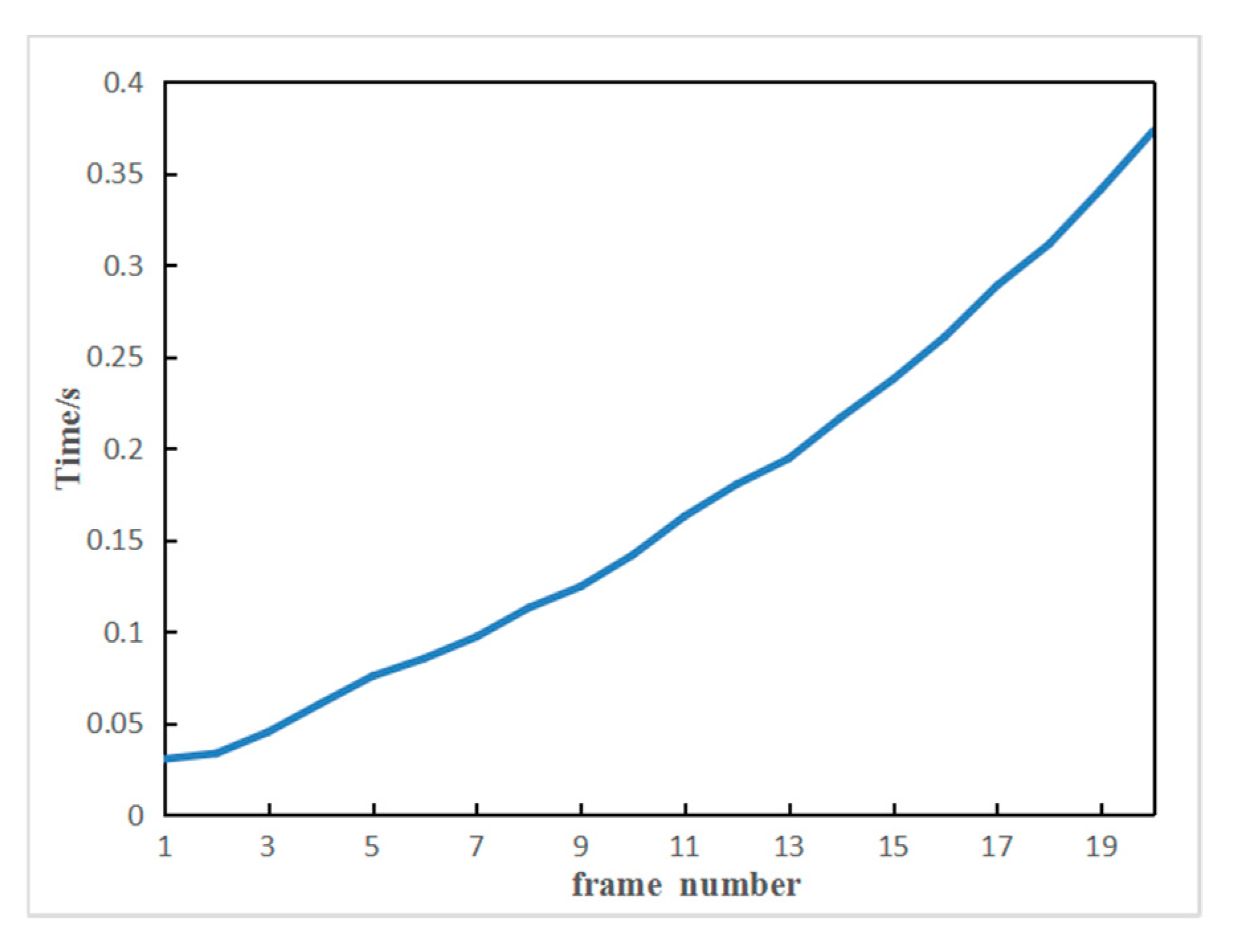

3.2.4. Time Complexity Analysis

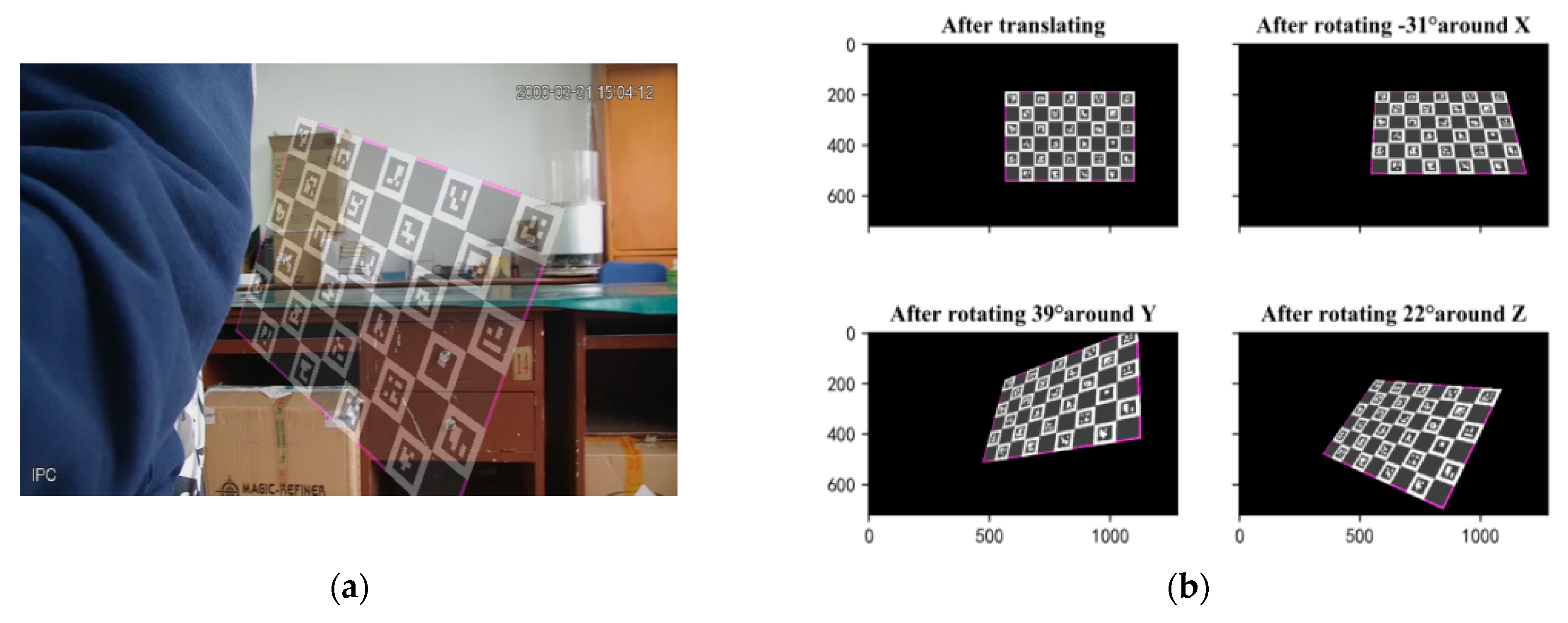

3.3. Pose Decomposition

3.4. System Convergence

4. Evaluation

4.1. Simulation Data

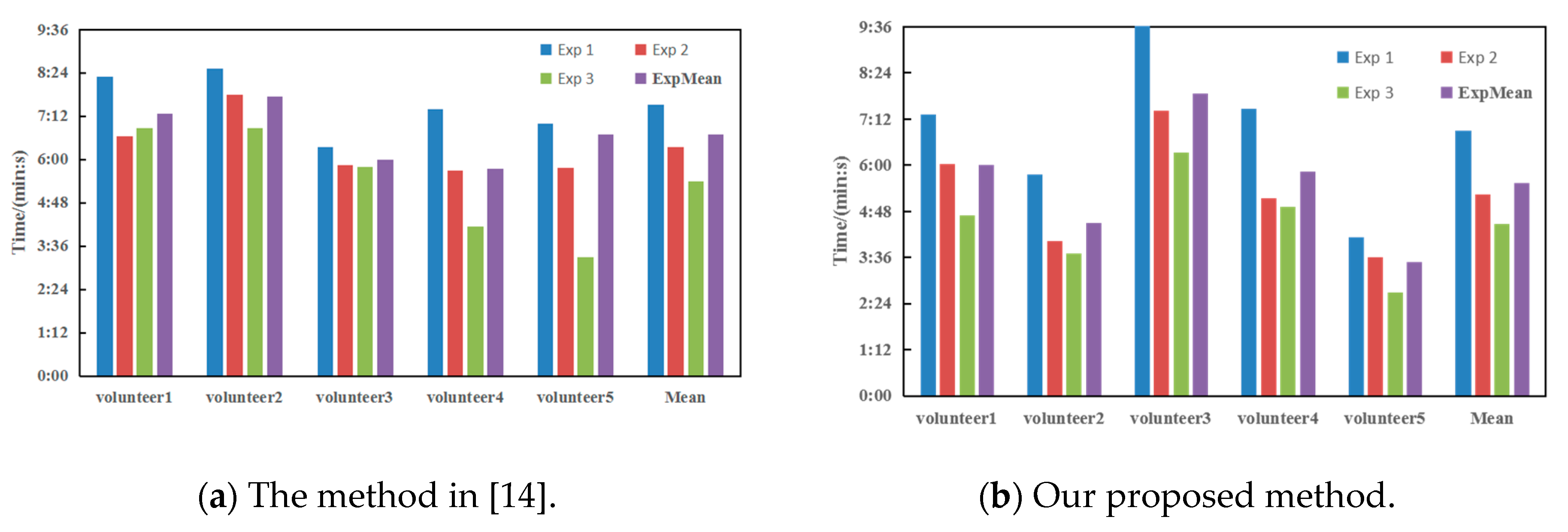

4.2. Real Data

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. di Lanzo, J.A.; Valentine, A.; Sohel, F.; Yapp, A.Y.T.; Muparadzi, K.C.; Abdelmalek, M. A review of the uses of virtual reality in engineering education. Comput. Appl. Eng. Educ. 2020, 28, 748–763.

2. Häne, C.; Heng, L.; Lee, G.H.; Fraundorfer, F.; Furgale, P.; Sattler, T.; Pollefeys, M. 3D Visual Perception for Self-Driving Cars using a Multi-Camera System: Calibration, Mapping, Localization, and Obstacle Detection. Image Vis. Comput. 2017, 68, 14–27.

3. Cai, Q. Research on Image-based 3D Reconstruction Technology. Master’s Thesis, Zhejiang University, Hangzhou, China, 2004.

4. Guerchouche, R.; Coldefy, F. Camera calibration methods evaluation procedure for images rectification and 3D reconstruction. In Proceedings of the International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision, Plzen, Czech Republic, 4–7 February 2008.

5. Faig, W. Calibration of close-range photogrammetry systems: Mathematical formulation. Photogramm. Eng. Remote Sens. 1975, 41, 1479–1486.

6. Qi, W.; Li, F.; Zhenzhong, L. Review on camera calibration. In Proceedings of the Chinese Control and Decision Conference, Xuzhou, China, 26–28 May 2010.

7. Weng, J.; Cohen, P. Camera calibration with distortion models and accuracy evaluation. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 965–980.

8. Long, L.; Dongri, S. Review of Camera Calibration Algorithms. In Advances in Computer and Computational Sciences; Springer: Singapore, 2019; pp. 723–732.

9. Zhan-Yi, H.U. A Review on Some Active Vision Based Camera Calibration Techniques. Chin. J. Comput. 2002, 25, 1149–1156.

10. Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

11. Xie, Z.; Lu, W.; Wang, X.; Liu, J. Analysis of pose selection for binocular stereo calibration. Chin. J. Lasers 2015, 42, 237–244.

12. Triggs, B. Autocalibration from Planar Scenes. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006.

13. Sturm, P.F.; Maybank, S.J. On plane-based camera calibration: A general algorithm, singularities, applications. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999.

14. Rojtberg, P.; Kuijper, A. Efficient Pose Selection for Interactive Camera Calibration. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, Nantes, France, 9–13 October 2017.

15. Richardson, A.; Strom, J.; Olson, E. AprilCal: Assisted and repeatable camera calibration. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013.

16. Lazyloading. Available online: https://en.wikipedia.org/wiki/Lazy_loading (accessed on 5 November 2019).

17. Fazakas, T.; Fekete, R.T. 3D reconstruction system for autonomous robot navigation. In Proceedings of the Computational Intelligence and Informatics, Budapest, Hungary, 18–20 November 2010.

18. PinholeCamera. Available online: https://staff.fnwi.uva.nl/r.vandenboomgaard/IPCV20162017/LectureNotes/CV/PinholeCamera/index.html (accessed on 21 March 2020).

19. Sun, W.; Cooperstock, J. Requirements for Camera Calibration: Must Accuracy Come with a High Price? In Proceedings of the IEEE Workshops on Applications of Computer Vision, Breckenridge, CO, USA, 5–7 January 2005.

20. Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997.

21. Liu, Y.; Su, X. Camera calibration with planar crossed fringe patterns. Opt. Int. J. Light Electron Opt. 2012, 123, 171–175.

22. Yang, C.; Sun, F.; Hu, Z. Planar conic based camera calibration. In Proceedings of the International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000.

23. Moré, J. The Levenberg-Marquardt algorithm: Implementation and theory. Numer. Anal. 1978, 630, 105–116.

24. Li, Z.; Ning, H.; Cao, L.; Zhang, T.; Gong, Y.; Huang, T.S. Learning to Search Efficiently in High Dimensions. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2012; pp. 1710–1718.

25. SearchProblem. Available online: https://en.wikipedia.org/wiki/Search_problem (accessed on 15 January 2020).

26. Simulated Annealing. Available online: https://en.wikipedia.org/wiki/Simulated_annealing (accessed on 10 February 2020).

27. Konishi, T.; Kojima, H.; Nakagawa, H.; Tsuchiya, T. Using simulated annealing for locating array construction. Inf. Softw. Technol. 2020, 126, 106346.

28. Birdal, T.; Dobryden, I.; Ilic, S. X-Tag: A Fiducial Tag for Flexible and Accurate Bundle Adjustment. In Proceedings of the International Conference on 3D Vision, Stanford, CA, USA, 25–28 October 2016.

29. Atcheson, B.; Heide, F.; Heidrich, W. CALTag: High Precision Fiducial Markers for Camera Calibration. In Proceedings of the Vision, Modeling, & Visualization Workshop, Siegen, Germany, 15–17 November 2010.

30. Fiala, M.; Chang, S. Self-identifying patterns for plane-based camera calibration. Mach. Vis. Appl. 2008, 19, 209–216.

31. Garrido-Jurado, S. Detection of ChArUco Corners. Available online: http://docs.opencv.org/3.2.0/df/d4a/tutorial_charuco_detection.html (accessed on 7 February 2020).

32. OpenCV Interactive Camera Calibration Application. Available online: https://docs.opencv.org/master/d7/d21/tutorial_interactive_calibration.html (accessed on 7 February 2020).

33. Bradski, G. Learning-Based Computer Vision with Intel’s Open Source Computer Vision Library. Intel Technol. J. 2005, 9, 119.

| Method | | Num. of Frames | |
|---|---|---|---|
| The method in [14] | 0.4331 | 8.8 | 0.5139 |
| OpenCV | 0.62253 | 9 | 0.43771 |
| Our proposed method | 0.4086 | 7.8 | 0.4704 |

| Method | 5000 Frames (s) | Single Frame (ms) | Delay Per Second (s) |
|---|---|---|---|
| The method in [14] | 19.23 | 38.46 | 0.961 |
| Our proposed method | 12.62 | 25.24 | 0.631 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lei, W.; Xu, M.; Hou, F.; Jiang, W.; Wang, C.; Zhao, Y.; Xu, T.; Li, Y.; Zhao, Y.; Li, W. Calibration Venus: An Interactive Camera Calibration Method Based on Search Algorithm and Pose Decomposition. Electronics 2020, 9, 2170. https://doi.org/10.3390/electronics9122170
