A Novel Motion Intention Recognition Approach for Soft Exoskeleton via IMU

Abstract

1. Introduction

- We propose a neural-network-based recognition method that uses historical information to recognize different terrains, addressing the limitation that current soft exoskeletons are controlled for a single terrain only. This method enables the exoskeleton to adapt to different terrains and achieve better human–machine cooperation.

- We add locomotion-transition recognition to predict the next motion mode in advance. Experimental results show that the identification delay is about 23.97% of a gait cycle.

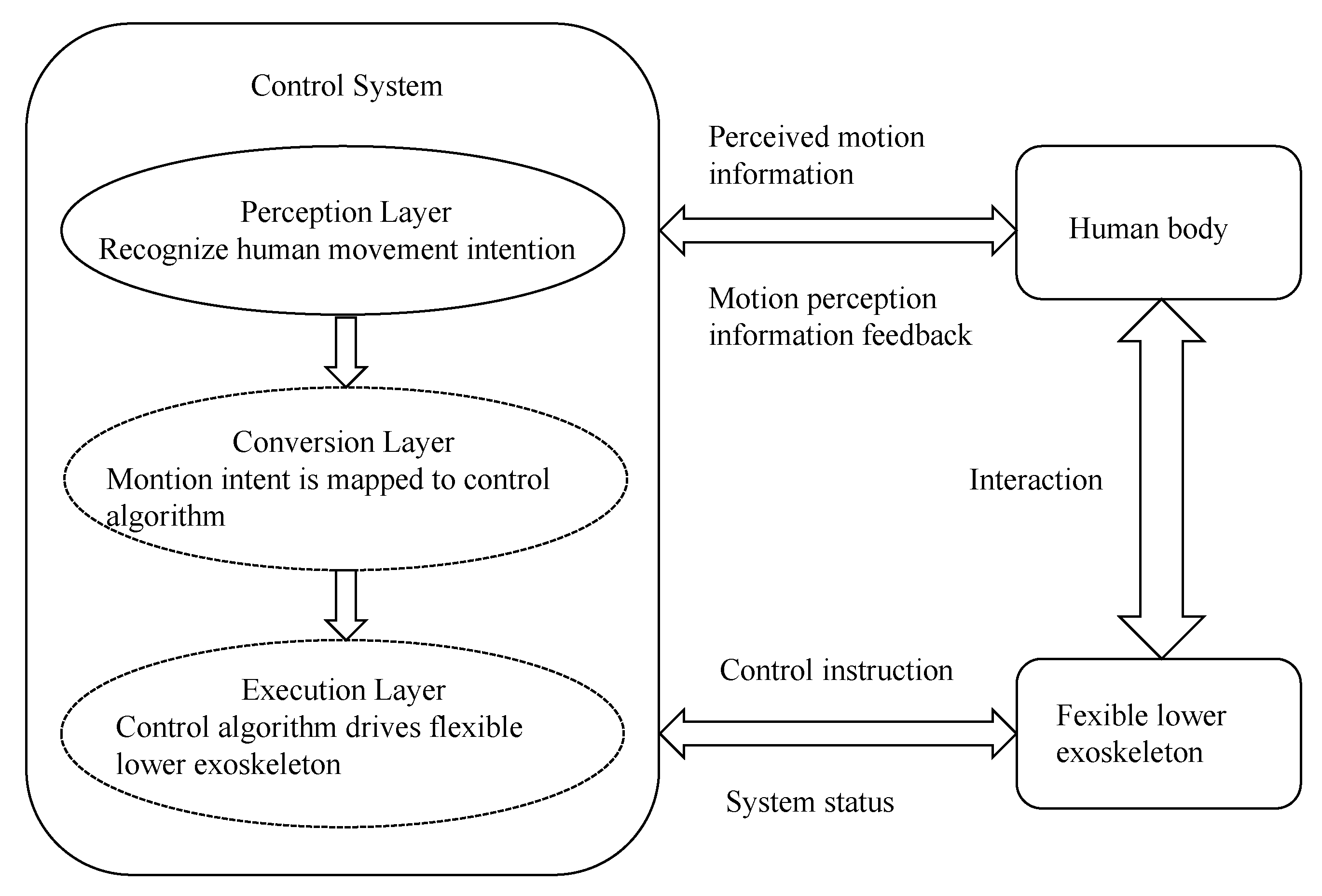

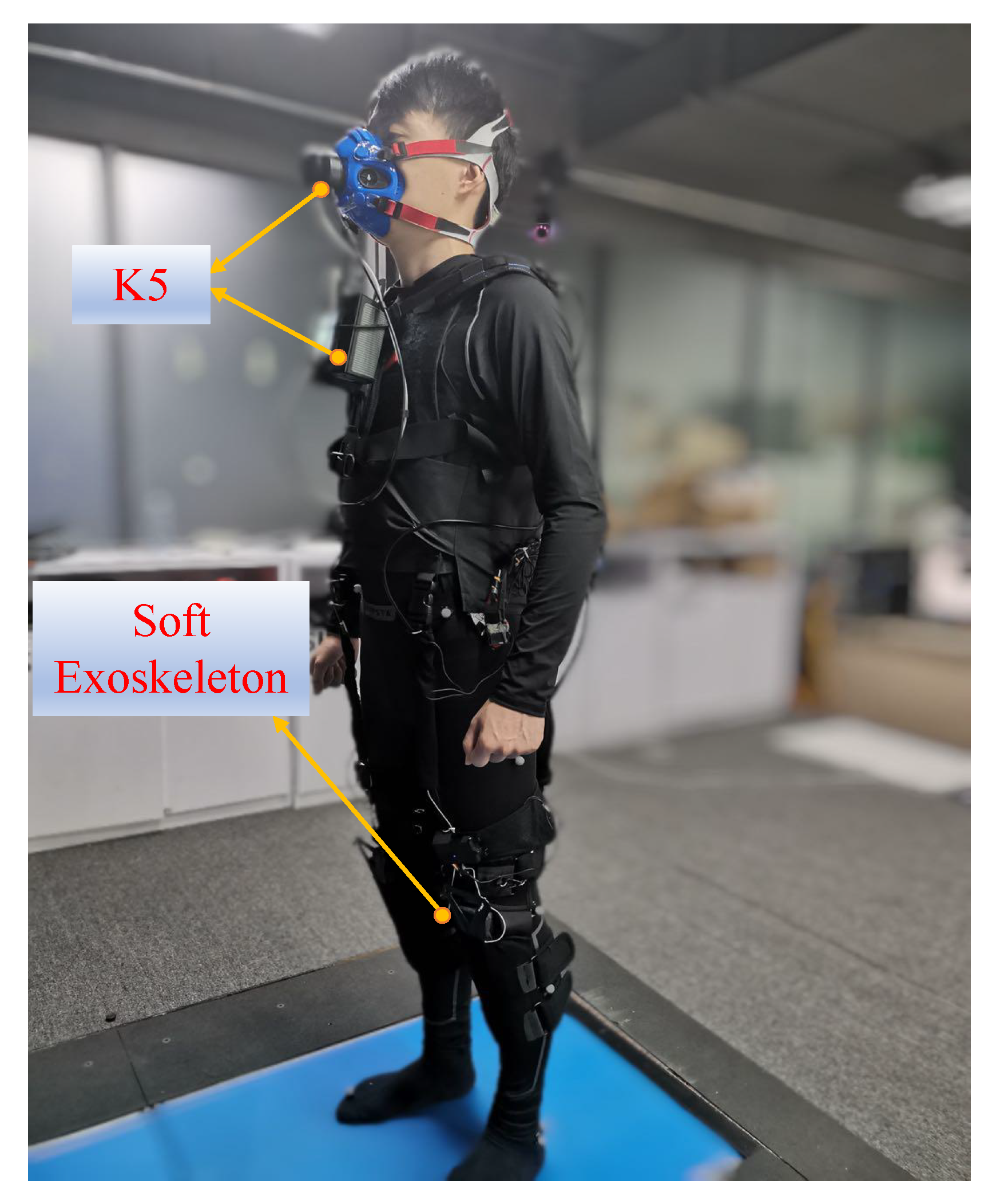

2. The Structure of Exoskeleton and Analysis of Motion Characteristics

2.1. The Structure of Soft Exoskeleton

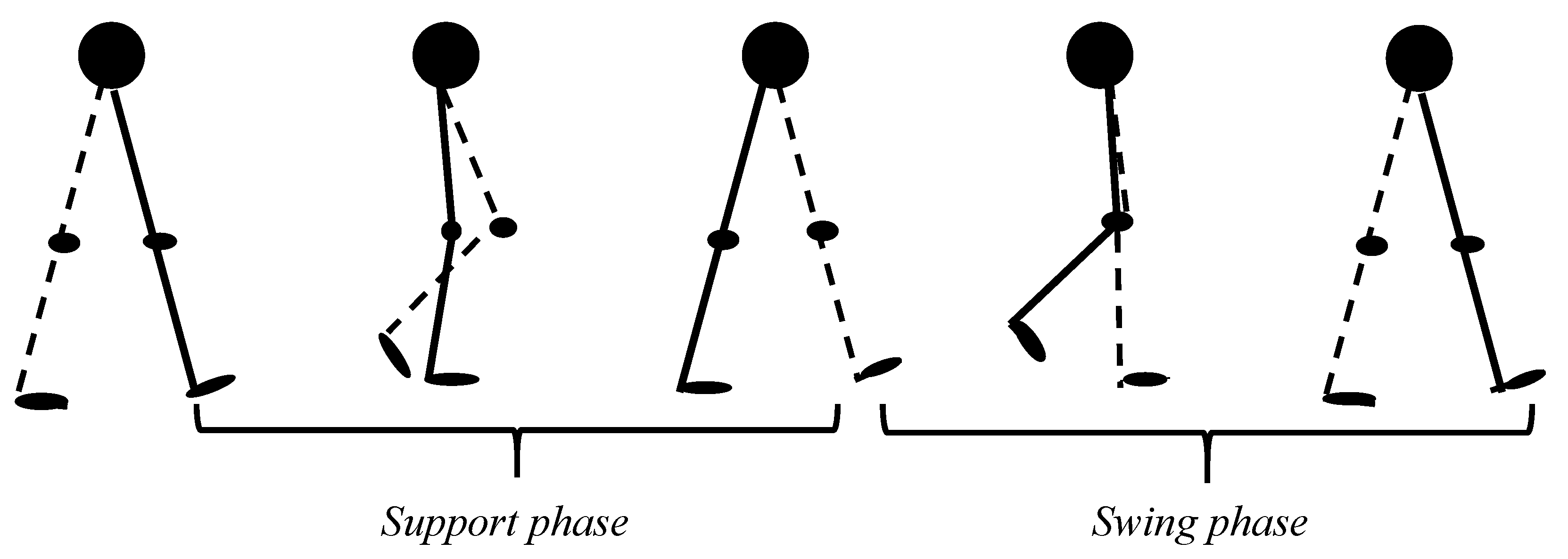

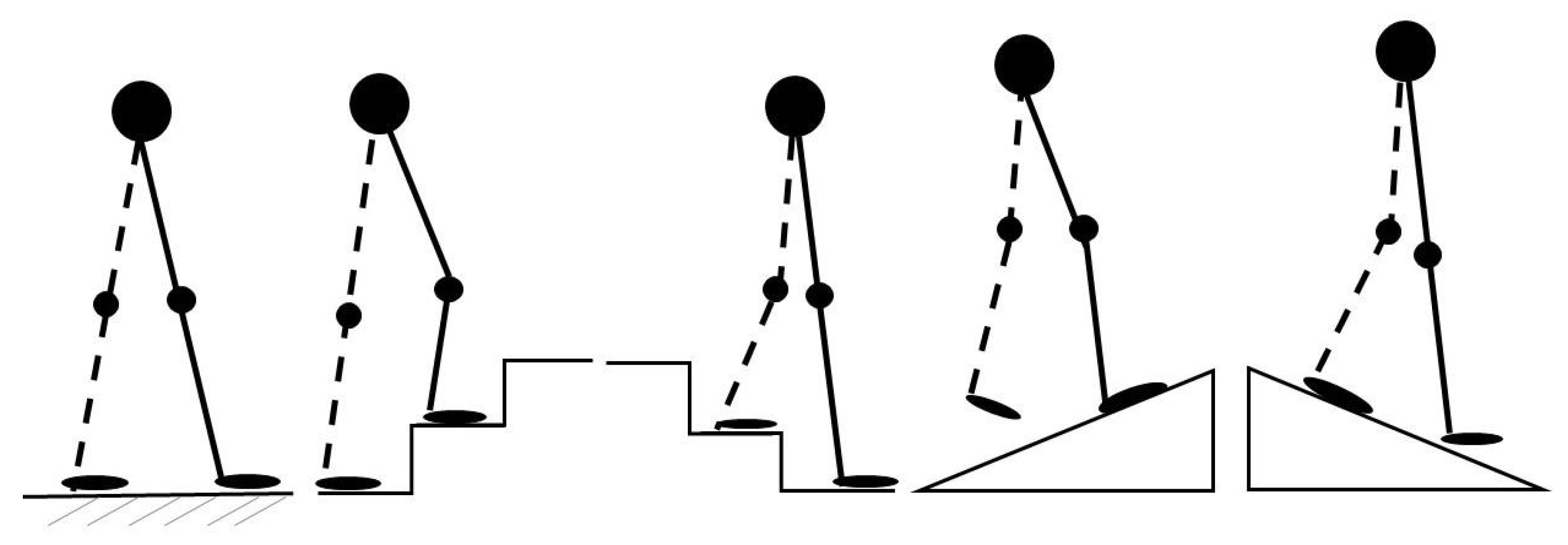

2.2. Analysis of Motion Characteristics

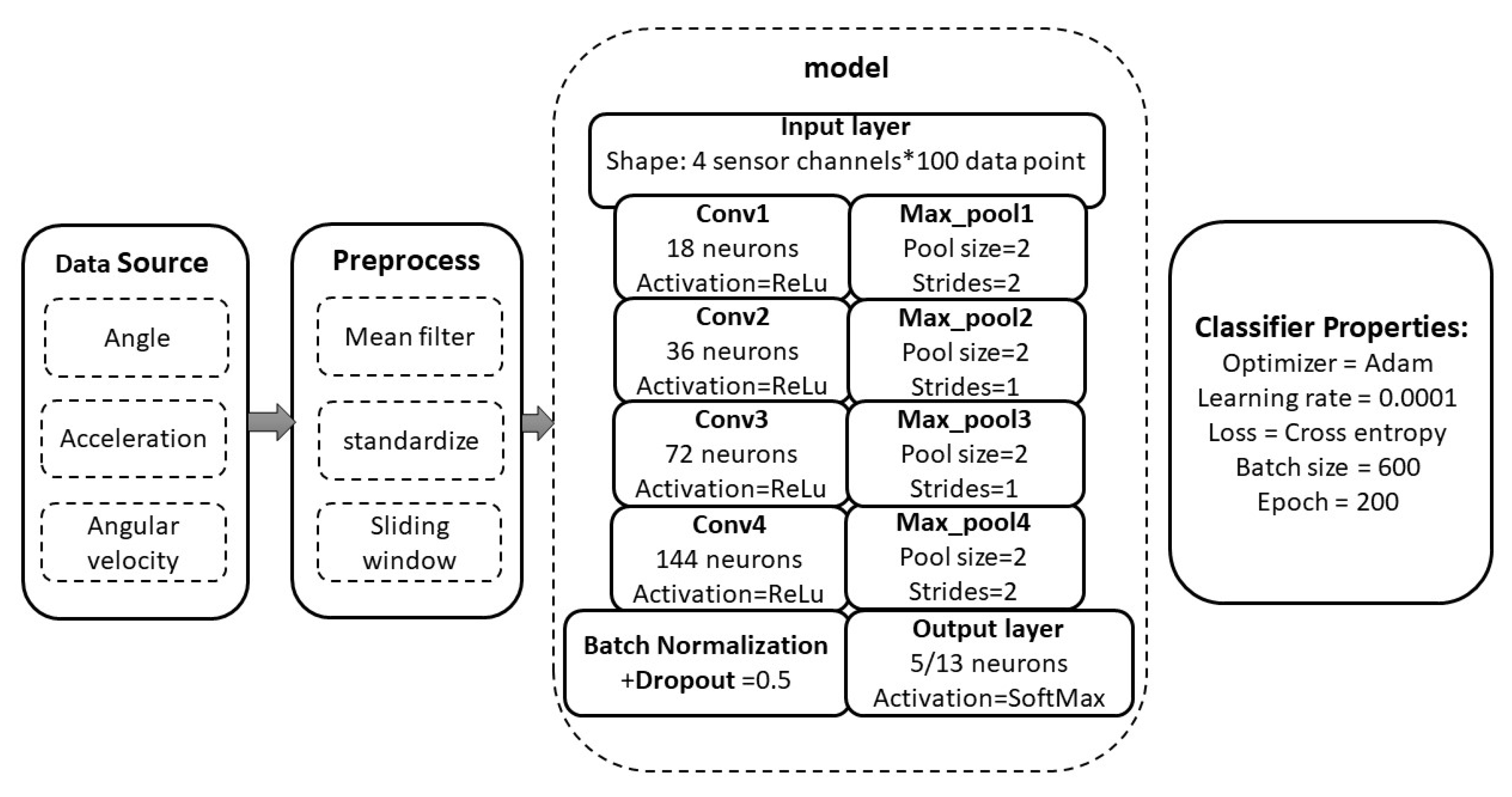

3. Identification Method

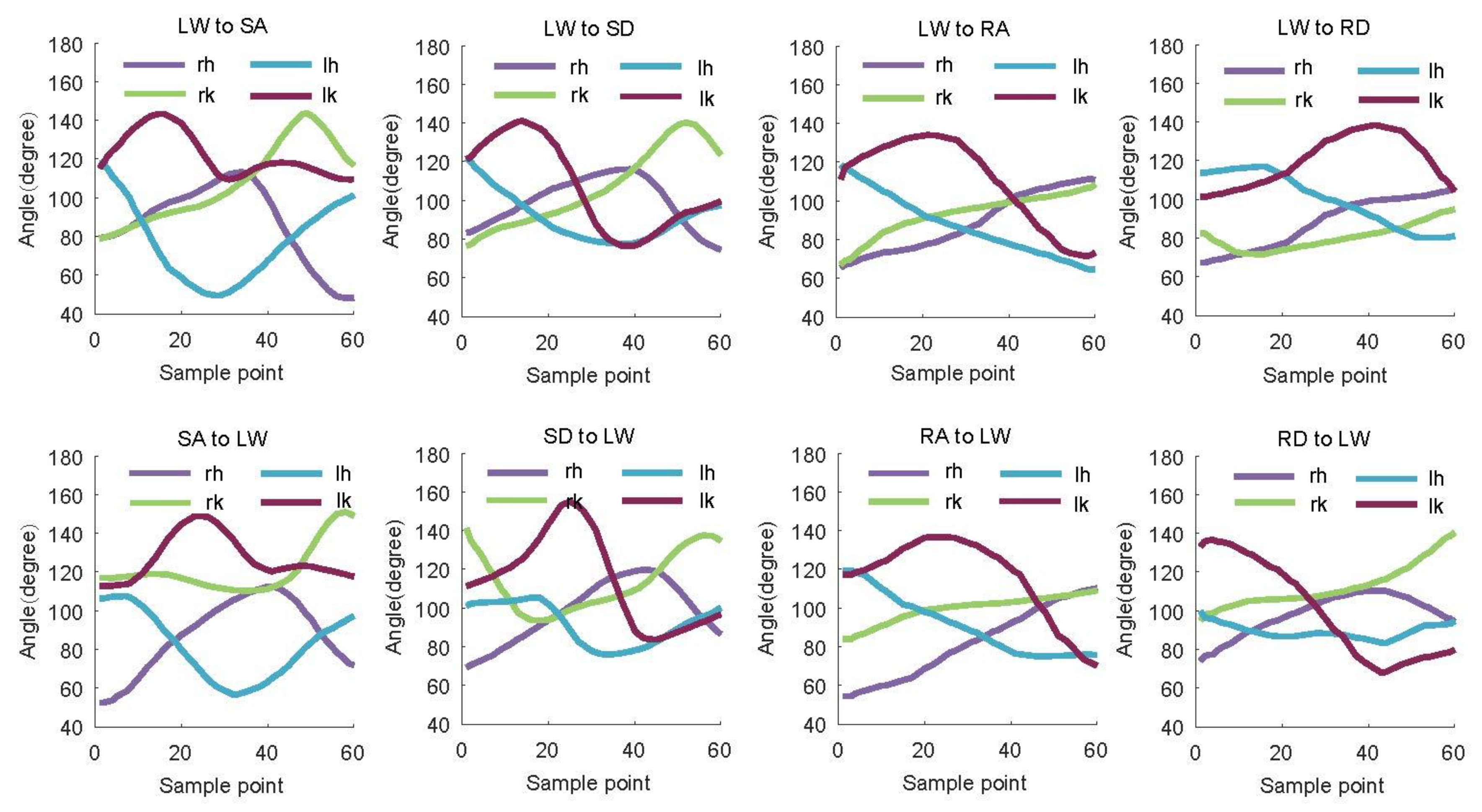

3.1. Gait Data Processing

3.2. DNN-Based Deep Locomotion Mode Identification Model

3.3. Performance Evaluation

- 1.

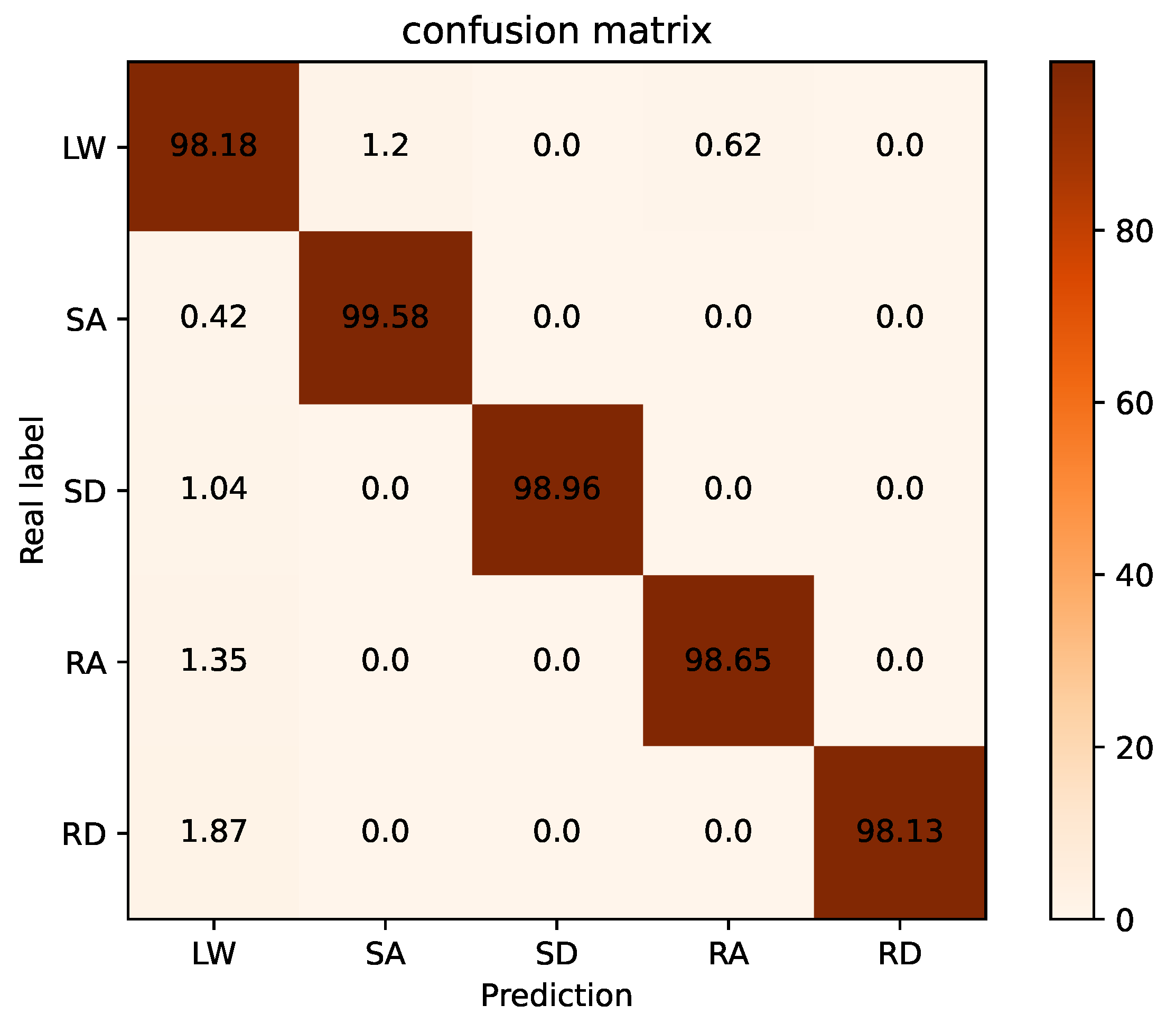

- Steady Locomotion Period: Normally, the identification success rate (ISR) is used for evaluating the accuracy of a classification [7], which is given Equation (8).where is the number of correct identification data while is the total number of test events in the experiment.To better illustrate the identification performance and quantify the error distribution, the confusion matrix is defined Equation (9).The element of the confusion matrix is expressed by Equation (10).where is the number of samples that terrain i wrongly recognized as terrain j, and is the total number of terrains i. The elements on the diagonal of the confusion matrix are the recognition accuracy. At the same time, those elements on the non-diagonal refer to the error rates.

2. Locomotion Transition Period: To judge whether a locomotion transition is recognized in time, we adopt the critical moment proposed in [7], defined as the moment when the user starts to change the current locomotion mode. The identification delay is expressed by Equation (11):

   $$D = \frac{t_{\mathrm{id}} - t_{\mathrm{c}}}{T} \times 100\% \tag{11}$$

   where $t_{\mathrm{id}}$ is the moment when the locomotion transition is identified, $t_{\mathrm{c}}$ is the critical moment, and $T$ is the average duration of one gait cycle.
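The three evaluation metrics above reduce to counting and simple arithmetic. The following Python sketch is not the authors' implementation: the function names (`identification_success_rate`, `confusion_matrix`, `identification_delay`) are hypothetical, and the label sequences and timestamps in the usage lines are made-up toy values, not experimental data. It assumes every test event carries exactly one true and one predicted mode label drawn from the five modes listed in the Abbreviations.

```python
from typing import Dict, List, Sequence

# The five locomotion modes defined in the Abbreviations section.
MODES = ["LW", "SA", "SD", "RA", "RD"]


def identification_success_rate(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Equation (8): share of test events whose predicted mode equals the true mode, in %."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("expected two non-empty label sequences of equal length")
    n_correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return 100.0 * n_correct / len(y_true)


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     modes: Sequence[str] = MODES) -> List[List[float]]:
    """Equations (9)-(10): c_ij = n_ij / n_i in %, rows = true mode, columns = predicted mode."""
    index: Dict[str, int] = {mode: k for k, mode in enumerate(modes)}
    counts = [[0] * len(modes) for _ in modes]  # n_ij
    for t, p in zip(y_true, y_pred):
        counts[index[t]][index[p]] += 1
    matrix = []
    for row in counts:
        n_i = sum(row)  # total samples whose true mode is i
        # A mode absent from the test set gets an all-zero row instead of dividing by zero.
        matrix.append([100.0 * n_ij / n_i if n_i else 0.0 for n_ij in row])
    return matrix


def identification_delay(t_identified: float, t_critical: float, gait_cycle: float) -> float:
    """Equation (11): time from the critical moment to identification, in % of the gait cycle T."""
    if gait_cycle <= 0:
        raise ValueError("gait cycle duration must be positive")
    return 100.0 * (t_identified - t_critical) / gait_cycle


if __name__ == "__main__":
    # Hypothetical toy labels, NOT data from the experiments.
    y_true = ["LW", "LW", "SA", "SD", "RA", "RD", "LW", "SA"]
    y_pred = ["LW", "RA", "SA", "SD", "RA", "RD", "LW", "SA"]
    print(identification_success_rate(y_true, y_pred))  # 87.5
    for mode, row in zip(MODES, confusion_matrix(y_true, y_pred)):
        print(mode, [round(c, 1) for c in row])
    # Hypothetical timestamps in seconds: identified 0.2 s after the critical moment, T = 1.2 s.
    print(round(identification_delay(1.30, 1.10, 1.20), 2))  # 16.67
```

Normalizing each row by $n_i$ rather than by the grand total makes every row sum to 100% for any mode present in the test set, so the diagonal can be read directly as per-terrain accuracy.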

4. Experimental Results and Analysis

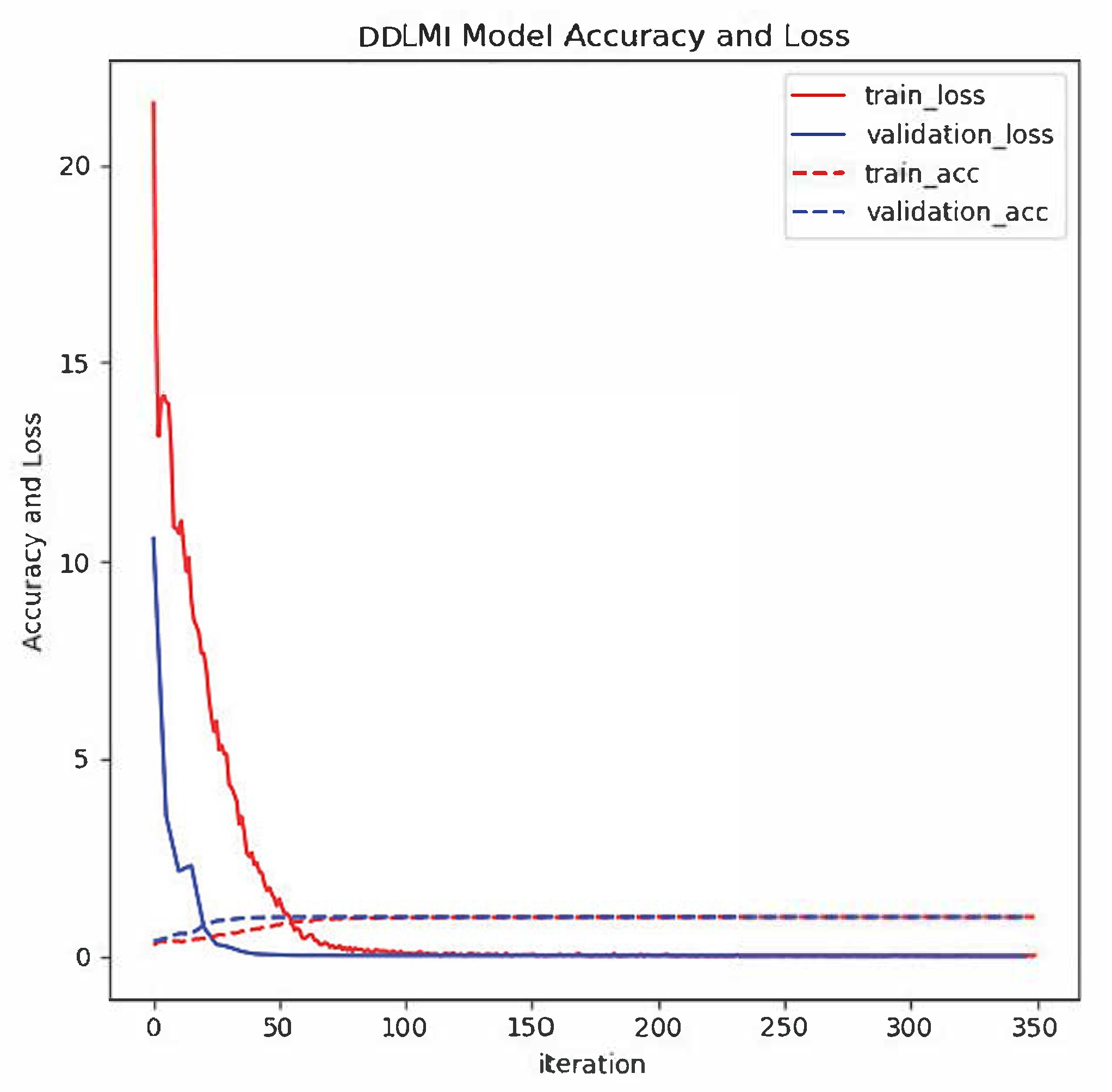

4.1. Model Validation

4.1.1. Subjects and Experiment Protocol

4.1.2. DDLMI Identification Performance

4.2. Metabolic Cost Test

4.2.1. Experimental Setup and Protocol

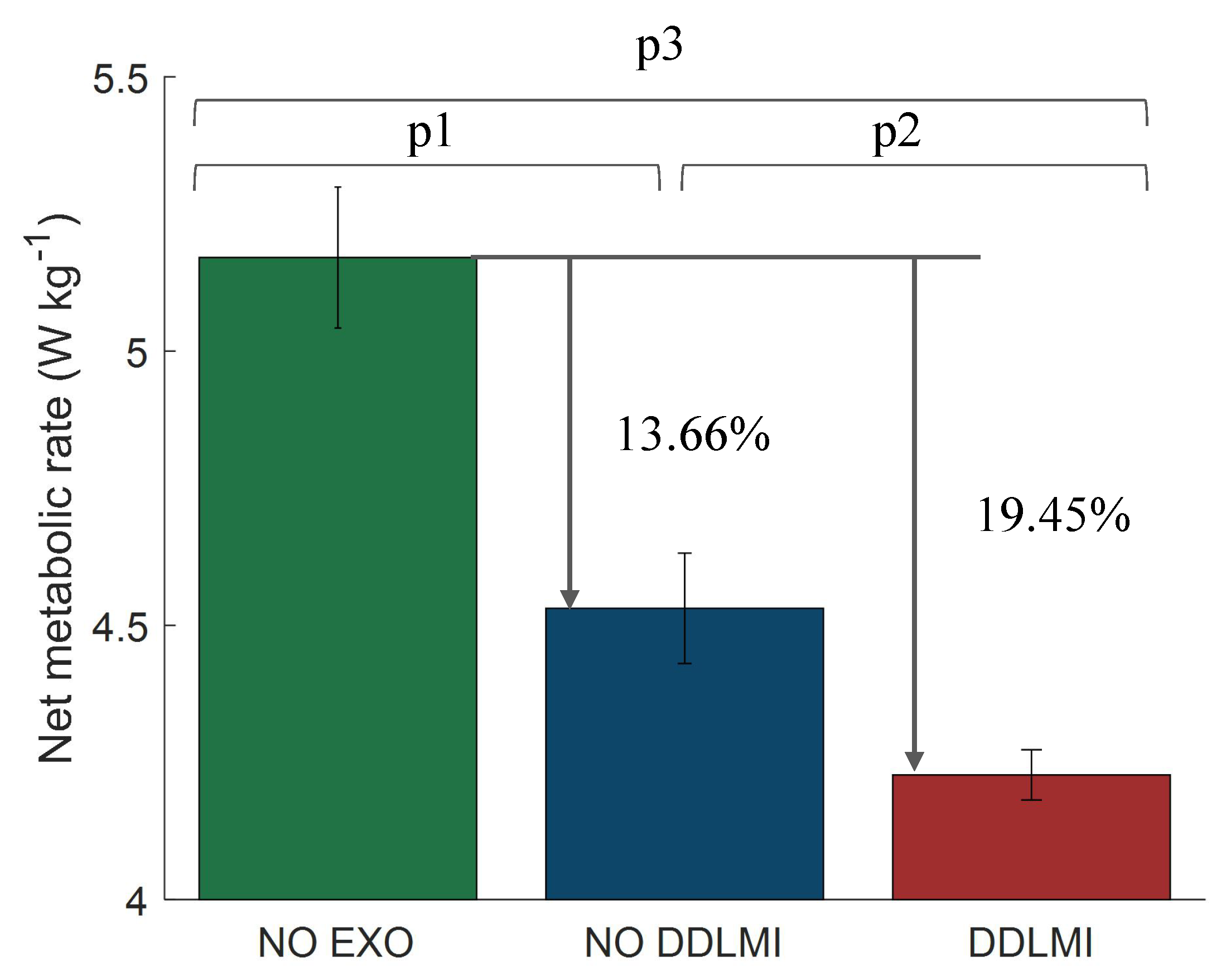

4.2.2. Metabolic Reduction by DDLMI

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IMU | Inertial measurement unit |
| DDLMI | DNN-based deep locomotion mode identification |
| ISR | Identification success rate |
| LW | Level-ground walking |
| SA | Stair ascent |
| SD | Stair descent |
| RA | Ramp ascent |
| RD | Ramp descent |

References

1. Chen, C.; Zheng, D.; Peng, A.; Wang, C.; Wu, X. Flexible design of a wearable lower limb exoskeleton robot. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013.

2. Viteckova, S.; Kutilek, P.; Jirina, M. Wearable lower limb robotics: A review. Biocybern. Biomed. Eng. 2013, 33, 96–105.

3. Xu, T.; Guan, Y.; Liu, J. Image-Based Visual Servoing of Helical Microswimmers for Planar Path Following. IEEE Trans. Autom. Sci. Eng. 2020, 17, 325–333.

4. Xu, T.; Yu, J.; Vong, C. Dynamic Morphology and Swimming Properties of Rotating Miniature Swimmers with Soft Tails. IEEE/ASME Trans. Mechatron. 2019, 24, 924–934.

5. Wu, X.; Liu, J.; Huang, C. 3-D Path Following of Helical Microswimmers With an Adaptive Orientation Compensation Model. IEEE Trans. Autom. Sci. Eng. 2020, 17, 823–832.

6. Joshi, D.; Nakamura, B.H.; Hahn, M.E. High energy spectrogram with integrated prior knowledge for EMG-based locomotion classification. Med. Eng. Phys. 2015, 37, 518–524.

7. Wang, C.; Wu, X.; Ma, Y.; Wu, G.; Luo, Y. A Flexible Lower Extremity Exoskeleton Robot with Deep Locomotion Mode Identification. Complexity 2018, 2018, 5712108.

8. Eilenberg, M.F.; Geyer, H.; Herr, H. Control of a Powered Ankle–Foot Prosthesis Based on a Neuromuscular Model. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 164–173.

9. Peng, Z.; Cao, C.; Huang, J.; Pan, W. Human Moving Pattern Recognition toward Channel Number Reduction Based on Multipressure Sensor Network. Int. J. Distrib. Sens. Netw. 2013, 9, 510917.

10. Long, Y.; Du, Z.J.; Wang, W.D.; Zhao, G.Y.; Xu, G.Q.; He, L.; Mao, X.W.; Dong, W. PSO-SVM-Based Online Locomotion Mode Identification for Rehabilitation Robotic Exoskeletons. Sensors 2016, 16, 1408.

11. Shen, B.; Li, J.; Bai, F.; Chew, C.M. Motion intent recognition for control of a lower extremity assistive device (LEAD). In Proceedings of the IEEE International Conference on Mechatronics & Automation, Takamatsu, Japan, 4–7 August 2013.

12. Duc, N.N.; Trong, B.D.; Huu, T.P.; Gu-Min, J. Classification of Five Ambulatory Activities Regarding Stair and Incline Walking Using Smart Shoes. IEEE Sens. J. 2018, 18, 5422–5428.

13. Chen, B.; Zheng, E.; Fan, X.; Liang, T. Locomotion mode classification using a wearable capacitive sensing system. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 744–755.

14. David Li, Y.; Hsiao-Wecksler, E.T. Gait mode recognition and control for a portable-powered ankle-foot orthosis. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013.

15. Liu, Z.; Lin, W.; Geng, Y.; Yang, P. Intent pattern recognition of lower-limb motion based on mechanical sensors. IEEE/CAA J. Autom. Sin. 2017, 4, 651–660.

16. Zhang, F.; Fang, Z.; Liu, M.; Huang, H. Preliminary design of a terrain recognition system. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011.

17. Chen, B.; Zheng, E.; Wang, Q. A Locomotion Intent Prediction System Based on Multi-Sensor Fusion. Sensors 2014, 14, 12349–12369.

18. Huang, H.; Zhang, F.; Hargrove, L.J.; Dou, Z.; Rogers, D.R.; Englehart, K.B. Continuous Locomotion-Mode Identification for Prosthetic Legs Based on Neuromuscular–Mechanical Fusion. IEEE Trans. Biomed. Eng. 2011, 58, 2867–2875.

19. Ma, Y.; Wu, X.; Wang, C.; Yi, Z.; Liang, G. Gait Phase Classification and Assist Torque Prediction for a Lower Limb Exoskeleton System Using Kernel Recursive Least-Squares Method. Sensors 2019, 19, 5449.

20. Ren, H.; Shang, W.; Li, N.; Wu, X. A fast parameterized gait planning method for a lower-limb exoskeleton robot. Int. J. Adv. Robot. Syst. 2020, 17.

21. Yuan, K.; Parri, A.; Yan, T.; Wang, L.; Vitiello, N. A realtime locomotion mode recognition method for an active pelvis orthosis. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015.

22. Zheng, E.; Wang, L.; Wei, K.; Wang, Q. A Noncontact Capacitive Sensing System for Recognizing Locomotion Modes of Transtibial Amputees. IEEE Trans. Biomed. Eng. 2014, 61, 2911–2920.

23. Yuan, K.; Wang, Q.; Wang, L. Fuzzy-Logic-Based Terrain Identification with Multisensor Fusion for Transtibial Amputees. IEEE/ASME Trans. Mechatron. 2015, 20, 618–630.

24. Chen, C.; Zhang, Y.; Li, Y.; Wang, Z.; Wu, X. Iterative Learning Control for a Soft Exoskeleton with Hip and Knee Joint Assistance. Sensors 2020, 20, 4333.

25. McIntosh, A.S.; Beatty, K.T.; Dwan, L.N.; Vickers, D.R. Gait dynamics on an inclined walkway. J. Biomech. 2006, 39, 2491–2502.

26. Ming, Z.; Le, T.N.; Bo, Y.; Mengshoel, O.J.; Zhang, J. Convolutional Neural Networks for Human Activity Recognition using Mobile Sensors. In Proceedings of the Sixth International Conference on Mobile Computing, Applications and Services (MobiCASE 2014), Austin, TX, USA, 6–7 November 2014.

27. Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

28. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

29. Cho, H.; Sang, Y. Divide and Conquer-Based 1D CNN Human Activity Recognition Using Test Data Sharpening. Sensors 2018, 18, 1055.

30. Zebin, T.; Sperrin, M.; Peek, N.; Casson, A.J. Human activity recognition from inertial sensor time-series using batch normalized deep LSTM recurrent networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 17–21 July 2018; pp. 1–4.

31. Brockway, J.M. Derivation of formulae used to calculate energy expenditure in man. Hum. Nutr. Clin. Nutr. 1987, 41, 463–471.

32. Young, A.J.; Simon, A.M.; Fey, N.P.; Hargrove, L.J. Intent Recognition in a Powered Lower Limb Prosthesis Using Time History Information. Ann. Biomed. Eng. 2013, 42, 631–641.

33. Omid, D.; Mojtaba, T.; Raghvendar, C.V. IMU-Based Gait Recognition Using Convolutional Neural Networks and Multi-Sensor Fusion. Sensors 2017, 17, 2735.

34. Zheng, E.; Wang, Q. Noncontact Capacitive Sensing-Based Locomotion Transition Recognition for Amputees With Robotic Transtibial Prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 161–170.

35. Hawas, A.R.; El-Khobby, H.A.; Abd-Elnaby, M.; Abd El-Samie, F.E. Gait identification by convolutional neural networks and optical flow. Multimed. Tools Appl. 2019, 78, 25873–25888.

36. Yuan, Q.; Chen, I.M.; Lee, S.P. SLAC: 3D localization of human based on kinetic human movement capture. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

| Subject | LW | SA | SD | RA | RD |
|---|---|---|---|---|---|
| Subject 1 | 96.14% | 98.15% | 99.03% | 97.20% | 97.03% |
| Subject 2 | 96.36% | 99.42% | 98.96% | 98.05% | 96.57% |
| Subject 3 | 96.78% | 96.98% | 99.24% | 97.78% | 98.05% |
| Subject 4 | 97.25% | 96.25% | 100% | 96.42% | 96.34% |
| Subject 5 | 96.22% | 100% | 97.89% | 96.78% | 96.27% |
| Subject 6 | 97.06% | 100% | 98.09% | 99.01% | 97.36% |
| Subject 7 | 96.21% | 99.86% | 99.56% | 96.34% | 96.07% |
| Average | 96.57% | 98.66% | 98.96% | 97.36% | 96.67% |
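The 97.64% overall ISR listed for this work in the comparison tables coincides with the unweighted mean of the five per-terrain averages in the table above. The short Python check below only re-derives numbers already in the table; it assumes that subjects and terrains are weighted equally, which the text does not state explicitly, and the variable names are illustrative.

```python
# "Average" row of the per-subject ISR table above, in percent.
per_terrain_average = {"LW": 96.57, "SA": 98.66, "SD": 98.96, "RA": 97.36, "RD": 96.67}

# LW column of the same table (Subjects 1-7), in percent.
lw_by_subject = [96.14, 96.36, 96.78, 97.25, 96.22, 97.06, 96.21]

# Column mean over subjects: reproduces the LW entry of the "Average" row.
lw_mean = sum(lw_by_subject) / len(lw_by_subject)

# Unweighted mean over the five terrains (assumption: equal weighting).
overall_isr = sum(per_terrain_average.values()) / len(per_terrain_average)

print(f"LW average: {lw_mean:.2f}%")       # LW average: 96.57%
print(f"Overall ISR: {overall_isr:.2f}%")  # Overall ISR: 97.64%
```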

| Conversion Mode | Identification Delay (Percent of One Gait Cycle) |
|---|---|
| LW to SA | 8.28% |
| LW to SD | 3.96% |
| LW to RA | 13.54% |
| LW to RD | 16.72% |
| SA to LW | 9.12% |
| SD to LW | 13.02% |
| RA to LW | 12.38% |
| RD to LW | 15.67% |
| Average | 23.97% |

| Research | Sensor | Signal Characteristics | Classifier | Identification Delay (% of Gait Cycle) | ISR |
|---|---|---|---|---|---|
| Liu [15] | 1 acc, 1 gyr, 2 pre | ICC | HMM | – | 95.8% |
| Young [32] | 1 IMU, 1 pre | Mean, Std, Max, Min | DBN | – | 94.7% |
| Wang [7] | 6 IMU | ICC | LSTM | 30% | 98.3% |
| Zheng [22] | 2 acc, 2 gyr, 1 pre | Mean, Std, etc. | SVM + QDA | – | 94.9% |
| Omid [33] | 5 IMU | ICC | DCNN | – | 97.06% |
| This work | 4 IMU | ICC | DDLMI | 23.97% | 97.64% |

| System | Number of IMUs | Number of Plantar Pressure Sensors | ISR |
|---|---|---|---|
| Kinetic Human Movement Capture [36] | 8 | 2 | 98% |
| This work | 4 | 0 | 97.64% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, L.; Wang, Z.; Ning, Z.; Zhang, Y.; Liu, Y.; Cao, W.; Wu, X.; Chen, C. A Novel Motion Intention Recognition Approach for Soft Exoskeleton via IMU. Electronics 2020, 9, 2176. https://doi.org/10.3390/electronics9122176
