Abstract

Because of their complicated operating environment and variable operating conditions, wind turbines (WTs) are extremely prone to failure, and fault frequency increases year by year. Effective condition monitoring and fault diagnosis solutions are therefore urgently needed. Since vibration signals contain rich health condition information, fault diagnosis based on vibration signals has received extensive attention and achieved impressive progress. In practice, however, the collected signals for different health conditions are very similar and contain a lot of noise, which makes the fault diagnosis of WTs more challenging. To handle this problem, this paper proposes a model called the denoising stacked feature enhanced autoencoder with dynamic feature enhanced factor (DSFEAE-DF). Firstly, a feature enhanced autoencoder (FEAE) is constructed through feature enhancement so that discriminative features can be extracted. Secondly, a feature enhanced factor that is independent of manual judgment is proposed and embedded into the training process. Finally, the DSFEAE-DF, which combines a noise adding scheme, stacked FEAEs, and the dynamic feature enhanced factor, is established. Experimental comparisons verify the superiority of the proposed DSFEAE-DF.

1. Introduction

Wind energy is becoming one of the most effective means of alleviating energy shortages and protecting the environment, so wind turbines (WTs) are widely deployed. However, because of the harsh working environment and variable working conditions, WTs are extremely susceptible to failure, resulting in unexpected shutdowns and additional maintenance costs. For example, the shutdown frequency of a wind farm investigated by the Caithness Windfarm Information Forum increased from 156 times per year (2009–2014) to 176 times per year (2015–2019), causing huge economic losses to investors. To reduce maintenance costs and avoid unplanned shutdowns, an effective condition monitoring and fault diagnosis model that detects weak faults as early as possible is urgently needed [1,2,3].

In recent years, the condition monitoring and fault diagnosis of WTs have developed greatly. The signals and monitoring means currently employed mainly fall into the following categories: vibration [4,5,6], acoustic emission [7,8], strain [9], torque, temperature, oil [10], electrical parameters [11,12], supervisory control and data acquisition (SCADA) parameters [1], non-destructive testing, and so forth. Considering several aspects together, such as monitorable components, installation intrusion, installation complexity, installation costs, sampling frequency requirements, and commercialization, vibration monitoring has become the most widely used approach, and it provides rich data to support the development of data-based health monitoring and fault diagnosis.

Benefiting from the development of signal processing and machine learning, many fault diagnosis models have been built on feature extraction and feature classification [13,14,15,16,17]. Among them, deep neural networks (DNNs), which automatically extract effective features from complex monitoring data and construct a high-reliability model, have gradually become a hotspot in the fault diagnosis of WTs [18]. Various DNNs, for example, the autoencoder (AE) [13,19,20], sparse filter [4,21], deep belief network (DBN), convolutional neural network (CNN) [22,23], and recurrent neural network (RNN) [24], have been widely employed for many challenging problems in fault diagnosis. The AE, which minimizes the error in reconstructing the input, can adaptively perform feature extraction in an entirely unsupervised manner with a simple network structure and few parameters [25,26,27]. Following this line of reasoning, many variants of the autoencoder, for example, the denoising autoencoder (DAE), contractive autoencoder (CAE), variational autoencoder (VAE), K-sparse autoencoder, and locally connected autoencoder, have been proposed recently. For example, considering the noise in signals and the non-linearity of signals, Jiang et al. [28] utilized a stacked multilevel-DAE to extract more robust and discriminative fault features. Shen et al. [29] proposed a stacked CAE for anti-noise and robust fault diagnosis. Martin et al. [30] adopted a fully unsupervised deep VAE method to extract latent fault features by variational inference. These studies motivate us to develop a new AE-based fault diagnosis model for WTs. However, the AE is a greedy neural network, and its extracted features are usually trivial. Especially for similar faults, the features extracted by the AE are not distinctive and lack meaning, which motivates guiding the AE to focus on important features by adding constraints. The L1 regularizer, L2 regularizer, L1L2 regularizer, and KL divergence are widely applied, but some of their parameters usually have to be set by manual judgment. Meanwhile, the signals from WTs operating in variable conditions often contain much noise, which requires the AE to retain the capability of extracting robust features.

To improve the discriminative and robust feature extraction ability of the traditional AE, the denoising stacked feature enhanced autoencoder with dynamic feature enhanced factor (DSFEAE-DF) is proposed for the fault diagnosis of wind turbines. The main contributions of this paper are threefold.

- (1) A novel feature enhanced autoencoder (FEAE) is proposed. The FEAE, which introduces feature enhancement, can extract more representative and discriminative features from raw signals.

- (2) A dynamic feature enhanced factor is proposed. The dynamic feature enhanced factor, which involves the diversity of features and the information amount between feature and input, is smoothly embedded into the training process and calculated without manual judgment.

- (3) DSFEAE-DF, which involves a noise adding scheme, stacked FEAEs, and the dynamic feature enhanced factor, is proposed for the fault diagnosis of WTs. Compared to the traditional stacked denoising autoencoder (SDAE), the DSFEAE-DF can extract hierarchical discriminative and robust features and therefore performs better in diagnosing similar faults and in diagnosing faults in noisy environments.

2. Background

2.1. Autoencoder

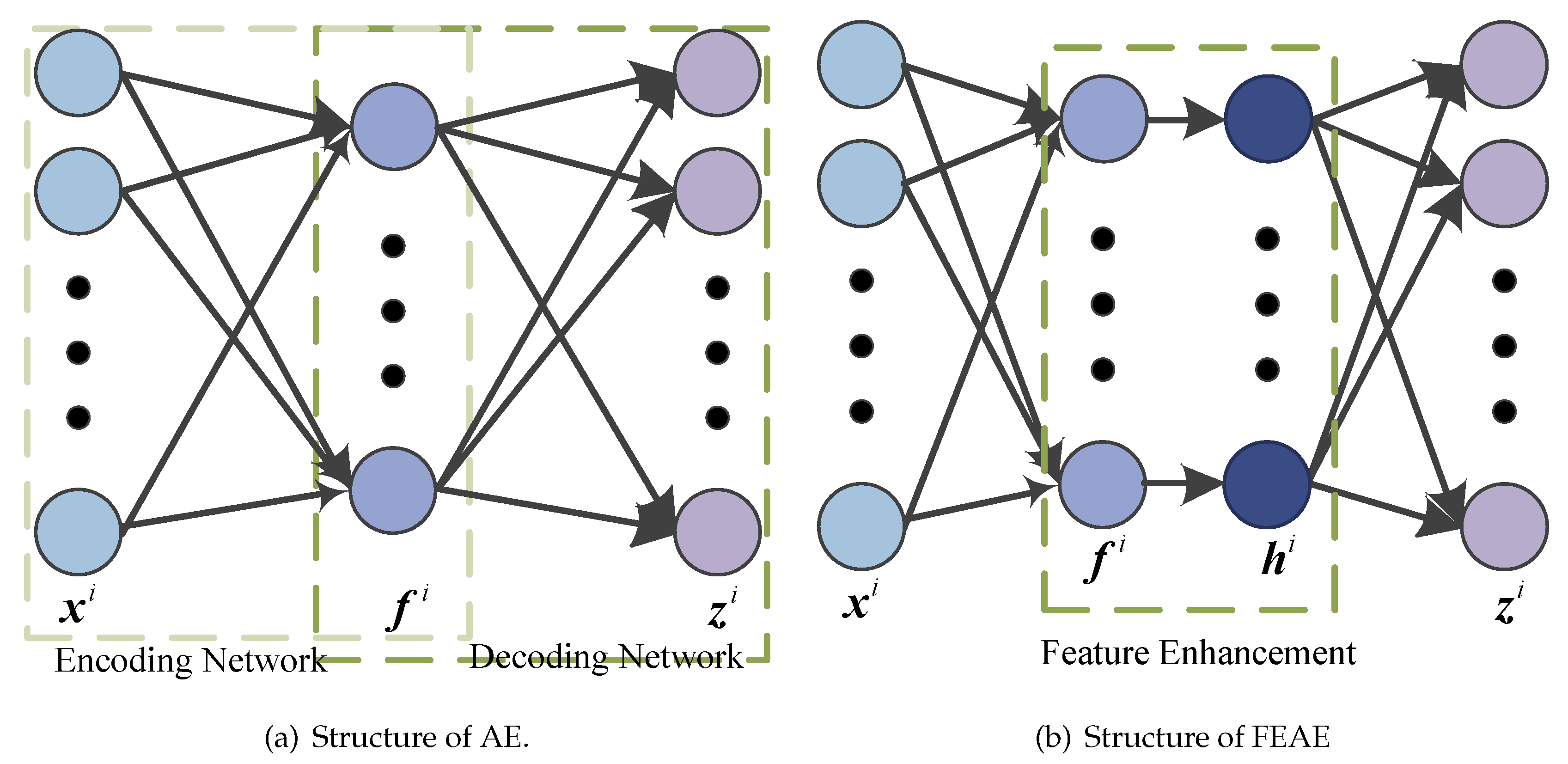

The autoencoder (AE) [13], as shown in Figure 1a, is a special DNN that can be divided into two parts: an encoding network and a decoding network. Consider a training sample set $\{x_m\}_{m=1}^{M}$, where $x_m \in \mathbb{R}^{n}$ and M is the number of training samples. The encoding network extracts a hidden feature $h_m$ from the input sample $x_m$, which is described as

$$h_m = f(W x_m + b) \quad (1)$$

where $W$ is the weight and $b$ is the bias. The decoding network maps the hidden feature $h_m$ to the reconstruction output $\hat{x}_m$, which is described as

$$\hat{x}_m = g(W' h_m + b') \quad (2)$$

where $W'$ is the weight and $b'$ is the bias. Here $f$ and $g$ represent the activation functions. The training process of the AE updates the parameters by minimizing the error between $x_m$ and $\hat{x}_m$ as follows:

$$J = \frac{1}{M} \sum_{m=1}^{M} \lVert x_m - \hat{x}_m \rVert^{2} \quad (3)$$

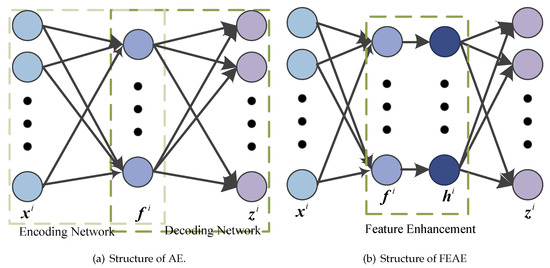

Figure 1. Structures of autoencoder (AE) and feature enhanced autoencoder (FEAE).

2.2. Denoising

Because of the complicated environment, the collected signals often contain strong background noise, so the performance of the AE on noisy signals needs to be improved. To learn more robust features, a noisy sample is constructed from each input sample by adding noise with a given noise adding probability. The noisy sample is then mapped through the encoding network and the decoding network to the reconstruction output. Finally, robust features are extracted by minimizing the error in Equation (3).
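The encoding, decoding, and reconstruction-error steps of Sections 2.1 and 2.2 can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it assumes sigmoid activations, a squared reconstruction error measured against the clean input, and a corruption rule in which each input entry receives Gaussian noise with probability `p`. The names `corrupt`, `dae_forward`, `p`, `sigma`, and all weight values are hypothetical and chosen only for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, v, b):
    # Compute W v + b for a matrix stored as a list of rows.
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def corrupt(x, p, sigma, rng):
    # Assumption: each entry receives Gaussian noise with probability p.
    return [xi + rng.gauss(0.0, sigma) if rng.random() < p else xi for xi in x]


def dae_forward(x, W, b, W_dec, b_dec, p, sigma, rng):
    x_noisy = corrupt(x, p, sigma, rng)                    # noise adding scheme
    h = [sigmoid(z) for z in affine(W, x_noisy, b)]        # encoding network
    x_hat = [sigmoid(z) for z in affine(W_dec, h, b_dec)]  # decoding network
    # Squared error between the clean input and the reconstruction.
    error = sum((a - c) ** 2 for a, c in zip(x, x_hat))
    return h, x_hat, error


# Toy input and weights (illustrative values only).
rng = random.Random(0)
x = [0.2, 0.8, 0.5, 0.1]
W = [[0.1, -0.2, 0.3, 0.0], [0.4, 0.1, -0.3, 0.2]]
b = [0.0, 0.1]
W_dec = [[0.2, -0.1], [0.0, 0.3], [0.5, 0.5], [-0.4, 0.1]]
b_dec = [0.0, 0.0, 0.0, 0.0]

h, x_hat, error = dae_forward(x, W, b, W_dec, b_dec, p=0.3, sigma=0.1, rng=rng)
print(len(h), len(x_hat), round(error, 4))
```

In an actual training loop the weights would be updated to reduce `error`; the sketch only shows a single forward pass.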

3. Proposed Method

In this section, the proposed DSFEAE-DF is first presented, including the FEAE, the dynamic feature enhanced factor, and the structure of DSFEAE-DF. Then, the fault diagnosis procedure is detailed.

3.1. Feature Enhancement

The features extracted by AEs are often not discriminative. That is, AEs greedily extract relatively trivial features to reconstruct the input samples. To overcome this shortcoming, one approach guides the AE to focus on important features by adding constraints in the training process via mutual competition and enhancement. In competition and enhancement, neurons in the hidden layer compete for the right to respond to the input samples, so the specialization of neurons increases and discriminative features can be extracted. Following this idea and inspired by Reference [31], feature enhancement is proposed as two processes, described below and detailed in Algorithm 1.

- (1) Competition: In the feature vector, the k most competitive neurons, those with the largest activation values, are selected as the "winners" of the competition, while the remaining "losers" are suppressed to 0.

- (2) Enhancement: To compensate for the energy loss caused by suppressing the "loser" neurons and to make the competition among neurons more pronounced, the average "loser" neuron energy is redistributed to the "winner" neurons through an energy enhanced factor, which achieves the enhancement. Given a feature enhanced factor, the number of most competitive neurons k and the energy enhanced factor are given by Equations (4) and (5).

| Algorithm 1 Feature enhancement function |

| Input: feature vector, feature enhanced factor, number of most competitive neurons k, energy enhanced factor. Output: enhanced feature vector. |
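The competition and enhancement processes can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the paper's Algorithm 1: Equations (4) and (5) are not reproduced here, so the sketch assumes that k is the feature enhanced factor `alpha` times the feature dimension (rounded up) and that each winner receives `beta` times the average loser activation. The names `feature_enhance`, `alpha`, and `beta` are hypothetical.

```python
import math


def feature_enhance(h, alpha, beta=1.0):
    """Keep the k largest activations, zero the rest, and boost the winners.

    Assumptions (not taken from the paper's Equations (4) and (5)):
    k = ceil(alpha * d), and each winner gains beta * mean(loser activations).
    """
    d = len(h)
    k = max(1, min(d, math.ceil(alpha * d)))

    # Competition: rank neurons by activation, largest first.
    order = sorted(range(d), key=lambda i: h[i], reverse=True)
    winners, losers = order[:k], order[k:]

    # Enhancement: redistribute the average loser energy to the winners.
    avg_loser = sum(h[i] for i in losers) / len(losers) if losers else 0.0
    enhanced = [0.0] * d  # losers stay suppressed at 0
    for i in winners:
        enhanced[i] = h[i] + beta * avg_loser
    return enhanced


# Four neurons, half of them allowed to win.
print(feature_enhance([0.9, 0.1, 0.5, 0.3], alpha=0.5))
```

With `alpha=0.5` and four neurons, the two largest activations survive and each is raised by the mean of the two suppressed activations, while the other two entries become 0.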

3.2. Feature Enhanced Autoencoder

According to the description of the AE in Section 2.1 and of feature enhancement in Section 3.1, the feature enhanced autoencoder (FEAE), shown in Figure 1b, is defined by Equations (1), (6) and (7), in which the hidden representation before enhancement is called the feature and the one after enhancement is called the enhanced feature. As an unsupervised feature extractor, the FEAE can extract discriminative features through feature enhancement. As with the AE, its parameters can be trained by minimizing Equation (3).

3.3. Dynamic Feature Enhanced Factor

As can be seen in Section 3.1, the feature enhanced factor, a hyperparameter, is a key factor of feature enhancement and represents the proportion of the k most competitive neurons among all neurons. With a large factor, k is also large, so too many features are enhanced and feature enhancement loses its significance. With a small factor, k is also small, so few features are enhanced and the remaining features are set to 0, which loses feature information. In the existing method [31], a fixed factor is chosen from prior knowledge and human judgment. Because the features are complex and diverse, however, a fixed factor cannot suit all of them, so a dynamic feature enhanced factor that is independent of human judgment is proposed as follows.

As for the training process, the batch size is B, the current batch is b, then the training sample set in current batch b is . Through the encoding network, the feature set can be obtained. Then, the similarity between the and the rest features in can be denoted as

where and . The average similarity of is calculated as

Meanwhile, the information amount I between feature and input sample is designed as

where d and n are the dimensions of and respectively. Finally, in current batch b, the dynamic feature enhanced factor is designed as

Furthermore, k and the energy enhanced factor can then be calculated by Equations (4) and (5).

Interpreting Equation (11), the dynamic feature enhanced factor consists of two terms. The first term is the average diversity of the features in the batch, which can be roughly regarded as the proportion of discriminative features. The second term, I, represents the information amount that the features carry from the input samples; it prevents too few features from being enhanced when the average diversity is small and ensures that enough features are enhanced to reconstruct the input. The dynamic feature enhanced factor is thus acquired adaptively in every training batch without prior knowledge.

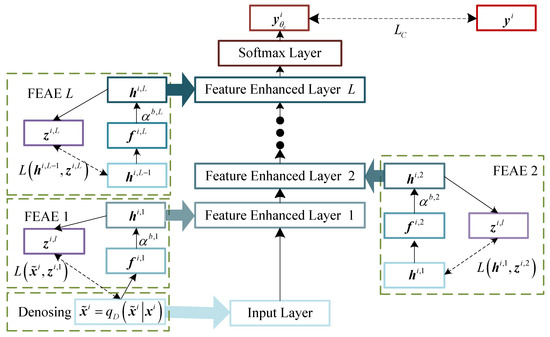

3.4. DSFEAE-DF Model

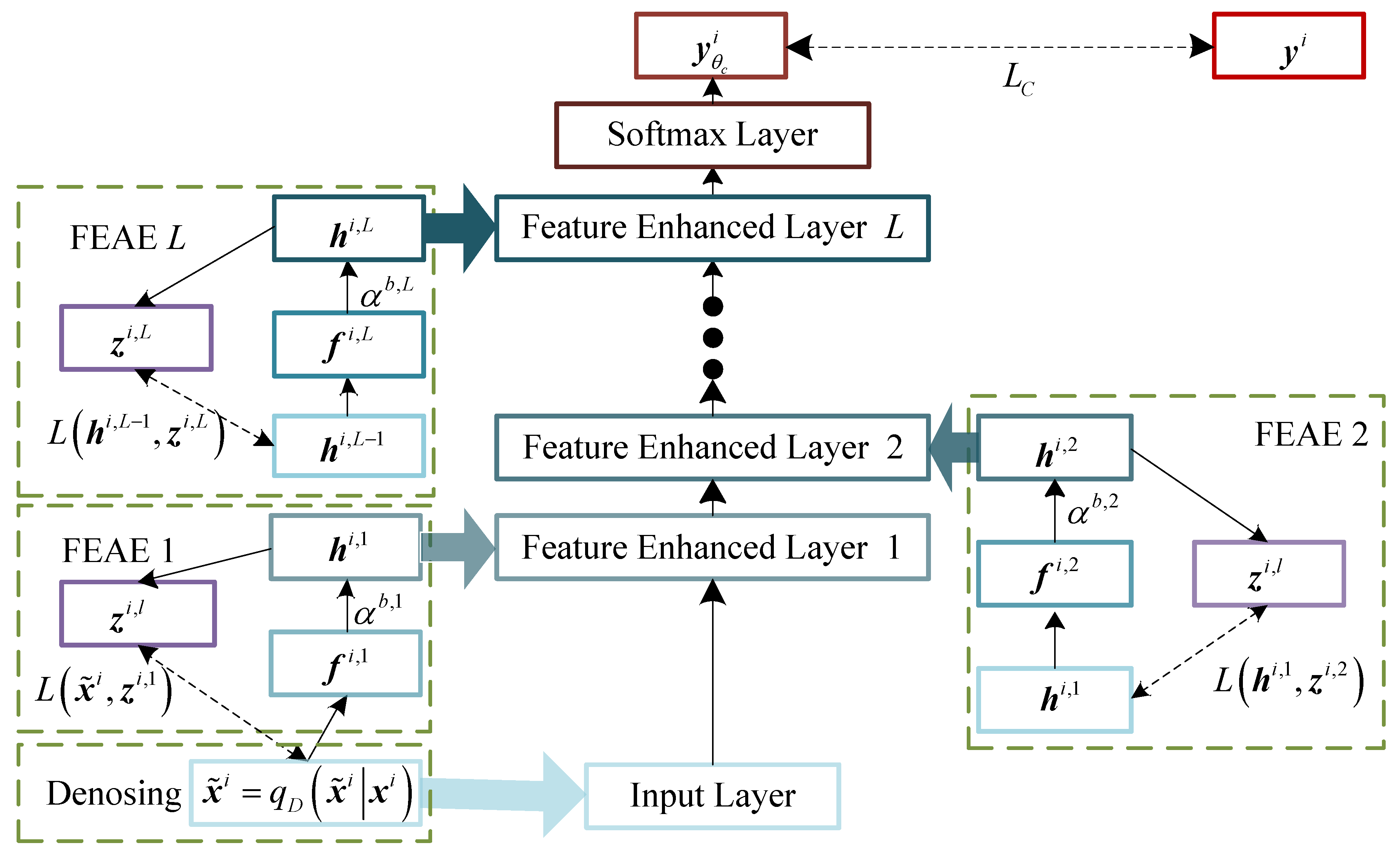

The proposed denoising stacked feature enhanced autoencoder with dynamic feature enhanced factor (DSFEAE-DF) model is a DNN with one noise adding scheme, multiple feature enhanced layers, and one softmax layer, as shown in Figure 2.

Figure 2. Structure of denoising stacked feature enhanced autoencoder with dynamic feature enhanced factor (DSFEAE-DF) model.

The feature enhanced layers are composed of a set of FEAEs that automatically extract discriminative enhanced features at different layers from the original signals. Suppose that the feature enhanced layers contain L FEAEs. For the first FEAE (l = 1), the input is the noisy sample, which means that the input of DSFEAE-DF is the noisy sample, and the enhanced features of the first FEAE are obtained by updating its parameters. For l > 1, the input of the l-th FEAE is the output of the (l-1)-th FEAE, and the parameters of the l-th FEAE are updated to obtain its enhanced features.

The softmax layer, whose input is the enhanced features of the last FEAE, is employed to predict the health condition of the input sample. Given the label of the sample, the discrepancy between the prediction and the label, with the label in one-hot form, is computed by the cross-entropy loss function in Equation (12) and minimized by updating the weights of the softmax layer.
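The role of the softmax layer and the cross-entropy loss with one-hot labels can be illustrated with a short Python sketch. It uses the standard textbook definitions of softmax and cross-entropy; the exact form of Equation (12), for example any averaging over a batch, is not reproduced here, and the helper names and the logit values are hypothetical.

```python
import math


def softmax(z):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def one_hot(label, num_classes):
    return [1.0 if c == label else 0.0 for c in range(num_classes)]


def cross_entropy(probs, target, eps=1e-12):
    # Standard cross-entropy between a one-hot target and predicted probabilities.
    return -sum(t * math.log(p + eps) for t, p in zip(target, probs))


# Toy logits for a three-class problem (illustrative values only).
logits = [2.0, 0.5, -1.0]
probs = softmax(logits)
loss_correct = cross_entropy(probs, one_hot(0, 3))
loss_wrong = cross_entropy(probs, one_hot(2, 3))
print(round(loss_correct, 4), round(loss_wrong, 4))
```

The loss is small when the predicted probability of the true class is high and grows as that probability shrinks, which is what the weight updates of the softmax layer exploit.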

Consequently, the stacked FEAE layers can obtain hierarchical non-linear features: low-level enhanced features are extracted in the lower layers and high-level enhanced features in the higher layers. Meanwhile, the dynamic feature enhanced factor of the l-th FEAE in every batch is smoothly embedded into the model and adaptively calculated by Equation (11) during the training process, so enhanced features are extracted automatically without any human judgment. Furthermore, the input of the first FEAE is the noisy sample produced by the noise adding scheme, which corresponds to the denoising described in Section 2.2.

3.5. DSFEAE-DF for Fault Diagnosis

The fault diagnosis procedure based on the DSFEAE-DF model includes two phases: a training phase and a testing phase. During the training phase, vibration signals of the different health conditions are collected, segmented, normalized, and fed into the established DSFEAE-DF model. The model is then trained to obtain the trained DSFEAE-DF. The detailed training process is shown in Algorithm 2. In the testing phase, new health condition signals are acquired, segmented, normalized, and fed into the trained model to obtain the diagnosis result.

| Algorithm 2 Training process of DSFEAE-DF |

| Input: dataset; model DSFEAE-DF; number of epochs E; batch size B; noise adding probability. Output: trained DSFEAE-DF model. |

4. Experiments and Verification

Since the bearing is a core component of WTs, a bearing dataset is used in this section to verify the effectiveness of the proposed method. All experiments are conducted on a computer with an AMD A8-5550M APU, Linux OS, and TensorFlow. Each experiment is repeated for 10 trials to reduce the influence of randomness.

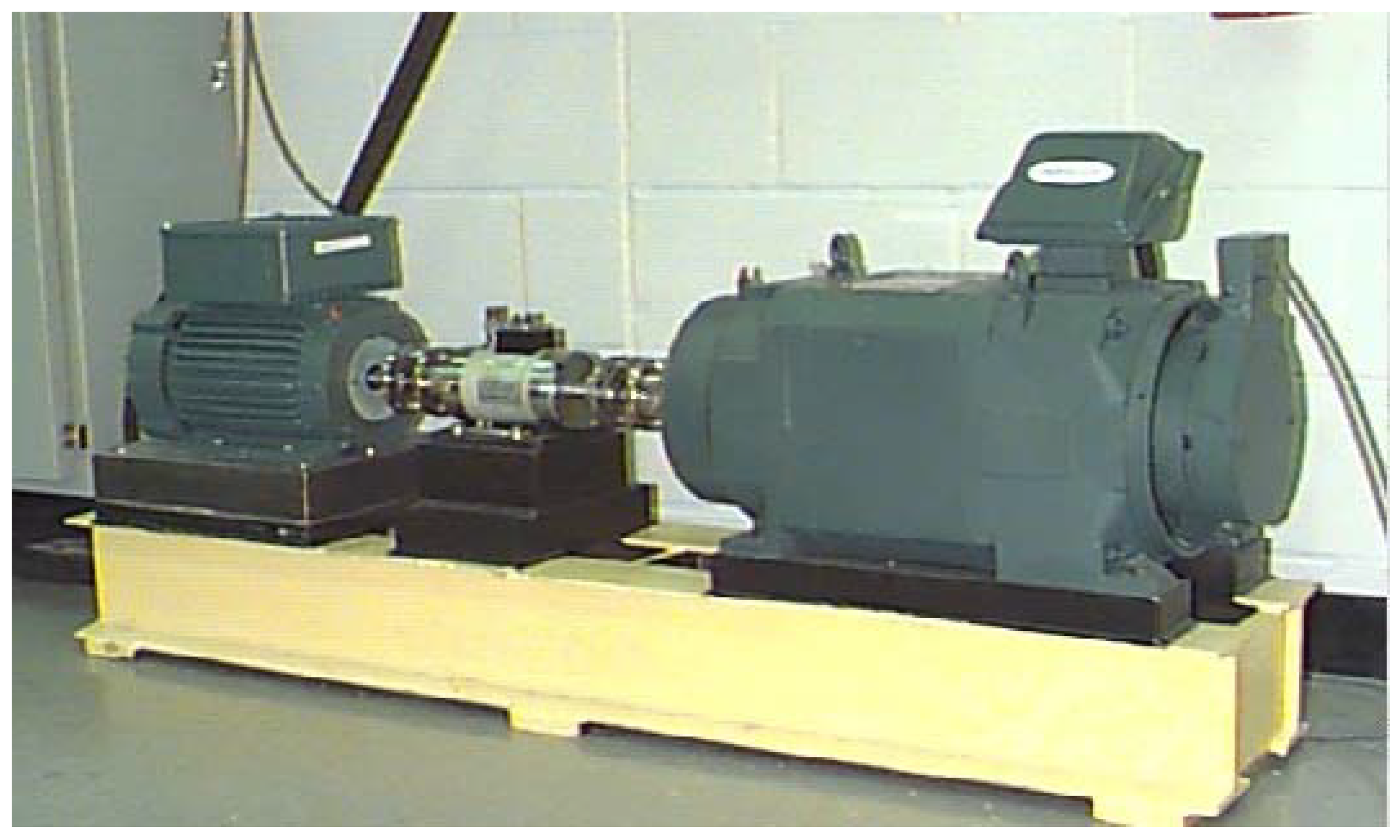

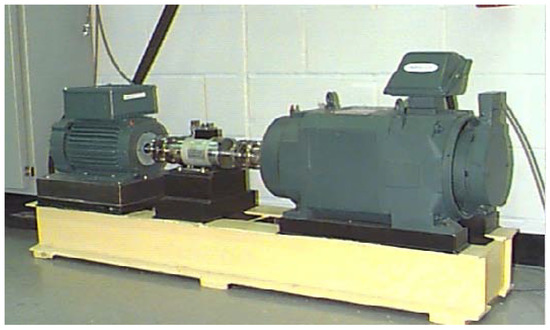

4.1. Data Description

The bearing vibration signals of Case Western Reserve University (CWRU) [32] are employed in this paper. All signals are obtained from artificially damaged bearings in the motor driving mechanical system shown in Figure 3. The signals under four fault locations (Normal, Ball, Inner race and Outer race) are collected by the acceleration sensors under four different loads with 48 kHz sampling frequency. For each fault location, three fault severities ( mm, mm and mm) are introduced, respectively. We use these situations to simulate actual bearing faults in WTs.

Figure 3. Motor driving mechanical system.

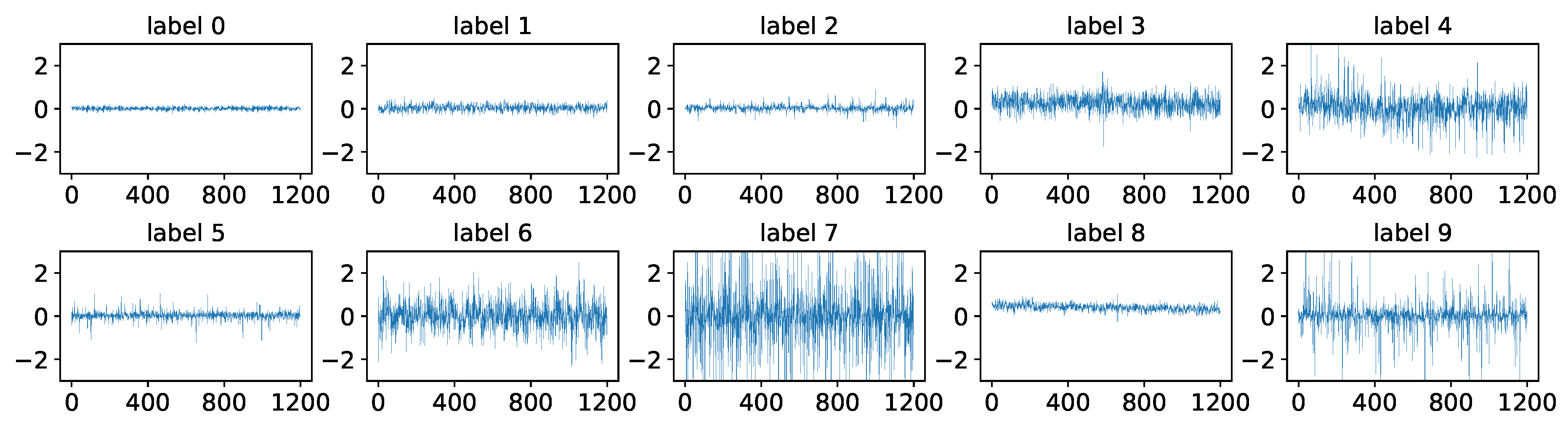

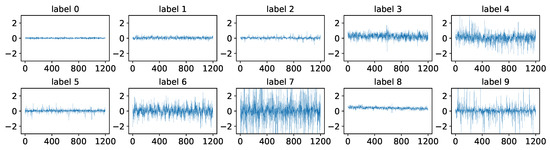

The vibration signal under one load and one fault location with one fault severity contains 240,000 data points over a 5 s sampling time. Through segmentation, 200 segments with a length of 1200 data points are obtained from one vibration signal. Applying the same operation to all signals forms the dataset, which is detailed in Table 1. The dataset contains a total of 10 health conditions. For each health condition, there are 800 segments (200 segments under each load), and each segment (also called a sample) contains 1200 data points, so the dataset contains a total of 8000 samples. Figure 4 provides examples of the 10 health condition samples.
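The segment counts above follow directly from the sampling frequency, the recording length, and the segment length. The following Python sketch checks that arithmetic with non-overlapping segmentation; the `segment` helper is hypothetical, and the all-zero signal is only a stand-in for a real vibration record.

```python
def segment(signal, seg_len):
    # Split a signal into non-overlapping segments of seg_len points.
    n_seg = len(signal) // seg_len
    return [signal[i * seg_len:(i + 1) * seg_len] for i in range(n_seg)]


fs = 48_000          # sampling frequency in Hz
duration = 5         # sampling time in seconds
signal = [0.0] * (fs * duration)  # placeholder for one vibration record

segments = segment(signal, 1200)

n_conditions = 10    # health conditions in the dataset
n_loads = 4          # loads per health condition
total_samples = n_conditions * n_loads * len(segments)

print(len(signal), len(segments), total_samples)
```

Each record yields 200 segments, each health condition 800 segments over the four loads, and the full dataset 8000 samples, matching the description.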

Table 1. Dataset description.

Figure 4. Each health condition sample.

4.2. Experimental Setup

The parameters of the DSFEAE-DF structure and training process have an impact on testing accuracy. To determine these parameters, exhaustive experiments on the bearing dataset are undertaken to obtain the optimized parameters and the testing accuracy is set to be the indicator. The parameters of five aspects under consideration are presented as follows.

- (1) The structure of DSFEAE-DF. Combining the structure in Reference [33] and the testing accuracy, the structure of DSFEAE-DF includes an input layer, three feature enhanced layers, and a softmax layer. The dimension of the input layer is equal to the dimension of the input sample, and the dimensions of the three feature enhanced layers are 600, 400, and 200, respectively. The number of nodes in the softmax layer is 10, which is the number of health conditions.

- (2) Activation function. After comparison with other nonlinear functions, for example, ReLU, Tanh, LogSig, and SoftSign, the Sigmoid function is used for both activation functions.

- (3) Noise adding probability. Gaussian noise is adopted in this paper. Combining the noise settings in Reference [1] and the testing accuracy, the noise adding probability is set as .

- (4) Optimization. Following Reference [34], Adam is adopted for stochastic optimization. The learning rates of the unsupervised training and the supervised fine-tuning are set to and , respectively. Considering both the degree and the speed of optimization, the number of epochs is set to 200 and the batch size is set to 100.

- (5) Training sample number. Following the setting in Reference [35], 7200 randomly selected samples are used for training and the remaining 800 are used for testing.
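The random split in item (5) can be sketched as a shuffled partition of the 8000 sample indices. This is a minimal illustration, assuming a simple uniform shuffle with a fixed seed; the helper name `split_indices` and the seed value are hypothetical, and the source does not state how the random selection was implemented.

```python
import random


def split_indices(n_samples, n_train, seed):
    # Shuffle all sample indices reproducibly, then cut into train and test.
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = split_indices(n_samples=8000, n_train=7200, seed=0)
print(len(train_idx), len(test_idx))
```

Changing the seed for each of the repeated trials would give a different partition per trial while keeping every individual run reproducible.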

To verify the effectiveness of the dynamic feature enhanced factor, we embed 9 fixed values of the feature enhanced factor into the feature enhancement of the DSFEAE and compare the results with DSFEAE-DF in fault diagnosis. In addition, to verify the performance of the proposed model in diagnosing similar faults and in diagnosing faults in noisy environments, other AE-based models with the same settings as DSFEAE-DF are employed for comparison. The details are as follows.

- (1) SAE: a stacked AE, a traditional model described in Reference [33];

- (2) K-sparse SAE: the stacked AE with K-sparse, where K-sparse, proposed in Reference [36], uses only the competition process and no enhancement process;

- (3) SDAE: the stacked denoising AE, a traditional model described in Reference [37];

- (4) DSFEAE-: the denoising stacked FEAE with the stable feature enhanced factor .

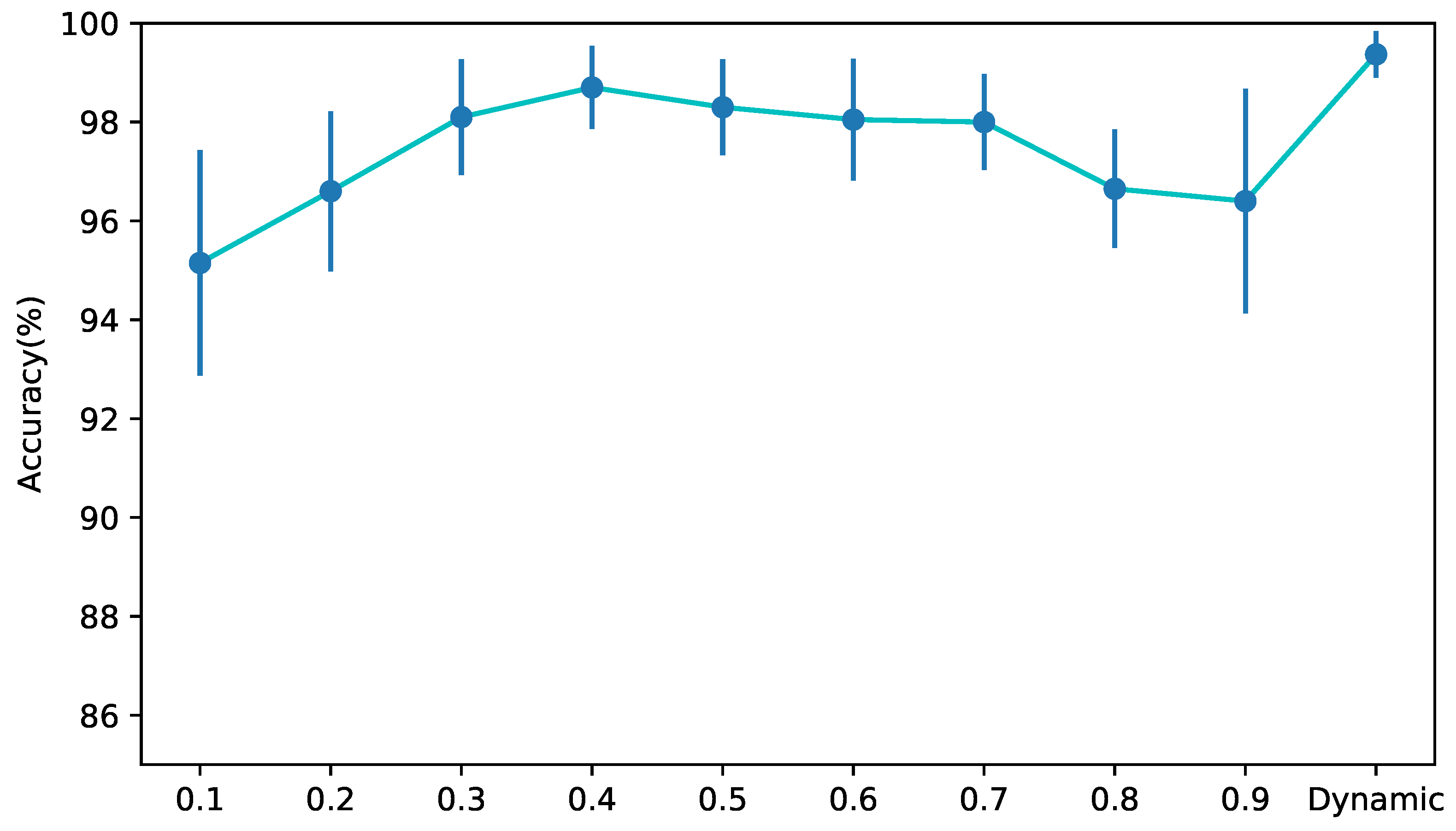

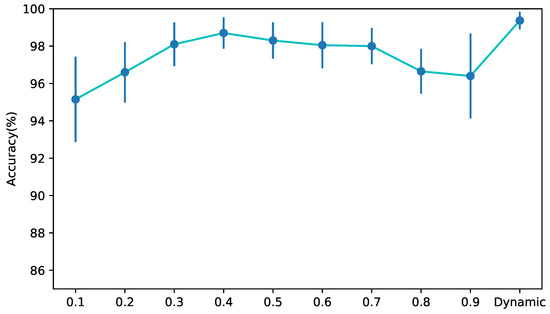

4.3. Effectiveness of Dynamic Feature Enhanced Factor

The dynamic feature enhanced factor is designed by considering both the diversity of the features in a training batch and the information amount between feature and input in the current feature enhanced layer, and it is smoothly embedded into the training process. To verify its effectiveness, 9 fixed values of the feature enhanced factor are embedded in the feature enhancement of the DSFEAE for comparison. The diagnosis results for the manually set values and the dynamic factor are shown in Figure 5. It can be seen that as the value increases from to , the testing accuracy continuously increases, while as the value increases from to , the testing accuracy continuously decreases. This is because a small value means that only a small number of features are enhanced and little information is contained in the enhanced features, which makes it difficult to reconstruct the input samples, whereas too large a value means that most of the features are enhanced, so feature enhancement loses its significance. The DSFEAE-DF uses the dynamic feature enhanced factor, which adaptively selects features and enhances them. The accuracy of DSFEAE-DF is higher than that of DSFEAE with any stable value, and its standard deviation is also smaller. These results verify the effectiveness of the dynamic feature enhanced factor.

Figure 5. Accuracies of DSFEAE with a stable feature enhanced factor and DSFEAE-DF.

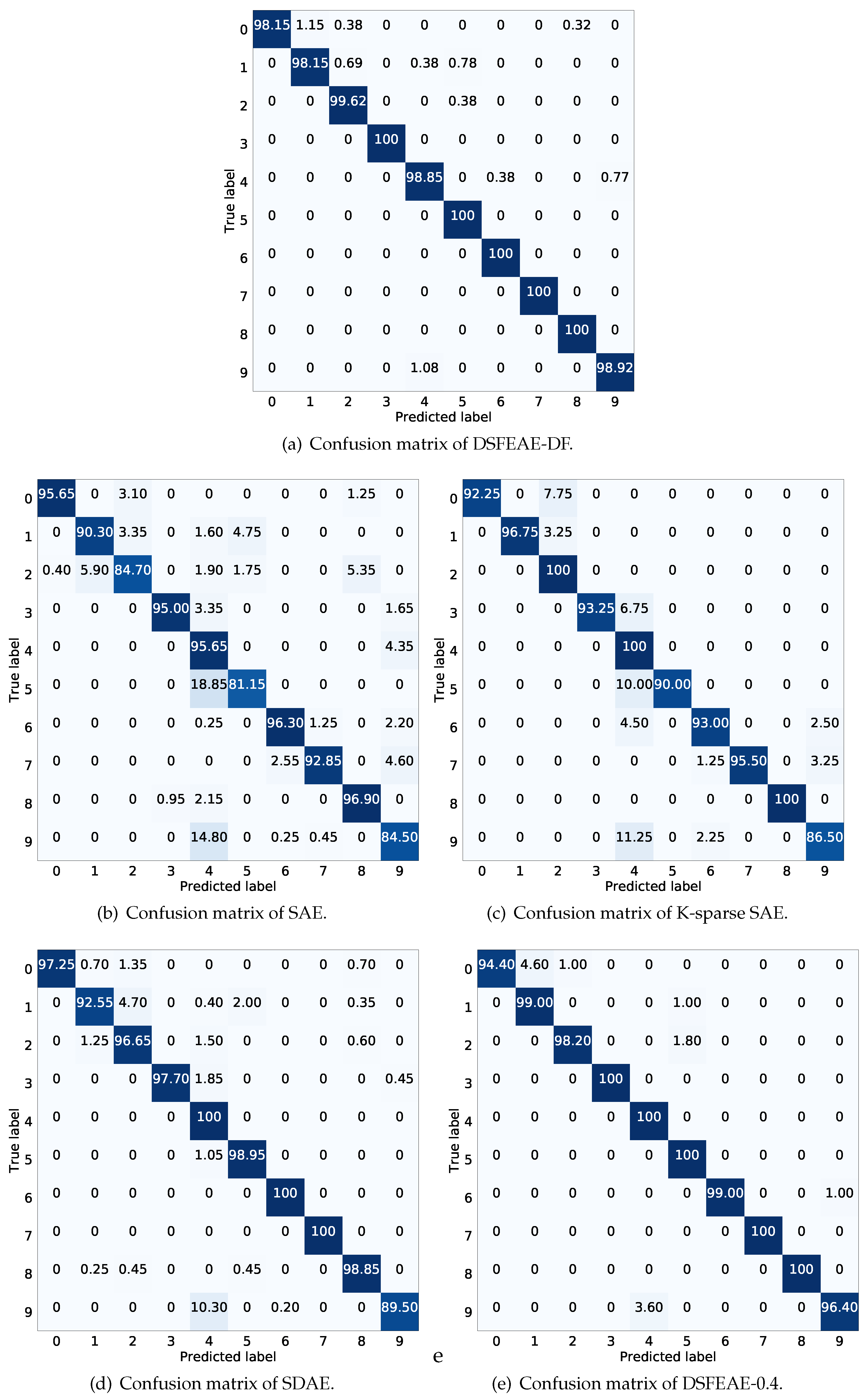

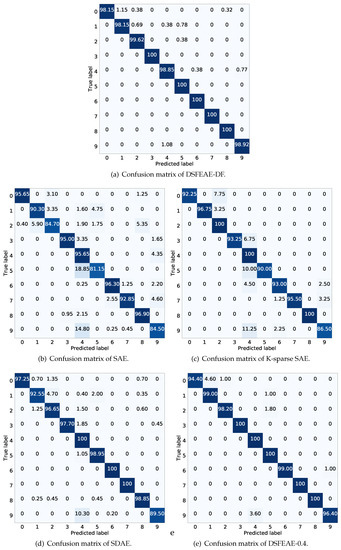

4.4. Performance of Fault Diagnosis

In this subsection, the performances of fault diagnosis and similar fault diagnosis are discussed. Table 2 shows the diagnosis results of the five models. The SAE achieves the worst accuracy of with a standard deviation of . K-sparse SAE, SDAE and DSFEAE- acquire the middle accuracies, which are , and with standard deviations of , and . It can be seen that DSFEAE-DF obtains the highest accuracy of and the lowest standard deviation of , which indicates the fault diagnosis performance of DSFEAE-DF is superior and stable.

Table 2. Fault diagnosis results.

Further, we explore the performance of similar fault diagnosis. The confusion matrices of the testing results, which detail the classification of each health condition, are drawn in Figure 6. According to Figure 4, the samples of label 4 and label 9 are similar, so distinguishing label 4 from label 9 is challenging. In Figure 6, of samples of label 9 are misclassified as label 4 in SAE, in K-sparse SAE, in SDAE, in DSFEAE-, but only in DSFEAE-DF. Similar situations also occur between label 1 and label 2 and between label 3 and label 4, which confirms the capability of DSFEAE-DF to classify similar faults. Meanwhile, not only the average accuracy but also the accuracy of each label in DSFEAE-DF is generally higher than with the other models. All these results illustrate the superiority of DSFEAE-DF in diagnosing similar faults.
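The per-label misclassification rates discussed above are read off a confusion matrix. The following Python sketch shows how such a rate can be computed; the labels and predictions are synthetic toy data, not results from the paper, and the helper names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    # cm[t][p] counts samples with true label t that were predicted as p.
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def misclassification_rate(cm, true_label, pred_label):
    # Fraction of samples of true_label that were predicted as pred_label.
    row_total = sum(cm[true_label])
    return cm[true_label][pred_label] / row_total if row_total else 0.0


# Synthetic two-class example (toy data only).
y_true = [0, 0, 1, 1, 1, 1]
y_pred = [0, 1, 1, 1, 0, 1]

cm = confusion_matrix(y_true, y_pred, num_classes=2)
rate = misclassification_rate(cm, true_label=1, pred_label=0)
print(cm, rate)
```

For the ten health conditions in this paper, `num_classes` would be 10 and the rate of label 9 predicted as label 4 would be `misclassification_rate(cm, 9, 4)`.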

Figure 6. Confusion matrices of five models.

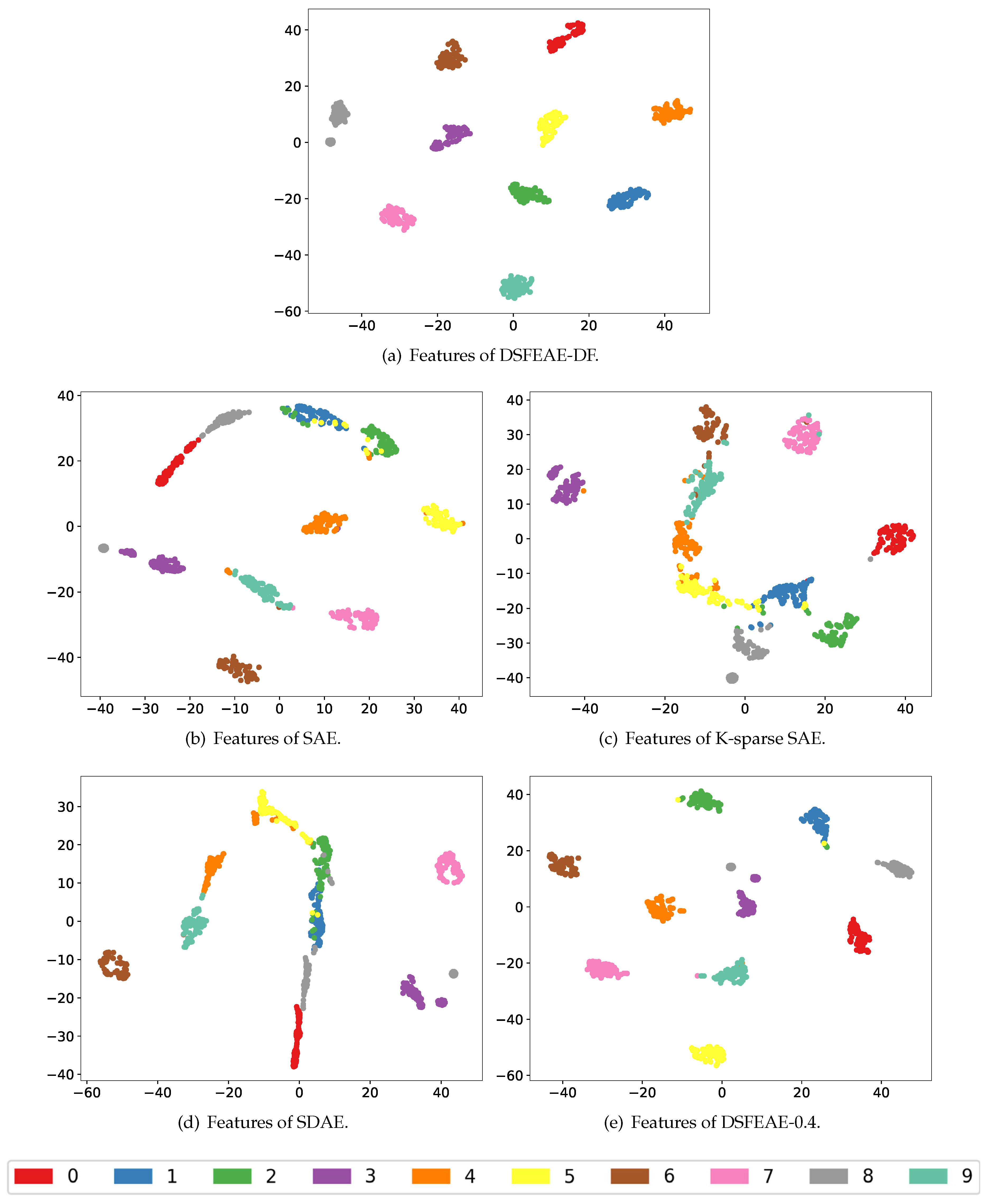

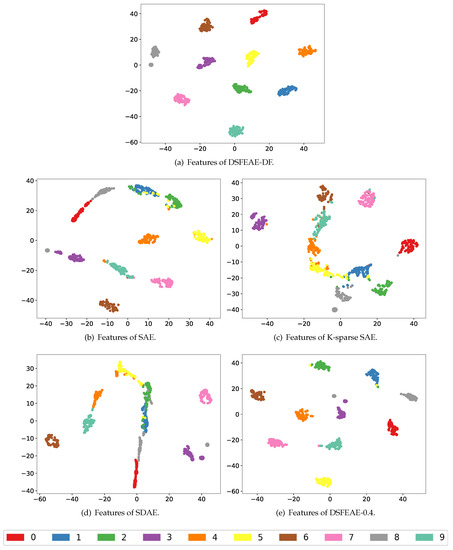

In Figure 7, t-distributed stochastic neighbor embedding (t-SNE) [38] is used to map the features of the last feature enhanced layer to two dimensions, thereby displaying the fault diagnosis results more intuitively. It can be seen that the features of SAE, K-sparse SAE, and SDAE partially overlap and the clustering effect is not satisfactory. In DSFEAE-, the clustering effect is good but some similar faults overlap slightly, which matches the confusion matrix shown in Figure 6e. For DSFEAE-DF, the features of the same health condition are clustered together, and the separation between the features of different health conditions is clearer. These observations further support the superiority of DSFEAE-DF.

Figure 7. Features of five models.

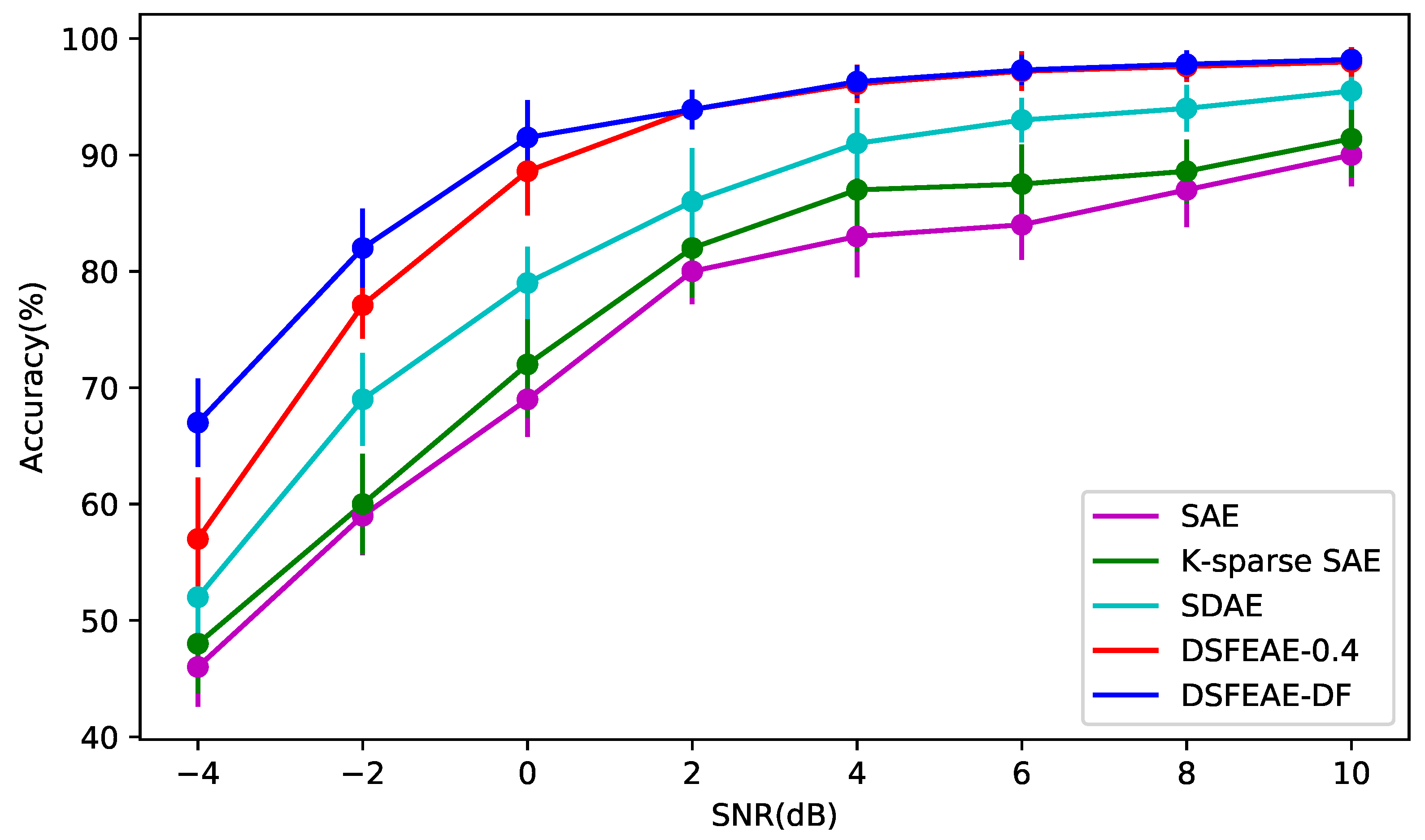

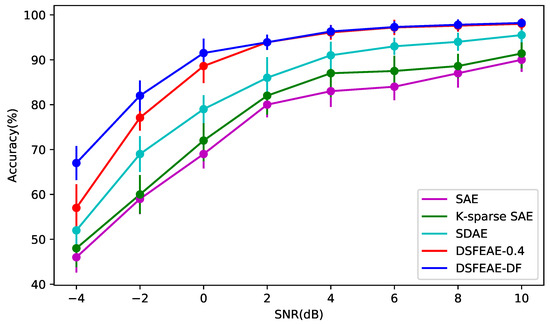

4.5. Performance Under Noise Environment

To verify the superiority of the proposed method in real WTs, additive white Gaussian noise is added to the testing samples to synthesize signals with different signal-to-noise ratios (SNRs) and thereby simulate actual working conditions. The SNR, in decibels, is defined as follows:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right)$$

where $P_{\mathrm{signal}}$ and $P_{\mathrm{noise}}$ are the power of the original signal and the noise, respectively.
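Synthesizing a test signal at a prescribed SNR can be sketched in Python as follows. The sketch uses the standard decibel definition of SNR as the ratio of signal power to noise power; as a design choice of this sketch (an assumption, not something stated in the paper), the Gaussian noise is rescaled so that the realized SNR matches the target exactly rather than only in expectation. The function names and the sine test signal are hypothetical.

```python
import math
import random


def power(x):
    # Mean squared value of a sequence.
    return sum(v * v for v in x) / len(x)


def add_awgn(signal, target_snr_db, rng):
    # Draw white Gaussian noise, then rescale it to hit the target SNR.
    raw = [rng.gauss(0.0, 1.0) for _ in signal]
    target_noise_power = power(signal) / (10 ** (target_snr_db / 10))
    scale = math.sqrt(target_noise_power / power(raw))
    noise = [scale * v for v in raw]
    noisy = [s + n for s, n in zip(signal, noise)]
    return noisy, noise


def measure_snr_db(signal, noise):
    return 10 * math.log10(power(signal) / power(noise))


# Toy signal: a sampled sine wave standing in for a vibration segment.
signal = [math.sin(0.05 * i) for i in range(1200)]
noisy, noise = add_awgn(signal, target_snr_db=0.0, rng=random.Random(0))
print(round(measure_snr_db(signal, noise), 6))
```

At an SNR of 0 dB the noise carries the same power as the signal, and negative values mean the noise is stronger than the signal.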

Experiments with SAE, K-sparse SAE, SDAE, DSFEAE- and DSFEAE-DF under different noise environments are conducted, and the results are shown in Table 2 and Figure 8. According to Figure 8, when the SNR is 0, the DSFEAE-DF accuracy is as high as , which is much higher than those of the other four models. When the SNR is less than 0, the accuracy gaps between DSFEAE-DF and the other four models are obvious. The standard deviations of DSFEAE-DF are smaller than those of the other four methods, which indicates strong stability. When the SNR is greater than 0, the accuracies of DSFEAE-DF are still much higher than those of SAE, K-sparse SAE, and SDAE. Meanwhile, the accuracy gaps between DSFEAE-DF and DSFEAE- become tiny, but DSFEAE-DF still has an advantage: when SNR = 10, the accuracies of DSFEAE-DF and DSFEAE- are and , respectively. These results verify the superiority of the proposed method in noisy environments.

Figure 8. Accuracies with different SNR.

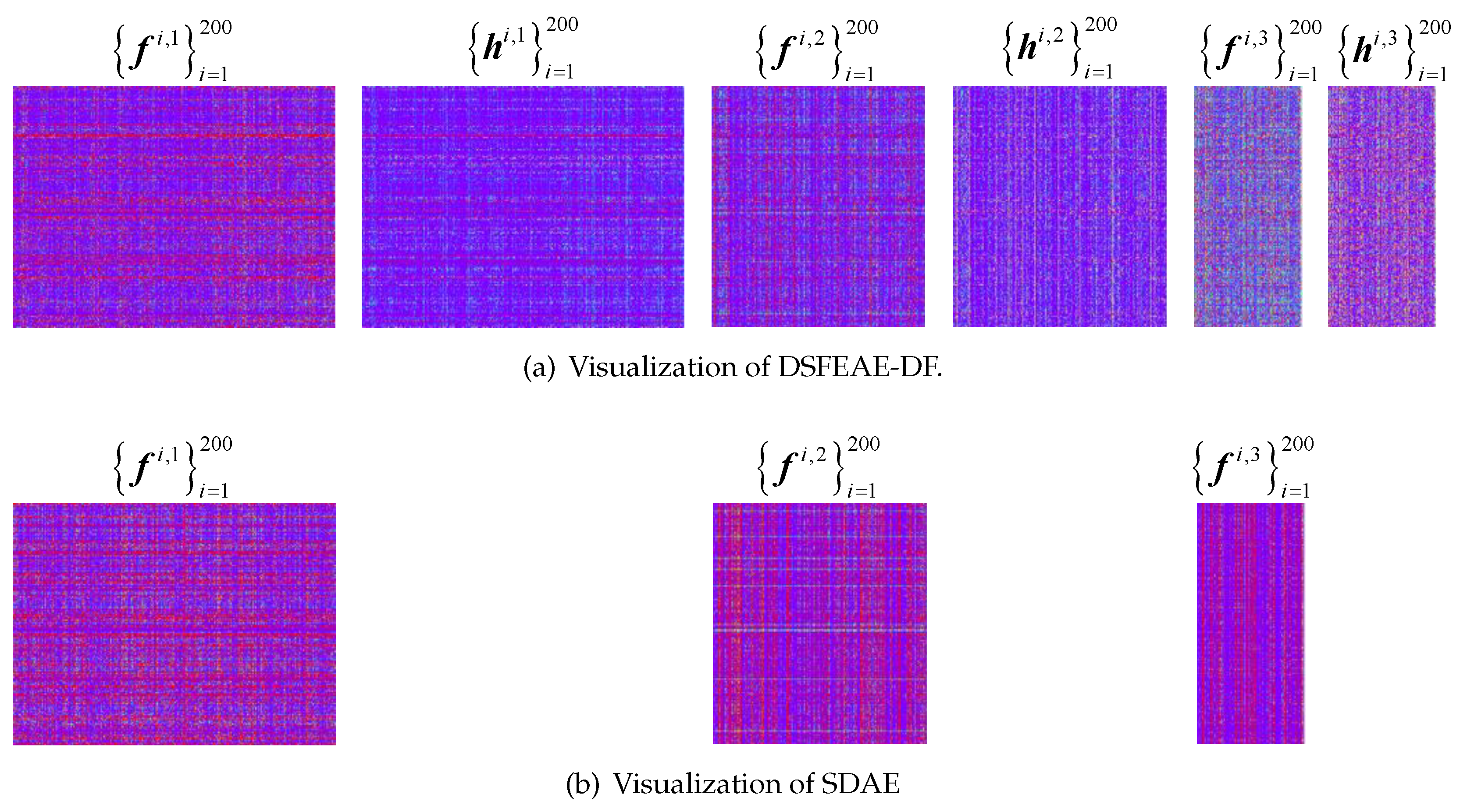

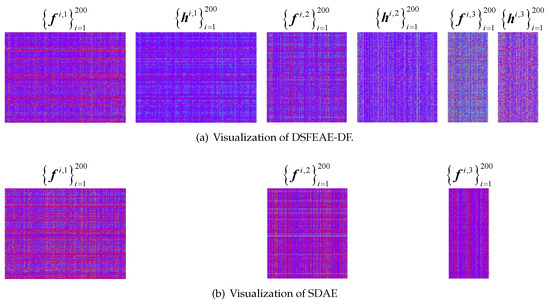

4.6. Visualization of Network

To visualize the reactions of neurons and gain some insight into feature enhancement, the features of 200 random testing samples at each hidden layer of the DSFEAE-DF model and the SDAE model are shown in Figure 9. In Figure 9a, the , and mean the features of the l-th FEAE, and , and represent the enhanced features of the l-th FEAE, respectively. In Figure 9b, the , and mean the features of the l-th DAE, respectively. In Figure 9b, a large number of features in , and are slightly activated, which carries too much redundant information, increases the similarity of similar fault features, and seriously degrades the diagnosis of similar faults. In Figure 9a, by contrast, a certain number of features in and are enhanced to , in the first and second feature enhanced layers, and the remaining features are suppressed, which increases the feature diversity between different samples. In the third FEAE, most of the features in are enhanced with a relatively large feature enhanced factor. This is because the number of features keeps decreasing with dimension reduction, but sufficient features must be retained and enhanced to prevent loss of sample information. These visualizations provide insight into feature enhancement.

Figure 9. Visualization of DSFEAE-DF and stacked denoising autoencoder (SDAE).

5. Conclusions

In this paper, a novel model called the denoising stacked feature enhanced autoencoder with dynamic feature enhanced factor (DSFEAE-DF) is proposed for fault diagnosis. The model integrates a noise adding scheme, stacked feature enhanced autoencoders, and a dynamic feature enhanced factor to discover more discriminative features from raw signals. Compared with traditional approaches, such as SAE, K-sparse SAE, SDAE and DSFEAE-, the proposed method achieves superior performance in diagnosing similar faults and in diagnosing faults in noisy environments. In addition, the reactions of neurons are visualized to provide insight into feature enhancement.

Author Contributions

Conceptualization, X.N. and S.L.; methodology, S.L.; software, X.N. and S.L.; validation, X.N. and S.L.; writing–original draft preparation, X.N.; writing–review and editing, X.N.; supervision, G.X.; project administration, G.X.; funding acquisition, X.N. and G.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Research and Development Plan of Shanxi Province (Grant No. 201703D111027), Shanxi International Cooperation Project (Grant No. 201803D421039, Grant No. 201903D421045), and the Foundation of Shanxi Key Laboratory of Advanced Control and Equipment Intelligence (Grant No. ACEI202001).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Wu, X.; Jiang, G.; Wang, X.; Xie, P.; Li, X. A Multi-Level-Denoising Autoencoder Approach for Wind Turbine Fault Detection. IEEE Access 2019, 7, 59376–59387.
2. Qiao, W.; Lu, D. A Survey on Wind Turbine Condition Monitoring and Fault Diagnosis Part I Components and Subsystems. IEEE Trans. Ind. Electron. 2015, 62, 6536–6545.
3. Mao, W.; Wang, L.; Feng, N. A New Fault Diagnosis Method of Bearings Based on Structural Feature Selection. Electronics 2019, 8, 1406.
4. Zhang, Z.; Li, S.; Wang, J.; Xin, Y.; An, Z. General normalized sparse filtering: A novel unsupervised learning method for rotating machinery fault diagnosis. Mech. Syst. Sig. Process. 2019, 124, 596–612.
5. Zhang, W.; Li, X.; Ding, Q. Deep residual learning-based fault diagnosis method for rotating machinery. ISA Trans. 2019, 95, 295–305.
6. Hoang, D.T.; Kang, H.J. Rolling element bearing fault diagnosis using convolutional neural network and vibration image. Cognitive Syst. Res. 2019, 53, 42–50.
7. Glowacz, A. Fault diagnosis of single-phase induction motor based on acoustic signals. Mech. Syst. Sig. Process. 2019, 117, 65–80.
8. Glowacz, A.; Glowacz, W.; Glowacz, Z.; Kozik, J. Early fault diagnosis of bearing and stator faults of the single-phase induction motor using acoustic signals. Measurement 2018, 113, 1–9.
9. Alian, H.; Konforty, S.; Ben-Simon, U.; Klein, R.; Tur, M.; Bortman, J. Bearing fault detection and fault size estimation using fiber-optic sensors. Mech. Syst. Sig. Process. 2019, 120, 392–407.
10. Zhu, J.; Yoon, J.M.; He, D.; Bechhoefer, E. Online particle-contaminated lubrication oil condition monitoring and remaining useful life prediction for wind turbines. Wind Energy 2015, 18, 1131–1149.
11. Wang, J.; Qiao, W.; Qu, L. Wind turbine bearing fault diagnosis based on sparse representation of condition monitoring signals. IEEE Trans. Ind. Appl. 2018, 55, 1844–1852.
12. Lu, D.; Qiao, W.; Gong, X. Current-based gear fault detection for wind turbine gearboxes. IEEE Trans. Sustain. Energy 2017, 8, 1453–1462.
13. Yu, J. A selective deep stacked denoising autoencoders ensemble with negative correlation learning for gearbox fault diagnosis. Comput. Ind. 2019, 108, 62–72.
14. Wang, J.; Mo, Z.; Zhang, H.; Miao, Q. A Deep Learning Method for Bearing Fault Diagnosis Based on Time-Frequency Image. IEEE Access 2019, 7, 42373–42383.
15. Wang, H.; Li, S.; Song, L.; Cui, L. A novel convolutional neural network based fault recognition method via image fusion of multi-vibration-signals. Comput. Ind. 2019, 105, 182–190.
16. Razavi-Far, R.; Hallaji, E.; Farajzadeh-Zanjani, M.; Saif, M.; Kia, S.H.; Henao, H.; Capolino, G.A. Information Fusion and Semi-Supervised Deep Learning Scheme for Diagnosing Gear Faults in Induction Machine Systems. IEEE Trans. Ind. Electron. 2019, 66, 6331–6342.
17. Zeng, M.; Zhang, W.; Chen, Z. Group-Based K-SVD Denoising for Bearing Fault Diagnosis. IEEE Sens. J. 2019, 19, 6335–6343.
18. Mao, W.; Zhang, D.; Tian, S.; Tang, J. Robust Detection of Bearing Early Fault Based on Deep Transfer Learning. Electronics 2020, 9, 323.
19. Wang, X.; He, H.; Li, L. A Hierarchical Deep Domain Adaptation Approach for Fault Diagnosis of Power Plant Thermal System. IEEE Trans. Ind. Inf. 2019, 15, 5139–5148.
20. Pang, S.; Yang, X. A Cross-Domain Stacked Denoising Autoencoders for Rotating Machinery Fault Diagnosis Under Different Working Conditions. IEEE Access 2019, 7, 77277–77292.
21. Lei, Y.; Jia, F.; Lin, J.; Xing, S.; Ding, S.X. An Intelligent Fault Diagnosis Method Using Unsupervised Feature Learning Towards Mechanical Big Data. IEEE Trans. Ind. Electron. 2016, 63, 3137–3147.
22. Qiu, G.; Gu, Y.; Cai, Q. A deep convolutional neural networks model for intelligent fault diagnosis of a gearbox under different operational conditions. Measurement 2019, 145, 94–107.
23. Jiang, G.; He, H.; Yan, J.; Xie, P. Multiscale Convolutional Neural Networks for Fault Diagnosis of Wind Turbine Gearbox. IEEE Trans. Ind. Electron. 2019, 66, 3196–3207.
24. Liu, H.; Zhou, J.; Zheng, Y.; Jiang, W.; Zhang, Y. Fault diagnosis of rolling bearings with recurrent neural network-based autoencoders. ISA Trans. 2018, 77, 167–178.
25. Jiao, J.; Zhao, M.; Lin, J.; Zhao, J. A multivariate encoder information based convolutional neural network for intelligent fault diagnosis of planetary gearboxes. Knowl.-Based Syst. 2018, 160, 237–250.
26. Li, Z.; Yin, Z.; Tang, T.; Gao, C. Fault Diagnosis of Railway Point Machines Using the Locally Connected Autoencoder. Appl. Sci. 2019, 9, 5139.
27. Jia, F.; Lei, Y.; Guo, L.; Lin, J.; Xing, S. A neural network constructed by deep learning technique and its application to intelligent fault diagnosis of machines. Neurocomputing 2018, 272, 619–628.
28. Jiang, G.; He, H.; Xie, P.; Tang, Y. Stacked multilevel-denoising autoencoders: A new representation learning approach for wind turbine gearbox fault diagnosis. IEEE Trans. Instrum. Meas. 2017, 66, 2391–2402.
29. Shen, C.; Qi, Y.; Wang, J.; Cai, G.; Zhu, Z. An automatic and robust features learning method for rotating machinery fault diagnosis based on contractive autoencoder. Eng. Appl. Artif. Intell. 2018, 76, 170–184.
30. San Martin, G.; López Droguett, E.; Meruane, V.; das Chagas Moura, M. Deep variational auto-encoders: A promising tool for dimensionality reduction and ball bearing elements fault diagnosis. Struct. Health Monit. 2019, 18, 1092–1128.
31. Chen, Y.; Zaki, M.J. Kate: K-competitive autoencoder for text. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; ACM: Halifax, BC, Canada, 2017; pp. 85–94.
32. Zhu, Z.; Peng, G.; Chen, Y.; Gao, H. A convolutional neural network based on a capsule network with strong generalization for bearing fault diagnosis. Neurocomputing 2019, 323, 62–75.
33. Jia, F.; Lei, Y.; Lin, J.; Zhou, X.; Lu, N. Deep neural networks: A promising tool for fault characteristic mining and intelligent diagnosis of rotating machinery with massive data. Mech. Syst. Sig. Process. 2016, 72, 303–315.
34. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
35. Wang, J.; Fu, P.; Zhang, L.; Gao, R.X.; Zhao, R. Multilevel Information Fusion for Induction Motor Fault Diagnosis. IEEE/ASME Trans. Mechatron. 2019, 24, 2139–2150.
36. Lan, R.; Li, Z.; Liu, Z.; Gu, T.; Luo, X. Hyperspectral image classification using k-sparse denoising autoencoder and spectral–restricted spatial characteristics. Appl. Soft Comput. 2019, 74, 693–708.
37. Lu, C.; Wang, Z.Y.; Qin, W.L.; Ma, J. Fault diagnosis of rotary machinery components using a stacked denoising autoencoder-based health state identification. Signal Process. 2017, 130, 377–388.
38. Maaten, L.v.D.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).