Priority-Based Bandwidth Management in Virtualized Software-Defined Networks

Abstract

1. Introduction

- a novel bandwidth management strategy

- a detailed description of the proposed priority-based admission control

- an implementation of the proposed approach and a discussion of the results obtained through experimental performance assessments.

2. Background

- Decoupling of the control and data planes. Network devices (e.g., switches) become simple forwarding elements, since the network logic is provided by the SDN controller.

- Moving the control logic to an external entity. The SDN controller provides the key resources and abstractions that make forwarding devices easy to program, based on a logically centralized view of the network.

- Network programmability. The network is programmed through software applications that run on top of the SDN controller, which in turn interacts with the underlying network devices.

- Flow-based forwarding. A flow is a sequence of packets exchanged between a source and a destination; it is defined by a set of packet field values, acting as a match (filter) criterion, and a set of actions (instructions). Forwarding devices handle all the packets of a flow in the same way, so forwarding rules are flow-based rather than destination-based. As a result, flow programming offers high flexibility and enables a unified behavior across different types of network devices.
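
The flow-based forwarding model described above can be illustrated with a minimal Python sketch under simplifying assumptions: a flow entry pairs a match (header-field values, where fields left out act as wildcards) with a list of actions, and, as in OpenFlow, a packet is handled by the highest-priority entry whose match it satisfies. The field names, action strings, table-miss behaviour and the `FlowEntry`/`lookup` helpers are hypothetical and do not reproduce the OpenFlow wire format or any controller API.

```python
from dataclasses import dataclass, field


@dataclass
class FlowEntry:
    """One flow-table entry: match fields, actions and an entry priority (hypothetical model)."""
    match: dict                                   # header field -> required value; absent fields are wildcards
    actions: list = field(default_factory=list)   # instructions applied to every packet of the flow
    priority: int = 0                             # higher value wins when several entries match


def matches(entry: FlowEntry, packet: dict) -> bool:
    """A packet matches when every field listed in the entry's match has the same value."""
    return all(packet.get(name) == value for name, value in entry.match.items())


def lookup(table: list, packet: dict) -> list:
    """Return the actions of the highest-priority matching entry, or an assumed table-miss action."""
    candidates = [entry for entry in table if matches(entry, packet)]
    if not candidates:
        return ["send_to_controller"]  # assumed table-miss behaviour, for illustration only
    return max(candidates, key=lambda entry: entry.priority).actions


table = [
    FlowEntry({"eth_type": 0x0800, "ipv4_dst": "10.0.0.2"}, ["output:2"], priority=10),
    FlowEntry({"eth_type": 0x0800, "ipv4_dst": "10.0.0.2", "vlan_pcp": 7},
              ["set_queue:7", "output:2"], priority=20),
]

# Same destination, different treatment depending on the full set of matched fields.
print(lookup(table, {"eth_type": 0x0800, "ipv4_dst": "10.0.0.2", "vlan_pcp": 7}))
print(lookup(table, {"eth_type": 0x0800, "ipv4_dst": "10.0.0.2", "vlan_pcp": 0}))
print(lookup(table, {"eth_type": 0x0806}))
```

Because the lookup keys on several header fields rather than on the destination alone, two packets addressed to the same host can be handled differently, which is the flexibility that flow-based forwarding provides.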

3. Related Work

4. PrioSDN_RM: Bandwidth Management Strategy

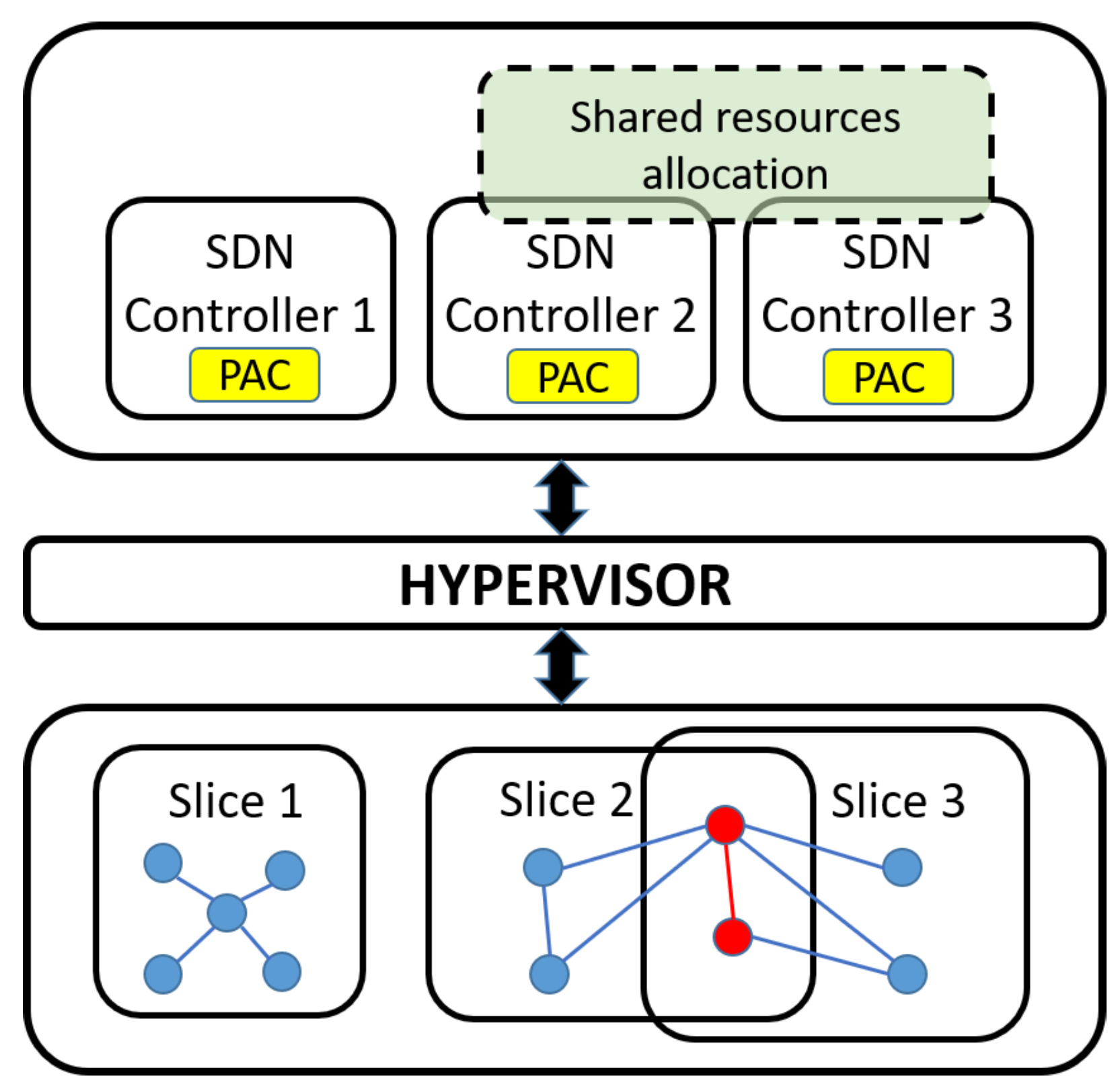

4.1. System Design

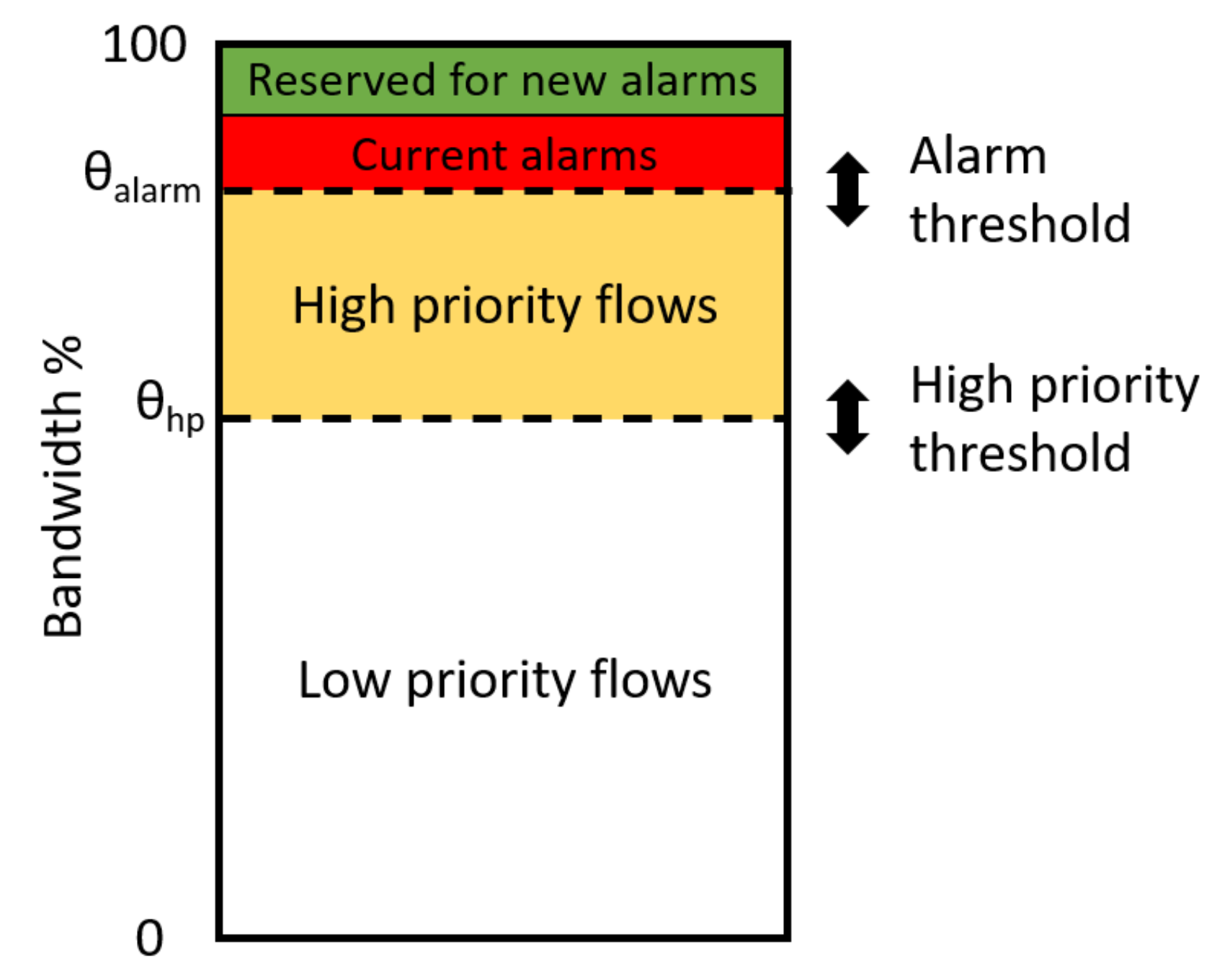

4.2. Priority-Based Admission Control-Basic Concepts

4.3. Priority-Based Admission Control-Bandwidth Allocation Procedure

5. A Use Case for the PrioSDN_RM

6. Implementation

- FloodLight. An open-source, Apache-licensed, Java-based OpenFlow SDN controller commonly used for research purposes [46]. Its modular architecture makes individual modules easy to modify and extend.

- Zodiac FX. A small OpenFlow switch with open-source firmware. The Zodiac FX is an excellent option for experimental research projects, as it is inexpensive and requires no complex setup or configuration.

- Raspberry Pi. A low-cost single-board computer, used as an end node.

Experimental Setup

7. Performance Evaluation

7.1. Throughput

7.2. Frame Drop Ratio

7.3. Priority-Based Admission Control Overhead

8. Comparative Assessment with the Relevant Mechanisms in the Literature

8.1. Configuration A

8.2. Configuration B

9. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Aslam, M.S.; Khan, A.; Atif, A.; Hassan, S.A.; Mahmood, A.; Qureshi, H.K.; Gidlund, M. Exploring Multi-Hop LoRa for Green Smart Cities. IEEE Netw. 2020, 34, 225–231.

- Arasteh, H.; Hosseinnezhad, V.; Loia, V.; Tommasetti, A.; Troisi, O.; Shafie-khah, M.; Siano, P. IoT-based smart cities: A survey. In Proceedings of the 2016 IEEE 16th International Conference on Environment and Electrical Engineering (EEEIC), Florence, Italy, 7–10 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- Qian, Y.; Wu, D.; Bao, W.; Lorenz, P. The Internet of Things for Smart Cities: Technologies and Applications. IEEE Netw. 2019, 33, 4–5.

- Stojkoska, B.L.R.; Trivodaliev, K.V. A review of Internet of Things for smart home: Challenges and solutions. J. Clean. Prod. 2017, 140, 1454–1464.

- Iannizzotto, G.; Lo Bello, L.; Nucita, A.; Grasso, G.M. A Vision and Speech Enabled, Customizable, Virtual Assistant for Smart Environments. In Proceedings of the 2018 11th International Conference on Human System Interaction (HSI), Gdansk, Poland, 4–6 July 2018; pp. 50–56.

- Kabalci, Y.; Kabalci, E.; Padmanaban, S.; Holm-Nielsen, J.B.; Blaabjerg, F. Internet of Things Applications as Energy Internet in Smart Grids and Smart Environments. Electronics 2019, 8, 972.

- Simoens, P.; Dragone, M.; Saffiotti, A. The Internet of Robotic Things: A review of concept, added value and applications. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418759424.

- Patti, G.; Leonardi, L.; Lo Bello, L. A Novel MAC Protocol for Low Datarate Cooperative Mobile Robot Teams. Electronics 2020, 9, 235.

- Ansari, S.; Aslam, T.; Poncela, J.; Otero, P.; Ansari, A. Internet of Things-Based Healthcare Applications. In IoT Architectures, Models, and Platforms for Smart City Applications; IGI Global: Hershey, PA, USA, 2020; pp. 1–28.

- Catarinucci, L.; De Donno, D.; Mainetti, L.; Palano, L.; Patrono, L.; Stefanizzi, M.L.; Tarricone, L. An IoT-aware architecture for smart healthcare systems. IEEE Internet Things J. 2015, 2, 515–526.

- Leonardi, L.; Lo Bello, L.; Battaglia, F.; Patti, G. Comparative Assessment of the LoRaWAN Medium Access Control Protocols for IoT: Does Listen before Talk Perform Better than ALOHA? Electronics 2020, 9, 553.

- Pasetti, M.; Ferrari, P.; Silva, D.R.C.; Silva, I.; Sisinni, E. On the Use of LoRaWAN for the Monitoring and Control of Distributed Energy Resources in a Smart Campus. Appl. Sci. 2020, 10, 320.

- Wan, J.; Tang, S.; Shu, Z.; Li, D.; Wang, S.; Imran, M.; Vasilakos, A.V. Software-Defined Industrial Internet of Things in the Context of Industry 4.0. IEEE Sens. J. 2016, 16, 7373–7380.

- Sisinni, E.; Ferrari, P.; Fernandes Carvalho, D.; Rinaldi, S.; Marco, P.; Flammini, A.; Depari, A. LoRaWAN Range Extender for Industrial IoT. IEEE Trans. Ind. Inform. 2020, 16, 5607–5616.

- Luvisotto, M.; Tramarin, F.; Vangelista, L.; Vitturi, S. On the Use of LoRaWAN for Indoor Industrial IoT Applications. Wirel. Commun. Mob. Comput. 2018, 2018, 1–11.

- Leonardi, L.; Ashjaei, M.; Fotouhi, H.; Lo Bello, L. A Proposal Towards Software-Defined Management of Heterogeneous Virtualized Industrial Networks. In Proceedings of the IEEE 17th International Conference on Industrial Informatics (INDIN), Helsinki, Finland, 22–25 July 2019.

- Lucas-Estañ, M.C.; Raptis, T.P.; Sepulcre, M.; Passarella, A.; Regueiro, C.; Lazaro, O. A software defined hierarchical communication and data management architecture for industry 4.0. In Proceedings of the 2018 14th Annual Conference on Wireless On-Demand Network Systems and Services (WONS), Isola, France, 6–8 February 2018; pp. 37–44.

- Wan, M.; Yao, J.; Jing, Y.; Jin, X. Event-based Anomaly Detection for Non-public Industrial Communication Protocols in SDN-based Control Systems. Comput. Mater. Contin. 2018, 55, 447–463.

- Wang, J.; Yang, Y.; Wang, T.; Sherratt, R.S.; Zhang, J. Big Data Service Architecture: A Survey. J. Internet Technol. 2020, 21, 393–405.

- Zhang, J.; Zhong, S.; Wang, T.; Chao, H.C.; Wang, J. Blockchain-based systems and applications: A survey. J. Internet Technol. 2020, 21, 1–14.

- Liu, P.; Wang, X.; Chaudhry, S.; Javeed, K.; Ma, Y.; Collier, M. Secure video streaming with lightweight cipher PRESENT in an SDN testbed. Comput. Mater. Contin. 2018, 57, 353–363.

- Aglianò, S.; Ashjaei, M.; Behnam, M.; Lo Bello, L. Resource management and control in virtualized SDN networks. In Proceedings of the 2018 Real-Time and Embedded Systems and Technologies (RTEST), Tehran, Iran, 9–10 May 2018; pp. 47–53.

- Kreutz, D.; Ramos, F.M.V.; Veríssimo, P.E.; Rothenberg, C.E.; Azodolmolky, S.; Uhlig, S. Software-Defined Networking: A Comprehensive Survey. Proc. IEEE 2015, 103, 14–76.

- Blenk, A.; Basta, A.; Reisslein, M.; Kellerer, W. Survey on Network Virtualization Hypervisors for Software Defined Networking. IEEE Commun. Surv. Tutor. 2016, 18, 655–685.

- Sherwood, R.; Chan, M.; Covington, A.; Gibb, G.; Flajslik, M.; Handigol, N.; Huang, T.Y.; Kazemian, P.; Kobayashi, M.; Naous, J.; et al. Carving Research Slices out of Your Production Networks with OpenFlow. ACM Spec. Interest Group Data Commun. (SIGCOMM) Comput. Commun. Rev. 2010, 40, 129–130.

- Alderisi, G.; Iannizzotto, G.; Lo Bello, L. Towards IEEE 802.1 Ethernet AVB for Advanced Driver Assistance Systems: A preliminary assessment. In Proceedings of the 2012 IEEE 17th International Conference on Emerging Technologies and Factory Automation (ETFA), Krakow, Poland, 17–21 September 2012; pp. 1–4.

- Zhao, L.; Pop, P.; Zheng, Z.; Li, Q. Timing Analysis of AVB Traffic in TSN Networks Using Network Calculus. In Proceedings of the 2018 IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Porto, Portugal, 11–13 April 2018; pp. 25–36.

- Alderisi, G.; Patti, G.; Lo Bello, L. Introducing support for scheduled traffic over IEEE audio video bridging networks. In Proceedings of the 2013 IEEE 18th Conference on Emerging Technologies and Factory Automation (ETFA), Cagliari, Italy, 10–13 September 2013; pp. 1–9.

- Ashjaei, M.; Patti, G.; Behnam, M.; Nolte, T.; Alderisi, G.; Lo Bello, L. Schedulability analysis of Ethernet Audio Video Bridging networks with scheduled traffic support. Real-Time Syst. 2017, 53, 526–577.

- Ashjaei, M.; Girs, S. Dynamic Resource Distribution using SDN in Wireless Networks. In Proceedings of the 2020 IEEE International Conference on Industrial Technology (ICIT), Buenos Aires, Argentina, 26–28 February 2020; pp. 967–972.

- Girs, S.; Ashjaei, M. Designing a Bandwidth Management Scheme for Heterogeneous Virtualized Networks. In Proceedings of the 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA), Turin, Italy, 4–7 September 2018; Volume 1, pp. 1079–1082.

- Paliwal, M.; Shrimankar, D. Effective Resource Management in SDN Enabled Data Center Network Based on Traffic Demand. IEEE Access 2019, 7, 69698–69706.

- Trivisonno, R.; Guerzoni, R.; Vaishnavi, I.; Frimpong, A. Network Resource Management and QoS in SDN-Enabled 5G Systems. In Proceedings of the 2015 IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015; pp. 1–7.

- Lo Bello, L.; Lombardo, A.; Milardo, S.; Patti, G.; Reno, M. Experimental Assessments and Analysis of an SDN Framework to Integrate Mobility Management in Industrial Wireless Sensor Networks. IEEE Trans. Ind. Inform. 2020, 16, 5586–5595.

- Satija, S.; Sharma, T.; Bhushan, B. Innovative approach to Wireless Sensor Networks: SD-WSN. In Proceedings of the 2019 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 18–19 October 2019; pp. 170–175.

- Jang, H.C.; Lin, J.T. Bandwidth management framework for smart homes using SDN: ISP perspective. Int. J. Internet Protoc. Technol. (IJIPT) 2019, 12, 110–120.

- Li, C.; Guo, W.; Wang, W.; Hu, W.; Xia, M. Programmable bandwidth management in software-defined EPON architecture. Opt. Commun. 2016, 370, 43–48.

- Chang, Y.; Chen, Y.; Chen, T.; Chen, J.; Chiu, S.; Chang, W. Software-Defined Dynamic Bandwidth Management. In Proceedings of the 2019 21st International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 17–20 February 2019; pp. 201–205.

- Jimson, E.R.; Nisar, K.; bin Ahmad Hijazi, M.H. Bandwidth management using software defined network and comparison of the throughput performance with traditional network. In Proceedings of the International Conference on Computer and Drone Applications (IConDA), Kuching, Malaysia, 9–11 November 2017; pp. 71–76.

- Becker, M.; Lu, Z.; Chen, D. An Adaptive Resource Provisioning Scheme for Industrial SDN Networks. In Proceedings of the 2019 IEEE 17th International Conference on Industrial Informatics (INDIN), Helsinki, Finland, 22–25 July 2019; Volume 1, pp. 877–880.

- Min, S.; Kim, S.; Lee, J.; Kim, B.; Hong, W.; Kong, J. Implementation of an OpenFlow network virtualization for multi-controller environment. In Proceedings of the 2012 14th International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 19–22 February 2012; pp. 589–592.

- Priyadarsini, M.; Bera, P. A Secure Virtual Controller for Traffic Management in SDN. IEEE Lett. Comput. Soc. 2019, 2, 24–27.

- Chen, J.; Ma, Y.; Kuo, H.; Yang, C.; Hung, W. Software-Defined Network Virtualization Platform for Enterprise Network Resource Management. IEEE Trans. Emerg. Top. Comput. 2016, 4, 179–186.

- Mijumbi, R.; Serrat, J.; Rubio-Loyola, J.; Bouten, N.; De Turck, F.; Latré, S. Dynamic resource management in SDN-based virtualized networks. In Proceedings of the 10th International Conference on Network and Service Management (CNSM) and Workshop, Rio de Janeiro, Brazil, 17–21 November 2014; pp. 412–417.

- Struhár, V.; Ashjaei, M.; Behnam, M.; Craciunas, S.S.; Papadopoulos, A.V. DART: Dynamic Bandwidth Distribution Framework for Virtualized Software Defined Networks. In Proceedings of the 45th Annual Conference of the IEEE Industrial Electronics Society (IECON), Lisbon, Portugal, 14–17 October 2019; Volume 1, pp. 2934–2939.

- Asadollahi, S.; Goswami, B. Experimenting with scalability of floodlight controller in software defined networks. In Proceedings of the 2017 International Conference on Electrical, Electronics, Communication, Computer, and Optimization Techniques (ICEECCOT), Mysuru, India, 15–16 December 2017; pp. 288–292.

- Sherwood, R.; Gibb, G.; Yap, K.-K.; Casado, M.; McKeown, N.; Parulkar, G. FlowVisor: A Network Virtualization Layer; OpenFlow Switch Consortium, Technical Report; ETH Zürich: Zürich, Switzerland, 2009.

| Flow ID | Bit Rate (bit/s) | PCP |
|---|---|---|
| 1 | 90 | 1 |
| 2 | 25 | 1 |
| 3 | 90 | 2 |
| 4 | 30 | 5 |
| 5 | 40 | 0 |
| 6 | 50 | 4 |
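
The flow set in the table above can be used to illustrate, with simple arithmetic, the offered load that a priority-based admission control has to handle. The Python sketch below sums the bit rates per PCP and then applies a generic strict-priority greedy admission against an assumed capacity. `CAPACITY_BPS = 200` and the helper names are hypothetical, and the greedy rule is a textbook illustration, not the PrioSDN_RM allocation procedure. The sketch also assumes that a numerically higher PCP means higher priority, which simplifies IEEE 802.1Q, where PCP 1 (background) ranks below PCP 0 (best effort).

```python
from collections import defaultdict

# (flow_id, bit_rate, pcp) as listed in the table above (bit rates in the table's units)
FLOWS = [(1, 90, 1), (2, 25, 1), (3, 90, 2), (4, 30, 5), (5, 40, 0), (6, 50, 4)]

CAPACITY_BPS = 200  # hypothetical capacity, chosen only for illustration


def load_per_pcp(flows):
    """Sum the offered bit rate of all flows that share the same PCP."""
    load = defaultdict(int)
    for _, rate, pcp in flows:
        load[pcp] += rate
    return dict(load)


def greedy_priority_admission(flows, capacity):
    """Admit flows in descending PCP order (ties broken by flow ID) while capacity allows.

    A flow that does not fit is rejected and the scan continues, so a smaller
    lower-priority flow may still use the leftover capacity.
    """
    admitted, used = [], 0
    for flow_id, rate, _pcp in sorted(flows, key=lambda f: (-f[2], f[0])):
        if used + rate <= capacity:
            admitted.append(flow_id)
            used += rate
    return admitted, used


print(sum(rate for _, rate, _ in FLOWS))  # total offered load
print(load_per_pcp(FLOWS))
print(greedy_priority_admission(FLOWS, CAPACITY_BPS))
```

Under these assumptions the total offered load is 325, and flows 4, 6, 3 and 2 are admitted for a total of 195; skipping a flow that does not fit and continuing the scan is a design choice, whereas stopping at the first rejection would leave that capacity unused.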

| PCP | Configuration a: FDR | Configuration a: Timeout (s) | Configuration b: FDR | Configuration b: Timeout (s) |
|---|---|---|---|---|
| 1 | 64% | 40 | 43% | 30 |
| 1 | 33% | 40 | 30% | 30 |
| 2 | 70% | 40 | 74% | 30 |
| 5 | 28% | 40 | 22% | 40 |
| 0 | 89% | 40 | 76% | 30 |
| 4 | 35% | 40 | 24% | 40 |
| 7 | 0% | 40 | 0% | 40 |

| PCP | Configuration a: FDR | Configuration a: Timeout (s) | Configuration b: FDR | Configuration b: Timeout (s) |
|---|---|---|---|---|
| 1 | 52% | 40 | 47% | 30 |
| 1 | 58% | 40 | 26% | 30 |
| 2 | 84% | 40 | 74% | 30 |
| 5 | 29% | 40 | 22% | 40 |
| 0 | 100% | 40 | 82% | 30 |
| 4 | 60% | 40 | 27% | 40 |
| 7 | 0% | 40 | 0% | 40 |
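
The FDR columns of the two tables above report a frame drop ratio. Assuming the usual definition (frames dropped divided by frames transmitted), a minimal Python sketch of the metric, together with the unweighted mean FDR of each configuration computed from the tabulated values, is shown below. The `frame_drop_ratio` helper and the counter values in its usage line are illustrative only and are not taken from the paper's tooling or measurements.

```python
from statistics import mean


def frame_drop_ratio(sent: int, received: int) -> float:
    """FDR = dropped / transmitted, assuming dropped = sent - received (illustrative helper)."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return (sent - received) / sent


# FDR values (%) per row, in table order (PCP 1, 1, 2, 5, 0, 4, 7)
TABLE_1 = {"a": [64, 33, 70, 28, 89, 35, 0], "b": [43, 30, 74, 22, 76, 24, 0]}
TABLE_2 = {"a": [52, 58, 84, 29, 100, 60, 0], "b": [47, 26, 74, 22, 82, 27, 0]}

# Hypothetical counters: 200 frames sent, 128 received.
print(frame_drop_ratio(sent=200, received=128))

for name, table in (("first", TABLE_1), ("second", TABLE_2)):
    for config, values in table.items():
        print(f"{name} table, configuration {config}: mean FDR = {mean(values):.1f}%")
```

The means come out at about 45.6% (a) versus 38.4% (b) for the first table and 54.7% (a) versus 39.7% (b) for the second. An unweighted mean ignores flow rates and priorities, so it is only a coarse summary of the per-PCP rows.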

| Flow ID | Bit Rate (bit/s) | PCP |
|---|---|---|
| 1 | 30 | 0 |
| 2 | 40 | 1 |
| 3 | 30 | 1 |
| 4 | 40 | 2 |
| 5 | 30 | 2 |
| 6 | 20 | 3 |
| 7 | 25 | 4 |
| 8 | 20 | 5 |
| 9 | 30 | 5 |
| 10 | 20 | 6 |

| Flow ID | PCP | Slice 1 FDR: PrioSDN_RM | Slice 1 FDR: Mechanism in [22] | Slice 1 FDR: DART [45] | Slice 2 FDR: PrioSDN_RM | Slice 2 FDR: Mechanism in [22] | Slice 2 FDR: DART [45] |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 60% | 65% | 100% | 55% | 70% | 100% |
| 2 | 1 | 88% | 91% | 100% | 93% | 86% | 100% |
| 3 | 1 | 86% | 61% | 100% | 81% | 69% | 100% |
| 4 | 2 | 83% | 72% | 100% | 88% | 70% | 100% |
| 5 | 2 | 70% | 46% | 100% | 86% | 59% | 100% |
| 6 | 3 | 0% | 9% | 72% | 4.2% | 5% | 69% |
| 7 | 4 | 6% | 30% | 0% | 4% | 12% | 0% |
| 8 | 5 | 0% | 0% | 0% | 0% | 13% | 0% |
| 9 | 5 | 0% | 0% | 0% | 6.6% | 6% | 0% |
| 10 | 6 | 2% | 0% | 0% | 2% | 0% | 0% |
| Alarms | 7 | 0% | 15% | 0% | 0% | 14% | 0% |
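
To compare the three mechanisms at a glance, the values of the table above can be loaded and summarised per slice. The Python sketch below computes an unweighted mean FDR over flows 1 to 10 and reports the alarm (PCP 7) row separately; the data layout and loop are illustrative, and the mean is a coarse indicator that ignores how drops are distributed across priorities.

```python
from statistics import mean

# FDR (%) of flows 1-10 from the comparison table above, per slice and mechanism
FDR = {
    "slice 1": {
        "PrioSDN_RM": [60, 88, 86, 83, 70, 0, 6, 0, 0, 2],
        "Mechanism in [22]": [65, 91, 61, 72, 46, 9, 30, 0, 0, 0],
        "DART [45]": [100, 100, 100, 100, 100, 72, 0, 0, 0, 0],
    },
    "slice 2": {
        "PrioSDN_RM": [55, 93, 81, 88, 86, 4.2, 4, 0, 6.6, 2],
        "Mechanism in [22]": [70, 86, 69, 70, 59, 5, 12, 13, 6, 0],
        "DART [45]": [100, 100, 100, 100, 100, 69, 0, 0, 0, 0],
    },
}

# FDR (%) of the alarm traffic (PCP 7), reported separately
ALARMS = {
    "slice 1": {"PrioSDN_RM": 0, "Mechanism in [22]": 15, "DART [45]": 0},
    "slice 2": {"PrioSDN_RM": 0, "Mechanism in [22]": 14, "DART [45]": 0},
}

for slice_name, per_mechanism in FDR.items():
    for mechanism, values in per_mechanism.items():
        print(f"{slice_name}, {mechanism}: mean FDR (flows 1-10) = {mean(values):.1f}%, "
              f"alarms FDR = {ALARMS[slice_name][mechanism]}%")
```

For slice 1 this gives mean FDRs of 39.5% (PrioSDN_RM), 37.4% (Mechanism in [22]) and 57.2% (DART [45]), with alarm FDRs of 0%, 15% and 0%, respectively.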

| Flow ID | Bit Rate (bit/s) | PCP |
|---|---|---|
| 1 | 30 | 0 |
| 2 | 40 | 1 |
| 3 | 40 | 2 |
| 4 | 30 | 2 |
| 5 | 30 | 3 |
| 6 | 40 | 4 |
| 7 | 30 | 5 |
| 8 | 40 | 5 |
| 9 | 30 | 6 |
| 10 | 50 | 6 |

| Flow ID | PCP | Slice 1 FDR: PrioSDN_RM | Slice 1 FDR: Mechanism in [22] | Slice 1 FDR: DART [45] | Slice 2 FDR: PrioSDN_RM | Slice 2 FDR: Mechanism in [22] | Slice 2 FDR: DART [45] |
|---|---|---|---|---|---|---|---|
| 1 | 0 | 84% | 85% | 100% | 85% | 83% | 100% |
| 2 | 1 | 97% | 96% | 100% | 90% | 95% | 100% |
| 3 | 2 | 90% | 94% | 100% | 85% | 91% | 100% |
| 4 | 2 | 91% | 95% | 100% | 92% | 94% | 100% |
| 5 | 3 | 59% | 57% | 93% | 53% | 59% | 94% |
| 6 | 4 | 66% | 78% | 45% | 71% | 76% | 43% |
| 7 | 5 | 18% | 34% | 32% | 25% | 41% | 27% |
| 8 | 5 | 47% | 27% | 27% | 50% | 23% | 29% |
| 9 | 6 | 44% | 31% | 31% | 15% | 36% | 31% |
| 10 | 6 | 13% | 45% | 37% | 19% | 42% | 34% |
| Alarms | 7 | 0% | 34% | 47% | 0% | 35% | 50% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Leonardi, L.; Lo Bello, L.; Aglianò, S. Priority-Based Bandwidth Management in Virtualized Software-Defined Networks. Electronics 2020, 9, 1009. https://doi.org/10.3390/electronics9061009
